- Number of slides: 178

Q2010 course on “Quality reporting and metadata”
May 2010, Helsinki
August Götzfried and Eva Elvers

SESSION 2 (09:45 – 10:15): THE ESS FRAMEWORK FOR QUALITY REPORTING (Code of Practice, Statistical Law, ESQR, DatQAM, etc.)
Eva Elvers

European Statistics Code of Practice
- June 2004: the Council invited the Commission (COM) to make, by June 2005, a proposal to develop minimum European standards on the independence, integrity and accountability of the European Statistical System
- February 2005: adoption of the Code by the SPC, based on the proposal of its Task Force
- 25 May 2005: COM adoption of a Communication and a Recommendation on the independence, integrity and accountability of the national and Community statistical authorities, including the Code of Practice (CoP)

European Statistics Code of Practice
- The CoP has two aims:
  - Improving trust and confidence in the independence, integrity and accountability of both National Statistical Authorities and Eurostat, and in the credibility and quality of the statistics they produce and disseminate (i.e. an external focus);
  - Promoting the application of best international statistical principles, methods and practices by all producers of European Statistics to enhance their quality (i.e. an internal focus).

European Statistics Code of Practice
- 15 Principles addressing the institutional environment, the statistical processes and their outputs (inspired by existing international standards and the ESS quality definition)
- Self-regulation of NSIs and Eurostat
- Indicators to provide a reference for periodical reviews of the implementation of the Code

European Statistics Code of Practice: Institutional environment
- Principle 1: Professional Independence
- Principle 2: Mandate for data collection
- Principle 3: Adequacy of Resources
- Principle 4: Quality Commitment
- Principle 5: Statistical Confidentiality
- Principle 6: Impartiality and Objectivity
- Example of indicators:
  - I. The mandate to collect information for the production and dissemination of official statistics is specified in law.
  - II. The statistical authority is allowed by national legislation to use administrative records for statistical purposes.
  - III. On the basis of a legal act, the statistical authority may compel response to statistical surveys.

European Statistics Code of Practice: Statistical Processes
- Principle 7: Sound Methodology
- Principle 8: Appropriate Statistical Procedures
- Principle 9: Non-Excessive Burden on Respondents
- Principle 10: Cost Effectiveness
- Example of indicators:
  - I. Where European Statistics are based on administrative data, the definitions and concepts used for the administrative purpose must be a good approximation to those required for statistical purposes.
  - II. In the case of statistical surveys, questionnaires are systematically tested prior to the data collection.
  - III. Survey designs, sample selections, and sample weights are well based and regularly reviewed, revised or updated as required.
  - IV. Field operations, data entry, and coding are routinely monitored and revised as required.
  - V. Appropriate editing and imputation computer systems are used and regularly reviewed, revised or updated as required.
  - VI. Revisions follow standard, well-established and transparent procedures.

European Statistics Code of Practice: Statistical Output
- Principle 11: Relevance
- Principle 12: Accuracy and Reliability
- Principle 13: Timeliness and Punctuality
- Principle 14: Coherence and Comparability
- Principle 15: Accessibility and Clarity
- Example of indicators:
  - I. Source data, intermediate results and statistical outputs are assessed and validated.
  - II. Sampling errors and non-sampling errors are measured and systematically documented according to the framework of the ESS quality components.
  - III. Studies and analyses of revisions are carried out routinely and used internally to inform statistical processes.

UN Frameworks
- Fundamental Principles of Official Statistics (1994)
  - Sets the basic rules for the statistics producers
  - 10 Fundamental Principles to establish a quality management system
- Principles Governing International Statistical Activities (2005)
  - Further recalls the UN Fundamental Principles and the Declaration of Good Practices in Technical Cooperation in Statistics (1999)
  - 10 Principles and 40 Good practices

Total Quality Management
- The LEG on Quality outputs, as well as the CoP, pinpoint two quality aspects:
  - Total quality management as a basic quality framework.
  - Promoting CBMs in processes and outputs.
- Note the connections to ethical issues:
  - UN Fundamental Principles of Official Statistics (1994).
  - ISI Declaration on Professional Ethics (1985, updated).

Product/output quality components
- OECD: relevance, accuracy, credibility, timeliness (and punctuality), accessibility, interpretability, coherence (within dataset, across datasets, over time, across countries)
- Eurostat: relevance, accuracy, timeliness and punctuality, accessibility and clarity, coherence (within dataset, across datasets), comparability (over time, across countries)
- ECB: accuracy/reliability, methodological soundness, timeliness, consistency
- IMF: prerequisites of quality, accuracy and reliability, assurances of integrity, methodological soundness, serviceability (timeliness and periodicity), accessibility, serviceability (within dataset, across datasets, over time, across countries)
- FAO: relevance (completeness), accuracy, timeliness, punctuality, accessibility, clarity (sound metadata), coherence, comparability
- UNESCO: relevance, accuracy, interpretability, coherence
- UNECE: relevance, accuracy (credibility), timeliness, punctuality, accessibility, clarity, comparability (across datasets, over time, across countries)

Product/output quality components: a possible summary
- Relevance
- Accuracy (and reliability)
- Timeliness
- Punctuality
- Accessibility
- Clarity/interpretability
- Coherence/consistency
- Comparability

EU Legislation on Quality Reporting
- Council/EP Regulations
  - Revision of the “Statistical Law”
  - Sectoral Regulations
- Commission Regulations
- Gentlemen’s agreements

EU Legislation on Quality Reporting
- The new Regulation on European Statistics (signed by the Parliament and Council on 11 March 2009)
  - References to the Code of Practice (“Whereas” clauses, Art. 1, Art. 2, Art. 7, Art. 11)
  - Article 12: Statistical quality

EU Legislation on Quality Reporting
- Article 12(1) specifies the quality criteria (relevance, accuracy, timeliness, punctuality, accessibility and clarity, comparability, and coherence) that should be applied.
- Article 12(2) specifies that the modalities, structure and periodicity of quality reports provided for in sectoral legislation shall be defined by the Commission in accordance with the (simple) regulatory procedure.

EU Legislation on Quality Reporting
- Article 12(2): specific quality requirements, such as target values and minimum standards for the statistical production, may also be laid down in sectoral legislation.
- Article 12(3): Member States shall provide the Commission (Eurostat) with reports on the quality of the data transmitted. The Commission (Eurostat) shall assess the quality of data transmitted and shall prepare and publish reports on the quality of European Statistics.
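The quality criteria listed for Article 12(1) lend themselves to a simple completeness check on a quality report. The following is only a minimal sketch under stated assumptions: the `QualityReport` class, its fields and the free-text section format are hypothetical and are not prescribed by the Regulation or by Eurostat; only the criterion names come from the slide.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Criteria as named on the slide for Article 12(1); using them as
# dictionary keys is an illustrative choice, not an official schema.
ARTICLE_12_CRITERIA = (
    "relevance",
    "accuracy",
    "timeliness",
    "punctuality",
    "accessibility and clarity",
    "comparability",
    "coherence",
)


@dataclass
class QualityReport:
    """Hypothetical container: one free-text section per quality criterion."""

    dataset: str
    sections: dict[str, str] = field(default_factory=dict)

    def missing_criteria(self) -> list[str]:
        """Return criteria with no section or only whitespace, in slide order."""
        return [
            criterion
            for criterion in ARTICLE_12_CRITERIA
            if not self.sections.get(criterion, "").strip()
        ]


# Invented example: two sections filled in, one present but blank.
report = QualityReport(
    dataset="example survey",
    sections={
        "relevance": "Main users and their needs are described here.",
        "accuracy": "Sampling and non-sampling errors are described here.",
        "timeliness": "   ",
    },
)
missing = report.missing_criteria()
print(missing)
```

A check of this kind only tells a report compiler which criteria are still undocumented; it says nothing about whether the documented sections meet any target values laid down in sectoral legislation.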

EU Legislation on Quality Reporting
- Proposal for a Regulation of the Parliament and of the Council on population and housing censuses, Brussels, 11 January 2008:
  - “1. For the purpose of this Regulation, the following quality assessment dimensions shall apply to the data transmitted:” ...
  - “4. The Commission (Eurostat), in cooperation with the competent authorities of the Member States, shall provide methodological recommendations designed to ensure the quality of the data and metadata produced, acknowledging, in particular, the Conference of European Statisticians Recommendations for the Censuses of Population and Housing.”

EU Legislation on Quality Reporting
- Commission Regulations on quality evaluation. Standard articles:
  - Structure and evaluation criteria (details specified in annex)
  - Variables included (and breakdowns)
  - Schedule (first quality report and subsequent reports)
  - Review (if optional items included)
  - Assessment of quality (by Eurostat of Member States’ statistics)
  - Entry into force

Self-Assessment Checklist for Survey Managers
- The DESAP project (“Development of a Self-Assessment Programme for Surveys”), co-ordinated by DESTATIS (Germany) with Statistics Austria, Statistics Finland, ISTAT (Italy), Statistics Sweden and the ONS (UK) as partners, was carried out from October 2002 to October 2003 in response to LEG on Quality Recommendation No. 15.
- DESAP
  - is tailored for statistics production and aims to help survey managers develop the survey under their responsibility
  - is fully compliant with the ESS quality criteria
  - applies to individual statistics collecting micro-data
  - has questions with numerous response categories, assessment questions, and open questions

Objectives of DESAP
- Objectives:
  - to raise awareness of the quality components and survey quality concepts
  - to provide a tool for a systematic, even though subjective, assessment of statistical products and processes
  - to provide helpful guidance in the consideration of improvement measures
- Additional potential applications:
  - to assist a basic appraisal of the risk of potential quality problems
  - to provide a means for simple comparisons of the level of quality over time
  - to provide support for resource allocation within statistical offices or for the training of new staff

Handbook on Improving Quality by Analysis of Process Variables
- The project was coordinated by ONS (UK), with INE Portugal, NSS Greece and Statistics Sweden as partners, and carried out from June 2002 to June 2004 in response to LEG on Quality Recommendation No. 3.
- Gives a general approach to, and useful tools for, the task of identifying, measuring and analysing key process variables.
- Explains how process quality is improved by identifying key process variables (i.e. those variables with the greatest effect on product quality), measuring these variables, adjusting the process based on these measurements, and checking what happens to product quality.
- Includes many practical examples of the application of the approach to various statistical processes.
- The handbook does not aim to provide a list of recommended key process variables across all statistical processes.
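The "measure, then adjust" step described above can be illustrated with a simple control-limit check. This is only a sketch under stated assumptions: the slide does not name a specific technique, so mean ± 3 standard deviation limits are used here as one common choice, and the process variable (a per-batch item non-response rate), the figures and the function names are all invented for illustration.

```python
from statistics import mean, pstdev


def control_limits(baseline, k=3.0):
    """Return (lower, centre, upper) limits from baseline measurements.

    Limits are centre +/- k population standard deviations; k=3 is a
    conventional choice, assumed here rather than taken from the slides.
    """
    centre = mean(baseline)
    spread = pstdev(baseline)
    return centre - k * spread, centre, centre + k * spread


def out_of_control(values, lower, upper):
    """Return the indices of measurements falling outside the limits."""
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical key process variable: item non-response rate per batch.
baseline_rates = [0.040, 0.050, 0.045, 0.055, 0.050, 0.060]
lower, centre, upper = control_limits(baseline_rates)

# New batches measured after the baseline period (invented figures).
new_rates = [0.050, 0.048, 0.090, 0.052]
flagged = out_of_control(new_rates, lower, upper)
print(f"limits: {lower:.4f} to {upper:.4f}; flagged batches: {flagged}")
```

A flagged batch is a prompt to investigate and possibly adjust the process; whether product quality actually improves afterwards still has to be checked separately, as the last step of the approach requires.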

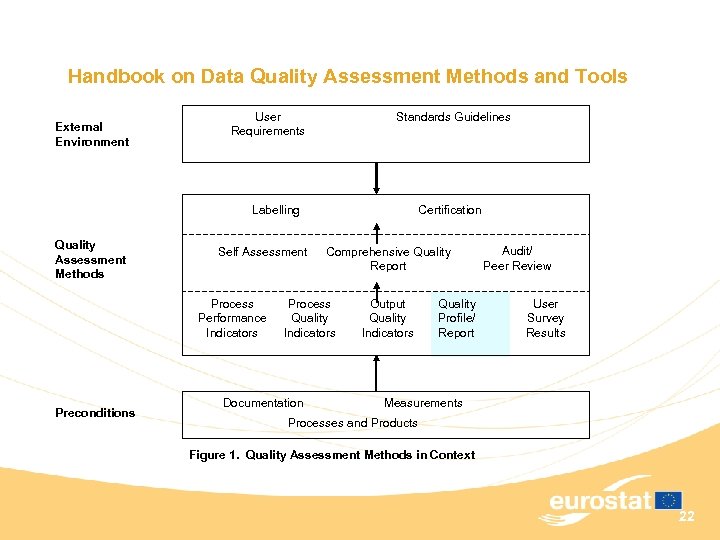

Handbook on Data Quality Assessment Methods and Tools
[Figure 1. Quality Assessment Methods in Context. Diagram labels: External Environment; User Requirements; Standards; Guidelines; Labelling; Quality Assessment Methods; Self Assessment; Process Performance Indicators; Preconditions; Certification; Comprehensive Quality Report; Process Quality Indicators; Documentation; Output Quality Indicators; Quality Profile/Report; Audit/Peer Review; User Survey Results; Measurements; Processes and Products]

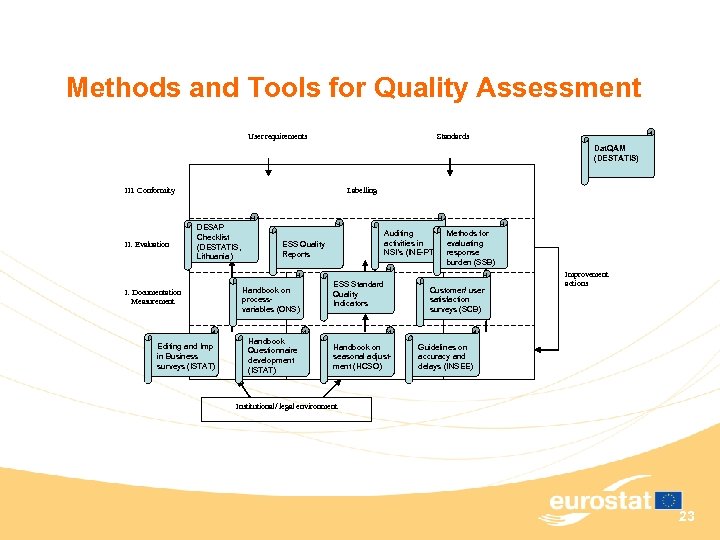

Methods and Tools for Quality Assessment
[Diagram. Levels: I. Documentation/Measurement; II. Evaluation; III. Conformity. Labels: User requirements; Standards; DatQAM (DESTATIS); Labelling; DESAP Checklist (DESTATIS, Lithuania); Editing and imputation in business surveys (ISTAT); Auditing activities in NSIs (INE-PT); Methods for evaluating response burden (SSB); Quality reviews; Self assessments; ESS Quality Reports; Handbook on process variables (ONS); Handbook on questionnaire development (ISTAT); ESS Standard Quality Indicators; Handbook on seasonal adjustment (HCSO); Customer/user satisfaction surveys (SCB); Improvement actions; Guidelines on accuracy and delays (INSEE); Institutional/legal environment]

SESSION 3 (10:15 – 11:00): PROCESS QUALITY AND OUTPUT QUALITY
Eva Elvers & August Götzfried

Process Quality and Output Quality
- Content
  - a. To present the ESS quality models in detail (Eva Elvers)
  - b. The GSBPM follows (August Götzfried)

Content of Module
- General definition of quality
- Output quality components
- Process quality components
  - Institutional environment
  - Individual statistical processes

General Definition of Quality
- “Quality” is not well defined
  - in the sense that there are many definitions
  - most general and succinct: fitness for use
- Start with international standards
- ISO 9000 definition:
  - degree to which a set of inherent characteristics fulfils requirements
- ISO 8402:1986 gives a more comprehensible definition:
  - totality of features and characteristics of a product or service that bear on its ability to satisfy stated or implied needs

General Definition of Quality
- These definitions provide a basic notion of product quality
  - They need to be supplemented by a more precise interpretation of quality in the ESS context
- ESS Quality Definition
  - Presented to the October 2003 meeting of the ESS Working Group “Assessment of Quality in Statistics”
  - Basis for defining output quality components in all subsequent quality-related documents, including the Code of Practice (CoP) and the forthcoming basic legal framework on European Statistics

Output Quality Components
- Relevance
- Accuracy and Reliability
- Timeliness and Punctuality
- Accessibility and Clarity
- Coherence and Comparability

Output Quality Components
- Relevance:
  - outputs meet current and potential users’ needs
- Accuracy and Reliability:
  - outputs accurately and reliably portray reality
- Timeliness and Punctuality:
  - outputs are disseminated in a timely, punctual manner

Output Quality Components
- Accessibility and Clarity:
  - outputs are presented in a clear, understandable form
  - disseminated in a suitable and convenient manner
  - made available and accessible on an impartial basis
  - accompanied by supporting metadata and guidance

Output Quality Components
- Coherence and Comparability:
  - coherence means that outputs are mutually consistent and can be used in combination
  - comparability is an aspect of coherence and means that outputs referring to the same data items are mutually consistent and can be used for comparisons across time, region, or any other relevant domain

Process Quality Components
- Output quality is achieved through process quality
- Process quality has two broad aspects:
  - Effectiveness: which leads to outputs of good quality; and
  - Efficiency: which leads to production of outputs at minimum cost to the statistical office and to the respondents that provide the original data

Process Quality Components
- Guidance on the formulation of process quality components is provided by the first 10 principles in the ESS Code of Practice (as previously described)
- Principles are formulated in two groups:
  - institutional environment, within which the programme of statistical processes is conducted
  - individual statistical processes

Process Quality Components Based on ESS Code of Practice
- Institutional Environment
  - Professional independence
  - Mandate for data collection
  - Adequacy of resources
  - Quality commitment
  - Statistical confidentiality
- Individual Statistical Process
  - Sound methodology
  - Appropriate statistical procedures
  - Non-excessive burden on respondents
  - Cost effectiveness: resources are effectively used

Process Quality Components: Institutional Environment
- Professional independence
  - professional independence of staff from other policy, regulatory or administrative departments and from private sector operators
  - required to support the credibility of outputs
- Mandate for data collection
  - the organisation has a clear legal mandate to collect the particular information required
  - for surveys conducted under a statistics act, providers are compelled by law to provide or allow access to data

Process Quality Components: Institutional Environment
- Adequacy of resources
  - the resources available are sufficient to meet systems and processing requirements
- Quality commitment
  - staff commit themselves to work and cooperate according to the principles in the ESS Quality Declaration

Process Quality Components Institutional Environment n Statistical confidentiality – guarantees of privacy of data providers, confidentiality of information they provide, and use only for statistical purposes n Impartiality and objectivity: – production and dissemination of statistics respect scientific independence – conducted in an objective, professional and transparent manner – in which all users are treated equitably. 38

The Generic Statistical Business Process Model
August Götzfried

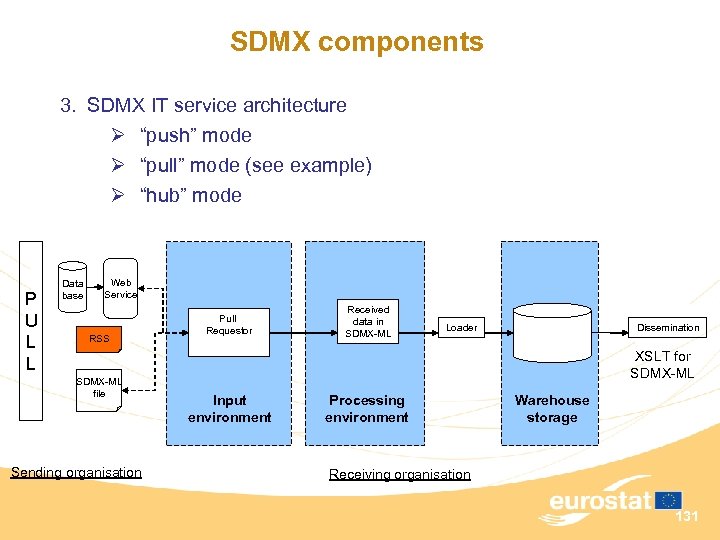

Contents
- Background
- Modelling statistical business processes
- Applicability
- Structure and key features
- Relevance to SDMX
- Next steps

Background
- Defining and mapping business processes in statistical organisations started at least 10 years ago
  - “Statistical value chain”
  - “Survey life-cycle”
  - “Statistical process cycle”
  - “Business process model”

Background
- Defining and mapping business processes in statistical organisations started at least 10 years ago
  - “Statistical value chain”
  - “Survey life-cycle”
  - “Statistical process cycle”
  - “Business process model”

Generic Statistical Business Process Model

Modelling Statistical Business Processes
- Reached a stage of maturity where a generic international standard could be drawn up
- Many drivers for a generic model:
  - “End-to-end” metadata systems development
  - Harmonization of terminology
  - Software sharing
  - Process-based organization structures
  - Process quality management requirements
  - The Eurostat vision...

Why do we need a model?
- To define, describe and map statistical processes in a coherent way
- To standardize process terminology
- To compare / benchmark processes within and between organisations
- To identify synergies between processes
- To inform decisions on systems architectures and organisation of resources

History of the GSBPM
- Based on the business process model developed by Statistics New Zealand
- Added phases for:
  - Archive (inspired by Statistics Canada)
  - Evaluate (Australia and others)
- Three rounds of comments; now quite well accepted
- Terminology and descriptions made more generic
- Wider applicability?

Applicability of the model
- All activities undertaken by producers of official statistics which result in data outputs
- National and international statistical organisations
- Independent of data source, can be used for:
  - Surveys / censuses
  - Administrative sources / register-based statistics
  - Mixed sources

Applicability of the model
- Producing statistics from end-to-end (micro or macro-data)
- Revision of existing data / re-calculation of time-series
- Development and maintenance of statistical and administrative registers

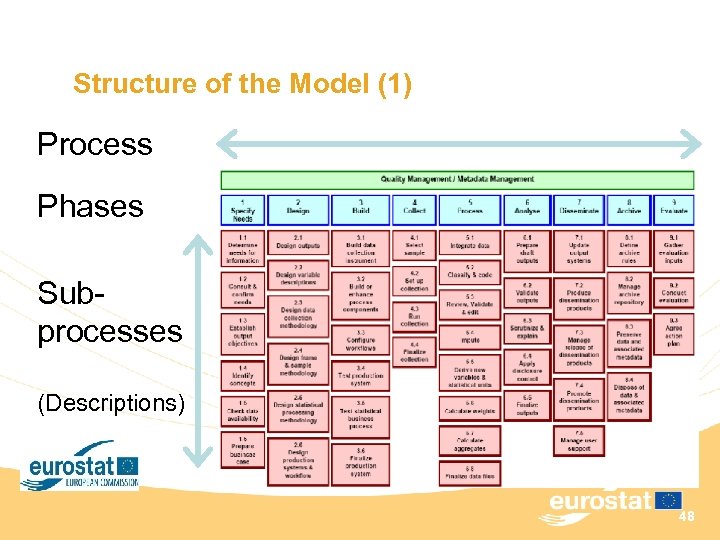

Structure of the Model (1)
- Process
- Phases
- Subprocesses
- (Descriptions)

Structure of the Model (2)
- National implementations may need additional levels
- Over-arching processes
  - Quality management
  - Metadata management
  - Statistical framework management
  - Statistical programme management
  - ... (8 more – see paper)

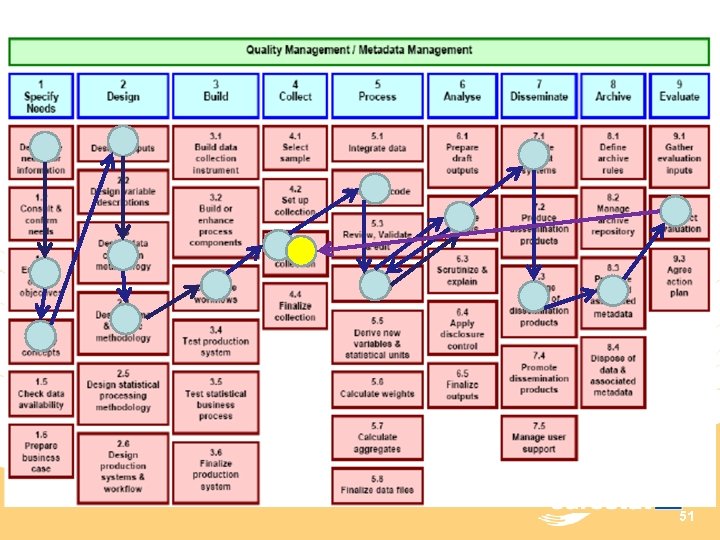

Key features (1)
- Not a linear model
- Sub-processes do not have to be followed in a strict order
- It is a matrix, through which there are many possible paths, including iterative loops within and between phases
- Some iterations of a regular process may skip certain sub-processes

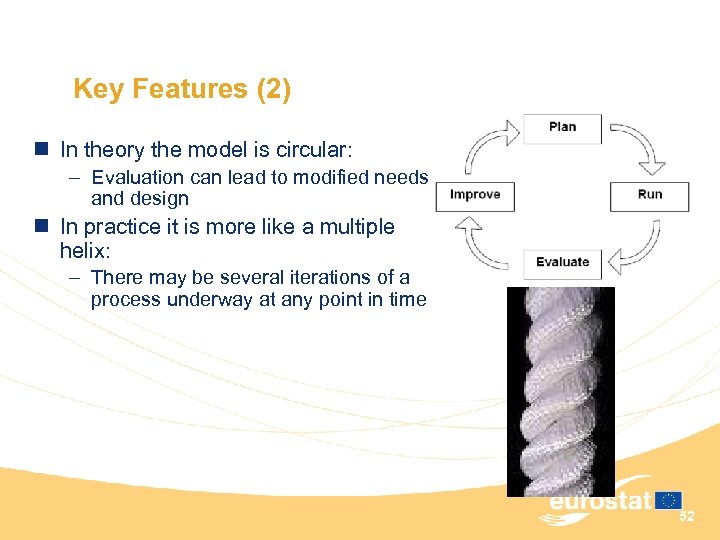

Key Features (2)
- In theory the model is circular:
  - Evaluation can lead to modified needs and design
- In practice it is more like a multiple helix:
  - There may be several iterations of a process underway at any point in time

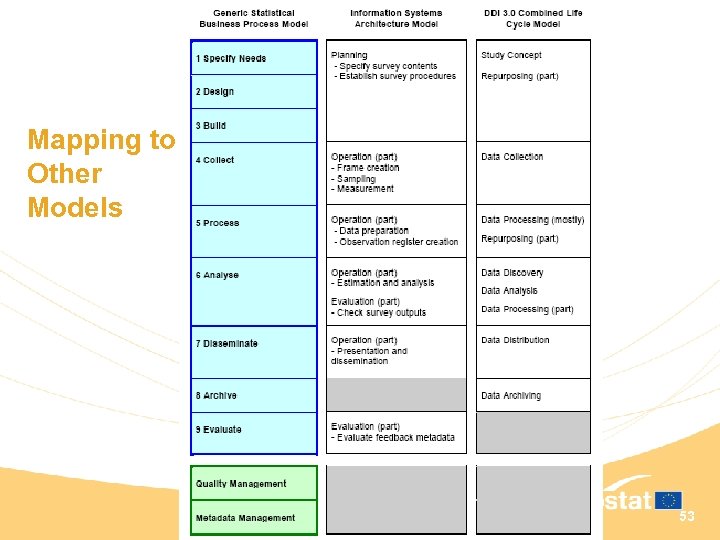

Mapping to Other Models

Relevance to SDMX
- Process modelling already mentioned in:
  - SDMX User Guide
  - SDMX Technical Standards (version 2.0)
  - Euro SDMX Metadata Structure
- Common terminology
- If inputs and outputs use SDMX formats, why not the intermediate processes?

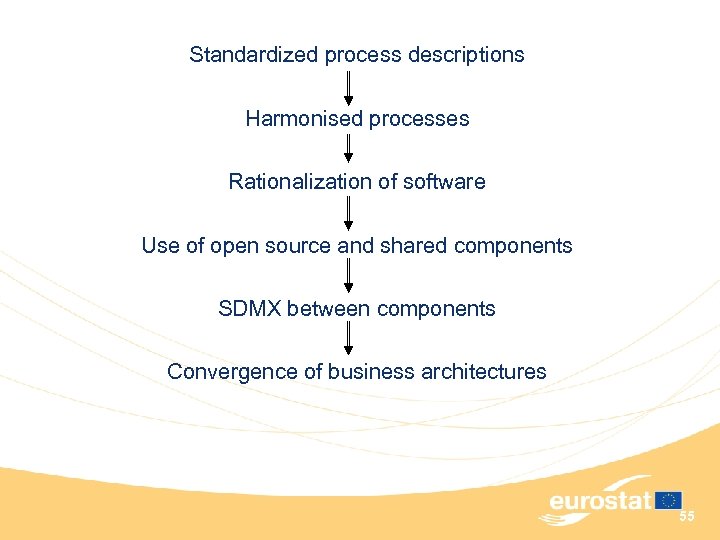

- Standardized process descriptions
- Harmonised processes
- Rationalization of software
- Use of open source and shared components
- SDMX between components
- Convergence of business architectures

Next steps
- The model is more and more commonly accepted
- Several statistical organisations are implementing this model or similar ones
- Gather implementation experiences and other comments as input for Part C of the “Common Metadata Framework”
- Present to the Bureau of the Conference of European Statisticians
- Role in SDMX?

Questions and Comments?
For more information see the METIS wiki: www1.unece.org/stat/platform/display/metis

SESSION 4, 11:30 – 12:00
TYPES (USED) OF QUALITY REPORTS AND STATISTICAL PROCESSES
Eva Elvers

Types of Quality Report and Statistical Process
- Purpose
  - To describe various aspects of a quality report
    - types of quality report
    - types of statistical process for which a report is prepared
  - To describe the structure of the ESQR

Content
- Types of quality report
  - Scope/level of quality report
  - User/producer orientation
  - Process/output orientation
- Types of statistical process
- Level of detail and role
- Quality reporting structure used in ESQR and EHQR

Types of Statistical Process – six in all
- Sample Survey
  - based on a (usually probabilistic) sampling procedure
  - involving (mostly) direct collection of data from respondents
- Census
  - survey where all frame units are covered
- Statistical Process Using Administrative Source(s)
  - process making use of data collected for administrative purposes, that is, purposes other than direct production of statistics
  - example: statistical tabulations produced from a database maintained by a Department of Education

Types of Statistical Process (cont.)
- Statistical Process Involving Multiple Data Sources
  - Survey with different questionnaire designs and sampling procedures for different segments of the population
  - Mixture of direct data collection and administrative data
- Price or Other Economic Index Process
  - Involving complex sample surveys, often with non-probabilistic designs
  - Targets are complex and model-based
- Statistical Compilation
  - Economic aggregates like National Accounts and Balance of Payments

Types of Statistical Process – how many?
- The Generic Statistical Business Process Model
  - ONE Statistical Process
  - Six types currently in the ESS
  - Similarities rather than differences

Types of Quality Report: by Scope and Level
- Scope of Quality Report
  - Institution
  - Broad statistical domain
  - Statistical process
  - Sub-domain within statistical process
  - Individual statistical indicator(s)
- Level of Quality Report
  - National level
  - European level

European Level Quality Report
- European level statistics may include
  - aggregations of national estimates for a European entity (EU-27, EEA, Euro area)
  - comparisons and contrasts of national estimates
- Possible objectives of an ESS quality report
  - quality of European aggregate statistics
  - quality of comparisons of national statistics
  - comparisons of qualities of estimates

Types of QR by Producer/User Orientation
- Quality report may be user-oriented, producer-oriented or both
  - May aim to communicate quality between producers
- Producer of statistics may also be user of other statistics
- Users may be sophisticated or not
  - advanced analysts/researchers, or public at large

Types of QR by Producer/User Orientation
- ESQR is producer-oriented, with a focus on what is needed to ensure quality of the ESS
- User-oriented quality reporting requires its own standard
- A producer-oriented report according to ESQR will include all information needed for user-oriented reports

Types of QR by Process/Output Orientation
- Quality report may focus on processes or outputs or both
- ESQR has output orientation even though aimed at producers

Types of QR by Level of Detail
- Quality report can vary from brief to detailed
  - Quality profile covers only a few specific attributes and indicators
  - DESAP checklist covers all aspects but not in detail
- ESQR is for the most comprehensive form of quality report commonly prepared
  - dealing with all important aspects of output and process quality, including
    - descriptions of processes and quality measurements
    - quantitative quality measures and
    - discussions of how to deal with deficiencies

Types of QR by Reporting Frequency
- Quality reports may be prepared for every cycle, annually, or periodically
  - the more frequent the report, the less detail
- ESQR is aimed at a comprehensive document produced periodically
  - say every five years, or after major changes
- In between, less detailed reports are envisaged
  - for example, quality and performance indicators for every survey occasion
  - checklist completed annually

Role of Quality Reporting
- Quality report is a means to an end, not an end in itself
- Should provide
  - factual account of quality
  - recommendations for quality improvements, and
  - justification for their implementation

ESQR: Reporting Structure
- Process quality leads to product quality
  - if quality report contains an explicit assessment of quality in terms of each process and each output quality component
  - there must be considerable duplication
- Reporting structure in ESQR
  - based on output quality components
  - supplemented by headings covering aspects of process quality not readily reported under any output components

ESQR: Reporting Structure – 11 parts
1. Introduction to statistical process and its outputs: overview required to provide context for report
2. Relevance
3. Accuracy
4. Timeliness and punctuality
5. Accessibility and clarity
6. Coherence and comparability

ESQR: Reporting Structure – 11 parts
7. Trade-offs between output quality components
   - Output quality components not mutually exclusive
   - Many cases where improvements with respect to one component may lead to deterioration with respect to another
   - Example: accuracy versus timeliness
   - Trade-offs that have to be made should be described

ESQR: Reporting Structure – 11 parts
8. Assessment of user needs and perceptions
   - Users are starting point for quality considerations
   - Information regarding their needs and perceptions
     - obtained for all output components at the same time
     - not just each one individually
     - need for a separate section
9. Cost, performance and respondent burden
   - important process quality components
   - not readily covered under output quality components
   - trade-offs versus output quality components

ESQR: Reporting Structure – 11 parts
10. Confidentiality, transparency and security
    - also important process quality components
    - not readily covered under output quality components
11. Conclusion
    - summary of principal quality problems
    - improvements proposed to deal with them

ESQR: Reporting Structure - Note
- Aim of quality report is for producer to describe all aspects of statistical process and its outputs that influence the usefulness of the outputs
- The key is to make use of the ESQR structure
  - but to be flexible in its application
  - to focus effort on the strengths and weaknesses likely to be of most importance
  - and on known issues and problem areas

SESSION 5, 12:00 – 12:45
QUALITY REPORTING STANDARDS (ESQR) AND GUIDELINES (EHQR)
Eva Elvers

Quality Reporting Standards and Guidelines
In accordance with ESQR reporting structure:
- Introduction
- Relevance
- Accuracy
- Coherence and Comparability
- Timeliness and Punctuality
- Accessibility and Clarity
- Trade-offs
- Performance, Cost and Respondent Burden
- Assessment of User Needs and Perceptions
- Confidentiality, Transparency and Security

Quality Reporting Standards and Guidelines
Important:
- What to include
- How to measure, estimate, or assess
- Evaluation, possibly later
- QPIs: Quality and Performance Indicators
  - EG on Quality Barometer; no details here

Introduction to the Statistical Process
- (To provide context for report)
- Historical background to process, objectives and outputs
- Domain (broad) to which outputs belong
- The quality report at hand; the boundary and references to related quality reports
- Outputs produced: overview in general terms
- References to other related reports

Relevance
- Relevance is the degree to which statistical outputs meet current and potential user needs. It depends on whether all the statistics that are needed are produced and the extent to which concepts used (definitions, classifications etc.) reflect user needs
- Relevance depends on the use, and relevance may depend on user
- So, not a single simple description, but a broad perspective

Relevance Reporting
- Content-oriented description of all outputs
  - key indicators, reference period(s)
- Definitions of statistical target concepts
  - population, units
  - relation to target definitions that would be ideal from a user perspective
  - discrepancies between definitions used and accepted ESS or international definitions
  - trade-off between relevance and accuracy
- Assessment of key outputs
  - Unmet user needs and reasons
  - Completeness relative to regulations

Relevance Reporting (cont.)
- For administrative statistics
  - Definitions fixed, or influenced, by primary purpose of administrative regulation
  - Possible problems
- For price indexes
  - (Discuss important issues)
- For statistical compilations
  - Comparison of target concepts with definitions and concepts in international standards

Accuracy
- The accuracy of statistical outputs in the general statistical sense is the degree of closeness of estimates to the true values
- Overall and error sources
- Sampling errors and non-sampling errors
- Process type?
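
One common way to formalise "closeness of estimates to the true values", not stated on the slide itself, is the mean squared error of an estimator. The symbols below are notation introduced here for illustration: the estimator is written as theta-hat and the true value as theta.

```latex
\mathrm{MSE}(\hat{\theta})
  = \mathrm{E}\big[(\hat{\theta} - \theta)^2\big]
  = \mathrm{Var}(\hat{\theta}) + \big(\mathrm{E}[\hat{\theta}] - \theta\big)^2
```

Random errors, sampling error among them, enter through the variance term, while systematic errors such as undercoverage or non-response bias enter through the squared bias term.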

Overall accuracy
- A presentation of the methodology sufficient for (i) judging whether it lives up to internationally accepted standards and best practice and (ii) enabling the reader to understand specific error assessments.
- Identification of the main sources of error for the main variables.
- A summary assessment of all sources of error with special focus on the key estimates.
- An assessment of the potential for bias (sign and order of magnitude) for each key indicator in quantitative or qualitative terms.

Sampling Errors: Always; Probability and Non-Probability Sampling
- Presentation, formulas, ...
- Presentation device: CV, confidence interval
- Sampling error cannot be estimated without reference to a model
  - model implying that sample is “effectively random” can sometimes be used
  - for example, for price indices
- For cut-off random sampling
  - error for sampled portion should be reported
  - for non-sampled portion discuss sampling bias
- Sampling biases may be significant
  - need to be assessed as well
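
As an illustration of the two presentation devices named above, the sketch below computes a coefficient of variation (CV) and a confidence interval for an estimated mean. It assumes simple random sampling without replacement and a normal approximation; the function name `srs_mean_precision`, the sample values and the population size are hypothetical and not taken from the course material.

```python
import math
from statistics import mean, variance


def srs_mean_precision(sample, population_size, z=1.96):
    """Estimate a mean and its precision from a simple random sample.

    Assumes sampling without replacement; z=1.96 gives an approximate
    95% interval under a normal approximation.
    """
    n = len(sample)
    estimate = mean(sample)
    # Finite population correction for sampling without replacement.
    fpc = 1 - n / population_size
    # variance() is the sample variance with divisor n - 1.
    std_error = math.sqrt(fpc * variance(sample) / n)
    cv = std_error / estimate
    ci = (estimate - z * std_error, estimate + z * std_error)
    return estimate, std_error, cv, ci


# Hypothetical sample of five units from a population of 100.
sample = [12.0, 15.0, 11.0, 14.0, 13.0]
estimate, std_error, cv, ci = srs_mean_precision(sample, population_size=100)
print(f"estimate={estimate:.2f} se={std_error:.3f} cv={cv:.1%}")
print(f"approx. 95% CI: ({ci[0]:.2f}, {ci[1]:.2f})")
```

For stratified, clustered or non-probability designs the variance formula differs, so this applies only to the simple design assumed here.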

/Sample Surveys/ Coverage Errors
- Information on survey frame
  - reference period, updating actions
  - references to other documents on frame quality
- Quantitative information on overcoverage and multiple listing
- Assessment (preferably quantitative) on
  - extent of undercoverage
  - associated bias risks
- Actions taken to reduce undercoverage and bias risks
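
The quantitative information on overcoverage and multiple listing could, for example, be summarised as two simple rates. This is a minimal sketch under stated assumptions: both rates are taken relative to the number of frame records, and the function `coverage_indicators`, the unit identifiers and the out-of-scope set are hypothetical. Other denominators are in use, so the definition chosen should be stated in the report.

```python
def coverage_indicators(frame_ids, out_of_scope_ids):
    """Return (overcoverage rate, multiple-listing rate) for a survey frame.

    Both rates use the number of frame records as denominator, which is an
    assumption of this sketch.
    """
    total = len(frame_ids)
    distinct = set(frame_ids)
    # Multiple listing: extra frame records that repeat an already listed unit.
    duplicates = total - len(distinct)
    # Overcoverage: distinct frame units found not to belong to the target population.
    overcovered = len(distinct & set(out_of_scope_ids))
    return overcovered / total, duplicates / total


# Hypothetical frame: unit "B" is listed twice, "C" and "E" are out of scope.
frame = ["A", "B", "B", "C", "D", "E"]
over_rate, dup_rate = coverage_indicators(frame, out_of_scope_ids={"C", "E"})
print(f"overcoverage={over_rate:.1%} multiple listing={dup_rate:.1%}")
```

Undercoverage cannot be computed from the frame alone, which is why the slide asks for an assessment rather than a count.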

/Sample Surveys/ Measurement Errors
- Why?
- Data editing identifies inconsistencies due to
  - errors in the original data
  - processing errors due to coding or data entry
- Inconsistencies removed by clerical correction and/or automatic imputation
- Edit rule failure rates are indicative of
  - quality of data collection and processing
  - not of quality of final data
- Attention paid to data editing should reflect significance of such errors
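
To make the notion of an edit rule failure rate concrete, the following sketch applies a few edit rules to raw records and reports the share of records failing each rule. The records, variable names and rules are all hypothetical and chosen only for illustration.

```python
# Hypothetical raw records as received, before any editing.
records = [
    {"turnover": 120, "employees": 4, "wages": 60},
    {"turnover": -5, "employees": 2, "wages": 10},
    {"turnover": 80, "employees": 0, "wages": 25},
    {"turnover": 50, "employees": 3, "wages": 70},
]

# Each edit rule returns True when a record passes the check.
edit_rules = {
    "turnover_non_negative": lambda r: r["turnover"] >= 0,
    "wages_imply_employees": lambda r: r["wages"] == 0 or r["employees"] > 0,
    "wages_not_above_turnover": lambda r: r["wages"] <= r["turnover"],
}


def edit_failure_rates(records, rules):
    """Share of records failing each edit rule (unweighted)."""
    n = len(records)
    return {
        name: sum(not rule(r) for r in records) / n
        for name, rule in rules.items()
    }


rates = edit_failure_rates(records, edit_rules)
for name, rate in rates.items():
    print(f"{name}: {rate:.0%}")
```

As the slide notes, these rates describe the data as collected and processed, not the data after correction or imputation.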

Measurement Errors (cont.)
Methods of error evaluation
- Comparisons with other data at unit level
  - requires common unit identification scheme
  - accounting for conceptual or timing differences
- Re-interview with superior method
  - preferably for random sample of units

Measurement Errors (cont.)
Methods of error evaluation (continued)
- Replication
  - Differences between replicates indicate stability of measurement process
  - Analyses often assume replication errors are independent, which is rarely fully justified
- Effects of data editing
  - Comparisons of original and edited data give a minimum estimate of error levels

/Sample Surveys/ Non-Response Errors
- Non-response error
  - difference between statistics computed from collected data and those that would be computed if there were no missing values
- Types of non-response:
  - unit non-response: no data are collected from the unit
  - item non-response: some missing values in data collected from a unit

/Sample Surveys/ Non-Response Errors
Impact of nonresponse
• Introduction of bias
  – nonrespondents not similar to respondents for all variables in all strata
  – whereas standard methods for handling nonresponse assume they are
• Increase in sampling error
  – as available number of responses is reduced
• Many definitions of response rates
  – slightly different numerators and denominators
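
Both effects can be made concrete with a small Python sketch. It assumes an unweighted mean, simple random sampling without a finite population correction, and invented values; in practice the nonrespondent values are of course unknown. The bias of the respondent mean equals the nonresponse rate multiplied by the gap between respondents and nonrespondents.

```python
from statistics import mean

# Hypothetical values; in practice the nonrespondent values are unknown.
respondents = [10.0, 12.0, 14.0, 16.0]
nonrespondents = [20.0, 24.0]

n_r, n_nr = len(respondents), len(nonrespondents)
n = n_r + n_nr

mean_all = mean(respondents + nonrespondents)
mean_r = mean(respondents)
mean_nr = mean(nonrespondents)

# Bias of the respondent mean relative to the full-sample mean.
bias = mean_r - mean_all
# The same bias written as nonresponse rate times the respondent/nonrespondent gap.
bias_decomposed = (n_nr / n) * (mean_r - mean_nr)

# Under the simplifying assumptions above, the standard error grows by this
# factor when only n_r of the n sampled units respond.
se_inflation = (n / n_r) ** 0.5

print(bias, bias_decomposed, round(se_inflation, 3))
```

The decomposition shows why a low nonresponse rate alone does not guarantee low bias: the gap between respondents and nonrespondents matters just as much.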

Non-Response Errors
Reporting
• Definitions of response rates
• Unit non-response rates for whole survey and important sub-domains
• Item non-response rates for key variables
• Breakdown of non-respondents by cause
• Qualitative statement on risk of bias
• Measures to reduce non-response
• Treatment of non-response in estimation
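
A minimal Python sketch of the quantitative items in this list follows. It uses one simple, unweighted definition of each rate and treats every sampled unit as eligible; since definitions of response rates vary, the one actually used should always be stated. All records, outcome labels and variable names are invented.

```python
from collections import Counter

# Hypothetical sample units: contact outcome plus reported items (None = missing).
sample = [
    {"id": 1, "outcome": "response", "items": {"turnover": 120.0, "employees": 4}},
    {"id": 2, "outcome": "response", "items": {"turnover": None, "employees": 9}},
    {"id": 3, "outcome": "refusal", "items": {}},
    {"id": 4, "outcome": "non-contact", "items": {}},
    {"id": 5, "outcome": "response", "items": {"turnover": 75.0, "employees": None}},
]
key_variables = ["turnover", "employees"]

respondents = [u for u in sample if u["outcome"] == "response"]

# Unweighted unit non-response rate: non-responding units / all sampled units.
unit_nonresponse_rate = 1 - len(respondents) / len(sample)

# Item non-response rate per key variable, among responding units only.
item_nonresponse_rate = {
    var: sum(u["items"].get(var) is None for u in respondents) / len(respondents)
    for var in key_variables
}

# Breakdown of non-respondents by cause.
causes = Counter(u["outcome"] for u in sample if u["outcome"] != "response")

print(unit_nonresponse_rate, item_nonresponse_rate, dict(causes))
```

The same calculation can be repeated within each important sub-domain by filtering the sample first.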

/Sample Surveys/ Processing Errors
• Identification of main issues
• Include manual coding of response data that are in free format
  – Quality control procedures
• Analysis of processing errors (where available), otherwise qualitative assessment

Accuracy: Censuses
• Report non-sampling errors as for sample surveys
• Include
  – Assessments of measurement, classification errors
  – Assessment of processing errors, especially where manual coding of data in free text format is used
• For censuses based on extensive field work:
  – Assessment of undercoverage and overcoverage (undercount and over- or double count)
  – Description of methods used to correct

Accuracy: Statistics from Administrative Sources
• Report non-sampling errors as for sample surveys
• Include assessment of over- and under-coverage due to lags in register updating
• Include assessment of errors in classification variables
• For statistics based on event reporting include assessment of rate of unreported events

Accuracy: Price and Other Economic Indices
• Information on all sampling dimensions
  – for weights, products, outlets/companies etc.
• Attempts at assessing sampling error
  – in all or some dimensions
• Quality adjustment methods
  – including replacement and re-sampling rules
  – for at least major product groups
• Assessment of other types of error
  – where they could have a significant influence

Accuracy: Statistical Compilations
• Information and indicators relating to accuracy required by IMF Data Quality Assessment Framework (DQAF) or equivalent
• Analysis of revisions between successively published estimates
• For National Accounts
  – Analysis of causes for statistical discrepancy
  – Assessment of non-observed economy
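
An analysis of revisions between successively published estimates might start from something like the Python sketch below. The mean revision and mean absolute revision are common summary measures chosen here for illustration; the slide itself does not prescribe particular indicators, and the figures are invented.

```python
# Hypothetical growth-rate estimates (percent) for four reference periods.
first_published = [1.2, 0.8, -0.3, 1.5]
latest_published = [1.4, 0.7, 0.1, 1.5]

# Revision = later estimate minus first estimate, per reference period.
revisions = [later - first for first, later in zip(first_published, latest_published)]

# Mean revision: a persistent sign suggests bias in the early estimates.
mean_revision = sum(revisions) / len(revisions)
# Mean absolute revision: typical size of a revision regardless of sign.
mean_absolute_revision = sum(abs(r) for r in revisions) / len(revisions)

print(round(mean_revision, 3), round(mean_absolute_revision, 3))
```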

Other Issues Concerning Accuracy
• Model Assumptions and Associated Errors
• Seasonal Adjustment
• Imputation
• Mistakes

Special Issues Concerning Accuracy: Revisions
• Planned revisions should follow standard, well-established and transparent procedures
  – pre-announcements are desirable
  – reasons for revision and nature of the revision should be made clear
  – for example, new source data available, new methods, etc.

Coherence and Comparability: Short Definitions
• Coherence: capacity of outputs to be combined and reliably used in combination
• Comparability: special case of coherence for outputs involving the same data items

Coherence and Comparability: Explanation
• The coherence of two or more statistical outputs refers to the degree to which the statistical processes by which they were generated used the same concepts (classifications, definitions, and target populations) and harmonised methods.
• Coherent statistical outputs have the potential to be validly combined and used jointly.

Coherence and Comparability: Explanation (cont.)
• Examples of joint use are where the statistical outputs refer to the same population, reference period and region but comprise different sets of data items (say, employment data and production data) or where they comprise the same data items (say, employment data) but for different reference periods, regions, or other domains.
• Comparability is a special case of coherence and refers to the latter example above where statistical outputs refer to the same data items and the aim of combining them is to make comparisons over time, or across regions, or across other domains.

Coherence and Comparability: Notes
• Distinction between coherence and accuracy
  – Coherence measured in terms of design metadata
  – Accuracy depends upon operational metadata
  – Differences between preliminary, revised and final estimates are an accuracy issue
• Reasons for lack of coherence/comparability
  – Concepts: target population (units and coverage), reference period, data item definitions, classifications
  – Methods: frame construction, sources of data and sample design, data collection, capture, editing, imputation, estimation

Coherence and Comparability
Reporting: General
• Descriptions of conceptual and methodological metadata elements that could affect coherence/comparability
• Assessment (preferably quantitative) of possible effect of each reported difference on outputs
• Differences between statistical process and applicable European regulations/standards and/or international standards
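
One way to locate the metadata elements worth describing is to compare the design metadata of two processes element by element. The Python sketch below does this for two invented metadata records; the keys and values are hypothetical, and a real comparison would cover methods as well as concepts.

```python
# Hypothetical design metadata for two statistical processes.
survey_a = {
    "target_population": "enterprises with 10+ employees",
    "reference_period": "calendar year",
    "classification": "NACE Rev. 2",
    "employment_definition": "head count",
}
survey_b = {
    "target_population": "all enterprises",
    "reference_period": "calendar year",
    "classification": "NACE Rev. 2",
    "employment_definition": "full-time equivalents",
}


def metadata_differences(a, b):
    """Return the metadata elements on which two processes differ."""
    return {
        key: (a.get(key), b.get(key))
        for key in sorted(a.keys() | b.keys())
        if a.get(key) != b.get(key)
    }


differences = metadata_differences(survey_a, survey_b)
for key, (value_a, value_b) in differences.items():
    print(f"{key}: {value_a!r} vs {value_b!r}")
```

Each difference found this way still needs an assessment, preferably quantitative, of its likely effect on the outputs.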

Timeliness and Punctuality
Definition/Description
• Timeliness: length of time between the event or phenomenon and the availability of the statistics.
• Punctuality: time lag between the release date of data and the scheduled date for release.

Reporting
• Timeliness and punctuality profile for each version (preliminary, revised, final) whenever statistics are released in multiple versions.
• Reasons for possible long production times and non-punctual releases and description of the efforts made to improve situation.
• Timeliness: for annual or more frequent releases the average production time for each release of data; maximum production time to provide worst recorded case.
• Punctuality: the percentage of releases delivered on time (based on scheduled release dates).
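
The two quantitative indicators can be computed along the following lines. This Python sketch assumes that production time is counted in days from the end of the reference period to the actual release, and that a release on or before the scheduled date counts as on time; the dates are invented.

```python
from datetime import date

# Hypothetical releases: end of reference period, scheduled and actual release dates.
releases = [
    {"period_end": date(2009, 3, 31), "scheduled": date(2009, 5, 15), "released": date(2009, 5, 15)},
    {"period_end": date(2009, 6, 30), "scheduled": date(2009, 8, 14), "released": date(2009, 8, 20)},
    {"period_end": date(2009, 9, 30), "scheduled": date(2009, 11, 13), "released": date(2009, 11, 12)},
    {"period_end": date(2009, 12, 31), "scheduled": date(2010, 2, 15), "released": date(2010, 2, 15)},
]

# Timeliness: days from the end of the reference period to release.
production_days = [(r["released"] - r["period_end"]).days for r in releases]
average_production_days = sum(production_days) / len(production_days)
maximum_production_days = max(production_days)

# Punctuality: share of releases delivered on or before the scheduled date.
on_time = [r["released"] <= r["scheduled"] for r in releases]
percent_on_time = 100 * sum(on_time) / len(on_time)

print(average_production_days, maximum_production_days, percent_on_time)
```

Where statistics are released in several versions, the same calculation would be run separately for the preliminary, revised and final releases.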

Accessibility and Clarity
Definition/Description
• Accessibility: measure of ease with which users can obtain the data (where to go, how to order, delivery time, pricing policy, marketing conditions, availability of micro data etc.).
• Clarity: measure of the ease with which users can understand the data (depends upon the quality of metadata).
Summary: both refer to the simplicity and ease with which users can access statistics with appropriate supporting information.

Reporting
• Description of the conditions of access to the data: media, support, pricing policies, possible restrictions, etc.
• Summary description of the metadata accompanying the statistics (documentation, explanation, quality limitations, etc.)
• Description of how well the needs of both less sophisticated and advanced users have been addressed.
• Summary of user feedback on accessibility and clarity.
• Recent and planned improvements to accessibility and clarity.

Trade-offs between Output Quality Components
Definition/Description
• Quality components are not mutually exclusive; there are relationships between the factors that contribute to them.
• In some cases, factors that lead to improvements with respect to one component result in deterioration with respect to another.
• Decisions on trade-offs have to be made in such circumstances.

Types (most significant ones):
• Trade-off between Relevance and Accuracy
• Trade-off between Relevance and Timeliness
• Trade-off between Relevance and Coherence
• Trade-off between Relevance and Comparability over Time
• Trade-off between Comparability over Region and Comparability across Time
• Trade-off between Accuracy and Timeliness