
- Number of slides: 24

PULSAR: Perception, Understanding, Learning Systems for Activity Recognition

- Theme: Cognitive Systems (CogC: Multimedia data: interpretation and man-machine interaction)
- Multidisciplinary team: computer vision, artificial intelligence, software engineering

September 2007


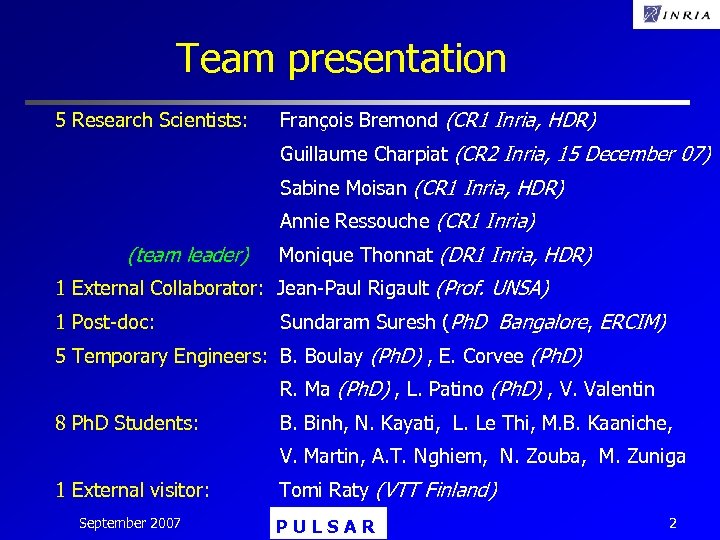

Team presentation

- 5 research scientists: François Bremond (CR1 Inria, HDR), Guillaume Charpiat (CR2 Inria, 15 December 2007), Sabine Moisan (CR1 Inria, HDR), Annie Ressouche (CR1 Inria), Monique Thonnat (DR1 Inria, HDR, team leader)
- 1 external collaborator: Jean-Paul Rigault (Prof. UNSA)
- 1 post-doc: Sundaram Suresh (Ph.D. Bangalore, ERCIM)
- 5 temporary engineers: B. Boulay (Ph.D.), E. Corvee (Ph.D.), R. Ma (Ph.D.), L. Patino (Ph.D.), V. Valentin
- 8 Ph.D. students: B. Binh, N. Kayati, L. Le Thi, M. B. Kaaniche, V. Martin, A. T. Nghiem, N. Zouba, M. Zuniga
- 1 external visitor: Tomi Raty (VTT Finland)


PULSAR objective: Cognitive Systems for Activity Recognition

Activity recognition: real-time semantic interpretation of dynamic scenes.

Dynamic scenes:

- Several interacting human beings, animals or vehicles
- Long-term activities (hours or days)
- Large-scale activities in the physical world (located in a large space)
- Observed by a network of video cameras and sensors

Real-time semantic interpretation:

- Real-time analysis of sensor output
- Semantic interpretation with a priori knowledge of interesting behaviors


PULSAR scientific objectives

Objective: Cognitive Systems for Activity Recognition

Cognitive systems: perception, understanding and learning systems

- Physical object recognition
- Activity understanding and learning
- System design and evaluation

Two complementary research directions:

- Scene Understanding for Activity Recognition
- Activity Recognition Systems


PULSAR target applications

Two application domains:

- Safety/security (e.g. airport monitoring)
- Healthcare (e.g. assistance to the elderly)


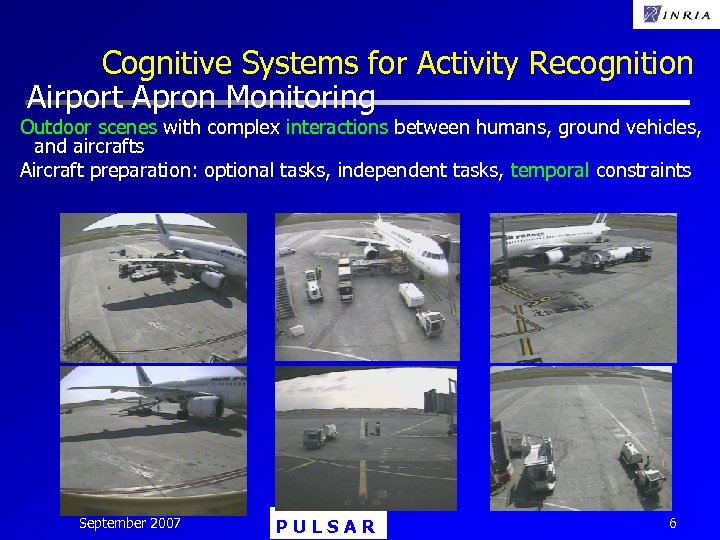

Cognitive Systems for Activity Recognition: Airport Apron Monitoring

- Outdoor scenes with complex interactions between humans, ground vehicles, and aircraft
- Aircraft preparation: optional tasks, independent tasks, temporal constraints
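
Temporal constraints between apron tasks (for example, that a task must take place while the aircraft is parked) are commonly expressed with Allen's interval relations. The sketch below is illustrative only and is not the team's recognition algorithm: it assumes each recognized task has been reduced to a hypothetical `Interval(start, end)` in seconds with `start < end`, and the task names in the usage line are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Hypothetical recognized task: start/end times in seconds, with start < end."""
    start: float
    end: float


def allen_relation(a: Interval, b: Interval) -> str:
    """Return the name of the Allen relation that holds from interval a to interval b."""
    if a.end < b.start:
        return "before"
    if a.end == b.start:
        return "meets"
    if b.end < a.start:
        return "after"
    if b.end == a.start:
        return "met-by"
    # From here on, the two intervals share a stretch of time of positive length.
    if a.start == b.start and a.end == b.end:
        return "equals"
    if a.start == b.start:
        return "starts" if a.end < b.end else "started-by"
    if a.end == b.end:
        return "finishes" if a.start > b.start else "finished-by"
    if b.start < a.start and a.end < b.end:
        return "during"
    if a.start < b.start and b.end < a.end:
        return "contains"
    return "overlaps" if a.start < b.start else "overlapped-by"


# Hypothetical constraint check: refuelling must happen while the aircraft is parked.
parked = Interval(0, 3600)
refuelling = Interval(600, 1500)
print(allen_relation(refuelling, parked))  # during
```

A constraint in a task model then becomes a test on the returned relation name.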


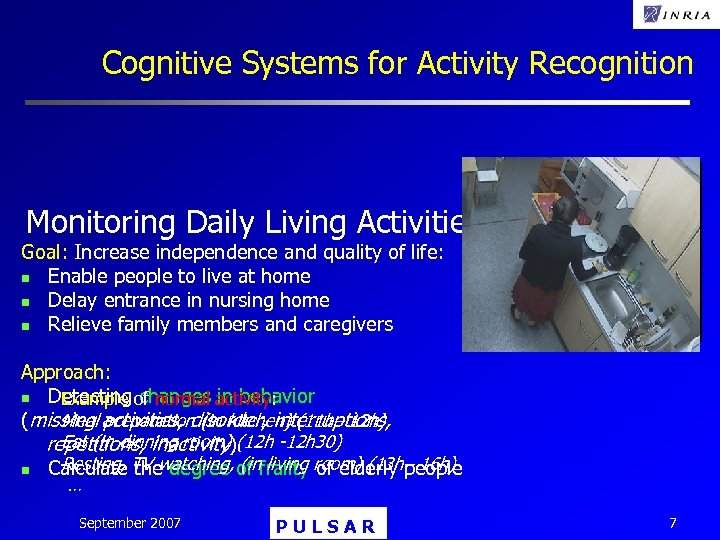

Cognitive Systems for Activity Recognition: Monitoring Daily Living Activities of the Elderly

Goal: increase independence and quality of life:

- Enable people to live at home
- Delay entry into a nursing home
- Relieve family members and caregivers

Approach:

- Detect changes in behavior (missing activities, disorder, interruptions, repetitions, inactivity)
- Calculate the degree of frailty of elderly people

Example of normal activity:

- Meal preparation (in kitchen) (11h–12h)
- Eat (in dining room) (12h–12h30)
- Resting, TV watching (in living room) (13h–16h)
- …
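
One simple way to flag some of the behavior changes listed above is to compare an observed activity log with a model of the person's normal day. The sketch below hard-codes the slide's example schedule in a hypothetical `NORMAL_DAY` table (decimal hours) and only reports missing, out-of-window and repeated activities; the activity names, the overlap rule and the output format are assumptions, and disorder, interruptions, inactivity and frailty estimation are not covered.

```python
from collections import Counter

# Hypothetical model of a normal day, from the slide's example (decimal hours, 24h clock).
NORMAL_DAY = {
    "meal_preparation": (11.0, 12.0),  # in kitchen
    "eat": (12.0, 12.5),               # in dining room
    "resting": (13.0, 16.0),           # resting, TV watching in living room
}


def behaviour_changes(observed):
    """Compare (activity, start_hour, end_hour) tuples with NORMAL_DAY.

    missing:       expected activities never observed
    out_of_window: observed, but no occurrence overlaps the expected time window
    repeated:      activities observed more than once
    """
    counts = Counter(name for name, _, _ in observed)
    on_time = set()
    for name, start, end in observed:
        window = NORMAL_DAY.get(name)
        # An occurrence is "on time" if it overlaps the expected window at all.
        if window is not None and start < window[1] and end > window[0]:
            on_time.add(name)
    return {
        "missing": sorted(n for n in NORMAL_DAY if counts[n] == 0),
        "out_of_window": sorted(n for n in NORMAL_DAY if counts[n] > 0 and n not in on_time),
        "repeated": sorted(n for n, c in counts.items() if c > 1),
    }


log = [("meal_preparation", 11.2, 11.8), ("eat", 12.0, 12.4), ("eat", 12.6, 12.9)]
print(behaviour_changes(log))
# {'missing': ['resting'], 'out_of_window': [], 'repeated': ['eat']}
```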


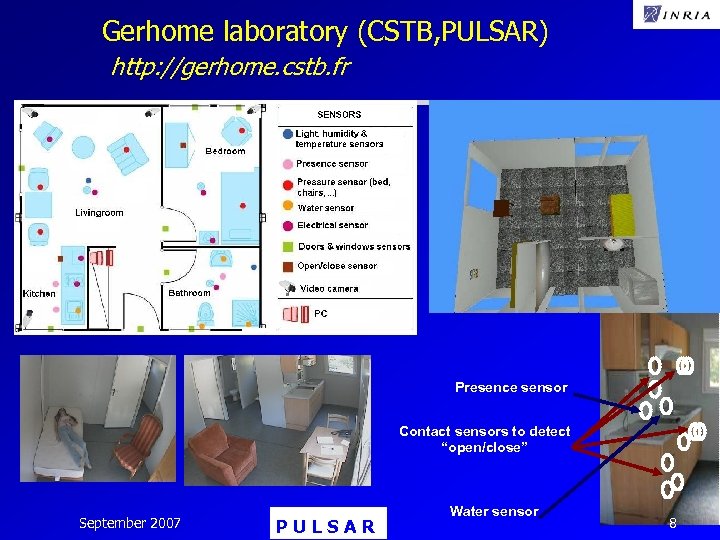

Gerhome laboratory (CSTB, PULSAR): http://gerhome.cstb.fr

Sensors shown: presence sensor, contact sensors to detect “open/close”, water sensor.


From ORION to PULSAR

Orion contributions:

- 4D semantic approach to video understanding
- Program supervision approach to software reuse
- VSIP platform for real-time video understanding (Keeneo start-up)
- LAMA platform for knowledge-based system design


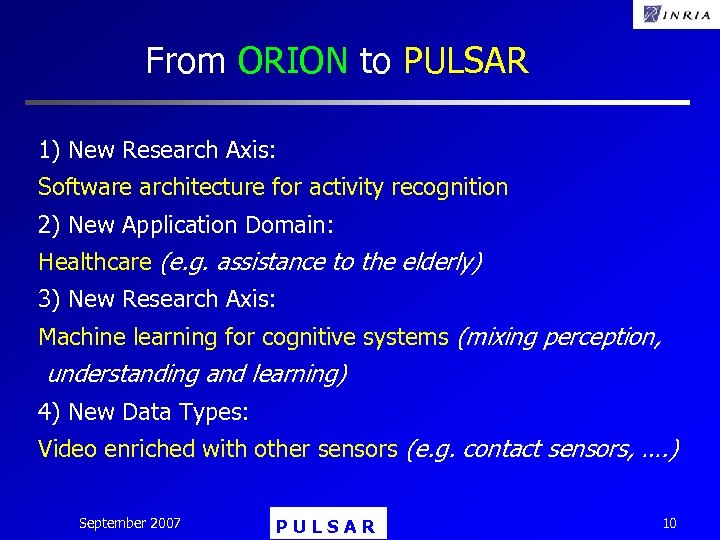

From ORION to PULSAR

1) New research axis: software architecture for activity recognition
2) New application domain: healthcare (e.g. assistance to the elderly)
3) New research axis: machine learning for cognitive systems (mixing perception, understanding and learning)
4) New data types: video enriched with other sensors (e.g. contact sensors, …)


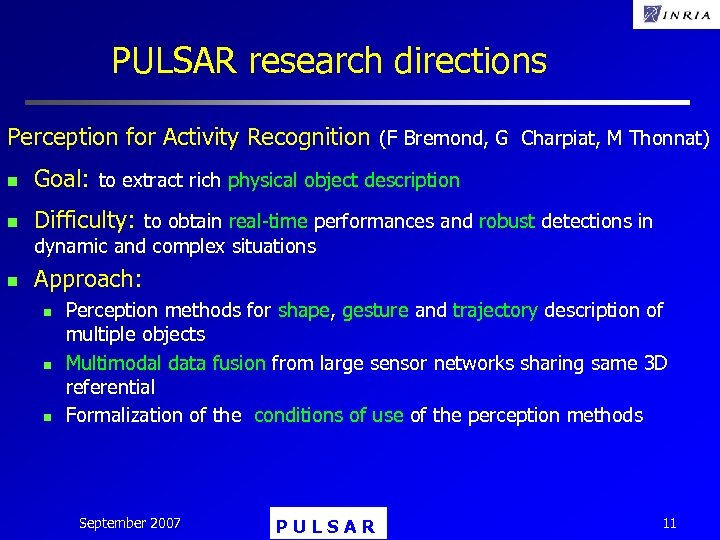

PULSAR research directions: Perception for Activity Recognition (F. Bremond, G. Charpiat, M. Thonnat)

- Goal: to extract rich physical object descriptions
- Difficulty: to obtain real-time performance and robust detections in dynamic and complex situations
- Approach:
  - Perception methods for shape, gesture and trajectory description of multiple objects
  - Multimodal data fusion from large sensor networks sharing the same 3D reference frame
  - Formalization of the conditions of use of the perception methods
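
Fusing data from several sensors presupposes that every detection can be expressed in the shared 3D reference frame. A minimal sketch, assuming each sensor has already been calibrated with a known rotation `R` and translation `t` (the values below are hypothetical), maps sensor-frame points to world coordinates with p_world = R·p + t and then fuses the estimates of one object by plain averaging. Real multimodal fusion would also weight each sensor by its uncertainty and solve data association, which this sketch does not attempt.

```python
import math


def rot_z(theta):
    """3x3 rotation matrix about the vertical (z) axis, theta in radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def to_world(R, t, p):
    """Map point p from a sensor frame to the shared world frame: R @ p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def fuse(points):
    """Naive fusion: average the world-frame positions reported for one object."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


# Hypothetical calibration: camera A is aligned with the world frame,
# camera B is rotated 90 degrees about z and placed 3 m along x.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
seen_by_a = to_world(identity, (0.0, 0.0, 0.0), (2.0, 1.0, 0.0))
seen_by_b = to_world(rot_z(math.pi / 2), (3.0, 0.0, 0.0), (1.0, 1.0, 0.0))
print(fuse([seen_by_a, seen_by_b]))  # approximately (2.0, 1.0, 0.0)
```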


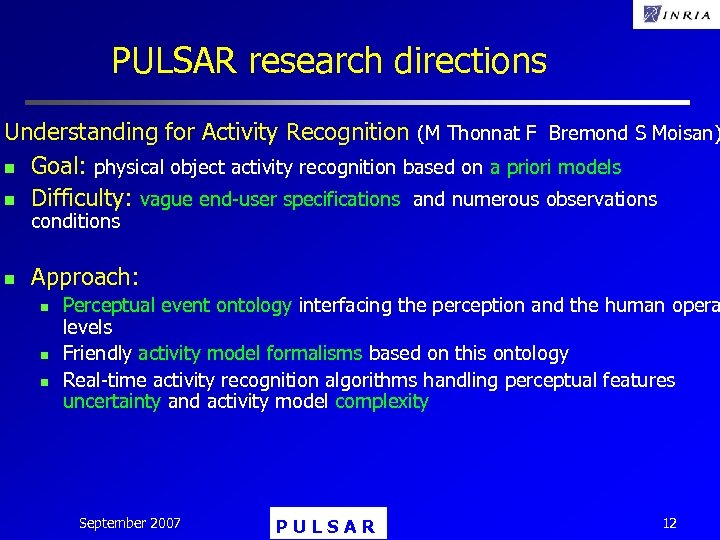

PULSAR research directions: Understanding for Activity Recognition (M. Thonnat, F. Bremond, S. Moisan)

- Goal: physical object activity recognition based on a priori models
- Difficulty: vague end-user specifications and numerous observation conditions
- Approach:
  - Perceptual event ontology interfacing the perception and the human operator levels
  - Friendly activity model formalisms based on this ontology
  - Real-time activity recognition algorithms handling perceptual feature uncertainty and activity model complexity


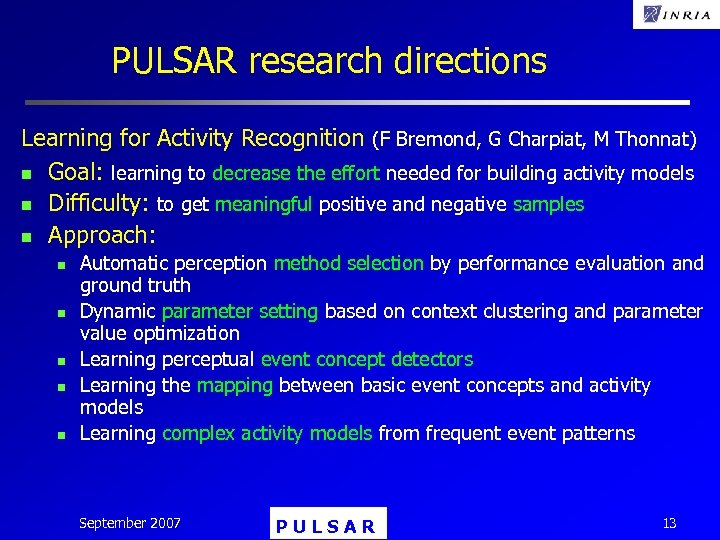

PULSAR research directions: Learning for Activity Recognition (F. Bremond, G. Charpiat, M. Thonnat)

- Goal: learning to decrease the effort needed for building activity models
- Difficulty: to get meaningful positive and negative samples
- Approach:
  - Automatic perception method selection by performance evaluation and ground truth
  - Dynamic parameter setting based on context clustering and parameter value optimization
  - Learning perceptual event concept detectors
  - Learning the mapping between basic event concepts and activity models
  - Learning complex activity models from frequent event patterns
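
The first learning item, automatic selection of a perception method by evaluation against ground truth, can be sketched as scoring each candidate's detections and keeping the best one. Here detections and ground truth are hypothetical dictionaries mapping a frame index to bounding boxes `(x1, y1, x2, y2)`; the greedy matching at IoU ≥ 0.5 and the F1 criterion are common choices assumed for illustration, not the team's evaluation protocol.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def f1_score(detections, ground_truth, threshold=0.5):
    """F1 of detections vs ground truth; both map frame index -> list of boxes.

    Each detection is greedily matched to the unmatched ground-truth box it
    overlaps most; a match counts only if its IoU reaches the threshold.
    """
    tp = fp = fn = 0
    for frame in set(detections) | set(ground_truth):
        unmatched = list(ground_truth.get(frame, []))
        for det in detections.get(frame, []):
            best = max(unmatched, key=lambda g: iou(det, g), default=None)
            if best is not None and iou(det, best) >= threshold:
                tp += 1
                unmatched.remove(best)
            else:
                fp += 1
        fn += len(unmatched)  # ground-truth boxes nobody detected
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def select_method(outputs, ground_truth):
    """Name of the candidate perception method with the highest F1 score."""
    return max(outputs, key=lambda name: f1_score(outputs[name], ground_truth))


ground_truth = {0: [(0, 0, 10, 10)], 1: [(5, 5, 15, 15)]}
outputs = {  # hypothetical outputs of two candidate detectors
    "method_a": {0: [(0, 0, 10, 10)], 1: [(5, 5, 15, 15)]},
    "method_b": {0: [(20, 20, 30, 30)], 1: [(5, 5, 15, 15)]},
}
print(select_method(outputs, ground_truth))  # method_a
```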


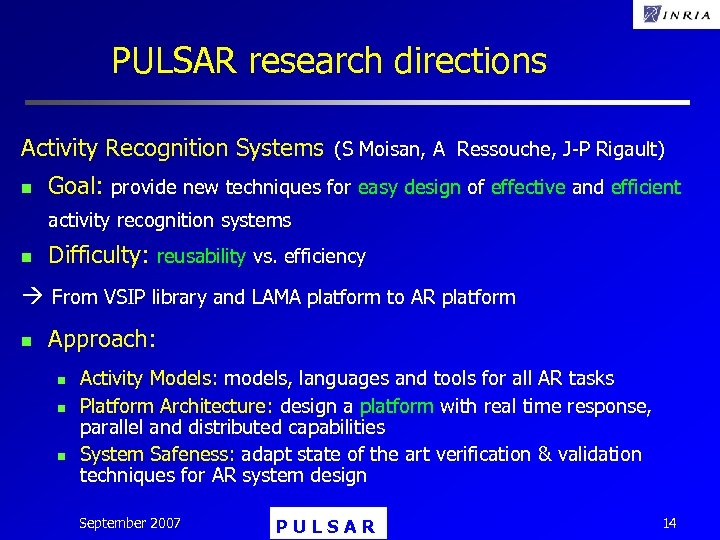

PULSAR research directions: Activity Recognition Systems (S. Moisan, A. Ressouche, J-P. Rigault)

- Goal: provide new techniques for the easy design of effective and efficient activity recognition systems
- Difficulty: reusability vs. efficiency
- From the VSIP library and the LAMA platform to an activity recognition (AR) platform
- Approach:
  - Activity models: models, languages and tools for all AR tasks
  - Platform architecture: design a platform with real-time response, parallel and distributed capabilities
  - System safeness: adapt state-of-the-art verification & validation techniques for AR system design


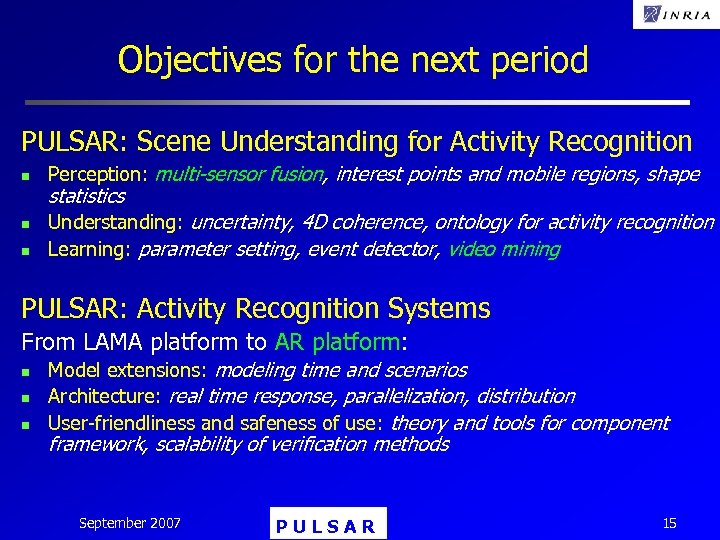

Objectives for the next period

PULSAR: Scene Understanding for Activity Recognition

- Perception: multi-sensor fusion, interest points and mobile regions, shape statistics
- Understanding: uncertainty, 4D coherence, ontology for activity recognition
- Learning: parameter setting, event detector, video mining

PULSAR: Activity Recognition Systems, from the LAMA platform to the AR platform

- Model extensions: modeling time and scenarios
- Architecture: real-time response, parallelization, distribution
- User-friendliness and safeness of use: theory and tools for component framework, scalability of verification methods


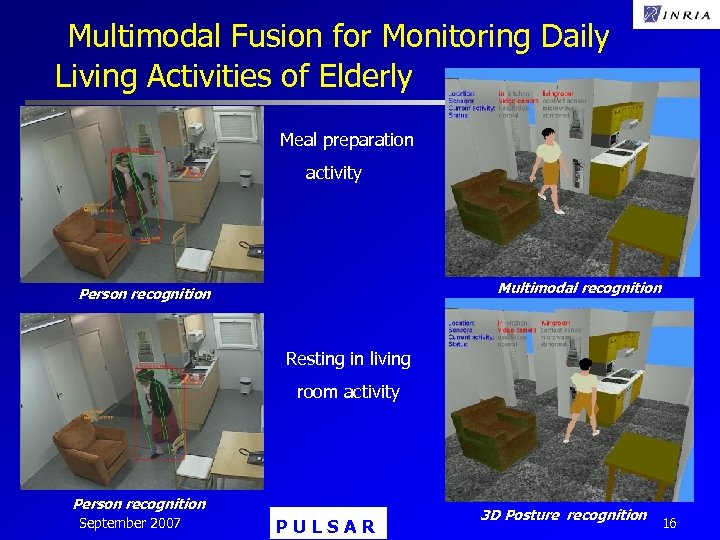

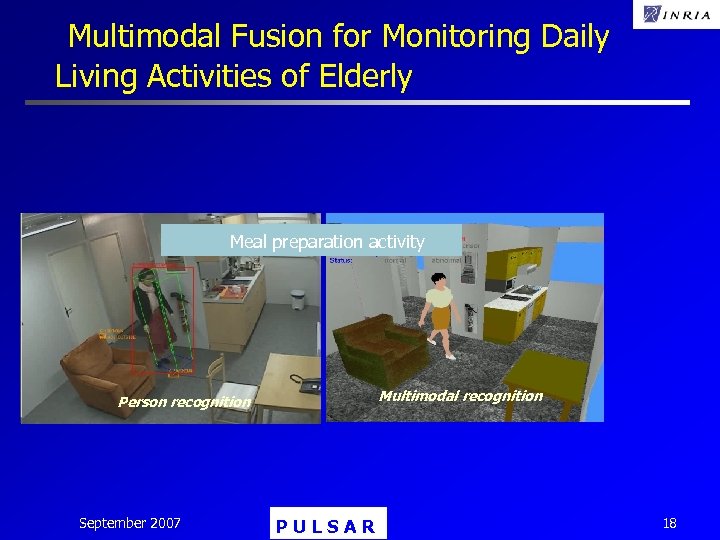

Multimodal Fusion for Monitoring Daily Living Activities of the Elderly

- Meal preparation activity: multimodal recognition, person recognition
- Resting in living room activity: person recognition, 3D posture recognition


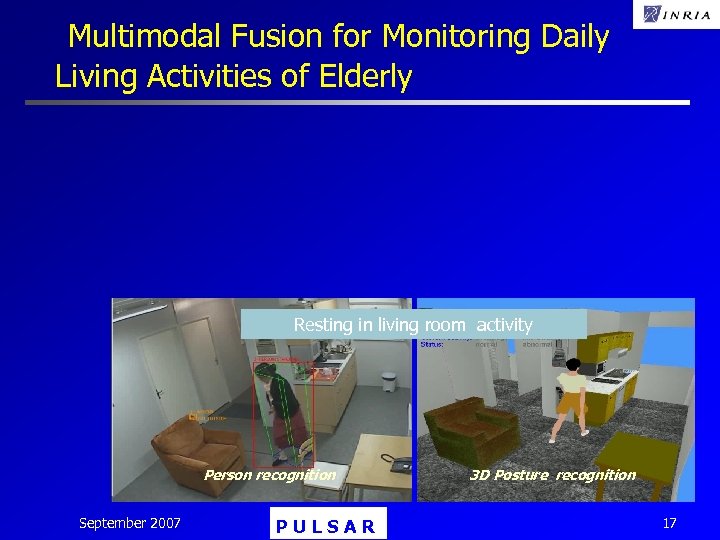

Multimodal Fusion for Monitoring Daily Living Activities of the Elderly

- Resting in living room activity: person recognition, 3D posture recognition


Multimodal Fusion for Monitoring Daily Living Activities of the Elderly

- Meal preparation activity: multimodal recognition, person recognition


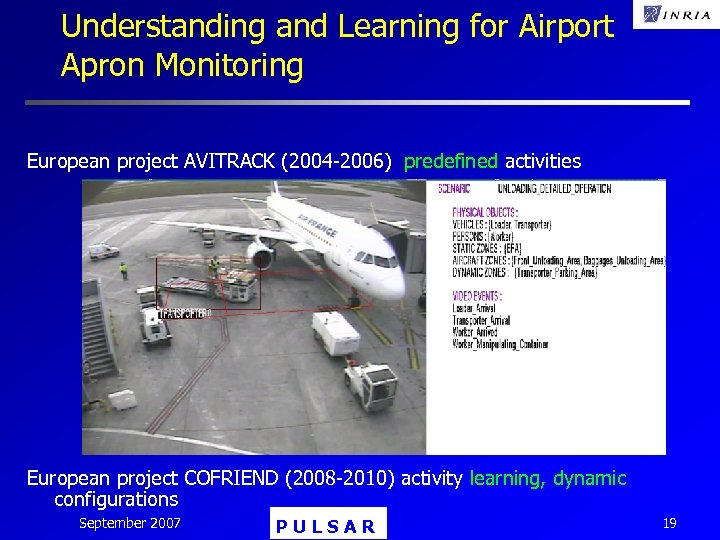

Understanding and Learning for Airport Apron Monitoring

- European project AVITRACK (2004–2006): predefined activities
- European project COFRIEND (2008–2010): activity learning, dynamic configurations


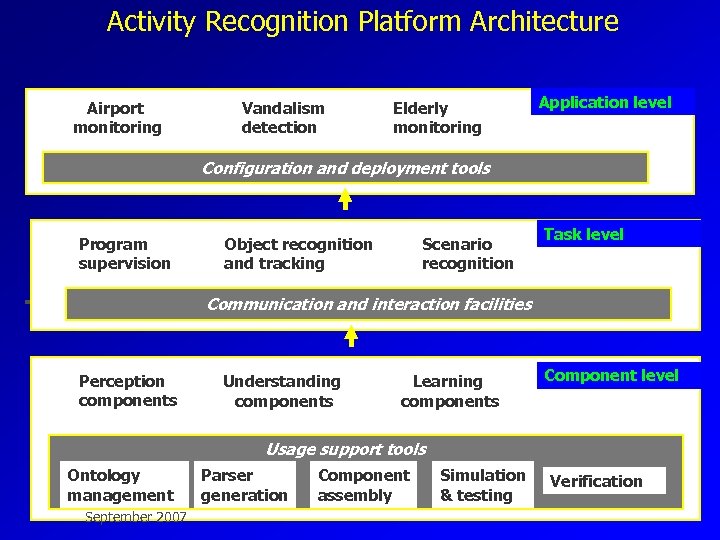

Activity Recognition Platform Architecture

- Application level: airport monitoring, vandalism detection, elderly monitoring
- Configuration and deployment tools
- Task level: program supervision, object recognition and tracking, scenario recognition
- Communication and interaction facilities
- Component level: perception components, understanding components, learning components
- Usage support tools: ontology management, parser generation, component assembly, simulation & testing, verification


PULSAR Project-team

Any questions?


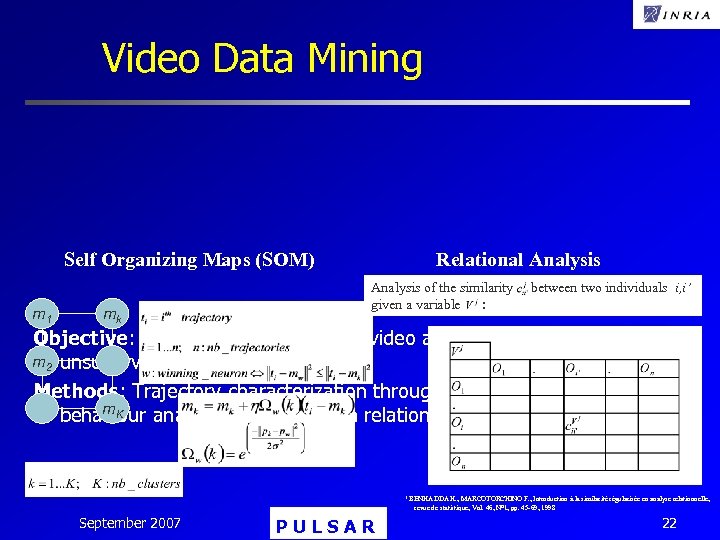

Video Data Mining

- Objective: knowledge extraction for video activity monitoring with unsupervised learning techniques.
- Methods: trajectory characterization through clustering with Self-Organizing Maps (SOM), and behaviour analysis of objects with relational analysis [1].

Figure: SOM with prototype vectors m1, m2, …, mK; relational analysis of the similarity between two individuals i, i′ given a variable.

[1] H. Benhadda, F. Marcotorchino, "Introduction à la similarité régularisée en analyse relationnelle", Revue de Statistique, Vol. 46, N° 1, pp. 45–69, 1998.
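
The trajectory-clustering step relies on a Self-Organizing Map: K prototype vectors m1, …, mK arranged on a grid, trained so that similar trajectories activate the same or neighbouring prototypes. The sketch below is a generic 1-D SOM in plain Python, not the team's implementation; it assumes each trajectory has already been summarized by a fixed-length feature vector (the data below is hypothetical), and the linear learning-rate decay, the Gaussian neighbourhood and the initialization are illustrative choices. The relational-analysis step is not covered.

```python
import math
import random


def best_matching_unit(protos, x):
    """Index of the prototype closest to x (squared Euclidean distance)."""
    return min(range(len(protos)),
               key=lambda j: sum((p - v) ** 2 for p, v in zip(protos[j], x)))


def train_som(data, k, epochs=50, lr0=0.5, seed=0):
    """Train a 1-D Self-Organizing Map with k prototypes m_1..m_k (requires k <= len(data))."""
    rng = random.Random(seed)
    # Initialize prototypes from samples spread over the input order.
    protos = [list(data[i * len(data) // k]) for i in range(k)]
    sigma0 = max(k / 2.0, 1.0)
    total, step = epochs * len(data), 0
    for _ in range(epochs):
        order = list(data)
        rng.shuffle(order)
        for x in order:
            frac = step / total
            lr = lr0 * (1.0 - frac)                  # learning rate decays linearly
            sigma = max(sigma0 * (1.0 - frac), 0.5)  # neighbourhood shrinks over time
            bmu = best_matching_unit(protos, x)
            for j, m in enumerate(protos):
                # Gaussian neighbourhood on the 1-D map grid around the winner.
                h = math.exp(-((j - bmu) ** 2) / (2 * sigma ** 2))
                for d in range(len(m)):
                    m[d] += lr * h * (x[d] - m[d])
            step += 1
    return protos


# Hypothetical 2-D trajectory features forming two well-separated groups.
trajectories = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (20.0, 0.0), (21.0, 0.0), (22.0, 0.0)]
som = train_som(trajectories, k=2)
print([best_matching_unit(som, t) for t in trajectories])
```

Each trajectory's cluster is the index of its best-matching prototype.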


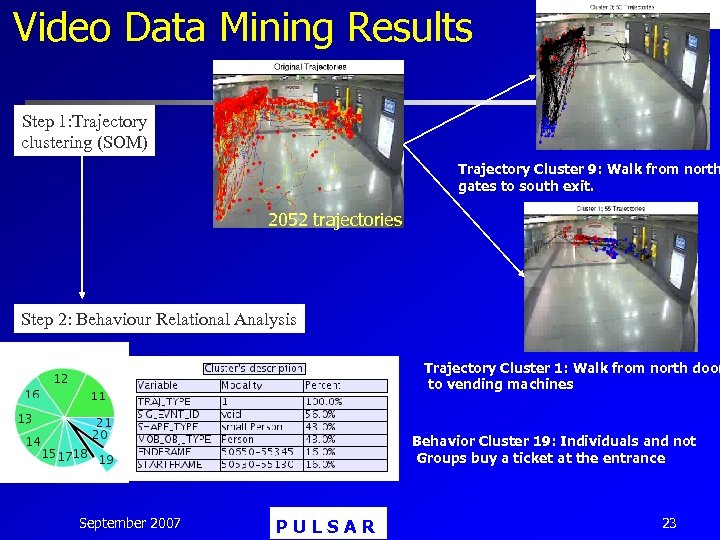

Video Data Mining Results

- Step 1: trajectory clustering (SOM). Trajectory Cluster 9: walk from north gates to south exit. 2052 trajectories.
- Step 2: behaviour relational analysis. Trajectory Cluster 1: walk from north door to vending machines. Behavior Cluster 19: individuals, not groups, buy a ticket at the entrance.


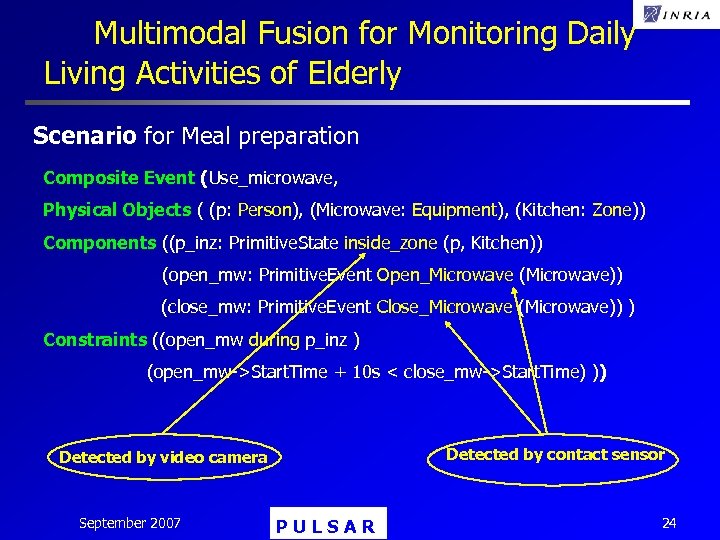

Multimodal Fusion for Monitoring Daily Living Activities of the Elderly

Scenario for meal preparation:

`CompositeEvent(Use_microwave, PhysicalObjects((p: Person), (Microwave: Equipment), (Kitchen: Zone)) Components((p_inz: PrimitiveState inside_zone(p, Kitchen)) (open_mw: PrimitiveEvent Open_Microwave(Microwave)) (close_mw: PrimitiveEvent Close_Microwave(Microwave))) Constraints((open_mw during p_inz) (open_mw->StartTime + 10s < close_mw->StartTime)))`

Open_Microwave and Close_Microwave are detected by a contact sensor; inside_zone is detected by a video camera.
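
Read as a constraint problem, the `Use_microwave` scenario combines a state seen by the video camera (the person is inside the kitchen) with two events from the microwave contact sensor. The sketch below checks the two constraints over candidate occurrences by brute force; it is not the team's recognition engine, and it assumes a hypothetical `Occurrence(start, end)` type in seconds, instantaneous sensor events (start equal to end) and a strict reading of `during`.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Occurrence:
    """Hypothetical recognized state or event, with start/end times in seconds."""
    start: float
    end: float


def during(a: Occurrence, b: Occurrence) -> bool:
    """Strict 'during' (assumption): a starts after b starts and ends before b ends."""
    return b.start < a.start and a.end < b.end


def use_microwave(p_inz, open_mw, close_mw, min_gap=10.0):
    """Constraints of Use_microwave: open_mw during p_inz, and
    open_mw.start + min_gap < close_mw.start (the 10s of the scenario)."""
    return during(open_mw, p_inz) and open_mw.start + min_gap < close_mw.start


def recognize(in_kitchen, opens, closes):
    """Return every (p_inz, open_mw, close_mw) triple satisfying the constraints."""
    return [(p, o, c) for p, o, c in product(in_kitchen, opens, closes)
            if use_microwave(p, o, c)]


in_kitchen = [Occurrence(100.0, 400.0)]                        # from the video camera
opens = [Occurrence(50.0, 50.0), Occurrence(120.0, 120.0)]     # from the contact sensor
closes = [Occurrence(125.0, 125.0), Occurrence(140.0, 140.0)]  # from the contact sensor
print(len(recognize(in_kitchen, opens, closes)))  # 1
```

Only the opening at t = 120 s happens while the person is in the kitchen, and only the closing at t = 140 s comes more than 10 s after it, so a single `Use_microwave` instance is recognized.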
