Psychometrics_lecture_4.ppt

- Number of slides: 20

Psychometrics: test development and adaptation. Lecture 4. Dr. psych. Tatjana Kanonire, 12.02.2015

Classical test theory
It is assumed that for any trait each individual has a true score. The obtained score, however, differs from the true score on account of random error:
X = T + e
where X is the obtained test score, T is the true score, and e is the error.
To obtain the most accurate measurement possible we would have to test subjects on many occasions. This is impossible in practice, so we make the best of one obtained score.
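
The model X = T + e can be illustrated with a small simulation. This is only a sketch under assumptions that are not in the lecture: the error is taken to be normally distributed with mean zero, and the true score (100) and error standard deviation (5) are hypothetical values.

```python
import random
import statistics


def simulate_observed_scores(true_score, error_sd, n_occasions, seed=0):
    """Draw observed scores X = T + e, with e ~ Normal(0, error_sd) (an assumption)."""
    rng = random.Random(seed)
    return [true_score + rng.gauss(0.0, error_sd) for _ in range(n_occasions)]


# Hypothetical values: true score T = 100, error SD = 5.
single = simulate_observed_scores(100.0, 5.0, 1)[0]
many = simulate_observed_scores(100.0, 5.0, 10_000)
mean_of_many = statistics.fmean(many)

print(f"one occasion: {single:.1f}")
print(f"mean of 10,000 occasions: {mean_of_many:.2f}")
```

A single obtained score can fall several points from T, while the mean over many occasions settles close to T, which is why repeated testing would be ideal if it were feasible.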

Classical test theory (2)
- The less error there is in a test, the more reliable (i.e. repeatable) it is.
- Reliability reflects the principle of replicability (each test can be viewed as an experiment).
- Two kinds of error: systematic and random.
  - Systematic errors occur in tests that seem to measure one variable but also measure another. They are often of relatively little importance in psychology, because they affect all measurements equally. They can cause problems when comparisons are made with a test free from such error.
  - Random error affects the accuracy of measurement more seriously. In psychological tests there are many possible sources of random error: how the subject feels when tested, guessing, poor instructions, mistakes in recording responses, interrupted testing, etc.

Reliability
The concept of reliability is best viewed as a continuum ranging from minimal consistency of measurement to near-perfect repeatability of results:
(-) simple reaction time ... psychological tests ... weight (+)

Reliability (2)
We can distinguish two types of reliability:
- reliability as stability over time,
- reliability as internal consistency.
Reliability as stability over time:
- Test-retest reliability
  - used for personality tests, not for intelligence tests, because of training (practice) effects.
- Reliability of parallel or alternative forms
  - used when a parallel form is available, usually for intelligence and achievement tests.

Test-retest reliability (1)
Test-retest reliability is measured by correlating the scores of a set of subjects who take the test on two occasions.
- The correlation coefficient measures the degree of agreement between the two sets of scores.
- The more similar they are, the higher the correlation coefficient.
Factors influencing the measurement of test-retest reliability (Kline, 2000):
1. Changes in subjects: we have to take account of the sample from which the coefficient was obtained and the nature of the variable. This factor is important for choosing an appropriate interval between the two measurements.

Test-retest reliability (2)
2. Factors contributing to measurement error:
   - Subjective factors on the part of the respondents themselves (e.g. headache, emotional problems, accidentally turning over two pages at once).
   - Poor test instructions.
   - Subjective scoring.
   - Guessing. It mainly affects intelligence tests, but if multiple-choice items are used and there is a large number of items, the effects are small.
3. Factors boosting or otherwise distorting test-retest reliability:
   - Time gap: with a very short lapse of time between the two sittings, subjects remember their answers.
   - Difficulty level of items. This is relevant for ability tests: very easy and very hard items lead to very high or very low test-retest reliability.
   - Sample size: samples should contain at least 100 subjects.
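
The test-retest coefficient described above is a correlation between the scores from two sittings. A minimal sketch, assuming the Pearson product-moment correlation is the coefficient meant; the six pairs of scores are invented for illustration and fall far short of the recommended 100 subjects.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary on both occasions")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical scores of the same six subjects on two occasions.
first_sitting = [12, 15, 9, 20, 17, 11]
second_sitting = [13, 14, 10, 19, 18, 10]

r_test_retest = pearson_r(first_sitting, second_sitting)
print(f"test-retest r = {r_test_retest:.3f}")
```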

Test-retest reliability (3)
High test-retest reliability: .80 ≤ r ≤ 1.
Acceptable test-retest reliability: .70 ≤ r < .80 (especially if children are tested).
These thresholds are used because the standard error of measurement of a score increases as the reliability decreases, until the test becomes useless for practical application.
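
The link between reliability and the standard error of measurement (SEM) can be made concrete. The sketch below assumes the usual classical-test-theory formula SEM = SD * sqrt(1 - r), which the slide does not state explicitly, and a hypothetical score scale with SD = 15.

```python
import math


def standard_error_of_measurement(sd, reliability):
    """SEM = SD * sqrt(1 - r); formula assumed from classical test theory."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must lie between 0 and 1")
    return sd * math.sqrt(1.0 - reliability)


# Hypothetical scale with a standard deviation of 15 points.
for r in (0.90, 0.80, 0.70, 0.50):
    sem = standard_error_of_measurement(15.0, r)
    print(f"r = {r:.2f} -> SEM = {sem:.2f}")
```

As r drops, the band of uncertainty around any single obtained score widens, which is the practical reason for the cut-offs above.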

Internal consistency reliability
Indicates how well the items of a test that are proposed to measure the same construct produce similar results (are homogeneous).
- Split-half reliability.
- Cronbach's alpha.
- Kuder-Richardson reliability coefficient (similar to Cronbach's α, but for dichotomous items).
- Testers' reliability.
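
Split-half reliability can be sketched as follows. Two things here are assumptions rather than content of the slide: the test is split into odd- and even-numbered items, and the half-test correlation is stepped up to full length with the Spearman-Brown formula r_full = 2 * r_half / (1 + r_half). The item scores are invented.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_brown(r_half):
    """Estimate full-length reliability from the correlation of two halves."""
    return 2.0 * r_half / (1.0 + r_half)


# Hypothetical data: 6 subjects (rows) x 4 items (columns), scored 1-5.
scores = [
    [4, 5, 4, 5],
    [2, 1, 2, 2],
    [3, 3, 4, 3],
    [5, 4, 5, 5],
    [1, 2, 1, 1],
    [3, 4, 3, 3],
]

odd_half = [sum(row[0::2]) for row in scores]   # items 1 and 3
even_half = [sum(row[1::2]) for row in scores]  # items 2 and 4

r_half = pearson_r(odd_half, even_half)
r_full = spearman_brown(r_half)
print(f"half-test r = {r_half:.3f}, Spearman-Brown corrected = {r_full:.3f}")
```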

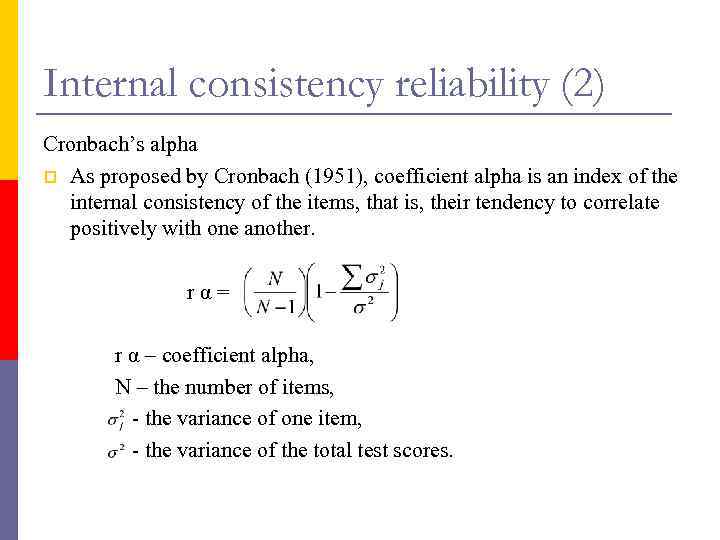

Internal consistency reliability (2)
Cronbach's alpha
- As proposed by Cronbach (1951), coefficient alpha is an index of the internal consistency of the items, that is, their tendency to correlate positively with one another.

$$r_\alpha = \frac{N}{N-1}\left(1 - \frac{\sum \sigma_j^2}{\sigma^2}\right)$$

where $r_\alpha$ is coefficient alpha, $N$ is the number of items, $\sigma_j^2$ is the variance of one item, and $\sigma^2$ is the variance of the total test scores.
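
Coefficient alpha can be computed directly from its definition: the number of items, the variance of each item, and the variance of the total scores. The sketch below is one possible implementation, not a reference one; the 6 x 4 score matrix is hypothetical, and sample variances (n - 1 in the denominator) are used for both item and total scores.

```python
import statistics


def cronbach_alpha(scores):
    """Coefficient alpha for a list of rows (one row of item scores per subject)."""
    n_items = len(scores[0])
    if n_items < 2 or len(scores) < 2:
        raise ValueError("need at least 2 items and 2 subjects")
    # Variance of each item across subjects.
    item_variances = [
        statistics.variance([float(row[j]) for row in scores])
        for j in range(n_items)
    ]
    # Variance of the total test scores.
    total_variance = statistics.variance([float(sum(row)) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary")
    return (n_items / (n_items - 1)) * (1.0 - sum(item_variances) / total_variance)


# Hypothetical data: 6 subjects (rows) x 4 items (columns), scored 1-5.
scores = [
    [4, 5, 4, 5],
    [2, 1, 2, 2],
    [3, 3, 4, 3],
    [5, 4, 5, 5],
    [1, 2, 1, 1],
    [3, 4, 3, 3],
]

alpha = cronbach_alpha(scores)
print(f"Cronbach's alpha = {alpha:.3f}")
```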

Internal consistency reliability (3)
Coefficient alpha normally varies between 0 and 1 (negative values can occur when items correlate negatively with one another).
Acceptable: α > .70; good: α > .90.
High internal consistency is a prerequisite of high validity.

Validity
A test is said to be valid if it measures what it claims to measure. The measurement of validity is not a straightforward procedure.
Face validity: a test is said to be face valid if it appears to measure what it claims to measure. However, there is no logical relationship between face validity and real validity.
- Face validity can increase the motivation of the subjects. Most adults are loath to complete tests which seem ridiculous or time-wasting.

Validity (2)
Content validity is determined by the degree to which the items on the test are representative of the universe of behavior the test is designed to sample. Content validity is applicable to tests where the domain of items is particularly clear-cut. Experts can be used to evaluate content.

Criterion validity
Concurrent validity
A test is said to possess concurrent validity if it can be shown to correlate highly with another test of the same variable administered at the same time. The best criterion test is a benchmark test for the variable to be measured. In most fields of psychology there are no such tests.
- In practice, a correlation above .75 would be regarded as good support for concurrent validity.
If you have a good benchmark test or criterion, why do you need a new one?
- New tests must have some special qualities that differentiate them from other tests.
- Concurrent validity is only useful where good criterion tests exist. Where they do not, concurrent validity studies are best regarded as aspects of construct validity (Kline, 2000).

Criterion validity (2)
Predictive validity
A test may be said to have predictive validity if it predicts some criterion. This is good support for the efficacy of a test. What could serve as a clear criterion for prediction?

Construct validity
A construct is some postulated attribute of people, assumed to be reflected in test performance (Cronbach & Meehl, 1955). In establishing the construct validity of a test we carry out a number of studies with it and demonstrate that the results are consonant with the definition, i.e. the psychological nature, of the construct.

Approaches to construct validity (Gregory, 1992)
- Analysis to determine whether the test items or subtests are homogeneous and therefore measure a single construct.
- Study of developmental changes to determine whether they are consistent with the theory of the construct.
- Research to ascertain whether group differences on test scores are theory-consistent.
- Analysis to determine whether intervention effects on test scores are theory-consistent.
- Correlation of the test with other related (convergent validity) and unrelated (divergent validity) tests and measures.
- Factor analysis of test scores (also in relation to other sources of information).

Factorial validity
Factorial validity is a kind of construct validity. We use factor analysis to establish it.
- Factor analysis is a statistical method in which variations in scores on a number of variables (items) are expressed in a smaller number of dimensions or constructs. These are the factors.
- The sample for factor analysis should contain no fewer than 200 subjects.
- Items with a loading of no less than .30 are kept in the factor.
- Even if an item's loading is greater than .30 and the item is not included in other factors, we should remove it if it differs from the factor by content.

Factorial validity (2)
The description of a factor analysis (principal component analysis) of test items should include:
- The type of analysis, usually principal component analysis.
- The determinant, which should be greater than .0001. If the determinant is zero, then a factor-analytic solution cannot be obtained, because this would require dividing by zero.
- Tests of assumptions: the Kaiser-Meyer-Olkin (KMO) measure and the Bartlett test. The KMO measure should be greater than .70; it tells whether or not enough items are predicted by each factor. The Bartlett test should be significant; this means that the variables are correlated highly enough to provide a reasonable basis for factor analysis.
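
Two of the checks above, the determinant and the Bartlett test, can be sketched for a small correlation matrix. Assumptions not taken from the slides: the Bartlett statistic is computed with the standard formula chi2 = -(n - 1 - (2p + 5) / 6) * ln|R| with p(p - 1) / 2 degrees of freedom, and the 3 x 3 correlation matrix and sample size n = 200 are hypothetical. The KMO measure and the p-value are left out to keep the example short.

```python
import math


def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [list(row) for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0  # singular matrix
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def bartlett_sphericity(corr, n_subjects):
    """Return (chi-square statistic, degrees of freedom) for a correlation matrix."""
    p = len(corr)
    det = determinant(corr)
    if det <= 0:
        raise ValueError("determinant must be positive")
    chi2 = -(n_subjects - 1 - (2 * p + 5) / 6) * math.log(det)
    df = p * (p - 1) // 2
    return chi2, df


# Hypothetical correlation matrix of three items, n = 200 subjects.
R = [
    [1.0, 0.6, 0.5],
    [0.6, 1.0, 0.4],
    [0.5, 0.4, 1.0],
]

det_R = determinant(R)
chi2, df = bartlett_sphericity(R, 200)
print(f"determinant = {det_R:.4f} (should exceed .0001)")
print(f"Bartlett chi-square = {chi2:.1f}, df = {df}")
```

The statistic is compared with a chi-square distribution with the given degrees of freedom; a value far above the critical value indicates that the items are correlated enough for factor analysis.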

Factorial validity (3)
The description of a factor analysis (principal component analysis) of test items should also include:
- Total variance explained and the eigenvalue of each factor.
- The rotation method (orthogonal or oblique) and the factor loadings for the rotated factors. Varimax rotation is usually used in test development.
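
The relation between eigenvalues and variance explained can be shown with a few lines. The sketch assumes a principal component analysis of a correlation matrix, where the eigenvalues sum to the number of items, so each component explains eigenvalue / number of items of the total variance. The four eigenvalues are hypothetical.

```python
def variance_explained(eigenvalues):
    """Proportion of total variance explained by each component."""
    total = sum(eigenvalues)  # equals the number of items for a correlation matrix
    return [value / total for value in eigenvalues]


# Hypothetical eigenvalues from a PCA of four items.
eigenvalues = [2.4, 0.9, 0.4, 0.3]
proportions = variance_explained(eigenvalues)

cumulative = 0.0
for number, (value, share) in enumerate(zip(eigenvalues, proportions), start=1):
    cumulative += share
    print(f"component {number}: eigenvalue = {value:.2f}, "
          f"variance = {share:.1%}, cumulative = {cumulative:.1%}")
```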
