fec3149867414a38d94de6bf03affea3.ppt

- Number of slides: 1

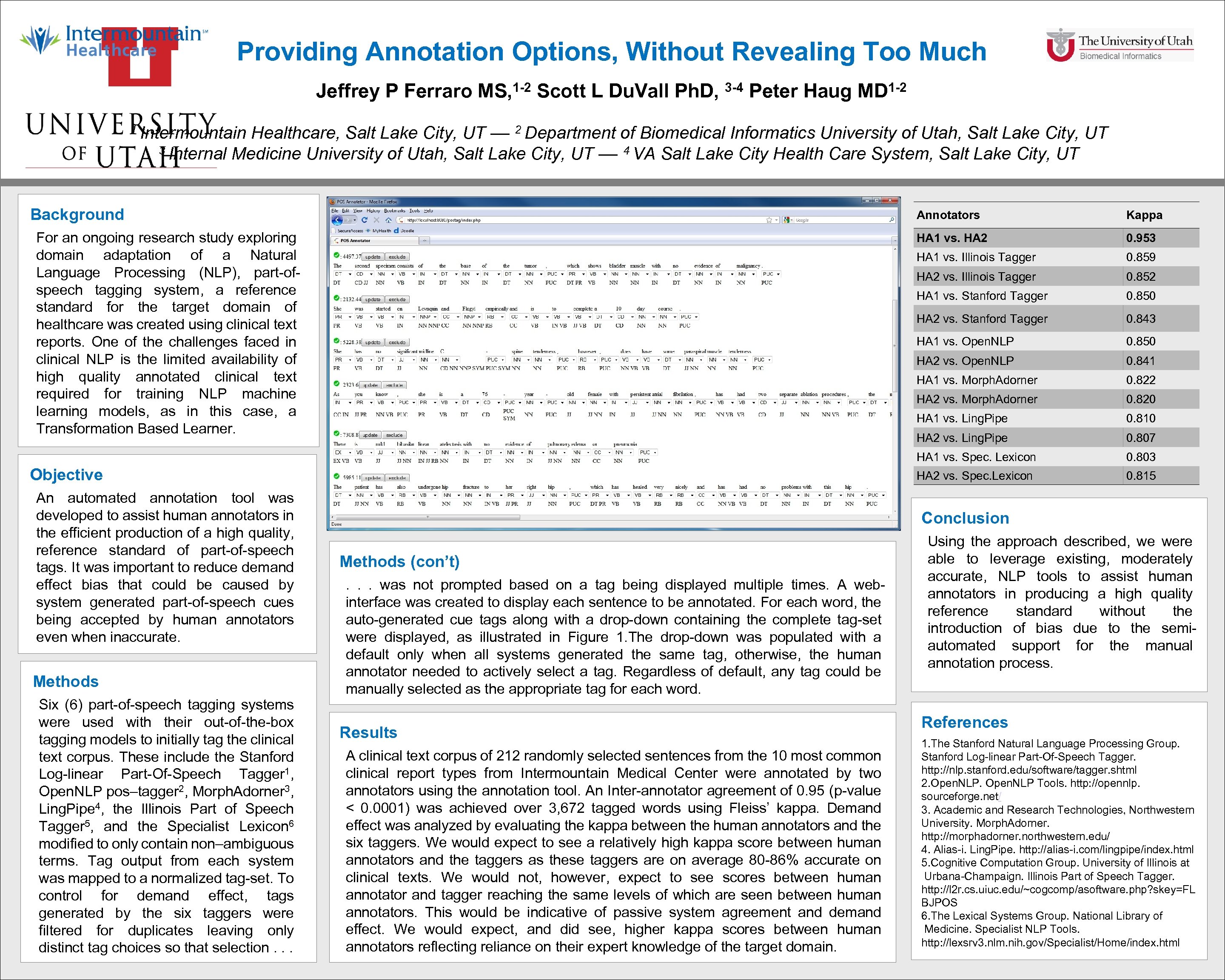

Providing Annotation Options, Without Revealing Too Much

Jeffrey P Ferraro, MS (1-2); Scott L DuVall, PhD (3-4); Peter Haug, MD (1-2)

1 Intermountain Healthcare, Salt Lake City, UT
2 Department of Biomedical Informatics, University of Utah, Salt Lake City, UT
3 Internal Medicine, University of Utah, Salt Lake City, UT
4 VA Salt Lake City Health Care System, Salt Lake City, UT

Background

For an ongoing research study exploring domain adaptation of a Natural Language Processing (NLP) part-of-speech tagging system, a reference standard for the target domain of healthcare was created using clinical text reports.

One of the challenges faced in clinical NLP is the limited availability of high-quality annotated clinical text required for training NLP machine learning models, in this case a Transformation Based Learner.

Objective

An automated annotation tool was developed to assist human annotators in the efficient production of a high-quality reference standard of part-of-speech tags. It was important to reduce the demand effect bias that could arise if human annotators accepted system-generated part-of-speech cues even when they were inaccurate.

Methods

Six part-of-speech tagging systems were used with their out-of-the-box tagging models to initially tag the clinical text corpus: the Stanford Log-linear Part-Of-Speech Tagger [1], the OpenNLP POS tagger [2], MorphAdorner [3], LingPipe [4], the Illinois Part of Speech Tagger [5], and the Specialist Lexicon [6], modified to contain only non-ambiguous terms. Tag output from each system was mapped to a normalized tag-set. To control for demand effect, tags generated by the six taggers were filtered for duplicates, leaving only distinct tag choices, so that selection was not prompted by a tag being displayed multiple times.

A web interface was created to display each sentence to be annotated. For each word, the auto-generated cue tags were displayed along with a drop-down containing the complete tag-set, as illustrated in Figure 1. The drop-down was populated with a default only when all systems generated the same tag; otherwise, the human annotator needed to actively select a tag. Regardless of the default, any tag could be manually selected as the appropriate tag for each word.

Results

A clinical text corpus of 212 randomly selected sentences from the 10 most common clinical report types at Intermountain Medical Center was annotated by two annotators using the annotation tool. An inter-annotator agreement of 0.95 (p-value < 0.0001) was achieved over 3,672 tagged words using Fleiss' kappa.

Demand effect was analyzed by evaluating the kappa between the human annotators and the six taggers. We would expect a relatively high kappa score between human annotators and the taggers, as these taggers are on average 80-86% accurate on clinical texts. We would not, however, expect scores between a human annotator and a tagger to reach the levels seen between human annotators; such scores would indicate passive system agreement and demand effect. We would expect, and did see, higher kappa scores between human annotators, reflecting reliance on their expert knowledge of the target domain.

Table: kappa by annotator pair (columns: Annotators, Kappa), covering HA1 vs. HA2 and each human annotator (HA1, HA2) vs. the Stanford Tagger, OpenNLP, MorphAdorner, LingPipe, the Illinois Tagger, and the Specialist Lexicon. HA1 vs. HA2: 0.953. The twelve human-vs-tagger values range from 0.803 to 0.859 (0.852, 0.850, 0.843, 0.850, 0.841, 0.822, 0.820, 0.810, 0.807, 0.859, 0.803, 0.815).

Conclusion

Using the approach described, we were able to leverage existing, moderately accurate NLP tools to assist human annotators in producing a high-quality reference standard without introducing bias from the semi-automated support for the manual annotation process.

References

1. The Stanford Natural Language Processing Group. Stanford Log-linear Part-Of-Speech Tagger. http://nlp.stanford.edu/software/tagger.shtml
2. OpenNLP Tools. http://opennlp.sourceforge.net/
3. Academic and Research Technologies, Northwestern University. MorphAdorner. http://morphadorner.northwestern.edu/
4. Alias-i. LingPipe. http://alias-i.com/lingpipe/index.html
5. Cognitive Computation Group, University of Illinois at Urbana-Champaign. Illinois Part of Speech Tagger. http://l2r.cs.uiuc.edu/~cogcomp/asoftware.php?skey=FLBJPOS
6. The Lexical Systems Group, National Library of Medicine. Specialist NLP Tools. http://lexsrv3.nlm.nih.gov/Specialist/Home/index.html
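
The cue-filtering rule from the Methods (show each distinct tag once, and pre-select a default only when all six systems agree) can be sketched as follows. This is a minimal illustration, not the authors' tool: the function name `cue_tags_and_default` and the Penn-Treebank-style tags in the example are assumptions, since the poster does not name its normalized tag-set or publish code.

```python
from typing import List, Optional, Tuple


def cue_tags_and_default(tagger_outputs: List[str]) -> Tuple[List[str], Optional[str]]:
    """Return the distinct cue tags for one word and the default tag, if any.

    tagger_outputs holds one normalized tag per tagging system for a single word.
    (Hypothetical helper; the poster describes the rule but not an implementation.)
    """
    # dict.fromkeys preserves first-seen order, so duplicates are dropped
    # without reordering and no tag is shown more than once.
    distinct = list(dict.fromkeys(tagger_outputs))
    # A default is offered only when every system produced the same tag;
    # otherwise the annotator must actively choose.
    default = distinct[0] if len(distinct) == 1 else None
    return distinct, default


# Illustrative tags only; four systems say NN, one says VB, one says JJ.
cues, default = cue_tags_and_default(["NN", "NN", "VB", "NN", "NN", "JJ"])
print(cues, default)  # prints ['NN', 'VB', 'JJ'] None
```

Because the majority tag appears only once among the cues, the annotator sees three equally weighted options rather than a four-to-one vote, which is the point of the filtering step.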

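The agreement figures in the Results are reported as Fleiss' kappa. As a rough sketch of how such a score is computed over tagged words, the function below implements the standard Fleiss' kappa formula for any fixed number of raters per token. The function name and the four-token toy data are assumptions for illustration and are not taken from the study.

```python
from collections import Counter
from typing import Sequence


def fleiss_kappa(ratings: Sequence[Sequence[str]]) -> float:
    """Fleiss' kappa; ratings[i] holds the tags assigned to token i, one per rater."""
    if not ratings:
        raise ValueError("need at least one rated token")
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(item) != n_raters for item in ratings):
        raise ValueError("every token needs the same number (>= 2) of ratings")

    category_totals: Counter = Counter()
    agreement_sum = 0.0
    for item in ratings:
        counts = Counter(item)
        category_totals.update(counts)
        # Per-token agreement: share of rater pairs that chose the same tag.
        agreement_sum += (sum(c * c for c in counts.values()) - n_raters) / (
            n_raters * (n_raters - 1)
        )

    observed = agreement_sum / n_items
    # Chance agreement from the overall proportion of each tag.
    expected = sum((t / (n_items * n_raters)) ** 2 for t in category_totals.values())
    if expected == 1.0:
        raise ValueError("kappa is undefined when only one tag is ever used")
    return (observed - expected) / (1.0 - expected)


# Toy data (made up): two raters, four tokens, one disagreement.
toy = [("NN", "NN"), ("VB", "VB"), ("NN", "VB"), ("DT", "DT")]
print(round(fleiss_kappa(toy), 3))  # prints 0.619
```

The same function can score a human annotator against a single tagger by pairing their tags token by token, which is how a human-vs-tagger comparison like the one in the Results could be set up under these assumptions.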