
Prototype of the “new” Software metrics tool

Gordana Rakić, Zoran Budimac

Contents

- Abstract (what we did)
- Motivation (why we did it)
- Background (basic information on the subject)
- Related work (what others did)
- Description (how we did it)
- Example (how it works)
- Conclusion and results
- Future work
- References

Abstract

- First prototype of a “universal” software metrics tool
- Characteristics
  - Platform independence
    - Developed in the Java programming language
  - Application range
    - Two programming languages (Modula-2 and Java)
    - Two software metrics (CC and LOC)
  - Source code and metric values are represented by annotated syntax trees, which enables
    - Source code and metric values history
    - Language independence of the tool

Motivation

- Systematic application of software metrics techniques can significantly improve the quality of a software product
- The most important weaknesses of current techniques and tools:
  - Many techniques/tools are appropriate for only one programming language or for one type of programming language
  - Support for object-oriented metrics is still weak
  - Many techniques/tools compute numerical results with no real interpretation of their meaning
  - There are no hints or advice on what typical actions should be taken in order to improve the software
  - The technique/tool should discourage cheating
  - Current techniques/tools are not sensitive to the existence of additional code
  - Sometimes it is not clear which specific software metric has to be applied to accomplish a specific goal

[Budimac, Hericko, 2010]

…From the reviewers’ point of view [ICSOFT 2010]

“This paper motivates the needs of a “universal” software metrics tool and presents the main design lines of the prototype. Having a robust and flexible metric tools is really valuable for any software engineering effort”

“The authors present a vision of a unified software metrics tool that is able to wipe away the frustrating differences between programming languages and provide a single, universal set of metrics that can be applied uniformly to all software. This is a laudable vision, and if it were achievable it would be a truly remarkable step forward.”

…From the colleagues’ point of view*

- The most important step forward, but also the most complicated one, would be the development of support for all programming languages, including “ancient” ones…
- Programmers’ cheating in the measuring process is a frequent problem
- Reasoning in the measurement process is important

*Thanks to Nataša Ibrajter, Zvonimir Dudan, Dragan Maćoš

…Motivation and this thesis…

- Many techniques/tools are appropriate for only one programming language or for one type of programming language
- Support for object-oriented metrics is still weak
- Many techniques/tools compute numerical results with no real interpretation of their meaning
- There are no hints or advice on what typical actions should be taken in order to improve the software
- The technique/tool should discourage cheating
- Current techniques/tools are not sensitive to the existence of additional code
- Sometimes it is not clear which specific software metric has to be applied to accomplish a specific goal
- PLUS
  - Platform dependency
  - Support for other metrics
  - History of source code
  - History of calculated metrics

Background (1)

- Software metrics [Kan, 2003], [Budimac et al., 2008]
  - numerical values
  - reflect some property of
    - the whole software product
    - one piece of the software product
    - the specification of the software product

Software metrics: software property → numeric value

Background (2)

(Some of the) software metrics classifications

- By target
  - Process
  - Product
  - Resources
- By availability
  - Internal
  - External
- By measurement object
  - Size
  - Complexity
  - Structure
  - Architecture
- By applicability
  - Specification
  - Design
  - Source code
  - …

Background (3)

- Some software metrics
  - Lines of Code (LOC) family
    - LOC
    - Lines of Source Code (SLOC)
    - Lines of Comments (CLOC)
    - Lines of Commented Source Code (CSLOC)
    - Physical LOC (FLOC)
    - Logical LOC (LLOC)
  - Cyclomatic Complexity (CC)
  - Halstead Metrics (H)
  - Object Oriented Metrics (OO) family
  - …

Related work

- Review of software metric tools available for use
  - Based on testing
- Review of available literature
  - Based on
    - research papers
    - available development and product documentation

Tools review

- Criteria
  - Platform dependency
  - Programming language dependency
  - Supported metrics
  - Facility for storing code history
  - Facility for storing metrics history

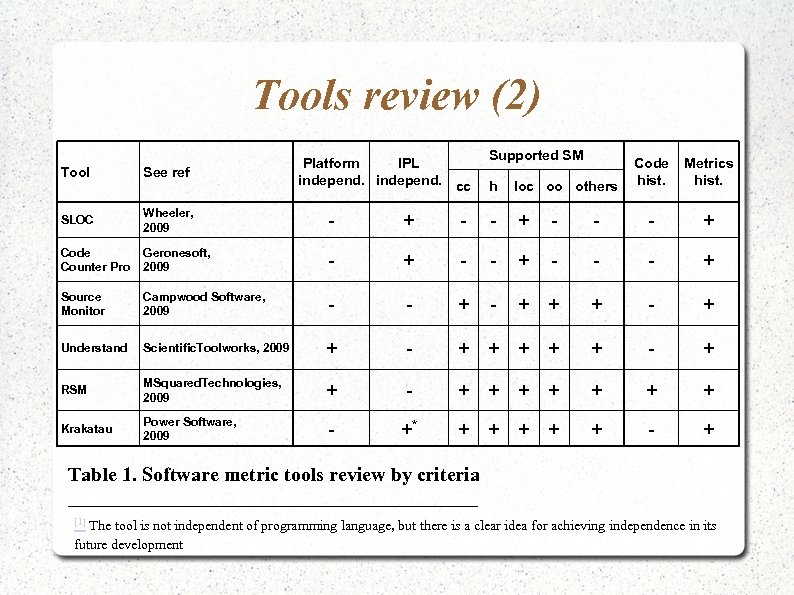

Tools review (2)

Table 1. Software metric tools review by criteria

Columns: Tool; See ref; Platform independ.; PL independ.; Supported SM (cc, h, loc, oo, others); Code hist.; Metrics hist.

Tools reviewed:

- SLOC (Wheeler, 2009)
- Code Counter Pro (Geronesoft, 2009)
- Source Monitor (Campwood Software, 2009)
- Understand (ScientificToolworks, 2009)
- RSM (MSquaredTechnologies, 2009)
- Krakatau (Power Software, 2009)

[1] The tool is not independent of programming language, but there is a clear idea for achieving independence in its future development

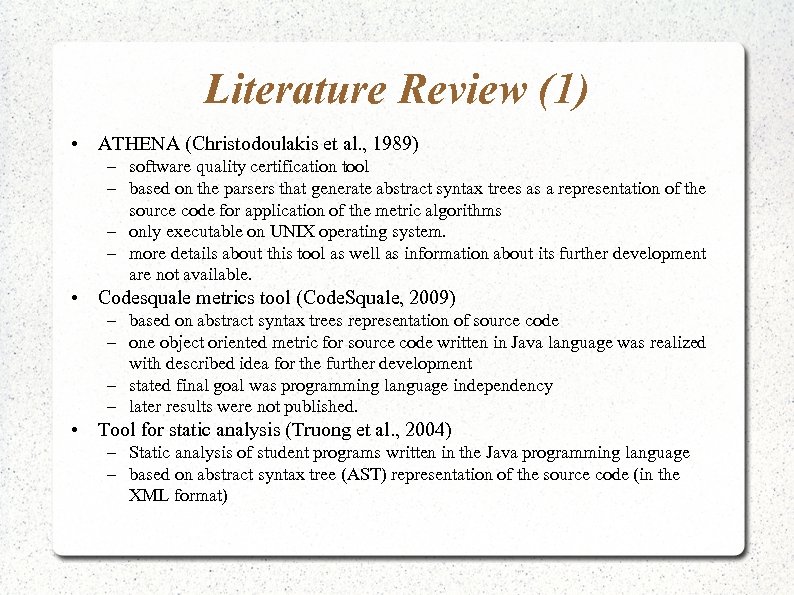

Literature Review (1)

- ATHENA (Christodoulakis et al., 1989)
  - software quality certification tool
  - based on parsers that generate abstract syntax trees as a representation of the source code, to which the metric algorithms are applied
  - executable only on the UNIX operating system
  - more details about this tool, as well as information about its further development, are not available
- CodeSquale metrics tool (CodeSquale, 2009)
  - based on abstract syntax tree representation of source code
  - one object-oriented metric for source code written in Java was implemented, together with a described idea for further development
  - the stated final goal was programming language independence
  - later results were not published
- Tool for static analysis (Truong et al., 2004)
  - static analysis of student programs written in the Java programming language
  - based on abstract syntax tree (AST) representation of the source code (in XML format)

Literature Review (2)

- Language independence in some related areas of source code analysis, based on Abstract Syntax Tree representation of the source code
  - duplicated code analysis
    - (Ducasse et al., 1999)
  - code clone analysis
    - (Baxter et al., 1998)
  - detection of similar classes in program code written in the Java programming language
    - (Sager et al., 2006)
  - monitoring of changes of program code written in the Java programming language
    - (Neamtiu et al., 2005)

Review conclusion

Practice needs a new tool which

- Is platform independent
- Is programming language independent
- Calculates a broad spectrum of metrics
- Supports keeping of source code history
- Supports keeping of calculated values of software metrics

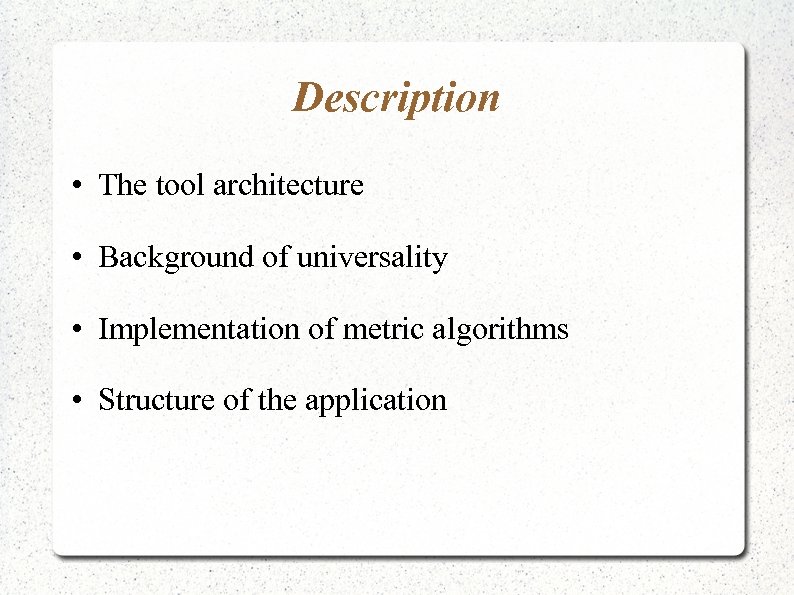

Description

- The tool architecture
- Background of universality
- Implementation of metric algorithms
- Structure of the application

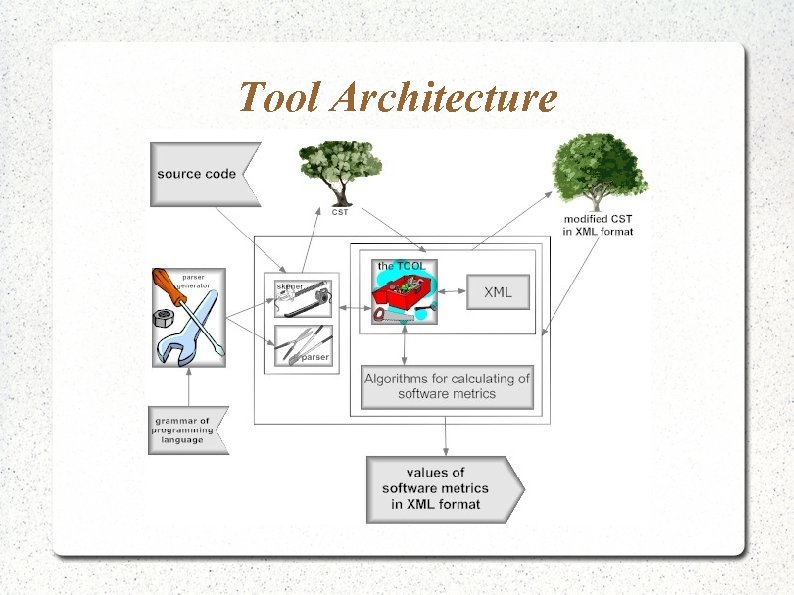

Tool Architecture

Parser Generators

- Tools used for generation of language translators
- Input
  - Language grammar
- Output
  - Language scanner
  - Language parser
  - plus an intermediate representation of language constructions: syntax trees

Syntax Trees

- Syntax trees
  - Intermediate structure produced by
    - the parser generator directly
    - the translator generated by the parser generator
- Represent
  - the language in abstract form
  - concrete source code elements attached to corresponding abstract language elements

Syntax Trees (2)

- Classification of syntax trees used in this thesis
  - Abstract Syntax Tree (AST) represents concrete source code
  - Concrete Syntax Tree (CST) represents concrete source code elements attached to corresponding abstract language elements
  - Enriched Concrete Syntax Tree (eCST)
    - Represents concrete source code elements attached to corresponding abstract language elements
    - Contains additional information: universal nodes as markers for the language elements that figure in metric algorithms

Background of universality

- Enriched Concrete Syntax Tree (eCST)
  - Generated by a parser generator
    - Generation is based on the programming language grammar
    - Enrichment is based on modifying the programming language grammar so that universal nodes are added into the tree
  - Stored in an XML structure
- Enrichment does not affect the structure of the tree
  - Only the content of the tree is affected by these changes
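As a rough illustration of the idea on this slide, the sketch below builds a tiny eCST-like tree and serializes it to XML. The root element name, the `line` attribute and the helpers `node` and `is_universal` are assumptions made for illustration; the slides do not show the tool's actual XML schema. Only the universal node names `BRANCH_STATEMENT` and `BRANCH` come from the deck.

```python
import xml.etree.ElementTree as ET

# Universal node names taken from the slides; everything else is hypothetical.
UNIVERSAL_NODES = {"BRANCH_STATEMENT", "BRANCH"}


def node(parent, name, line=None):
    """Append a child element; `line` is a hypothetical source-line attribute."""
    attrs = {} if line is None else {"line": str(line)}
    return ET.SubElement(parent, name, attrs)


def is_universal(element):
    """Universal nodes are ordinary tree nodes, recognized only by their name."""
    return element.tag in UNIVERSAL_NODES


root = ET.Element("COMPILATION_UNIT")          # hypothetical root name
stmt = node(root, "BRANCH_STATEMENT", line=3)  # universal marker
branch = node(stmt, "BRANCH", line=3)          # universal marker
node(branch, "IF", line=3)                     # concrete language token

xml_text = ET.tostring(root, encoding="unicode")
universal = [e.tag for e in root.iter() if is_universal(e)]
```

Because the markers are plain elements, a metric algorithm can look for the same names regardless of which language grammar produced the tree.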

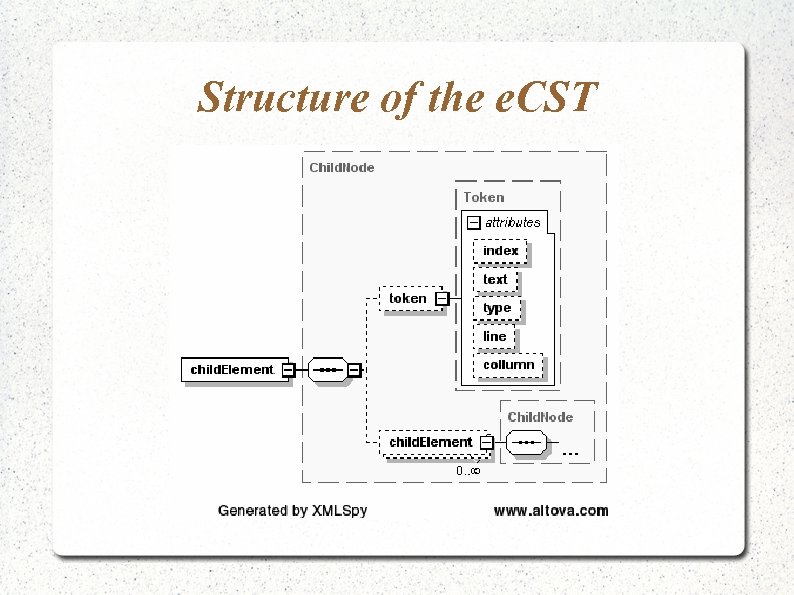

Structure of the eCST

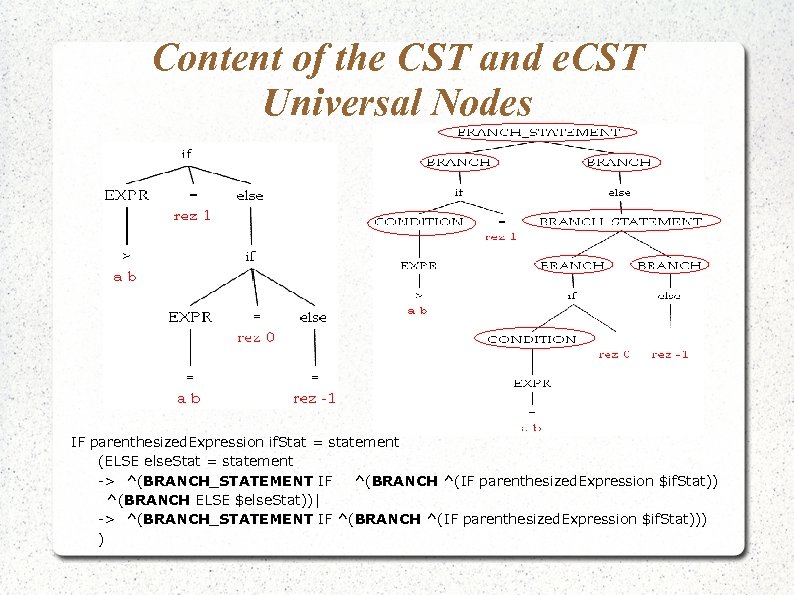

Content of the CST and eCST

Universal nodes:

    IF parenthesizedExpression ifStat=statement
        ( ELSE elseStat=statement
            -> ^(BRANCH_STATEMENT IF ^(BRANCH ^(IF parenthesizedExpression $ifStat)) ^(BRANCH ELSE $elseStat))
        |   -> ^(BRANCH_STATEMENT IF ^(BRANCH ^(IF parenthesizedExpression $ifStat)))
        )
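To make the two rewrite alternatives above easier to picture, here is a minimal sketch that builds the same tree shapes as nested `(label, children)` tuples. The functions `branch_statement` and `count_label` and the tuple representation are hypothetical; only the node labels follow the grammar fragment.

```python
def branch_statement(condition, if_stat, else_stat=None):
    """Build the tree shape of the rewrite rule, with or without an ELSE part."""
    children = ["IF", ("BRANCH", [("IF", [condition, if_stat])])]
    if else_stat is not None:
        # Second alternative of the rule: ^(BRANCH ELSE $elseStat)
        children.append(("BRANCH", ["ELSE", else_stat]))
    return ("BRANCH_STATEMENT", children)


def count_label(tree, label):
    """Count nodes (inner nodes and leaves) carrying exactly this label."""
    if isinstance(tree, str):
        return int(tree == label)
    name, children = tree
    return int(name == label) + sum(count_label(c, label) for c in children)


with_else = branch_statement("(i <= j)", "stat1", "stat2")
without_else = branch_statement("(i <= j)", "stat1")
```

In this representation an `if` with an `else` part carries two `BRANCH` markers and one without carries a single one, which is the information a branch-counting metric needs.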

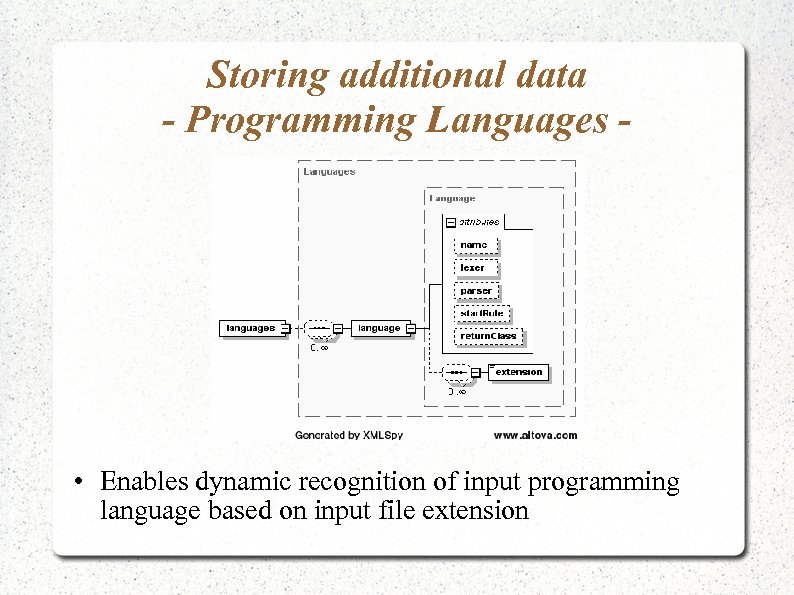

Storing additional data - Programming Languages -

- Enables dynamic recognition of the input programming language based on the input file extension
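A minimal sketch of extension-based recognition, assuming the supported languages are listed in a small XML structure. The element and attribute names (`languages`, `language`, `name`, `extension`) and both functions are hypothetical; the slides only state that the language is recognized from the file extension. The `.mod` and `.java` extensions are those of the example files used later in the deck.

```python
import os
import xml.etree.ElementTree as ET

# Hypothetical layout of the stored language data.
LANGUAGES_XML = """
<languages>
  <language name="Java" extension="java"/>
  <language name="Modula-2" extension="mod"/>
</languages>
"""


def load_languages(xml_text):
    """Map each file extension (lower case, no dot) to a language name."""
    root = ET.fromstring(xml_text.strip())
    return {
        lang.get("extension").lower(): lang.get("name")
        for lang in root.iter("language")
    }


def recognize_language(path, languages):
    """Return the language name for a source file, or None if unsupported."""
    extension = os.path.splitext(path)[1].lstrip(".").lower()
    return languages.get(extension)


languages = load_languages(LANGUAGES_XML)
```

With this layout, supporting another language on the recognition side would only require one more `language` entry.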

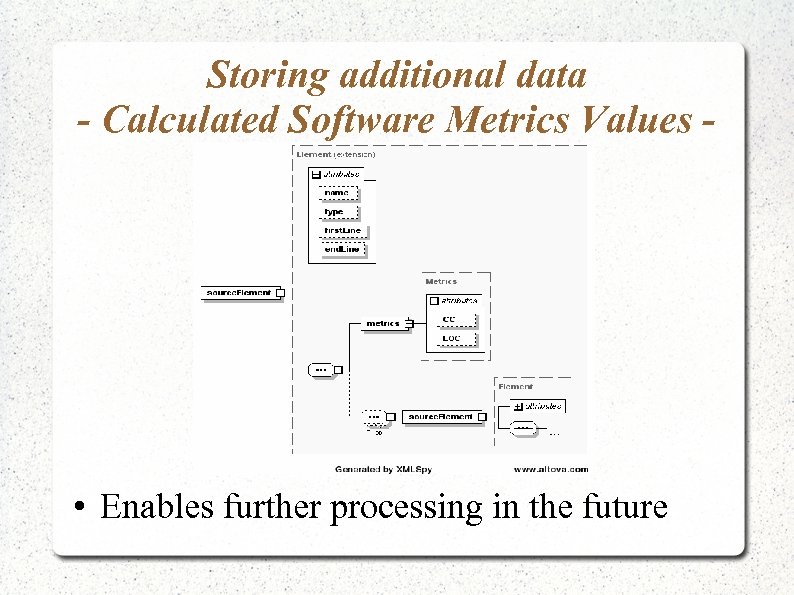

Storing additional data - Calculated Software Metrics Values -

- Enables further processing in the future

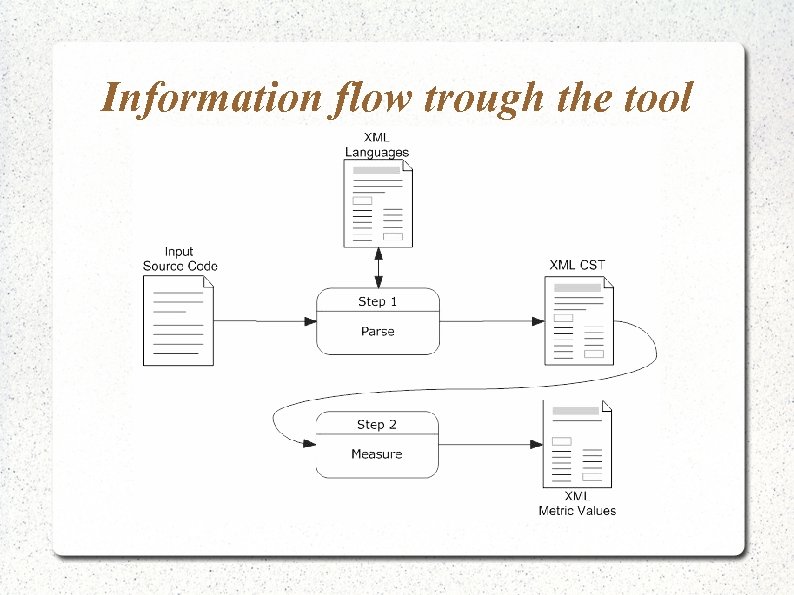

Information flow through the tool

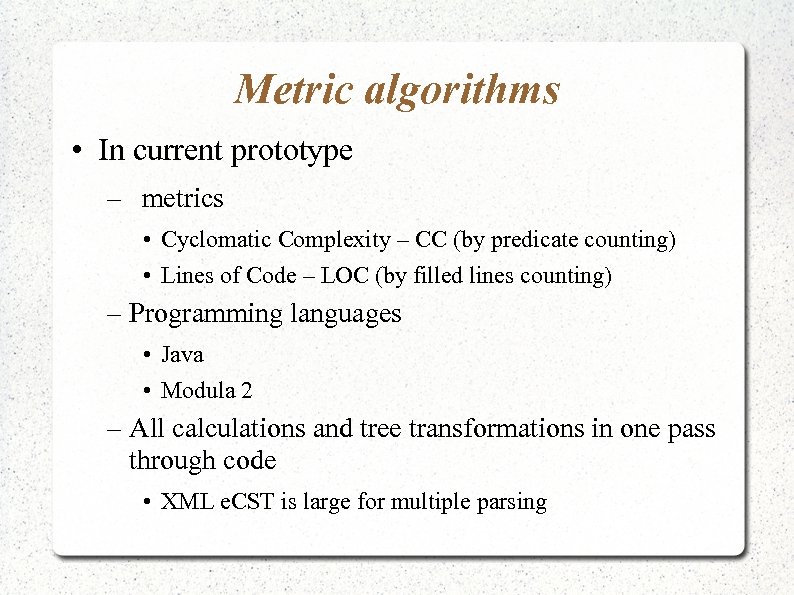

Metric algorithms

- In the current prototype
  - Metrics
    - Cyclomatic Complexity, CC (by predicate counting)
    - Lines of Code, LOC (by filled lines counting)
  - Programming languages
    - Java
    - Modula-2
  - All calculations and tree transformations are done in one pass through the code
    - The XML eCST is too large for multiple parsing
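The two counting rules named above can be sketched directly on plain text, independent of the eCST. This is only an illustration under stated assumptions: "filled lines" is taken to mean lines with at least one non-whitespace character, and predicate counting is taken to be the usual "number of predicates plus one" rule for a single routine. Both function names are hypothetical.

```python
def loc_filled_lines(source: str) -> int:
    """Count lines that contain at least one non-whitespace character."""
    return sum(1 for line in source.splitlines() if line.strip())


def cc_by_predicates(predicate_count: int) -> int:
    """Cyclomatic complexity of one routine: number of predicates plus one."""
    return predicate_count + 1


# A four-line fragment with one empty line and one predicate.
snippet = "if (i <= j) {\n\n    i++;\n}\n"
loc = loc_filled_lines(snippet)
cc = cc_by_predicates(1)
```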

Metric algorithms (2)

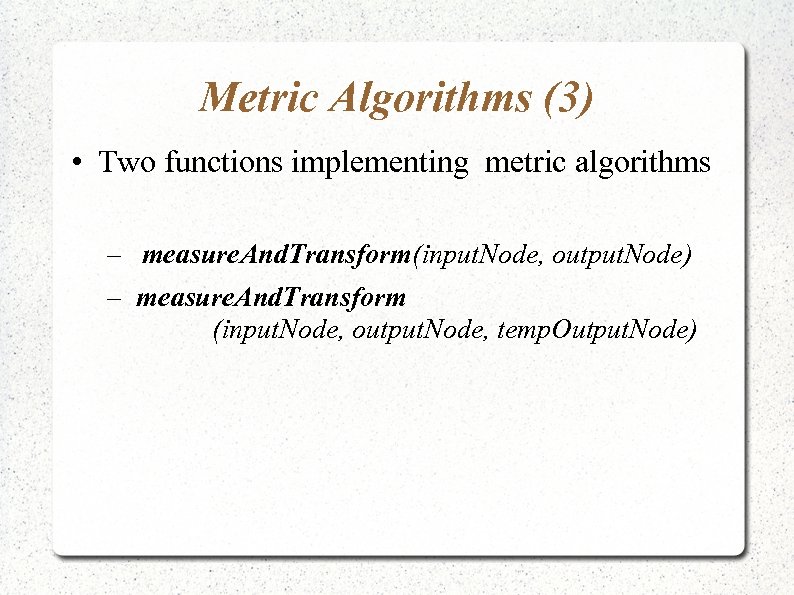

Metric Algorithms (3)

- Two functions implementing metric algorithms
  - measureAndTransform(inputNode, outputNode)
  - measureAndTransform(inputNode, outputNode, tempOutputNode)

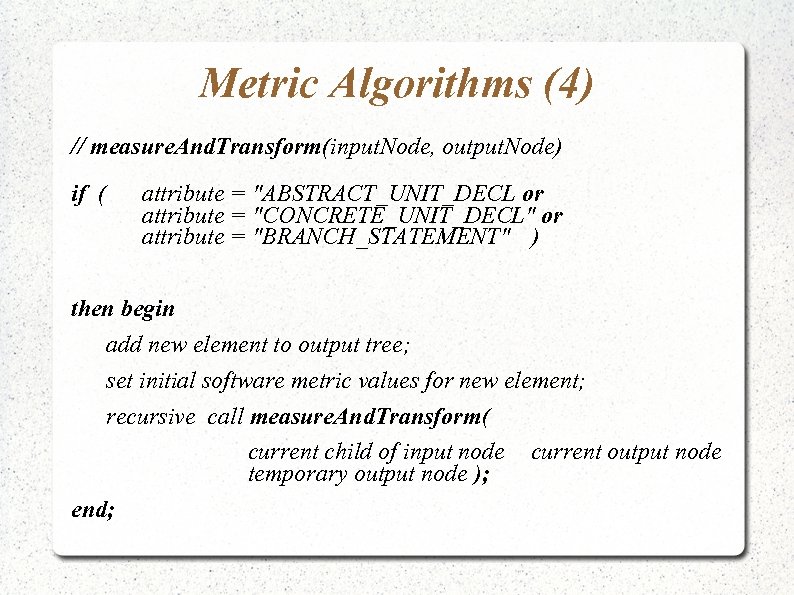

Metric Algorithms (4)

    // measureAndTransform(inputNode, outputNode)
    if ( attribute = "ABSTRACT_UNIT_DECL"
         or attribute = "CONCRETE_UNIT_DECL"
         or attribute = "BRANCH_STATEMENT" ) then
    begin
        add new element to output tree;
        set initial software metric values for new element;
        recursive call measureAndTransform(
            current child of input node,
            current output node,
            temporary output node );
    end;

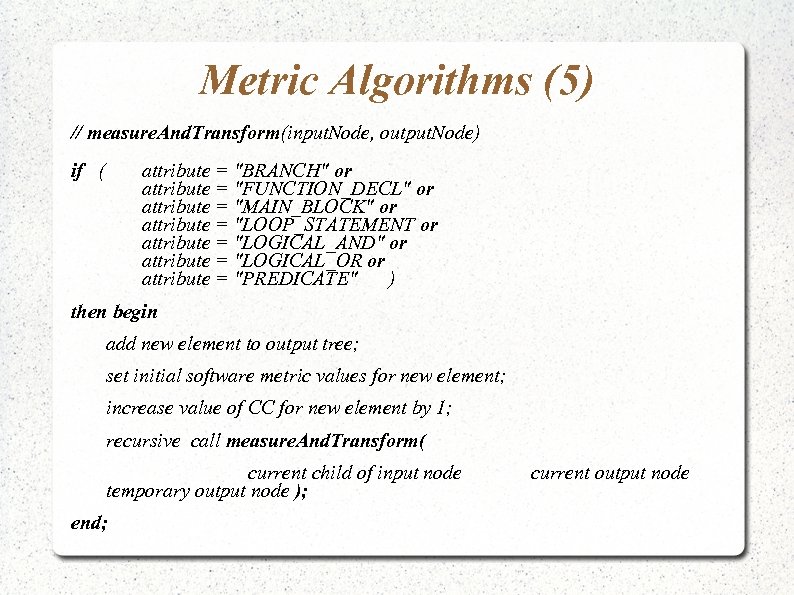

Metric Algorithms (5)

    // measureAndTransform(inputNode, outputNode)
    if ( attribute = "BRANCH"
         or attribute = "FUNCTION_DECL"
         or attribute = "MAIN_BLOCK"
         or attribute = "LOOP_STATEMENT"
         or attribute = "LOGICAL_AND"
         or attribute = "LOGICAL_OR"
         or attribute = "PREDICATE" ) then
    begin
        add new element to output tree;
        set initial software metric values for new element;
        increase value of CC for new element by 1;
        recursive call measureAndTransform(
            current child of input node,
            current output node,
            temporary output node );
    end;

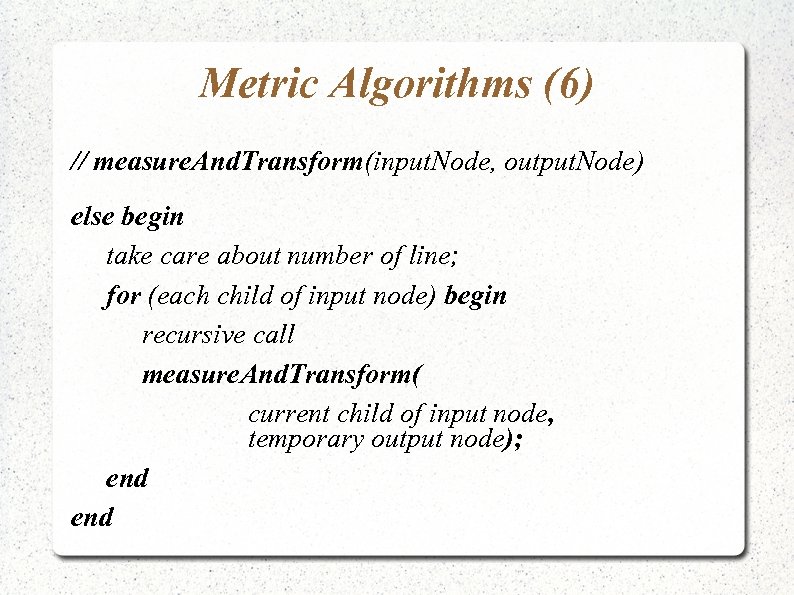

Metric Algorithms (6)

    // measureAndTransform(inputNode, outputNode)
    else
    begin
        keep track of the line number;
        for (each child of input node)
        begin
            recursive call measureAndTransform(
                current child of input node,
                temporary output node );
        end
    end

Metric Algorithms (7)

    // measureAndTransform(inputNode, outputNode, tempOutputNode)
    for (each child of input node)
    begin
        recursive call measureAndTransform(
            current child of input node,
            temporary output node );
    end
    calculate LOC for temporary output node;
    (* LOC = lastLineOfTheElement - firstLineOfTheElement + 1 *)
    add temporary output node as child of output node;
    increase CC of output node by CC of temporary output node;
    increase LOC of output node by LOC of temporary output node;
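The pseudocode on slides (4) to (7) can be approximated by a single recursive function. The sketch below is a simplified reading, not the authors' implementation: it merges the two `measureAndTransform` variants into one hypothetical function `measure`, assumes every eCST element carries a hypothetical `line` attribute, and computes each element's LOC from its own line span instead of accumulating it in the parent. The two sets of universal node names are taken from the slides.

```python
import xml.etree.ElementTree as ET

# Nodes that open a new output element without adding to CC (slide 4).
UNIT_NODES = {"ABSTRACT_UNIT_DECL", "CONCRETE_UNIT_DECL", "BRANCH_STATEMENT"}
# Nodes that open a new output element and add 1 to CC (slide 5).
CC_NODES = {"BRANCH", "FUNCTION_DECL", "MAIN_BLOCK", "LOOP_STATEMENT",
            "LOGICAL_AND", "LOGICAL_OR", "PREDICATE"}


def line_span(element):
    """Return (first, last) line over the `line` attributes in a subtree."""
    lines = [int(e.get("line")) for e in element.iter() if e.get("line")]
    return (min(lines), max(lines)) if lines else (0, -1)


def measure(input_node, output_node):
    """Walk the eCST once, mirror measured nodes into the output tree,
    and return the CC contributed by this subtree."""
    if input_node.tag in UNIT_NODES or input_node.tag in CC_NODES:
        new = ET.SubElement(output_node, input_node.tag)
        cc = 1 if input_node.tag in CC_NODES else 0
        for child in input_node:
            cc += measure(child, new)      # children add to their parent's CC
        first, last = line_span(input_node)
        new.set("CC", str(cc))
        new.set("LOC", str(last - first + 1))
        return cc
    # Any other node is passed through; nested results go to the same parent.
    return sum(measure(child, output_node) for child in input_node)


# Hypothetical eCST of a routine with one loop that contains one branch.
ECST = """
<FUNCTION_DECL line="1">
  <LOOP_STATEMENT line="2">
    <BRANCH_STATEMENT line="3">
      <BRANCH line="3"><IF line="3"/><STAT line="4"/></BRANCH>
    </BRANCH_STATEMENT>
  </LOOP_STATEMENT>
  <END line="6"/>
</FUNCTION_DECL>
"""

metrics = ET.Element("metrics")            # hypothetical output root
total_cc = measure(ET.fromstring(ECST.strip()), metrics)
function = metrics.find("FUNCTION_DECL")
```

The output tree keeps only the measured nodes, each annotated with `CC` and `LOC`, so it stays much smaller than the input eCST and is produced in a single traversal.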

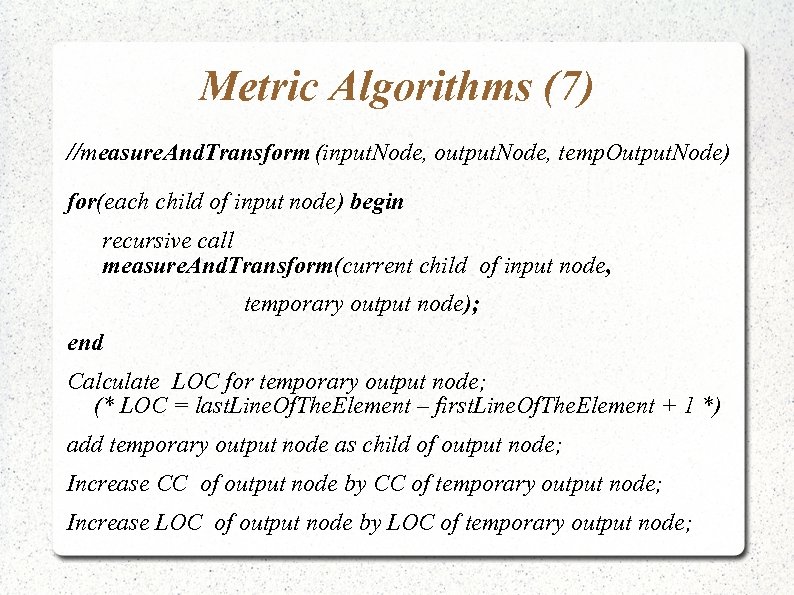

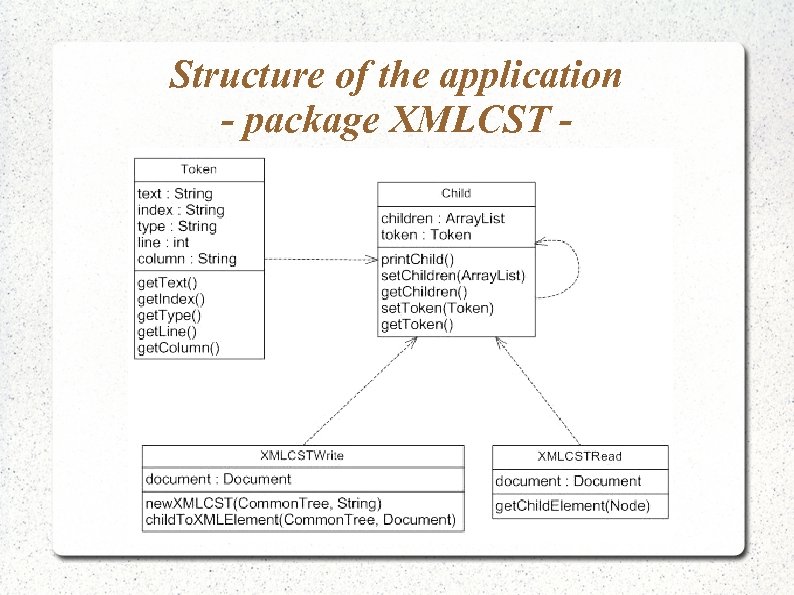

Structure of the application

Structure of the application - package Languages -

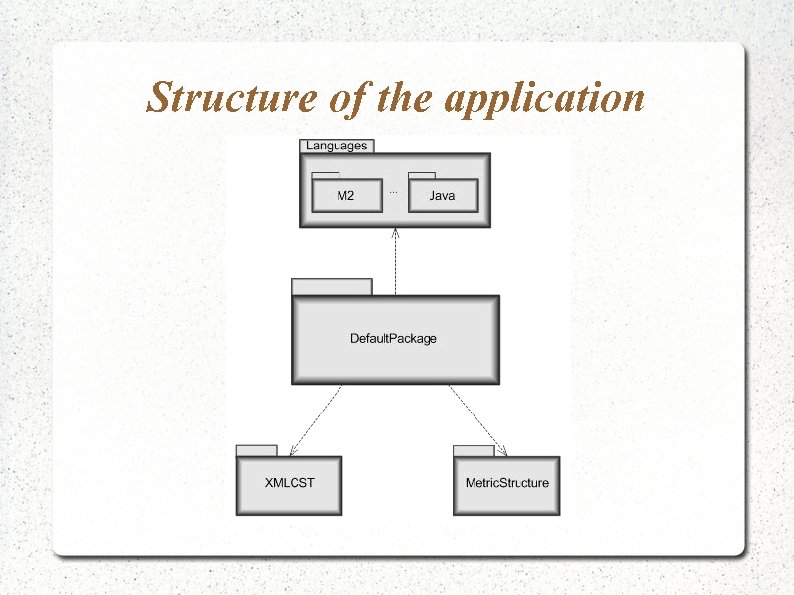

Structure of the application - package XMLCST -

Structure of the application - package MetricStructure -

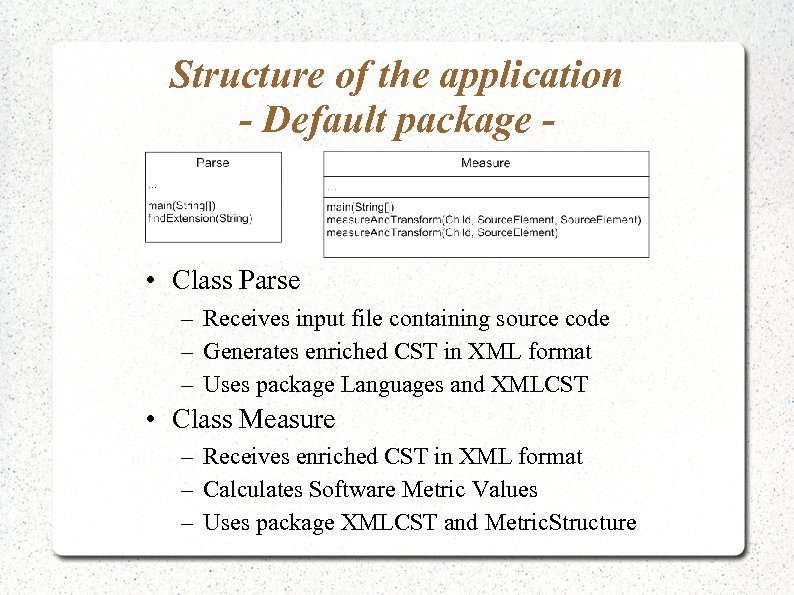

Structure of the application - Default package -

- Class Parse
  - Receives an input file containing source code
  - Generates the enriched CST in XML format
  - Uses packages Languages and XMLCST
- Class Measure
  - Receives the enriched CST in XML format
  - Calculates software metric values
  - Uses packages XMLCST and MetricStructure

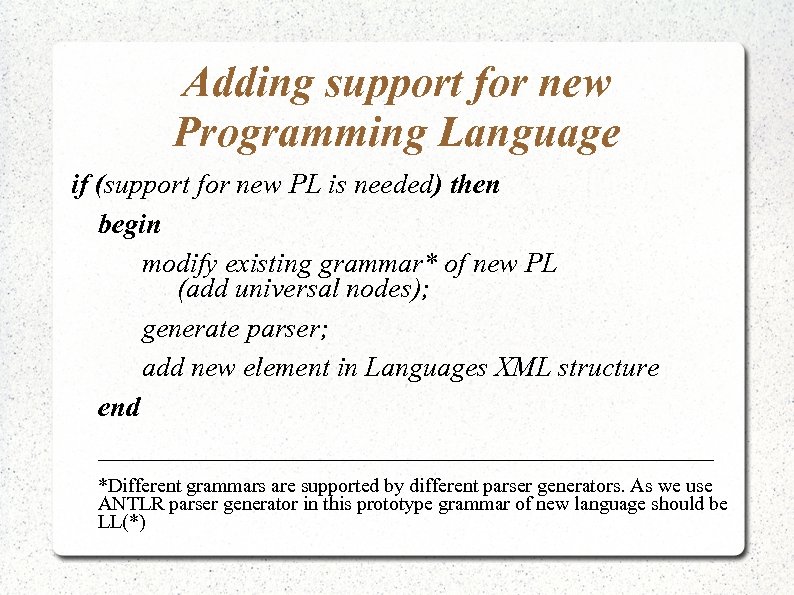

Adding support for a new Programming Language

    if (support for new PL is needed) then
    begin
        modify existing grammar* of new PL (add universal nodes);
        generate parser;
        add new element in Languages XML structure
    end

*Different parser generators support different grammars. Since this prototype uses the ANTLR parser generator, the grammar of a new language should be LL(*).

Adding support for new Software Metrics

    if (support for new Software Metrics is needed) then
    begin
        determine corresponding new universal nodes;
        modify grammars for all supported languages;
        regenerate language parsers;
        add attribute for storing new metric in XML structure for storing metric values;
        add software metric algorithm implementation in Measure class
    end

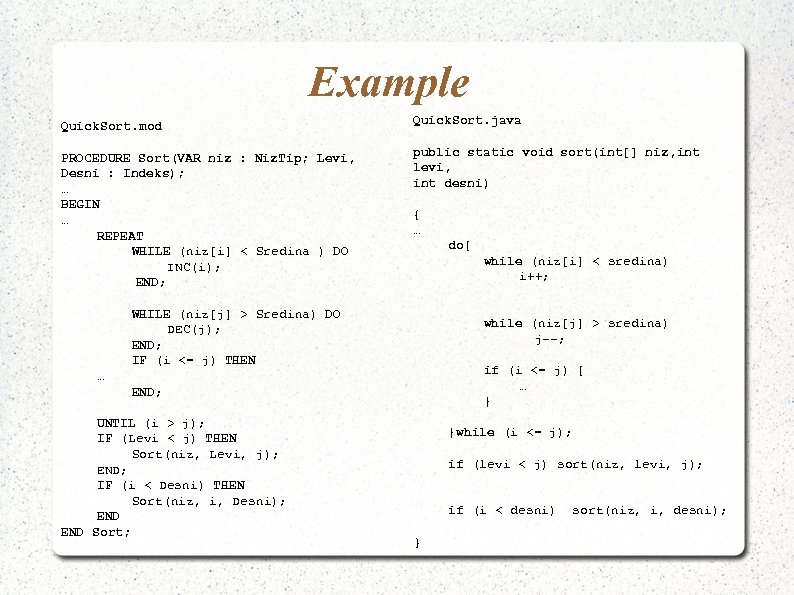

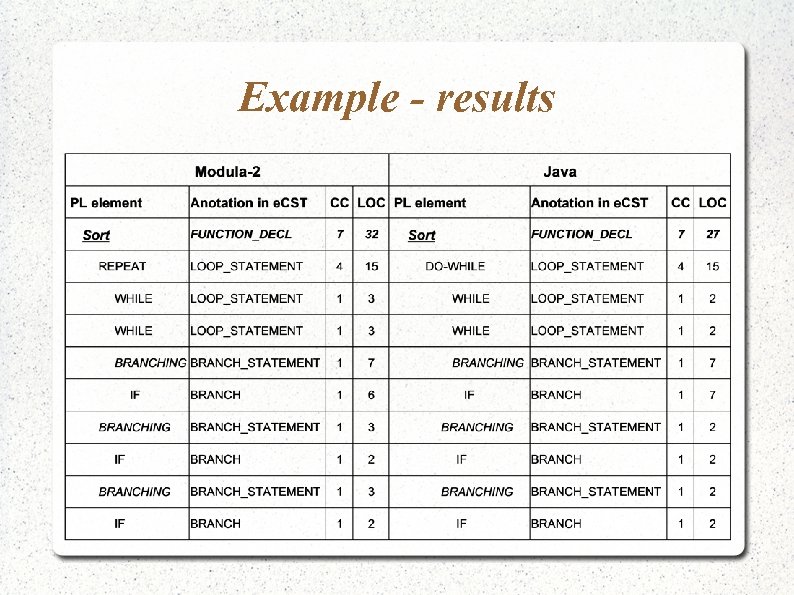

Example

QuickSort.mod

    PROCEDURE Sort(VAR niz : NizTip; Levi, Desni : Indeks);
    …
    BEGIN
      …
      REPEAT
        WHILE (niz[i] < Sredina) DO INC(i); END;
        WHILE (niz[j] > Sredina) DO DEC(j); END;
        IF (i <= j) THEN
          …
        END;
      UNTIL (i > j);
      IF (Levi < j) THEN Sort(niz, Levi, j); END;
      IF (i < Desni) THEN Sort(niz, i, Desni); END;
    END Sort;

QuickSort.java

    public static void sort(int[] niz, int levi, int desni) {
      …
      do {
        while (niz[i] < sredina) i++;
        while (niz[j] > sredina) j--;
        if (i <= j) {
          …
        }
      } while (i <= j);
      if (levi < j) sort(niz, levi, j);
      if (i < desni) sort(niz, i, desni);
    }

Example - results

Results and conclusion

- Motivation and background for development of a new software metrics tool which
  - Is platform independent
  - Is programming language independent
  - Calculates a broad spectrum of metrics
  - Supports keeping of source code history
  - Supports keeping of calculated values of software metrics

Results and conclusions (2) • Developed prototype of new software metrics tool which is – Developed in PL Java • is platform independent – Developed to support two input PLs • Is not programming language independent but base for it is sited – Developed to support two Software Metrics • Specter of metrics is not broad but base for it is sited – Supports keeping of source code history – Supports keeping of calculated values of software metrics ! It analyzes single compilation unit stores to single input file

Results and conclusions (2) • Developed prototype of new software metrics tool which is – Developed in PL Java • is platform independent – Developed to support two input PLs • Is not programming language independent but base for it is sited – Developed to support two Software Metrics • Specter of metrics is not broad but base for it is sited – Supports keeping of source code history – Supports keeping of calculated values of software metrics ! It analyzes single compilation unit stores to single input file


Future work
• Adding support for additional programming languages
  – Legacy languages: COBOL and Fortran?!
• Adding support for additional software metrics
  – For OO metrics, relationships between compilation units are needed!
• Interpreting calculated metric values
  – Represent them graphically
  – Interpret their meaning in order to provide useful information to the user



References
[Baxter et al., 1998] I. D. Baxter, A. Yahin, L. Moura, M. Sant'Anna, L. Bier, Clone Detection Using Abstract Syntax Trees, Proceedings of International Conference on Software Maintenance, 1998, ISBN 0-8186-8779-7, pp. 368-377
[Budimac et al., 2008] Z. Budimac, M. Ivanović, Odabrana poglavlja iz softverskog inzenjerstva i savremenih racunarskih tehnologija, Prirodno-matematički fakultet, Novi Sad, 2008, p. 320, ISBN 978-86-7031-112-1
[Christodoulakis et al., 1989] D. N. Christodoulakis, C. Tsalidis, C. J. M. van Gogh, V. W. Stinesen, Towards an automated tool for Software Certification, International Workshop on Tools for Artificial Intelligence, 1989. Architectures, Languages and Algorithms, IEEE, ISBN 0-81861984-8, pp. 670-676
[Ducasse et al., 1999] S. Ducasse, M. Rieger, S. Demeyer, A Language Independent Approach for Detecting Duplicated Code, Proceedings. IEEE International Conference on Software Maintenance, 1999 (ICSM '99), ISBN 0-7695-0016-1, pp. 109-118
[Ebert, Dumke, 2007] C. Ebert, R. Dumke, Software Measurement - Establish Extract Evaluate Execute, Springer-Verlag, Berlin, Heidelberg, New York, 2007, ISBN 978-3-540-71648-8
[Ebert et al., 2005] C. Ebert, R. Dumke, M. Bundschuh, A. Schmietendorf, Best Practices in Software Measurement - How to use metrics to improve project and process performance, Springer-Verlag, Berlin, Heidelberg, 2005, ISBN 3-540-20867-4
[Erdogmus, Tanir, 2002] H. Erdogmus, O. Tanir, Advances in software engineering: comprehension, evaluation, and evolution, Springer-Verlag, Berlin, Heidelberg, New York, 2002, ISBN 0-387-95109-1


References
[Grune et al., 2000] D. Grune, H. E. Bal, C. J. H. Jacobs, K. G. Langendoen, Modern compiler design, John Wiley & Sons, England, 2000, ISBN 0-471-97697-0, 753 p.
[Johnes, 2008] C. Jones, Applied Software Measurement - Global Analysis of Productivity and Quality, Third Edition, The McGraw-Hill Companies, 2008, ISBN 0-07-150244-0, 662 p.
[Kan, 2003] S. Kan, Metrics and Models in Software Quality Engineering, Second Edition, Addison-Wesley, Boston, 2003, ISBN 0-201-72915-6
[Neamtiu et al., 2005] I. Neamtiu, J. S. Foster, M. Hicks, Understanding source code evolution using abstract syntax tree matching, Proceedings of the International Conference on Software Engineering 2005, international workshop on Mining software repositories, ISBN 1-59593-1236, pp. 1-5
[Parr, 2007] T. Parr, The Definitive ANTLR Reference - Building Domain-Specific Languages, The Pragmatic Bookshelf, USA, 2007, ISBN 0-9787392-5-6
[Rakić, Budimac, Bothe, 2010] G. Rakić, Z. Budimac, K. Bothe, Towards a 'Universal' Software Metrics Tool - Motivation, Process and a Prototype, Proceedings of the 5th International Conference on Software and Data Technologies (ICSOFT), 2010, accepted
[Sager et al., 2006] T. Sager, A. Bernstein, M. Pinzger, C. Kiefer, Detecting Similar Java Classes Using Tree Algorithms, Proceedings of the International Conference on Software Engineering 2006, international workshop on Mining software repositories, ISBN 1-59593-397-2, pp. 65-71
[Truong et al., 2004] N. Truong, P. Roe, P. Bancroft, Static Analysis of Students' Java Programs, Sixth Australian Computing Education Conference (ACE 2004), Dunedin, New Zealand. Conferences in Research and Practice in Information Technology, Vol. 30, 2004, pp. 317-325


Web References/References to the Tools
[AivostoOy, 2009a] "Tutorial: How to use Project Analyzer v9, © 1998-2008 Aivosto Oy, URN:NBN:fi-fe200808171836", http://www.aivosto.com/project/tutorial-fi.pdf
[AivostoOy, 2009b] http://www.aivosto.com
[Budimac, Hericko, 2010] http://perun.pmf.uns.ac.rs/documents/Budimac-Hericko-ProjectCV.pdf
[CampwoodSoftware, 2009] SourceMonitor, http://www.campwoodsw.com/sourcemonitor.html
[Coco/R, 2010] Coco/R, http://www.ssw.uni-linz.ac.at/Coco/
[CodeSquale, 2009a] CodeSquale, http://code.google.com/p/codesquale/
[CodeSquale, 2009b] CodeSquale, http://codesquale.googlepages.com/
[Geronesoft, 2009] http://www.geronesoft.com/


Web References/References to the Tools
[McCabe, 2010] http://www.mccabe.com/
[Parr, 2009] ANTLR, http://www.antlr.org
[PowerSoftware, 2009a] Krakatau Suite Management Overview, http://www.powersoftware.com/
[PowerSoftware, 2009b] Krakatau Essential PM (KEPM) - User guide 1.11.0.0, http://www.powersoftware.com/
[ScientificToolworks, 2009] Understand 2.0 User Guide and Reference Manual, Copyright © 2008 Scientific Toolworks, March 2008, http://www.scitools.com
[SmLab, 2009] Software Measurement Laboratory, TOOL OVERVIEW, http://www.smlab.de/CAME.tools.mcomp.html
[Wheeler, 2009] SLOCCount User's Guide, David A. Wheeler, August 1, 2004, Version 2.26, http://www.dwheeler.com/sloccount.html


Thank you Hvala
