Lecture on special course.pptx

- Number of slides: 73

Processing of Financial Information with Office Applications IFF, 2013 Teacher: associate professor Berzin D. V.

Information Systems as a discipline • An information system is any combination of information technology and people's activities that supports operations, management and decision making. In a very broad sense, the term information system is frequently used to refer to the interaction between people, processes, data and technology.

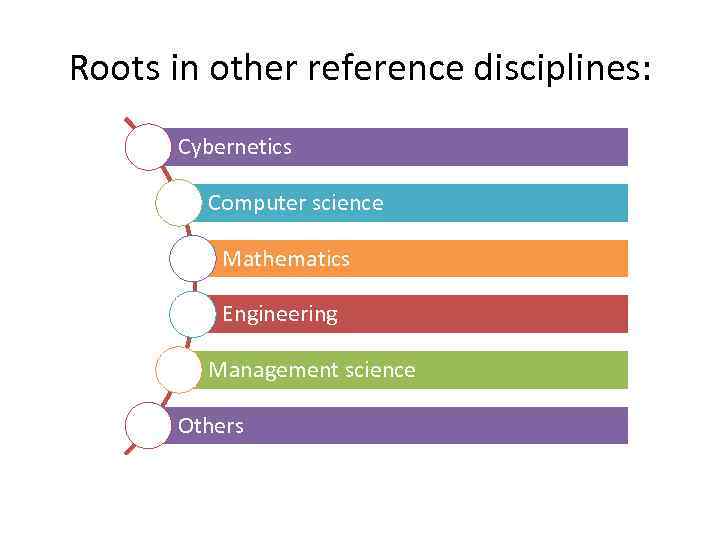

Roots in other reference disciplines: Cybernetics, Computer science, Mathematics, Engineering, Management science, Others

Increasing importance of the IS • Today, information systems play, and will continue to play, an increasingly important role in achieving companies' strategic goals. • Pursuing these strategic goals places new requirements on information systems and their functions.

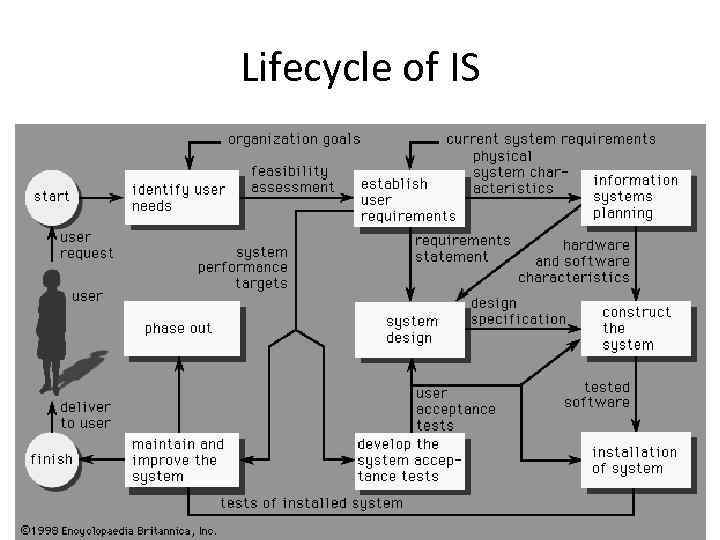

Lifecycle of IS

Features of IS • An IS cannot be merely a trivial tool. • An IS should enable new information-based products and services that give the business a competitive advantage in the market.

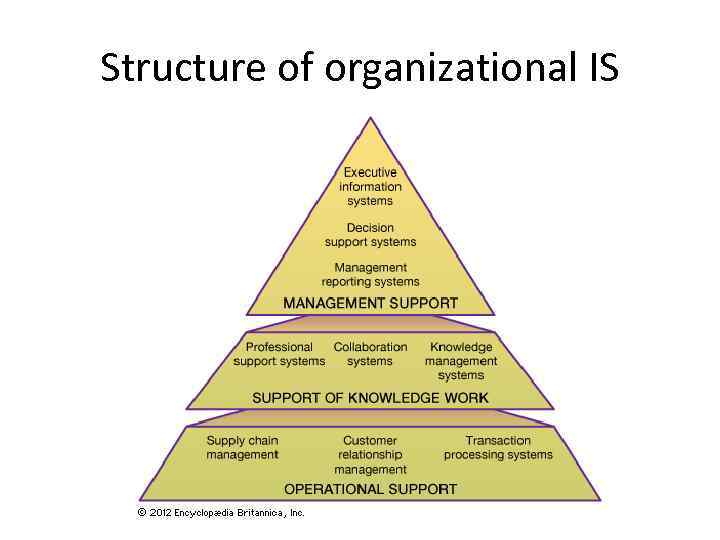

Structure of organizational IS

Management and IS • The information technologies used at the enterprise support the implementation of managers' decisions. • In turn, however, new systems and technologies dictate specific conditions for doing business, shaping companies' structures and strategies.

Management and IS • A manager must have sufficient knowledge of IS. • A manager should be able to derive the maximum benefit from the potential advantages of information technologies. A manager must know enough to manage the application and development of information technologies in the company and to judge whether additional spending of resources in this area is required.

Information technologies • Information technology (IT) is a wide class of disciplines and spheres of activity concerned with the technologies for creating and managing processes of working with data and information, including processes that use computing and communication equipment.

Information, data and knowledge: characteristics and role • In the 20th century the word "information" was used in many scientific fields, each with its own definition and interpretation. • Information is a primary and fundamental concept, one of the basic categories of the conceptual apparatus of science. • Closely related categories are matter, system, structure and reflection.

Data • Data (from Latin data, "things given") is a representation of facts and ideas in a formalized form, suitable for transmission and processing in some information process. Data that has been extracted from a system and exists separately on its own carrier is information.

Knowledge • In the philosophical sense, knowledge is the comprehension of what has been consciously perceived; in a broad sense, it is the set of concepts, theoretical constructs and representations that adequately reflect the objective laws of the real world. • Knowledge can also refer to the regularities of a subject domain (principles, relationships, laws) obtained through practical activity and professional experience, which allow experts to formulate and solve problems in that domain.

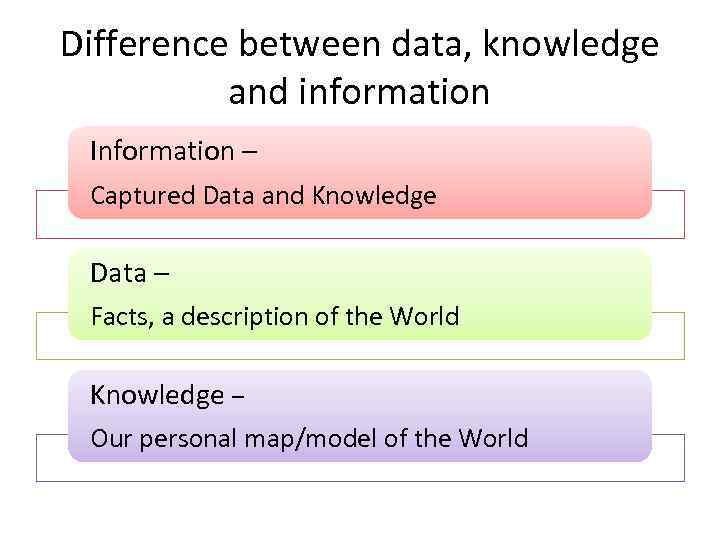

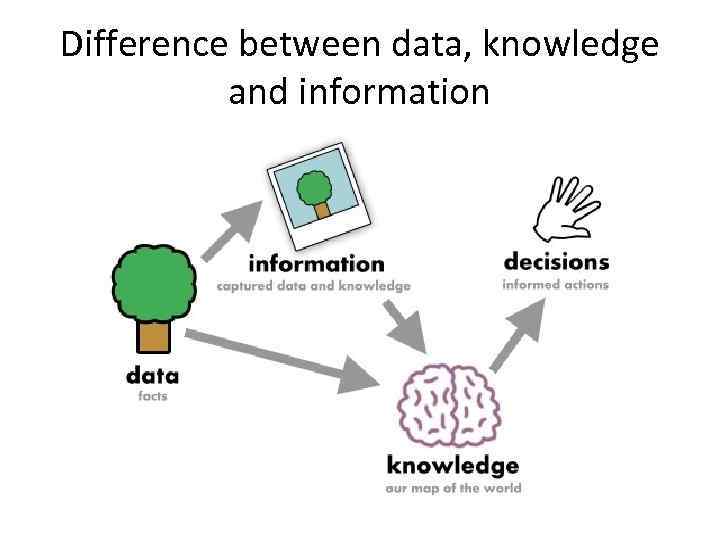

Difference between data, knowledge and information • Information: captured data and knowledge • Data: facts, a description of the world • Knowledge: our personal map/model of the world

Difference between data, knowledge and information

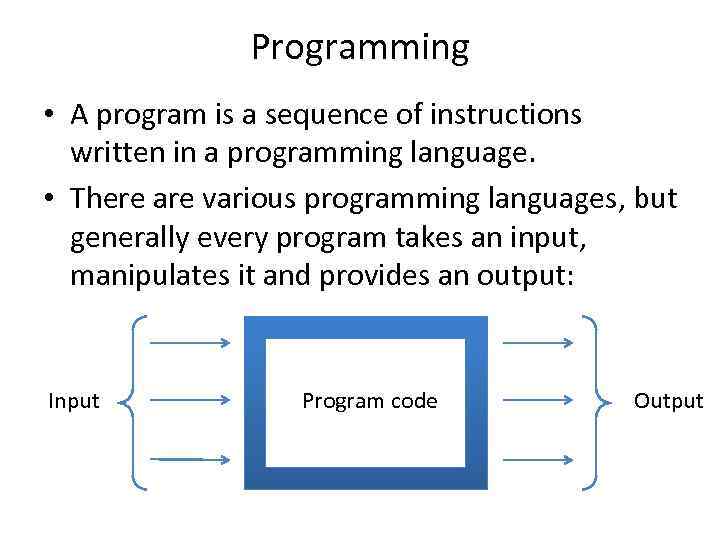

Programming • A program is a sequence of instructions written in a programming language. • There are various programming languages, but generally every program takes an input, manipulates it and provides an output: Input → Program code → Output
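
A minimal sketch of the input → processing → output pattern, written in Python rather than the Visual Basic used later in this course. The comma-separated amounts and the function names are hypothetical, chosen only for illustration.

```python
def read_input(raw: str) -> list[float]:
    """Input: turn a comma-separated string of amounts into numbers."""
    return [float(part) for part in raw.split(",") if part.strip()]


def process(amounts: list[float]) -> float:
    """Processing: manipulate the input (here, add the amounts together)."""
    return sum(amounts)


def show_output(total: float) -> str:
    """Output: format the result for the user."""
    return f"Total: {total:.2f}"


print(show_output(process(read_input("120.50, 80, 99.50"))))  # Total: 300.00
```

Keeping the three stages in separate functions makes it easy to change, for example, the output format without touching the calculation.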

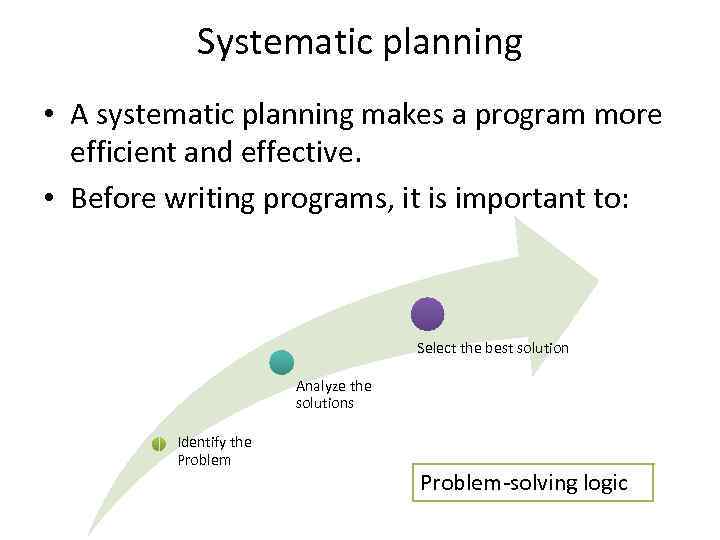

Systematic planning • Systematic planning makes a program more efficient and effective. • Before writing programs, it is important to: 1. Identify the problem-solving logic 2. Analyze the solutions 3. Select the best solution
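
The planning steps can be illustrated with a small, hypothetical problem: totalling the integers 1 to n. Once the logic is identified, two candidate solutions are analyzed (checked to give the same answers), and the more efficient one is selected. This Python sketch is only an illustration, not a prescribed method.

```python
def sum_by_loop(n: int) -> int:
    """Candidate solution A: add the numbers 1..n one at a time (n additions)."""
    total = 0
    for k in range(1, n + 1):
        total += k
    return total


def sum_by_formula(n: int) -> int:
    """Candidate solution B: the formula n(n + 1) / 2 (a fixed number of steps)."""
    return n * (n + 1) // 2


# Analyze: both solutions must agree on the same inputs before one is chosen.
for n in (0, 1, 10, 1000):
    assert sum_by_loop(n) == sum_by_formula(n)

# Select: B does the same job in constant time, so it is the better choice here.
print(sum_by_formula(1000))  # 500500
```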

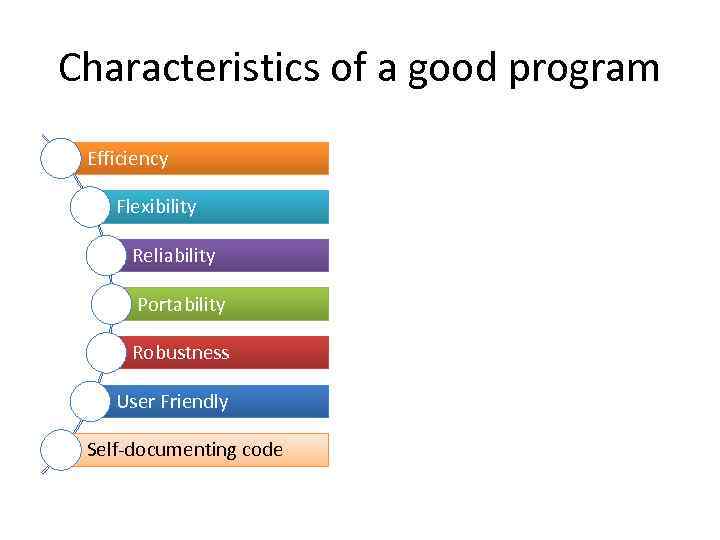

Characteristics of a good program: efficiency, flexibility, reliability, portability, robustness, user friendliness, self-documenting code
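
As a rough illustration of robustness and self-documenting code, the hypothetical Python function below rejects bad input with a readable message instead of silently producing a wrong value, and its name and docstring explain its purpose. It shows one possible style, not a standard.

```python
import math


def parse_non_negative_amount(text: str) -> float:
    """Convert user-typed text to a non-negative, finite amount of money.

    Raises ValueError with a readable message instead of returning a wrong value.
    """
    try:
        amount = float(text)
    except ValueError:
        raise ValueError(f"Not a number: {text!r}") from None
    if not math.isfinite(amount):
        raise ValueError(f"Amount must be finite, got {text!r}")
    if amount < 0:
        raise ValueError(f"Amount must be non-negative, got {amount}")
    return amount


for user_text in ("250.75", "-10", "abc"):
    try:
        print(parse_non_negative_amount(user_text))
    except ValueError as error:
        print("Rejected:", error)
```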

5 Generations of Programming Languages • A programming language is a set of rules that tells the computer what operations to perform. • Programmers use these languages to create other kinds of software. 1. Machine language 2. Assembly language 3. High-level languages 4. Very high-level languages 5. Natural languages

1. First Generation: Machine language • In the narrow sense, programming is considered to be coding. • Machine code (machine language) is the basic language of the computer, representing data as 1s and 0s. • Machine-code instructions can be executed directly by a processor. • This code is the lowest level of software. • It was widely used in the past, but nowadays programmers write source code in high-level languages (C++, C#, Java).
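
To show what "representing data as 1s and 0s" means, the Python sketch below prints the 8-bit binary form of a few integers. Machine code encodes instructions in the same way, but the 8-bit width used here is an arbitrary choice for illustration.

```python
def to_binary(value: int, width: int = 8) -> str:
    """Return the unsigned binary representation of value, zero-padded to width bits."""
    if not 0 <= value < 2 ** width:
        raise ValueError(f"{value} does not fit in {width} bits")
    return format(value, f"0{width}b")


for number in (5, 65, 255):
    print(number, "->", to_binary(number))
# 5 -> 00000101
# 65 -> 01000001
# 255 -> 11111111
```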

2. Second Generation: Assembly language • Assembly language is a low-level programming language that allows a programmer to write a program using abbreviations or easily remembered words (mnemonics) instead of numbers. • It is still widely used in situations where execution time is critical.
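
A toy assembler in Python shows how mnemonics stand in for numbers. The instruction set (LOAD, ADD, STORE, HALT), the 4-bit opcodes and the one-byte instruction format are all hypothetical, invented for this example; real processors define their own encodings.

```python
# Hypothetical 4-bit opcodes for an imaginary machine (not a real CPU).
OPCODES = {"LOAD": 0x1, "ADD": 0x2, "STORE": 0x3, "HALT": 0xF}


def assemble(source: str) -> list[int]:
    """Translate mnemonic lines such as 'ADD 5' into one-byte machine words."""
    machine_code = []
    for line in source.strip().splitlines():
        parts = line.split()
        if not parts:
            continue  # Skip blank lines.
        mnemonic = parts[0].upper()
        operand = int(parts[1]) if len(parts) > 1 else 0
        if mnemonic not in OPCODES:
            raise ValueError(f"Unknown mnemonic: {mnemonic}")
        if not 0 <= operand <= 15:
            raise ValueError(f"Operand out of range: {operand}")
        # High 4 bits hold the opcode, low 4 bits hold the operand (an address).
        machine_code.append((OPCODES[mnemonic] << 4) | operand)
    return machine_code


program = """
LOAD 4
ADD 5
STORE 6
HALT
"""
for word in assemble(program):
    print(format(word, "08b"))
# 00010100
# 00100101
# 00110110
# 11110000
```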

3. Third Generation: High-level or Procedural Languages • A high-level language resembles a human language such as English. • The advantage is that, once the program has been translated into object code, it runs faster than if it had to be translated each time it is executed. • Some of the most popular procedural languages are Visual BASIC, C and C++.

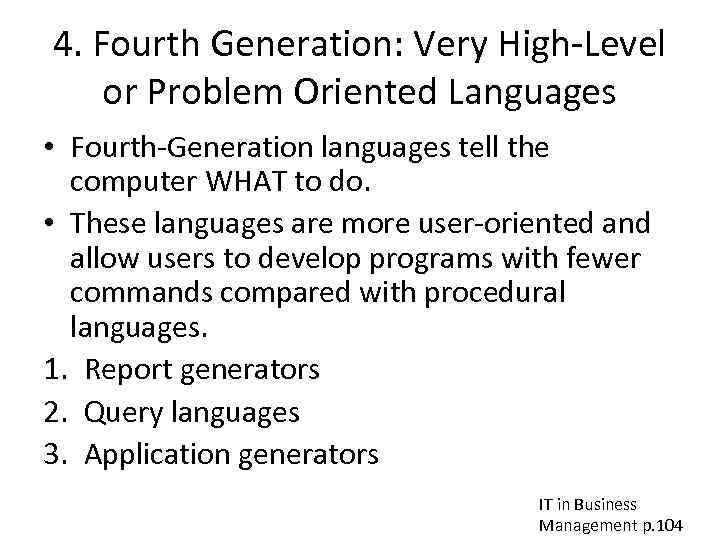

4. Fourth Generation: Very High-Level or Problem-Oriented Languages • Fourth-generation languages tell the computer WHAT to do rather than how to do it. • These languages are more user-oriented and allow users to develop programs with fewer commands than procedural languages require. Examples: 1. Report generators 2. Query languages 3. Application generators IT in Business Management p. 104
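
To contrast the two styles, the Python sketch below computes per-client payment totals twice: once with an explicit loop (procedural: HOW) and once with an SQL query run through Python's built-in sqlite3 module (declarative: WHAT). SQL is used here as one common example of a query language; the table and data are hypothetical.

```python
import sqlite3

payments = [("Alice", 120.0), ("Bob", 80.0), ("Alice", 30.0)]

# Procedural (3GL) style: spell out HOW to compute each total, step by step.
totals_procedural: dict[str, float] = {}
for client, amount in payments:
    totals_procedural[client] = totals_procedural.get(client, 0.0) + amount

# Query-language (4GL) style: state WHAT result is wanted; the engine decides how.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE payments (client TEXT, amount REAL)")
conn.executemany("INSERT INTO payments VALUES (?, ?)", payments)
rows = conn.execute(
    "SELECT client, SUM(amount) FROM payments GROUP BY client ORDER BY client"
).fetchall()
conn.close()

print(totals_procedural)  # {'Alice': 150.0, 'Bob': 80.0}
print(rows)               # [('Alice', 150.0), ('Bob', 80.0)]
```

The query is a single statement, while the procedural version needs a loop and an explicit accumulator.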

5. Fifth Generation: Natural Languages • Natural languages are of two types. • The first are ordinary human languages: English, Spanish etc. • The second are programming languages that use human language to provide people a more natural connection with computers. • These languages are part of the field of study known as artificial intelligence.

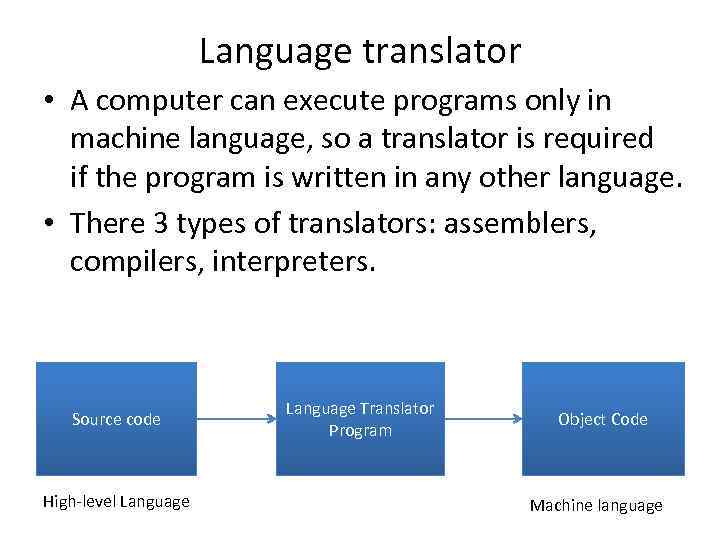

Language translator • A computer can execute programs only in machine language, so a translator is required if the program is written in any other language. • There are 3 types of translators: assemblers, compilers and interpreters. Source code (high-level language) → Translator program → Object code (machine language)

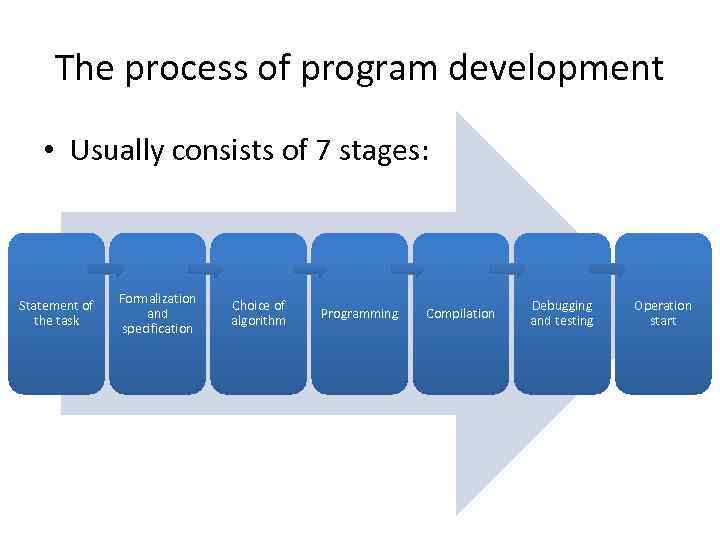

The process of program development • Usually consists of 7 stages: 1. Statement of the task 2. Formalization and specification 3. Choice of algorithm 4. Programming 5. Compilation 6. Debugging and testing 7. Start of operation

Historical Evolution of Computers

Historical Evolution of Computers • The first computers were people! • "Computer" was originally a job title: it described human beings whose job was to perform repetitive calculations. • Computers were invented because people were searching for fast and accurate calculating devices.

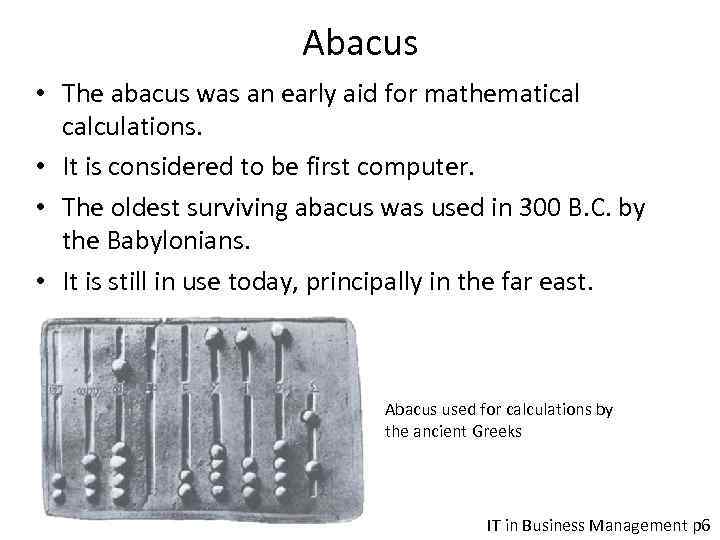

Abacus • The abacus was an early aid for mathematical calculations. • It is considered to be the first computer. • The oldest surviving abacus was used in 300 B.C. by the Babylonians. • It is still in use today, principally in the Far East. Abacus used for calculations by the ancient Greeks IT in Business Management p 6

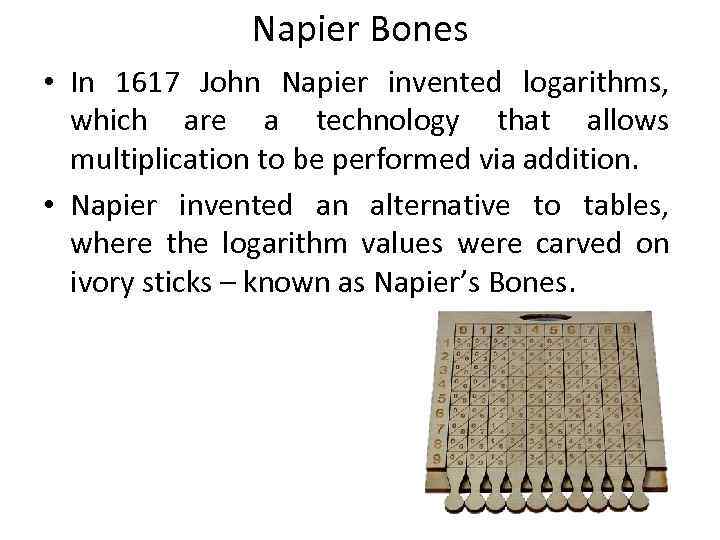

Napier Bones • In 1614 John Napier published his invention of logarithms, a technology that allows multiplication to be performed via addition. • In 1617 Napier also described an alternative to written multiplication tables: the table values were carved on ivory rods, known as Napier's Bones.
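
The property that lets logarithms replace multiplication with addition is log(a × b) = log(a) + log(b). A minimal Python sketch, valid for positive numbers only (math.log10 raises ValueError otherwise):

```python
import math


def multiply_with_logs(a: float, b: float) -> float:
    """Multiply two positive numbers by adding their base-10 logarithms."""
    # log10(a * b) == log10(a) + log10(b): one addition replaces the multiplication.
    log_sum = math.log10(a) + math.log10(b)
    # Converting back is the "antilogarithm" step a table user would look up.
    return 10 ** log_sum


print(round(multiply_with_logs(25, 40), 6))  # 1000.0
```

A table user would read log(25) ≈ 1.39794 and log(40) ≈ 1.60206 from printed tables, add them to get 3.0, and look up the antilogarithm 1000.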

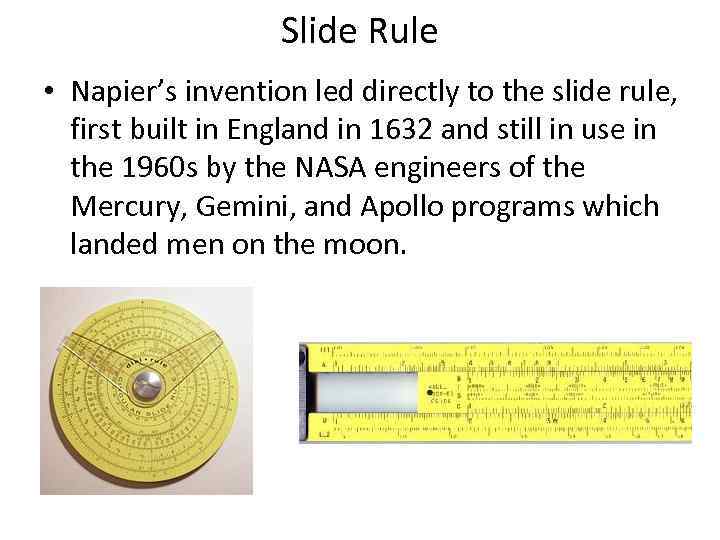

Slide Rule • Napier's invention led directly to the slide rule, first built in England in 1632 and still used in the 1960s by the NASA engineers of the Mercury, Gemini and Apollo programs, which landed men on the Moon.

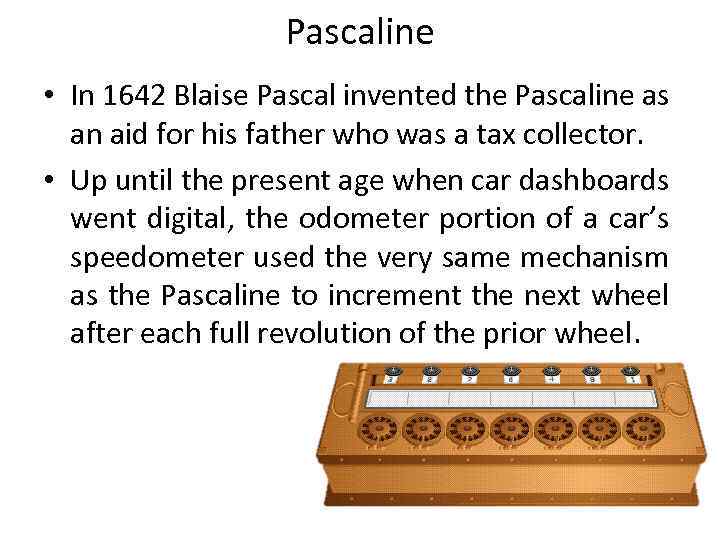

Pascaline • In 1642 Blaise Pascal invented the Pascaline to help his father, who was a tax collector. • Until car dashboards went digital, the odometer in a car's speedometer used the very same mechanism as the Pascaline: after each full revolution of one wheel, the next wheel is advanced by one step.
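
The carry mechanism can be sketched in Python as a row of decimal wheels: adding one to a wheel that shows 9 resets it to 0 and advances the wheel to its left. The list-of-digits representation is an assumption made for illustration.

```python
def increment(wheels: list[int]) -> list[int]:
    """Add 1 to a row of decimal wheels (rightmost = units), carrying like the Pascaline."""
    result = wheels.copy()
    position = len(result) - 1
    while position >= 0:
        result[position] += 1
        if result[position] < 10:
            break  # No full revolution, so no carry is needed.
        result[position] = 0  # The wheel completed a revolution: reset it...
        position -= 1         # ...and advance the next wheel to the left.
    return result


print(increment([0, 1, 9, 9]))  # [0, 2, 0, 0]
print(increment([9, 9, 9]))     # [0, 0, 0]
```

A row of all nines rolls over to all zeros, just as a mechanical odometer does.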

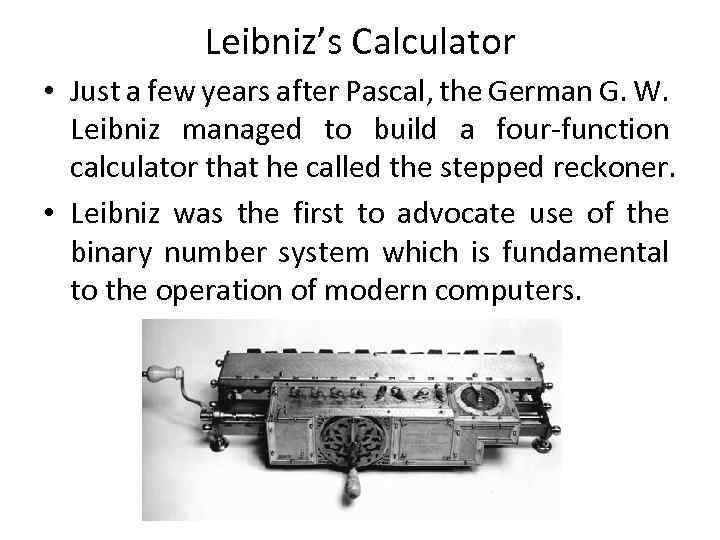

Leibniz's Calculator • A few decades after Pascal, the German G. W. Leibniz managed to build a four-function calculator that he called the stepped reckoner. • Leibniz was an early advocate of the binary number system, which is fundamental to the operation of modern computers.

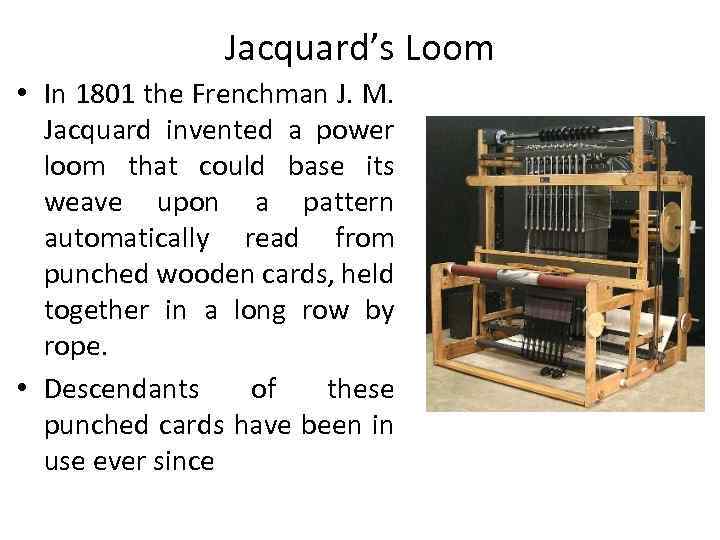

Jacquard's Loom • In 1801 the Frenchman J. M. Jacquard invented a power loom that could base its weave upon a pattern automatically read from punched wooden cards, held together in a long row by rope. • Descendants of these punched cards have been in use ever since.

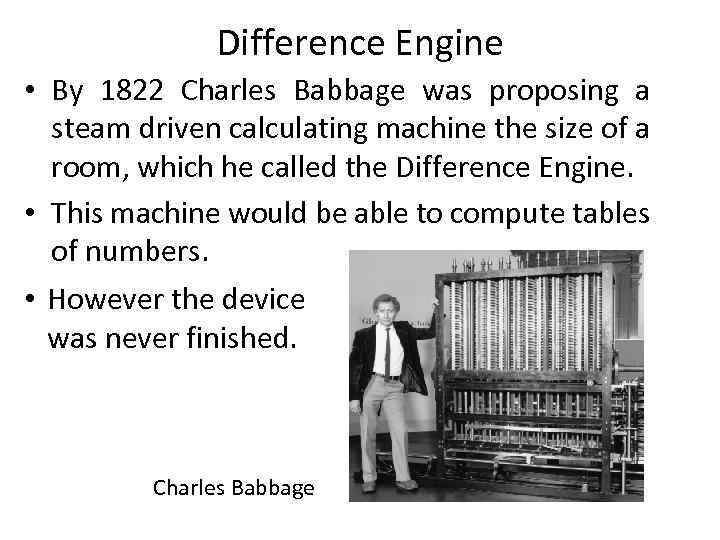

Difference Engine • By 1822 Charles Babbage was proposing a steam-driven calculating machine the size of a room, which he called the Difference Engine. • This machine would be able to compute tables of numbers. • However, the device was never finished. Charles Babbage

Analytical Engine • This device, large as a house and powered by 6 steam engines, would be more general purpose in nature because it would be programmable, thanks to the punched card technology of Jacquard. • The Analytical Engine also had a key function that distinguishes computers from calculators: the conditional statement.

Hollerith's Machine • The Hollerith desk consisted of a card reader, which sensed the holes in the cards, a gear-driven mechanism and a large wall of dial indicators to display the results of the count. • Hollerith founded a company, the Tabulating Machine Company, which after a few buyouts eventually became International Business Machines (IBM).

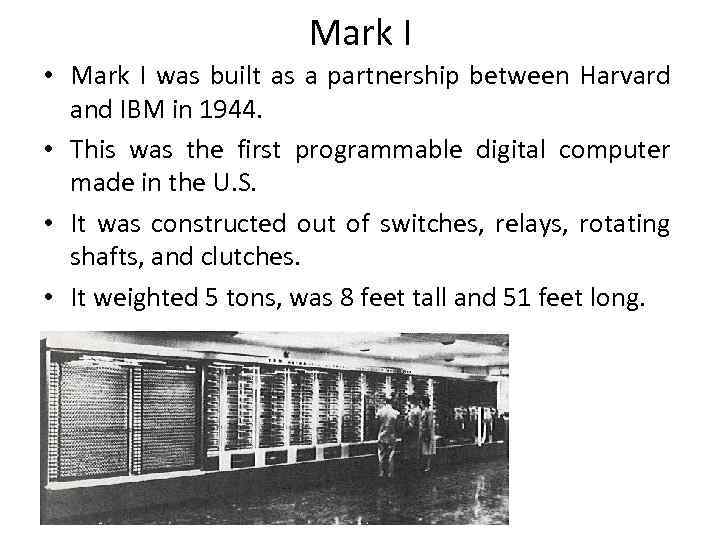

Mark I • Mark I was built as a partnership between Harvard and IBM in 1944. • This was the first programmable digital computer made in the U.S. • It was constructed out of switches, relays, rotating shafts and clutches. • It weighed 5 tons, was 8 feet tall and 51 feet long.

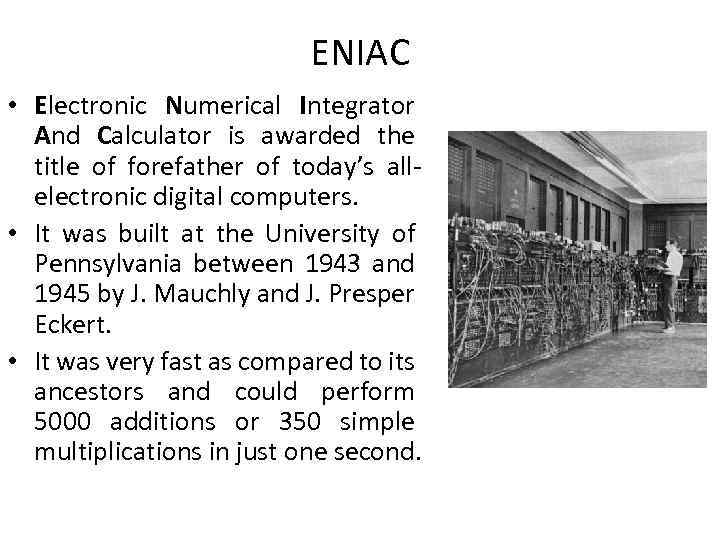

ENIAC • The Electronic Numerical Integrator and Computer is regarded as the forefather of today's all-electronic digital computers. • It was built at the University of Pennsylvania between 1943 and 1945 by J. Mauchly and J. Presper Eckert. • It was very fast compared to its ancestors and could perform 5000 additions or 350 simple multiplications in one second.

UNIVAC • The Universal Automatic Computer was one of the first commercially produced computers, built by a private company, Remington Rand (through its Eckert-Mauchly division). • Another machine of the same era, the Electronic Discrete Variable Automatic Computer (EDVAC), had a storage capacity of 1024 words of 44 bits each.

Types of computers

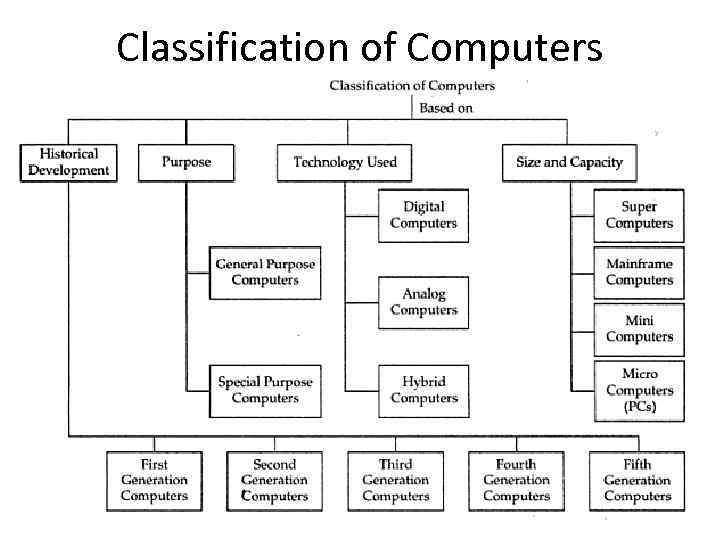

Classification of Computers

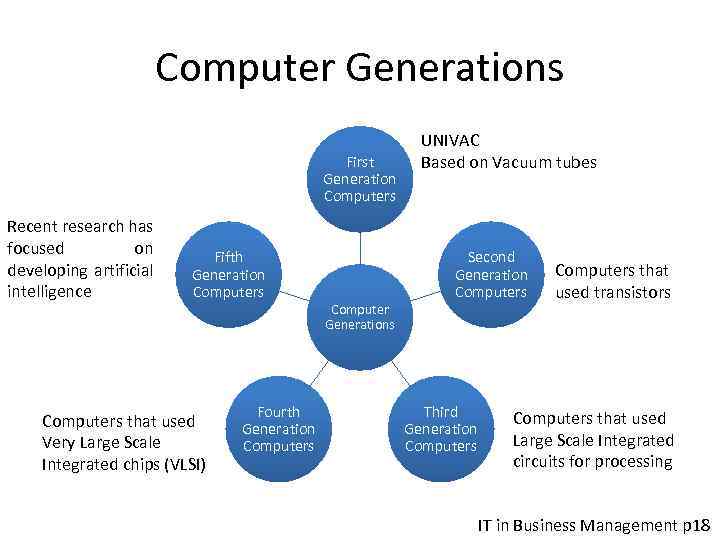

Computer Generations • First Generation: UNIVAC, based on vacuum tubes • Second Generation: computers that used transistors • Third Generation: computers that used Large Scale Integrated circuits for processing • Fourth Generation: computers that used Very Large Scale Integrated chips (VLSI) • Fifth Generation: recent research has focused on developing artificial intelligence IT in Business Management p 18

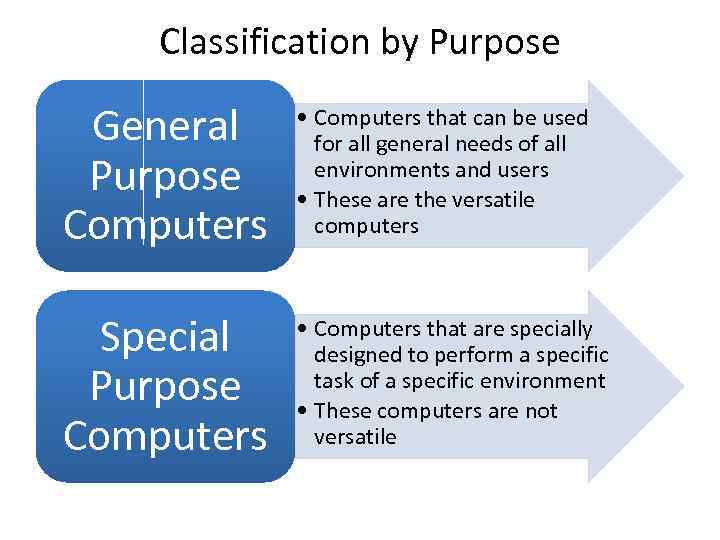

Classification by Purpose General Purpose Computers • Computers that can be used for all general needs of all environments and users • These are the versatile computers Special Purpose Computers • Computers that are specially designed to perform a specific task of a specific environment • These computers are not versatile

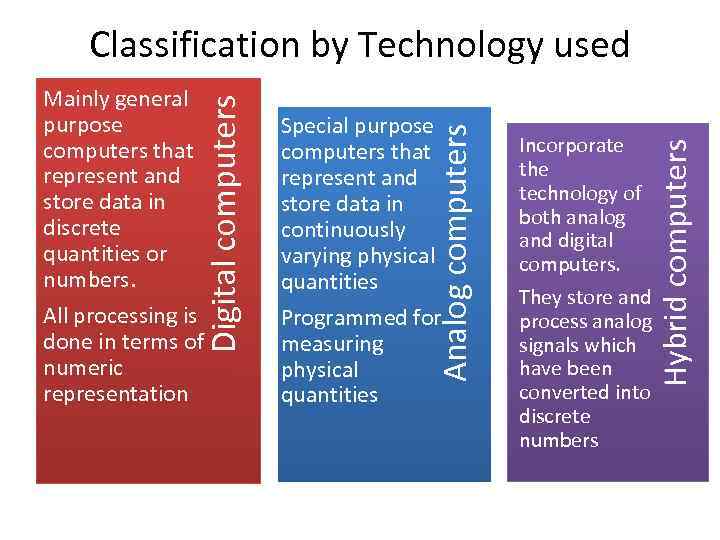

Classification by Technology used • Digital computers: mainly general purpose computers that represent and store data in discrete quantities or numbers; all processing is done in terms of numeric representation. • Analog computers: special purpose computers that represent and store data in continuously varying physical quantities; programmed for measuring physical quantities. • Hybrid computers: incorporate the technology of both analog and digital computers; they store and process analog signals which have been converted into discrete numbers.

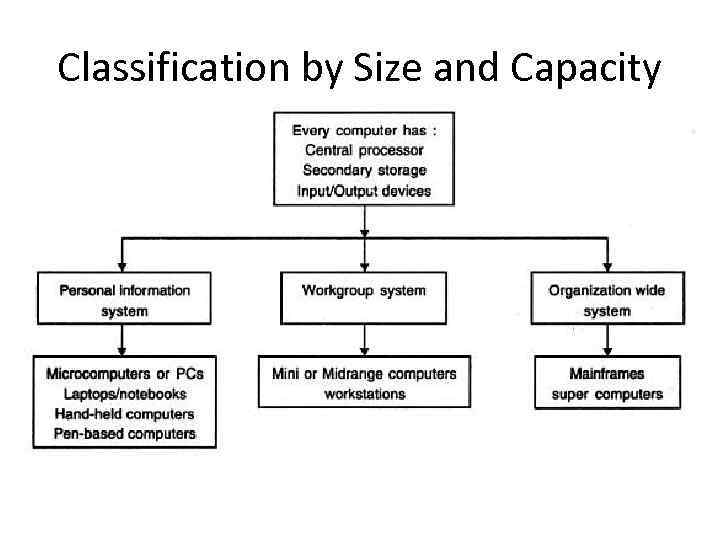

Classification by Size and Capacity

Supercomputers • The biggest in size and the most expensive in price. • They can process trillions of instructions per second. • They are used by governments and various industries.

Mainframes • Can process millions of instructions per second and can access billions of data records. • Commonly used in big hospitals, airline reservation companies and other large companies.

Minicomputers • These computers are mostly used by small businesses, colleges, etc.

Personal computers • This is the type of computer most preferred by home users. • These computers are low in cost and small in size. • Today this is thought to be the most popular type of computer.

Notebook computers • Thanks to its small size and low weight, a notebook is easy to carry anywhere. • Its design approach is the same as that of a personal computer. • It can store the same amount of data and has the same amount of memory as a personal computer.

Hardware and software • Hardware refers to the machinery in a computer system (such as the monitor, keyboard, and Central Processing Unit - CPU) and software refers to a collection of instructions, called a program (or project), that directs the hardware. Programs are written to solve problems or perform tasks on a computer. • Programmers translate the solutions or tasks into a language the computer can understand. • As we write programs, we must keep in mind that the computer will only do what we instruct it to do. Because of this, we must be very careful and thorough with our instructions.

Compiler • A compiler is a computer program (or set of programs) that transforms source code written in a programming language (the source language) into another computer language (the target language, often having a binary form known as object code). The most common reason for wanting to transform source code is to create an executable program. • The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a lower level language (e.g., assembly language or machine code).
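
Python's built-in compile() offers a small, hedged analogy: it translates source text into a code object containing bytecode for the Python virtual machine, without running it. Bytecode is an intermediate form rather than native machine code, so this illustrates the translation step only, not a full native compiler. The variable names are hypothetical.

```python
import dis

source = "total = price * quantity"

# Translate the source text into a code object (the "target language" here
# is Python bytecode). Nothing is executed yet.
code_object = compile(source, "<example>", "exec")

# Show the lower-level instructions that were produced (output varies by Python version).
dis.dis(code_object)

# Run the translated code with some input values.
namespace = {"price": 2.5, "quantity": 4}
exec(code_object, namespace)
print(namespace["total"])  # 10.0
```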

Interpreter • In computer science, an interpreter is a computer program that executes (i.e., performs) instructions written in a programming language. An interpreter generally uses one of the following strategies for program execution: 1) execute the source code directly; 2) translate the source code into some efficient intermediate representation and immediately execute it. Early versions of the Lisp programming language and Dartmouth BASIC would be examples of the first type. Perl, Python, MATLAB and Ruby are examples of the second. Some systems, such as Smalltalk, contemporary versions of BASIC, Java and others, may also combine some strategies. • While interpretation and compilation are the two main means by which programming languages are implemented, they are not mutually exclusive, as most interpreting systems also perform some translation work, just like compilers.
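
To make the two strategies concrete, here is a toy interpreter in Python for a hypothetical three-command language (SET, ADD, PRINT) invented for this example. interpret_directly follows strategy 1, parsing and executing the source one line at a time; interpret_via_ir follows strategy 2, first translating the whole program into a list of tuples (a simple intermediate representation) and then executing that list.

```python
def parse_line(line: str) -> tuple[str, str, int]:
    """Split one source line such as 'ADD x 3' into (command, variable, number)."""
    command, name, *rest = line.split()
    if command not in ("SET", "ADD", "PRINT"):
        raise SyntaxError(f"Unknown command: {command}")
    number = int(rest[0]) if rest else 0  # PRINT takes no number.
    return command, name, number


def step(instruction: tuple[str, str, int], variables: dict[str, int], output: list[str]) -> None:
    """Carry out one already-parsed instruction."""
    command, name, number = instruction
    if command == "SET":
        variables[name] = number
    elif command == "ADD":
        variables[name] += number
    else:  # PRINT
        output.append(str(variables[name]))


def interpret_directly(source: str) -> list[str]:
    """Strategy 1: take each source line in turn, parse it and execute it at once."""
    variables: dict[str, int] = {}
    output: list[str] = []
    for line in source.strip().splitlines():
        step(parse_line(line), variables, output)
    return output


def interpret_via_ir(source: str) -> list[str]:
    """Strategy 2: translate the whole program into tuples first, then execute them."""
    program = [parse_line(line) for line in source.strip().splitlines()]
    variables: dict[str, int] = {}
    output: list[str] = []
    for instruction in program:
        step(instruction, variables, output)
    return output


source = """
SET x 5
ADD x 3
PRINT x
"""
print(interpret_directly(source))  # ['8']
print(interpret_via_ir(source))    # ['8']
```

One practical consequence: with strategy 2, a misspelled command anywhere in the program is reported before any line runs, whereas strategy 1 executes the earlier lines first.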

PDC: analysis and design Many programmers plan their programs using a sequence of steps, referred to as the program development cycle (PDC). 1. Analyze: Define the problem. Be sure you understand what the program should do, that is, what the output should be. Have a clear idea of what data (or input) are given and the relationship between the input and the desired output. 2. Design: Plan the solution to the problem. Find a logical sequence of precise steps that solve the problem. Such a sequence of steps is called an algorithm. Every detail, including obvious steps, should appear in the algorithm. In the next section, we discuss three popular methods used to develop the logic plan: flowcharts, pseudocode, and top-down charts. These tools help the programmer break a problem into a sequence of small tasks the computer can perform to solve the problem.
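
A worked sketch of the analysis and design steps, using a hypothetical financial task that is not part of the original text: computing the future value of a deposit with interest compounded once a year. Analysis fixes the inputs (principal, annual rate, number of years) and the output (final balance); design turns their relationship into precise steps, kept here as comments. Python is used for illustration only.

```python
def future_value(principal: float, annual_rate: float, years: int) -> float:
    """Balance after `years` years with interest compounded once a year."""
    # Inputs (from the analysis step): principal, annual_rate (0.05 means 5%), years.
    balance = principal                   # Step 1: start from the deposit.
    for _ in range(years):                # Step 2: repeat once per year...
        balance += balance * annual_rate  # ...adding that year's interest.
    return balance                        # Step 3: the output.


print(round(future_value(1000.0, 0.05, 2), 2))  # 1102.5
```

The loop mirrors the algorithm step by step; the same result could also be obtained from the closed-form expression principal × (1 + annual_rate) ** years.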

PDC: interface and coding 3. Choose the interface: Select the objects (text boxes, command buttons, etc.). Determine how the input will be obtained and how the output will be displayed. Then create objects to receive the input and display the output. Also, create appropriate command buttons to allow the user to control the program. 4. Code: Translate the algorithm into a programming language. Coding is the technical word for writing the program. During this stage, the program is written in Visual Basic and entered into the computer. The programmer uses the algorithm devised in Step 2 along with knowledge of Visual Basic.
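
In a Visual Basic form, a text box holds its contents as text, and a command button's Click procedure converts that text, performs the calculation and displays the result. The plain-Python sketch below imitates that flow without any GUI: the text-box contents are passed in as strings, and the function standing in for the button click returns the text that would appear in an output box. The simple-interest calculation, the function names and the error message are hypothetical choices made for illustration.

```python
def read_number(text: str) -> float:
    """Convert the contents of a (simulated) text box to a number."""
    return float(text.strip())  # raises ValueError for non-numeric input


def format_output(interest: float) -> str:
    """Build the text to display in the (simulated) output box."""
    return f"Interest: {interest:,.2f}"


def on_compute_click(principal_text: str, rate_text: str, years_text: str) -> str:
    """Stands in for a command button's Click procedure.

    Hypothetical calculation: simple interest = principal * (rate / 100) * years.
    """
    try:
        principal = read_number(principal_text)
        rate = read_number(rate_text)
        years = read_number(years_text)
    except ValueError:
        return "Please enter a number in every box."
    return format_output(principal * rate / 100 * years)


print(on_compute_click("1000", "5", "2"))     # Interest: 100.00
print(on_compute_click("12500", " 4 ", "3"))  # Interest: 1,500.00
print(on_compute_click("abc", "5", "2"))      # Please enter a number in every box.
```

Keeping input conversion, calculation and output formatting in separate pieces mirrors the interface decisions of Step 3: if the form later gains a different kind of input object, only the conversion code needs to change.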

PDC: test, debug, documentation 5. Test and debug: Locate and remove any errors in the program. Testing is the process of finding errors in a program, and debugging is the process of correcting errors that are found. (An error in a program is called a bug.) As the program is typed, Visual Basic points out certain types of program errors. Other types of errors will be detected by Visual Basic when the program is executed; however, many errors due to typing mistakes, flaws in the algorithm, or incorrect usage of the Visual Basic language rules can be uncovered and corrected only by careful detective work. An example of such an error would be using addition when multiplication was the proper operation. 6. Complete the documentation: Organize all the material that describes the program. Documentation is intended to allow another person, or the programmer at a later date, to understand the program. Internal documentation consists of statements in the program that are not executed but point out the purposes of various parts of the program. Documentation might also consist of a detailed description of what the program does and how to use the program (for instance, what type of input is expected). For commercial programs, documentation includes an instruction manual.
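
The addition-versus-multiplication mistake is the kind of error that Visual Basic cannot point out, because the faulty statement is perfectly legal. A test with an answer worked out by hand exposes it. The sketch below is in Python for illustration; the `total_cost` functions and the test values are hypothetical.

```python
def total_cost_buggy(quantity: int, unit_price: float) -> float:
    # Bug: uses addition where multiplication was the proper operation.
    return quantity + unit_price


def total_cost(quantity: int, unit_price: float) -> float:
    # Corrected after debugging: cost = quantity * unit price.
    return quantity * unit_price


def passes_test(func) -> bool:
    """Test case worked out by hand: 3 items at 2.50 each cost 7.50."""
    return abs(func(3, 2.50) - 7.50) < 1e-9


print(passes_test(total_cost_buggy))  # False: 3 + 2.50 = 5.50
print(passes_test(total_cost))        # True
```

Test data should be chosen with care: with the inputs 2 and 2.0, both versions return 4.0, so that test would let the bug slip through.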

Programming Languages

BASIC • BASIC is an easy-to-learn language developed by John Kemeny and Thomas Kurtz in 1964. • Beginner’s All-purpose Symbolic Instruction Code used to be the most popular microcomputer language and is considered the easiest programming language to learn. • Advantage: ease of use. • Disadvantages: (1) processing speed is slow; (2) there is no single standard version of BASIC, so a program written for one computer may not run on another without changes.

COBOL • COBOL (Common Business-Oriented Language) is the language of business; it was formally adopted in 1960. • It has long been the most frequently used business programming language for large computers.

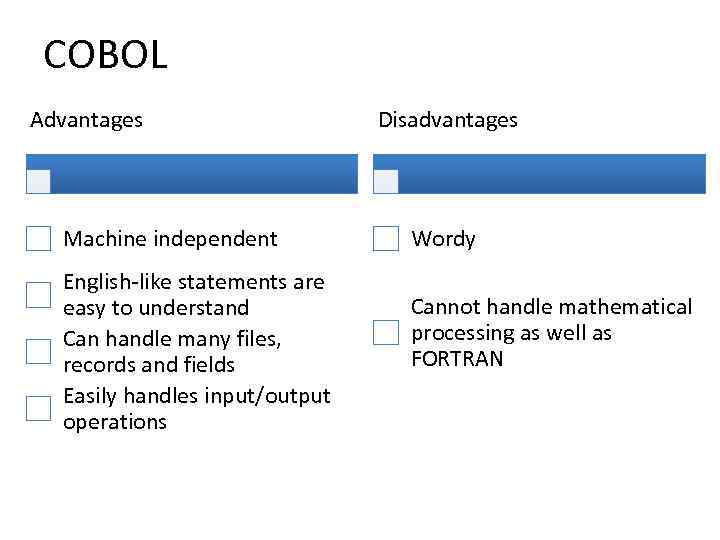

COBOL • Advantages: machine independent; English-like statements are easy to understand; can handle many files, records and fields; easily handles input/output operations. • Disadvantages: wordy; cannot handle mathematical processing as well as FORTRAN.

FORTRAN • The language of mathematics and the first widely used high-level language. • Developed by IBM beginning in 1954; the first compiler was released in 1957. • Originally designed to express mathematical formulas, it is still a widely used language for mathematical, scientific, and engineering problems.

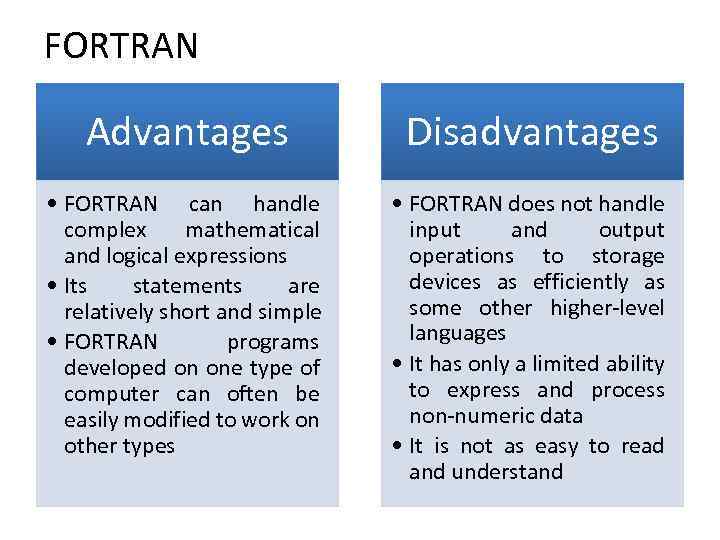

FORTRAN • Advantages: FORTRAN can handle complex mathematical and logical expressions; its statements are relatively short and simple; FORTRAN programs developed on one type of computer can often be easily modified to work on other types. • Disadvantages: FORTRAN does not handle input and output operations to storage devices as efficiently as some other higher-level languages; it has only a limited ability to express and process non-numeric data; it is not as easy to read and understand as some other languages.

Pascal • Pascal is a simple language named after Blaise Pascal. • It is an alternative to BASIC as a language for teaching and is relatively easy to learn. • Unlike BASIC, Pascal is built around structured programming.

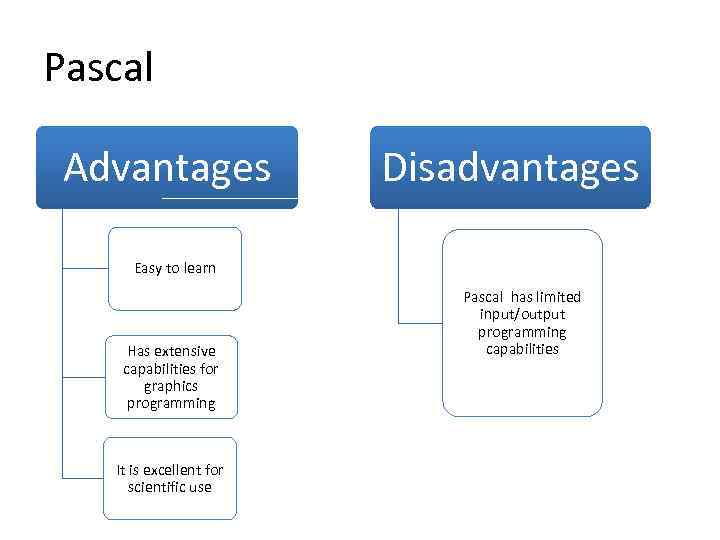

Pascal • Advantages: easy to learn; has extensive capabilities for graphics programming; excellent for scientific use. • Disadvantages: Pascal has limited input/output programming capabilities.

What are computers going to be like in the future? • Computers of the future might surpass human intellect. • Computer networking is expected to bring about the "death of distance". • Computers have evolved from simple electronic calculators. • What does the future hold for computer technology?

What does the future hold for computer technology? • Computer researchers envision the advent of fully developed artificial intelligence. • Nanotechnology is a highly anticipated field, and researchers see it as one of the most promising areas to merge with computing technology in the future. • Voice commands in natural language are expected to be the next step in computing.

Pre-history of BASIC • Before the mid-1960s, computers were extremely expensive and used only for special-purpose tasks. A simple batch processing arrangement ran only a single "job" at a time, one after another. But during the 1960s faster and more affordable computers became available, and as prices decreased, newer computer systems supported time-sharing, a system that allows multiple users or processes to share the CPU and memory. In such a system the operating system alternates between running processes, giving each one running time on the CPU before switching to another. The machines had become fast enough that most users could feel they had the machine all to themselves.
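
The alternation between processes can be sketched as a round-robin scheduler, one common time-sharing policy. The slide does not say which policy early systems used, so round-robin is an assumption here. In this Python sketch, each job needs a given number of time units, and the hypothetical `quantum` parameter is how many units a job may run before the CPU switches to the next job in line.

```python
from collections import deque


def round_robin(jobs: dict[str, int], quantum: int) -> list[tuple[str, int]]:
    """Return the order in which jobs get the CPU, as (job, units run) slices.

    jobs maps a job name to the number of time units it still needs.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    queue = deque((name, need) for name, need in jobs.items() if need > 0)
    schedule: list[tuple[str, int]] = []
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)  # run one quantum, or less if the job finishes
        schedule.append((name, run))
        remaining -= run
        if remaining > 0:
            queue.append((name, remaining))  # unfinished: back of the line
    return schedule


print(round_robin({"A": 3, "B": 1, "C": 2}, quantum=1))
# [('A', 1), ('B', 1), ('C', 1), ('A', 1), ('C', 1), ('A', 1)]
```

When each slice is short compared with human reaction time, every user at a terminal gets the CPU back quickly, which is why the machine could feel like a private one.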

Pre-history of BASIC (2) • By this point, the problem of interacting with the computer had become a concern. In the batch processing model, users never interacted with the machine directly; instead, they submitted their jobs to the computer operators. Under the time-sharing model, users were given individual computer terminals and interacted with the machine directly. Simplifying this experience, from command-line interpreters to programming languages, was an area of intense research during the 1960s and 1970s.

Origin of BASIC • The original BASIC language (Beginner's All-purpose Symbolic Instruction Code) was designed in 1964 by John Kemeny and Thomas Kurtz and implemented by a team of Dartmouth students under their direction. BASIC was designed to allow students to write programs for the Dartmouth Time-Sharing System. It was intended specifically for the new class of users that time-sharing systems made possible: less technical users who did not have the mathematical background of more traditional users and were not interested in acquiring it. Being able to use a computer to support teaching and research was quite novel at the time.

Origin of BASIC (2) • The language was based on FORTRAN II, with some influences from ALGOL 60. Initially, BASIC concentrated on supporting straightforward mathematical work, with matrix arithmetic support from its initial implementation as a batch language and full string functionality being added by 1965. • The designers of the language decided to make the compiler available free of charge so that the language would become widespread. They also made it available to high schools in the Hanover area and put a considerable amount of effort into promoting the language. In the following years, as other dialects of BASIC appeared, Kemeny and Kurtz's original BASIC dialect became known as Dartmouth BASIC.

Growth and development • The introduction of the first microcomputers in the mid-1970s was the start of explosive growth for BASIC. It had the advantage that it was fairly well known to the young designers and computer hobbyists who took an interest in microcomputers. • In 1975, Micro Instrumentation and Telemetry Systems (MITS) released Altair BASIC, developed by Bill Gates and Paul Allen as the company Micro-Soft, which grew into today's corporate giant, Microsoft. • When the Apple II, PET 2001 and TRS-80 were all released in 1977, all three had BASIC as their primary programming language and operating environment. • When IBM was designing the IBM PC, it followed the paradigm of existing home computers in wanting a built-in BASIC. IBM sourced this from Microsoft (IBM Cassette BASIC), and Microsoft also produced several other versions of BASIC for MS-DOS/PC-DOS. In addition, Microsoft produced the Microsoft BASIC Compiler, aimed at professional programmers.

Growth and development (2) • BASIC's fortunes reversed once again with Microsoft's introduction of Visual Basic ("VB") in 1991. The only significant similarity to older BASIC dialects was familiar syntax. Syntax itself no longer "fully defined" the language, since much development was done using "drag and drop" methods without exposing to the developer all the code for commonly used objects such as buttons, scrollbars and many other user-friendly objects. • Visual Basic 6.0 was released in 1998. • Microsoft also produced the dialects VBA in 1993, VBScript in 1996 and Visual Basic .NET in 2001.
