- Number of slides: 22

Process Synchronization

- Ch. 4.4 – Cooperating Processes
- Ch. 7 – Concurrency

Cooperating Processes

- An independent process cannot affect or be affected by the execution of another process.
- A cooperating process can affect or be affected by the execution of another process.

Advantages of process cooperation

- Information sharing
  - Allows concurrent access to data sources
- Computation speed-up
  - Sub-tasks can be executed in parallel
- Modularity
  - System functions can be divided into separate processes or threads
- Convenience

Context Switches Can Happen at Any Time

- A process switch (full context switch) can happen any time there is a mode switch into the kernel.
- This could be because of a:
  - System call (semi-predictable)
  - Timer (round robin, etc.)
  - I/O interrupt (unblocking some other process)
  - Other interrupt, etc.
- The programmer generally cannot predict at what point in a program this might happen.

Preemption is Unpredictable

- This means that the program’s work can be interrupted at any time (i.e., just after the completion of any instruction):
  - Some other program gets to run for a while.
  - The interrupted program is eventually restarted exactly where it left off, after the other program (process) has executed instructions that we have no control over.
- This can lead to trouble if processes are not independent.

Problems with Concurrent Execution

- Concurrent processes (or threads) often need to share data (maintained either in shared memory or in files) and resources.
- If access to shared data is not controlled, the execution of the processes on these data can interleave.
- The results will then depend on the order in which the data were modified.
  - That is, the results are non-deterministic.

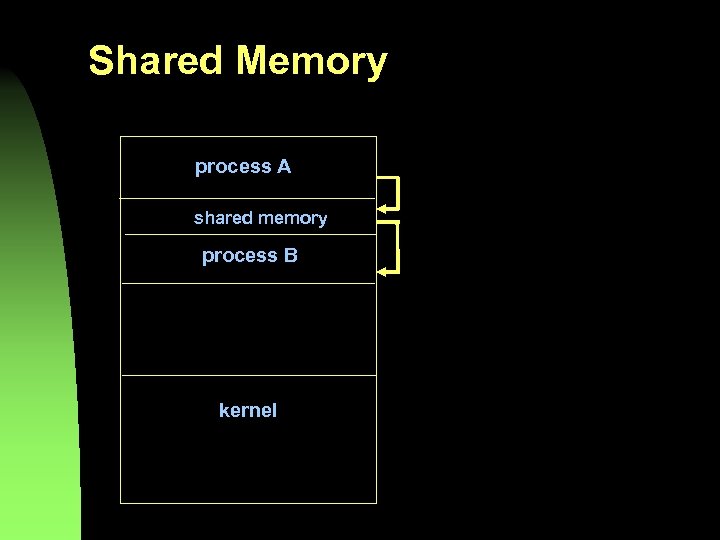

Shared Memory

Figure labels: process A, shared memory, process B, kernel.

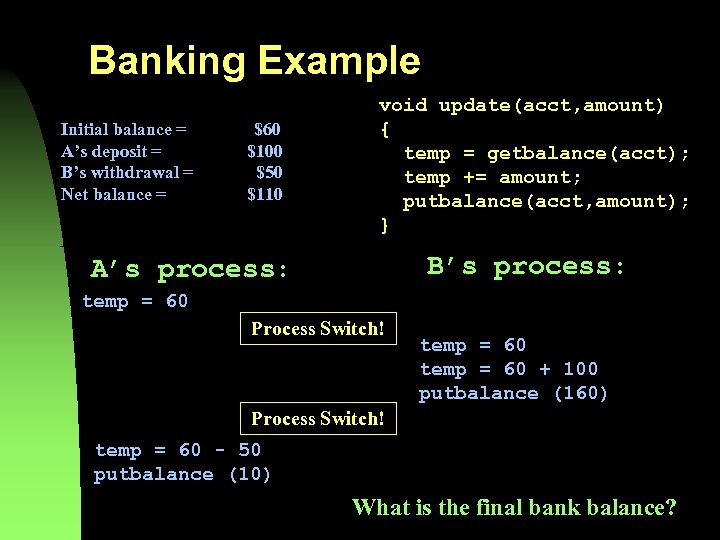

An Example: Bank Account

A joint account: both account holders access the money at the same time; one deposits, the other withdraws. The bank’s computer executes the routine below simultaneously as two processes running the same transaction-processing program.

    void update(acct, amount)
    {
        temp = getbalance(acct);
        temp += amount;
        putbalance(acct, temp);
    }

Banking Example

- Initial balance = $60
- A’s deposit = $100
- B’s withdrawal = $50
- Net balance = $110

Both processes run the same routine:

    void update(acct, amount)
    {
        temp = getbalance(acct);
        temp += amount;
        putbalance(acct, temp);
    }

Interleaved execution:

    B's process:              A's process:
    temp = 60
          Process Switch!
                              temp = 60 + 100
                              putbalance(160)
          Process Switch!
    temp = 60 - 50
    putbalance(10)

What is the final bank balance?
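
The interleaving on this slide can be replayed step by step in ordinary sequential C, which makes the outcome deterministic. This is only a sketch: the single `balance` variable and the simplified `getbalance`/`putbalance` helpers are stand-ins for the slide's routines, and `replay_lost_update` is a hypothetical name.

```c
#include <assert.h>

/* Assumed stand-in for the account record: one shared variable. */
static int balance = 60;

static int  getbalance(void)      { return balance; }
static void putbalance(int value) { balance = value; }

/* Replays the slide's interleaving in a single thread.
   Each process keeps its own private copy of temp. */
int replay_lost_update(void)
{
    balance = 60;                 /* initial balance              */

    int temp_b = getbalance();    /* B: temp = 60                 */
                                  /* process switch               */
    int temp_a = getbalance();    /* A: temp = 60                 */
    temp_a += 100;                /* A: temp = 60 + 100           */
    putbalance(temp_a);           /* A: putbalance(160)           */
    assert(balance == 160);       /* the deposit is visible here  */
                                  /* process switch               */
    temp_b += -50;                /* B: temp = 60 - 50 (stale)    */
    putbalance(temp_b);           /* B: putbalance(10)            */

    return getbalance();
}
```

Under these assumptions `replay_lost_update()` returns 10 rather than the expected 110: B writes back a value computed from a stale read, so A's deposit is lost.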

Race Conditions

- A situation such as this, where processes “race” against each other, causing possible errors, is called a race condition.
- Two or more processes are reading or writing shared data, and the final result depends on the order in which the processes run.
- Can happen at the application level and at the OS level.

Printer queue example (OS level)

- Printer queue: often implemented as a circular queue.
  - out = position of next item to be printed
  - in = position of next empty slot
- lw or lpr
  - Adds a file to the print queue
- What happens if two processes request queuing of a print job at the same time? Each must access the variable “in”.

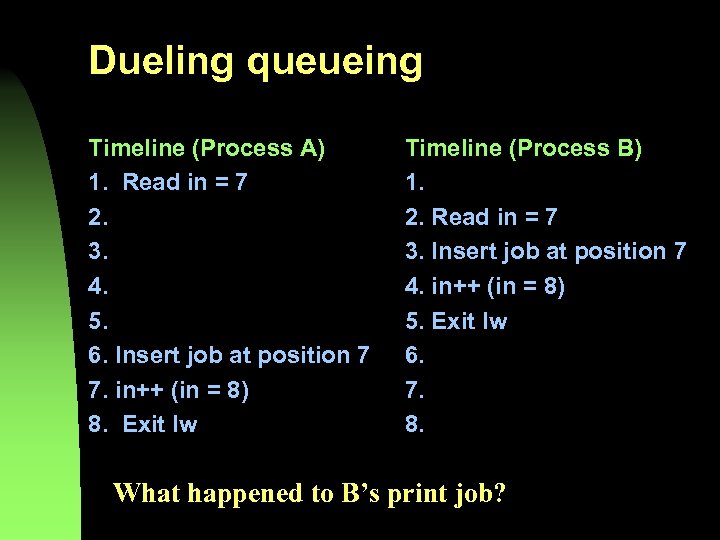

Dueling queueing

| Step | Timeline (Process A) | Timeline (Process B) |
|------|----------------------|----------------------|
| 1 | Read in = 7 | |
| 2 | | Read in = 7 |
| 3 | | Insert job at position 7 |
| 4 | | in++ (in = 8) |
| 5 | | Exit lw |
| 6 | Insert job at position 7 | |
| 7 | in++ (in = 8) | |
| 8 | Exit lw | |

What happened to B’s print job?
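
The same timeline can be replayed sequentially to see what is left in the queue. This is a sketch under assumptions: the 16-slot array, the job identifiers `JOB_A` and `JOB_B`, and the function name are all hypothetical; only the variable `in` and the step order come from the slide.

```c
#include <assert.h>

#define SLOTS 16                    /* assumed queue capacity         */

enum { EMPTY = 0, JOB_A = 1, JOB_B = 2 };

static int queue[SLOTS];            /* circular print queue           */
static int in = 7;                  /* shared: next empty slot        */

/* Replays the eight steps of the timeline in one thread and
   returns whichever job ends up in slot 7. */
int replay_dueling_enqueue(void)
{
    in = 7;

    int a_slot = in;                /* A step 1: read in = 7          */
    int b_slot = in;                /* B step 2: read in = 7          */
    queue[b_slot] = JOB_B;          /* B step 3: insert at slot 7     */
    in = (b_slot + 1) % SLOTS;      /* B step 4: in = 8               */
    queue[a_slot] = JOB_A;          /* A step 6: overwrites slot 7    */
    in = (a_slot + 1) % SLOTS;      /* A step 7: in = 8 again         */

    assert(a_slot == b_slot);       /* both processes used one slot   */
    return queue[7];
}
```

In this replay slot 7 ends up holding A's job and `in` is 8, so B's job has been overwritten and is never printed, even though both commands exited normally.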

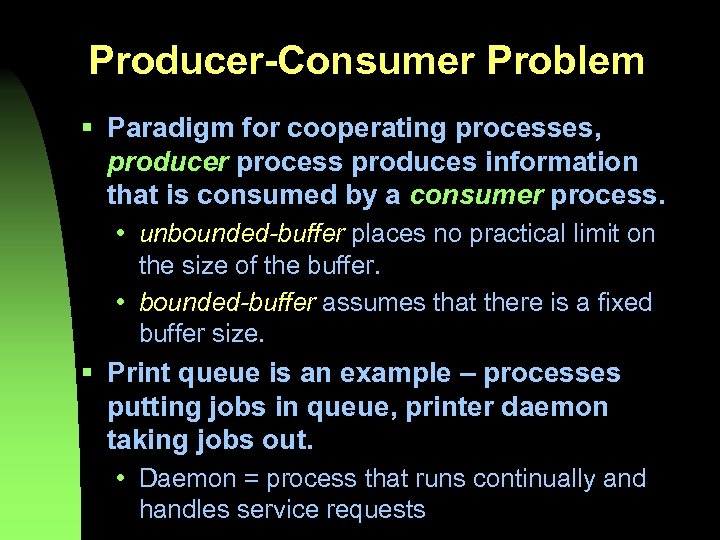

Producer-Consumer Problem

- A paradigm for cooperating processes: a producer process produces information that is consumed by a consumer process.
  - An unbounded buffer places no practical limit on the size of the buffer.
  - A bounded buffer assumes a fixed buffer size.
- The print queue is an example: processes put jobs in the queue, and the printer daemon takes jobs out.
  - Daemon = process that runs continually and handles service requests

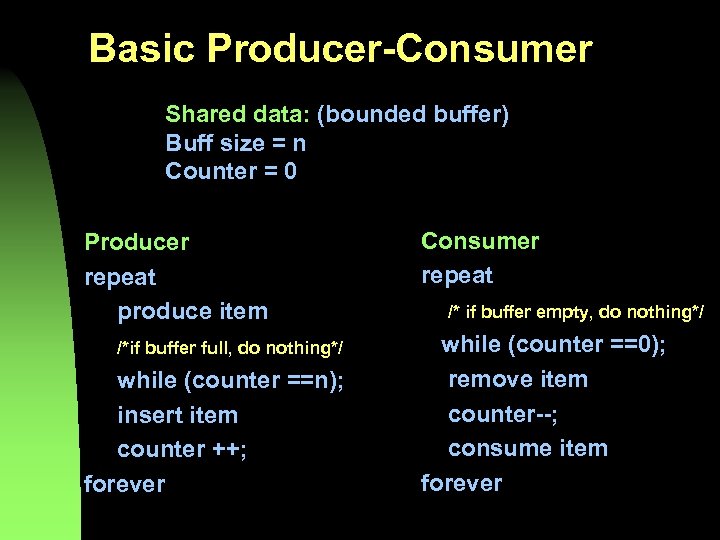

Basic Producer-Consumer

Shared data (bounded buffer): buffer size = n, counter = 0

Producer:

    repeat
        produce item
        /* if buffer full, do nothing */
        while (counter == n);
        insert item
        counter++;
    forever

Consumer:

    repeat
        /* if buffer empty, do nothing */
        while (counter == 0);
        remove item
        counter--;
        consume item
    forever
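
The shared state behind this algorithm might be sketched in C as a circular array plus the `counter` variable. The names `try_insert` and `try_remove`, the `int` item type, and the buffer size of 4 are assumptions for illustration. Each function returns `false` at the point where the slide's loop would busy-wait, so a real producer or consumer would keep calling it in a loop.

```c
#include <assert.h>
#include <stdbool.h>

#define N 4                     /* buffer size n (arbitrary value)   */

static int buffer[N];
static int in = 0;              /* next empty slot                   */
static int out = 0;             /* next item to remove               */
static int counter = 0;         /* number of items in the buffer     */

/* Producer side: false means "buffer full, try again". */
bool try_insert(int item)
{
    assert(counter >= 0 && counter <= N);
    if (counter == N)
        return false;
    buffer[in] = item;
    in = (in + 1) % N;
    counter++;                  /* not atomic: unsafe if shared      */
    return true;
}

/* Consumer side: false means "buffer empty, try again". */
bool try_remove(int *item)
{
    assert(counter >= 0 && counter <= N);
    if (counter == 0)
        return false;
    *item = buffer[out];
    out = (out + 1) % N;
    counter--;                  /* not atomic: unsafe if shared      */
    return true;
}
```

Called from a single thread this behaves as a plain FIFO queue. It is not safe to call from concurrent producers and consumers as written, because `counter` is updated without any protection.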

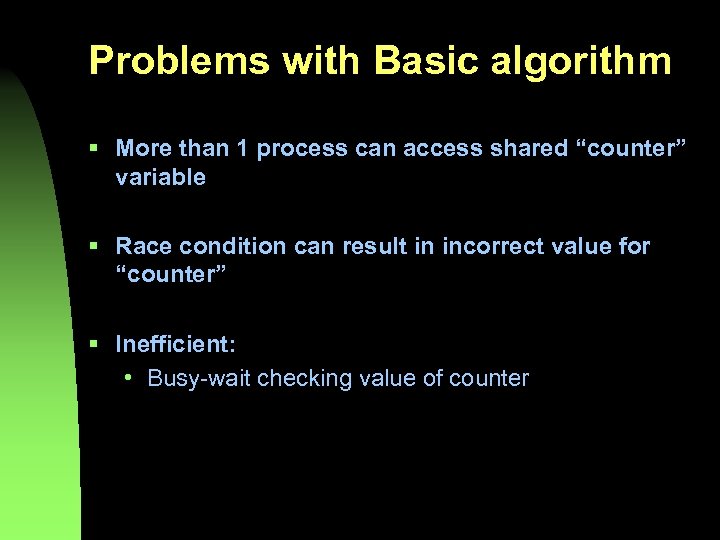

Problems with Basic algorithm

- More than one process can access the shared “counter” variable.
- A race condition can result in an incorrect value for “counter”.
- Inefficient:
  - Busy-waits checking the value of counter
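
To see how “counter” can end up wrong, note that `counter++` and `counter--` are typically executed as separate load, modify, and store steps. The sketch below replays one bad interleaving of those steps in a single thread. The starting value 5 and the names `reg_p`, `reg_c`, and `replay_counter_race` are assumptions chosen for illustration.

```c
#include <assert.h>

static int counter = 5;     /* shared; 5 is an arbitrary start value */

/* One insert and one remove should leave counter unchanged.
   This interleaving of their load/modify/store steps does not. */
int replay_counter_race(void)
{
    counter = 5;

    int reg_p = counter;    /* producer: load counter   (5) */
    reg_p = reg_p + 1;      /* producer: add 1          (6) */
    int reg_c = counter;    /* consumer: load counter   (5) */
    reg_c = reg_c - 1;      /* consumer: subtract 1     (4) */
    counter = reg_p;        /* producer: store          (6) */
    counter = reg_c;        /* consumer: store          (4) */

    assert(counter != 5);   /* the correct result would be 5 */
    return counter;
}
```

With this ordering the function returns 4. Swapping the last two stores would give 6, so the final value depends purely on which process stores last.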

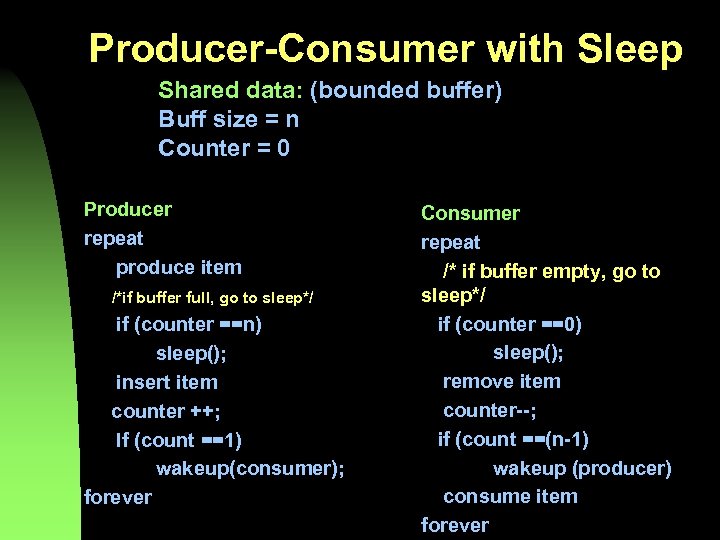

Producer-Consumer with Sleep

Shared data (bounded buffer): buffer size = n, counter = 0

Producer:

    repeat
        produce item
        /* if buffer full, go to sleep */
        if (counter == n) sleep();
        insert item
        counter++;
        if (counter == 1) wakeup(consumer);
    forever

Consumer:

    repeat
        /* if buffer empty, go to sleep */
        if (counter == 0) sleep();
        remove item
        counter--;
        if (counter == (n-1)) wakeup(producer);
        consume item
    forever

Problems

- If counter has a value in 1..n-1, both processes are running, so both can access the shared “counter” variable.
- A race condition can result in an incorrect value for “counter”.
- Could lead to deadlock, with both processes asleep.

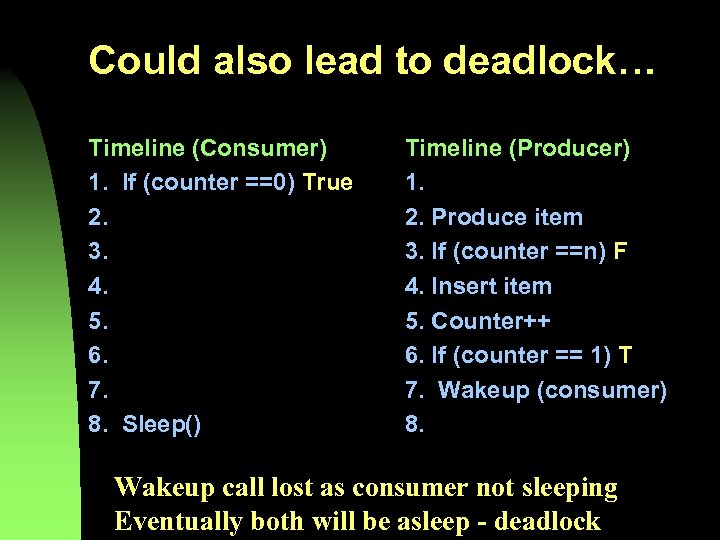

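Could also lead to deadlock…

| Step | Timeline (Consumer) | Timeline (Producer) |
|------|---------------------|---------------------|
| 1 | if (counter == 0): true | |
| 2 | | produce item |
| 3 | | if (counter == n): false |
| 4 | | insert item |
| 5 | | counter++ |
| 6 | | if (counter == 1): true |
| 7 | | wakeup(consumer) |
| 8 | sleep() | Wakeup call lost, as the consumer is not yet sleeping |

The consumer now sleeps with nothing left to wake it. The producer keeps inserting until the buffer is full and then sleeps too, so eventually both are asleep: deadlock.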
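
The lost wakeup can be modelled with two boolean "asleep" flags and replayed deterministically. Everything here is a simplified assumption for illustration: `wakeup` just clears a flag (so it has no effect on a process that is still awake), the buffer size is 3, and no real sleeping or scheduling takes place.

```c
#include <assert.h>
#include <stdbool.h>

#define N 3                          /* buffer size n (arbitrary)      */

static int  counter;                 /* items in the buffer            */
static bool consumer_asleep;
static bool producer_asleep;

/* Hypothetical wakeup(): clears the sleep flag. If the target is not
   asleep, nothing changes, i.e. the wakeup is lost. */
static void wakeup(bool *asleep) { *asleep = false; }

/* Returns true if both processes end up asleep. */
bool replay_lost_wakeup(void)
{
    counter = 0;
    consumer_asleep = producer_asleep = false;

    bool saw_empty = (counter == 0); /* consumer step 1: test is true  */
                                     /* consumer preempted here        */
    counter++;                       /* producer steps 2-5: insert     */
    if (counter == 1) {              /* producer step 6                */
        assert(!consumer_asleep);    /* consumer is still awake...     */
        wakeup(&consumer_asleep);    /* step 7: ...so this is lost     */
    }
    if (saw_empty)
        consumer_asleep = true;      /* consumer step 8: sleep()       */

    while (counter < N) {            /* producer keeps inserting       */
        counter++;
        if (counter == 1)            /* never true again: counter >= 2 */
            wakeup(&consumer_asleep);
    }
    producer_asleep = true;          /* buffer full: producer sleeps   */

    return consumer_asleep && producer_asleep;
}
```

In this model the function returns `true`: the consumer acts on a test result that was already stale when it went to sleep, and the producer only signals on the 0-to-1 transition, which never happens again.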

Critical section

- The part of the program where shared resources are accessed.
- When a process executes code that manipulates shared data (or a shared resource), we say that the process is in a critical section (CS) for that resource.
- Entry and exit sections (small pieces of code) guard the critical section.

The Critical Section Problem

- Critical sections can be thought of as sequences of instructions that are ‘tightly bound’, so no other process should interfere via interleaving or parallel execution.
- The execution of critical sections must be mutually exclusive: at any time, only one process should be allowed to execute in a CS (even with multiple CPUs).
- Therefore we need a system in which each process must request permission to enter its CS, and we need a means to “administer” this.

The Critical Section Problem

- The section of code implementing this request is called the entry section.
- The critical section (CS) is followed by an exit section, which opens the possibility of other processes entering their critical sections.
- The remaining code is the remainder section (RS).
- The critical section problem is to design the processes so that their results do not depend on the order in which their execution is interleaved.
- We must also prevent deadlock and starvation.

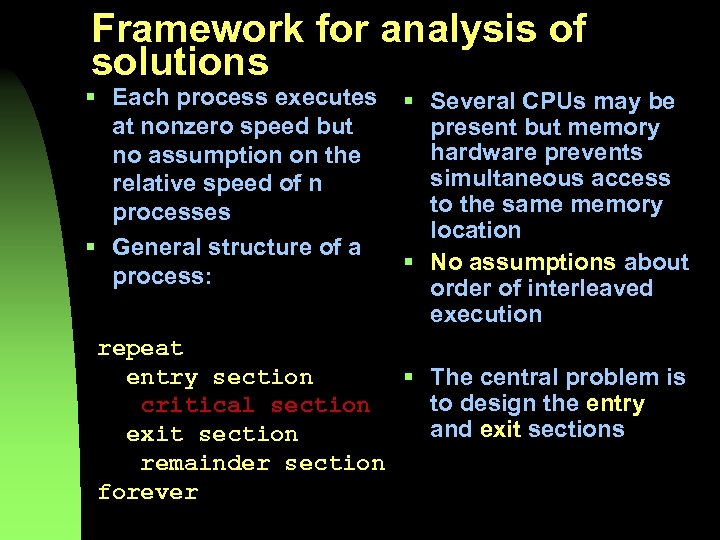

Framework for analysis of solutions

- Each process executes at nonzero speed, but no assumption is made about the relative speed of the n processes.
- Several CPUs may be present, but memory hardware prevents simultaneous access to the same memory location.
- No assumptions are made about the order of interleaved execution.
- The central problem is to design the entry and exit sections.

General structure of a process:

    repeat
        entry section
        critical section
        exit section
        remainder section
    forever
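
As one possible illustration of this structure, the bank-account routine from earlier can be wrapped in entry and exit sections. The sketch assumes a POSIX mutex as the mechanism, which is a choice made here and not something these slides prescribe. A mutex initialized this way synchronizes threads within one process, and the single `balance` variable is a simplified stand-in for the account record.

```c
#include <assert.h>
#include <pthread.h>

static int balance = 60;                 /* shared account balance   */
static pthread_mutex_t balance_lock = PTHREAD_MUTEX_INITIALIZER;

void update(int amount)
{
    int rc = pthread_mutex_lock(&balance_lock);   /* entry section   */
    assert(rc == 0);

    int temp = balance;                  /* critical section: read,  */
    temp += amount;                      /* modify,                  */
    balance = temp;                      /* and write back           */

    rc = pthread_mutex_unlock(&balance_lock);     /* exit section    */
    assert(rc == 0);

    /* remainder section: work that does not touch balance */
}
```

Because only one thread at a time can hold `balance_lock`, the read, modify, and write steps of two `update` calls can no longer interleave. A deposit of 100 and a withdrawal of 50 then leave 110 regardless of the order in which they run.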
