ef3b3763143ad20dfb6edec65e762d13.ppt

- Number of slides: 16

Probabilistic Contextual Skylines

D. Sacharidis (1), A. Arvanitis (1, 2), T. Sellis (1, 2)

(1) Institute for the Management of Information Systems, “Athena” R.C., Greece

(2) National Technical University of Athens, Greece

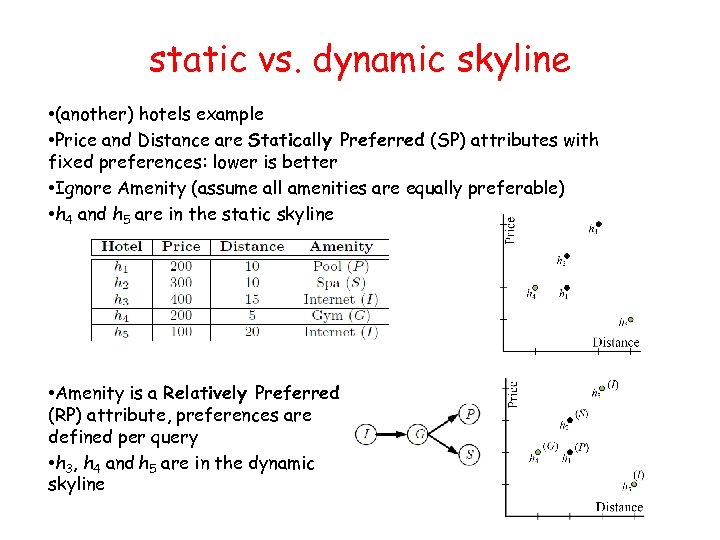

static vs. dynamic skyline

- (another) hotels example
- Price and Distance are Statically Preferred (SP) attributes with fixed preferences: lower is better
- ignore Amenity (assume all amenities are equally preferable)
- h4 and h5 are in the static skyline
- Amenity is a Relatively Preferred (RP) attribute; its preferences are defined per query
- h3, h4 and h5 are in the dynamic skyline
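The difference between the two skylines can be sketched in a few lines of Python. The hotel values and the amenity preferences below are invented for illustration (the slide's actual table is only in the figure); they are chosen so that the outcome matches the bullets. `dominates` and `skyline` are hypothetical helper names.

```python
# Hypothetical hotels: all values are invented for illustration only.
HOTELS = {
    "h1": {"price": 120, "dist": 3.0, "amenity": "gym"},
    "h2": {"price": 100, "dist": 2.5, "amenity": "gym"},
    "h3": {"price": 90, "dist": 2.0, "amenity": "pool"},
    "h4": {"price": 80, "dist": 1.5, "amenity": "gym"},
    "h5": {"price": 60, "dist": 2.5, "amenity": "spa"},
}
SP = ("price", "dist")  # statically preferred attributes: lower is better


def dominates(a, b, prefs=None):
    """True if hotel a dominates hotel b.

    prefs is a set of (better, worse) amenity pairs given with the query;
    None means the RP attribute is ignored (static skyline).
    """
    no_worse = all(a[k] <= b[k] for k in SP)
    strictly = any(a[k] < b[k] for k in SP)
    if prefs is not None:
        rp_better = (a["amenity"], b["amenity"]) in prefs
        no_worse = no_worse and (rp_better or a["amenity"] == b["amenity"])
        strictly = strictly or rp_better
    return no_worse and strictly


def skyline(hotels, prefs=None):
    """Names of the hotels that no other hotel dominates."""
    return sorted(
        name for name, h in hotels.items()
        if not any(dominates(o, h, prefs) for o in hotels.values())
    )


static = skyline(HOTELS)
# Assumed query preferences: pool is better than gym and than spa.
dynamic = skyline(HOTELS, prefs={("pool", "gym"), ("pool", "spa")})
```

With these made-up values, h3 enters the dynamic skyline because no cheaper and closer hotel has an amenity that is at least as good as a pool.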

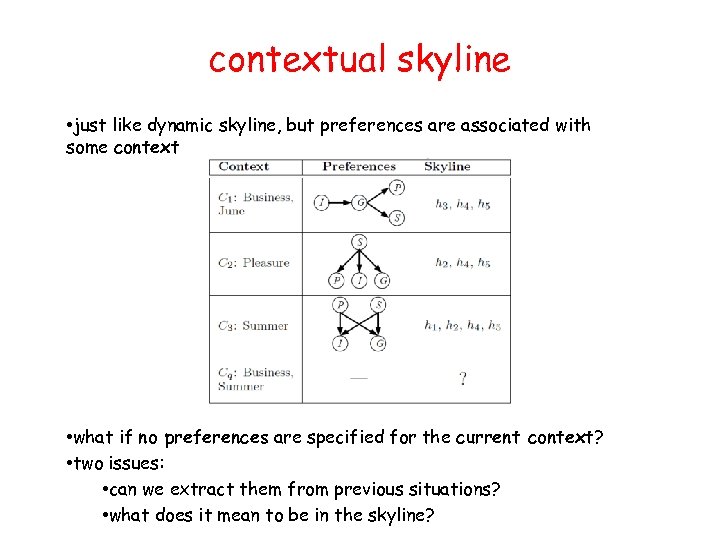

contextual skyline

- just like the dynamic skyline, but preferences are associated with some context
- what if no preferences are specified for the current context?
- two issues:
  - can we extract them from previous situations?
  - what does it mean to be in the skyline?

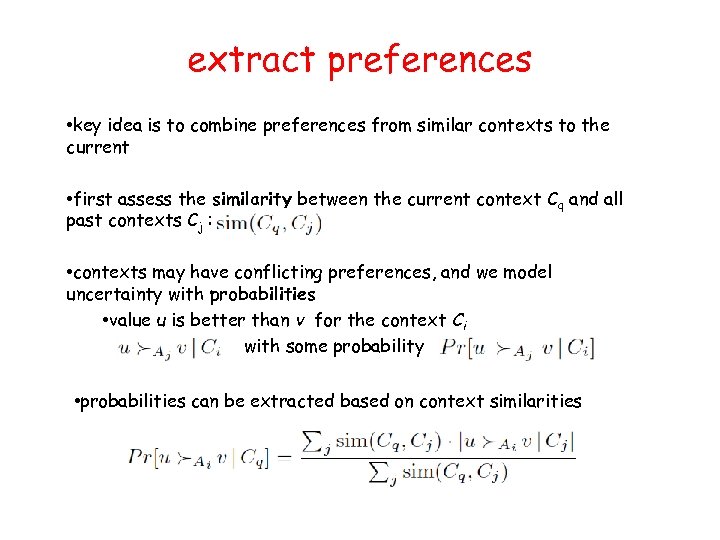

extract preferences

- key idea: combine the preferences of past contexts that are similar to the current one
- first, assess the similarity between the current context Cq and every past context Cj
- contexts may have conflicting preferences, so we model the uncertainty with probabilities
- value u is better than value v for the context Ci with some probability
- these probabilities can be extracted based on the context similarities
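One way to turn context similarities into preference probabilities is a similarity-weighted vote. The sketch below is an assumption, not the formula from the slide (which is only in the figure): `similarity` is taken to be the fraction of matching context attributes, and the context attributes and recorded preferences are hypothetical.

```python
def similarity(c1, c2):
    """Assumed measure: fraction of context attributes with equal values."""
    keys = c1.keys() | c2.keys()
    if not keys:
        return 0.0
    return sum(c1.get(k) == c2.get(k) for k in keys) / len(keys)


def preference_probability(u, v, current, history):
    """Similarity-weighted share of past contexts that prefer u over v.

    history is a list of (context, prefs) pairs, where prefs is a set of
    (better, worse) value pairs recorded for that context.
    Returns 0.0 when no past context is similar to the current one.
    """
    weighted = [(similarity(current, ctx), prefs) for ctx, prefs in history]
    total = sum(w for w, _ in weighted)
    if total == 0:
        return 0.0
    return sum(w for w, prefs in weighted if (u, v) in prefs) / total


# Hypothetical past contexts with conflicting amenity preferences.
HISTORY = [
    ({"season": "summer", "trip": "family"}, {("pool", "gym")}),
    ({"season": "summer", "trip": "business"}, {("gym", "pool")}),
    ({"season": "winter", "trip": "business"}, {("gym", "pool")}),
]
current = {"season": "summer", "trip": "family"}
p_pool = preference_probability("pool", "gym", current, HISTORY)
p_gym = preference_probability("gym", "pool", current, HISTORY)
```

Here the similarities are 1.0, 0.5 and 0.0, so pool beats gym with probability 2/3 and gym beats pool with probability 1/3.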

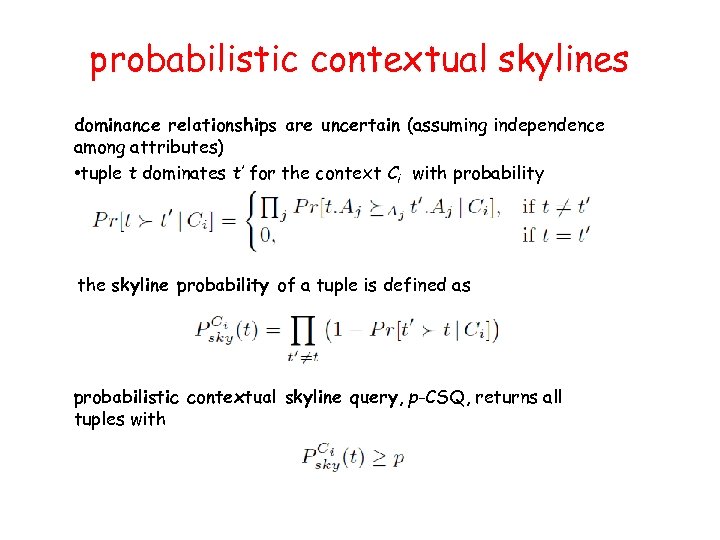

probabilistic contextual skylines

- dominance relationships are uncertain
- tuple t dominates t’ for the context Ci with some probability (computed assuming independence among attributes)
- the skyline probability of a tuple is the probability that no other tuple dominates it
- a probabilistic contextual skyline query, p-CSQ, returns all tuples whose skyline probability is not below a given threshold
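A minimal sketch of these definitions follows. The exact formulas are only in the slide figure, so the code makes two labeled assumptions: the dominance probability is the product of per-attribute preference probabilities (independence among attributes), and the skyline probability is the product of the non-dominance probabilities over all other tuples (independence among tuples). The data, the probabilities, and all function names are hypothetical.

```python
from math import prod


def dominance_probability(a, b, pref_prob):
    """Probability that tuple a dominates tuple b.

    a, b: (sp_values, rp_values); lower SP values are better.
    pref_prob[i][(u, v)]: probability that u beats v on RP attribute i.
    """
    (a_sp, a_rp), (b_sp, b_rp) = a, b
    if any(x > y for x, y in zip(a_sp, b_sp)):
        return 0.0  # a is worse on some SP attribute
    if a_rp == b_rp:
        # Same RP values: dominance is decided by the SP attributes alone.
        return 1.0 if a_sp != b_sp else 0.0
    # Assumption: RP attributes are independent, so multiply.
    return prod(
        1.0 if u == v else pref_prob[i].get((u, v), 0.0)
        for i, (u, v) in enumerate(zip(a_rp, b_rp))
    )


def skyline_probability(t, data, pref_prob):
    """Assumption: dominance events of different tuples are independent."""
    return prod(
        1.0 - dominance_probability(o, t, pref_prob)
        for o in data if o is not t
    )


def p_csq(data, pref_prob, threshold):
    """Tuples whose skyline probability is not below the threshold."""
    return [t for t in data
            if skyline_probability(t, data, pref_prob) >= threshold]


# Hypothetical data: ((price, distance), (amenity,)).
DATA = [
    ((60, 2.5), ("spa",)),
    ((80, 1.5), ("gym",)),
    ((90, 2.0), ("pool",)),
    ((100, 2.5), ("gym",)),
]
PREF = [{("gym", "pool"): 0.4, ("pool", "gym"): 0.6, ("spa", "gym"): 0.5}]
results = p_csq(DATA, PREF, 0.5)
```

In this example only the gym hotel at (80, 1.5) can dominate the pool hotel, with probability 0.4, so the pool hotel has skyline probability 0.6 and passes a threshold of 0.5.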

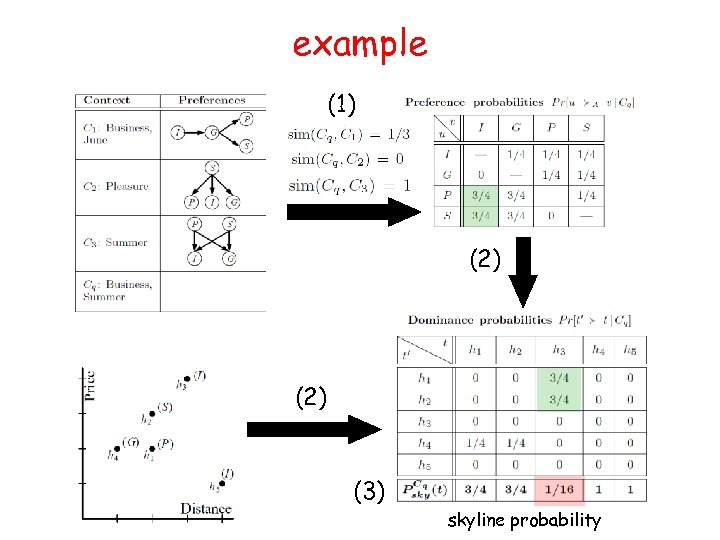

example

- (1), (2), (3)
- skyline probability

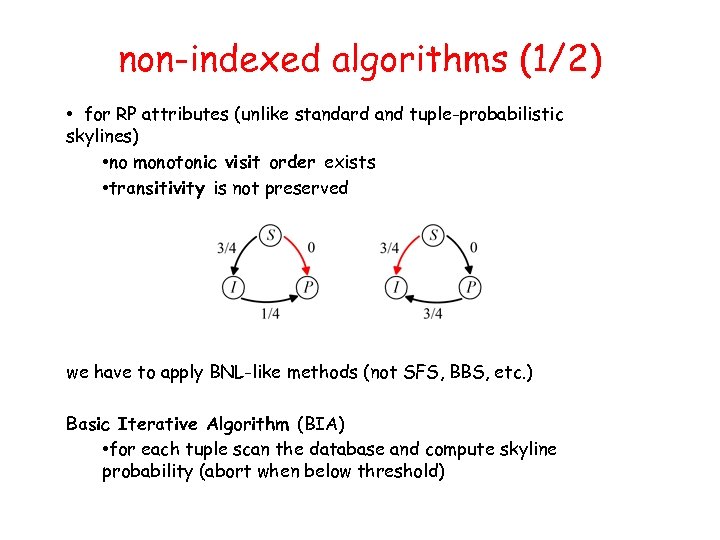

non-indexed algorithms (1/2)

- for RP attributes (unlike standard and tuple-probabilistic skylines):
  - no monotonic visit order exists
  - transitivity is not preserved
- so we have to apply BNL-like methods (not SFS, BBS, etc.)

Basic Iterative Algorithm (BIA)

- for each tuple, scan the database and compute its skyline probability (abort when it falls below the threshold)
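The BIA loop with its early abort can be sketched as below. This is an illustration under assumptions, not the authors' code: the skyline probability is taken to be a running product of non-dominance probabilities, and `dom_prob` is a hypothetical callback backed here by a made-up matrix.

```python
def bia(n, dom_prob, threshold):
    """Return (index, skyline probability) for every tuple that qualifies.

    dom_prob(o, t) is the probability that tuple o dominates tuple t.
    Every tuple is checked against all others, since without transitivity
    a dominated tuple cannot be discarded as a potential dominator.
    """
    result = []
    for t in range(n):
        prob = 1.0
        for o in range(n):
            if o == t:
                continue
            prob *= 1.0 - dom_prob(o, t)
            if prob < threshold:
                break  # each factor is <= 1, so prob can only shrink
        else:
            result.append((t, prob))  # the scan finished without aborting
    return result


# Hypothetical dominance probabilities: P[o][t] = Pr(o dominates t).
P = [
    [0.0, 0.0, 0.0, 0.5],
    [0.0, 0.0, 0.4, 1.0],
    [0.0, 0.0, 0.0, 0.6],
    [0.0, 0.0, 0.0, 0.0],
]
answer = bia(4, lambda o, t: P[o][t], threshold=0.5)
```

For the last tuple the scan stops at the second row, where the running product drops to 0, so the third row is never consulted.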

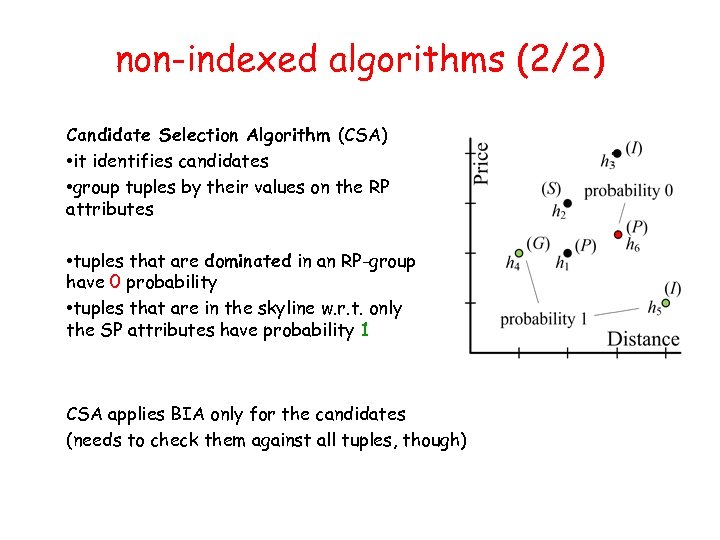

non-indexed algorithms (2/2)

Candidate Selection Algorithm (CSA)

- it identifies candidates:
  - group tuples by their values on the RP attributes
  - tuples that are dominated within an RP group have probability 0
  - tuples that are in the skyline w.r.t. only the SP attributes have probability 1
- CSA applies BIA only to the candidates (it still needs to check them against all tuples, though)
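The candidate-selection step can be sketched as follows. The tuple layout and function names are hypothetical, and ties on the SP attributes are handled conservatively (an assumption): a tuple gets probability 1 only if no other tuple is at least as good on every SP attribute.

```python
from collections import defaultdict


def sp_dominates(a, b):
    """a is no worse than b on every SP attribute and differs somewhere."""
    return all(x <= y for x, y in zip(a, b)) and a != b


def select_candidates(data):
    """Split tuple indices into (certain_zero, certain_one, candidates).

    data: list of (sp_values, rp_values); lower SP values are better.
    """
    groups = defaultdict(list)
    for i, (_, rp) in enumerate(data):
        groups[rp].append(i)  # one group per combination of RP values
    # Dominated inside its own RP group: skyline probability 0.
    zero = {
        i for members in groups.values() for i in members
        if any(sp_dominates(data[j][0], data[i][0]) for j in members)
    }
    # No other tuple is at least as good on all SP attributes: probability 1.
    one = {
        i for i, (sp, _) in enumerate(data)
        if not any(
            j != i and all(x <= y for x, y in zip(data[j][0], sp))
            for j in range(len(data))
        )
    }
    return zero, one, set(range(len(data))) - zero - one


# Hypothetical data: ((price, distance), (amenity,)).
DATA = [
    ((60, 2.5), ("spa",)),
    ((80, 1.5), ("gym",)),
    ((90, 2.0), ("pool",)),
    ((100, 2.5), ("gym",)),
]
zero, one, candidates = select_candidates(DATA)
```

Only the pool hotel remains a candidate here, so the expensive probability computation runs for one tuple instead of four.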

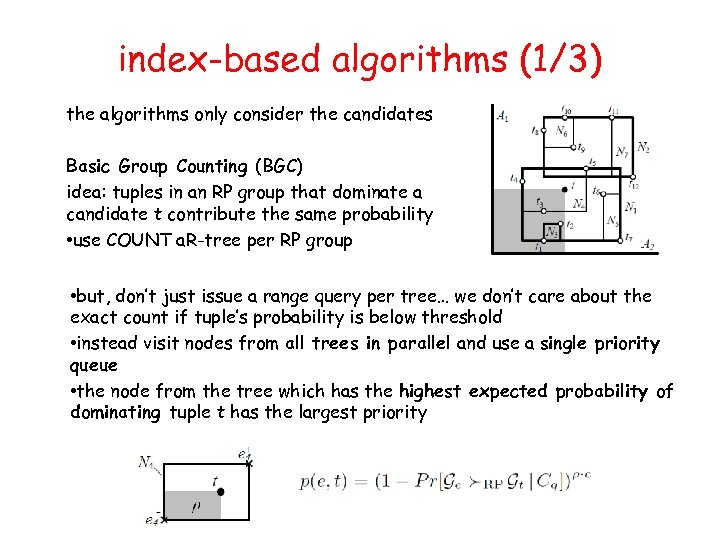

index-based algorithms (1/3)

the algorithms only consider the candidates

Basic Group Counting (BGC)

- idea: tuples in an RP group that dominate a candidate t contribute the same probability
- use a COUNT aR-tree per RP group
- but don’t just issue a range query per tree: we don’t care about the exact count if the tuple’s probability is below the threshold
- instead, visit nodes from all trees in parallel using a single priority queue
- the node (from any tree) with the highest expected probability of dominating tuple t has the largest priority
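The group-counting idea can be illustrated without an index. In the sketch below a linear scan stands in for the COUNT aR-tree, there is a single RP attribute, and whole groups (rather than tree nodes) are placed in the priority queue; all of these are simplifying assumptions, as is the product form of the skyline probability. Names and data are hypothetical.

```python
import heapq


def bgc_skyline_probability(t_sp, t_rp, groups, pref_prob, threshold):
    """Skyline probability of t, or None once it falls below the threshold.

    groups: {rp_value: [sp_values, ...]}
    pref_prob[(u, v)]: probability that RP value u beats RP value v.
    """
    heap = []
    for rp, members in groups.items():
        # Stand-in for a COUNT query on the group's aR-tree: tuples that are
        # no worse than t on every SP attribute (t itself is excluded).
        count = sum(
            1 for sp in members
            if all(x <= y for x, y in zip(sp, t_sp))
            and (rp != t_rp or sp != t_sp)
        )
        p = 1.0 if rp == t_rp else pref_prob.get((rp, t_rp), 0.0)
        if count and p > 0.0:
            # Chance that at least one of the counted tuples dominates t.
            impact = 1.0 - (1.0 - p) ** count
            heapq.heappush(heap, (-impact, rp, p, count))
    prob = 1.0
    while heap:  # most damaging group first, so hopeless tuples abort early
        _, _, p, count = heapq.heappop(heap)
        prob *= (1.0 - p) ** count  # every counted tuple contributes p
        if prob < threshold:
            return None
    return prob


# Hypothetical RP groups of (price, distance) tuples.
GROUPS = {
    "spa": [(60, 2.5)],
    "gym": [(80, 1.5), (100, 2.5)],
    "pool": [(90, 2.0)],
}
PREF = {("gym", "pool"): 0.4, ("pool", "gym"): 0.6, ("spa", "gym"): 0.5}
kept = bgc_skyline_probability((90, 2.0), "pool", GROUPS, PREF, 0.5)
pruned = bgc_skyline_probability((100, 2.5), "gym", GROUPS, PREF, 0.5)
```

For the gym hotel at (100, 2.5), its own group is dequeued first because it dominates with certainty, and the computation stops before the other two groups are touched.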

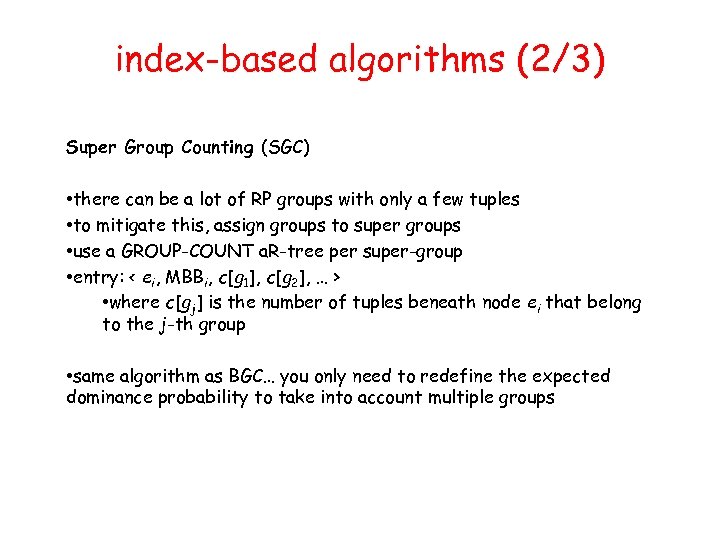

index-based algorithms (2/3)

Super Group Counting (SGC)

- there can be a lot of RP groups with only a few tuples each
- to mitigate this, assign groups to super-groups
- use a GROUP-COUNT aR-tree per super-group
  - entry: <ei, MBBi, c[g1], c[g2], …>
  - where c[gj] is the number of tuples beneath node ei that belong to the j-th group
- same algorithm as BGC; you only need to redefine the expected dominance probability to take multiple groups into account
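A GROUP-COUNT entry and one possible multi-group priority can be sketched as below. The priority formula is an assumption (the slide does not spell out the redefinition): it is the probability that at least one tuple beneath the node dominates t, treating every counted tuple as lying in t's dominance region. The class, field, and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class GroupCountEntry:
    """One entry <e_i, MBB_i, c[g1], c[g2], ...> of a GROUP-COUNT aR-tree."""
    node_id: int
    mbb: tuple  # ((low_1, ..., low_d), (high_1, ..., high_d)) over SP attrs
    counts: dict = field(default_factory=dict)  # group -> tuples beneath node


def entry_priority(entry, t_rp, pref_prob):
    """Assumed expected dominance probability of an entry for candidate t.

    pref_prob[(u, v)]: probability that RP value u beats RP value v.
    """
    survive = 1.0  # probability that no tuple beneath the entry dominates t
    for group, count in entry.counts.items():
        p = 1.0 if group == t_rp else pref_prob.get((group, t_rp), 0.0)
        survive *= (1.0 - p) ** count
    return 1.0 - survive


# Hypothetical entry covering two groups of one super-group.
entry = GroupCountEntry(
    node_id=7,
    mbb=((60, 1.5), (100, 2.5)),
    counts={"gym": 2, "spa": 1},
)
priority = entry_priority(entry, "pool", {("gym", "pool"): 0.4})
```

With two gym tuples at probability 0.4 each and no recorded preference of spa over pool, the entry's priority is 1 - 0.6² = 0.64.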

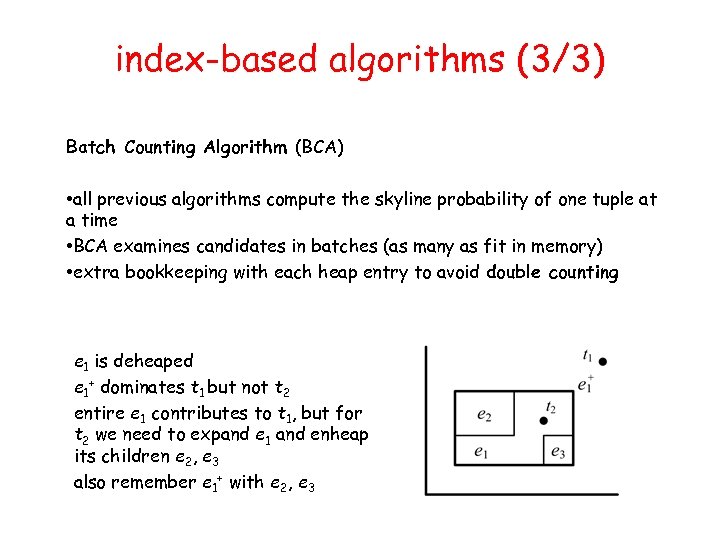

index-based algorithms (3/3)

Batch Counting Algorithm (BCA)

- all previous algorithms compute the skyline probability of one tuple at a time
- BCA examines candidates in batches (as many as fit in memory)
- extra bookkeeping with each heap entry is needed to avoid double counting

example:

- e1 is deheaped
- e1+ dominates t1 but not t2
- the entire e1 contributes to t1, but for t2 we need to expand e1 and enheap its children e2, e3
- also remember e1+ with e2, e3

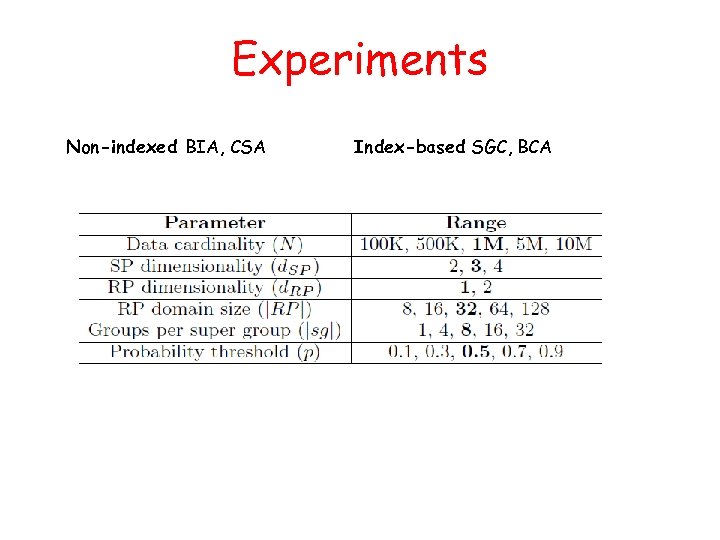

Experiments

- Non-indexed: BIA, CSA
- Index-based: SGC, BCA

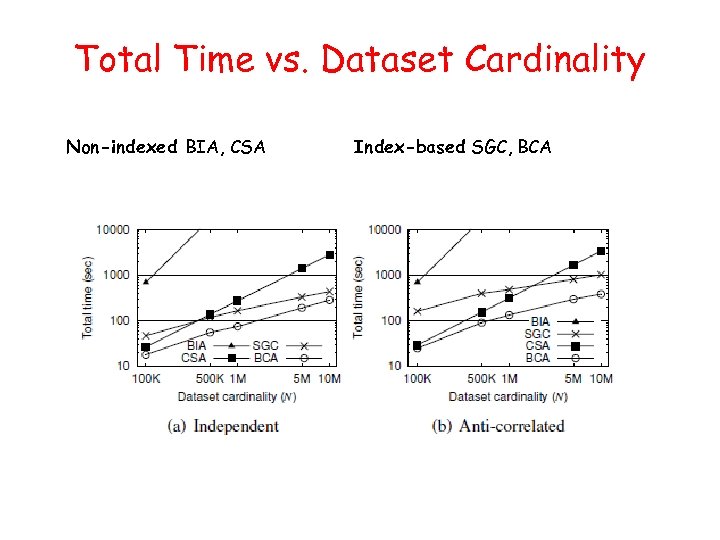

Total Time vs. Dataset Cardinality

- Non-indexed: BIA, CSA
- Index-based: SGC, BCA

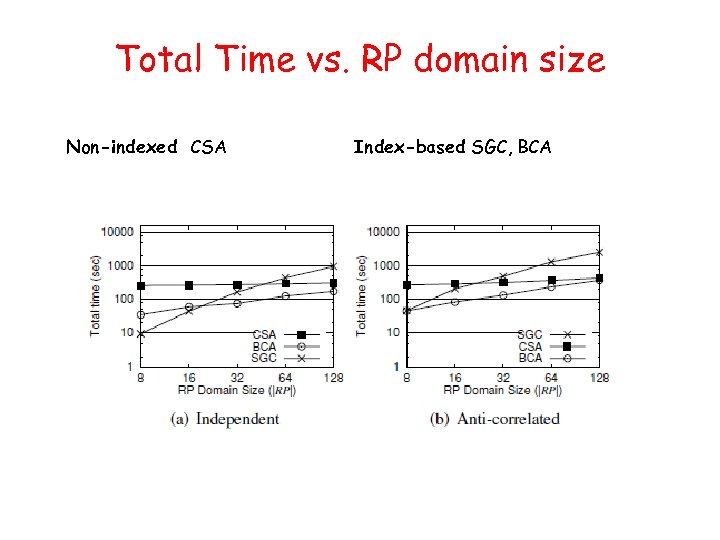

Total Time vs. RP domain size

- Non-indexed: CSA
- Index-based: SGC, BCA

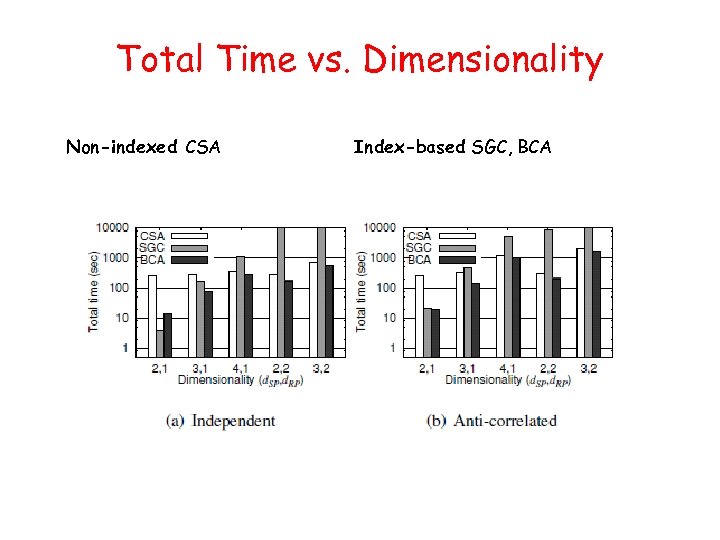

Total Time vs. Dimensionality

- Non-indexed: CSA
- Index-based: SGC, BCA

thank you!
