48a81f7d29d78fc7c93fe330bbd9ea0c.ppt

- Number of slides: 29

Principles & Practice of Evaluation
Erica Wimbush, Head of Evaluation, NHS Health Scotland
ScotPHO Training, 29th March 2011

Outline
- What is it? Why do we do it? Who is it for?
- When do we do it? How is population health data used?
- What are the different types of evaluation?
- Examples of ‘good’ evaluations

Examples
- Monitoring & Evaluation of Scotland’s Alcohol Strategy (MESAS): outcome planning
- Evaluation of Keep Well (Wave 1): pilot phase
- Evaluation of WoSCAP: pre-testing
- Evaluation of the Smoking Ban: implementation
- Review of Scottish Diet Action Plan: review

Evaluation – what is it?
- “The making of a judgement about the amount, cost or value of something” (Oxford English Dictionary)
- “The primary purpose of evaluation is to improve the human condition … to help determine if the promised improvements of social programs are actually delivered” (Lipsey 2001)
- “The ultimate goal of evaluation is social betterment, to which evaluation can contribute by assisting democratic institutions to better select, oversee, improve and make sense of social programs” (Mel Mark 2007)
- “Ensuring that the interests of all individuals and groups in society are served” (Hopson 2001)

Focus of Evaluation
Planned interventions that are intended to bring about change:
- a policy or policy mechanism
- an agency or organisation
- a service
- a programme or project
- a practice

Why do we do evaluation?
- How effective or successful was it? To provide sound evidence of programme effects: what actually happened vs what was intended
- To gain a better understanding of how programmes work
- To generate learning from programme implementation to inform decision-making and improve practice
- Accountability: assurance to funders about how (public) money has been spent

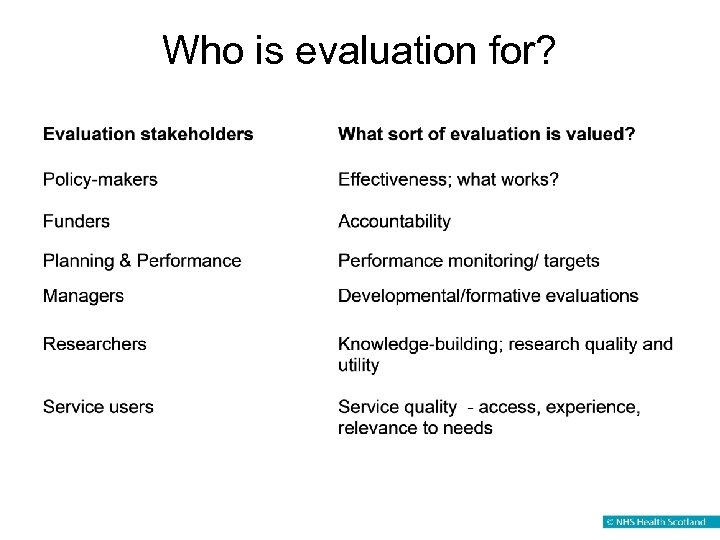

Who is evaluation for?

Principles for evaluation
- Be focused: on the purpose, what you really need to know, and what will be useful and used
- Be realistic: about what you can and should evaluate; what is possible and what it is in your gift to influence
- Be proportionate: about how much evaluation is appropriate
- Be convincing: to your evaluation audience; what will it take to convince a reasonable person?
- Be honest: about why you are evaluating, what the evaluation will be used for, and what you can claim

Types of evaluation
- OUTCOME: assesses effectiveness
- PROCESS: understanding the processes of implementation and change
- FORMATIVE: feeds directly back into programme development
- SUMMATIVE: review of evidence and learning at the end of a period of implementation or funding

Outcome evaluation designs
Experimental
- True: random assignment to experimental and control groups
- Quasi: controlled design with non-random assignment (non-equivalent control group)
Non-experimental
- Time series analysis (single, comparative)
- Before and after
- Post-intervention (single, comparative)
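As a minimal sketch in Python, using hypothetical data, the difference between a single-group before-and-after design and a comparative one can be shown by computing the change in an outcome for the intervention group alone, then subtracting the change seen in a comparison group over the same period (a difference-in-differences estimate). The function names and figures are illustrative assumptions, not drawn from any of the evaluations discussed here.

```python
from statistics import mean


def before_after_change(before, after):
    """Single-group before-and-after design: change in the mean outcome."""
    return mean(after) - mean(before)


def comparative_before_after(int_before, int_after, cmp_before, cmp_after):
    """Comparative before-and-after design: change in the intervention group
    minus the change in a non-equivalent comparison group over the same
    period (a difference-in-differences estimate)."""
    return (before_after_change(int_before, int_after)
            - before_after_change(cmp_before, cmp_after))


# Hypothetical monthly counts of an outcome (illustrative only, not real data)
intervention_before = [120, 118, 122, 120]
intervention_after = [104, 106, 100, 102]
comparison_before = [98, 100, 102, 100]
comparison_after = [94, 96, 95, 95]

single = before_after_change(intervention_before, intervention_after)
comparative = comparative_before_after(
    intervention_before, intervention_after, comparison_before, comparison_after
)
print(f"Single-group change: {single}")        # credits all change to the intervention
print(f"Comparative estimate: {comparative}")  # nets out change also seen in the comparison group
```

Here the single-group design credits the intervention with the whole fall, while the comparative design attributes only the part not also seen in the comparison group. That second estimate still rests on the assumption that both groups would otherwise have followed similar trends, which is why non-random designs leave a problem of causal attribution.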

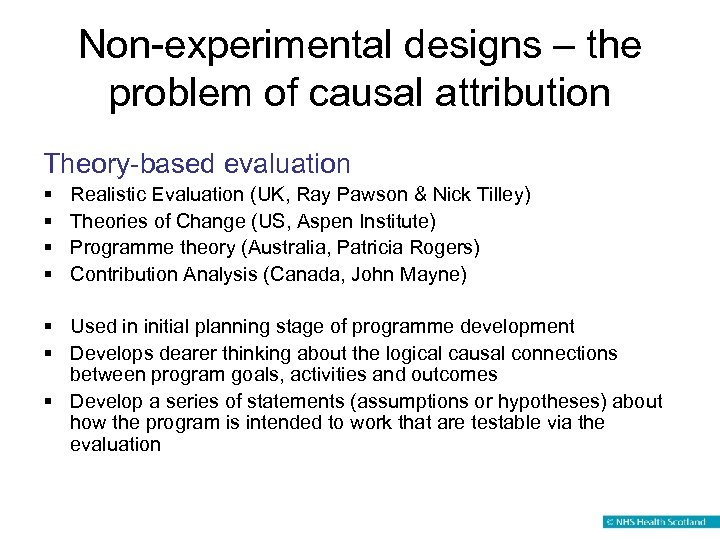

Non-experimental designs – the problem of causal attribution
Theory-based evaluation
- Realistic Evaluation (UK, Ray Pawson & Nick Tilley)
- Theories of Change (US, Aspen Institute)
- Programme theory (Australia, Patricia Rogers)
- Contribution Analysis (Canada, John Mayne)
These approaches:
- are used in the initial planning stage of programme development
- develop clearer thinking about the logical causal connections between programme goals, activities and outcomes
- develop a series of statements (assumptions or hypotheses) about how the programme is intended to work that are testable via the evaluation

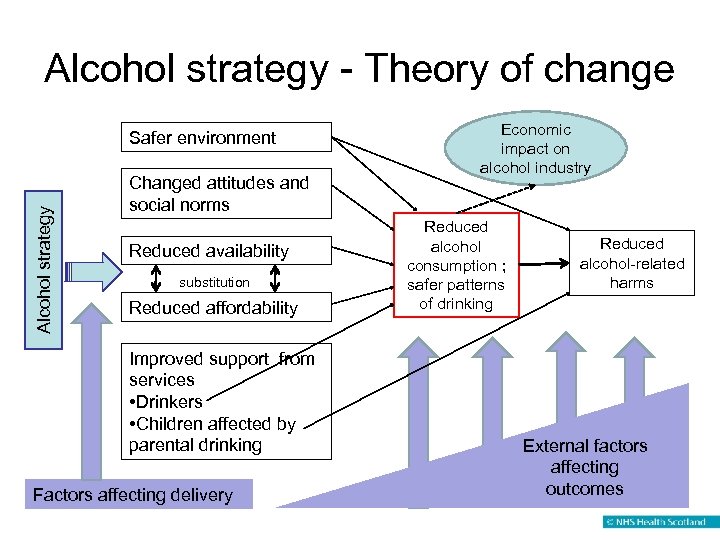

Alcohol strategy – Theory of change (diagram)
- Alcohol strategy, acting through: safer environment; changed attitudes and social norms; reduced availability; reduced affordability; improved support from services (for drinkers and for children affected by parental drinking)
- leading to: reduced alcohol consumption; safer patterns of drinking
- leading to: reduced alcohol-related harms
- Also shown: substitution; factors affecting delivery; economic impact on alcohol industry; external factors affecting outcomes

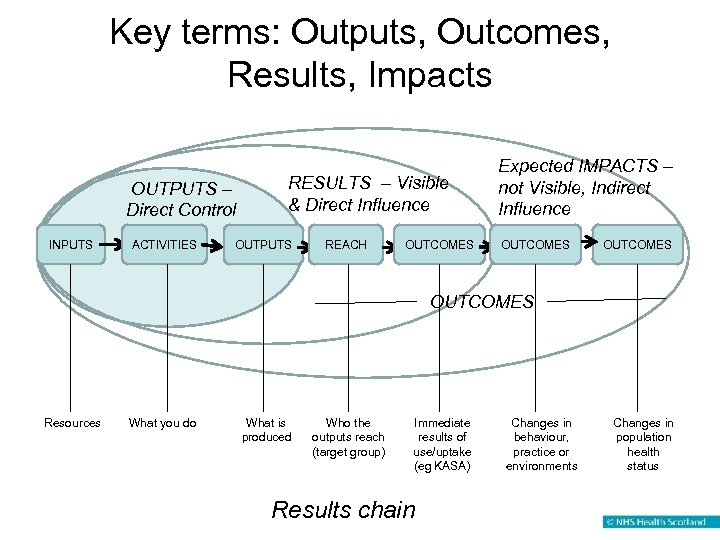

Key terms: Outputs, Outcomes, Results, Impacts
Results chain:
- Inputs: resources
- Activities: what you do
- Outputs: what is produced
- Reach: who the outputs reach (target group)
- Outcomes: immediate results of use/uptake (e.g. KASA)
- Expected outcomes: changes in behaviour, practice or environments
- Impacts: changes in population health status
The chain is also divided by degree of control: outputs (direct control); results (visible, direct influence); impacts (not visible, indirect influence).

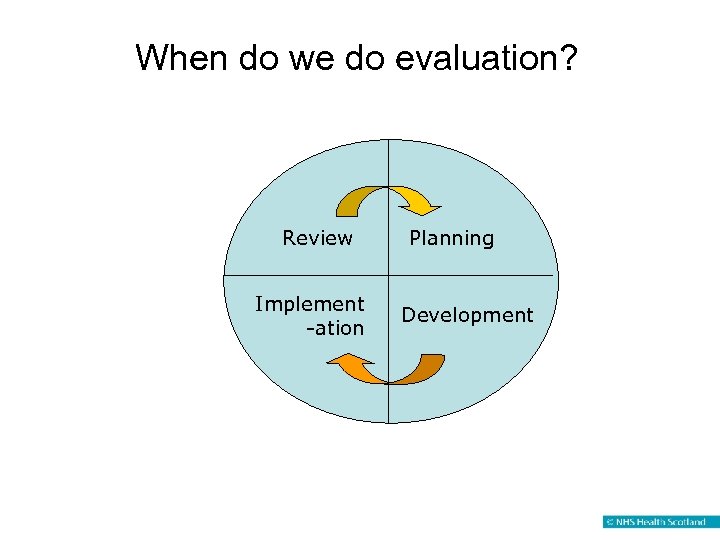

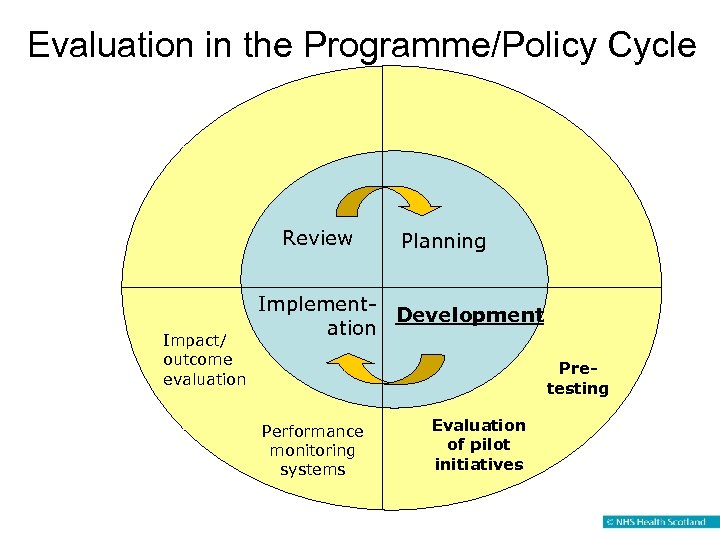

When do we do evaluation?
Programme cycle: Planning, Development, Implementation, Review

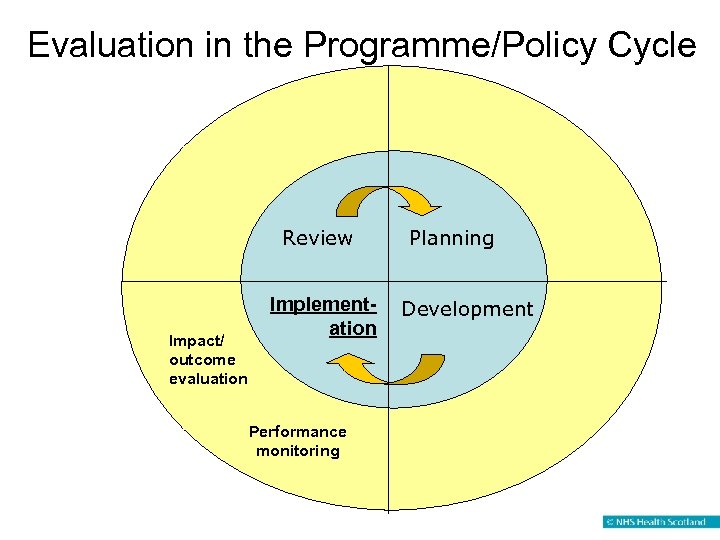

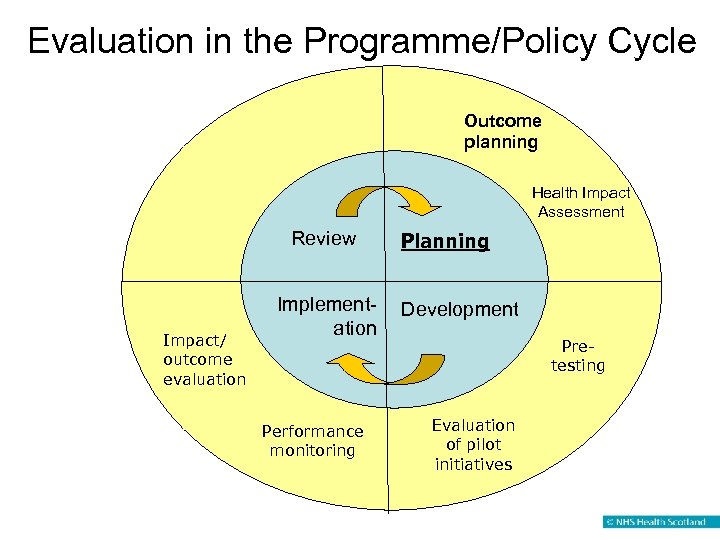

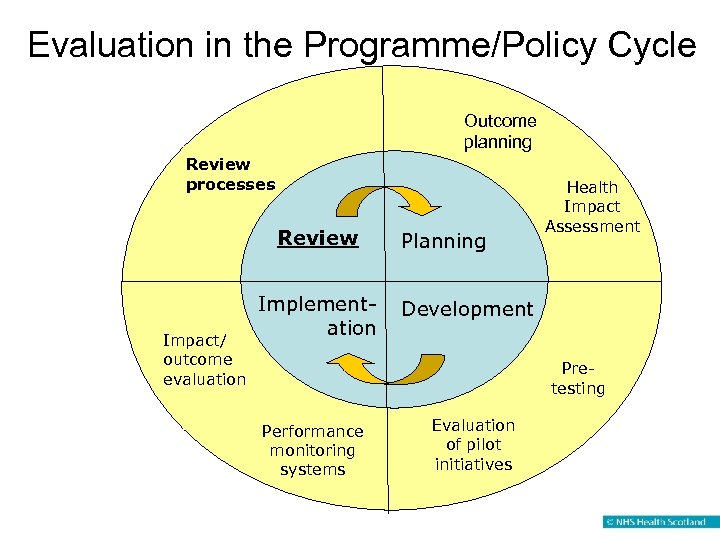

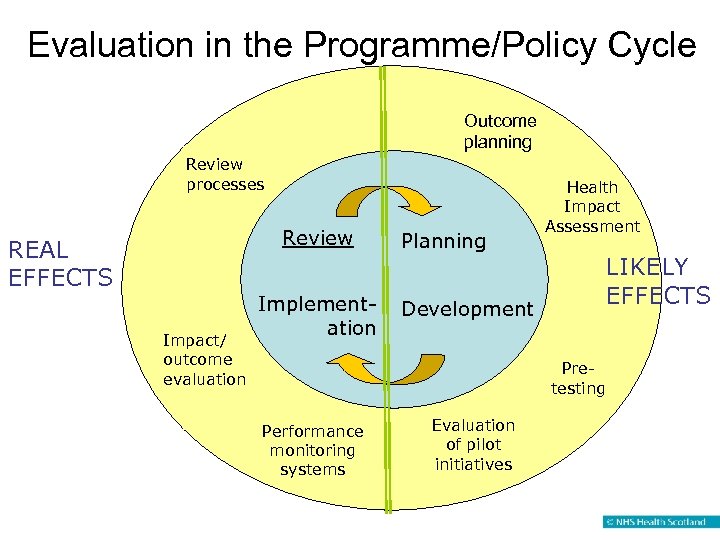

Evaluation in the Programme/Policy Cycle
- Planning
- Development
- Implementation: performance monitoring
- Review: impact/outcome evaluation

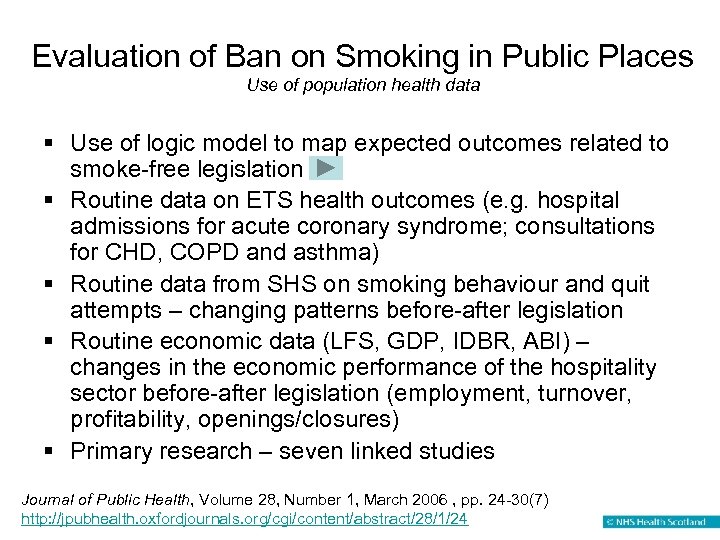

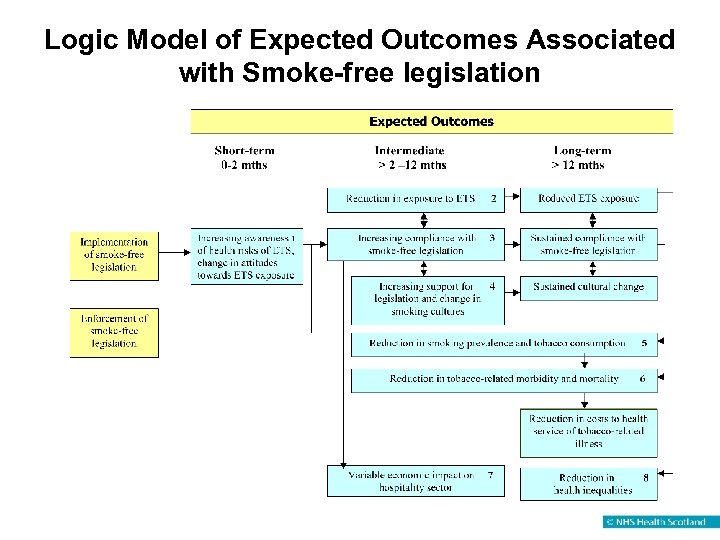

Evaluation of Ban on Smoking in Public Places – use of population health data
- Use of a logic model to map expected outcomes related to smoke-free legislation
- Routine data on ETS health outcomes (e.g. hospital admissions for acute coronary syndrome; consultations for CHD, COPD and asthma)
- Routine data from the SHS on smoking behaviour and quit attempts: changing patterns before and after legislation
- Routine economic data (LFS, GDP, IDBR, ABI): changes in the economic performance of the hospitality sector before and after legislation (employment, turnover, profitability, openings/closures)
- Primary research: seven linked studies
Journal of Public Health, Volume 28, Number 1, March 2006, pp. 24–30. http://jpubhealth.oxfordjournals.org/cgi/content/abstract/28/1/24

Logic Model of Expected Outcomes Associated with Smoke-free legislation

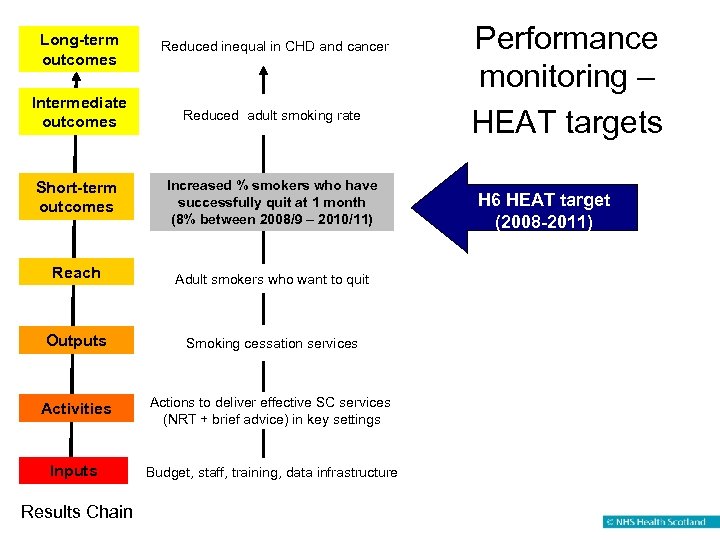

Performance monitoring – HEAT targets: H6 HEAT target (2008–2011)
Results chain:
- Inputs: budget, staff, training, data infrastructure
- Activities: actions to deliver effective smoking cessation (SC) services (NRT + brief advice) in key settings
- Outputs: smoking cessation services
- Reach: adult smokers who want to quit
- Short-term outcomes: increased % of smokers who have successfully quit at 1 month (8% between 2008/9 and 2010/11)
- Intermediate outcomes: reduced adult smoking rate
- Long-term outcomes: reduced inequalities in CHD and cancer

Evaluation in the Programme/Policy Cycle
- Planning
- Development: pretesting; evaluation of pilot initiatives
- Implementation: performance monitoring systems
- Review: impact/outcome evaluation

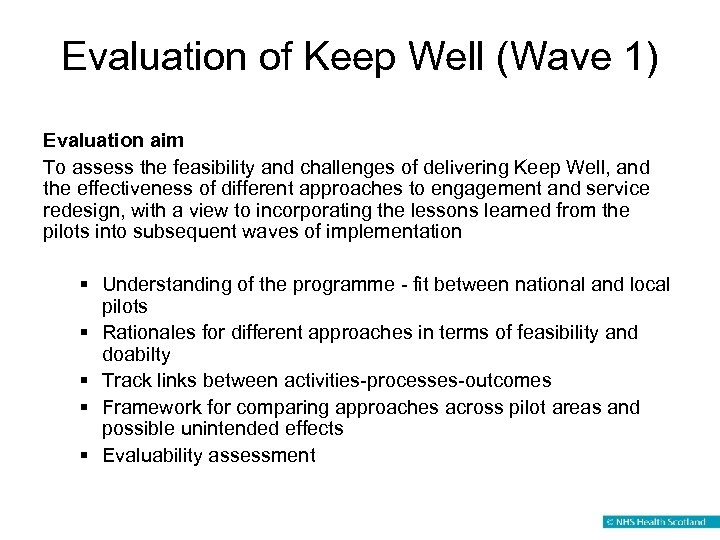

Evaluation of Keep Well (Wave 1)
Evaluation aim: to assess the feasibility and challenges of delivering Keep Well, and the effectiveness of different approaches to engagement and service redesign, with a view to incorporating the lessons learned from the pilots into subsequent waves of implementation.
- Understanding of the programme: fit between national and local pilots
- Rationales for different approaches in terms of feasibility and doability
- Tracking links between activities, processes and outcomes
- A framework for comparing approaches across pilot areas, and possible unintended effects
- Evaluability assessment

Evaluation of Keep Well (Wave 1) – use of population health data
Phase 1 (2007–10): informed by Theory of Change, understanding the process of implementation of Keep Well
1. Tracking theories of change at national level and in local pilots
2. Tracking the impact of KW on ‘anticipatory care’ in the target population using secondary data
Phase 2 (2009–10): informed by Realistic Evaluation, a deeper understanding of certain facets through the use of case studies
- Practice-level case studies to assess the impact of aspects of Keep Well (informed by Phase 1 findings)
- Patient and practice experiences (2009–10): collection of quantitative and qualitative data at practice level and patient level, including patients recruited via Keep Well practices and community-based venues

Pre-testing – Bowel Cancer Campaign (WoSCAP): use of qualitative research
Five concepts were pre-tested in six focus groups. The ‘niggling worries’ concept:
- addressed real barriers to action: inertia and fear
- proved compelling and intriguing due to the dialogue with a man confronting his fears
- had a direct call to action: ‘go to your doctor if your bowel habits change or you have blood in your motions’
- was felt to be an empathetic way of tackling the fear that surrounds the subject area

Evaluation in the Programme/Policy Cycle
- Planning: outcome planning; Health Impact Assessment
- Development: pretesting; evaluation of pilot initiatives
- Implementation: performance monitoring
- Review: impact/outcome evaluation

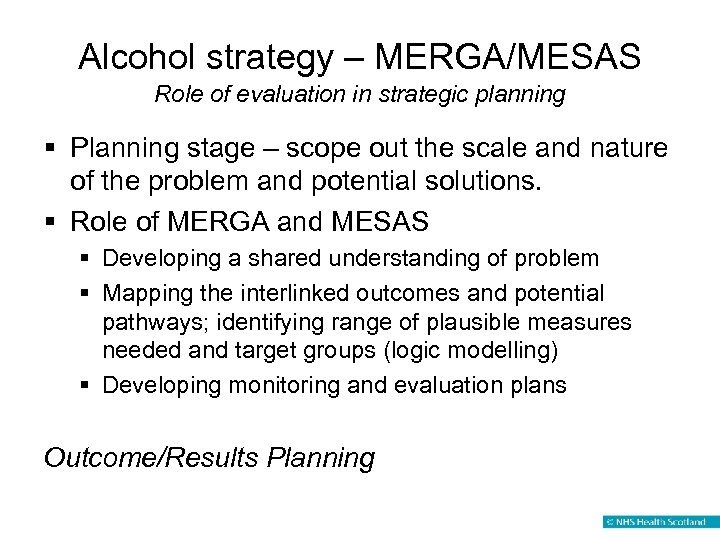

Alcohol strategy – MERGA/MESAS: role of evaluation in strategic planning
- Planning stage: scope out the scale and nature of the problem and potential solutions
- Role of MERGA and MESAS (outcome/results planning):
  - developing a shared understanding of the problem
  - mapping the interlinked outcomes and potential pathways; identifying the range of plausible measures needed and target groups (logic modelling)
  - developing monitoring and evaluation plans

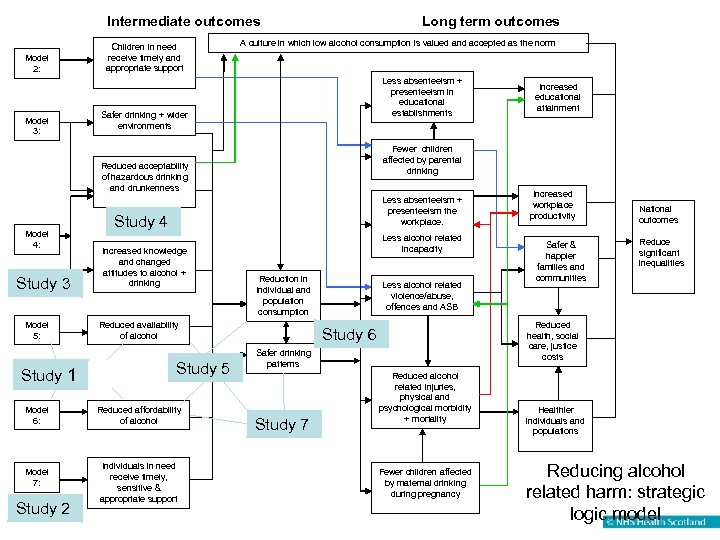

Reducing alcohol related harm: strategic logic model (diagram)
Outcome levels shown: intermediate outcomes, long-term outcomes, national outcomes. The diagram is annotated with Models 2–7 and Studies 1–7.
Outcomes shown:
- Reduced availability of alcohol
- Reduced affordability of alcohol
- Increased knowledge and changed attitudes to alcohol and drinking
- Reduced acceptability of hazardous drinking and drunkenness
- Children in need receive timely and appropriate support
- Individuals in need receive timely, sensitive & appropriate support
- Safer drinking and wider environments
- Safer drinking patterns
- Reduction in individual and population consumption
- A culture in which low alcohol consumption is valued and accepted as the norm
- Fewer children affected by parental drinking
- Fewer children affected by maternal drinking during pregnancy
- Less absenteeism and presenteeism in educational establishments
- Less absenteeism and presenteeism in the workplace
- Increased educational attainment
- Increased workplace productivity
- Less alcohol related incapacity
- Less alcohol related violence/abuse, offences and ASB
- Reduced alcohol related injuries, physical and psychological morbidity and mortality
- Safer & happier families and communities
- Reduced health, social care, justice costs
- Reduce significant inequalities
- Healthier individuals and populations

Evaluation in the Programme/Policy Cycle
- Planning: outcome planning; Health Impact Assessment
- Development: pretesting; evaluation of pilot initiatives
- Implementation: performance monitoring systems
- Review: impact/outcome evaluation; review processes

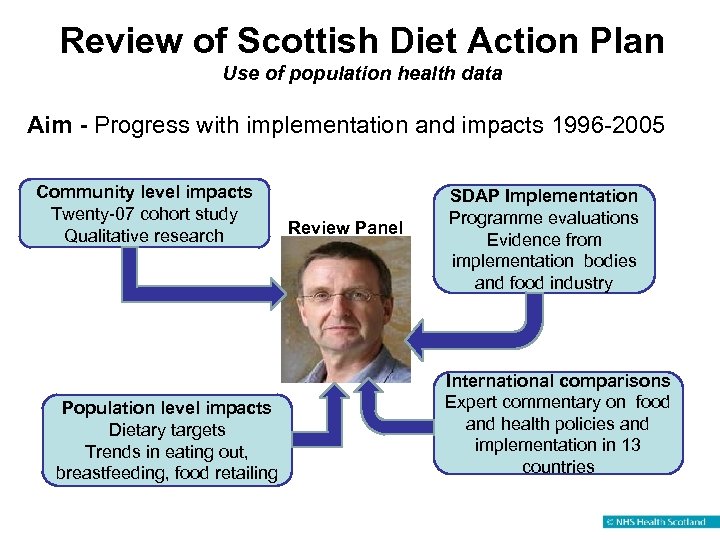

Review of Scottish Diet Action Plan – use of population health data
Aim: progress with implementation and impacts, 1996–2005
- Community-level impacts: Twenty-07 cohort study; qualitative research
- Population-level impacts: dietary targets; trends in eating out, breastfeeding, food retailing
- SDAP implementation: programme evaluations; evidence from implementation bodies and food industry
- International comparisons: expert commentary on food and health policies and implementation in 13 countries
- Review Panel

Evaluation in the Programme/Policy Cycle
- Planning: outcome planning; Health Impact Assessment (LIKELY EFFECTS)
- Development: pretesting; evaluation of pilot initiatives
- Implementation: performance monitoring systems
- Review: impact/outcome evaluation; review processes (REAL EFFECTS)

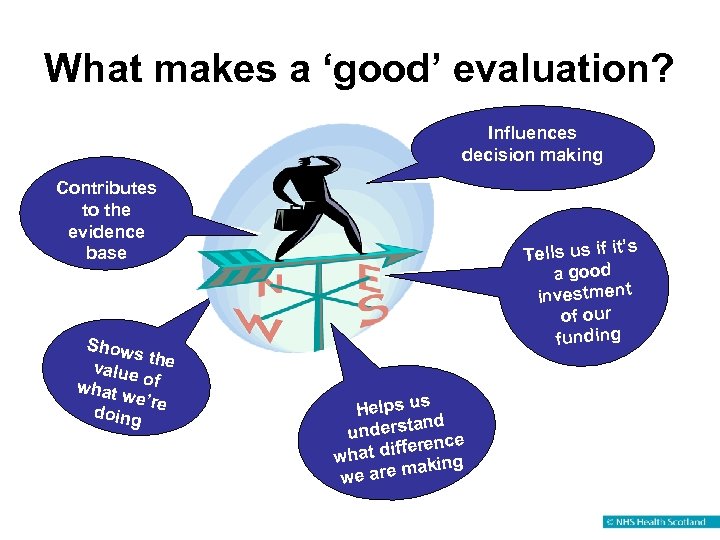

What makes a ‘good’ evaluation?
- Influences decision making
- Contributes to the evidence base
- Shows the value of what we’re doing
- Tells us if it’s a good investment of our funding
- Helps us understand what difference we are making
