- Number of slides: 70

Preventing Errors: Safety for Patients and Caregivers

Stephen R Czekalinski MBA, RN, BSN

Agenda

- Patient/Staff safety in healthcare
- Where we started
- How far have we come?
- Next Steps
- Culture
- Human factors ergonomics (HFE)
- Questions and such

Common Assumptions in Healthcare

- Errors are personal failings
- Someone must be at fault
- Healthcare professionals resist change

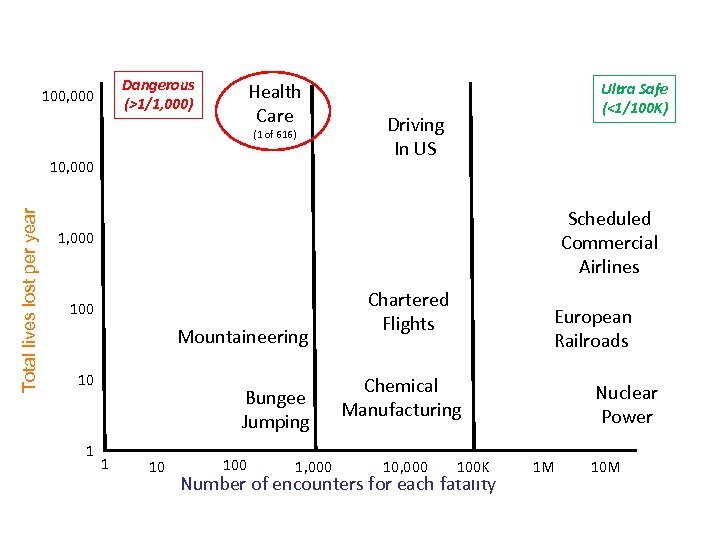

How Safe is Healthcare?

[Chart: total lives lost per year (1 to 100,000) plotted against number of encounters for each fatality (1 to 10 M), on a scale running from Dangerous (>1/1,000) to Ultra Safe (<1/100 K). Activities plotted: Health Care (1 of 616), Driving in US, Scheduled Commercial Airlines, Mountaineering, Bungee Jumping, Chartered Flights, European Railroads, Chemical Manufacturing, Nuclear Power.]
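
The two thresholds on this chart can be applied to any "encounters per fatality" figure. The following is a minimal sketch, not part of the original slide: the function name `classify` and the label `"Intermediate"` for the unnamed middle band are assumptions made for illustration; only the two thresholds and the "1 of 616" figure come from the slide.

```python
from fractions import Fraction

# Zone thresholds as labeled on the chart: Dangerous means more than
# 1 fatality per 1,000 encounters, Ultra Safe means fewer than 1 per 100,000.
DANGEROUS = Fraction(1, 1_000)
ULTRA_SAFE = Fraction(1, 100_000)


def classify(encounters_per_fatality: int) -> str:
    """Return the chart zone for a given number of encounters per fatality."""
    rate = Fraction(1, encounters_per_fatality)
    if rate > DANGEROUS:
        return "Dangerous"
    if rate < ULTRA_SAFE:
        return "Ultra Safe"
    # Hypothetical label: the slide does not name the band between the two zones.
    return "Intermediate"


# Health Care is plotted at 1 fatality per 616 encounters.
print(classify(616))
```

With the slide's figure of 1 in 616, the rate is above 1 in 1,000, so health care lands in the Dangerous zone.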

Patient Safety

48,000-98,000 lives lost every year

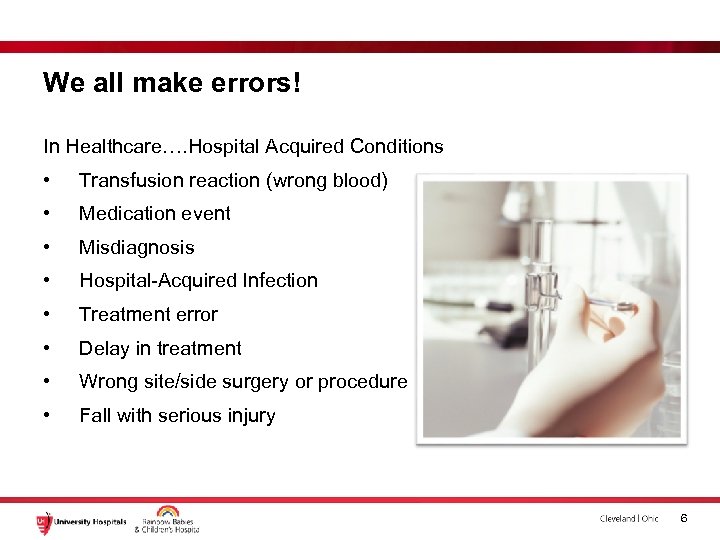

We all make errors!

In Healthcare…

Hospital Acquired Conditions

- Transfusion reaction (wrong blood)
- Medication event
- Misdiagnosis
- Hospital-Acquired Infection
- Treatment error
- Delay in treatment
- Wrong site/side surgery or procedure
- Fall with serious injury

Josie King - Died February 22, 2001

High reliability organizations (HROs) “operate under very trying conditions all the time and yet manage to have fewer than their fair share of accidents.”

Managing the Unexpected (Weick & Sutcliffe)

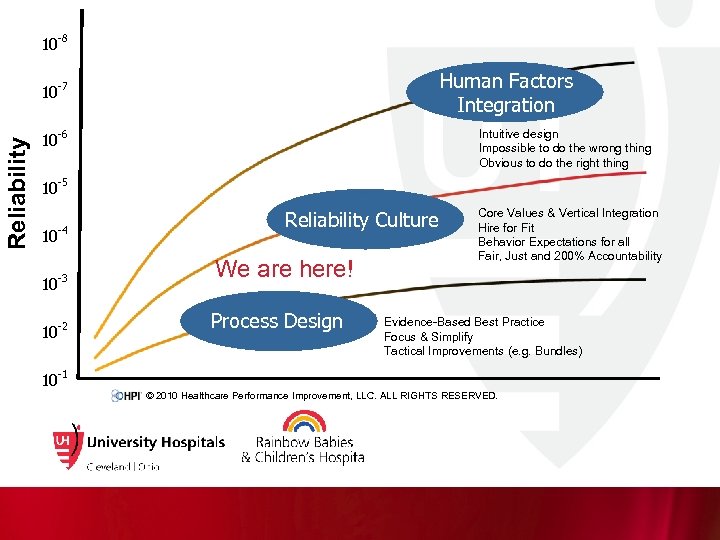

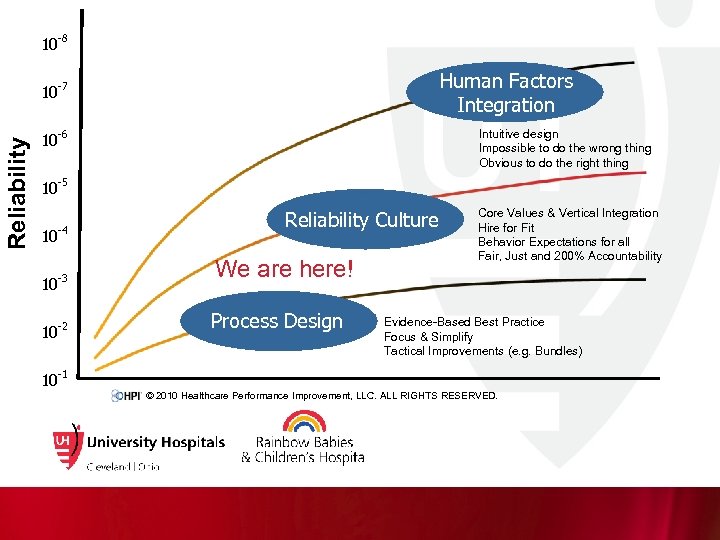

Reliability

[Diagram: reliability scale from 10^-1 to 10^-8 leading to Optimized Outcomes, with a "We are here!" marker, built from three layers:]

- Process Design: Evidence-Based Best Practice; Focus & Simplify; Tactical Improvements (e.g. Bundles)
- Reliability Culture: Core Values & Vertical Integration; Hire for Fit; Behavior Expectations for all; Fair, Just and 200% Accountability
- Human Factors Integration: Intuitive design; Impossible to do the wrong thing; Obvious to do the right thing

© 2010 Healthcare Performance Improvement, LLC. ALL RIGHTS RESERVED.

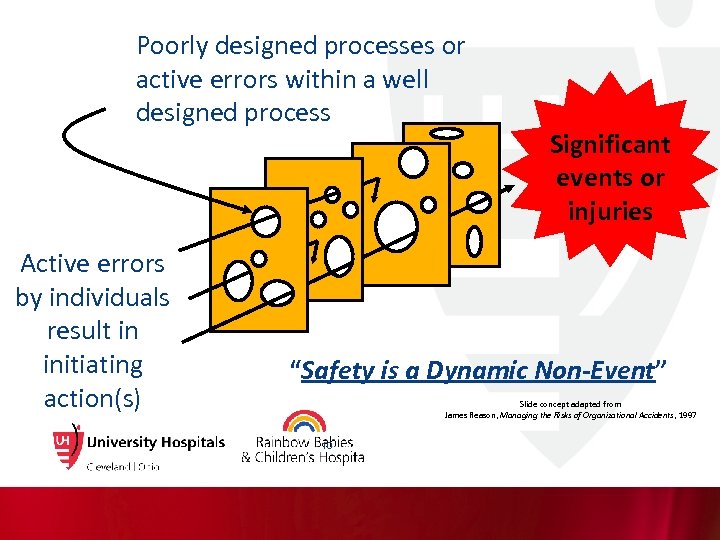

- Poorly designed processes or active errors within a well designed process
- Active errors by individuals result in initiating action(s)
- Significant events or injuries

“Safety is a Dynamic Non-Event”

Slide concept adapted from James Reason, Managing the Risks of Organizational Accidents, 1997

As Humans, We Work in 3 Modes

- Skill-Based Performance: “Auto-Pilot Mode”
- Rule-Based Performance: “If-Then Response Mode”
- Knowledge-Based Performance: “Figuring It Out Mode”

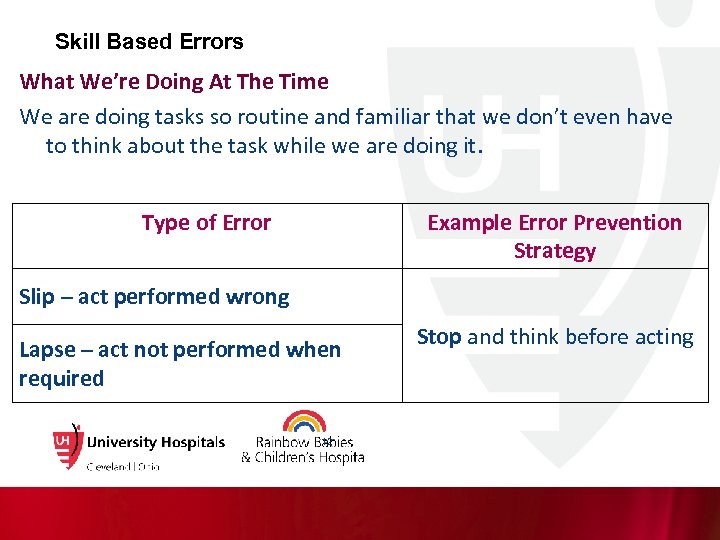

Skill Based Errors

What We’re Doing At The Time: We are doing tasks so routine and familiar that we don’t even have to think about the task while we are doing it.

| Type of Error | Example | Error Prevention Strategy |
| --- | --- | --- |
| Slip – act performed wrong | | Stop and think before acting |
| Lapse – act not performed when required | | Stop and think before acting |

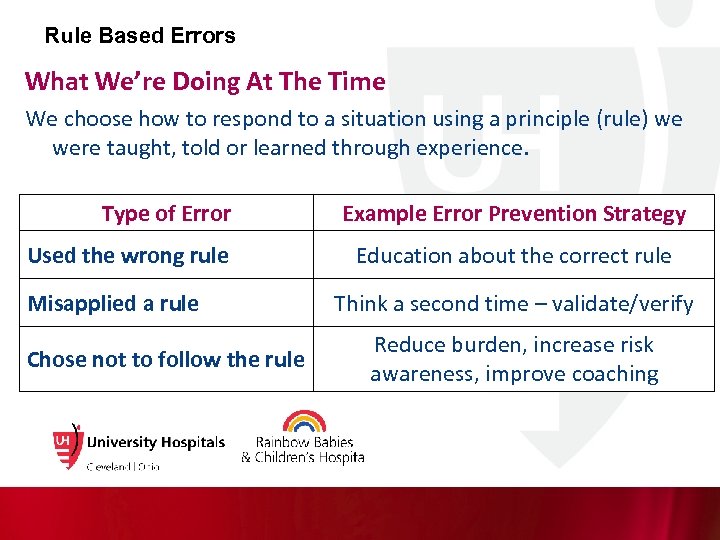

Rule Based Errors

What We’re Doing At The Time: We choose how to respond to a situation using a principle (rule) we were taught, told or learned through experience.

| Type of Error | Example | Error Prevention Strategy |
| --- | --- | --- |
| Used the wrong rule | | Education about the correct rule |
| Misapplied a rule | | Think a second time – validate/verify |
| Chose not to follow the rule | | Reduce burden, increase risk awareness, improve coaching |

Knowledge Based Errors

What We’re Doing At The Time: We’re problem solving in a new, unfamiliar situation. We don’t have a skill for the situation, we don’t know the rules, or no rule exists. So we come up with the answer by:

- Using what we do know (fundamentals)
- Taking a guess
- Figuring it out by trial-and-error

| Type of Error | Example | Error Prevention Strategy |
| --- | --- | --- |
| We came up with the wrong answer (a mistake) | | STOP and find an expert who/that knows the right answer |

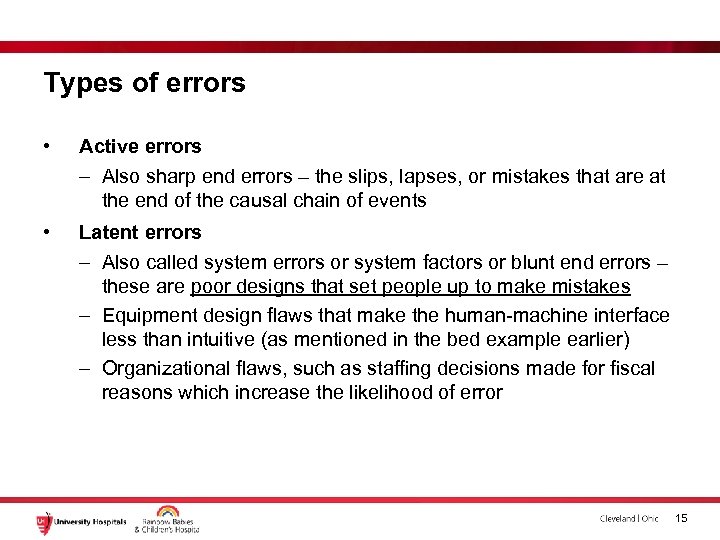

Types of errors

- Active errors
  - Also called sharp end errors
  - The slips, lapses, or mistakes that are at the end of the causal chain of events
- Latent errors
  - Also called system errors, system factors, or blunt end errors
  - These are poor designs that set people up to make mistakes
  - Equipment design flaws that make the human-machine interface less than intuitive (as mentioned in the bed example earlier)
  - Organizational flaws, such as staffing decisions made for fiscal reasons which increase the likelihood of error

Exercise

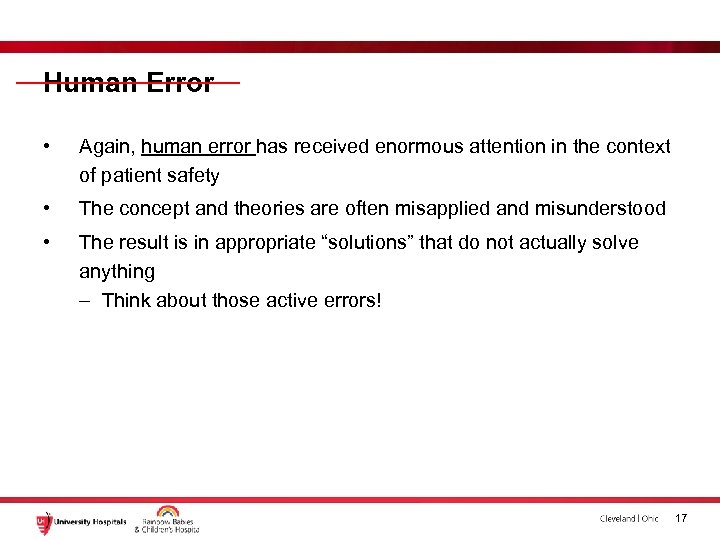

Human Error

- Again, human error has received enormous attention in the context of patient safety
- The concept and theories are often misapplied and misunderstood
- The result is inappropriate “solutions” that do not actually solve anything
  - Think about those active errors!

Errors vs Hazards

So have we made a difference?

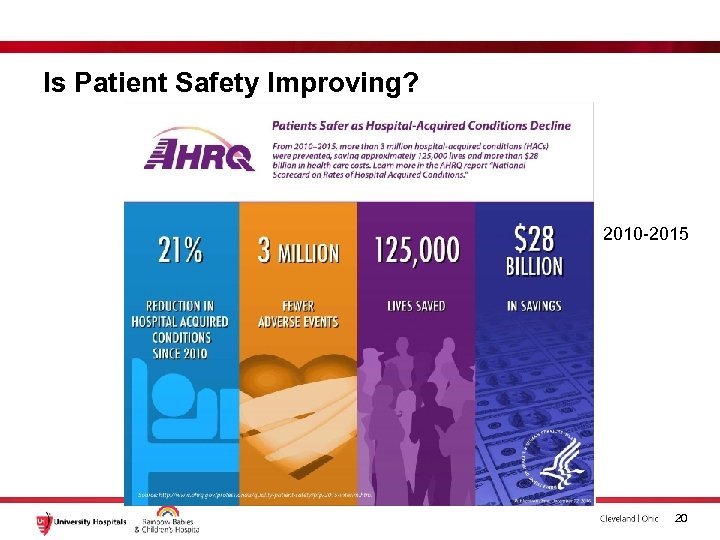

Is Patient Safety Improving? 2010-2015

Is Patient Safety Improving?

http://www.cnn.com/2016/05/03/health/medical-error-a-leading-cause-of-death/index.html

Is Patient Safety Improving?

Maybe, maybe not – but there’s room for improvement

Let’s talk about some of that…

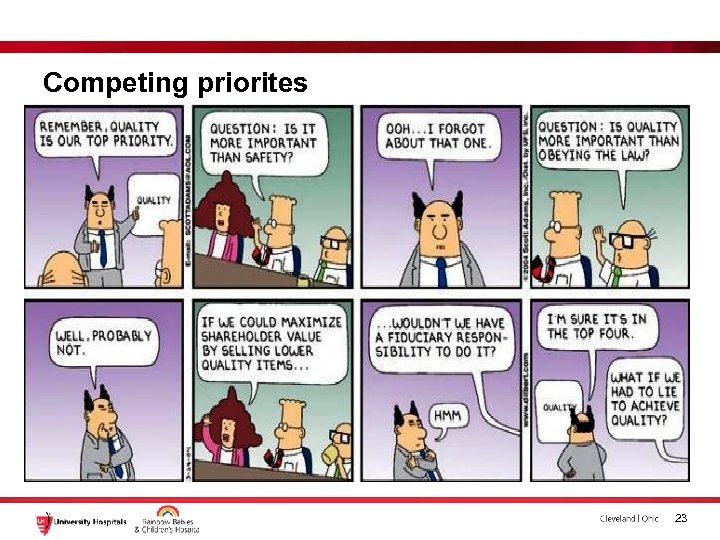

Competing priorities

Culture

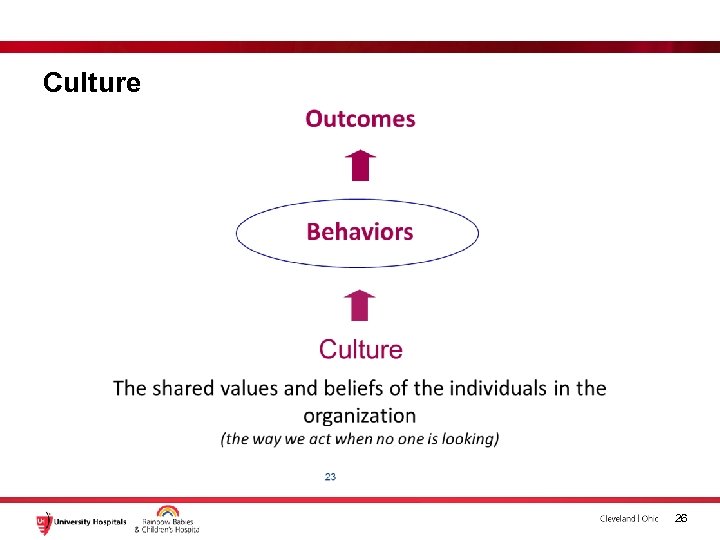

Culture – what is culture?

Culture

Culture

- Ross, C. (2017, Mar. 20). When Hospital Inspectors are in Town, Fewer Patients Die, Study Says. Boston, MA: STAT. Retrieved from: https://www.statnews.com/2017/03/20/hospital-inspectors-fewer-patients-die

The next frontier

High Reliability

Three Principles of Anticipation

1. Preoccupation with Failure
2. Sensitivity to Operations
3. Reluctance to Simplify

Two Principles of Containment

4. Commitment to Resilience
5. Deference to Expertise

Karl E. Weick and Kathleen M. Sutcliffe

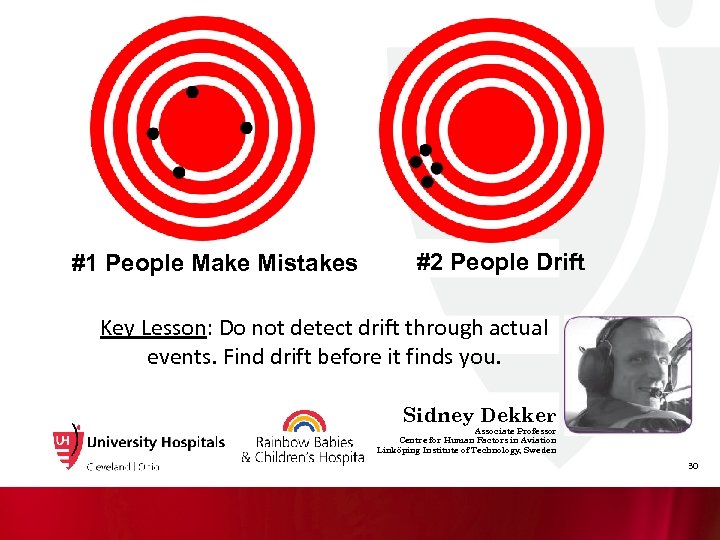

- #1 People Make Mistakes
- #2 People Drift

Key Lesson: Do not detect drift through actual events. Find drift before it finds you.

Sidney Dekker, Associate Professor, Centre for Human Factors in Aviation, Linköping Institute of Technology, Sweden

Safety Culture Composites, 2017

[Table columns on the slide: Safety Culture Composites; Your Hospital's Composite Score, average % of positive responses; National Benchmark (50th percentile). One value is listed per composite.]

| Safety Culture Composite | % positive |
| --- | --- |
| Overall Perceptions of Safety (4 items, % Agree/Strongly Agree) | 66% |
| Frequency of Events Reported (3 items, % Most of the time/Always) | 67% |
| Supervisor/Manager Expectations & Actions Promoting Patient Safety (4 items, % Agree/Strongly Agree) | 79% |
| Organizational Learning--Continuous Improvement (3 items, % Agree/Strongly Agree) | 73% |
| Teamwork Within Units (4 items, % Agree/Strongly Agree) | 82% |
| Communication Openness (3 items, % Most of the time/Always) | 64% |
| Feedback & Communication About Error (3 items, % Most of the time/Always) | 68% |
| Nonpunitive Response to Error (3 items, % Agree/Strongly Agree) | 44% |
| Staffing (4 survey items, % Agree/Strongly Agree) | 53% |
| Hospital Management Support for Patient Safety (3 items, % Agree/Strongly Agree) | 73% |
| Teamwork Across Hospital Units (4 survey items, % Agree/Strongly Agree) | 61% |
| Hospital Handoffs & Transitions (4 survey items, % Agree/Strongly Agree) | 46% |
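
One way to read these composites is to rank them and look at the lowest scores first. The sketch below is an illustration only: the dictionary simply restates the percentages listed on the slide, and the helper name `weakest` and the choice of the bottom three are assumptions, not part of the survey methodology.

```python
# Percent-positive values exactly as listed on the slide.
composites = {
    "Overall Perceptions of Safety": 66,
    "Frequency of Events Reported": 67,
    "Supervisor/Manager Expectations & Actions Promoting Patient Safety": 79,
    "Organizational Learning--Continuous Improvement": 73,
    "Teamwork Within Units": 82,
    "Communication Openness": 64,
    "Feedback & Communication About Error": 68,
    "Nonpunitive Response to Error": 44,
    "Staffing": 53,
    "Hospital Management Support for Patient Safety": 73,
    "Teamwork Across Hospital Units": 61,
    "Hospital Handoffs & Transitions": 46,
}


def weakest(scores: dict[str, int], n: int = 3) -> list[tuple[str, int]]:
    """Return the n composites with the lowest percent-positive score."""
    return sorted(scores.items(), key=lambda item: item[1])[:n]


for name, pct in weakest(composites):
    print(f"{pct}%  {name}")
```

On these numbers the lowest three are Nonpunitive Response to Error (44%), Hospital Handoffs & Transitions (46%), and Staffing (53%).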

Culture of Blame

- A barrier to our reporting goals
- Differences within the hospital, within departments, and within divisions or wards
- Culture doesn’t change overnight
- Need to show that reporting yields positive change

Creating a culture of safety

Culture of reporting

- Remember hazards?

Intuitive Design

Every system is perfectly designed to deliver the results it gets

An Alternative Approach

- Human Factors Engineering / Ergonomics (proposed by the IOM and patient safety experts)

So – What is it?

Definition of the International Ergonomics Association

- Ergonomics (or human factors) is the scientific discipline concerned with the understanding of interactions among humans and other elements of a system, and the profession that applies theory, principles, data and methods to design in order to optimize human well-being and overall system performance

www.iea.cc

But really, what is it?

- SCIENCE
  - Discovers and applies information about human behavior, abilities, limitations and other characteristics to the design of tools, machines, systems, tasks, jobs, and environments for productive, safe, comfortable and effective human use
  - Basic science of human performance
- PRACTICE
  - Designing the fit between people and products, equipment, facilities, procedures and environments
  - Evidence-based design that supports people’s physical and cognitive work

Reliability

[Diagram: reliability scale from 10^-1 to 10^-8 leading to Optimized Outcomes, with a "We are here!" marker, built from three layers:]

- Process Design: Evidence-Based Best Practice; Focus & Simplify; Tactical Improvements (e.g. Bundles)
- Reliability Culture: Core Values & Vertical Integration; Hire for Fit; Behavior Expectations for all; Fair, Just and 200% Accountability
- Human Factors Integration: Intuitive design; Impossible to do the wrong thing; Obvious to do the right thing

© 2010 Healthcare Performance Improvement, LLC. ALL RIGHTS RESERVED.

3 main sub-disciplines

- Physical Ergonomics
  - Working postures, materials handling, repetitive movements, work-related musculoskeletal disorders, workplace layout, safety and health
- Cognitive Ergonomics
  - Mental workload, decision-making, skilled performance, human-computer interaction, human reliability, work stress and training
- Organizational Ergonomics
  - Optimization of sociotechnical systems, organizational structures, policies, and processes, teamwork, scheduling, coordination/communication

Topics of study

- Usability
- Info processing
- Human-computer interaction
- Handoffs
- Mental workload
- Interruptions and distractions
- Situation awareness
- Violations or workarounds
- Alerts
- Human error
- Lifting
- Safety
- Workstation design
- Resilience
- Training
- Job stress
- Teamwork

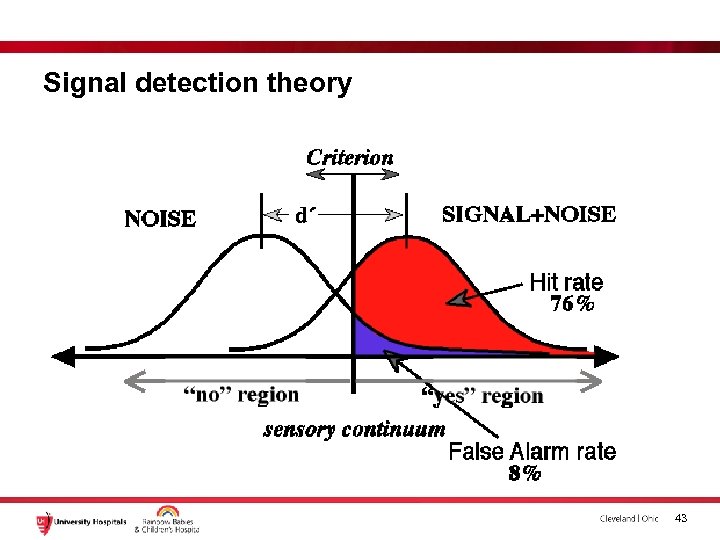

Signal detection theory

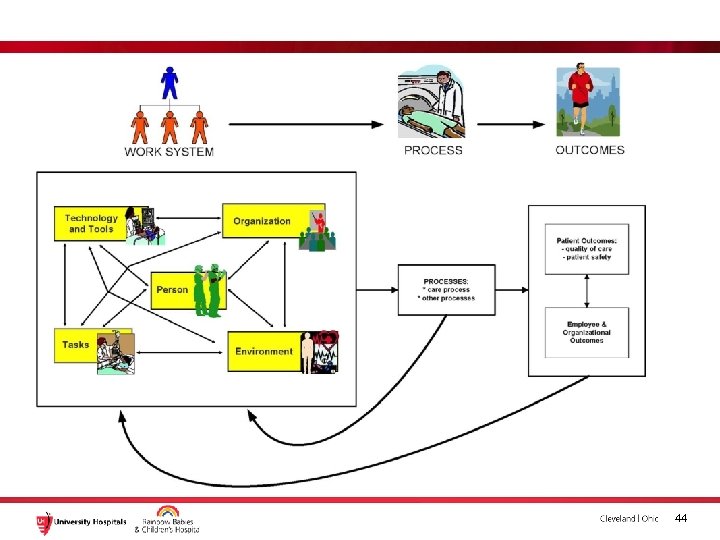

Take one of the most commonly discussed explanations for train accidents: "human error." While this may sound straightforward -- an accident caused by a mistake by a train operator such as a driver or engineer -- there are often a multitude of factors that led to that "error" taking place.

But train operators' behavior is conditioned by decisions made by work planners or managers, which might have resulted in poor workplace designs, an unbalanced workload, overly complicated operational processes, unsafe conditions, faulty maintenance, ineffective training, nonresponsive managerial systems or poor planning. As such, it is a gross oversimplification to attribute accidents to the actions of frontline operators.

Human Error?

HFE

HFE

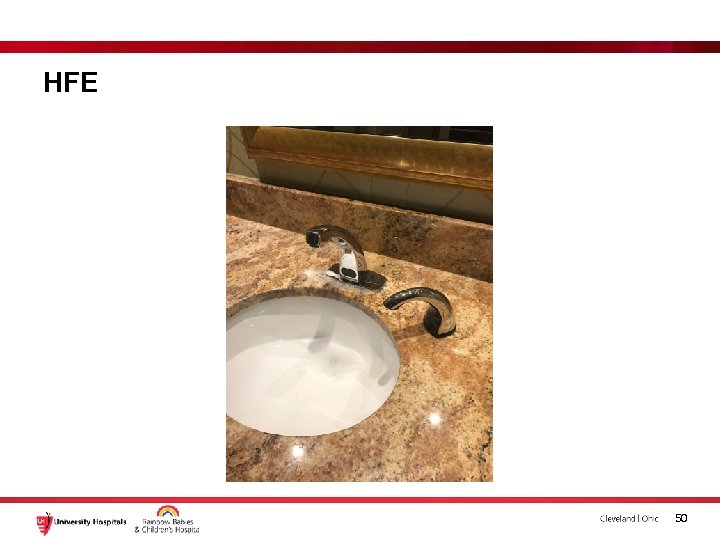

HFE

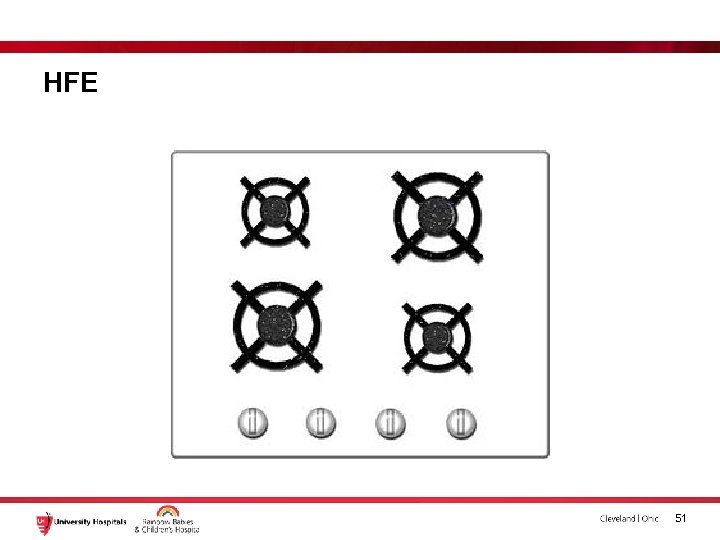

HFE

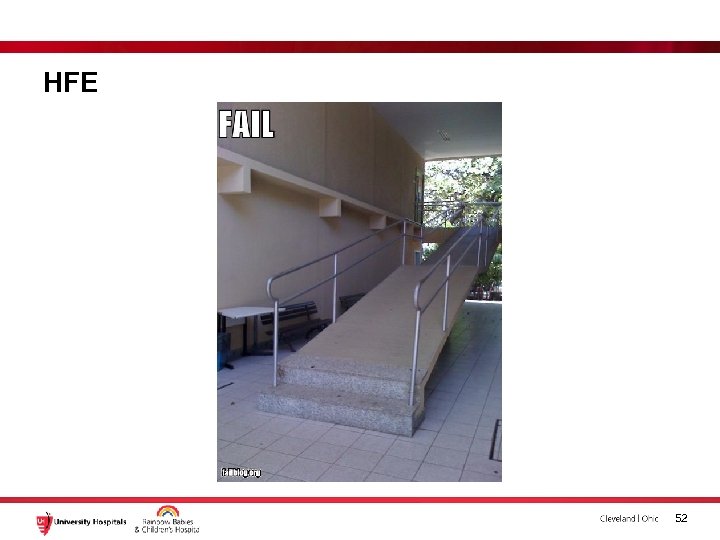

HFE

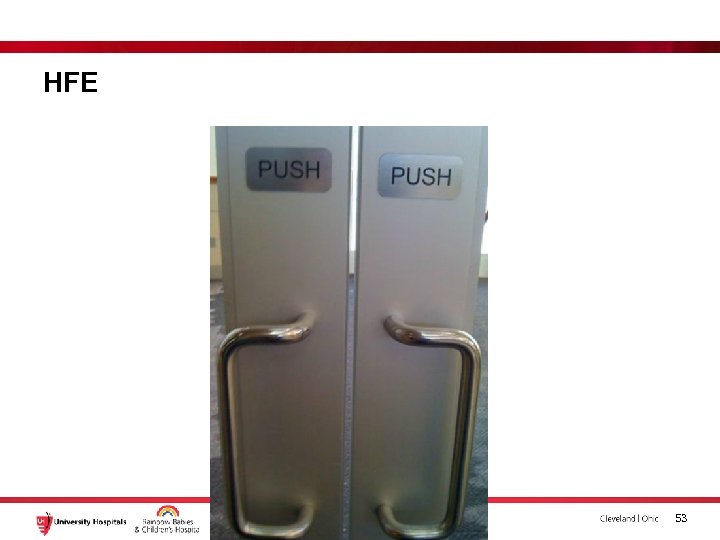

HFE

HFE

HFE

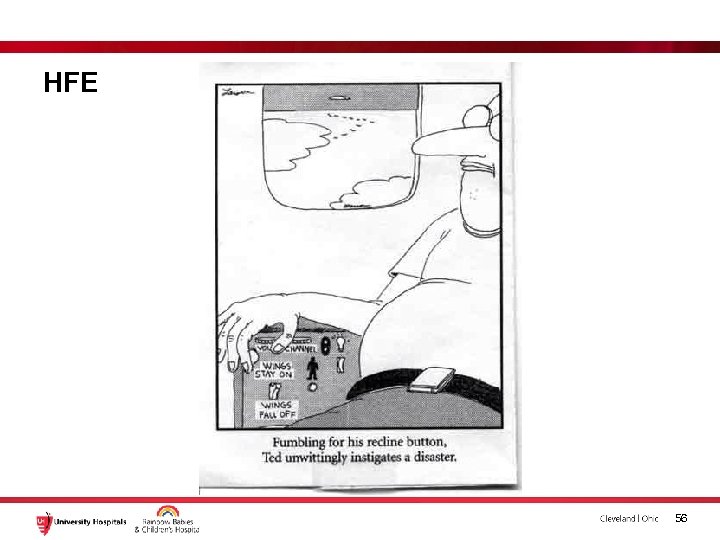

HFE

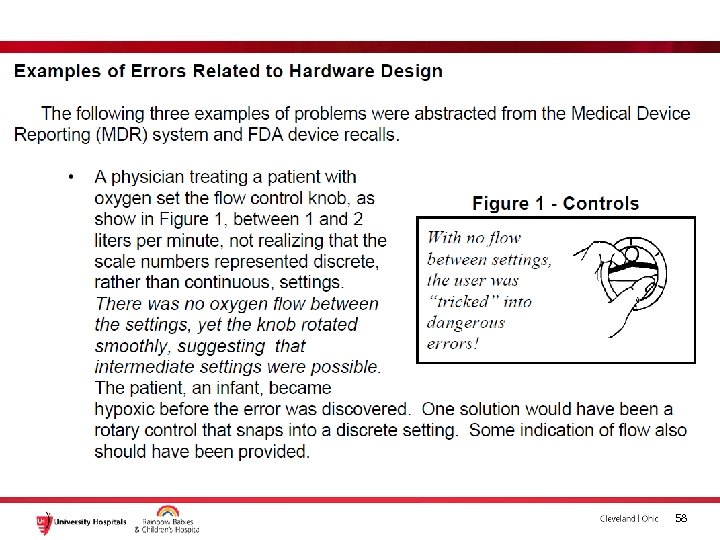

- A total of 16% of patients used the epinephrine autoinjector properly. Of the remaining 84%, 56% missed 3 or more steps (eFig 1A). The most common error was not holding the unit in place for at least 10 seconds after triggering. A total of 76% of erroneous users made this mistake (eFig 1B). Other common errors included failure to place the needle end of the device on the thigh and failure to depress the device forcefully enough to activate the injection. The least common error was failing to remove the cap before attempting to use the injector.
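
The percentages in this passage are nested: 56% and 76% are shares of the 84% who made at least one error, not of all patients. A short worked calculation makes the overall shares explicit. This is a sketch for illustration; the helper name `share_of_all` is hypothetical, and the only inputs are the three figures quoted above.

```python
# Figures quoted in the passage.
ERRONEOUS_PCT = 84.0          # patients who did not use the autoinjector properly
MISSED_3_PLUS_OF_ERR = 56.0   # share of erroneous users who missed 3 or more steps
DID_NOT_HOLD_OF_ERR = 76.0    # share of erroneous users who did not hold for 10 seconds


def share_of_all(share_of_erroneous_pct: float,
                 erroneous_pct: float = ERRONEOUS_PCT) -> float:
    """Convert a percentage of erroneous users into a percentage of all patients."""
    return erroneous_pct * share_of_erroneous_pct / 100.0


missed_three_or_more = share_of_all(MISSED_3_PLUS_OF_ERR)
did_not_hold = share_of_all(DID_NOT_HOLD_OF_ERR)

print(f"Missed 3 or more steps: {missed_three_or_more:.1f}% of all patients")
print(f"Did not hold for 10 s:  {did_not_hold:.1f}% of all patients")
```

Read this way, roughly 47% of all patients missed three or more steps, and roughly 64% of all patients did not hold the device in place long enough.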

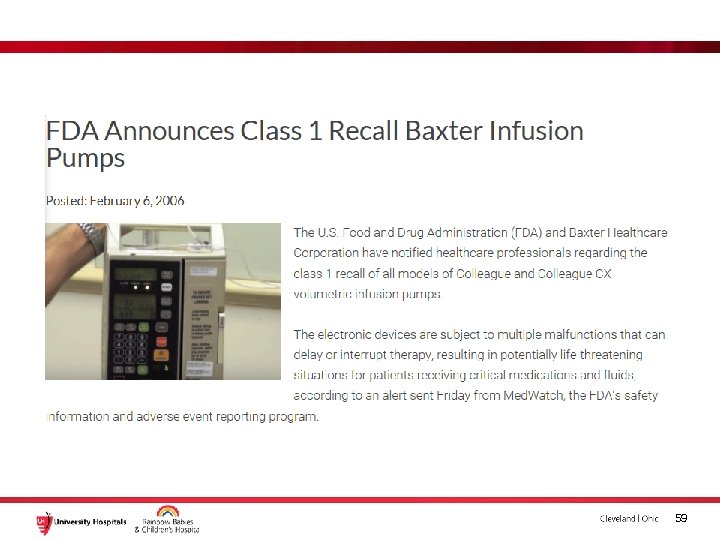

http://www.annallergy.org/article/S1081-1206(14)00752-2/abstract

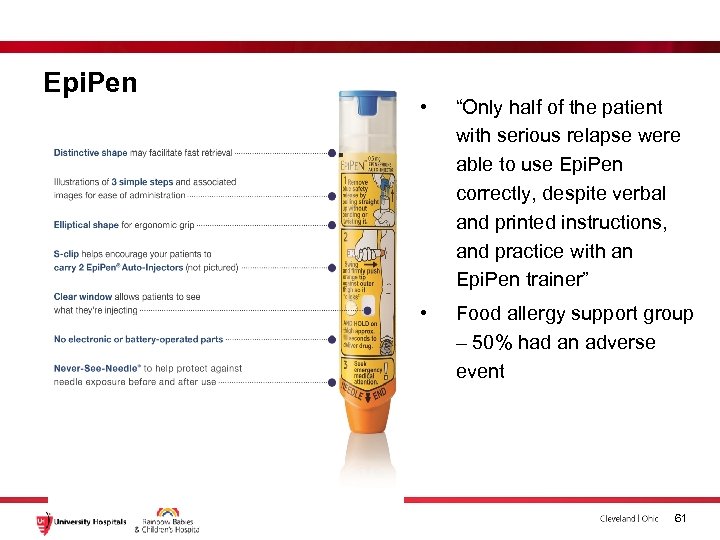

EpiPen

- “Only half of the patients with serious relapse were able to use EpiPen correctly, despite verbal and printed instructions, and practice with an EpiPen trainer”
- Food allergy support group
  - 50% had an adverse event

“…we strongly advocate not blaming clinicians for violations, but rather searching for a more systems-oriented causal explanation. It is, after all, the causes of violations that need remediation.”

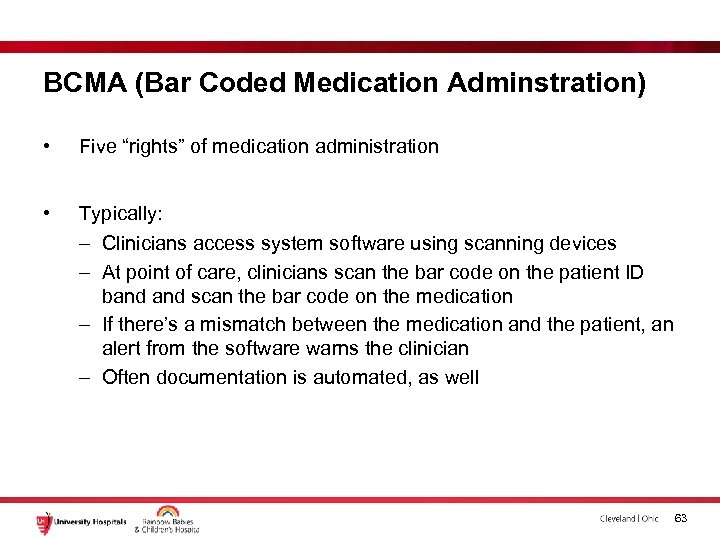

BCMA (Bar Coded Medication Administration)

- Five “rights” of medication administration
- Typically:
  - Clinicians access system software using scanning devices
  - At point of care, clinicians scan the bar code on the patient ID band and scan the bar code on the medication
  - If there’s a mismatch between the medication and the patient, an alert from the software warns the clinician
  - Often documentation is automated, as well
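
The scan-and-compare step described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how any particular BCMA product works: the `Order` record, the `check_scan` function, the identifiers, and the alert wording are all hypothetical, and a real system would also check dose, route, and time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """Hypothetical active medication order."""
    patient_id: str        # value encoded on the patient ID band
    medication_code: str   # value encoded on the medication package


def check_scan(orders: list[Order],
               scanned_patient_id: str,
               scanned_medication_code: str) -> tuple[bool, str]:
    """Compare one wristband scan and one medication scan against the orders."""
    patient_orders = [o for o in orders if o.patient_id == scanned_patient_id]
    if not patient_orders:
        return False, "ALERT: no active orders for scanned patient"
    if any(o.medication_code == scanned_medication_code for o in patient_orders):
        return True, "OK: medication matches an order for this patient"
    return False, "ALERT: medication does not match this patient's orders"


# Made-up example data for illustration.
orders = [Order("P001", "MED-A"), Order("P002", "MED-B")]

print(check_scan(orders, "P001", "MED-A"))  # matching scan
print(check_scan(orders, "P001", "MED-B"))  # medication ordered for another patient
```

The check only helps when both bar codes are actually scanned at the point of care, which is why workarounds to the scanning step matter for safety.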

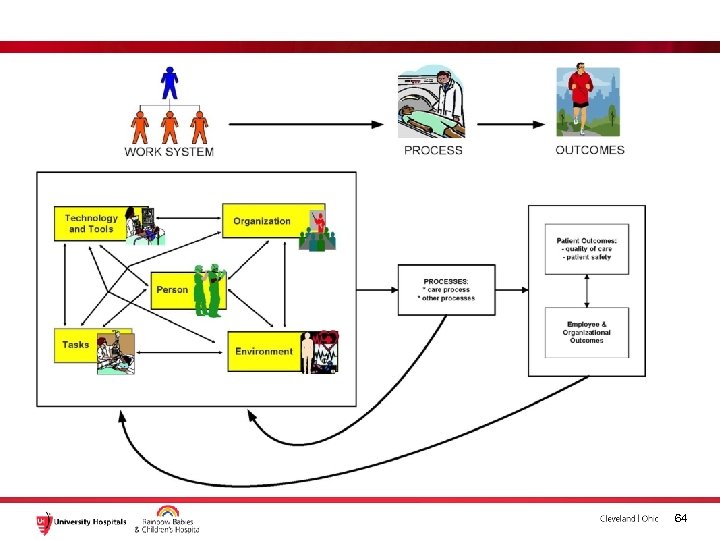

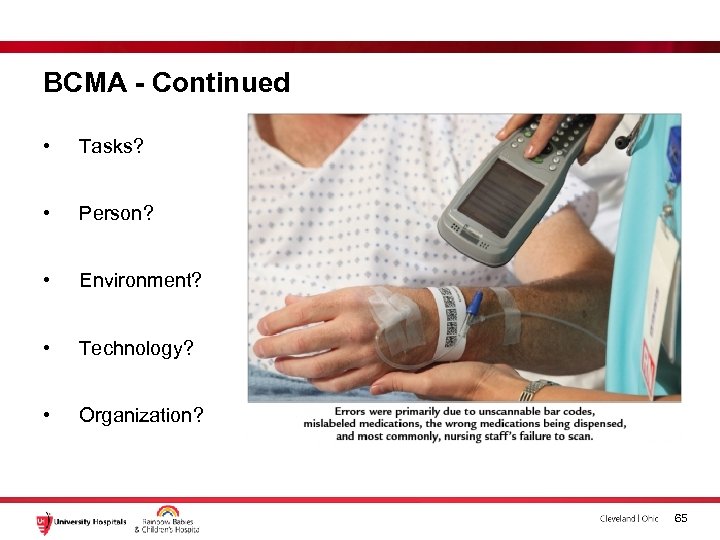

BCMA - Continued

- Tasks?
- Person?
- Environment?
- Technology?
- Organization?

BCMA - Continued

- Tasks
  - Potentially unsafe med admin
- Person
  - Patient in isolation, asleep, etc.
- Environment
  - Messy, insufficient light
- Technology
  - Automation surprises, malfunctioning equipment, meds don’t scan
- Organization
  - Interruptions

BCMA

- Violations?
  - So what happened? What happens?

Okay so now what?

- The focus moving forward should not be on individual error, but on identifying hazards and creating systems that support patient safety

Agenda

- Patient/Staff safety in healthcare
- Where we started
- How far have we come?
- Next Steps
- Culture
- Human factors ergonomics (HFE)
- Questions and such
