9f4e5d446ebfc7b8e69229a9396ff0f2.ppt

- Number of slides: 30

Predicting Student Emotions in Computer-Human Tutoring Dialogues
Diane J. Litman and Kate Forbes-Riley
University of Pittsburgh, PA 15260 USA

Motivation
- Bridge the learning gap between human tutors and computer tutors
- Our approach: add emotion prediction and adaptation to ITSPOKE, our Intelligent Tutoring SPOKEn dialogue system

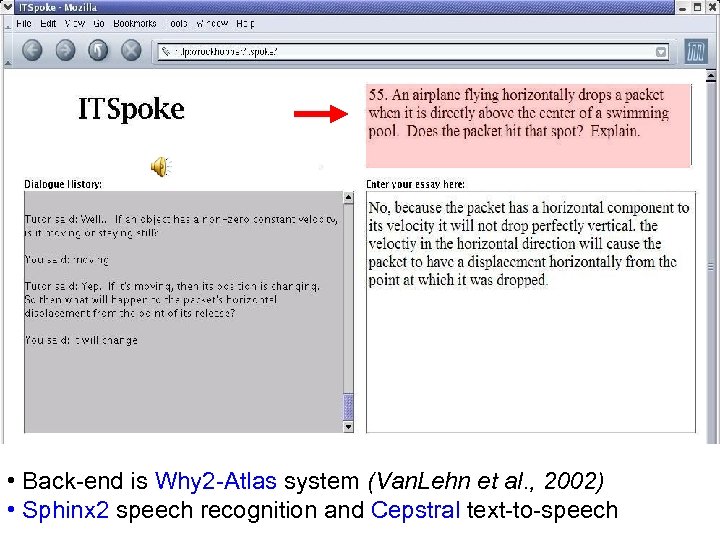

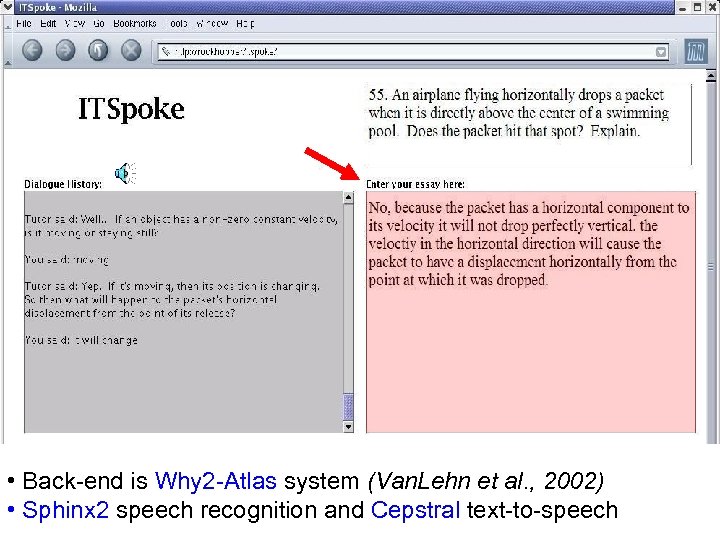

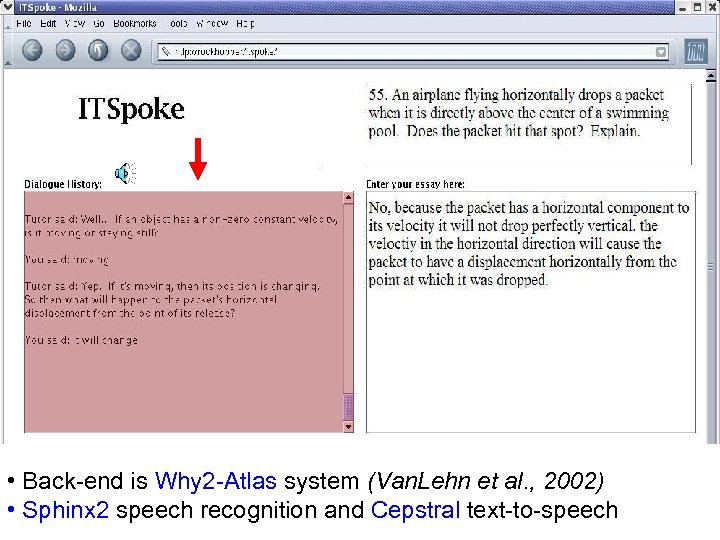

- Back-end is the Why2-Atlas system (VanLehn et al., 2002)
- Sphinx2 speech recognition and Cepstral text-to-speech

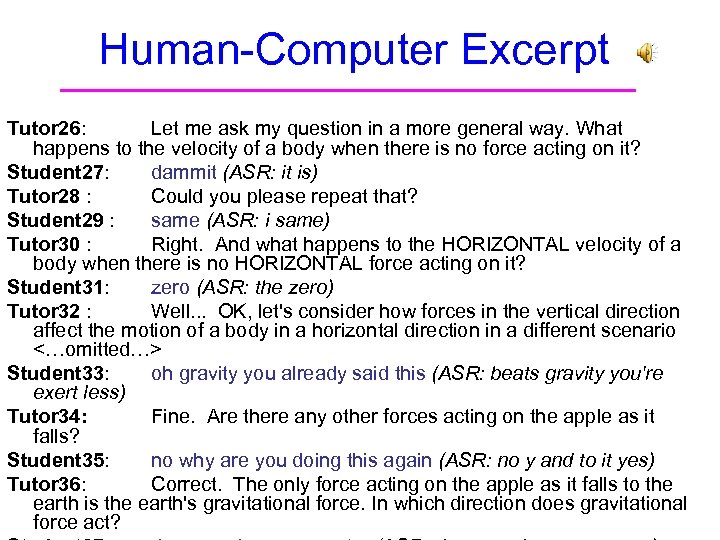

Human-Computer Excerpt
- Tutor 26: Let me ask my question in a more general way. What happens to the velocity of a body when there is no force acting on it?
- Student 27: dammit (ASR: it is)
- Tutor 28: Could you please repeat that?
- Student 29: same (ASR: i same)
- Tutor 30: Right. And what happens to the HORIZONTAL velocity of a body when there is no HORIZONTAL force acting on it?
- Student 31: zero (ASR: the zero)
- Tutor 32: Well... OK, let's consider how forces in the vertical direction affect the motion of a body in a horizontal direction in a different scenario <…omitted…>
- Student 33: oh gravity you already said this (ASR: beats gravity you're exert less)
- Tutor 34: Fine. Are there any other forces acting on the apple as it falls?
- Student 35: no why are you doing this again (ASR: no y and to it yes)
- Tutor 36: Correct. The only force acting on the apple as it falls to the earth is the earth's gravitational force. In which direction does gravitational force act?

Outline
- Data and Emotion Annotation
- Machine Learning Experiments
  - extract linguistic features from student turns
  - use different feature sets to predict emotions
    - 19-36% relative reduction of baseline error
  - comparison with human tutoring

ITSPOKE Dialogue Corpus
- 100 spoken tutoring dialogues (physics problems) with ITSPOKE
  - on average, 19.4 minutes and 25 student turns
- 20 subjects
  - university students who have never taken college physics and who are native speakers

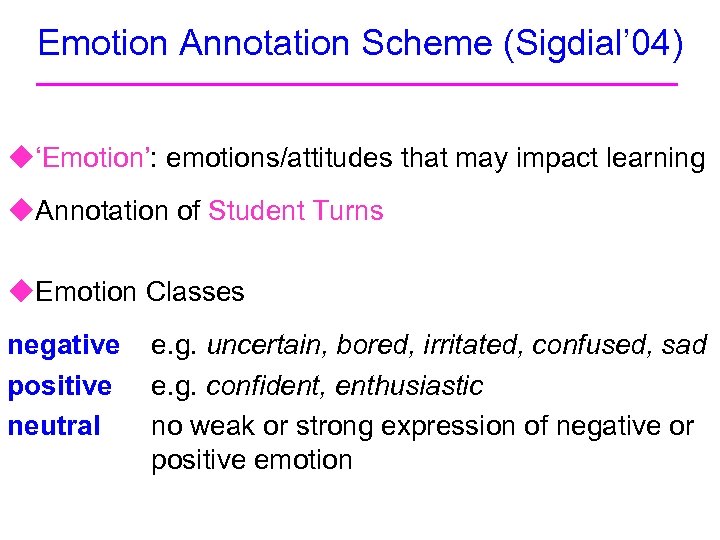

Emotion Annotation Scheme (SIGdial '04)
- 'Emotion': emotions/attitudes that may impact learning
- Annotation of student turns
- Emotion classes
  - negative: e.g. uncertain, bored, irritated, confused, sad
  - positive: e.g. confident, enthusiastic
  - neutral: no weak or strong expression of negative or positive emotion

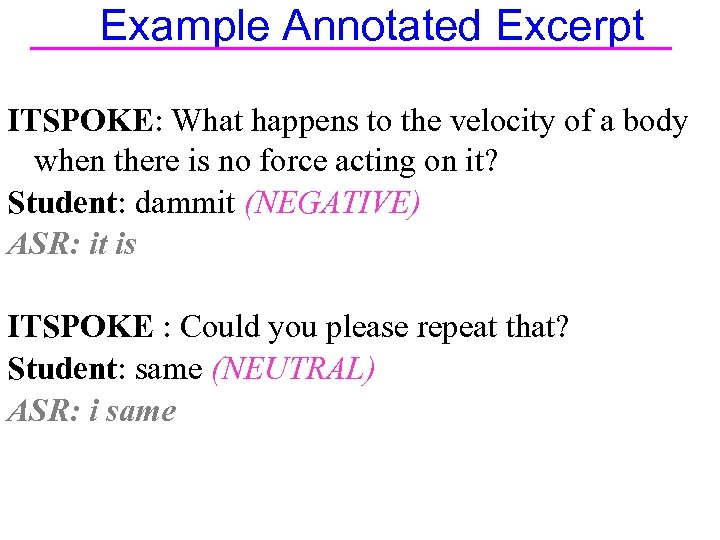

Example Annotated Excerpt
- ITSPOKE: What happens to the velocity of a body when there is no force acting on it?
- Student: dammit (NEGATIVE) ASR: it is
- ITSPOKE: Could you please repeat that?
- Student: same (NEUTRAL) ASR: i same

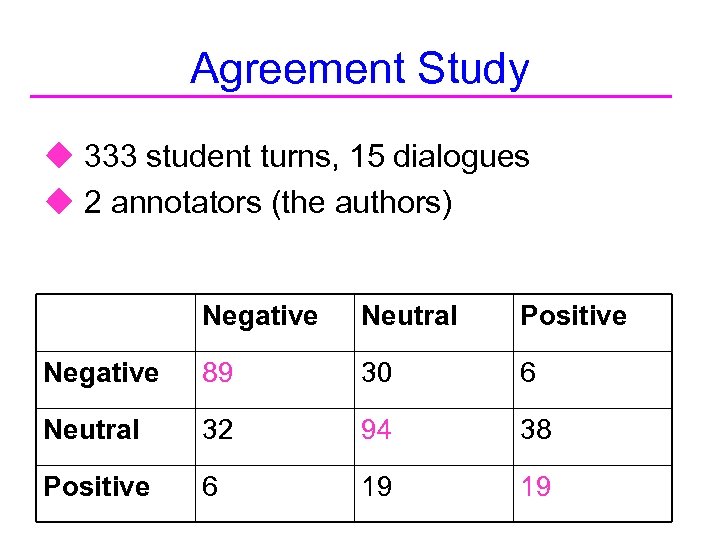

Agreement Study
- 333 student turns, 15 dialogues
- 2 annotators (the authors)

| | Negative | Neutral | Positive |
|---|---|---|---|
| Negative | 89 | 30 | 6 |
| Neutral | 32 | 94 | 38 |
| Positive | 6 | 19 | 19 |

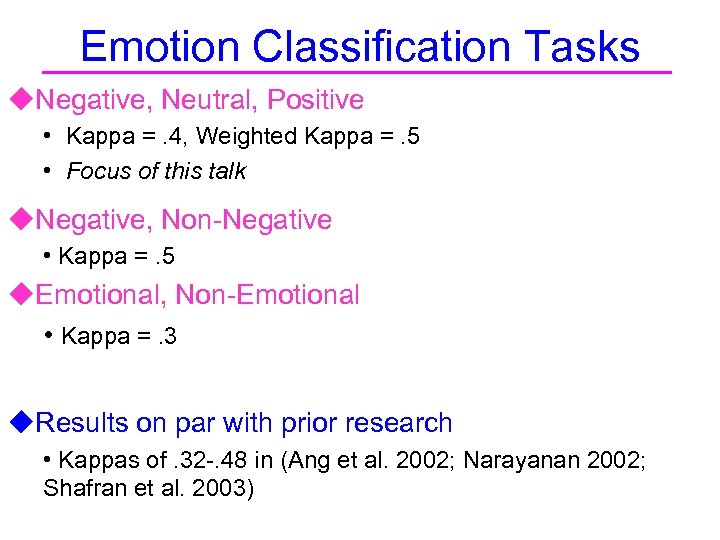

Emotion Classification Tasks
- Negative, Neutral, Positive
  - Kappa = .4, Weighted Kappa = .5
  - Focus of this talk
- Negative, Non-Negative
  - Kappa = .5
- Emotional, Non-Emotional
  - Kappa = .3
- Results on par with prior research
  - Kappas of .32-.48 in (Ang et al. 2002; Narayanan 2002; Shafran et al. 2003)
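
The unweighted Kappa values for the three tasks can be approximately recomputed from the 3x3 agreement matrix of the agreement study. The following is a minimal sketch, assuming standard Cohen's kappa; the helper names (`cohen_kappa`, `collapse`) are illustrative, and which annotator corresponds to rows or columns is not stated on the slides (the statistic is symmetric, so it does not matter here). The weighted Kappa is not computed because the slides do not say which weighting scheme was used.

```python
# Agreement matrix from the agreement study (333 student turns).
# Class order for rows and columns: negative, neutral, positive.
CONFUSION = [
    [89, 30, 6],
    [32, 94, 38],
    [6, 19, 19],
]


def cohen_kappa(matrix):
    """Unweighted Cohen's kappa for a square agreement matrix."""
    total = sum(sum(row) for row in matrix)
    # Observed agreement: share of turns on the diagonal.
    observed = sum(matrix[i][i] for i in range(len(matrix))) / total
    # Chance agreement: product of the two annotators' marginals.
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (observed - expected) / (1 - expected)


def collapse(matrix, groups):
    """Merge classes; groups[i] is the merged index of original class i."""
    size = max(groups) + 1
    merged = [[0] * size for _ in range(size)]
    for i, row in enumerate(matrix):
        for j, count in enumerate(row):
            merged[groups[i]][groups[j]] += count
    return merged


kappa_three_way = cohen_kappa(CONFUSION)
# Negative vs. non-negative: neutral and positive are merged.
kappa_negative = cohen_kappa(collapse(CONFUSION, [0, 1, 1]))
# Emotional vs. non-emotional: negative and positive are merged.
kappa_emotional = cohen_kappa(collapse(CONFUSION, [0, 1, 0]))

for name, value in [
    ("negative/neutral/positive", kappa_three_way),
    ("negative/non-negative", kappa_negative),
    ("emotional/non-emotional", kappa_emotional),
]:
    print(f"{name}: kappa = {value:.2f}")
```

Rounded to one decimal place, the three values agree with the Kappas of .4, .5 and .3 listed on the slide.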

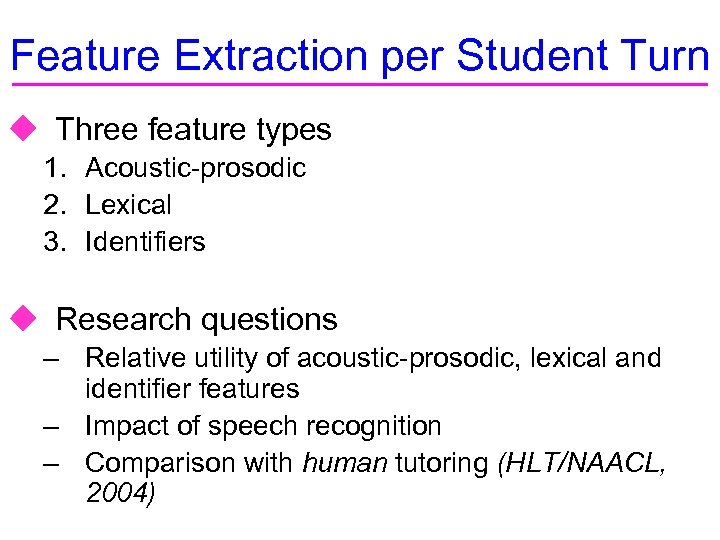

Feature Extraction per Student Turn
- Three feature types
  1. Acoustic-prosodic
  2. Lexical
  3. Identifiers
- Research questions
  - Relative utility of acoustic-prosodic, lexical and identifier features
  - Impact of speech recognition
  - Comparison with human tutoring (HLT/NAACL, 2004)

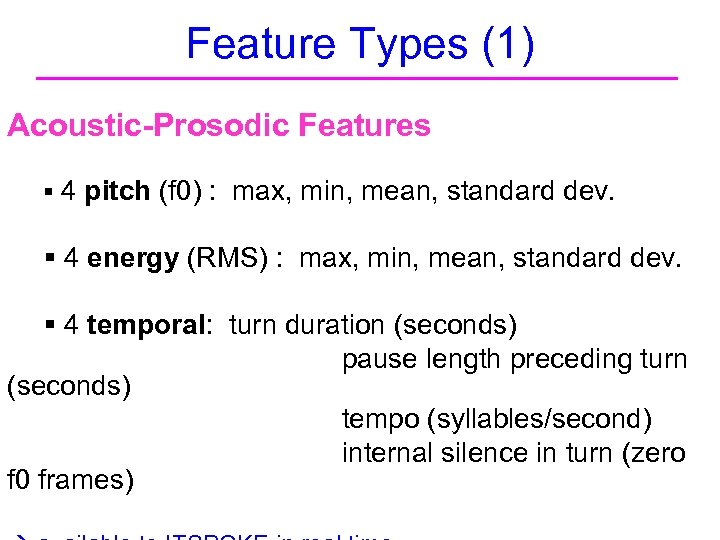

Feature Types (1)
Acoustic-Prosodic Features
- 4 pitch (f0): max, min, mean, standard dev.
- 4 energy (RMS): max, min, mean, standard dev.
- 4 temporal:
  - turn duration (seconds)
  - pause length preceding turn (seconds)
  - tempo (syllables/second)
  - internal silence in turn (zero f0 frames)
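
The twelve acoustic-prosodic features could be assembled per turn roughly as follows. This is only a sketch under stated assumptions: it presumes that frame-level f0 and RMS values have already been extracted by some pitch tracker, that pitch statistics are taken over voiced (non-zero f0) frames only, and that internal silence is expressed as the fraction of zero-f0 frames. The function and feature names are hypothetical and do not come from the slides.

```python
from statistics import mean, pstdev


def summary_stats(values, prefix):
    """Max, min, mean and standard deviation of a frame-level contour."""
    return {
        f"{prefix}_max": max(values),
        f"{prefix}_min": min(values),
        f"{prefix}_mean": mean(values),
        f"{prefix}_std": pstdev(values),
    }


def turn_features(f0, rms, duration_s, preceding_pause_s, n_syllables):
    """Build the 12 acoustic-prosodic features for one student turn.

    f0 and rms are per-frame values; f0 == 0 marks an unvoiced frame.
    """
    # Assumption: pitch statistics ignore unvoiced (zero f0) frames.
    voiced = [value for value in f0 if value > 0]
    features = {}
    features.update(summary_stats(voiced, "f0"))
    features.update(summary_stats(rms, "rms"))
    features["duration"] = duration_s
    features["preceding_pause"] = preceding_pause_s
    features["tempo"] = n_syllables / duration_s
    # Assumption: internal silence as the share of zero-f0 frames.
    features["internal_silence"] = sum(1 for v in f0 if v == 0) / len(f0)
    return features


# Toy input, invented purely for illustration.
example = turn_features(
    f0=[0.0, 180.0, 200.0, 220.0, 0.0],
    rms=[0.1, 0.4, 0.5, 0.3, 0.1],
    duration_s=2.0,
    preceding_pause_s=0.5,
    n_syllables=5,
)
print(example)
```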

Feature Types (2)
Word Occurrence Vectors
- Human-transcribed lexical items in the turn
- ITSPOKE-recognized lexical items
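
A word occurrence vector can be sketched as one binary indicator per vocabulary word. The example below assumes whitespace tokenization, lowercasing, and binary (rather than count) entries; none of these details are given on the slides. The turns are taken from the human-computer excerpt shown earlier in the talk, once as transcribed and once as recognized by ITSPOKE.

```python
def build_vocabulary(turns):
    """Sorted list of all distinct words seen in the given turns."""
    return sorted({word for turn in turns for word in turn.lower().split()})


def occurrence_vector(turn, vocabulary):
    """1 if the vocabulary word occurs in the turn, else 0."""
    words = set(turn.lower().split())
    return [1 if word in words else 0 for word in vocabulary]


# Student turns 27, 29 and 31 from the excerpt.
transcribed_turns = ["dammit", "same", "zero"]
recognized_turns = ["it is", "i same", "the zero"]

transcribed_vocab = build_vocabulary(transcribed_turns)
recognized_vocab = build_vocabulary(recognized_turns)

transcribed_vectors = [occurrence_vector(t, transcribed_vocab) for t in transcribed_turns]
recognized_vectors = [occurrence_vector(t, recognized_vocab) for t in recognized_turns]

print(transcribed_vocab, transcribed_vectors)
print(recognized_vocab, recognized_vectors)
```

The two vocabularies differ because recognition errors change which words appear, which is why the transcribed and recognized versions are treated as separate feature sets.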

Feature Types (3)
Identifier Features
- student id
- student gender
- problem id

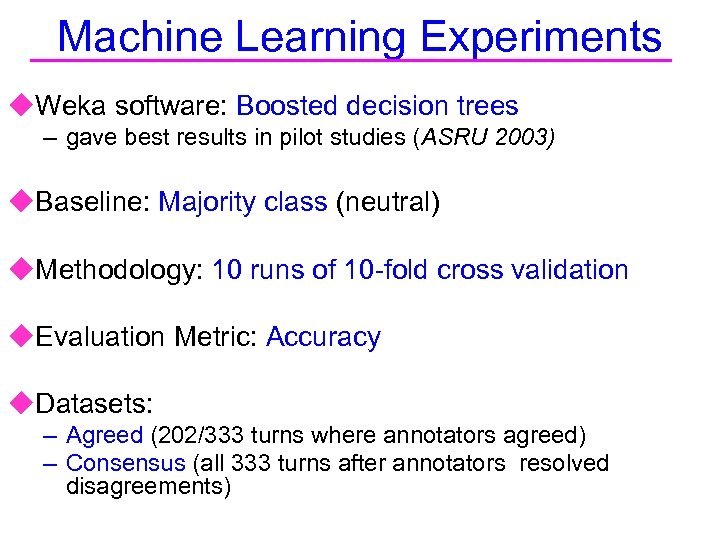

Machine Learning Experiments
- Weka software: boosted decision trees
  - gave best results in pilot studies (ASRU 2003)
- Baseline: majority class (neutral)
- Methodology: 10 runs of 10-fold cross validation
- Evaluation metric: accuracy
- Datasets:
  - Agreed (202/333 turns where annotators agreed)
  - Consensus (all 333 turns after annotators resolved disagreements)
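
The evaluation protocol (10 runs of 10-fold cross validation, scored by accuracy against a majority class baseline) can be illustrated with a small pure-Python sketch. It is not the Weka setup used in the experiments: the fold assignment, the random seed, and the function name `majority_baseline_cv` are assumptions made for illustration. The class counts for the agreed turns (89 negative, 94 neutral, 19 positive) are read off the diagonal of the agreement matrix.

```python
import random
from collections import Counter


def majority_baseline_cv(labels, runs=10, folds=10, seed=0):
    """Mean accuracy of a majority-class predictor over repeated k-fold CV."""
    rng = random.Random(seed)
    run_accuracies = []
    for _ in range(runs):
        # Each run uses a fresh random partition of the turns into folds.
        indices = list(range(len(labels)))
        rng.shuffle(indices)
        fold_accuracies = []
        for k in range(folds):
            test_idx = indices[k::folds]
            held_out = set(test_idx)
            train_labels = [labels[i] for i in indices if i not in held_out]
            # The "model" is simply the most frequent training label.
            majority = Counter(train_labels).most_common(1)[0][0]
            correct = sum(1 for i in test_idx if labels[i] == majority)
            fold_accuracies.append(correct / len(test_idx))
        run_accuracies.append(sum(fold_accuracies) / folds)
    return sum(run_accuracies) / runs


# Agreed turns: the 202 turns on the diagonal of the agreement matrix.
agreed_labels = ["negative"] * 89 + ["neutral"] * 94 + ["positive"] * 19
baseline_accuracy = majority_baseline_cv(agreed_labels)
print(f"majority baseline accuracy: {baseline_accuracy:.2%}")
```

Because neutral (94) and negative (89) turns are almost equally frequent, the majority label of a training split can occasionally flip, so a cross-validated baseline need not equal the plain ratio 94/202 exactly.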

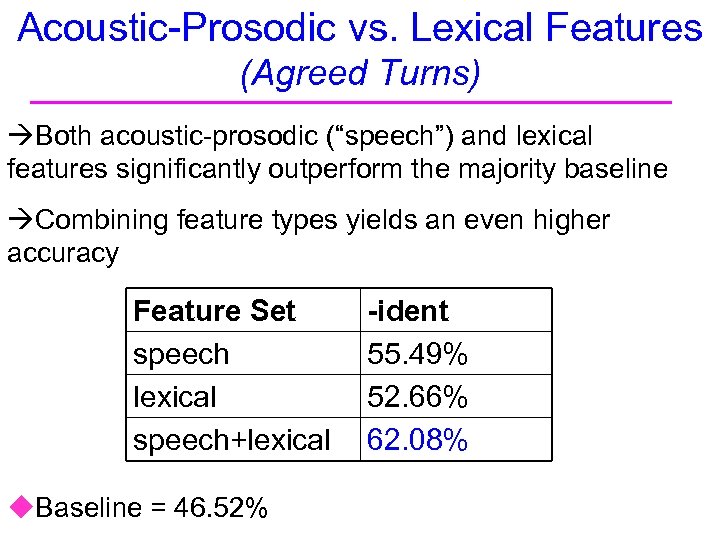

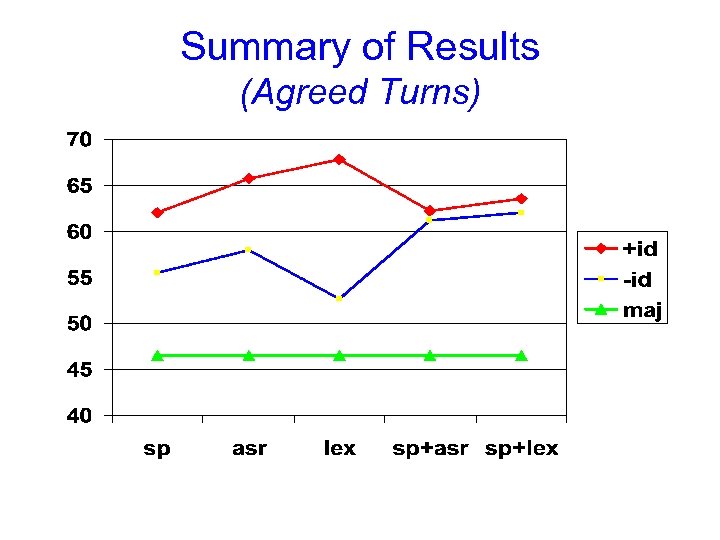

Acoustic-Prosodic vs. Lexical Features (Agreed Turns)
- Both acoustic-prosodic ("speech") and lexical features significantly outperform the majority baseline
- Combining feature types yields an even higher accuracy

| Feature Set | -ident |
|---|---|
| speech | 55.49% |
| lexical | 52.66% |
| speech+lexical | 62.08% |

- Baseline = 46.52%

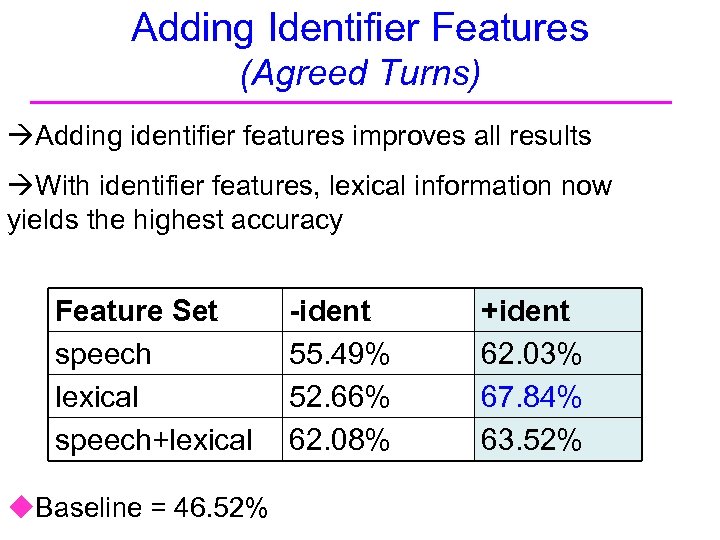

Adding Identifier Features (Agreed Turns)
- Adding identifier features improves all results
- With identifier features, lexical information now yields the highest accuracy

| Feature Set | -ident | +ident |
|---|---|---|
| speech | 55.49% | 62.03% |
| lexical | 52.66% | 67.84% |
| speech+lexical | 62.08% | 63.52% |

- Baseline = 46.52%
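
Accuracies such as these can also be expressed as a relative reduction of the baseline error, the measure mentioned in the outline. The sketch below assumes the usual definition, (baseline error - system error) / baseline error, and applies it to the agreed-turn accuracies of this slide; the function name is illustrative. The resulting values need not coincide with the range quoted in the outline, since the slides do not state which feature sets and dataset that range summarizes.

```python
BASELINE_ACCURACY = 46.52  # majority class baseline, agreed turns (%)

# Accuracies (%) from the slide: (feature set, identifier setting).
ACCURACY = {
    ("speech", "-ident"): 55.49,
    ("lexical", "-ident"): 52.66,
    ("speech+lexical", "-ident"): 62.08,
    ("speech", "+ident"): 62.03,
    ("lexical", "+ident"): 67.84,
    ("speech+lexical", "+ident"): 63.52,
}


def relative_error_reduction(accuracy, baseline):
    """Share of the baseline error removed by the system (both in %)."""
    baseline_error = 100.0 - baseline
    system_error = 100.0 - accuracy
    return (baseline_error - system_error) / baseline_error


reductions = {
    key: relative_error_reduction(value, BASELINE_ACCURACY)
    for key, value in ACCURACY.items()
}

for (feature_set, ident), reduction in reductions.items():
    print(f"{feature_set:15s} {ident}: {reduction:.1%}")
```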

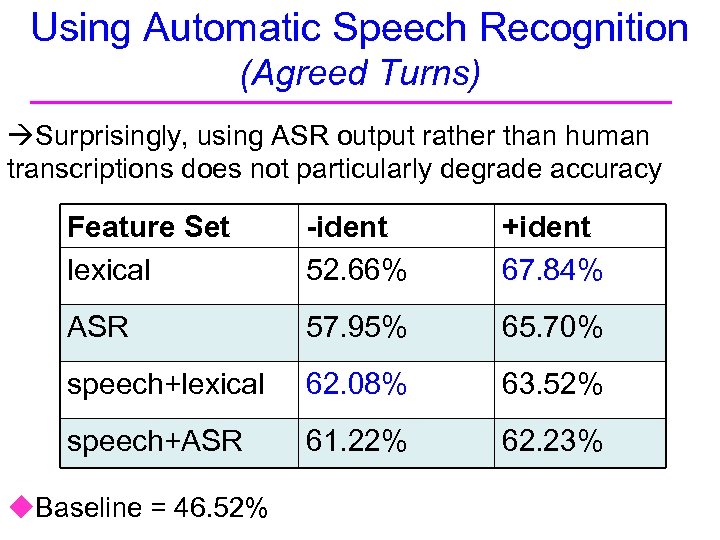

Using Automatic Speech Recognition (Agreed Turns)
- Surprisingly, using ASR output rather than human transcriptions does not particularly degrade accuracy

| Feature Set | -ident | +ident |
|---|---|---|
| lexical | 52.66% | 67.84% |
| ASR | 57.95% | 65.70% |
| speech+lexical | 62.08% | 63.52% |
| speech+ASR | 61.22% | 62.23% |

- Baseline = 46.52%

Summary of Results (Agreed Turns)

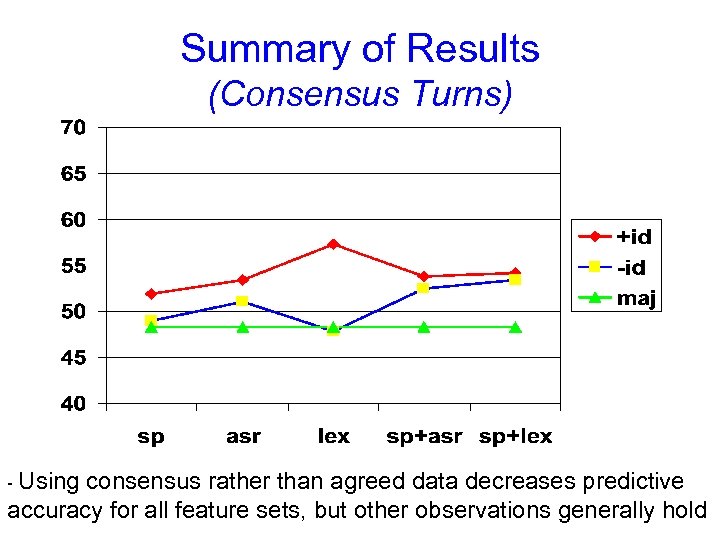

Summary of Results (Consensus Turns)
- Using consensus rather than agreed data decreases predictive accuracy for all feature sets, but other observations generally hold

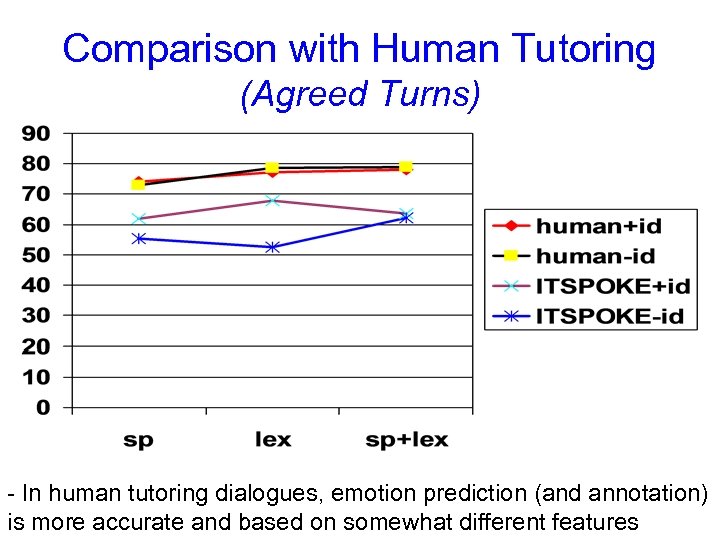

Comparison with Human Tutoring (Agreed Turns)
- In human tutoring dialogues, emotion prediction (and annotation) is more accurate and based on somewhat different features

Related Research in Emotional Speech
- Elicited speech (Polzin & Waibel 1998; Oudeyer 2002; Liscombe et al. 2003)
- Naturally-occurring speech (Ang et al. 2002; Lee et al. 2002; Batliner et al. 2003; Devillers et al. 2003; Shafran et al. 2003)
- Our work
  - naturally-occurring tutoring data
  - analysis of comparable human and computer corpora

Current Directions
- Develop adaptive strategies for ITSPOKE
  - annotate human tutor turns
  - evaluate ITSPOKE with emotion adaptation
- Co-training to address annotation bottleneck
  - Maeireizo, Litman, and Hwa: Saturday poster

Summary
- Recognition of annotated student emotions in spoken computer tutoring dialogues
- Feature sets containing acoustic-prosodic, lexical, and/or identifier features yield significant improvements in predictive accuracy compared to majority class baselines
  - role of differing feature types and speech recognition errors
  - comparable analysis of human tutoring dialogues
  - paper contains details regarding two other emotion prediction tasks
- This research is a first step towards implementing emotion prediction and adaptation in ITSPOKE

Thank You! Questions?

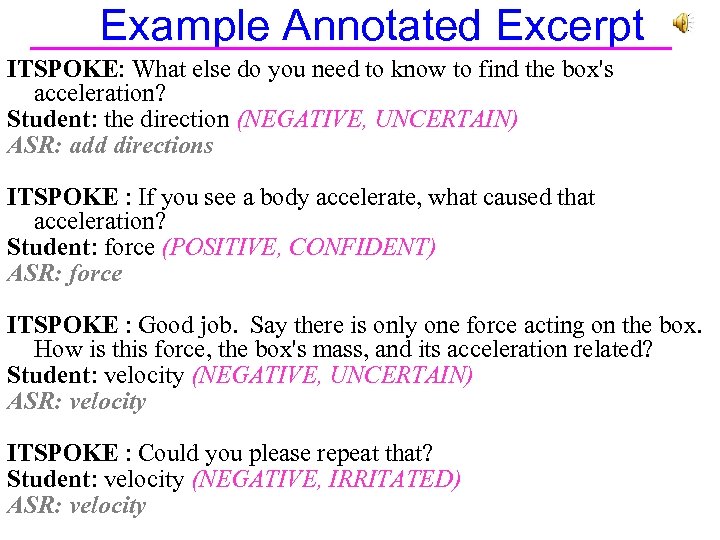

Example Annotated Excerpt
- ITSPOKE: What else do you need to know to find the box's acceleration?
- Student: the direction (NEGATIVE, UNCERTAIN) ASR: add directions
- ITSPOKE: If you see a body accelerate, what caused that acceleration?
- Student: force (POSITIVE, CONFIDENT) ASR: force
- ITSPOKE: Good job. Say there is only one force acting on the box. How is this force, the box's mass, and its acceleration related?
- Student: velocity (NEGATIVE, UNCERTAIN) ASR: velocity
- ITSPOKE: Could you please repeat that?
- Student: velocity (NEGATIVE, IRRITATED) ASR: velocity

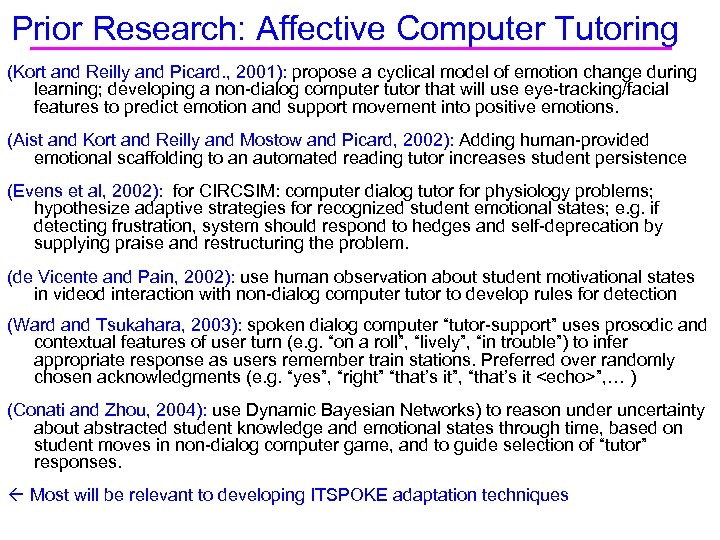

Prior Research: Affective Computer Tutoring
- (Kort, Reilly, and Picard, 2001): propose a cyclical model of emotion change during learning; developing a non-dialog computer tutor that will use eye-tracking/facial features to predict emotion and support movement into positive emotions.
- (Aist, Kort, Reilly, Mostow, and Picard, 2002): adding human-provided emotional scaffolding to an automated reading tutor increases student persistence.
- (Evens et al., 2002): for CIRCSIM, a computer dialog tutor for physiology problems; hypothesize adaptive strategies for recognized student emotional states; e.g. if detecting frustration, system should respond to hedges and self-deprecation by supplying praise and restructuring the problem.
- (de Vicente and Pain, 2002): use human observation about student motivational states in videoed interaction with a non-dialog computer tutor to develop rules for detection.
- (Ward and Tsukahara, 2003): spoken dialog computer “tutor-support” uses prosodic and contextual features of user turn (e.g. “on a roll”, “lively”, “in trouble”) to infer appropriate response as users remember train stations. Preferred over randomly chosen acknowledgments (e.g. “yes”, “right” “that’s it”, “that’s it

Experimental Procedure
- Students take a physics pretest
- Students read background material
- Students use the web and voice interface to work through up to 10 problems with either ITSPOKE or a human tutor
- Students take a post-test
