d24d564d898c140f77667fd386ae4d3a.ppt

- Количество слайдов: 12

Pre-processing text for web information retrieval purposes by splitting compounds into their morphemes Universität Oldenburg Fakultät für Informatik, Wirtschafts- und Rechtswissenschaften Abteilung Wirtschaftsinformatik Ammerländer Heerstr. 114 -118 26129 Oldenburg Tel. (0441) 798 -4480 Fax (0441) 798 -4472 www. wi-ol. de Sven Abels, Axel Hahn

Agenda What are compounds? Why do we need to split them? Special requirements in IR Existing solutions Introduction of our approach Problematic areas Evaluation results © Abels/Hahn, Wirtschaftsinformatik Oldenburg -2 -

j. Word. Splitter • What are compounds? – A word that consists of several connected sub words (morphemes) – Compounds can be found in most languages – English examples: summertime, doghouse, containership, ecosystem, eyeglasses, handshake, handwriting, houseboat, goldfish or fireplace. – German examples: Sonnenblume, Netzwerkkarte, Apfelkuchen, Wortzusammensetzung, Eisenbahn oder Donaudampfschiffartsanwärtermützenstoff. – Italian examples: Centopiedo, Ferrovia, Lavacristallo. – Finnish examples: sanakirja, tietokone, keskiviikko, maailma. –. . . – Compounds can be found in most languages – What differs from language to language is • a) The number of compounds used in a language • b) Their length (in English most compounds consist of 2 subwords; in German we may find large words consisting of up to 10 or more subwords). © Abels/Hahn, Wirtschaftsinformatik Oldenburg -3 -

j. Word. Splitter • What is the purpose of our approach? – To split/decompose compounds into their sub words "sunglasses" { "sun", "glasses" } "houseboat" { "house", "boat" } – In some languages we also need to change the words after they are decomposed because they use connecting chars in some cases. – Example: „Liebesgedicht“ (love poem) „Liebe-s-gedicht“ { „Liebe“, „Gedicht“ } (n. b. : NOT „Liebes“) – Our approach should be language independent (if case that language rules for word connections are known) © Abels/Hahn, Wirtschaftsinformatik Oldenburg -4 -

j. Word. Splitter • Why do we need a word decomposition? – It allows a better stemming of words – It allows an easier analysis of the text semantics – It allows us to easier find synonyms if necessary For example: – Stemming is important in many IR scenarios. – It transforms word into their basic form: houses house / swimming swim / … – Normally, stemming is based on an algorithm (Porter stemmer, etc. ). Hence, there are problems when stemming compounds. “sunglasses” will be stemmed to “sunglass” Problems appear whenever the first word is changed: “götterspeise” (jelly) is a problem because its first word is plural but it will not be recognized by a stemmer. It is necessary to split it into { “götter”, “speise” } to stem both morphemes correctly © Abels/Hahn, Wirtschaftsinformatik Oldenburg -5 -

j. Word. Splitter • What are special requirements for the IR domain? – In many cases we have to analyze ‘tons of data’ (e. g. search engines, etc. ) – Hence, we need a fast solution with a good quality – Quality has to be high but not for the price of speed – A low error rate is acceptable in many cases if we get a high speed solution – Furthermore, we have to look at special word connections such as numbers. – For example: File name: Baseball. Game 2005, 2 nd. Houseboat. Catalog, … • What about existing solutions? – Machinese Pharse Tagger (Connexor): commercial solution; takes almost a second per sentence – Si. Si (Commercial license): Not developed for splitting compounds but for performing a word hyphenation. It identified the “main hyphenation areas”, which are identical to word splitting results in most cases. Does not allow to look at words like 2 nd. Houseboat. Catalog. Is fast but it still takes twice as long as our approach. © Abels/Hahn, Wirtschaftsinformatik Oldenburg -6 -

j. Word. Splitter • How does our approach work? – – – – Realized as a Java library. Based on a word list for every language that should be supported. In the current realization: 206. 877 words in singular and plural The approach algorithm consists of three steps: 1. direct decomposition of a compound 2. truncation of the word from left to right and 3. truncation of the word from right to left In the first step we call a recursive method “find. Tupel(String word)” in order to split the word into morphemes. If no decomposition is found, we truncate the word from right to left and call find. Tupel again. We repeat the truncation for some characters and in case that we still didn’t find anything we truncate it from left to right. This enables us to decompose “Houseboat. Season 2005” correctly. © Abels/Hahn, Wirtschaftsinformatik Oldenburg -7 -

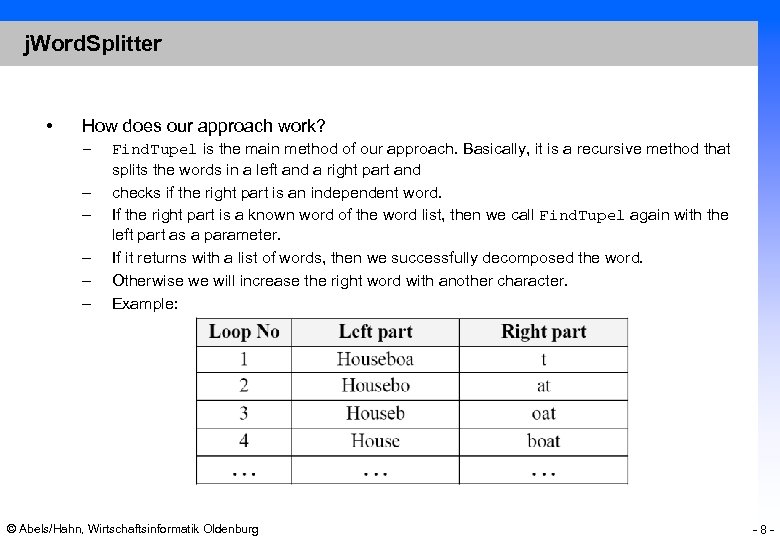

j. Word. Splitter • How does our approach work? – – – Find. Tupel is the main method of our approach. Basically, it is a recursive method that splits the words in a left and a right part and checks if the right part is an independent word. If the right part is a known word of the word list, then we call Find. Tupel again with the left part as a parameter. If it returns with a list of words, then we successfully decomposed the word. Otherwise we will increase the right word with another character. Example: © Abels/Hahn, Wirtschaftsinformatik Oldenburg -8 -

j. Word. Splitter • What are critical things in the approach? – There might be more then one possible decompositions for a word: “Wachstube” has two meanings and could be decomposed into – “Wachs” and “Tube” (“wax tube”) or into – “Wach” (guard) and “Stube” (house), which means “guardhouse”. Obviously, both decompositions are correct but have a different meaning. – – The approach will always choose the smallest possible word length of sub words The Evaluation has shown that small words in the word list corrupt the algorithm. For example: “he”, “it”, etc. are problems. For example, our approach would decompose the word “Laserdrucker” into “Las”, “Er”, “Druck”, “Er” (read-he-print-he) instead of “Laser”, “Drucker” (laser printer). – We added a minimum word length of 4 characters for the approach. This made the effect disappear in practical scenarios and has shown to deliver an optimal result A scientific check is still needed for this © Abels/Hahn, Wirtschaftsinformatik Oldenburg -9 -

j. Word. Splitter • What about the quality and the speed of j. Word. Splitter? We evaluated the results in many different test cases. Speed: – – – “Large” compounds (5 or more morphemes): 50. 000 words/min. 1 or 2 morphemes (“handwriting”, “sunglasses”): 150. 000 words/min. words with no sense (not splittable): 120. 000 words/min. (based on a 1. 5 Ghz PC; Java 1. 5) Quality: – – – We used a word set of 200 randomly chosen words with 456 morphemes: 89% have been decomposed completely and without any errors and about 94% have been either composed correctly or at least not been composed incorrectly. © Abels/Hahn, Wirtschaftsinformatik Oldenburg - 10 -

j. Word. Splitter • • Further research Two projects to test j. Word. Splitter: – 1: Classification of product descriptions (analyze product description) – – 2: Enhance search engine for measuring the benefits The benefits of j. Word. Splitter differ from language to language We want to preprocess text and then compare the search quality before and after we used j. Word. Splitter We will repeat this in 3 different languages (English, German, X) – Results will be available at the end of October http: //www. wi-ol. de/j. Word. Splitter (Download, Evaluation results, Open Source) © Abels/Hahn, Wirtschaftsinformatik Oldenburg - 11 -

Pre-processing text for web information retrieval purposes by splitting compounds into their morphemes Universität Oldenburg Fakultät für Informatik, Wirtschafts- und Rechtswissenschaften Abteilung Wirtschaftsinformatik Ammerländer Heerstr. 114 -118 26129 Oldenburg Tel. (0441) 798 -4480 Fax (0441) 798 -4472 www. wi-ol. de Sven Abels, Axel Hahn

d24d564d898c140f77667fd386ae4d3a.ppt