- Number of slides: 25

POZNAŃ SUPERCOMPUTING AND NETWORKING

Distributed Systems

- Definitions
- Requirements for distributed environments
- IBM SP2 POE
- T3E system
- DCE

Mirosław Kupczyk, miron@man.poznan.pl

Why Distributed Computing?

- Historical reason: computing resources that used to operate independently now need to work together. For example, consider an office that acquired personal workstations for individual use. After a while, there were many workstations in the office building, and the users recognized that it would be desirable to share data and resources among the individual computers. They accomplished this by connecting the workstations over a network.
- Functional reason: if special-function hardware or software is available over the network, that functionality does not have to be duplicated on every computer system (or node) that needs to access the special-purpose resource. For example, an organization could make a typesetting service available over the network, allowing users throughout the organization to submit their jobs to be typeset.

Why Distributed Computing?

- Economic reason: it may be more cost-effective to have many small computers working together than one large computer of equivalent power. In addition, having many units connected to a network is the more flexible configuration: if more resources are needed, another unit can be added in place, rather than bringing the whole system down and replacing it with an upgraded one.
- Reliability reason: a distributed system can be more reliable and available than a centralized system, because both data and functionality can be replicated. For example, when a given file is copied onto two different machines, the file can still be accessed on one machine even if the other is unavailable. Likewise, if several printers are attached to a network, users can still print their files on an alternate printer even if an administrator takes one printer offline for maintenance.

Definitions

- Node, PE: A node refers to a single machine running its own copy of the operating system. A node has a unique network name/address. Within a parallel system, a node can be booted and configured independently, or cooperatively with other nodes. For SMP machines, a node may contain multiple CPUs.
- SMP: Symmetric Multi-Processor. A computer (single machine) that has multiple CPUs configured to share and arbitrate access to shared memory. A single copy of the operating system serves all CPUs.
- Pool, domain: A pool is an arbitrary collection of nodes assigned by the system managers. Pools are typically used to separate nodes into disjoint groups, each of which is used for a specific purpose. For example, on a given system, some nodes may be designated as "login" nodes, while others are reserved for "batch" or "testing" use only.
- Partition: The group of nodes on which you run your parallel program is called your partition. Typically, there are multiple active partitions for multiple users across a system. Depending on how a given system is configured, the nodes in your partition may or may not be shared with other users' partitions.

Definitions

- Home Node / Remote Node: For interactive use, your home node is the node you are logged into and where you start your job. A remote node is any other (non-home) node in your partition. (Technically speaking, the home node may or may not be considered part of your partition.)
- Job Manager: When you request nodes to run your parallel job, the Job Manager finds and allocates nodes for your use. The Job Manager also enables user jobs to take advantage of multiple-CPU SMP nodes, and keeps track of how the switch is used for communications.
- User Space Protocol: Often referred to simply as the US protocol. The fastest method for intertask MPI communications. Only one user may use US communications on a node at any given time. It can only be conducted over the SP switch.
- Internet Protocol: Often referred to simply as the IP protocol. A slower but more flexible method of intertask MPI communications. Multiple users can all use IP communications on a node at the same time.

Definitions

- Non-Specific Node Allocation: The Job Manager automatically selects which nodes will be used to run your parallel job. Non-specific node allocation is usually the recommended (and default) method of node allocation. For batch jobs, this is typically the only method of node allocation available.
- Specific Node Allocation: Enables the user to explicitly choose which nodes will be used to run a job. Requires the use of a "host list file", which contains the actual names of the nodes that must be used. Specific node allocation is only for interactive use, and is recommended only when there is a reason for selecting specific nodes.
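
The difference between the two allocation modes can be illustrated with a small Python sketch. It does not reproduce how the Job Manager is implemented: the `allocate` function, the node names, and the in-memory set of free nodes are all hypothetical, and the host list file is modelled simply as a list of node names.

```python
def allocate(free_nodes, count=None, host_list=None):
    """Return the nodes granted to a job and remove them from free_nodes.

    Hypothetical model: free_nodes is a set of node names.
    - host_list given  -> specific allocation: exactly those nodes, or fail.
    - host_list absent -> non-specific allocation: any `count` free nodes.
    """
    if host_list is not None:
        missing = [n for n in host_list if n not in free_nodes]
        if missing:
            raise RuntimeError(f"requested nodes not available: {missing}")
        chosen = list(host_list)
    else:
        if count is None or count > len(free_nodes):
            raise RuntimeError("not enough free nodes")
        chosen = sorted(free_nodes)[:count]  # deterministic pick for the example
    free_nodes.difference_update(chosen)
    return chosen


free = {"node01", "node02", "node03", "node04"}
auto = allocate(free, count=2)                  # non-specific allocation
picked = allocate(free, host_list=["node04"])   # specific allocation
```

In the non-specific case the caller states only how many nodes are needed; in the specific case the request fails outright if any named node is busy, which is one reason the automatic mode is usually preferable.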

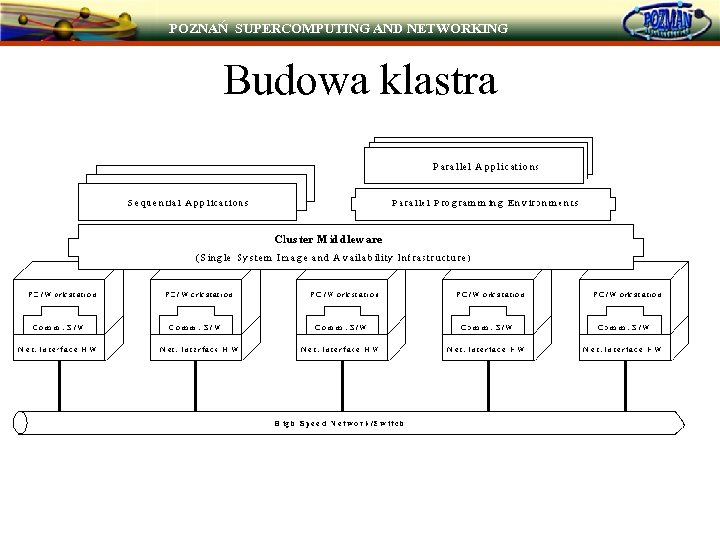

Cluster architecture

Cluster types

- High-availability clusters, whose task is to keep the system running continuously and to shift the load onto standby nodes in the event of a failure (e.g. web servers, e-commerce).
- Compute clusters (capability clusters), whose task is the parallel processing of applications for scientific, engineering, or design purposes. Efficient mechanisms for communication between nodes are required so that a high degree of parallelism (fine granularity) can be exploited. Compute clusters are usually dedicated to a specific application; programs are executed sequentially and do not compete with each other for access to resources.
- Scalable clusters (scalability clusters), whose task is to improve the efficiency of program execution by assigning nodes to applications appropriately. They require management software that provides job launching, load balancing, load analysis, and job management. Any distributed jobs can exploit parallelism at the level of procedures and modules.

Middleware

- It frees the end user from having to know where an application will run.
- It frees the operator from having to know where a resource (an instance of a resource) is located.
- It allows system management and control to be centralized or decentralized, avoiding the need for skilled administrators for system administration.
- It greatly simplifies system management; actions affecting multiple resources can be achieved with a single command, even where the resources are spread among multiple systems on different machines.
- It provides location-independent message communication. Because SSI provides a dynamic map of the message routing as it occurs in reality, the operator can always be sure that actions will be performed on the current system.
- It helps track the locations of all resources, so that system operators no longer need to be concerned with their physical location while carrying out system management tasks.

Resource Management and Scheduling

RMS environments provide middleware services to users that should enable heterogeneous environments of workstations, SMPs, and dedicated parallel platforms to be easily and efficiently utilized. The services provided by an RMS environment can include:

- Process Migration - A process can be suspended, moved, and restarted on another computer within the RMS environment. Generally, process migration occurs for one of two reasons: a computational resource has become too heavily loaded while other free resources could be utilized, or as part of minimizing the impact on interactive users.
- Checkpointing - A snapshot of an executing program's state is saved and can be used to restart the program from the same point at a later time if necessary. Checkpointing is generally regarded as a means of providing reliability. When some part of an RMS environment fails, the programs executing on it can be restarted from some intermediate point in their run, rather than from scratch.
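
Checkpoint and restart can be sketched in a few lines of Python. This is only an application-level illustration under stated assumptions, not the mechanism used by any particular RMS: the function name `run_with_checkpoints`, the `fail_at` parameter that simulates a node failure, and the use of `pickle` files for the snapshot are all choices made for this example.

```python
import os
import pickle
import tempfile


def run_with_checkpoints(total_steps, path, fail_at=None):
    """Sum 0..total_steps-1, saving state after every step.

    If a checkpoint file exists at `path`, resume from it instead of
    starting over. `fail_at` simulates a crash for the demonstration.
    """
    if os.path.exists(path):
        with open(path, "rb") as f:
            state = pickle.load(f)
    else:
        state = {"step": 0, "total": 0}

    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated node failure")
        state["total"] += state["step"]
        state["step"] += 1
        # Write to a temporary file, then rename, so a crash during the
        # write never leaves a half-written checkpoint behind.
        tmp = path + ".tmp"
        with open(tmp, "wb") as f:
            pickle.dump(state, f)
        os.replace(tmp, path)
    return state["total"]


ckpt = os.path.join(tempfile.mkdtemp(), "job.ckpt")
try:
    run_with_checkpoints(10, ckpt, fail_at=6)   # crashes after 6 steps
except RuntimeError:
    pass
result = run_with_checkpoints(10, ckpt)         # resumes at step 6
```

The second call does not repeat the first six steps; it picks up the saved state and finishes the remaining four. Process migration can be seen as the same idea with the restart happening on a different machine.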

Resource Management and Scheduling

- Scavenging Idle Cycles - It is generally recognized that most workstations are idle between 70 percent and 90 percent of the time. RMS systems can be set up to utilize idle CPU cycles. For example, jobs can be submitted to workstations during the night or at weekends. This way, interactive users are not impacted by external jobs and idle CPU cycles can be taken advantage of.
- Fault Tolerance - By monitoring its jobs and resources, an RMS system can provide various levels of fault tolerance. In its simplest form, fault-tolerant support can mean that a failed job can be restarted or rerun, thus guaranteeing that the job will be completed.
- Minimization of Impact on Users - Running a job on public workstations can have a great impact on the usability of the workstations by interactive users. Some RMS systems attempt to minimize the impact of a running job on interactive users by either reducing a job's local scheduling priority or suspending the job. Suspended jobs can be restarted later or migrated to other resources in the system.
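
The simplest form of fault tolerance mentioned above, rerunning a failed job, might look like the following Python sketch. The names `run_with_restarts` and `flaky_job` are hypothetical, and the cap on attempts is an assumption added for the example; a real RMS would also have to detect failures of the node itself, which this sketch does not model.

```python
def run_with_restarts(job, max_attempts=3):
    """Run job(); on failure, rerun it up to max_attempts times in total.

    Returns (result, attempts_used).
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return job(), attempt
        except Exception:
            if attempt == max_attempts:
                raise  # retry budget exhausted: report the failure


calls = {"n": 0}


def flaky_job():
    """Hypothetical job that fails twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("node went down")
    return "done"


value, attempts = run_with_restarts(flaky_job)
```

With a bounded number of attempts, completion is guaranteed only if the job succeeds within that budget, so the sketch is somewhat weaker than the guarantee stated in the slide.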

Resource Management and Scheduling

- Load Balancing - Jobs can be distributed among all the computational platforms available in a particular organization. This allows efficient and effective usage of all the resources, rather than only the few that users happen to be aware of. Process migration can also be part of the load balancing strategy, where it may be beneficial to move processes from overloaded systems to lightly loaded ones.
- Multiple Application Queues - Job queues can be set up to help manage the resources at a particular organization. Each queue can be configured with certain attributes. For example, certain users can have priority, or short jobs can be run before long jobs. Job queues can also be set up to manage the usage of specialized resources, such as a parallel computing platform or a high-performance graphics workstation. The queues in an RMS system can be transparent to users; jobs are allocated to them via keywords specified when the job is submitted.
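
One common way to balance load is to send each incoming job to whichever machine currently has the least work. The Python sketch below shows that greedy policy; it is an illustration only, and the `balance` function, the job names, their costs, and the workstation names are all made up for the example rather than taken from any RMS product.

```python
import heapq


def balance(jobs, nodes):
    """Assign each (name, cost) job, in submission order, to the
    currently least-loaded node. Returns (placement, final loads)."""
    heap = [(0, node) for node in nodes]   # entries are (current load, node)
    heapq.heapify(heap)
    placement = {}
    for name, cost in jobs:
        load, node = heapq.heappop(heap)   # least-loaded node right now
        placement[name] = node
        heapq.heappush(heap, (load + cost, node))
    loads = {node: load for load, node in heap}
    return placement, loads


jobs = [("render", 8), ("compile", 2), ("backup", 4), ("report", 1)]
placement, loads = balance(jobs, ["ws1", "ws2"])
```

Here the long `render` job occupies `ws1` while the three shorter jobs are stacked on `ws2`, leaving the two machines with loads of 8 and 7. A queue policy such as "short jobs before long jobs" would correspond to sorting `jobs` before the loop.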

Single system image

- Single Point of Entry: A user can connect to the cluster as a single system (like telnet beowulf.myinstitute.edu), instead of connecting to individual nodes as in the case of distributed systems (like telnet node1.beowulf.myinstitute.edu).
- Single File Hierarchy (SFH): On entering the system, the user sees the file system as a single hierarchy of files and directories under the same root directory. Examples: xFS and Solaris MC Proxy.
- Single Point of Management and Control: The entire cluster can be monitored or controlled from a single window using a single GUI tool, much like an NT workstation managed by the Task Manager tool, or PARMON monitoring the cluster resources.
- Single Virtual Networking: Any node can access any network connection throughout the cluster domain, even if the network is not physically connected to all nodes in the cluster.
- Single Memory Space: This provides the illusion of shared memory over the memories associated with the nodes of the cluster.
- Single Job Management System: A user can submit a job from any node using a transparent job submission mechanism. Jobs can be scheduled to run in batch, interactive, or parallel mode. Example systems include LSF and CODINE.
- Single User Interface: The user should be able to use the cluster through a single GUI. The interface must have the same look and feel as an interface that is available for workstations (e.g., Solaris OpenWin or Windows NT GUI).

Single system image

Availability Support Functions

- Single I/O Space (SIOS): This allows any node to perform I/O operations on local or remotely located peripheral or disk devices. In this SIOS design, disks associated with cluster nodes, RAIDs, and peripheral devices form a single address space.
- Single Process Space: Processes have a unique cluster-wide process ID. A process on any node can create child processes on the same or a different node (through a UNIX fork) or communicate with any other process (through signals and pipes) on a remote node. The cluster should support globalized process management and allow the management and control of processes as if they were running on local machines.
- Checkpointing and Process Migration: Checkpointing mechanisms allow a process state and intermediate computing results to be saved periodically. When a node fails, processes on the failed node can be restarted on another working

Parallel Operating Environment

- Parallel Operating Environment (POE): an environment for parallel work
- Simplifies launching parallel programs
- A single point of management: one console shared by all processes
- Simple configuration through environment variables (or parameters)
- MPL, MPI, custom parallel programs, or even serial ones

Poznańskie Centrum Superkomputerowo-Sieciowe

Parallel Operating Environment

The POE consists of parallel compiler scripts, POE environment variables, parallel debugger(s) and profiler(s), MPL, and parallel visualization tools. These tools allow one to develop, execute, profile, debug, and fine-tune parallel code.

- The Partition Manager controls a partition, the group of nodes on which you wish to run your program. It requests the nodes for your parallel job, acquires the nodes necessary for that job (if the Resource Manager is not used), copies the executables from the initiating node to each node in the partition, loads the executables on every node in the partition, and sets up standard I/O.
- The Resource Manager keeps track of the nodes currently processing a parallel task and, when nodes are requested by the Partition Manager, allocates nodes for use. The Resource Manager attempts to enforce a "one parallel task per node" rule.
- The Processor Pools are sets of nodes dedicated to a particular type of process (such as interactive, batch, or I/O-intensive) which have been grouped together by the system administrator(s).

Cray T3E

Processors are divided into domains of three types:

- system
- application
- interactive (command)

There may be several domains of each type.

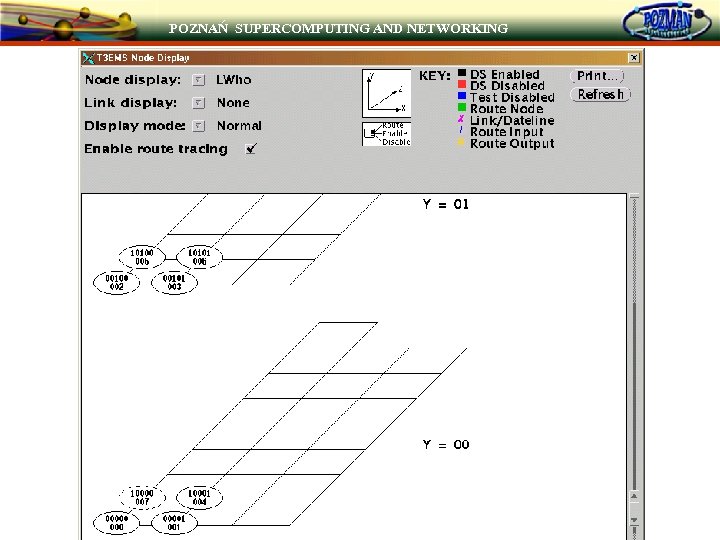

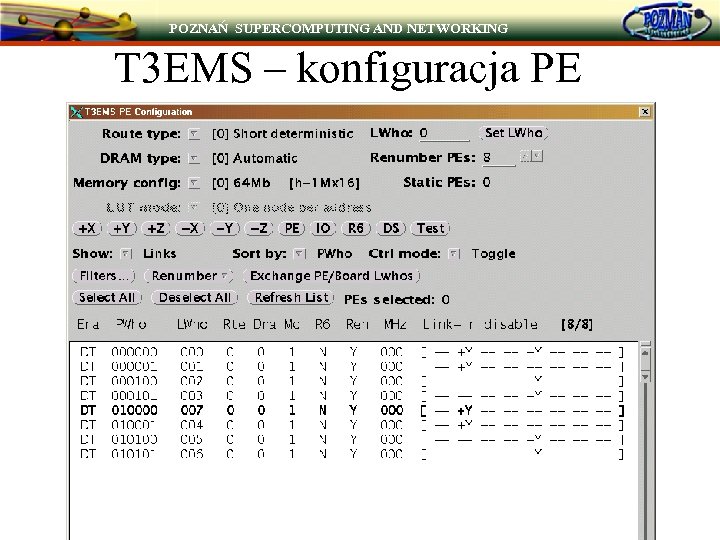

T3EMS – PE configuration

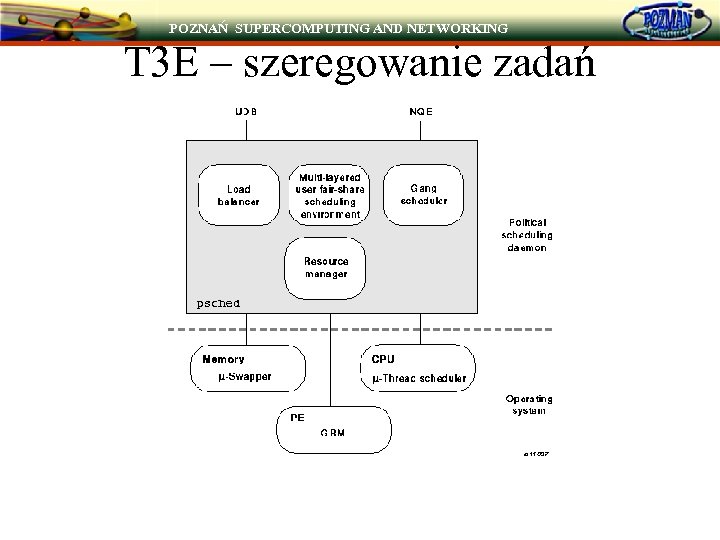

T3E – job scheduling

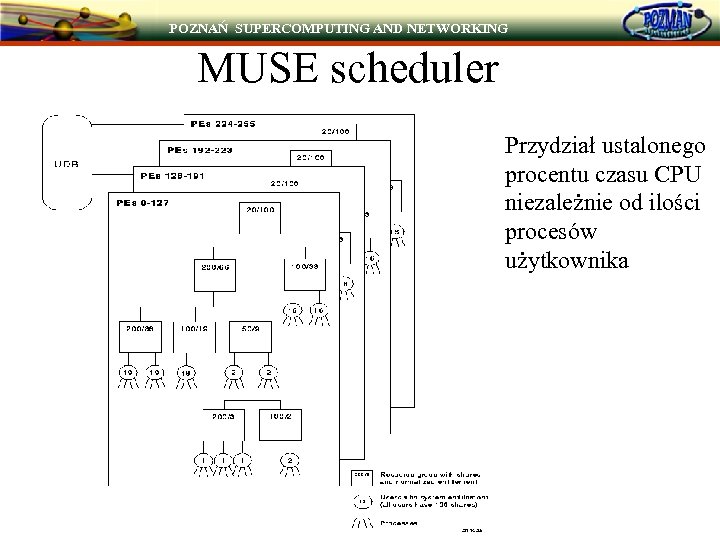

MUSE scheduler

Allocates a fixed percentage of CPU time to a user, regardless of the number of processes that user runs.
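
The effect of a fixed per-user CPU percentage can be shown with a little arithmetic in Python. This is a generic fair-share illustration and not a description of how MUSE computes priorities: the `per_process_share` function, the user names, the percentages, and the assumption that a user's share is split evenly among that user's processes are all hypothetical.

```python
def per_process_share(user_shares, process_counts):
    """Split each user's fixed CPU percentage evenly among that user's
    processes. Users with no processes get 0.0."""
    result = {}
    for user, share in user_shares.items():
        n = process_counts.get(user, 0)
        result[user] = share / n if n else 0.0
    return result


shares = {"alice": 60.0, "bob": 40.0}   # hypothetical fixed percentages
procs = {"alice": 1, "bob": 8}          # bob starts many processes
each = per_process_share(shares, procs)
```

Under this model, starting more processes does not buy a user more CPU time: the eight processes of `bob` each receive a smaller slice, and together they still add up to the same 40 percent.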

DCE

1. DCE provides tools and services that support distributed applications (DCE RPC, DCE Threads, DCE Directory Service, Security Service, and Distributed Time Service).
2. DCE's set of services is integrated and comprehensive.
3. DCE provides interoperability and portability across heterogeneous platforms.
4. DCE supports data sharing.
5. DCE participates in a global computing environment (X.500 and Domain Name Service (DNS)).

DCE Models of Distributed Computing

- The Client/Server Model
- The Remote Procedure Call Model
- The Data Sharing Model
- The Distributed Object Model

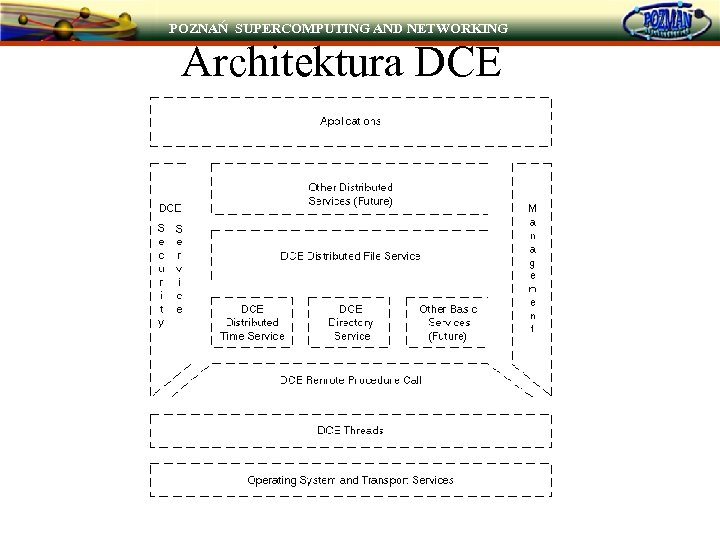

DCE architecture
