- Number of slides: 55

Power awareness through selective Dynamically Optimized Traces
Roni Rosner, Yoav Almog, Micha Moffie, Naftali Schwartz and Avi Mendelson
Microprocessor Research, Intel Labs, Haifa, Israel
ISCA 2004
Presented at the Computer Architecture Group, University of Cyprus (UCY), by Andreas Artemiou, 02/02/2005

PARROT Concept
- Achieve higher performance with reduced energy consumption through gradual optimization of frequently executed code traces

Why is energy important?
- Current processors have become power limited: they can operate only at a limited frequency, which prevents them from achieving their full microarchitectural performance potential

Modern Processors
- Front end: fetch, dispatch, issue
- Back end: execute, commit
- Front-end bandwidth is crucial to the overall performance of the system
- The power and complexity of dynamic scheduling depend on execution bandwidth as well as on program behavior and instruction window size

Amdahl's Law
- This work tries to take advantage of the hot/cold (90/10) paradigm, following Amdahl's Law
- That is, a small portion of the static program code is responsible for most of its dynamic execution
- PARROT applies principles similar to those used in profiling compilers, dynamic translators, etc.
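
The link between the 90/10 observation and Amdahl's Law can be made concrete with a small calculation. This is only an illustrative sketch: the function name and the 2x speedup for hot code are assumptions chosen for the example, not figures from the paper.

```python
def amdahl_speedup(hot_fraction: float, hot_speedup: float) -> float:
    """Overall speedup when `hot_fraction` of execution time runs `hot_speedup` times faster."""
    if not 0.0 <= hot_fraction <= 1.0:
        raise ValueError("hot_fraction must be in [0, 1]")
    if hot_speedup <= 0.0:
        raise ValueError("hot_speedup must be positive")
    # The cold part is unchanged; only the hot part shrinks.
    return 1.0 / ((1.0 - hot_fraction) + hot_fraction / hot_speedup)


# Hypothetical numbers: 90% of dynamic execution is hot and runs 2x faster.
overall = amdahl_speedup(0.9, 2.0)
```

With these assumed numbers the overall speedup is 1 / 0.55, roughly 1.8x, which shows why concentrating hardware effort on the hot code pays off while the cold 10% bounds the achievable gain.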

Identifying techniques
- To identify frequently executed code sections we have:
  - Software-based techniques, such as those proposed by Mahlke S. A. et al. (MICRO 1992)
  - Hardware-based techniques, such as those by Merten M. C. et al. (ISCA 26, 1999)
- PARROT aims to aggressively exploit the hot/cold paradigm in hardware for the benefit of both processor performance and power awareness
- PARROT = Power-Aware aRchitecture Running Optimized Traces

What does this work do?
- Examines several microarchitectural alternatives based on the PARROT concept (referred to as PARROT microarchitectures)
- These are organized around an optimized trace cache
- What is a trace cache?

PARROT parts
- PARROT (3 parts)
  - Identify the most frequent sequences of program code with a mechanism that performs trace selection and filtering
  - Aggressively optimize them once with a dynamic optimizer
  - Efficiently execute them many times (after they are stored in the trace cache)

PARROT parts (2)
- Key factors for power awareness:
  - Gradual construction of traces
  - Pipeline decoupling
  - Specific trace optimizations
- Cold part:
  - Limiting the hardware for the cold part may exact a small price in performance
- Hot part:
  - More aggressive hardware may be used to improve performance/power tradeoffs for the dominant hot segments of the code
- Results show that no additional energy is spent on the optimization of hot traces

Related work
- PARROT's uniqueness lies in the application of decoupling and dynamic optimization techniques to achieve better performance with less energy consumption
- Other similar ideas:
  - rePLay (Patel J. S. and Lumetta S. S., IEEE Transactions on Computers, vol. 50, no. 6, June 2001): hardware based
  - Turboscalar (B. Black and J. P. Shen, ISCA 27, June 2000): hardware based
  - DAISY (K. Ebcioglu and E. R. Altman, ISCA 24, 1997): software based

Motivation
- PARROT is based on the following observations:
  - The working set of a program is relatively small
  - Much of the excess complexity of modern OOO processors results from handling rare cases
  - Small segments of code that are executed repeatedly ("hot traces") typically cover most of the program's working set
  - The hot segments of the code behave differently from the rest of the code: they are more regular and predictable, and consequently exhibit higher potential for ILP extraction than the less frequently executed parts of the code

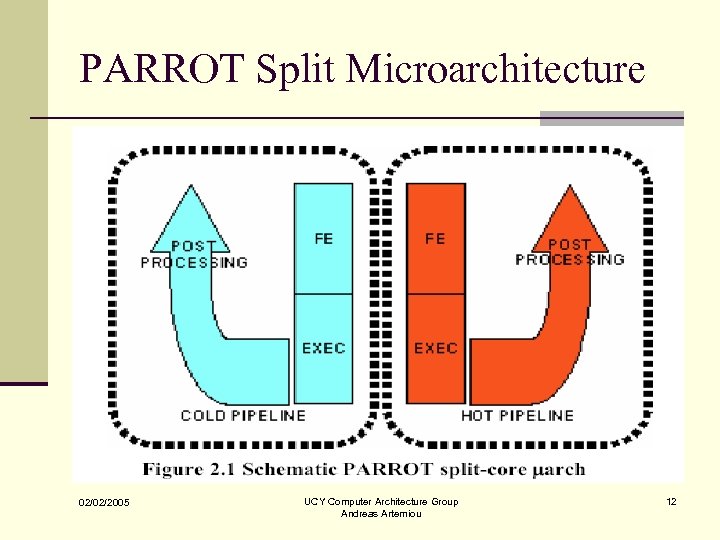

PARROT Split Microarchitecture

Microarchitecture Components
- Front-end and execution pipelines are tuned for either the cold or the hot portions of the code
- Background components post-process the instruction flow out of the foreground pipeline, making "off critical path" decisions such as when to move from the cold subsystem to the hot subsystem and when to apply further optimizations
- Synchronization elements are required for arbitrating and switching state between pipelines and for preserving global program order

Cold/Hot Subsystems
- Previous studies show that a trace cache can be very efficient in handling hot code, provided this code has been identified sufficiently well
- The cold subsystem is based on instructions fetched from an instruction cache, whereas the hot subsystem is based on traces fetched from a trace cache
- Power awareness and trace cache effectiveness limit trace construction and trace cache insertion to frequently executed code sections
- PARROT applies dynamic optimizations gradually: the hotter the trace, the more aggressive the power-aware optimizations applied to it

Decoding/Optimizing
- Reusability of hardware work and results is important for both performance and energy savings
- In PARROT, the trace cache stores:
  - decoded traces, and is thus a container for reuse of decoding results
  - optimized traces, allowing multiple reuses of trace optimizations

Dynamic Optimization
- Advantages of dynamic optimization:
  - The dynamic information available (the outcome of trace-internal branches) enables optimizations that are impossible for a static compiler
  - Decoupling these optimizations from the pipeline allows more aggressive optimizations than the on-the-fly optimizations that can be performed within standard pipeline execution
  - To take full advantage of these optimizations, atomicity of a trace is assumed. This permits very aggressive optimizations across basic-block boundaries
  - Architectural transparency: the hardware is able to optimize legacy code without recompilation

Traces
- An execution trace is a sequence of operations representing a continuous segment of the dynamic flow of a program. A trace may continue beyond control-transfer instructions (CTIs), so it may extend over several basic blocks
- The current study considers decoded atomic traces:
  - Decoded traces contain decoded micro-operations (uops) and enable reuse of decode activity, thus saving energy
  - Atomic traces are single-entry and single-exit

Trace Selection
- Trace selection is the process of constructing particular traces out of a dynamic sequence of instructions. It may be:
  - Deterministic: if applied to the fully predictable sequence of in-order committed instructions
  - Speculative: if applied at any earlier pipeline stage, where instructions are potentially mispredicted

Trace Construction Criteria
- Deterministic selection criteria:
  - Capacity limitation: traces are constructed into frames of at most 64 uops
  - Complete basic blocks: with the exception of large basic blocks, traces always terminate on CTIs
  - Terminating CTIs: all indirect jumps and software exceptions terminate traces, except RETURN instructions. In addition, backward taken branches terminate a trace
  - RETURN instructions terminate traces only if they exit the outermost procedure context already encountered in the current trace
  - If two or more consecutive traces are identical, they are joined into a single trace until the capacity limit is reached (this achieves the effect of explicit loop unrolling)
- Unique trace identifiers (TIDs) can be compacted into a single address and a sequence of branch directions (taken/not taken)
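
The selection criteria above can be sketched in software as follows. This is a simplified illustration, not the hardware mechanism: the `Instr` record, its field names and the `select_traces` function are hypothetical, and the sketch deliberately omits the RETURN rule and the joining of identical consecutive traces.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple

MAX_UOPS = 64  # capacity limit stated in the criteria


@dataclass(frozen=True)
class Instr:
    """Hypothetical committed-instruction record (not from the paper)."""
    addr: int
    n_uops: int
    kind: str = "alu"             # "alu", "branch", "indirect" or "exception"
    taken: bool = False           # branch outcome, meaningful for "branch"
    target: Optional[int] = None  # branch target, meaningful for "branch"


TID = Tuple[int, Tuple[bool, ...]]  # (entry address, branch directions)


def select_traces(committed: Iterable[Instr]) -> List[Tuple[TID, List[Instr]]]:
    """Split an in-order committed stream into traces (simplified criteria)."""
    traces, body, dirs, uops = [], [], [], 0

    def close() -> None:
        # Emit the trace collected so far, if any, and start a new one.
        nonlocal body, dirs, uops
        if body:
            traces.append(((body[0].addr, tuple(dirs)), body))
        body, dirs, uops = [], [], 0

    for ins in committed:
        if uops + ins.n_uops > MAX_UOPS:   # capacity limitation
            close()
        body.append(ins)
        uops += ins.n_uops
        if ins.kind == "branch":
            dirs.append(ins.taken)
            backward = ins.target is not None and ins.target <= ins.addr
            if ins.taken and backward:     # backward taken branch ends the trace
                close()
        elif ins.kind in ("indirect", "exception"):
            close()                        # terminating CTIs
    close()
    return traces
```

Each returned TID pairs the entry address with the recorded branch directions, which mirrors the compact TID encoding described in the last bullet.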

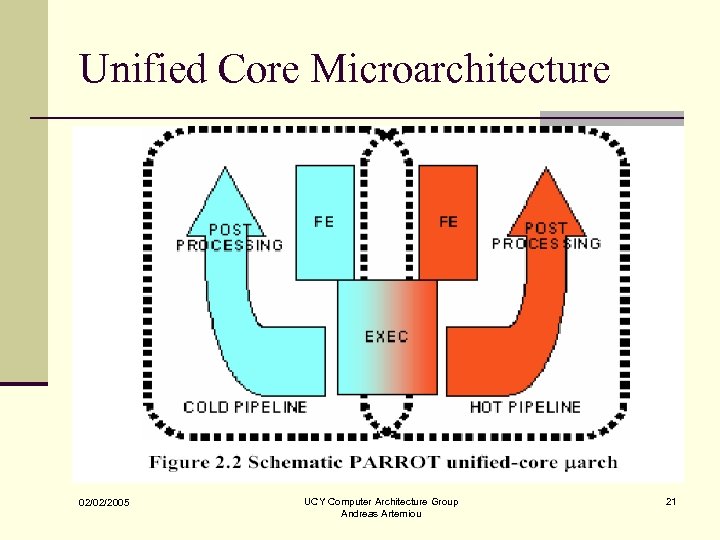

Split Execution Microarchitecture
- A split-execution implementation consists of two disjoint subsystems, one for the cold path and one for the hot path
- Each subsystem can employ a different execution engine (wider execution engine, higher bandwidth, etc.)
- There is also an optimized unified-execution engine that shares the execution resources between the hot and cold subsystems

Unified Core Microarchitecture

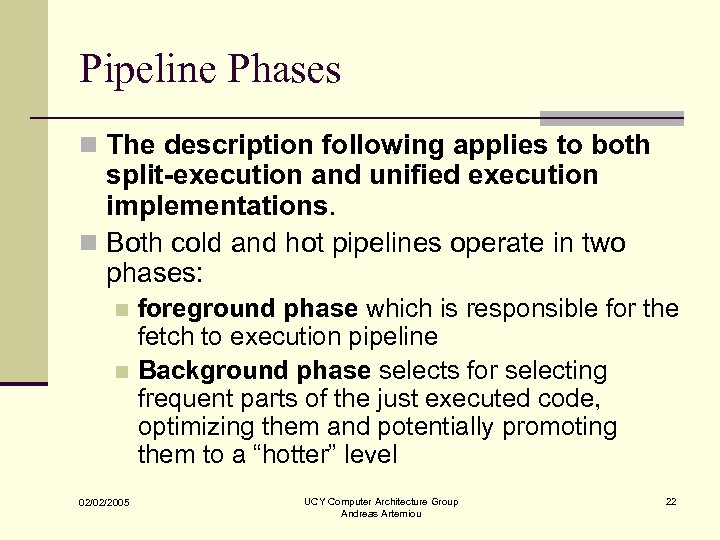

Pipeline Phases
- The following description applies to both split-execution and unified-execution implementations
- Both cold and hot pipelines operate in two phases:
  - Foreground phase: responsible for the fetch-to-execution pipeline
  - Background phase: selects frequent parts of the just-executed code, optimizes them and potentially promotes them to a "hotter" level

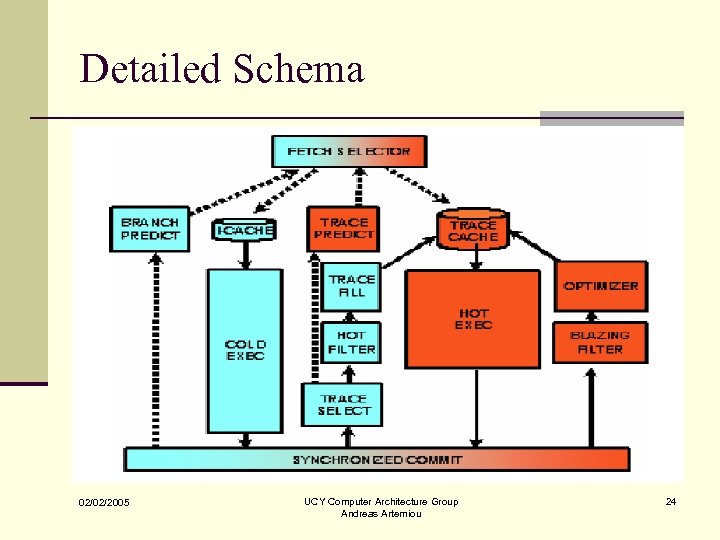

Background phase
- The background phase of the cold subsystem identifies frequent IA-32 instruction sequences and captures them as traces in the trace cache
  - It is composed of TID selection, TID hot-filtering, trace construction and insertion into the trace cache
  - Continuous training of both the trace predictor and the hot filter is assured
  - Only those TIDs that pass the hot filter continue to the trace construction stage
- The background phase of the hot subsystem identifies the most frequent traces, optimizes them and inserts them back into the trace cache
- Post-processing is applied gradually: the longer a trace is used, the more aggressive the optimizations applied to it

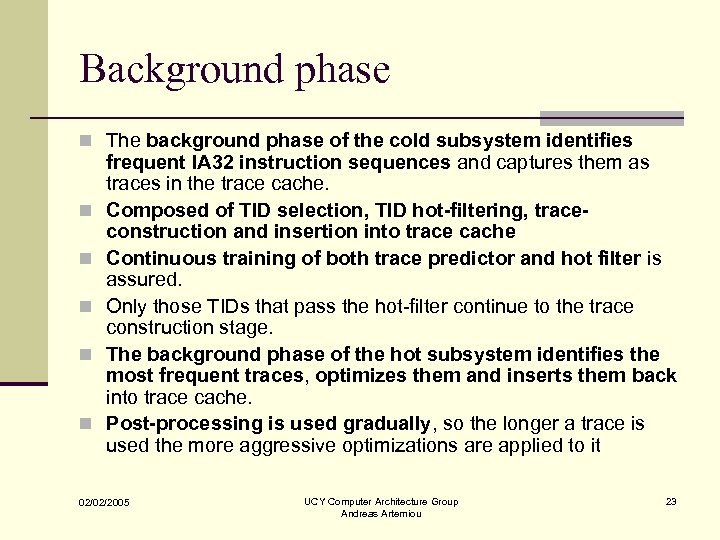

Detailed Schema

Predictors
- Two predictors:
  - A branch predictor predicts the next cache line to be fetched from the I$ for execution on the cold pipeline
  - A trace predictor predicts the TID of the next trace to be fetched from the trace cache and executed on the hot pipeline
- Both are based on a global history register (GHR)
  - The GHR is updated for each CTI executed
- Both support speculative update upon fetch and real update upon commit
- Note: the trace predictor may predict a TID whose trace is not present in the trace cache

Fetch Selector
- The fetch selector chooses between the execution pipelines by consulting both the lower-priority branch predictor and the higher-priority trace predictor
- When the trace predictor succeeds in making a next-TID prediction, the hot pipeline is selected; if the trace is then successfully fetched, it is executed on the hot pipeline
- All other cases result in execution on the cold pipeline, directed by branch prediction
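
The priority scheme can be summarized in a few lines of code. This is a minimal sketch under stated assumptions: the function `select_fetch`, its parameters and the dictionary standing in for the trace cache are hypothetical names for illustration, and the real selector is a hardware structure.

```python
from typing import Dict, List, Optional, Tuple

TID = Tuple[int, Tuple[bool, ...]]  # (entry address, branch directions)


def select_fetch(
    predicted_tid: Optional[TID],
    trace_cache: Dict[TID, List[str]],
    predicted_line: int,
) -> Tuple[str, object]:
    """Return ("hot", trace) or ("cold", I-cache line address)."""
    if predicted_tid is not None:               # trace predictor made a prediction
        trace = trace_cache.get(predicted_tid)  # may miss: TID not in the trace cache
        if trace is not None:
            return ("hot", trace)
    return ("cold", predicted_line)             # all other cases
```

Note that a successful TID prediction is not sufficient on its own: the lookup can still miss, in which case execution falls back to the cold pipeline.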

Foreground phase
- In the foreground phase the pipelines execute sequences of uops originating from either cold instructions or hot traces
- A split core enables core specialization:
  - The cold core may focus on the execution of rare but complex operations, or be less performance-aggressive, while the hot core may excel at aggressive execution of atomic traces, employ simplified renaming schemes or rely on dynamic scheduling performed by the optimizer
- On the other hand, a split core increases die size and introduces complexities with cold/hot state switches
- A unified core reduces both die size and idle power
- This study considers standard superscalar out-of-order cores only, in both split and unified configurations

Switch Mechanism in Split Core
- For the split core:
  - The state switch mechanism ensures that values computed and stored in the register file of one core are used at the appropriate time and place in the second
  - It does so by tracking, for each register, the last writer uop preceding the switch and the first reader uop of the code following the switch, and by ensuring that the reader is not executed until the writer completes writeback and the value has been communicated to the second core

Commit Stage
- The commit stage is responsible for committing IA-32 instructions to the architectural state
- Two synchronization issues:
  - Instructions must be committed in program order (in a split microarchitecture, instructions must carry markers to reconstruct the global order)
  - Atomic hot traces should be committed at once as a single entity, which requires a mechanism for state accumulation
- Note that for such a gradual scheme only a moderate enlargement of non-critical machine resources is necessary
- If any intermediate event prevents full completion of the trace, the remaining uops are flushed and the architectural state returns to what it was before the fetch of the trace. This may result from exceptions, failed assert uops or external interrupts

Post Processing
- For post-processing cold code, PARROT employs a deterministic TID/trace build scheme
- Uops from committed cold instructions are collected until a trace termination condition is reached. A new TID is then generated from the entry address and the CTIs, and this TID is used to train the trace predictor
- If this TID is identified as frequent, the collected uops are used to construct an executable trace that can be inserted into the trace cache

Filtering Mechanisms
- PARROT employs two filtering mechanisms:
  - Hot filter: selects frequent TIDs from among those constructed on the cold pipeline
  - Blazing filter: selects the most frequent TIDs from among those executed on the hot pipeline
- Both are small caches that maintain a counter for each TID
- Each trace execution increments the counter of the executed TID
- Once the hot filter threshold is reached, the trace is constructed and inserted into the trace cache. When the blazing filter threshold is reached, the executed trace is optimized and written back to the trace cache, replacing the original
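
The two-level counting scheme might be modelled as below. This is a sketch, not the actual design: the class name, method names and both threshold values are hypothetical, and unbounded counters stand in for the small caches (so eviction of cold entries is not modelled).

```python
from collections import Counter
from typing import Hashable, Optional

HOT_THRESHOLD = 4       # hypothetical value, not from the paper
BLAZING_THRESHOLD = 16  # hypothetical value, not from the paper


class TraceFilters:
    """Counts TID executions and reports promotion events (simplified)."""

    def __init__(self) -> None:
        self.hot = Counter()      # TIDs built from the cold pipeline
        self.blazing = Counter()  # TIDs executed on the hot pipeline

    def on_cold_tid(self, tid: Hashable) -> Optional[str]:
        self.hot[tid] += 1
        # Exactly at the threshold: construct the trace and insert it.
        return "construct" if self.hot[tid] == HOT_THRESHOLD else None

    def on_hot_execution(self, tid: Hashable) -> Optional[str]:
        self.blazing[tid] += 1
        # Exactly at the threshold: optimize and write back over the original.
        return "optimize" if self.blazing[tid] == BLAZING_THRESHOLD else None
```

Reporting the event only when the counter equals the threshold means each trace is constructed once and optimized once, which matches the "optimize once, execute many times" idea.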

Optimizations
- PARROT applies dynamic optimizations to blazing traces
- The optimizations can be classified as:
  - General-purpose, which are independent of the underlying execution core (logic simplification, constant propagation and dead-code elimination)
  - Core-specific, which include functional transformations (such as micro-operation fusion and SIMDification) and global transformations
- Optimization results in:
  - Uop reduction
  - Dependency elimination
  - Simplified renaming
  - Improved scheduling
  - Virtual renaming, resulting in power/energy savings
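
Two of the general-purpose optimizations named above, constant propagation and dead-code elimination, can be illustrated on a toy trace. This is a sketch under strong assumptions: the `(dst, op, sources)` uop format, the two foldable operations and both function names are invented for the example, uops are assumed to have no side effects, and the trace is assumed atomic so that only registers live at its single exit matter.

```python
from typing import Dict, List, Set, Tuple, Union

Operand = Union[str, int]                   # register name or immediate
Uop = Tuple[str, str, Tuple[Operand, ...]]  # (dst, op, sources)

# Hypothetical two-operand operations that can be folded at optimization time.
FOLD = {"add": lambda a, b: a + b, "and": lambda a, b: a & b}


def propagate_constants(trace: List[Uop]) -> List[Uop]:
    """Replace registers with known constants and fold constant operations."""
    known: Dict[str, int] = {}
    out: List[Uop] = []
    for dst, op, srcs in trace:
        srcs = tuple(known.get(s, s) if isinstance(s, str) else s for s in srcs)
        if op == "mov" and isinstance(srcs[0], int):
            known[dst] = srcs[0]
        elif op in FOLD and all(isinstance(s, int) for s in srcs):
            known[dst] = FOLD[op](*srcs)
            op, srcs = "mov", (known[dst],)
        else:
            known.pop(dst, None)            # dst no longer holds a known constant
        out.append((dst, op, srcs))
    return out


def eliminate_dead_code(trace: List[Uop], live_out: Set[str]) -> List[Uop]:
    """Drop uops whose result is neither read later in the trace nor live at exit."""
    live = set(live_out)
    kept: List[Uop] = []
    for dst, op, srcs in reversed(trace):
        if dst in live:
            live.discard(dst)
            live.update(s for s in srcs if isinstance(s, str))
            kept.append((dst, op, srcs))
    return kept[::-1]
```

For example, the four-uop trace `r1 = 5; r2 = r1 + 3; r3 = r2 + r0; r1 = 7` with `r1` and `r3` live at exit shrinks to two uops, `r3 = 8 + r0; r1 = 7`, which is an instance of the uop reduction and dependency elimination listed above.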

Simulation Framework
- Performance simulation
- Energy simulation

Performance Simulation (1)
- Incorporates the full memory hierarchy
- Newly designed components for the post-processing phases
- The software architecture includes a generic, highly configurable, object-oriented execution core class that can be instantiated as a variable number of execution cores with widely differing characteristics

Performance Simulation (2)
- One feature of the simulation framework is the abstract instruction, defined as a "committable work unit", which has a different interpretation in the cold and hot pipelines: in the cold pipeline it is an instruction, in the hot pipeline it is a trace


Energy Simulation (1)
- Uses tools based on a combination of WATTCH-like and TEM2P2EST-like approaches
- Assumes uniform leakage:
  - In space
  - In time


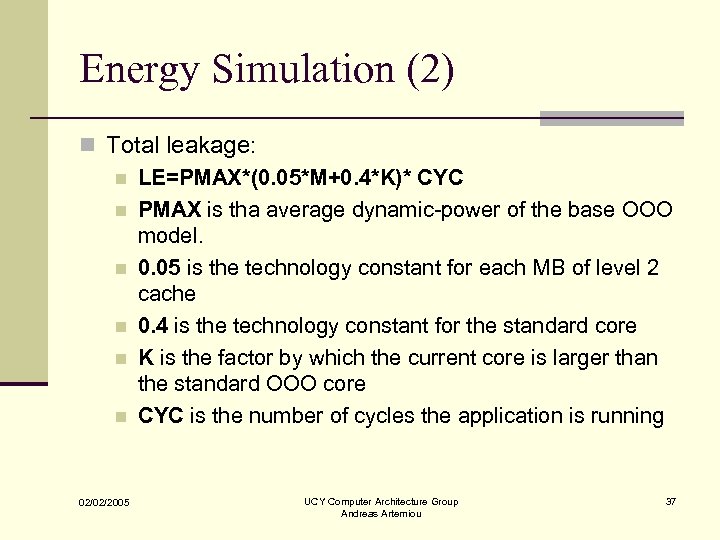

Energy Simulation (2)
- Total leakage: $LE = P_{MAX} \cdot (0.05 \cdot M + 0.4 \cdot K) \cdot CYC$
- $P_{MAX}$ is the average dynamic power of the base OOO model
- 0.05 is the technology constant for each MB of level-2 cache ($M$ being the L2 cache size in MB)
- 0.4 is the technology constant for the standard core
- $K$ is the factor by which the current core is larger than the standard OOO core
- $CYC$ is the number of cycles the application runs
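
The leakage formula on this slide is simple enough to evaluate directly. The following sketch transcribes it as a function; the parameter names are my own, and treating M as the L2 cache size in MB is an assumption inferred from the "per MB of level 2 cache" wording rather than something the slide states outright.

```python
import math


def leakage_energy(p_max, l2_mb, core_scale, cycles):
    """Total leakage LE = PMAX * (0.05*M + 0.4*K) * CYC.

    p_max      -- average dynamic power of the base OOO model (PMAX)
    l2_mb      -- L2 cache size in MB (M; assumed meaning)
    core_scale -- size of the current core relative to the standard core (K)
    cycles     -- number of cycles the application runs (CYC)
    The result is in units of power times cycles.
    """
    return p_max * (0.05 * l2_mb + 0.4 * core_scale) * cycles


# Illustrative inputs only (not values from the paper): a standard-size core
# (K = 1) with 1 MB of L2, normalized PMAX = 1, running for 100 cycles.
example = leakage_energy(p_max=1.0, l2_mb=1.0, core_scale=1.0, cycles=100)
```

Because CYC multiplies the whole expression, a configuration that finishes the same work in fewer cycles pays proportionally less leakage, while a larger core (higher K) pays more per cycle.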


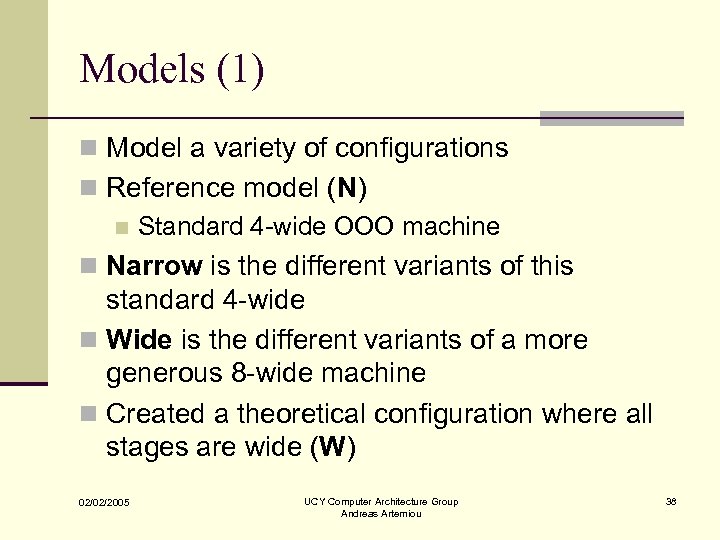

Models (1)
- Model a variety of configurations
- Reference model (N): a standard 4-wide OOO machine
- Narrow models are variants of this standard 4-wide machine
- Wide models are variants of a more generous 8-wide machine
- A theoretical configuration was created in which all stages are wide (W)


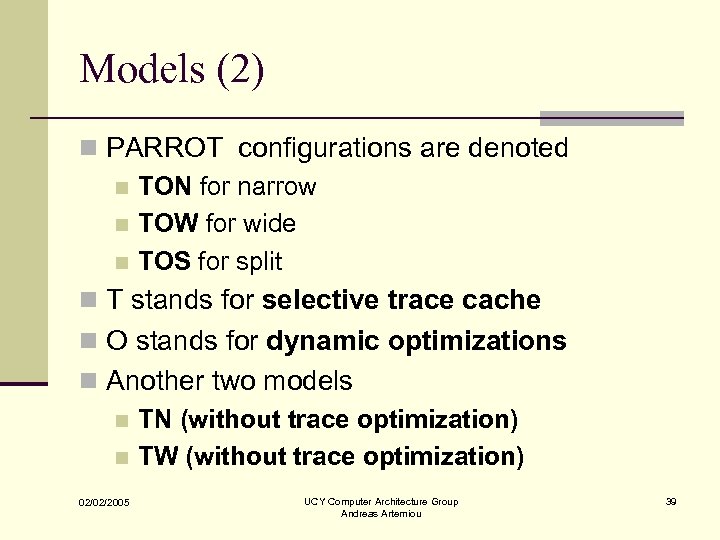

Models (2)
- PARROT configurations are denoted:
  - TON for narrow
  - TOW for wide
  - TOS for split
- T stands for selective trace cache
- O stands for dynamic optimizations
- Two further models:
  - TN (without trace optimization)
  - TW (without trace optimization)


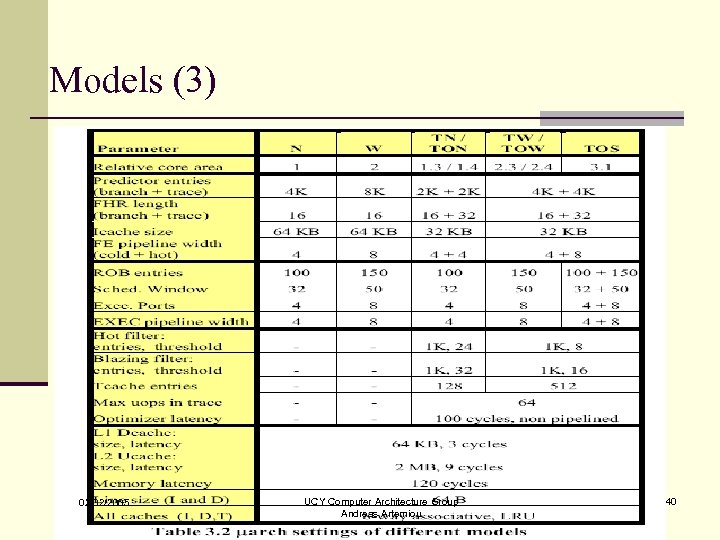

Models (3)


Benchmarks
- SPECint 2000: 30M instructions
- SPECfp 2000: 30M instructions
- Office/Windows applications: 100M instructions
- Multimedia: from 30M to 100M instructions
- Dot.Net: 100M instructions


Metrics
- For processor performance:
  - IPC
  - Total energy
  - Cubic-MIPS-per-Watt (CMPW)
- Parameters characterizing PARROT:
  - Coverage
  - Uop reduction
  - Energy breakdown


Results
- Presents the results of alternative enhancements applied to the reference machines N and W
- Statistics for the conceptual TOS microarchitecture are presented only as a reference for alternative future development


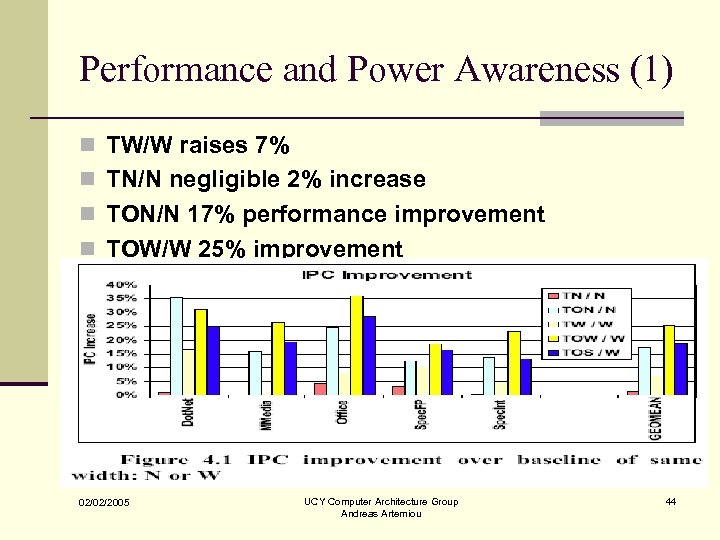

Performance and Power Awareness (1)
- TW/W: 7% increase
- TN/N: negligible 2% increase
- TON/N: 17% performance improvement
- TOW/W: 25% improvement


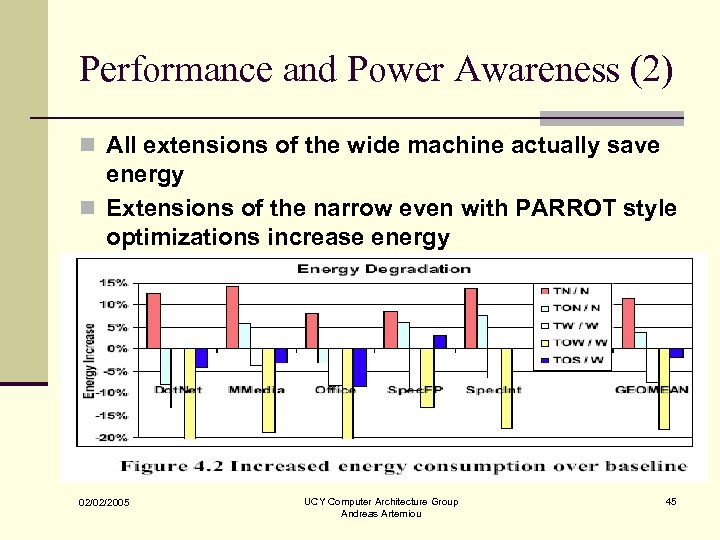

Performance and Power Awareness (2)
- All extensions of the wide machine actually save energy
- Extensions of the narrow machine, even with PARROT-style optimizations, increase energy


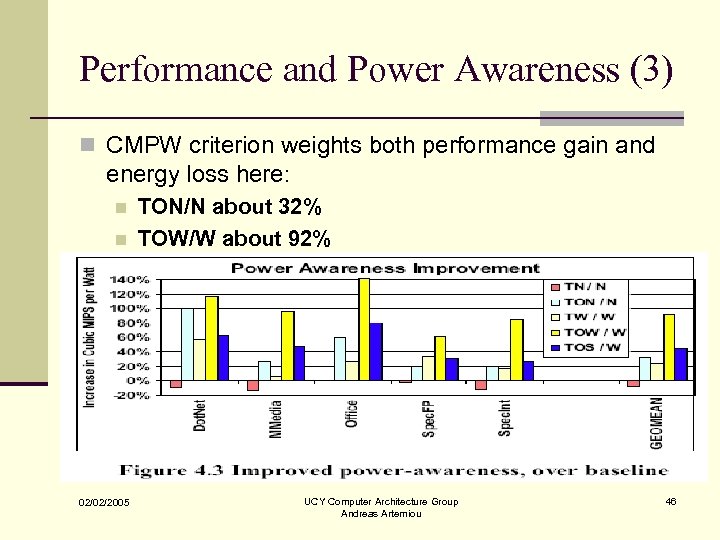

Performance and Power Awareness (3)
- The CMPW criterion weighs both performance gain and energy loss; the improvements here are:
  - TON/N: about 32%
  - TOW/W: about 92%
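
To see how the CMPW criterion combines the two quantities, the sketch below relates a configuration's relative performance and relative energy to its relative CMPW. It assumes CMPW is MIPS cubed divided by watts, that both machines execute the same work at the same clock frequency (so relative execution time is the inverse of relative performance), and that power is energy divided by time. The function names are hypothetical, and the derived energy ratios are back-of-envelope estimates, not figures from the paper.

```python
def cmpw(mips, watts):
    """Cubic-MIPS-per-Watt: MIPS^3 / W."""
    return mips ** 3 / watts


def relative_cmpw(rel_perf, rel_energy):
    """CMPW ratio of an enhanced machine over its reference.

    Power = energy / time and time scales as 1 / rel_perf for fixed work,
    so relative power = rel_energy * rel_perf and the CMPW ratio reduces
    to rel_perf**2 / rel_energy.
    """
    rel_power = rel_energy * rel_perf
    return rel_perf ** 3 / rel_power


def implied_relative_energy(rel_perf, rel_cmpw):
    """Invert relative_cmpw: the energy ratio consistent with the two inputs."""
    return rel_perf ** 2 / rel_cmpw


# Inputs taken from the slides: performance gains of 17% and 25%,
# CMPW gains of about 32% and 92%.
ton_energy = implied_relative_energy(1.17, 1.32)
tow_energy = implied_relative_energy(1.25, 1.92)
```

Under these assumptions the implied energy ratios come out at roughly 1.04 for TON/N and 0.81 for TOW/W, which agrees in direction with the earlier observation that narrow extensions increase energy while wide extensions save it.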


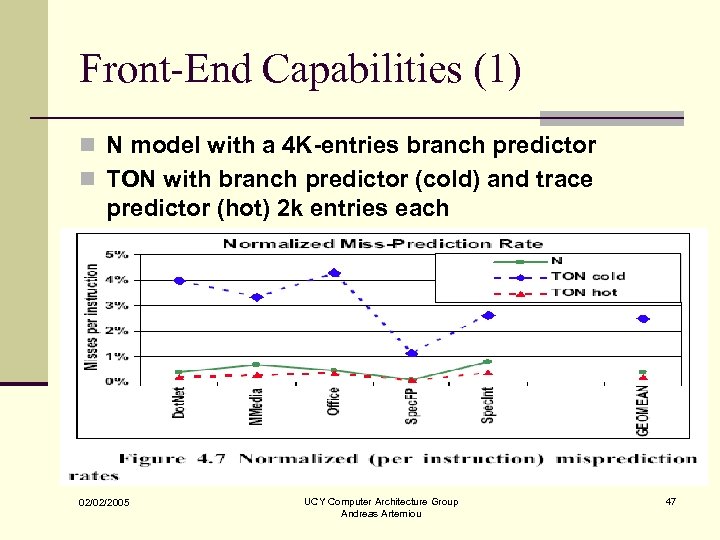

Front-End Capabilities (1)
- N model with a 4K-entry branch predictor
- TON with a branch predictor (cold) and a trace predictor (hot), 2K entries each


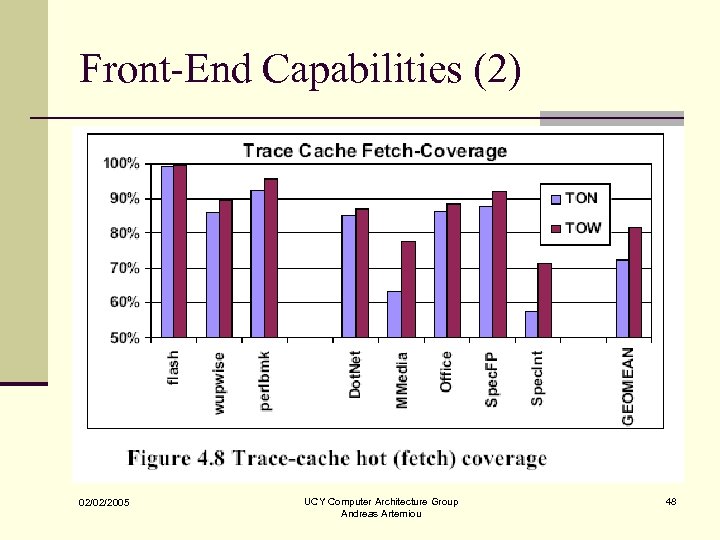

Front-End Capabilities (2)


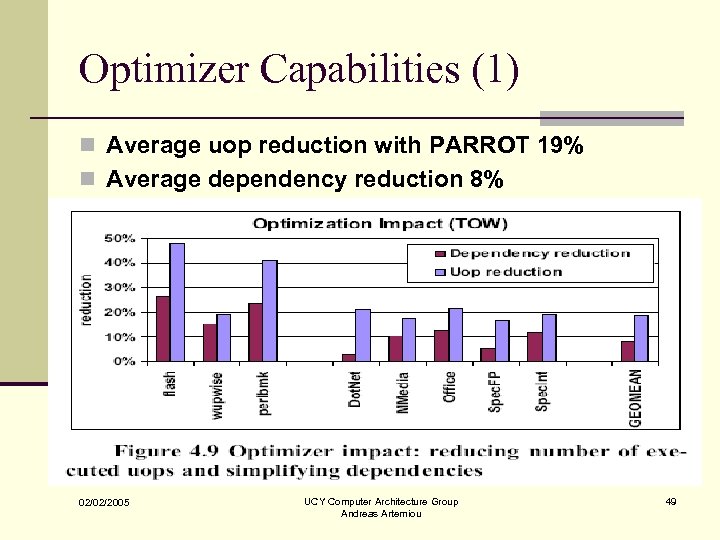

Optimizer Capabilities (1)
- Average uop reduction with PARROT: 19%
- Average dependency reduction: 8%


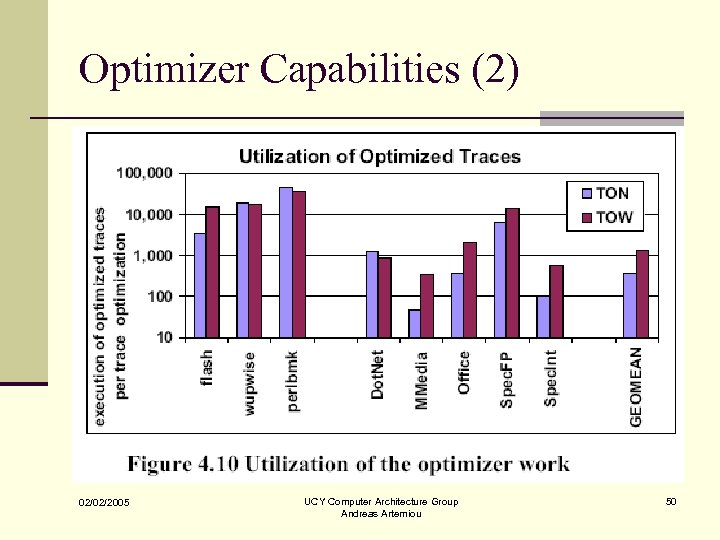

Optimizer Capabilities (2)


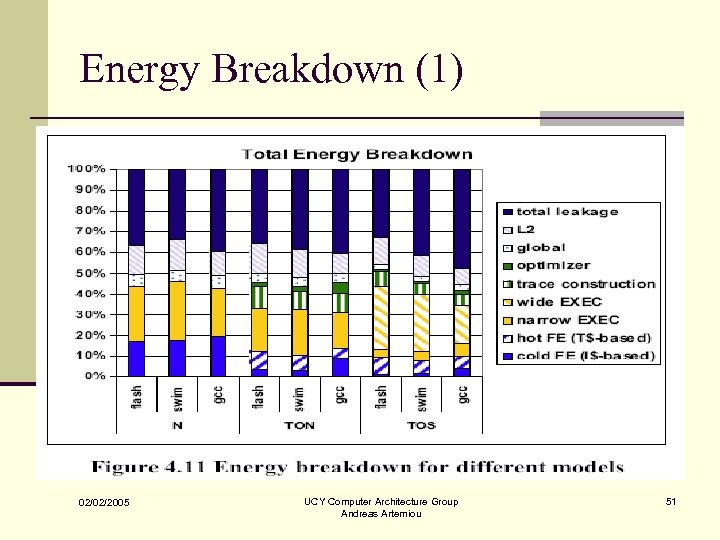

Energy Breakdown (1)


Conclusions (1)
- Presents PARROT, a concept for improving processor performance and power awareness
- It consists of an asymmetric decoupling of the processor into subsystems responsible for handling the cold (infrequent) and hot (frequent) portions of code
- Each part is designed according to different power and performance considerations


Conclusions (2)
- The simulation results demonstrate that applying the PARROT concept to a standard 4-wide OOO processor yields performance comparable to that of an 8-wide processor while consuming significantly less energy


Future Work
- One major topic for future research is split-core microarchitectures
- Investigate the potential of such designs to establish even better performance/energy tradeoffs by considering different alternatives


Thank you!
