
Power and Energy Conservation Techniques for Disk Array Based Systems

Zvika Guz, zguz@tx.technion.ac.il
June 2004


Agenda

- Motivation
- The server world's special characteristics
  - Why the mobile-world approach doesn't work
- 3 different solutions
  - Dynamically modulate the disk speed
  - Power Aware Cache
  - Popular Data Concentration
- Summary


Power consumption in servers: who cares?

Energy User News (EUN) predictions for 2005:
- Energy cost is growing by 25% annually.
- Power requirements will grow from 150 W/ft² to 300 W/ft².
  - Requires a huge cooling infrastructure to dissipate this heat
    - Twice the total power used by the computers is needed for HVAC
- Web sites and servers will consume a total of 40 TWh per year!
  - 4 billion dollars at $100 per MWh
  - Occupies more than 2 Hoover Dams 24×7

Source: http://www.energyusernews.com/


Power consumption hurts

- Power becomes a considerable factor in the TCO of a data center
  - Power delivery
  - Cooling the system (air conditioning)
- High operating temperatures affect the stability and reliability of the system
- Electricity production harms the environment

The disks' role in the power equation

- 27% of the total energy consumed by a data center
  - The biggest single load in the system
- Storage demands are growing by 60% annually
- The use of continuously growing RAID arrays keeps enlarging the disks' relative contribution


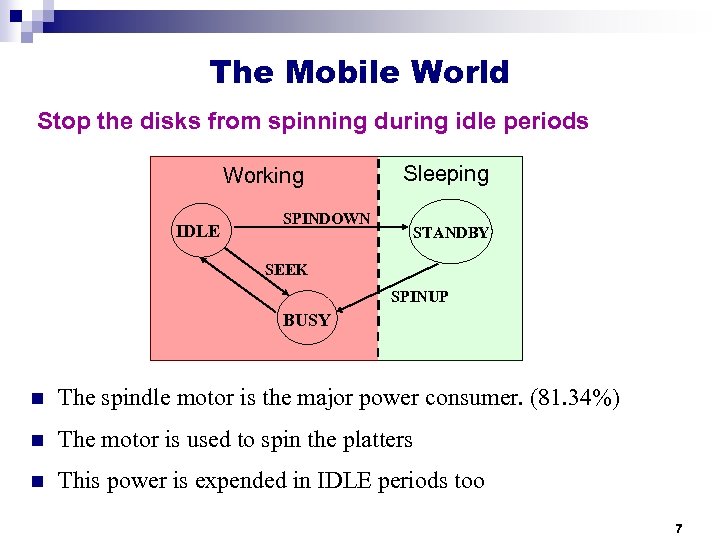

The Mobile World: stop the disks from spinning during idle periods

[Disk power-state diagram. Working states: IDLE, SEEK, BUSY. Sleeping state: STANDBY. Transitions: SPINDOWN, SPINUP.]

- The spindle motor is the major power consumer (81.34%)
- The motor is used to spin the platters
- This power is expended in IDLE periods too


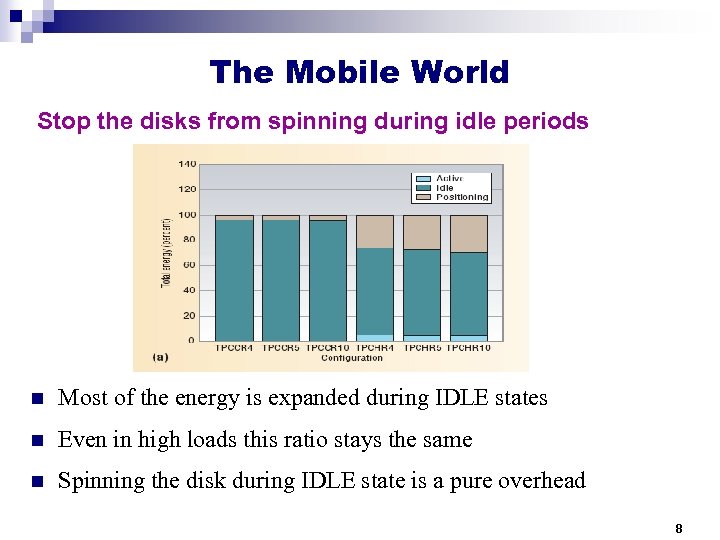

The Mobile World: stop the disks from spinning during idle periods

- Most of the energy is expended during IDLE states
- Even under high loads this ratio stays the same
- Spinning the disk during the IDLE state is pure overhead


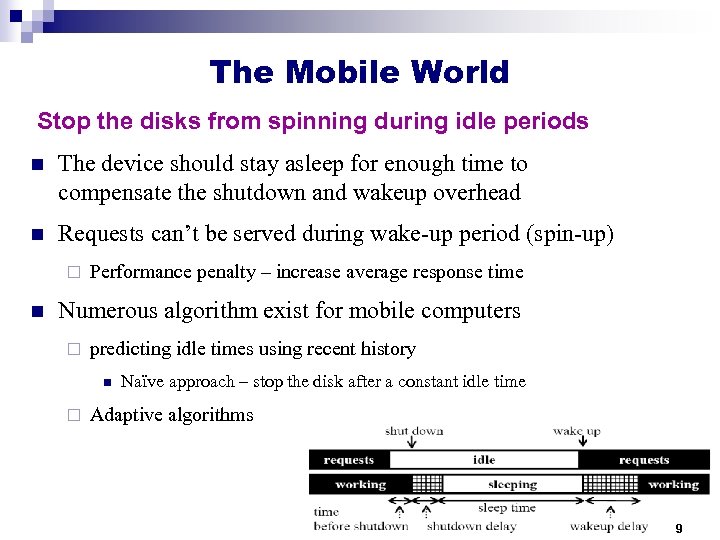

The Mobile World: stop the disks from spinning during idle periods

- The device should stay asleep long enough to compensate for the shutdown and wake-up overhead
- Requests can't be served during the wake-up period (spin-up)
  - Performance penalty: increased average response time
- Numerous algorithms exist for mobile computers
  - Predicting idle times using recent history
    - Naïve approach: stop the disk after a constant idle time
    - Adaptive algorithms
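The "stay asleep long enough" condition can be made concrete as a break-even time. The sketch below is illustrative only: the function names, the simple two-state energy model, and the numbers in `DISK` are assumptions, not values from the talk.

```python
def break_even_time(e_down, e_up, t_down, t_up, p_idle, p_standby):
    """Shortest idle period (seconds) for which spinning down saves energy.

    Staying idle for T costs p_idle * T. Sleeping costs the transition
    energy plus standby power for the time not spent in transitions.
    """
    t_trans = t_down + t_up
    # Solve p_idle * T = e_down + e_up + p_standby * (T - t_trans) for T.
    t = (e_down + e_up - p_standby * t_trans) / (p_idle - p_standby)
    # The disk cannot sleep for less than the transitions themselves take.
    return max(t, t_trans)


def idle_period_energy(idle, threshold, e_down, e_up, t_down, t_up,
                       p_idle, p_standby):
    """Energy (joules) of one idle period under the naive fixed-threshold policy."""
    if idle <= threshold:
        return p_idle * idle  # the disk never spun down
    # After the threshold expires the disk pays the full spin-down and
    # spin-up energy, even if the next request arrives mid-transition.
    asleep = max(0.0, idle - threshold - (t_down + t_up))
    return p_idle * threshold + e_down + e_up + p_standby * asleep


# Hypothetical disk parameters (joules, seconds, watts), for illustration only.
DISK = dict(e_down=10.0, e_up=100.0, t_down=2.0, t_up=8.0,
            p_idle=10.0, p_standby=2.0)
```

With these made-up parameters the break-even time is 11.25 s, so any idle period shorter than that is better spent spinning.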


Special Needs of the Server World: workload characteristics

- Servers experience extremely short idle times
  - Continuous request stream rather than intermittent activity
  - Many transactions at the same time
  - Light-load periods present the same behavior
- Previous (recent) history does not provide good predictions of future idle times
  - TPM (traditional power management) schemes do not work that well
- Performance degradation is usually unacceptable

Yet, this partition still holds:


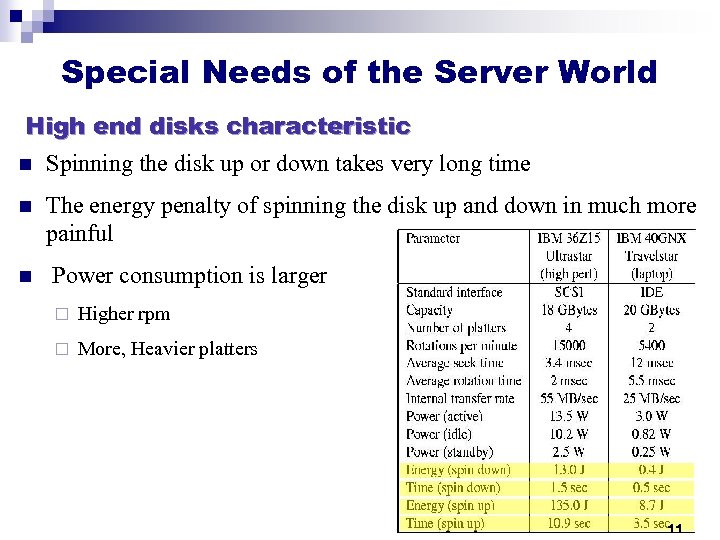

Special Needs of the Server World: high-end disk characteristics

- Spinning the disk up or down takes a very long time
- The energy penalty of spinning the disk up and down is much more painful
- Power consumption is larger
  - Higher rpm
  - More, heavier platters


Special Needs of the Server World: high-end disk characteristics

- Spinning the disk up or down takes a very long time
- The energy penalty of spinning the disk up and down is much more painful

⇒ The minimum sleeping time needed is significantly larger
- Allow enough time to spin the disk down and up
- Must compensate for much larger overheads

⇒ Much larger impact on performance
- Large latency of spinning up the disk to serve a new request


DRPM: Dynamic Rotations per Minute

- "DRPM: Dynamic Speed Control for Power Management in Server Class Disks". Sudhanva Gurumurthi, Anand Sivasubramaniam, Mahmut Kandemir, and Hubertus Franke. In Proceedings of the International Symposium on Computer Architecture (ISCA'03), June 9-11, 2003, San Diego, California, USA. IEEE CS Press, pp. 169-179. http://www.cse.psu.edu/~anand/csl/papers/isca03.pdf
- "Reducing Disk Power Consumption in Servers with DRPM". Sudhanva Gurumurthi, Anand Sivasubramaniam, Mahmut Kandemir, and Hubertus Franke. IEEE Computer, 36(12): 59-66, December 2003. http://www.cse.psu.edu/~gurumurt/papers/ieee_comp03.pdf


DRPM: Main idea

- Modulate the disk speed dynamically
  - Software can dynamically control the spindle motor (via a register)
  - A larger spectrum than the TPM on/off modes
- Requests can be served at all speeds of the spectrum

Disk configuration and assumptions:
- Rotation speed: 3,600-12,000 rpm, in 15 rpm levels
- Spindle power model with K_E = const, n = rotation speed, R = motor resistance
  - Similar to the DVS equation (not really…)
- Time to change RPM is proportional to the amplitude of the change
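A small sketch of the disk model described here. Two things are assumptions rather than statements from the slide: that the 15 levels are evenly spaced (which gives 600 rpm steps), and that spindle power follows the quadratic motor model P = (K_E · n)² / R, so power relative to full speed scales with n². The `seconds_per_rpm` constant is a hypothetical parameter.

```python
MIN_RPM, MAX_RPM, LEVELS = 3600, 12000, 15


def rpm_levels(min_rpm=MIN_RPM, max_rpm=MAX_RPM, levels=LEVELS):
    """Evenly spaced rpm levels (ASSUMPTION: the slide only gives the range and count)."""
    step = (max_rpm - min_rpm) // (levels - 1)
    return [min_rpm + i * step for i in range(levels)]


def relative_spindle_power(rpm, max_rpm=MAX_RPM):
    """Spindle power as a fraction of full-speed power.

    ASSUMPTION: P = (K_E * n)^2 / R with K_E and R constant, so P ~ n^2.
    """
    return (rpm / max_rpm) ** 2


def transition_time(rpm_from, rpm_to, seconds_per_rpm):
    """Time to change speed, proportional to the size of the rpm change."""
    return abs(rpm_to - rpm_from) * seconds_per_rpm
```

Under this model the lowest level (3,600 rpm) draws about 9% of the full-speed spindle power, which is why intermediate speeds are attractive even when a full spin-down is not.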


DRPM: Advantages over TPM

- The large "minimum sleeping time" needed
  - Spinning the disk up or down takes a very long time
  - A huge energy penalty of spinning the disk up and down
- The very short idle times in server workloads


DRPM: Advantages over TPM

- Exploits much shorter idle periods
  - No need to fully spin down the disk
    - Saves time
    - Reduces the energy overhead (does it really?)
- The performance degradation due to the long wake-up time


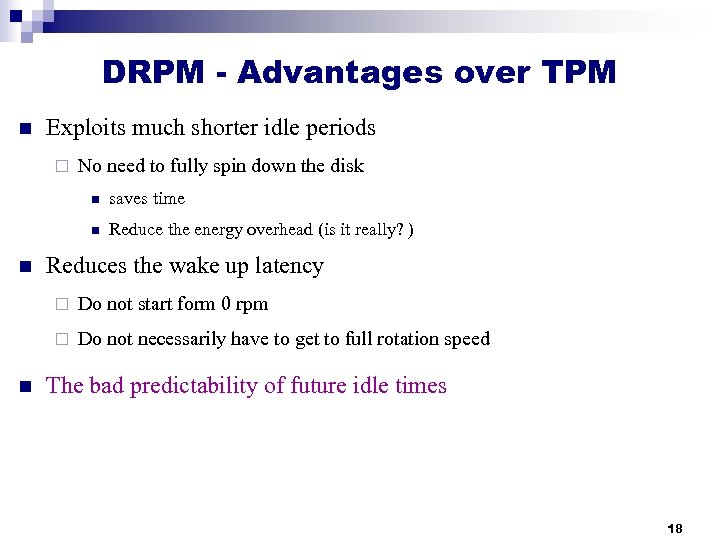

DRPM: Advantages over TPM

- Exploits much shorter idle periods
  - No need to fully spin down the disk
    - Saves time
    - Reduces the energy overhead (does it really?)
- Reduces the wake-up latency
  - Does not start from 0 rpm
  - Does not necessarily have to reach full rotation speed
- The poor predictability of future idle times


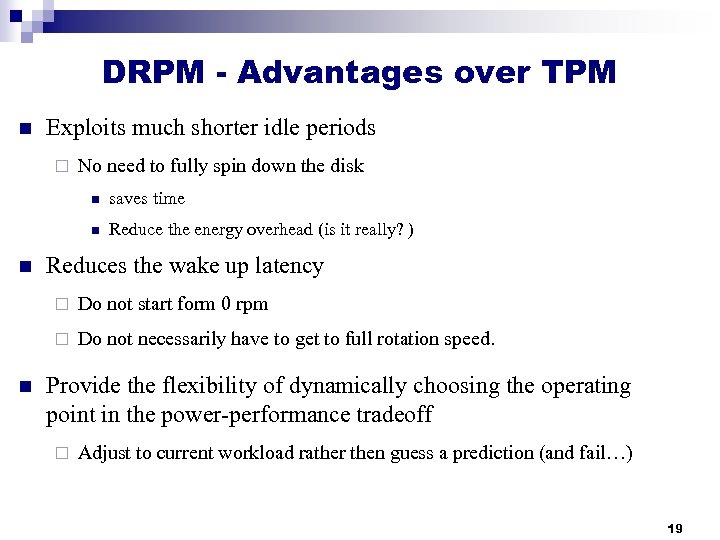

DRPM: Advantages over TPM

- Exploits much shorter idle periods
  - No need to fully spin down the disk
    - Saves time
    - Reduces the energy overhead (does it really?)
- Reduces the wake-up latency
  - Does not start from 0 rpm
  - Does not necessarily have to reach full rotation speed
- Provides the flexibility of dynamically choosing the operating point in the power-performance tradeoff
  - Adjusts to the current workload rather than guessing a prediction (and failing…)


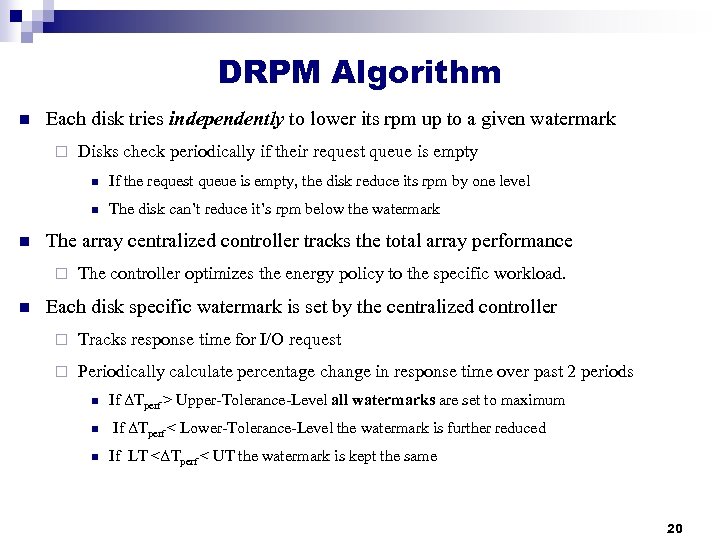

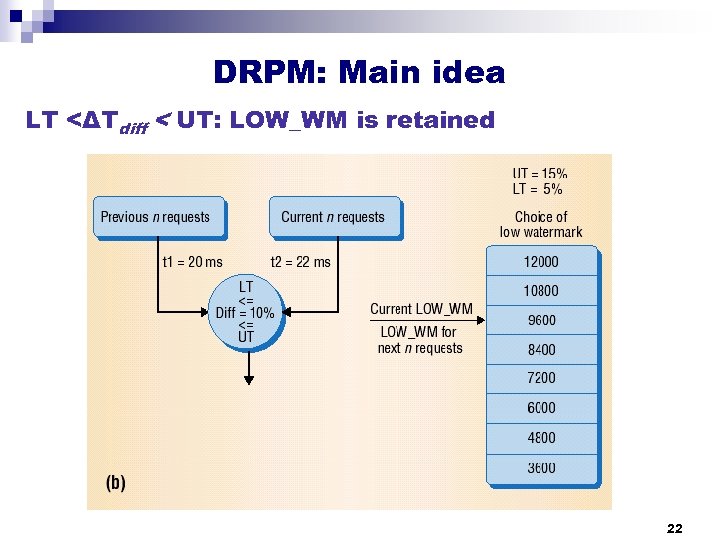

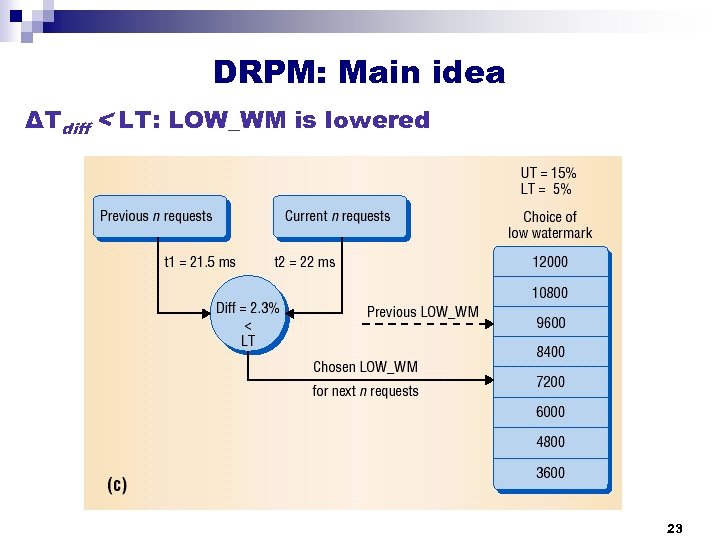

DRPM algorithm

- Each disk independently tries to lower its rpm, down to a given watermark
  - Disks periodically check whether their request queue is empty
    - If the request queue is empty, the disk reduces its rpm by one level
    - The disk can't reduce its rpm below the watermark
- The array's centralized controller tracks the total array performance
  - The controller optimizes the energy policy for the specific workload
- Each disk's watermark is set by the centralized controller
  - Tracks the response time of I/O requests
  - Periodically calculates the percentage change in response time over the past 2 periods
    - If ΔTperf > Upper-Tolerance-Level (UT), all watermarks are set to maximum
    - If ΔTperf < Lower-Tolerance-Level (LT), the watermark is further reduced
    - If LT < ΔTperf < UT, the watermark is kept the same
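The two-level heuristic can be sketched roughly as follows. Class and attribute names are hypothetical, and two details are assumptions that the slide does not spell out: the watermark drops by exactly one level when ΔTperf < LT, and a disk running below a newly raised watermark is brought up to it immediately.

```python
class Disk:
    def __init__(self, levels):
        self.levels = levels            # rpm levels in ascending order
        self.level = len(levels) - 1    # index of current level (start at full speed)
        self.watermark = 0              # lowest level index this disk may use
        self.queue = []                 # pending requests

    def periodic_check(self):
        """Per-disk step: if idle, drop one rpm level, never below the watermark."""
        if not self.queue and self.level > self.watermark:
            self.level -= 1


class ArrayController:
    def __init__(self, disks, lower_tol, upper_tol):
        self.disks = disks
        self.lower_tol = lower_tol      # LT, in percent
        self.upper_tol = upper_tol      # UT, in percent
        self.prev_response = None       # average response time of the last period

    def end_of_period(self, avg_response):
        """Array-level step: compare the last two periods and adjust watermarks."""
        if self.prev_response:
            delta = 100.0 * (avg_response - self.prev_response) / self.prev_response
            for d in self.disks:
                if delta > self.upper_tol:
                    # Performance got much worse: force full speed.
                    d.watermark = len(d.levels) - 1
                    d.level = d.watermark  # ASSUMPTION: speed up right away
                elif delta < self.lower_tol:
                    # Performance is fine: allow one more level of slowdown.
                    d.watermark = max(0, d.watermark - 1)
                # Otherwise (LT <= delta <= UT) keep the watermark unchanged.
        self.prev_response = avg_response
```

A simulator would call `periodic_check` on every disk at a short interval and `end_of_period` with the measured average response time at a longer one.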


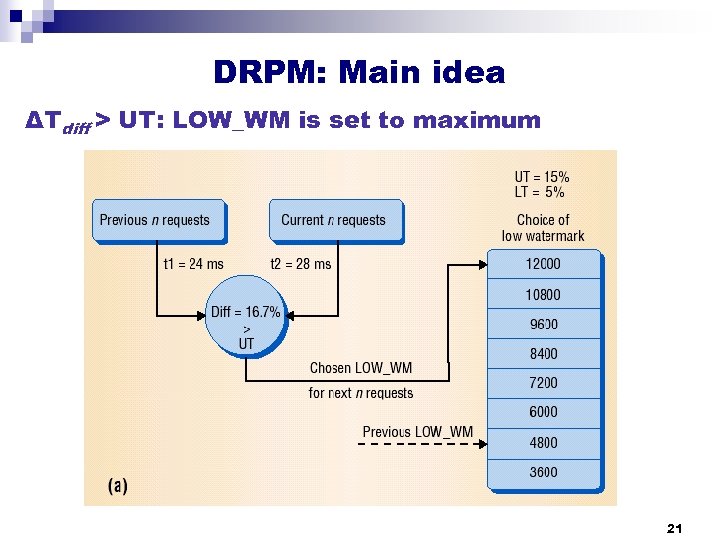

DRPM: Main idea (watermark adjustment cases)

- ΔTdiff > UT: LOW_WM is set to maximum
- LT < ΔTdiff < UT: LOW_WM is retained
- ΔTdiff < LT: LOW_WM is lowered

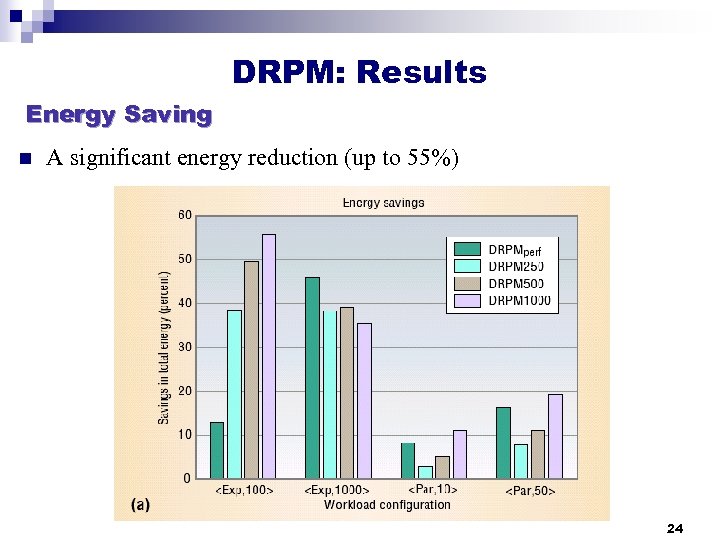

DRPM results: energy saving

- A significant energy reduction (up to 55%)


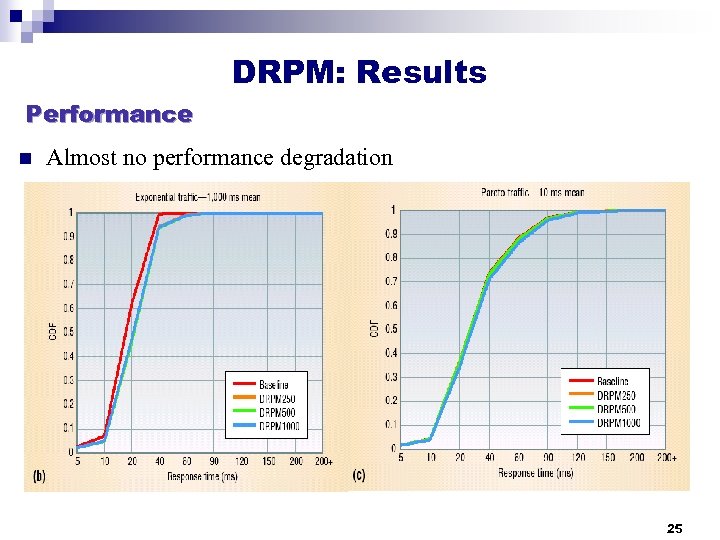

DRPM results: performance

- Almost no performance degradation


DRPM technology problems

Technology isn't quite there yet:
- Providing speed control
- Head fly height
- Head-positioning servo and data channel design
  - Sampling frequency for servo control
- Effect of DRPM on reliability and MTTF

But we're getting closer!
- Sony's Multimedia Hard Disk Drive:
  - Supports 2 rotational speeds
  - Pre-configured (can't be changed dynamically)


DRPM: Summary

- Dynamically control the rotational speed
  - Exploit the short idle periods
- Allows better optimizations than TPM techniques
- Reduces energy without significant performance degradation
- Technology isn't quite there yet
- The heuristic is very naïve!
  - A lot of room for improvement


Power Aware Cache

- "PB-LRU: A Self-Tuning Power Aware Storage Cache Replacement Algorithm for Conserving Disk Energy". Qingbo Zhu, Asim Shankar, and Yuanyuan Zhou. In Proceedings of the 18th International Conference on Supercomputing (ICS'04), June 26-July 1, 2004, Saint-Malo, France. http://www-faculty.cs.uiuc.edu/~yyzhou/paper/ICS04.pdf
- "Reducing energy consumption of disk storage using power-aware cache management". Q. Zhu, F. M. David, C. F. Devaraj, Z. Li, Y. Zhou, and P. Cao. In 10th International Symposium on High Performance Computer Architecture (HPCA-10), February 14-18, 2004, Madrid, Spain. http://carmen.cs.uiuc.edu/paper/HPCA04.pdf


Power Aware Cache: fundamental observations

- File access frequencies are highly skewed
  - Not all files enjoy the same popularity
- The workload is not equally distributed among all disks


Power Aware Cache: the storage cache's role

- The cache policy directly affects the disk array's energy consumption
  - Generates the disk access sequences
  - Can increase disk idle time and provide more opportunities for energy saving
- Power management schemes change I/O response time
  - I/O response time of an idle disk is huge (10.9 sec to spin up)
  - The cache miss penalty is no longer constant
  - Awareness of the power management policy will yield better performance


Power Aware Cache: main idea

- The large "minimum sleeping time" needed
  - Spinning the disk up or down takes a very long time
  - A huge energy penalty of spinning the disk up and down
- The very short idle times in server workloads
- The performance degradation due to the long wake-up time


Power Aware Cache: main idea

- Selectively keep blocks from "inactive" disks in the cache longer
  - Extend the idle period length of those disks
    - Allow longer low-power modes
- Divide the entire cache into separate partitions, one for each disk
  - Each partition is managed using LRU
- Find the partitioning that will minimize energy consumption
  - Reduce the partition size for active disks
  - Increase the partition size for inactive disks


PB-LRU algorithm

- For each disk, maintain the energy consumption that would have been used under every partition size
  - Done at run-time
- Periodically, find the energy-optimal partitioning
  - A form of the Multiple-Choice Knapsack Problem (MCKP)
  - NP-hard


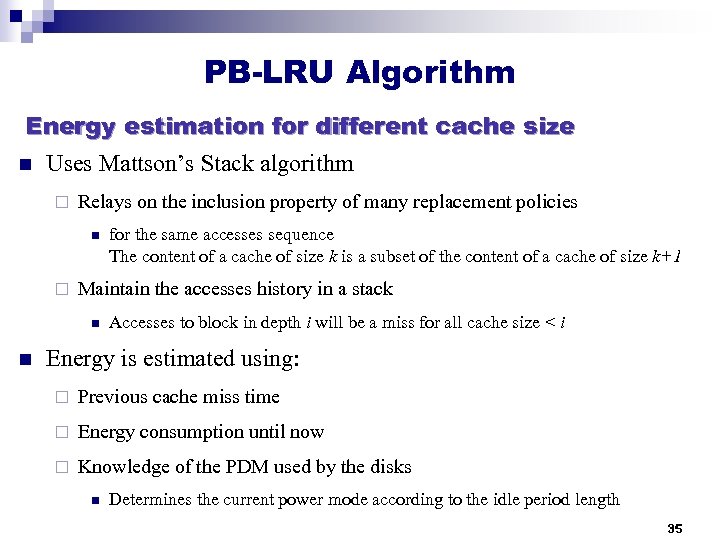

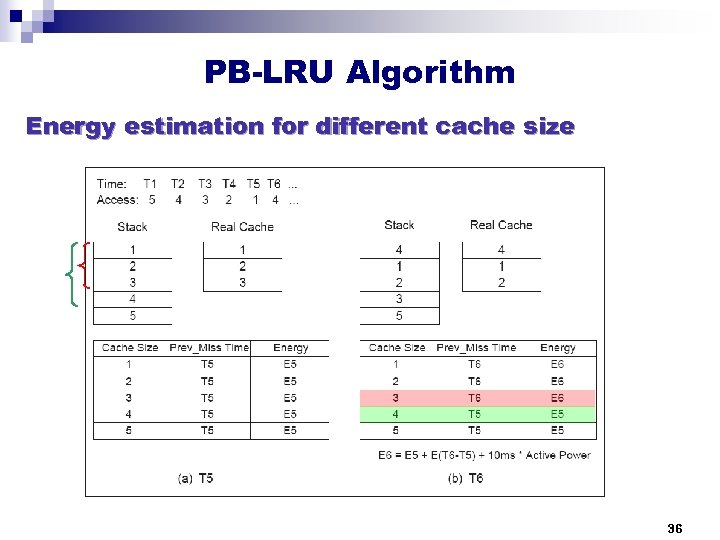

PB-LRU algorithm: energy estimation for different cache sizes

- Uses Mattson's stack algorithm
  - Relies on the inclusion property of many replacement policies
    - For the same access sequence, the content of a cache of size k is a subset of the content of a cache of size k+1
  - Maintains the access history in a stack
    - An access to a block at depth i will be a miss for all cache sizes < i
- Energy is estimated using:
  - Previous cache miss time
  - Energy consumption until now
  - Knowledge of the DPM used by the disks
    - Determines the current power mode according to the idle period length
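A minimal sketch of Mattson's stack algorithm for LRU, showing how a single pass over a trace yields the miss count for every cache size at once. It covers only the miss-counting part; PB-LRU additionally turns each simulated miss into an energy estimate, which is not modelled here. The function name and the list-based stack are illustrative choices.

```python
def lru_misses_by_size(trace, max_size):
    """Return {k: misses of an LRU cache holding k blocks} for k = 1..max_size."""
    stack = []                              # LRU stack, most recently used first
    hits_at_depth = [0] * (max_size + 1)    # index = 1-based stack depth
    for block in trace:
        if block in stack:
            depth = stack.index(block) + 1
            # By the inclusion property, an access at depth i hits in every
            # cache of size >= i and misses in every smaller one.
            if depth <= max_size:
                hits_at_depth[depth] += 1
            stack.remove(block)
        stack.insert(0, block)              # the block becomes most recently used
    misses = {}
    hits = 0
    for k in range(1, max_size + 1):
        hits += hits_at_depth[k]
        misses[k] = len(trace) - hits
    return misses
```

For the trace `a b a c b a`, a 2-block LRU cache misses 5 times and a 3-block cache misses 3 times, and both numbers come out of the same pass.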


PB-LRU algorithm: energy estimation for different cache sizes


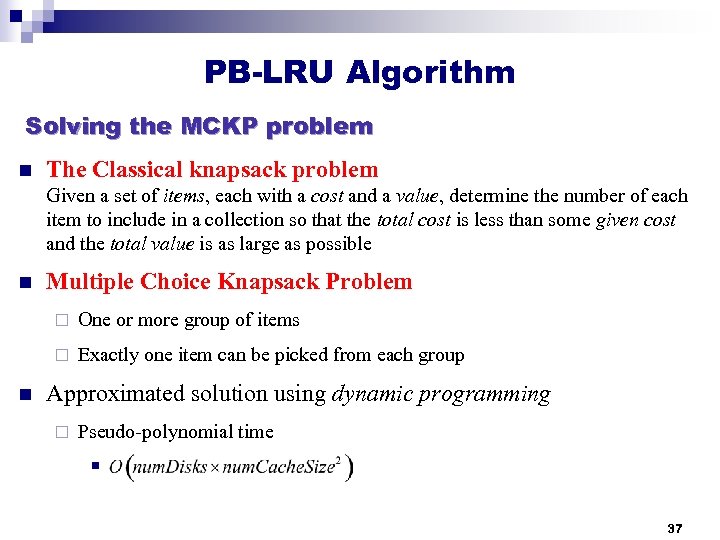

PB-LRU algorithm: solving the MCKP

- The classical knapsack problem: given a set of items, each with a cost and a value, determine the number of each item to include in a collection so that the total cost does not exceed a given limit and the total value is as large as possible
- Multiple-Choice Knapsack Problem
  - One or more groups of items
  - Exactly one item can be picked from each group
- Approximate solution using dynamic programming
  - Pseudo-polynomial time
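One way to picture the partitioning step is a small dynamic program over disks and cache capacity. This is a sketch under stated assumptions, not the paper's implementation: partition sizes are discretized into whole units, `energy[d][s]` is a hypothetical table of estimated energy for disk `d` with `s` units of cache, and the objective is minimum total energy rather than maximum value.

```python
def best_partitioning(energy, total_size):
    """Pick exactly one partition size per disk, using at most total_size units.

    energy[d][s] is the estimated energy of disk d when given s cache units.
    Returns (minimum total energy, list of chosen sizes, one per disk).
    Runs in O(disks * total_size * sizes), i.e. pseudo-polynomial time.
    """
    inf = float("inf")
    # best[c] = (energy, choices) for the disks handled so far with <= c units.
    best = [(0.0, [])] * (total_size + 1)
    for options in energy:                     # one MCKP group per disk
        nxt = [(inf, None)] * (total_size + 1)
        for c in range(total_size + 1):
            for s, e in enumerate(options):    # candidate size s for this disk
                if s > c:
                    break
                prev_e, prev_choice = best[c - s]
                if prev_choice is not None and prev_e + e < nxt[c][0]:
                    nxt[c] = (prev_e + e, prev_choice + [s])
        best = nxt
    return best[total_size]
```

With two disks and three cache units, `best_partitioning([[10, 6, 5, 5], [20, 12, 4, 3]], 3)` gives one unit to the first disk and two to the second, because the second disk's energy drops sharply once it holds two units.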


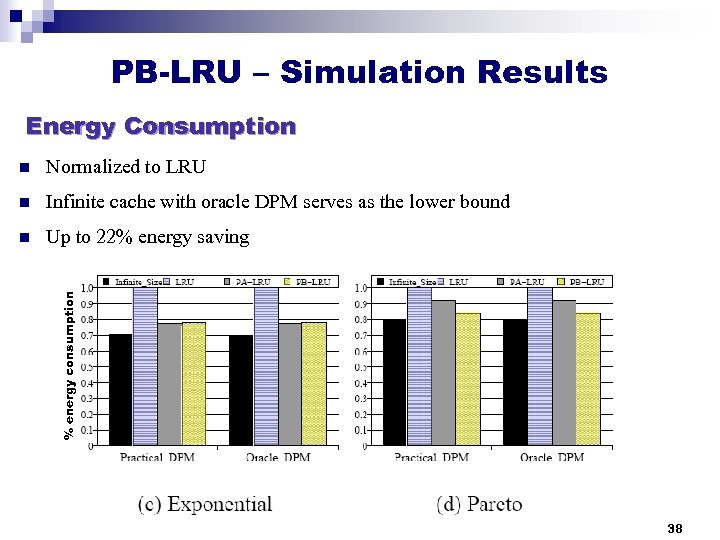

PB-LRU simulation results: energy consumption

- Normalized to LRU
- Infinite cache with oracle DPM serves as the lower bound
- Up to 22% energy saving


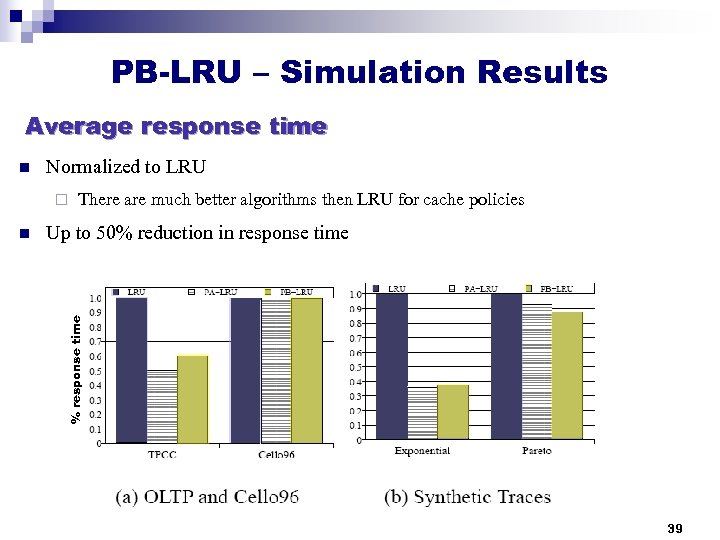

PB-LRU simulation results: average response time

- Normalized to LRU
  - Up to 50% reduction in response time
- There are much better cache replacement algorithms than LRU


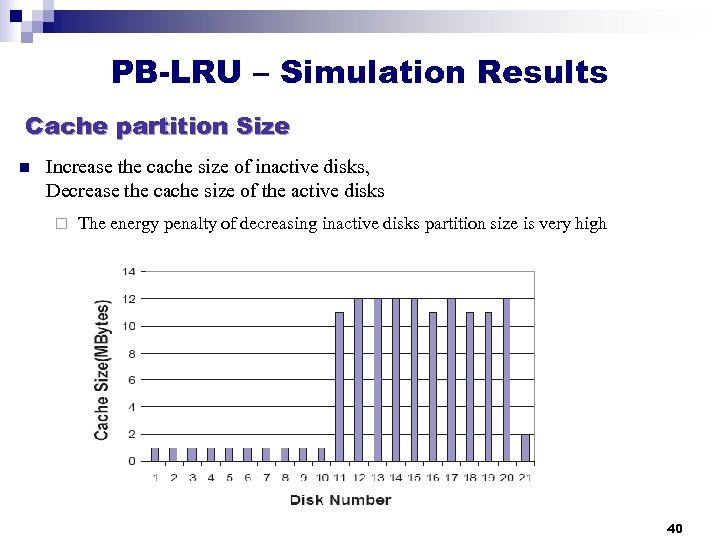

PB-LRU simulation results: cache partition size

- Increase the cache size of inactive disks, decrease the cache size of active disks
  - The energy penalty of decreasing an inactive disk's partition size is very high


PB-LRU soft spots

- Performance issues:
  - The algorithm is oblivious to performance
    - Performance is a by-product, not a design goal
  - Lack of detailed analysis of the effect of DPM on power-oblivious caches
    - Average I/O access time with and without DPM
- Breaks the encapsulation of the storage hierarchy


Power Aware Cache: Summary

- Selectively keep blocks from "inactive" disks in the cache longer
  - Extend the idle period length of those disks
    - Allow longer low-power modes
- Cache targeted at minimizing energy consumption
  - Must meet the performance requirements
- Quickly adaptive, elegant, online algorithm
- Very promising approach


Popular Data Concentration

- "Energy conservation techniques for disk array-based servers". Eduardo Pinheiro and Ricardo Bianchini. In Proceedings of the 18th International Conference on Supercomputing (ICS'04), June 26-July 1, 2004, Saint-Malo, France. http://www.cs.rutgers.edu/~ricardob/papers/ics04.ps.gz
- "Massive Arrays of Idle Disks For Storage Archives". Dennis Colarelli and Dirk Grunwald. In Proceedings of the 15th High Performance Networking and Computing Conference, November 2002, Baltimore, Maryland. http://sc-2002.org/paperpdfs/pap.pap312.pdf


PDC: Popular Data Concentration

Fundamental observation:
- File access frequencies are highly skewed
  - Not all files enjoy the same popularity

Main idea:
- The large "minimum sleeping time" needed
  - Spinning the disk up or down takes a very long time
  - A huge energy penalty of spinning the disk up and down
- The very short idle times in server workloads


PDC: Popular Data Concentration

Fundamental observation:
- File access frequencies are highly skewed
  - Not all files enjoy the same popularity

Main idea:
- Dynamically migrate popular data to a subset of the disks in the array
- Skew the load towards a few of the disks
  - Other disks will have longer idle times
    - Can be switched to a low-power mode for longer periods
- Applied periodically to adjust to data popularity changes
- Limits the load of the popular disks
  - Prevents performance degradation due to local congestion of accesses


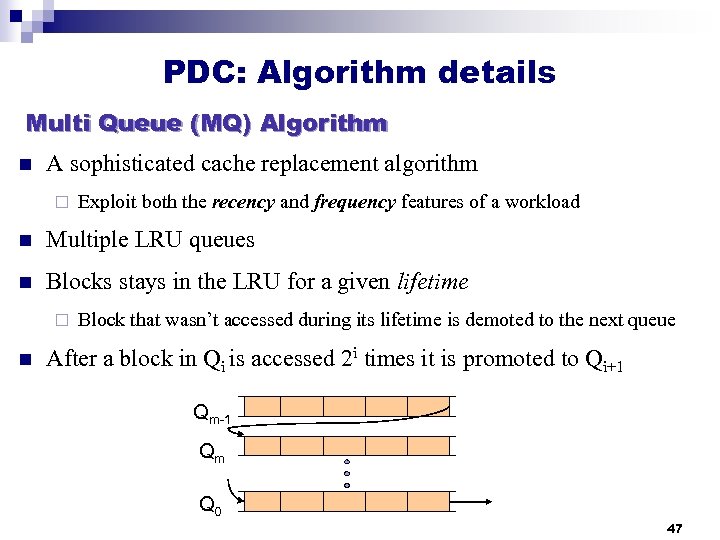

PDC algorithm details: the Multi-Queue (MQ) algorithm

- A sophisticated cache replacement algorithm
  - Exploits both the recency and frequency features of a workload
- Multiple LRU queues (Q0 … Qm-1)
- A block stays in its LRU queue for a given lifetime
  - A block that wasn't accessed during its lifetime is demoted to the next lower queue
- After a block in Qi has been accessed 2^i times, it is promoted to Qi+1
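The promotion and demotion rules can be sketched as below. This is a simplified illustration, not the published MQ algorithm: there is no capacity limit or eviction, time is a logical access counter, per-queue hit counts restart on every move, and all names are hypothetical.

```python
from collections import OrderedDict


class MultiQueue:
    def __init__(self, num_queues, lifetime):
        # Each queue is ordered from least to most recently used.
        self.queues = [OrderedDict() for _ in range(num_queues)]
        self.lifetime = lifetime
        self.now = 0      # logical clock: one tick per access
        self.info = {}    # block -> [queue index, hits in that queue, expiry time]

    def _place(self, block, q, hits):
        self.queues[q][block] = True    # append at the MRU end
        self.info[block] = [q, hits, self.now + self.lifetime]

    def access(self, block):
        self.now += 1
        if block in self.info:
            q, hits, _ = self.info[block]
            del self.queues[q][block]
            hits += 1
            # Promote after 2^q accesses while in queue q (if a higher queue exists).
            if hits >= 2 ** q and q + 1 < len(self.queues):
                q, hits = q + 1, 0
        else:
            q, hits = 0, 0
        self._place(block, q, hits)
        self._demote_expired()

    def _demote_expired(self):
        # Check only the LRU head of each queue, lowest queue first.
        for q in range(1, len(self.queues)):
            if not self.queues[q]:
                continue
            head = next(iter(self.queues[q]))
            if self.info[head][2] < self.now:
                del self.queues[q][head]
                self._place(head, q - 1, 0)

    def ranking(self):
        """Blocks from most to least popular: highest queue first, MRU first."""
        out = []
        for queue in reversed(self.queues):
            out.extend(reversed(queue))
        return out
```

The `ranking` method corresponds to walking the queues from Qm-1 down to Q0, which is the order PDC uses when deciding what to migrate.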


PDC: Algorithm details

- Maintain block reference history in an MQ rank list
- Periodically migrate files to disks based on the MQ rank list
  - Migration period is half an hour
  - Traverse the MQ list from head to tail (Qm-1 to Q0)
  - Migrate files to the same disk until reaching the maximum allowed load
    - Expected file load is estimated as [equation not recoverable]

Power conservation techniques

- Two methods compared
  - Spin down the disk after a fixed idleness period (TPM)
  - Use 2-speed disks
    - Disks under a low load switch to the lower rpm (a DRPM wannabe)
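The migration step amounts to filling disks in popularity order under a per-disk load cap. The sketch below assumes the per-file expected load is already available as a number (the slide's estimation formula is not reproduced here); the function name, the `load` mapping, and the overflow behaviour of the last disk are all illustrative assumptions.

```python
def place_files(ranked_files, load, num_disks, max_load):
    """Assign files to disks, most popular first.

    ranked_files: file ids ordered from most to least popular (MQ rank order).
    load: dict mapping file id -> expected load (hypothetical units).
    Returns a list with one list of file ids per disk.
    """
    disks = [[] for _ in range(num_disks)]
    used = [0] * num_disks
    d = 0
    for f in ranked_files:
        # Move to the next disk once the current one would exceed its cap.
        # An empty disk always accepts a file, and the last disk takes
        # whatever remains (ASSUMPTION: the cap is not enforced there).
        while d < num_disks - 1 and disks[d] and used[d] + load[f] > max_load:
            d += 1
        disks[d].append(f)
        used[d] += load[f]
    return disks
```

Popular files end up concentrated on the first disks, and any disks left empty or lightly loaded at the end are the candidates for a low-power mode.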


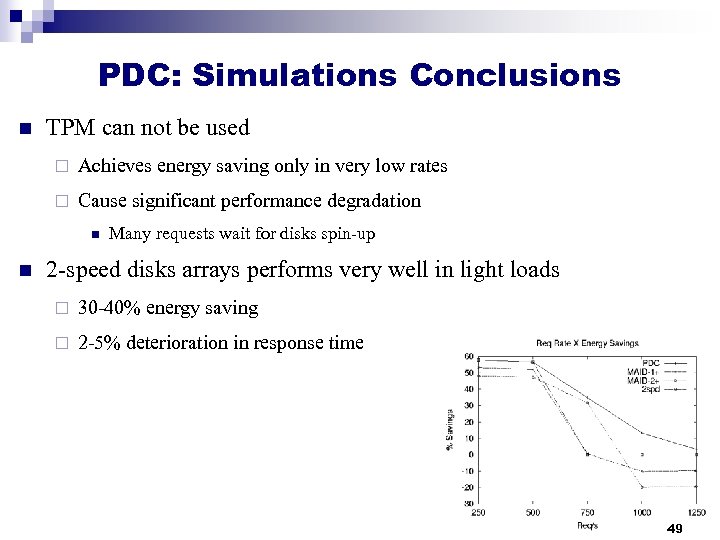

PDC: Simulation conclusions

- TPM cannot be used
  - Achieves energy savings only at very low request rates
  - Causes significant performance degradation
    - Many requests wait for disk spin-up
- Two-speed disk arrays perform very well under light loads
  - 30-40% energy saving
  - 2-5% deterioration in response time
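To see why a fixed-threshold spin-down policy makes requests wait, consider a toy model of one disk under TPM. This is a rough sketch under strong assumptions and not the simulator used in the paper: service time is taken as zero, and the function name, the threshold and the spin-up time are all hypothetical.

```python
def tpm_stats(arrivals, idle_threshold, spinup_time):
    """Toy fixed-threshold spin-down model for a single disk.

    arrivals: sorted request arrival times in seconds.
    Returns (spin_downs, delayed_requests, standby_seconds).
    """
    spin_downs = 0
    delayed = 0
    standby = 0.0
    last_busy = 0.0  # time of the last request, or end of the last spin-up
    for t in arrivals:
        if t < last_busy:
            # The disk is still spinning up, so this request waits too.
            delayed += 1
            continue
        idle = t - last_busy
        if idle > idle_threshold:
            # The disk spun down after idle_threshold seconds of idleness;
            # this request wakes it and pays the spin-up latency.
            spin_downs += 1
            standby += idle - idle_threshold
            delayed += 1
            last_busy = t + spinup_time
        else:
            last_busy = t
    return spin_downs, delayed, standby


# Hypothetical trace: a burst, a long gap, another burst, another gap.
stats = tpm_stats([1, 2, 30, 31, 35, 100], idle_threshold=10, spinup_time=6)
```

In this made-up trace four of the six requests are delayed, because every request that lands during a spin-up waits along with the one that triggered it.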

PDC: Soft spots

- The migration process adds unnecessary block transfers
  - Pure energy overhead
  - Temporarily increases the disks’ load
- The algorithm is far from perfect
  - The estimation of a file’s contribution to the disk load is pretty lame
  - The fact that TPM is useless is fishy
- The unbalanced load puts too much stress on a subset of the disks
  - Reliability issues

PDC: Summary

- Dynamically migrate popular data to a subset of the disks in the array
- Skew the load towards a few of the disks
  - More power optimization opportunities for the unemployed disks
- Integrates well with DRPM techniques
- Very intuitive and hence very promising
  - A lot of work left to be done

Agenda

- Motivation
- The server world’s special characteristics
  - Why the mobile world approach doesn’t work
- 3 different solutions
  - Dynamically modulate the disk speed
  - Power Aware Cache
  - Popular Data Concentration
- Summary

Summary: What have we seen

- DRPM
  - Dynamically change the rotation speed of the disk
    - Yields more energy savings by better exploiting the short idle times
- Power Aware Cache techniques (PB-LRU)
  - Extend the idle periods of inactive disks
    - Allow longer power-save modes
- Popular Data Concentration (PDC)
  - Skew the load towards a few of the disks
    - Create inactive disks with long idle periods

Summary

- All papers pinpoint and tackle the same problems
  - The very short idle times in server workloads
  - The performance degradation due to the long wake-up time
- The ICS’04 papers are based on the same basic solution
  - Exploit the skew in the popularity of files and disks in the array
  - Each paper weaves its own path, though
- Each paper approaches a different level of the system
  - DRPM works at the disk level
  - PB-LRU studies the cache
  - PDC alters the intermediate level (the disk array’s centralized controller)
    - Negligible intersection with DRPM

Summary: Integration time (editor’s pick)

- All disks use DRPM
- The storage cache applies the Power Aware Cache algorithm
- Popular Data Concentration is used to further skew the load
- The few busiest disks should be MEMS storage devices
  - They can cope with the very high load
  - An economical solution, as only a few of them are used

Summary: A super hot research field

- Works are still preliminary
- Many aspects were never touched
  - RAID has not been examined yet
  - Each niche has its own special needs
    - Different workloads with different behaviors
    - One size doesn’t fit all
- Simulations and results are not convincing
  - Lack of simulation tools for huge disk arrays
- Thesis-quality material

Any questions?
