Power and Cooling at Texas Advanced Computing Center
Tommy Minyard, Ph.D.
Director of Advanced Computing Systems
42nd HPC User Forum
September 8, 2011

TACC Mission & Strategy
The mission of the Texas Advanced Computing Center is to enable scientific discovery and enhance society through the application of advanced computing technologies.
To accomplish this mission, TACC:
– Evaluates, acquires & operates advanced computing systems
– Provides training, consulting, and documentation to users
– Collaborates with researchers to apply advanced computing techniques
– Conducts research & development to produce new computational technologies
Resources & Services
Research & Development

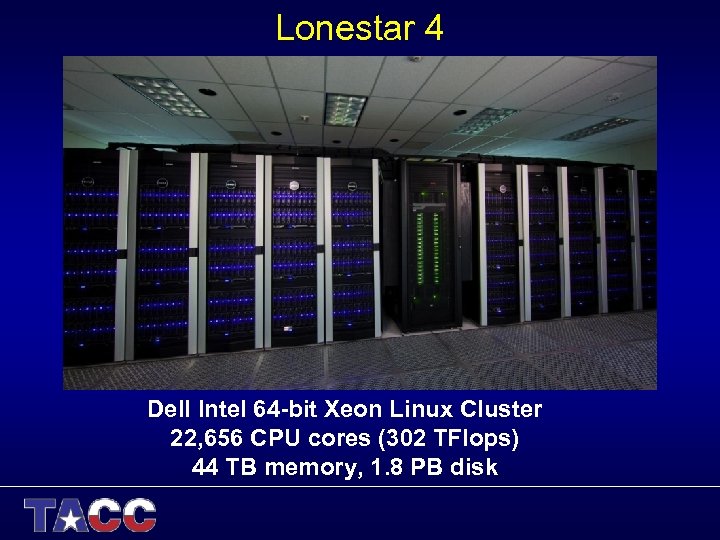

Recent History of Systems at TACC
• 2001 – IBM Power 4 system, 1 TFlop, ~300 kW
• 2003 – Dell Linux cluster, 5 TFlops, ~300 kW
• 2006 – Dell Linux blade cluster, 62 TFlops, ~500 kW, 16 kW per rack
• 2008 – Sun Linux blade cluster, Ranger, 579 TFlops, 2.4 MW, 30 kW per rack
• 2011 – Dell Linux blade cluster, Lonestar 4, 302 TFlops, 800 kW, 20 kW per rack
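
The figures in this list can be turned into a rough performance-per-watt trend. The sketch below is only an illustration built from the slide's peak TFlops and power numbers (several of which are marked approximate); the function name `mflops_per_watt` and the table layout are assumptions made for this example, not part of the original talk.

```python
# Figures as quoted on the slide: (year, system, peak TFlops, power in kW).
# The 2001-2006 power values are marked approximate ("~") in the source.
SYSTEMS = [
    (2001, "IBM Power 4 system", 1, 300),
    (2003, "Dell Linux cluster", 5, 300),
    (2006, "Dell Linux blade cluster", 62, 500),
    (2008, "Ranger", 579, 2400),
    (2011, "Lonestar 4", 302, 800),
]


def mflops_per_watt(tflops, kilowatts):
    """Peak MFlops per watt of system power (hypothetical helper)."""
    # 1 TFlop = 1e6 MFlops, 1 kW = 1e3 W
    return (tflops * 1e6) / (kilowatts * 1e3)


for year, name, tflops, kilowatts in SYSTEMS:
    efficiency = mflops_per_watt(tflops, kilowatts)
    print(f"{year}  {name:26s} {efficiency:7.1f} MFlops/W")
```

On these numbers, peak efficiency rises from about 3 MFlops/W in 2001 to about 378 MFlops/W for Lonestar 4, while total power per system also grows.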

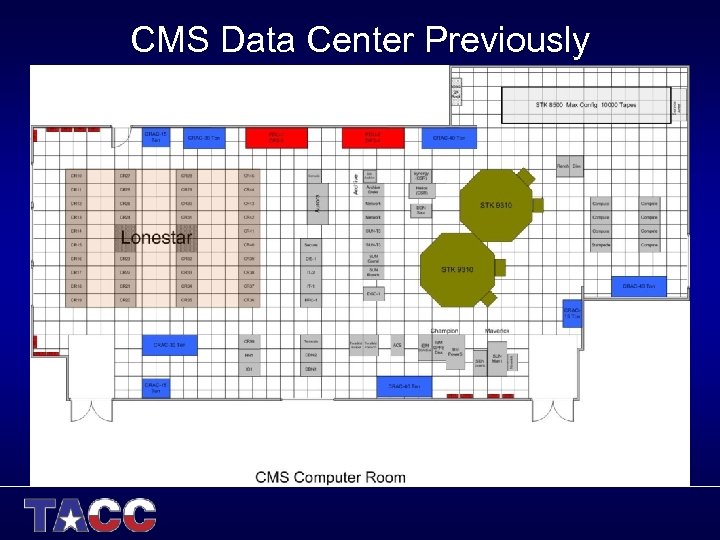

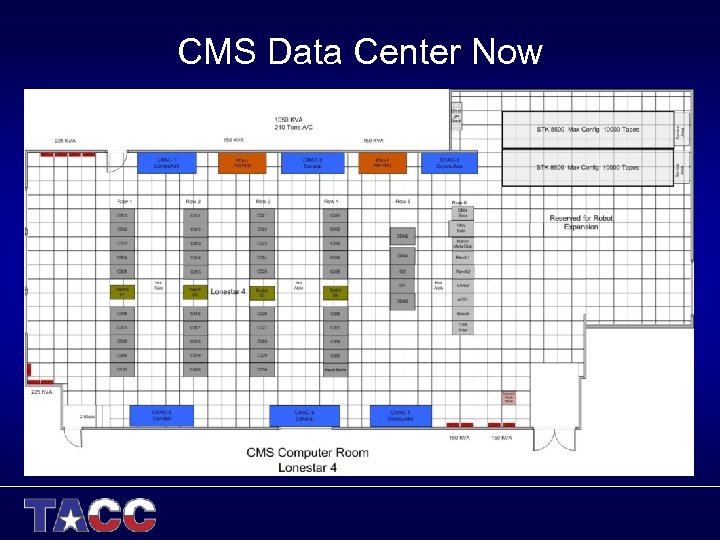

TACC Data Centers
• Commons Center (CMS)
  – Originally built in 1986 with 3,200 sq. ft.
  – Designed to house large Cray systems
  – Retrofitted multiple times to increase power/cooling infrastructure, ~1 MW total power
  – 18” raised floor, standard CRAC cooling units
• Research Office Complex (ROC)
  – Built in 2007 as part of new office building
  – 6,400 sq. ft., 1 MW original designed power
  – Refitted to support 4 MW total power for Ranger
  – 30” raised floor, CRAC and APC In-Row Coolers
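
As a rough illustration of what these retrofits mean for floor power density, the sketch below divides the quoted total power by the quoted floor area. It assumes the floor areas on the slide still apply after the retrofits and that "total power" is spread evenly over the room, neither of which the slide states; `watts_per_sq_ft` is a hypothetical helper.

```python
def watts_per_sq_ft(total_mw, area_sq_ft):
    """Average power density in W per square foot (hypothetical helper)."""
    return total_mw * 1e6 / area_sq_ft


# (total power in MW, floor area in sq. ft.) as quoted on the slide.
ROOMS = {
    "CMS, after retrofits (~1 MW)": (1.0, 3200),
    "ROC, as designed (1 MW)": (1.0, 6400),
    "ROC, refitted for Ranger (4 MW)": (4.0, 6400),
}

for label, (total_mw, area) in ROOMS.items():
    print(f"{label:34s} {watts_per_sq_ft(total_mw, area):6.1f} W/sq. ft.")
```

Under these assumptions the ROC refit takes the room from roughly 156 W/sq. ft. to 625 W/sq. ft.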

CMS Data Center Previously

CMS Data Center Now

Lonestar 4
Dell Intel 64-bit Xeon Linux Cluster
22,656 CPU cores (302 TFlops)
44 TB memory, 1.8 PB disk

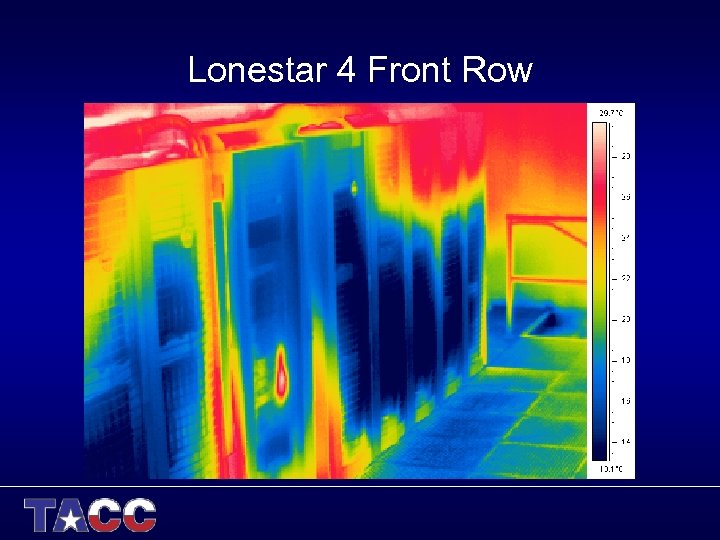

Lonestar 4 Front Row

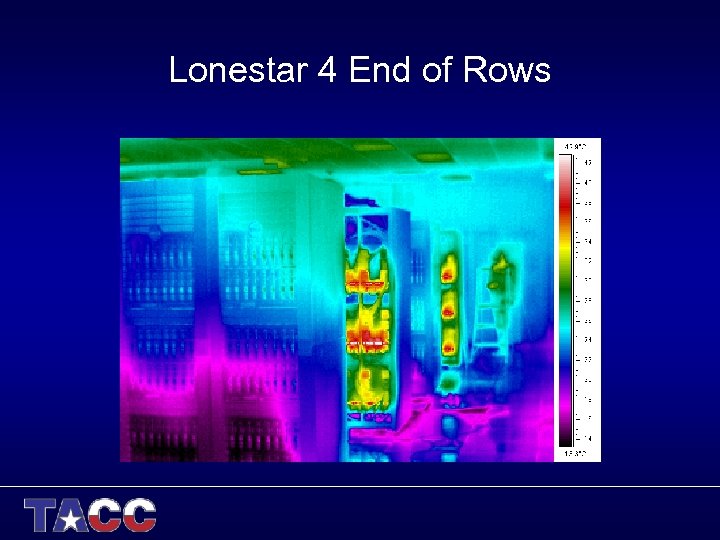

Lonestar 4 End of Rows

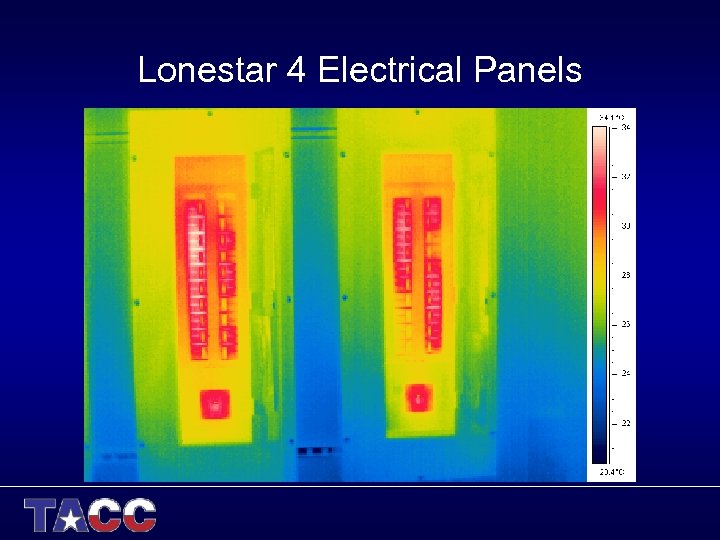

Lonestar 4 Electrical Panels

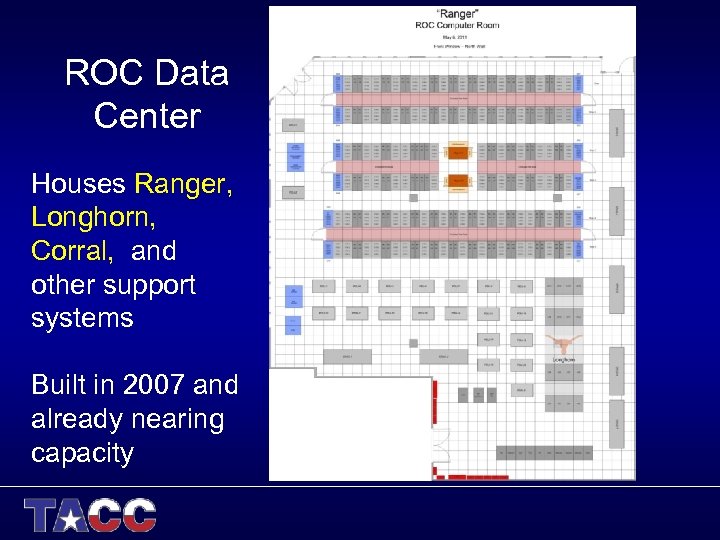

ROC Data Center
Houses Ranger, Longhorn, Corral, and other support systems
Built in 2007 and already nearing capacity

Ranger

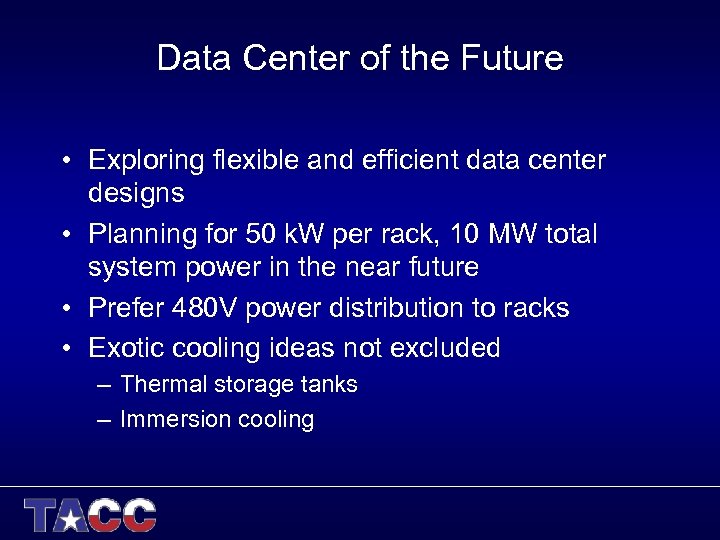

Data Center of the Future
• Exploring flexible and efficient data center designs
• Planning for 50 kW per rack, 10 MW total system power in the near future
• Prefer 480 V power distribution to racks
• Exotic cooling ideas not excluded
  – Thermal storage tanks
  – Immersion cooling
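
To give a feel for these planning numbers, the sketch below estimates how many 50 kW racks fit in a 10 MW budget and the line current a single rack would draw. It assumes balanced three-phase feeds and unity power factor, and it uses 208 V only as a comparison point; none of these assumptions come from the slide, and the function names are hypothetical.

```python
import math


def racks_needed(total_kw, kw_per_rack):
    """Number of racks if every rack runs at the planned density."""
    return math.ceil(total_kw / kw_per_rack)


def line_current_amps(power_kw, line_voltage, power_factor=1.0):
    """Line current for a balanced three-phase load: I = P / (sqrt(3) * V * PF)."""
    return power_kw * 1000 / (math.sqrt(3) * line_voltage * power_factor)


print("Racks for 10 MW at 50 kW per rack:", racks_needed(10_000, 50))

# 480 V is the preference stated on the slide; 208 V is an assumed comparison.
for volts in (480, 208):
    amps = line_current_amps(50, volts)
    print(f"50 kW rack at {volts} V three-phase: {amps:.0f} A per line")
```

Under these assumptions a 50 kW rack draws about 60 A per line at 480 V versus about 139 A at 208 V, which suggests one reason higher-voltage distribution is attractive at this density.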

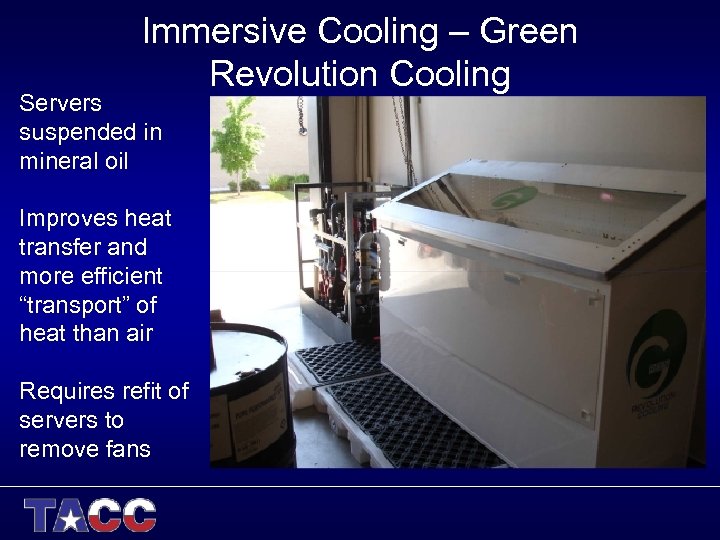

Immersion Cooling – Green Revolution Cooling
Servers suspended in mineral oil
Improves heat transfer and “transports” heat more efficiently than air
Requires refit of servers to remove fans
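
The heat "transport" point can be illustrated with the sensible-heat relation Q = P / (ρ · c_p · ΔT), which gives the coolant flow needed to carry away a given load. The sketch below uses approximate textbook fluid properties and a 10 K coolant temperature rise; these values are assumptions for illustration, not measurements from TACC or Green Revolution Cooling, and the 30 kW load is simply the per-rack figure quoted for Ranger.

```python
# Approximate room-temperature properties (assumed textbook values).
AIR_DENSITY = 1.2      # kg/m^3
AIR_CP = 1005.0        # J/(kg*K)
OIL_DENSITY = 850.0    # kg/m^3, typical mineral oil
OIL_CP = 1670.0        # J/(kg*K), typical mineral oil


def flow_m3_per_s(heat_watts, density, specific_heat, delta_t_kelvin):
    """Volumetric coolant flow needed to absorb heat_watts at a given temperature rise."""
    return heat_watts / (density * specific_heat * delta_t_kelvin)


RACK_HEAT_W = 30_000   # 30 kW per rack, as quoted for Ranger
DELTA_T = 10.0         # assumed coolant temperature rise in K

air_flow = flow_m3_per_s(RACK_HEAT_W, AIR_DENSITY, AIR_CP, DELTA_T)
oil_flow = flow_m3_per_s(RACK_HEAT_W, OIL_DENSITY, OIL_CP, DELTA_T)

print(f"Air: {air_flow:.2f} m^3/s")
print(f"Oil: {oil_flow * 1000:.2f} L/s")
print(f"Air needs about {air_flow / oil_flow:.0f}x the volume flow of oil")
```

With these assumed properties, oil carries on the order of a thousand times more heat per unit volume than air, so the same rack load needs a few litres of oil per second rather than a few cubic metres of air per second.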

Summary
• Data center and rack power densities are increasing
• Efficiency of delivering power and removing the heat generated is becoming a substantial concern
• Air cooling is reaching the limits of its cooling capability
• Future data centers will require more “exotic” or customized cooling solutions for very high power density
