c50e507fb073710cd942bf40db6ae991.ppt

- Number of slides: 70

POSTEC Lecture: Network Management, Chapter 4: SLA and QoS. May 6-27, 2008. Masayoshi Ejiri, Japan

Agenda
1. ICT Operations and Management
   - Service Industries
   - ICT Services and Networks
   - Target of the Management
2. Architecture, Function, Information Model and Business Process
   - ITU-T TMN (Telecommunications Management Network)
   - TeleManagement Forum Telecommunications Operations Map (TOM)
   - Multi-domain Management and System Integration
   - Standardization
3. OSS (Operations Support System) Development
   - Software Architecture, Key Technologies and Product Evaluation
4. SLA (Service Level Agreement) and QoS (Quality of Service)
   - SLA Definition, Reference Point and Policy-based Negotiation
5. IP/e-Business Management
   - Paradigm Shift, Architecture beyond TMN and Enhanced TOM
6. NGN (Next Generation Networks) Management
   - NGN Networks and Services, New Paradigm of ICT Business and Management

Agenda
- Service life cycle of QoS/SLA
- IP QoS and network performance
- QoS, QoE, SLA and OLA overviews
- SLA management
- SLA features
- SLA negotiation
- Security

Service Life Cycle and QoS/SLA
- ITU-T Recommendation M.3341: Requirements for QoS/SLA management over the TMN X-interface for IP-based services

Management of QoS and associated SLAs requires interaction between many telecom operations business processes and the TMN management services defined in ITU-T Rec. M.3200, as well as the TMN management function sets defined in ITU-T Rec. M.3400.

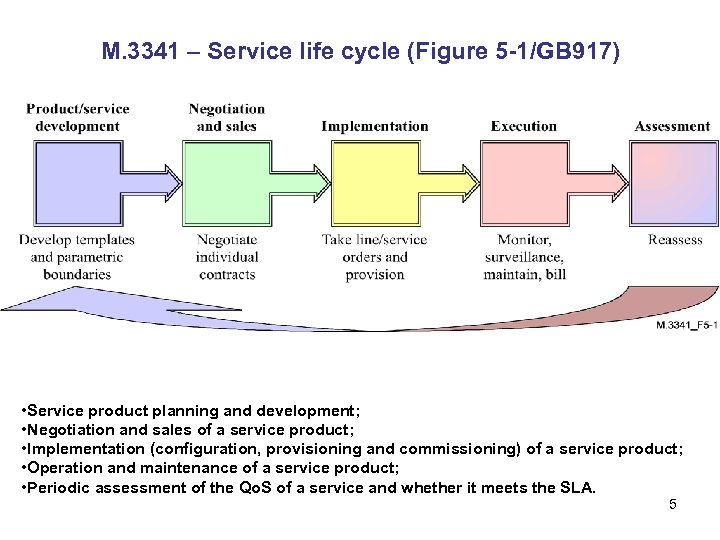

M.3341 – Service life cycle (Figure 5-1/GB 917)
- Service product planning and development;
- Negotiation and sales of a service product;
- Implementation (configuration, provisioning and commissioning) of a service product;
- Operation and maintenance of a service product;
- Periodic assessment of the QoS of a service and whether it meets the SLA.

QoS/SLA management interactions across the QMS interface (M.3341)

SC (Service Customer) initiated:
- Retrieve MPs
- Retrieve Obs
- Configure Ob
- Assign PM data collection interval
- Suspend/resume PM data collection
- Reset PM data
- Assign PM history duration
- Assign PM threshold (including severity)
- Request PM data (current or history)

SP (Service Provider) initiated/provided:
- Report MP configuration changes
- Report SP suspension of PM data collection
- Report PM threshold violation

Abbreviations: MP: Measurement Point; Ob: Observation; PM: Performance Management; QMS: QoS/SLA Management Services.
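
The pairing of the SC-initiated "Assign PM threshold (including severity)" request with the SP-provided "Report PM threshold violation" notification can be illustrated with a small sketch. The `Observation` and `Threshold` classes, their fields and the severity strings are hypothetical illustrations, not the M.3341 information model; the sketch also assumes that a violation means the measured value exceeds the threshold.

```python
from dataclasses import dataclass, field


@dataclass
class Threshold:
    value: float   # a measured value above this triggers a report
    severity: str  # hypothetical labels such as "minor" or "major"


@dataclass
class Observation:
    """Hypothetical Ob: QoS measurements between an ingress and an egress MP."""
    ob_id: str
    ingress_mp: str
    egress_mp: str
    thresholds: dict = field(default_factory=dict)  # PM parameter -> Threshold

    def assign_threshold(self, parameter: str, value: float, severity: str) -> None:
        """SC-initiated: assign PM threshold (including severity)."""
        self.thresholds[parameter] = Threshold(value, severity)

    def check(self, pm_data: dict) -> list:
        """SP-provided: one threshold-violation report per exceeded threshold."""
        reports = []
        for parameter, measured in pm_data.items():
            threshold = self.thresholds.get(parameter)
            if threshold is not None and measured > threshold.value:
                reports.append({
                    "ob": self.ob_id,
                    "parameter": parameter,
                    "measured": measured,
                    "severity": threshold.severity,
                })
        return reports


ob = Observation("ob-1", "mp-ingress", "mp-egress")
ob.assign_threshold("IPTD_ms", 100.0, "major")
print(ob.check({"IPTD_ms": 130.0, "IPLR": 0.0}))
```

Parameters without an assigned threshold (here IPLR) are simply never reported.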

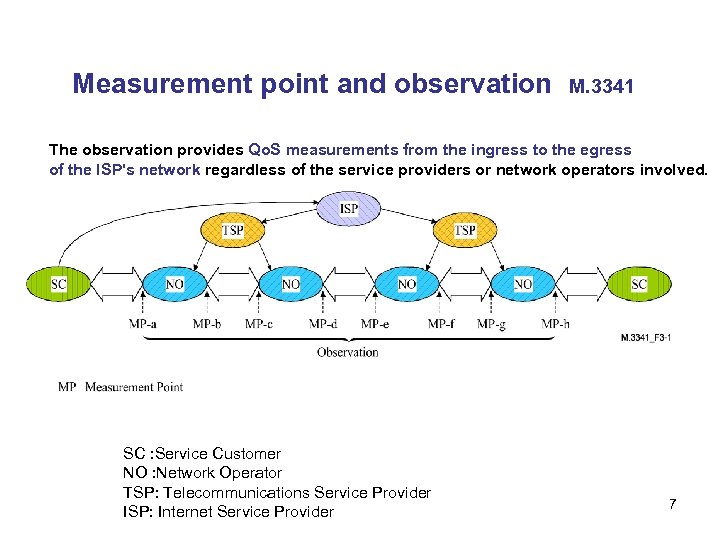

Measurement point and observation (M.3341)

The observation provides QoS measurements from the ingress to the egress of the ISP's network, regardless of the service providers or network operators involved.

Abbreviations: SC: Service Customer; NO: Network Operator; TSP: Telecommunications Service Provider; ISP: Internet Service Provider.

IP QoS-related Y-series Recommendations
- Y.1540: Internet protocol data communication service – IP packet transfer and availability performance parameters
- Y.1541: Network performance objectives for IP-based services

Note: SERIES Y: GLOBAL INFORMATION INFRASTRUCTURE, INTERNET PROTOCOL ASPECTS AND NEXT-GENERATION NETWORKS; Internet protocol aspects – Quality of service and network performance.

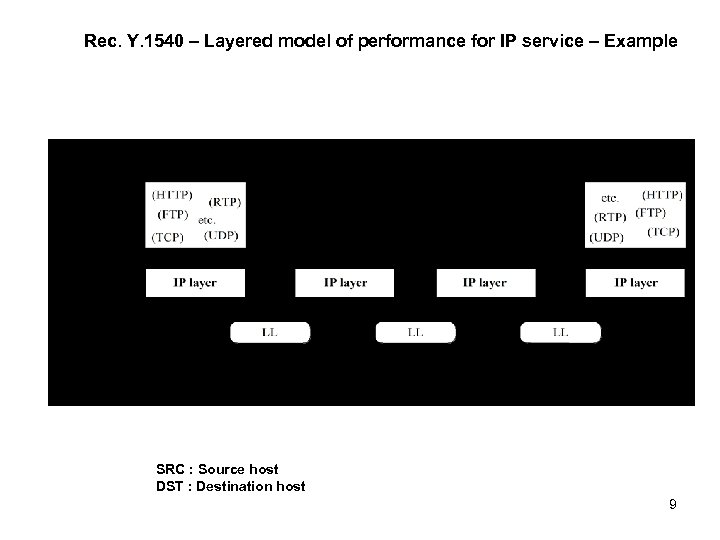

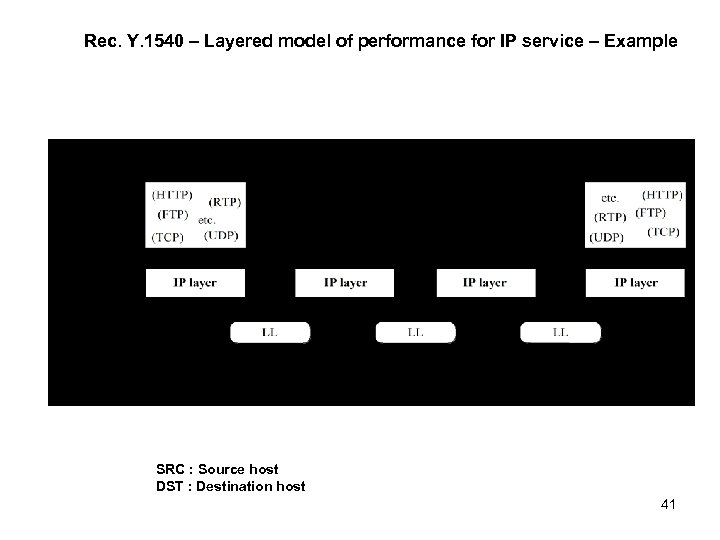

Rec. Y.1540 – Layered model of performance for IP service – Example (SRC: source host; DST: destination host)

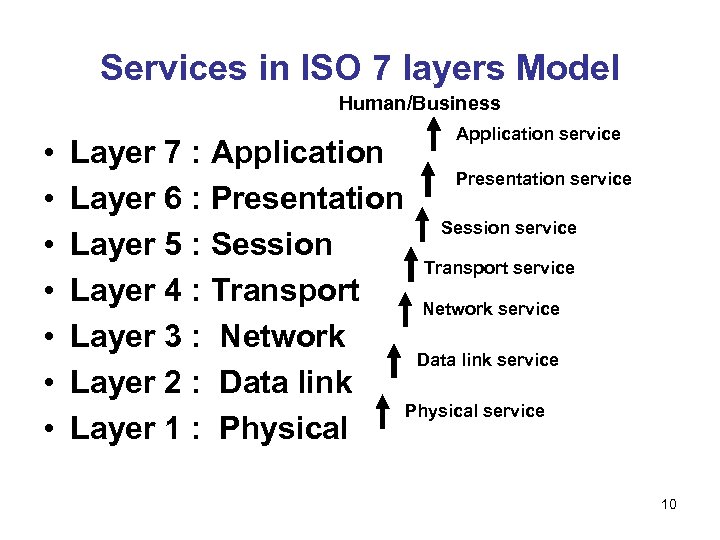

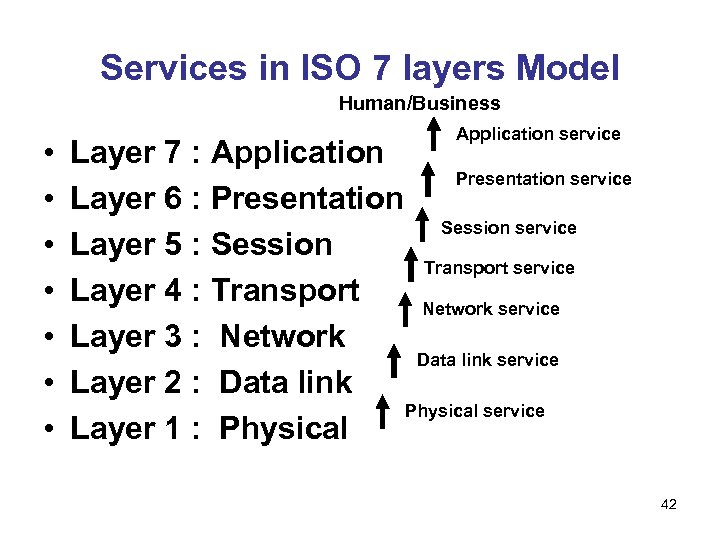

Services in the ISO (OSI) 7-layer model
- Human/Business
- Application service: Layer 7, Application
- Presentation service: Layer 6, Presentation
- Session service: Layer 5, Session
- Transport service: Layer 4, Transport
- Network service: Layer 3, Network
- Data link service: Layer 2, Data link
- Physical service: Layer 1, Physical

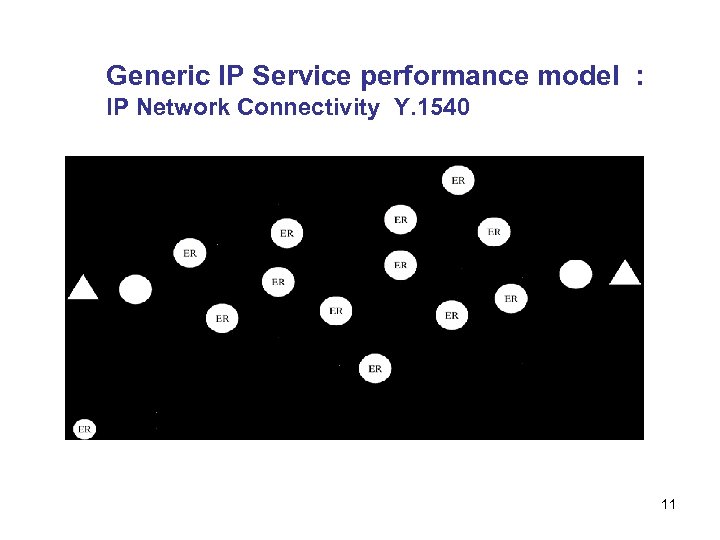

Generic IP service performance model: IP network connectivity (Y.1540)

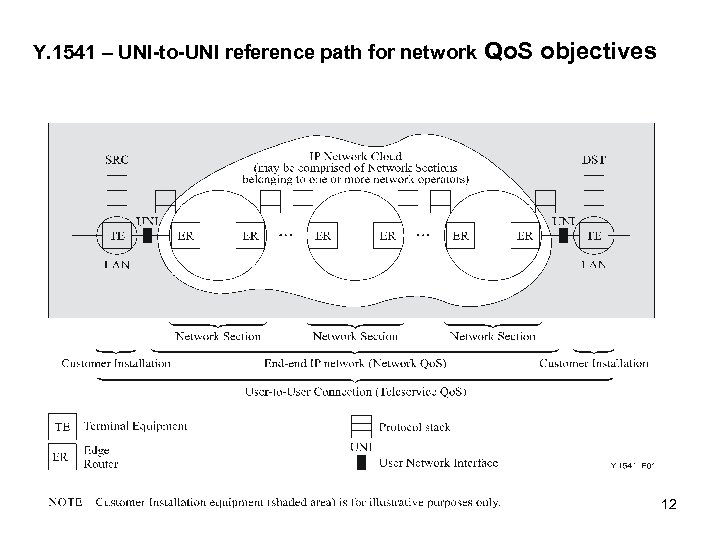

Y.1541 – UNI-to-UNI reference path for network QoS objectives

End-to-end QoS
- NOTE – The phrase "end-to-end" has a different meaning in Recommendations concerning user QoS classes, where end-to-end means, for example, from mouth to ear in voice quality Recommendations. Within the context of this Recommendation (Y.1541), end-to-end is to be understood as from UNI to UNI.

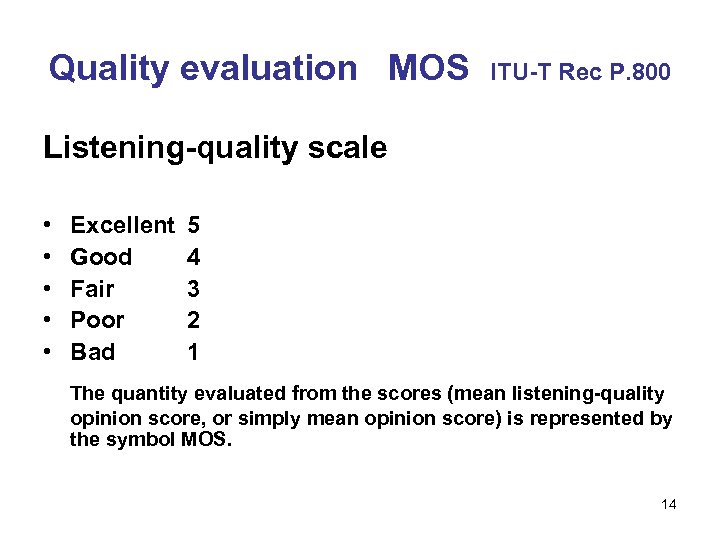

Quality evaluation: MOS (ITU-T Rec. P.800)

Listening-quality scale:

| Category | Score |
|---|---|
| Excellent | 5 |
| Good | 4 |
| Fair | 3 |
| Poor | 2 |
| Bad | 1 |

The quantity evaluated from the scores (mean listening-quality opinion score, or simply mean opinion score) is represented by the symbol MOS.
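
Because MOS is the arithmetic mean of the individual votes on this scale, computing it is straightforward. A minimal sketch, assuming each vote is recorded as one of the category labels above; the `mos` function and the `ACR_SCALE` mapping are illustrative names.

```python
from statistics import mean

# P.800 listening-quality scale: category label -> score
ACR_SCALE = {"Excellent": 5, "Good": 4, "Fair": 3, "Poor": 2, "Bad": 1}


def mos(votes):
    """Mean opinion score for one test condition from a list of category labels."""
    if not votes:
        raise ValueError("at least one vote is required")
    return mean(ACR_SCALE[label] for label in votes)


print(mos(["Good", "Excellent", "Fair", "Good"]))  # (4 + 5 + 3 + 4) / 4
```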

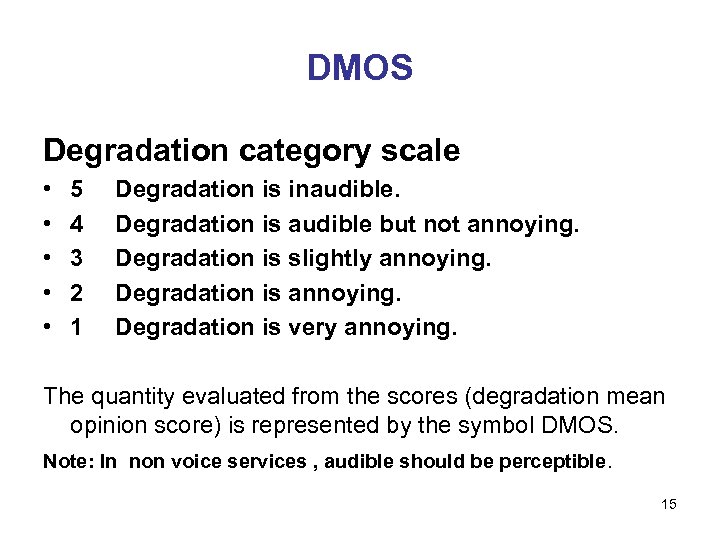

DMOS: degradation category scale

| Category | Score |
|---|---|
| Degradation is inaudible | 5 |
| Degradation is audible but not annoying | 4 |
| Degradation is slightly annoying | 3 |
| Degradation is annoying | 2 |
| Degradation is very annoying | 1 |

The quantity evaluated from the scores (degradation mean opinion score) is represented by the symbol DMOS.

Note: for non-voice services, "audible" should be read as "perceptible".
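
DMOS is obtained in the same way as MOS, but from votes on the degradation scale, and it is usually reported per test condition. A sketch, assuming votes are stored as integer scores grouped under hypothetical condition names:

```python
from statistics import mean

# Degradation category scale: score -> description
DCR_SCALE = {
    5: "Degradation is inaudible",
    4: "Degradation is audible but not annoying",
    3: "Degradation is slightly annoying",
    2: "Degradation is annoying",
    1: "Degradation is very annoying",
}


def dmos(votes_by_condition):
    """DMOS per test condition from integer votes on the 1..5 scale."""
    result = {}
    for condition, votes in votes_by_condition.items():
        if not votes or any(v not in DCR_SCALE for v in votes):
            raise ValueError(f"invalid votes for condition {condition!r}")
        result[condition] = mean(votes)
    return result


print(dmos({"codec-A": [5, 4, 4, 3], "codec-B": [3, 2, 2, 1]}))
```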

NP, QoS, QoE and SLA
- Network Performance (NP)
- Quality of Service (QoS)
- Quality of Experience (QoE)
- Quality of Preference (??)
- Service Level Agreement (SLA)

Definition of Quality of Experience (QoE) (Rec. G.100)

The overall acceptability of an application or service, as perceived subjectively by the end-user.
- NOTE 1 – Quality of Experience includes the complete end-to-end system effects (client, terminal, network, services infrastructure, etc.).
- NOTE 2 – Overall acceptability may be influenced by user expectations and context.

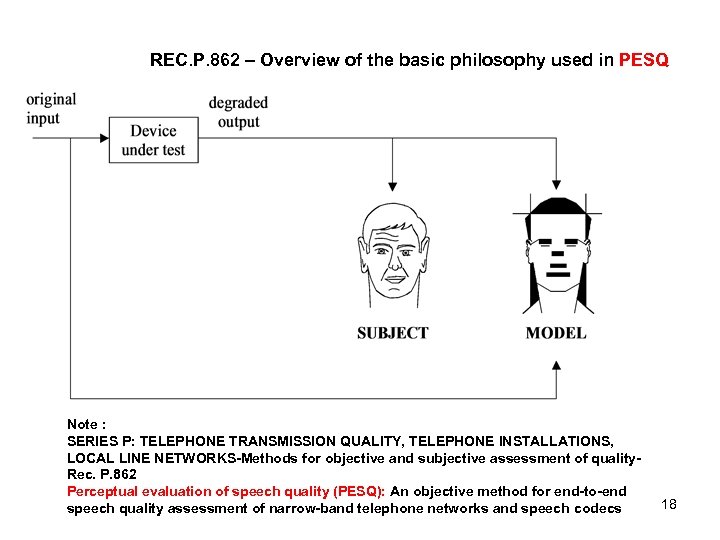

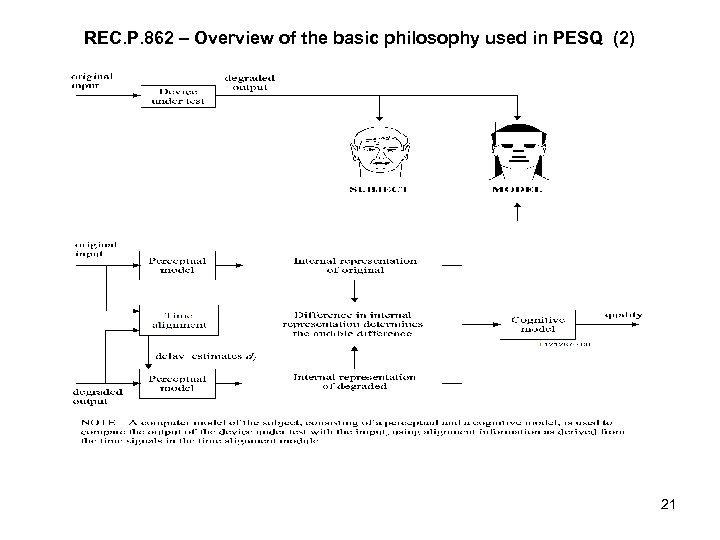

REC. P.862 – Overview of the basic philosophy used in PESQ
- Rec. P.862: Perceptual evaluation of speech quality (PESQ): An objective method for end-to-end speech quality assessment of narrow-band telephone networks and speech codecs
- Note: SERIES P: TELEPHONE TRANSMISSION QUALITY, TELEPHONE INSTALLATIONS, LOCAL LINE NETWORKS – Methods for objective and subjective assessment of quality

Subjective quality assessment for voice codecs (Rec. P.830)

ACR (Absolute Category Rating) on the listening-quality scale. Testing procedure:
- Source speech materials: recording system, speech samples, talkers, speech level/equalization
- Experiment parameters: codec conditions (speech/listening level, talkers, errors, bit rate, transcodings, tandeming, bit-rate mismatch, environmental noise, signaling) and reference conditions (SNR, codecs)
- Experiment design: combination of parameters in a single experiment; minimum set of experiments
- Listening test procedure: receiving system, opinion scale, Gaussian noise
- Analysis of results

Subjective audiovisual quality assessment for multimedia applications (Rec. P.911)

Typical viewing and listening conditions:
- Room size: specify L × W × H
- Viewing distance: 1-8 H
- Peak luminance of the screen: 100-200 cd/m²
- Ratio of luminance of inactive screen to peak luminance: 0.05
- Ratio of the luminance of the screen, when displaying only black level in a completely dark room, to that corresponding to peak white: 0.1
- Ratio of luminance of background behind picture monitor to peak luminance of picture: 0.2
- Chromaticity of background: D65
- Background room illumination: 20 lux
- Background noise level: 30 dBA
- Listening level: 80 dBA
- Reverberation time: < 500 ms (f > 150 Hz)

REC. P.862 – Overview of the basic philosophy used in PESQ (2)

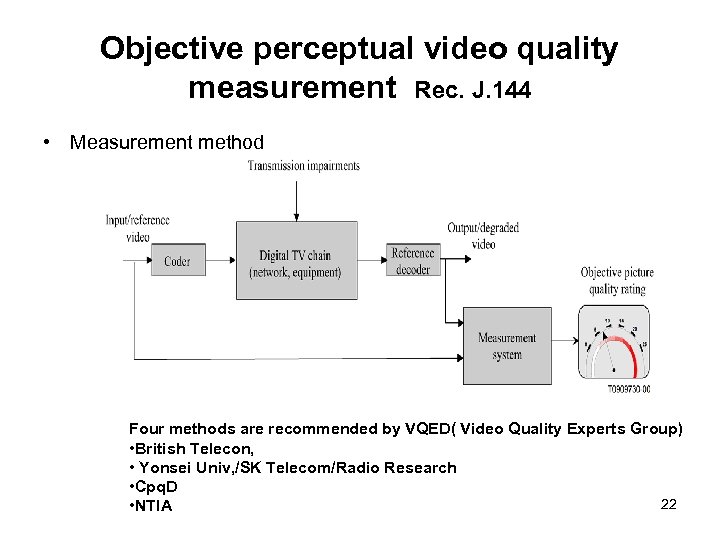

Objective perceptual video quality measurement (Rec. J.144)
- Measurement methods: four methods, evaluated by the VQEG (Video Quality Experts Group), are recommended:
  - British Telecom
  - Yonsei University/SK Telecom/Radio Research
  - CPqD
  - NTIA

SERIES G: TRANSMISSION SYSTEMS AND MEDIA, DIGITAL SYSTEMS AND NETWORKS; International telephone connections and circuits – General definitions
- REC. G.107 "The E-model, a computational model for use in transmission planning": The E-model is based on the equipment impairment factor method, following previous transmission rating models. It was developed by an ETSI ad hoc group called "Voice Transmission Quality from Mouth to Ear".
- REC. G.1070 "Opinion model for video-telephony applications": a computational model for point-to-point interactive videophone applications over IP networks. It is useful as a QoE/QoS planning tool for assessing the combined effects of variations in several video and speech parameters that affect the quality of experience (QoE).

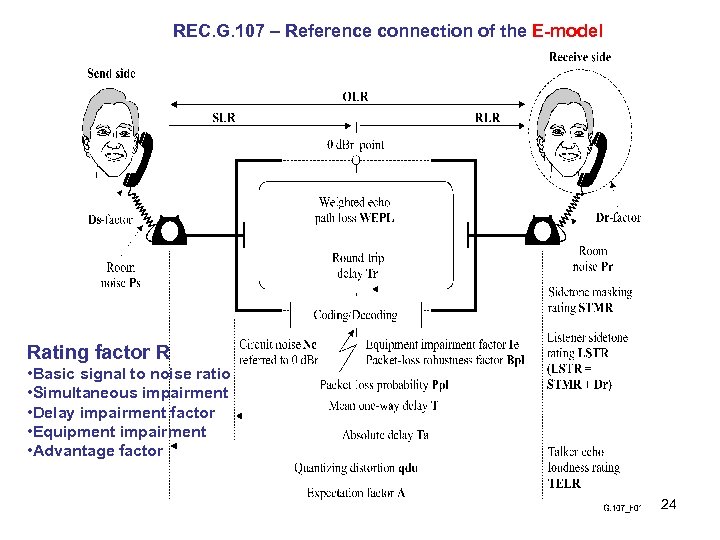

REC. G.107 – Reference connection of the E-model

The rating factor R is composed of:
- Basic signal-to-noise ratio (Ro)
- Simultaneous impairment factor (Is)
- Delay impairment factor (Id)
- Equipment impairment factor (Ie, or Ie,eff under packet loss)
- Advantage factor (A)
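
In G.107 these terms combine additively as R = Ro − Is − Id − Ie,eff + A, and R is then mapped to an estimated MOS. The sketch below implements that combination, the effective equipment impairment Ie,eff = Ie + (95 − Ie) · Ppl / (Ppl/BurstR + Bpl), and the G.107 R-to-MOS conversion. Computing Ro, Is and Id from the loudness, echo and delay parameters is not shown, so they are passed in as inputs. The function names and the example values are illustrative assumptions.

```python
def ie_eff(ie, ppl, bpl, burst_r=1.0):
    """Effective equipment impairment factor Ie,eff (G.107).

    ie: equipment impairment factor without packet loss
    ppl: packet-loss probability in percent
    bpl: packet-loss robustness factor
    burst_r: burst ratio (1 means random loss)
    """
    return ie + (95 - ie) * ppl / (ppl / burst_r + bpl)


def rating_factor(ro, i_s, i_d, ie_effective, a=0.0):
    """Transmission rating factor R = Ro - Is - Id - Ie,eff + A."""
    return ro - i_s - i_d - ie_effective + a


def r_to_mos(r):
    """Estimated MOS from R, using the G.107 conversion."""
    if r < 0:
        return 1.0
    if r > 100:
        return 4.5
    return 1 + 0.035 * r + r * (r - 60) * (100 - r) * 7e-6


# Illustrative inputs only (not taken from the parameter table)
ie_effective = ie_eff(ie=11, ppl=2, bpl=19)  # 19.0
r = rating_factor(ro=94.0, i_s=1.5, i_d=2.0, ie_effective=ie_effective)
print(r, round(r_to_mos(r), 2))
```

With these illustrative inputs, Ie,eff comes to 19, R to 71.5 and the estimated MOS to about 3.67.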

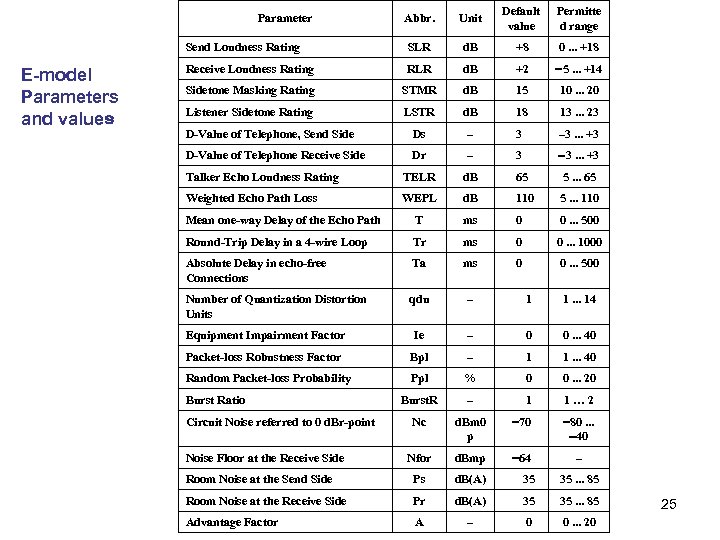

E-model parameters and values

| Parameter | Abbr. | Unit | Default value | Permitted range |
|---|---|---|---|---|
| Send Loudness Rating | SLR | dB | +8 | 0 ... +18 |
| Receive Loudness Rating | RLR | dB | +2 | −5 ... +14 |
| Sidetone Masking Rating | STMR | dB | 15 | 10 ... 20 |
| Listener Sidetone Rating | LSTR | dB | 18 | 13 ... 23 |
| D-Value of Telephone, Send Side | Ds | – | 3 | −3 ... +3 |
| D-Value of Telephone, Receive Side | Dr | – | 3 | −3 ... +3 |
| Talker Echo Loudness Rating | TELR | dB | 65 | 5 ... 65 |
| Weighted Echo Path Loss | WEPL | dB | 110 | 5 ... 110 |
| Mean one-way Delay of the Echo Path | T | ms | 0 | 0 ... 500 |
| Round-Trip Delay in a 4-wire Loop | Tr | ms | 0 | 0 ... 1000 |
| Absolute Delay in echo-free Connections | Ta | ms | 0 | 0 ... 500 |
| Number of Quantization Distortion Units | qdu | – | 1 | 1 ... 14 |
| Equipment Impairment Factor | Ie | – | 0 | 0 ... 40 |
| Packet-loss Robustness Factor | Bpl | – | 1 | 1 ... 40 |
| Random Packet-loss Probability | Ppl | % | 0 | 0 ... 20 |
| Burst Ratio | BurstR | – | 1 | 1 ... 2 |
| Circuit Noise referred to 0 dBr-point | Nc | dBm0p | −70 | −80 ... −40 |
| Noise Floor at the Receive Side | Nfor | dBmp | −64 | – |
| Room Noise at the Send Side | Ps | dB(A) | 35 | 35 ... 85 |
| Room Noise at the Receive Side | Pr | dB(A) | 35 | 35 ... 85 |
| Advantage Factor | A | – | 0 | 0 ... 20 |
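
When the E-model is used as a planning tool, a practical first step is to start from the default values and reject inputs outside the permitted ranges. A minimal sketch with the table transcribed into a dictionary. The dictionary and function names are hypothetical, and Nfor is left out because the table gives it no permitted range.

```python
# (default, minimum, maximum) for each E-model parameter in the table
E_MODEL_PARAMS = {
    "SLR": (8, 0, 18), "RLR": (2, -5, 14), "STMR": (15, 10, 20),
    "LSTR": (18, 13, 23), "Ds": (3, -3, 3), "Dr": (3, -3, 3),
    "TELR": (65, 5, 65), "WEPL": (110, 5, 110), "T": (0, 0, 500),
    "Tr": (0, 0, 1000), "Ta": (0, 0, 500), "qdu": (1, 1, 14),
    "Ie": (0, 0, 40), "Bpl": (1, 1, 40), "Ppl": (0, 0, 20),
    "BurstR": (1, 1, 2), "Nc": (-70, -80, -40), "Ps": (35, 35, 85),
    "Pr": (35, 35, 85), "A": (0, 0, 20),
}


def build_inputs(overrides=None):
    """Start from the defaults, apply overrides and reject out-of-range values."""
    overrides = overrides or {}
    unknown = set(overrides) - set(E_MODEL_PARAMS)
    if unknown:
        raise KeyError(f"unknown parameters: {sorted(unknown)}")
    inputs = {name: spec[0] for name, spec in E_MODEL_PARAMS.items()}
    for name, value in overrides.items():
        _, low, high = E_MODEL_PARAMS[name]
        if not low <= value <= high:
            raise ValueError(f"{name}={value} outside permitted range {low}..{high}")
        inputs[name] = value
    return inputs


print(build_inputs({"Ta": 150, "Ppl": 2}))
```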

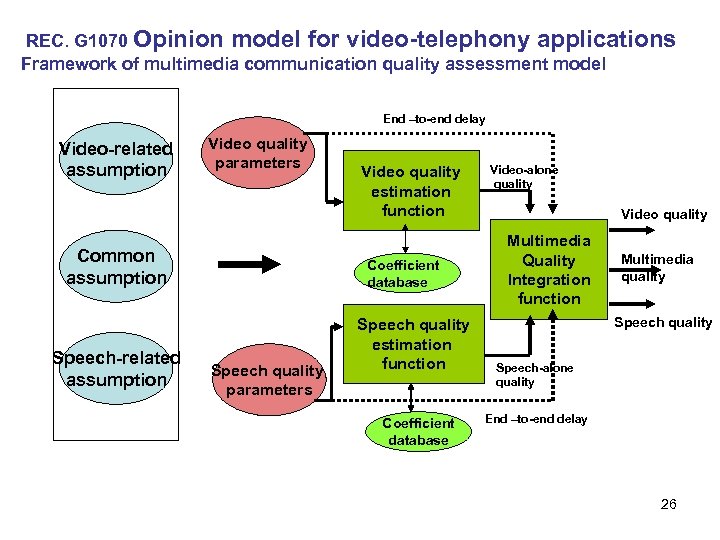

REC. G.1070 Opinion model for video-telephony applications: framework of the multimedia communication quality assessment model
- Assumptions: video-related, common and speech-related
- Video quality estimation function (with its coefficient database): takes the video quality parameters and yields the video-alone quality
- Speech quality estimation function (with its coefficient database): takes the speech quality parameters and yields the speech-alone quality
- Multimedia quality integration function: combines video quality, speech quality and end-to-end delay into the multimedia quality

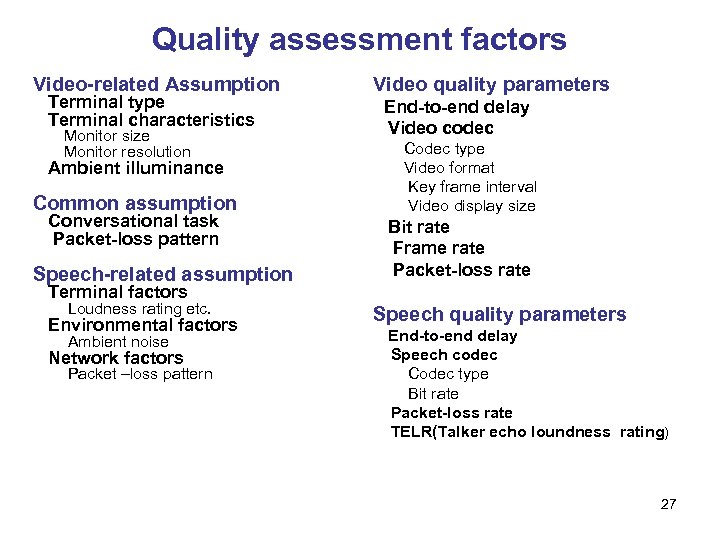

Quality assessment factors
- Video-related assumptions: terminal type; terminal characteristics (monitor size, monitor resolution); ambient illuminance
- Common assumptions: conversational task; packet-loss pattern
- Speech-related assumptions: terminal factors (loudness rating, etc.); ambient noise
- Video quality parameters: end-to-end delay; video codec (codec type, video format, key frame interval, video display size); bit rate; frame rate; packet-loss rate
- Speech quality parameters: end-to-end delay; speech codec (codec type); bit rate; packet-loss rate; TELR (talker echo loudness rating)
- Factor categories: terminal factors, environmental factors, network factors

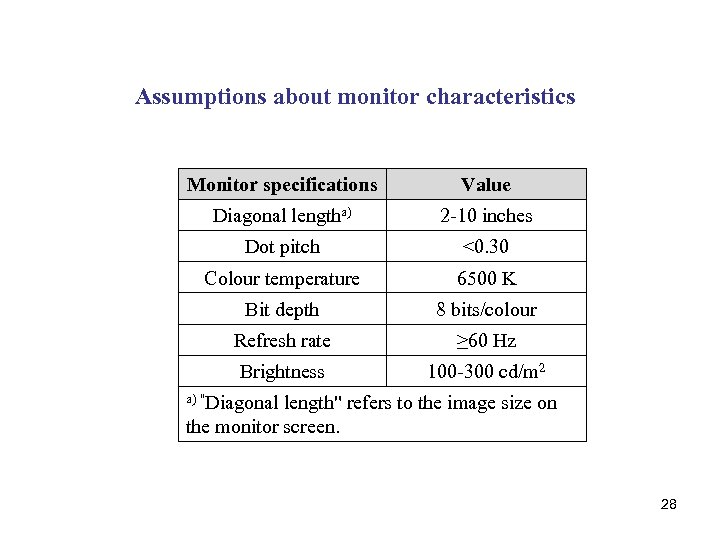

Assumptions about monitor characteristics

| Monitor specification | Value |
|---|---|
| Diagonal length (a) | 2-10 inches |
| Dot pitch | < 0.30 |
| Colour temperature | 6500 K |
| Bit depth | 8 bits/colour |
| Refresh rate | ≥ 60 Hz |
| Brightness | 100-300 cd/m² |

(a) "Diagonal length" refers to the image size on the monitor screen.

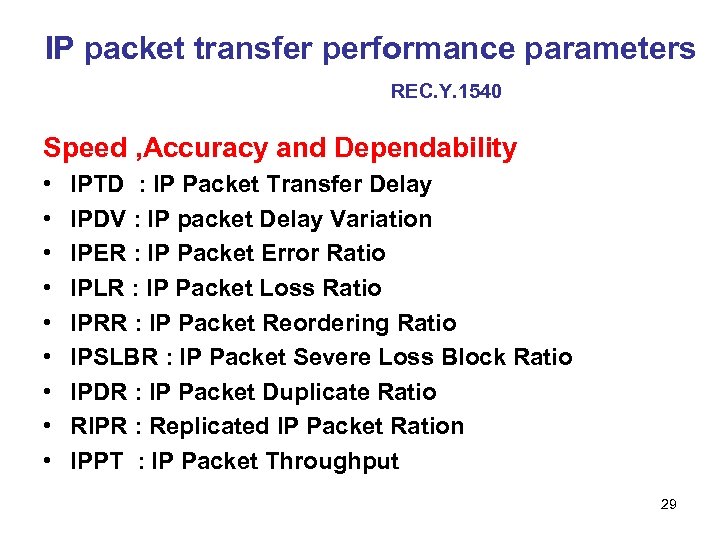

IP packet transfer performance parameters (Rec. Y.1540)

Criteria: speed, accuracy and dependability
- IPTD: IP Packet Transfer Delay
- IPDV: IP Packet Delay Variation
- IPER: IP Packet Error Ratio
- IPLR: IP Packet Loss Ratio
- IPRR: IP Packet Reordering Ratio
- IPSLBR: IP Packet Severe Loss Block Ratio
- IPDR: IP Packet Duplicate Ratio
- RIPR: Replicated IP Packet Ratio
- IPPT: IP Packet Throughput
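
Given per-packet records from the two measurement points, the delay, loss and error parameters reduce to simple counts and order statistics. The sketch below makes several simplifying assumptions. Packets are matched by a hypothetical identifier. Delays are already computed in milliseconds. IPDV is taken in the form used by the Y.1541 objectives (a high quantile of IPTD minus the minimum IPTD), not any other Y.1540 variant.

```python
import math


def transfer_stats(sent_ids, received, errored_ids=frozenset(), quantile=0.999):
    """Summarize one measurement sample.

    sent_ids: identifiers of packets offered at the SRC-side MP
    received: {packet_id: transfer delay in ms} seen at the DST-side MP
    errored_ids: delivered packets whose content was corrupted
    quantile: 1 - 10**-3 by default, as in the Y.1541 IPDV objective
    """
    delivered = [pid for pid in sent_ids if pid in received]
    if not delivered:
        raise ValueError("no packets delivered")
    lost = len(sent_ids) - len(delivered)
    delays = sorted(received[pid] for pid in delivered)
    # empirical quantile: smallest delay with at least `quantile` of samples at or below it
    k = max(0, math.ceil(quantile * len(delays)) - 1)
    errored = sum(1 for pid in delivered if pid in errored_ids)
    return {
        "IPTD_mean_ms": sum(delays) / len(delays),
        "IPDV_ms": delays[k] - delays[0],
        "IPLR": lost / len(sent_ids),
        "IPER": errored / len(delivered),
    }


sample = transfer_stats(
    sent_ids=list(range(1, 11)),
    received={1: 10, 2: 12, 3: 11, 4: 15, 5: 10, 6: 13, 7: 20, 8: 11, 9: 12},
    errored_ids={3},
)
print(sample)
```

IPER is computed over delivered packets (successful plus errored) and IPLR over all offered packets.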

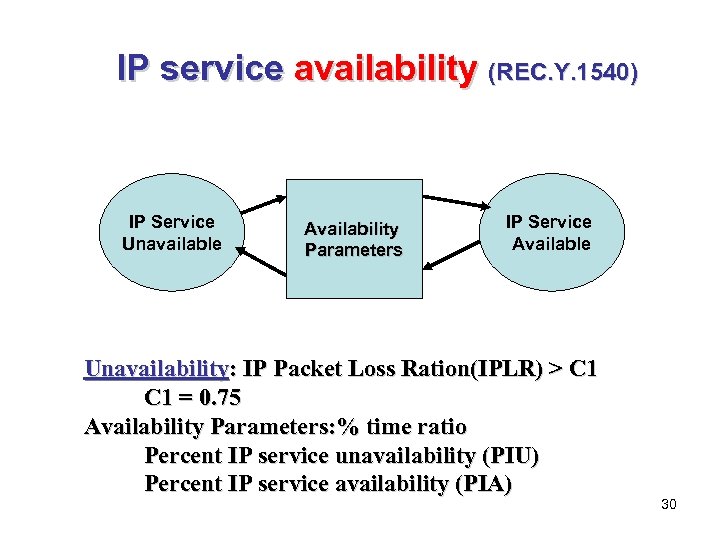

IP service availability (Rec. Y.1540)
- IP service unavailable: IP Packet Loss Ratio (IPLR) > c1 = 0.75
- IP service available: otherwise
- Availability parameters (percentage of time):
  - Percent IP service unavailability (PIU)
  - Percent IP service availability (PIA)
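
Since availability is expressed as a percentage of time, PIA and PIU follow from classifying equal-length measurement intervals against the c1 threshold. A minimal sketch. The interval length, and any minimum-duration rules Y.1540 attaches to it, are assumed to be handled by whoever produces the per-interval IPLR values. The function name is illustrative.

```python
C1 = 0.75  # IPLR threshold above which the IP service is considered unavailable


def availability(interval_iplr):
    """Return (PIA, PIU) in percent from per-interval IPLR values.

    Each entry is the IPLR measured over one fixed-length interval.
    """
    if not interval_iplr:
        raise ValueError("no intervals")
    unavailable = sum(1 for iplr in interval_iplr if iplr > C1)
    piu = 100.0 * unavailable / len(interval_iplr)
    return 100.0 - piu, piu


print(availability([0.0, 0.01, 0.8, 0.9, 0.0, 0.75, 0.1, 0.0]))
```

An interval with IPLR exactly 0.75 still counts as available, because the condition is strictly "greater than c1".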

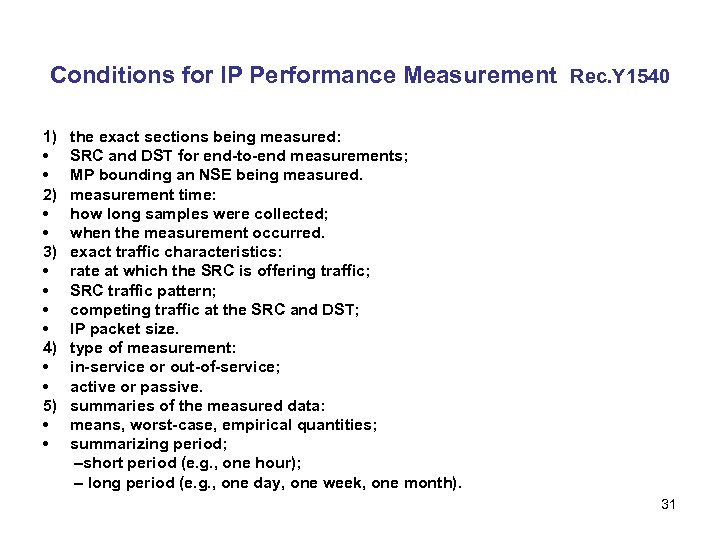

Conditions for IP performance measurement (Rec. Y.1540)
1. The exact sections being measured:
   - SRC and DST for end-to-end measurements;
   - MPs bounding an NSE being measured.
2. Measurement time:
   - how long samples were collected;
   - when the measurement occurred.
3. Exact traffic characteristics:
   - rate at which the SRC is offering traffic;
   - SRC traffic pattern;
   - competing traffic at the SRC and DST;
   - IP packet size.
4. Type of measurement:
   - in-service or out-of-service;
   - active or passive.
5. Summaries of the measured data:
   - means, worst case, empirical quantiles;
   - summarizing period:
     - short period (e.g., one hour);
     - long period (e.g., one day, one week, one month).
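
Item 5 asks for summaries over a stated period. The sketch below buckets timestamped measurements (for example IPTD samples) into fixed summarizing periods. For each period it reports the mean, the worst case and an empirical quantile. It assumes larger values are worse, which holds for delay and loss but not for throughput. The function name and the default quantile are illustrative choices.

```python
import math
from collections import defaultdict
from statistics import mean


def summarize(samples, period_s=3600, q=0.95):
    """Per-period summary of (timestamp_s, value) samples.

    period_s: summarizing period, e.g. 3600 s for a one-hour short period
    q: empirical quantile to report (hypothetical default)
    """
    buckets = defaultdict(list)
    for ts, value in samples:
        buckets[int(ts // period_s)].append(value)
    summary = {}
    for period, values in sorted(buckets.items()):
        values.sort()
        k = max(0, math.ceil(q * len(values)) - 1)
        summary[period] = {
            "mean": mean(values),
            "worst": values[-1],   # largest value = worst case for delay-like metrics
            "quantile": values[k],
        }
    return summary


print(summarize([(0, 10), (100, 20), (200, 30), (3600, 5), (3700, 15)]))
```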

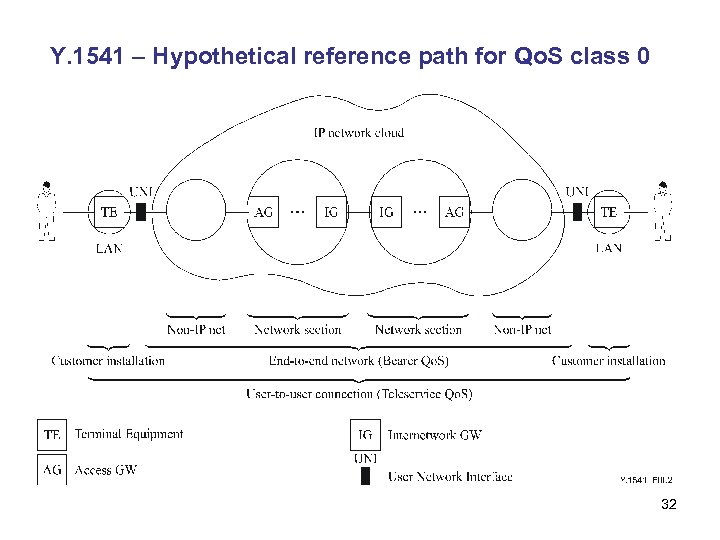

Y.1541 – Hypothetical reference path for QoS class 0

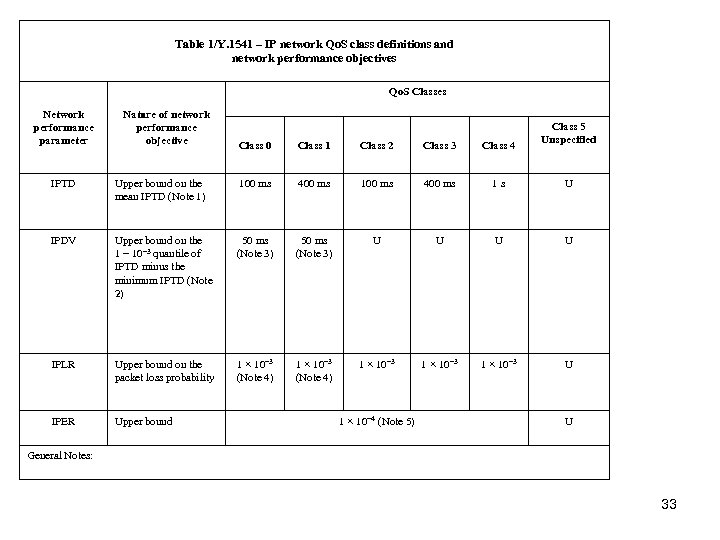

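Table 1/Y.1541 – IP network QoS class definitions and network performance objectives

| Network performance parameter | Nature of network performance objective | Class 0 | Class 1 | Class 2 | Class 3 | Class 4 | Class 5 (Unspecified) |
|---|---|---|---|---|---|---|---|
| IPTD | Upper bound on the mean IPTD (Note 1) | 100 ms | 400 ms | 100 ms | 400 ms | 1 s | U |
| IPDV | Upper bound on the 1 − 10⁻³ quantile of IPTD minus the minimum IPTD (Note 2) | 50 ms (Note 3) | 50 ms (Note 3) | U | U | U | U |
| IPLR | Upper bound on the packet loss probability | 1 × 10⁻³ (Note 4) | 1 × 10⁻³ (Note 4) | 1 × 10⁻³ (Note 4) | 1 × 10⁻³ (Note 4) | 1 × 10⁻³ (Note 4) | U |
| IPER | Upper bound | 1 × 10⁻⁴ (Note 5) | 1 × 10⁻⁴ (Note 5) | 1 × 10⁻⁴ (Note 5) | 1 × 10⁻⁴ (Note 5) | 1 × 10⁻⁴ (Note 5) | U |

General Notes: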
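
The table can be used directly as a conformance check. A measured path meets a class if every specified objective is met, and "U" objectives are skipped. A sketch, assuming measurements are already summarized in the form each objective expects: mean IPTD, quantile-based IPDV, and ratios for IPLR and IPER. The dictionary layout and function names are hypothetical.

```python
U = None  # "unspecified" objective

# Table 1/Y.1541 upper bounds per class (IPTD and IPDV in ms)
Y1541_CLASSES = {
    0: {"IPTD": 100, "IPDV": 50, "IPLR": 1e-3, "IPER": 1e-4},
    1: {"IPTD": 400, "IPDV": 50, "IPLR": 1e-3, "IPER": 1e-4},
    2: {"IPTD": 100, "IPDV": U, "IPLR": 1e-3, "IPER": 1e-4},
    3: {"IPTD": 400, "IPDV": U, "IPLR": 1e-3, "IPER": 1e-4},
    4: {"IPTD": 1000, "IPDV": U, "IPLR": 1e-3, "IPER": 1e-4},
    5: {"IPTD": U, "IPDV": U, "IPLR": U, "IPER": U},
}


def violations(qos_class, measured):
    """Parameters whose measured value exceeds the class objective."""
    objectives = Y1541_CLASSES[qos_class]
    return [p for p, bound in objectives.items()
            if bound is not None and measured[p] > bound]


def classes_met(measured):
    """All classes whose objectives the measured path satisfies."""
    return [c for c in Y1541_CLASSES if not violations(c, measured)]


path = {"IPTD": 120, "IPDV": 30, "IPLR": 5e-4, "IPER": 1e-5}
print(violations(0, path), classes_met(path))
```

Here the 120 ms mean delay rules out classes 0 and 2, while the low jitter still satisfies the IPDV objective of class 1.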

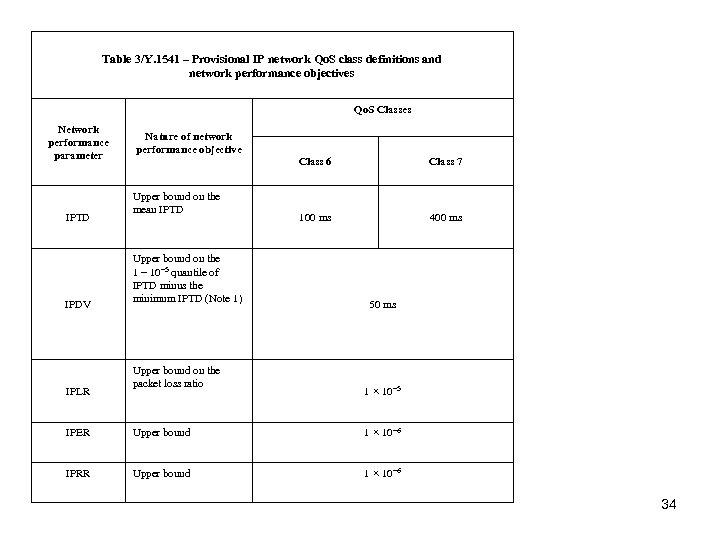

Table 3/Y.1541 – Provisional IP network QoS class definitions and network performance objectives

| Network performance parameter | Nature of network performance objective | Class 6 | Class 7 |
|---|---|---|---|
| IPTD | Upper bound on the mean IPTD | 100 ms | 400 ms |
| IPDV | Upper bound on the 1 − 10⁻⁵ quantile of IPTD minus the minimum IPTD (Note 1) | 50 ms | 50 ms |
| IPLR | Upper bound on the packet loss ratio | 1 × 10⁻⁵ | 1 × 10⁻⁵ |
| IPER | Upper bound | 1 × 10⁻⁶ | 1 × 10⁻⁶ |
| IPRR | Upper bound | 1 × 10⁻⁶ | 1 × 10⁻⁶ |
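
Classes 6 and 7 add an objective on IPRR, which Table 1 does not specify. One way to estimate IPRR from the sequence numbers observed at the destination is sketched below. The rule used to flag a packet as reordered follows the "next expected" notion of RFC 4737. It is an assumption here and is not quoted from Y.1540. Duplicates are assumed to have been removed beforehand.

```python
IPRR_BOUND = 1e-6  # provisional objective for classes 6 and 7


def reordering_ratio(arrival_seq):
    """IPRR sketch: share of arriving packets that are reordered.

    A packet counts as reordered when its sequence number is lower than the
    next expected one (highest number seen so far plus one).
    """
    if not arrival_seq:
        raise ValueError("no packets")
    next_expected = None
    reordered = 0
    for seq in arrival_seq:
        if next_expected is not None and seq < next_expected:
            reordered += 1
        else:
            next_expected = seq + 1
    return reordered / len(arrival_seq)


iprr = reordering_ratio([1, 2, 4, 3, 5, 6, 8, 7, 9, 10])
print(iprr, iprr <= IPRR_BOUND)
```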

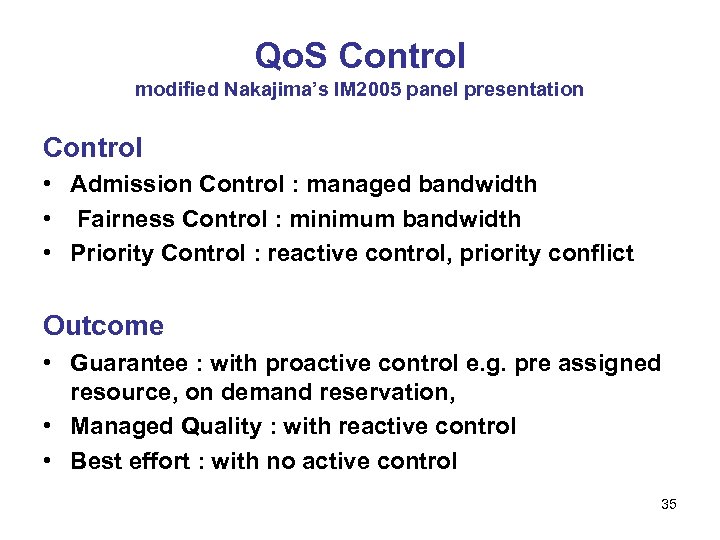

QoS control (modified from Nakajima's IM 2005 panel presentation)

Control:
- Admission control: managed bandwidth
- Fairness control: minimum bandwidth
- Priority control: reactive control, priority conflict

Outcome:
- Guarantee: with proactive control, e.g. pre-assigned resources, on-demand reservation
- Managed quality: with reactive control
- Best effort: with no active control
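
Admission control ("managed bandwidth") is a form of the proactive control behind the "guarantee" outcome. A new flow is accepted only if the bandwidth it needs can still be set aside. A minimal sketch for a single link, assuming bandwidth is the only managed resource. The class and method names are hypothetical.

```python
class AdmissionController:
    """Admit a flow only if its bandwidth fits in the managed link capacity."""

    def __init__(self, capacity_mbps):
        self.capacity = capacity_mbps
        self.reserved = {}  # flow id -> reserved Mbit/s

    def request(self, flow_id, bandwidth_mbps):
        used = sum(self.reserved.values())
        if used + bandwidth_mbps > self.capacity:
            return False  # rejected: admitting it would overbook the link
        self.reserved[flow_id] = bandwidth_mbps
        return True

    def release(self, flow_id):
        self.reserved.pop(flow_id, None)


link = AdmissionController(capacity_mbps=100)
print(link.request("a", 60), link.request("b", 50), link.request("c", 40))
```

Rejecting flow "b" protects the bandwidth already promised to "a". Once "a" is released, "b" can be admitted.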

SLA??: how to reach a practical agreement by negotiation?
- Who drives the SLA?
- Why is an SLA needed?
- What is an S.L.A.?
- When is an SLA agreed?
- How is an SLA agreed?

ITU-T Rec. E.860 (2002)
- "A Service Level Agreement is a formal agreement between two or more entities that is reached after a negotiating activity with the scope to assess service characteristics, responsibilities and priorities of every part."

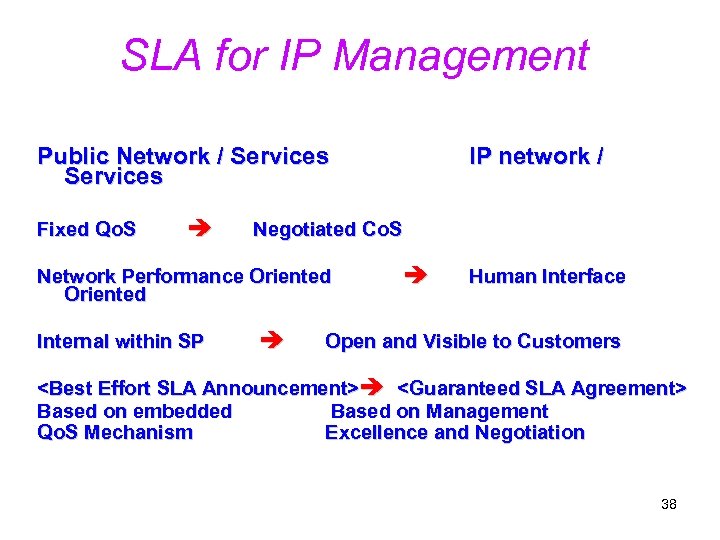

SLA for IP management (diagram labels)
- Public network/services
- Fixed QoS
- Negotiated CoS
- Network-performance oriented
- Internal within SP IP network/human interface
- Open and visible to customers

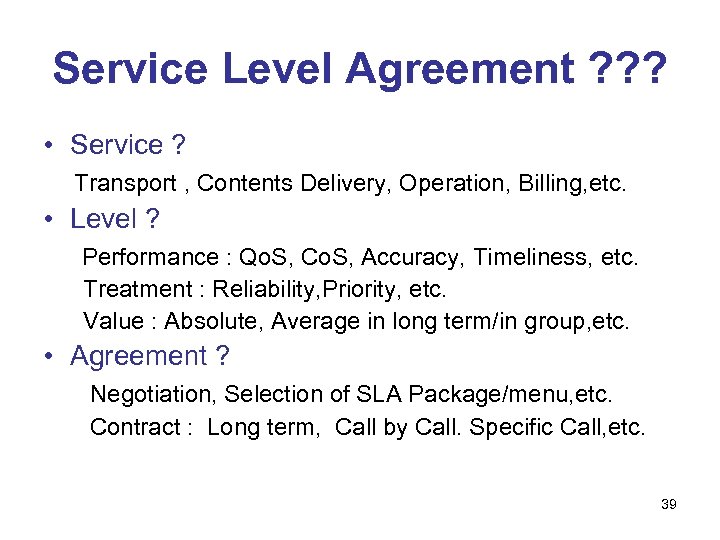

Service Level Agreement???
- Service? Transport, content delivery, operation, billing, etc.
- Level?
  - Performance: QoS, CoS, accuracy, timeliness, etc.
  - Treatment: reliability, priority, etc.
  - Value: absolute, average over the long term/in a group, etc.
- Agreement?
  - Negotiation, selection of an SLA package/menu, etc.
  - Contract: long term, call by call, specific call, etc.
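
The three questions translate naturally into a record type. Such a record states which service is covered, which levels are promised and how each value is to be read, and under which kind of contract the agreement holds. A sketch of one possible encoding. All type and field names are hypothetical and are not taken from any standard information model.

```python
from dataclasses import dataclass, field
from enum import Enum


class ValueType(Enum):
    ABSOLUTE = "absolute"           # must hold for every instance
    LONG_TERM_AVERAGE = "average"   # averaged over the contract period or a group


class ContractType(Enum):
    LONG_TERM = "long term"
    CALL_BY_CALL = "call by call"
    SPECIFIC_CALL = "specific call"


@dataclass
class ServiceLevel:
    metric: str              # performance item, e.g. "IPTD_ms" or "timeliness"
    target: float
    value_type: ValueType
    priority: int = 0        # treatment: a higher number is served first


@dataclass
class ServiceLevelAgreement:
    service: str             # transport, content delivery, operation, billing, ...
    contract: ContractType
    levels: list = field(default_factory=list)

    def add_level(self, level: ServiceLevel) -> None:
        self.levels.append(level)


sla = ServiceLevelAgreement("transport", ContractType.LONG_TERM)
sla.add_level(ServiceLevel("IPTD_ms", 100.0, ValueType.LONG_TERM_AVERAGE, priority=1))
print(sla)
```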

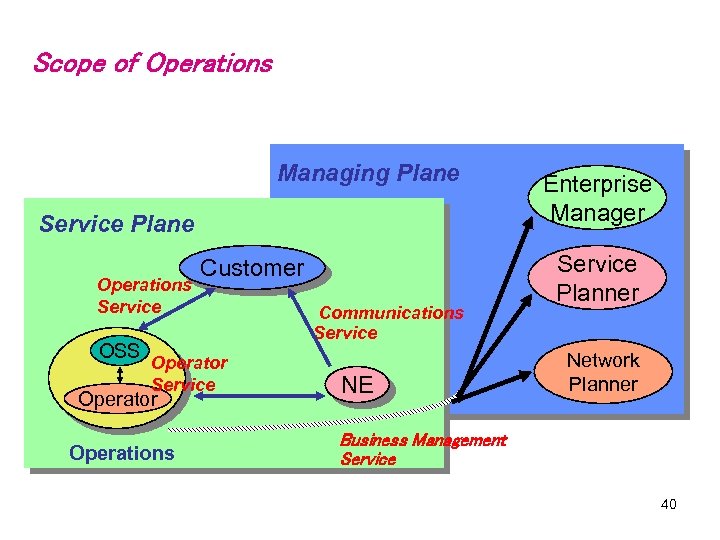

Scope of operations (diagram labels): managing plane, service plane; operations service (OSS); communications service (NE); business management service; customer operator, service operator, enterprise manager, service planner, network planner

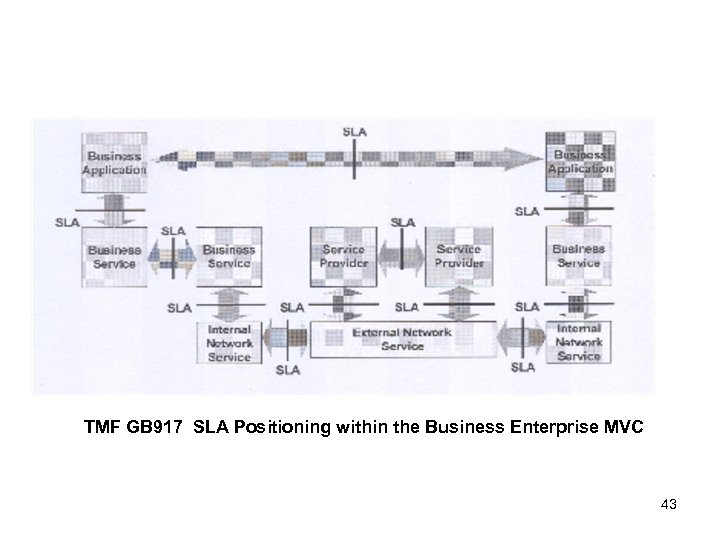

TMF GB 917 – SLA positioning within the business enterprise (MVC)

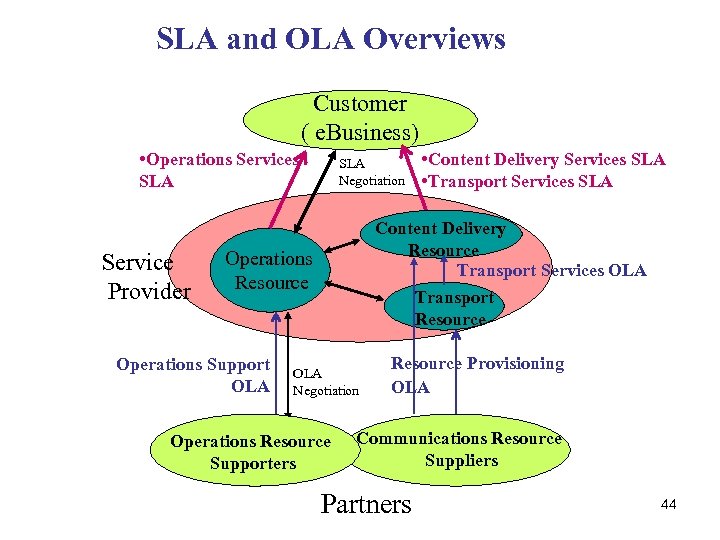

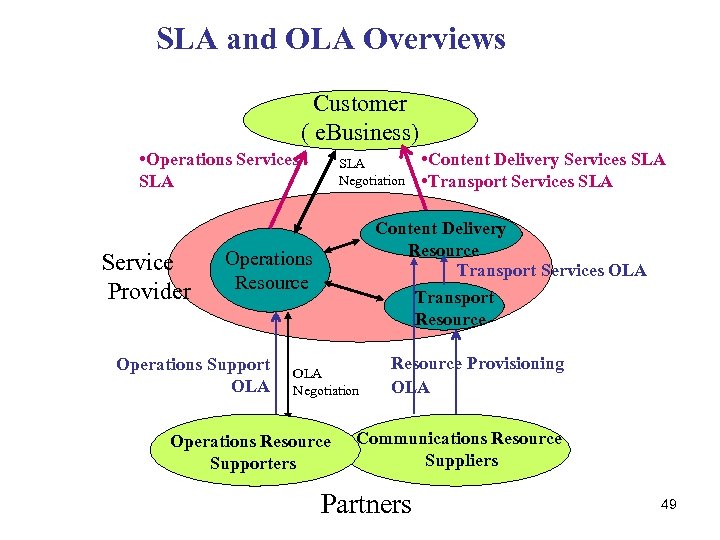

SLA and OLA overviews
- SLA negotiation between the customer (e-Business) and the service provider:
  - Operations services SLA
  - Content delivery services SLA
  - Transport services SLA
- OLA negotiation between the service provider and partners:
  - Operations support OLA, with operations resource supporters
  - Resource provisioning OLA, with communications resource suppliers (content delivery and transport resources)

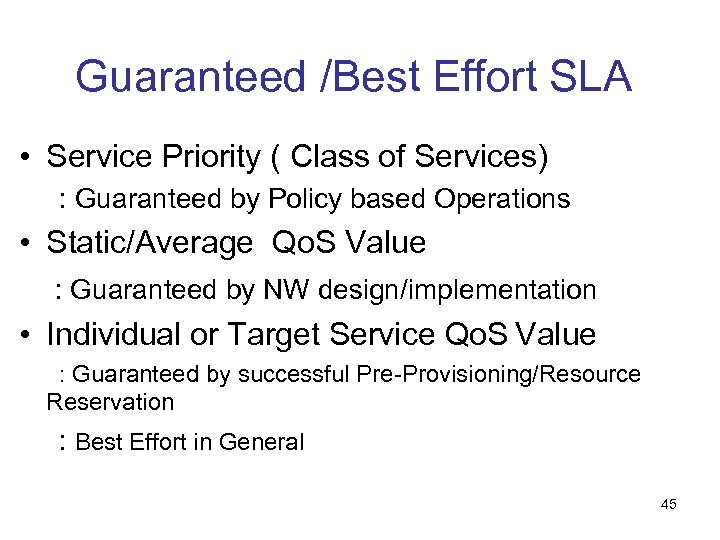

Guaranteed / Best-Effort SLA
• Service priority (class of service): guaranteed by policy-based operations
• Static/average QoS value: guaranteed by network design/implementation
• Individual or target-service QoS value: guaranteed only by successful pre-provisioning/resource reservation; best effort in general
45


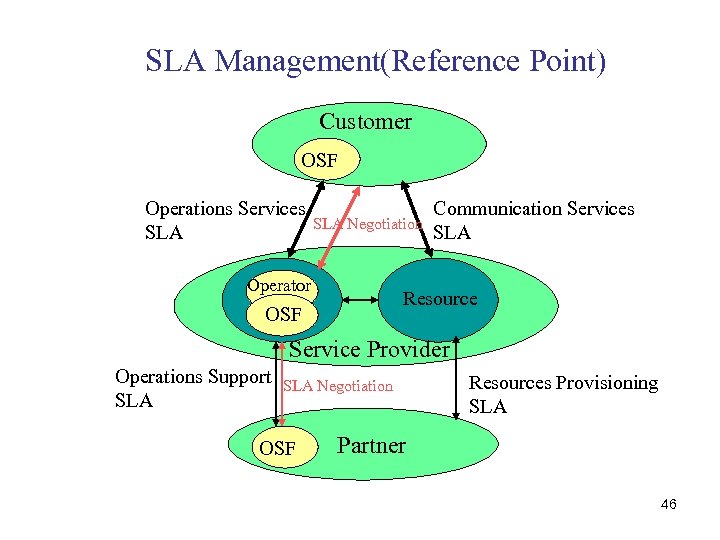

SLA Management (Reference Points) (diagram)
• Customer OSF and Service Provider OSF: SLA negotiation covering the Operations Services SLA and the Communication Services SLA
• Service Provider OSF and Partner (Operator/Resource) OSF: SLA negotiation covering the Operations Support SLA and the Resources Provisioning SLA
46


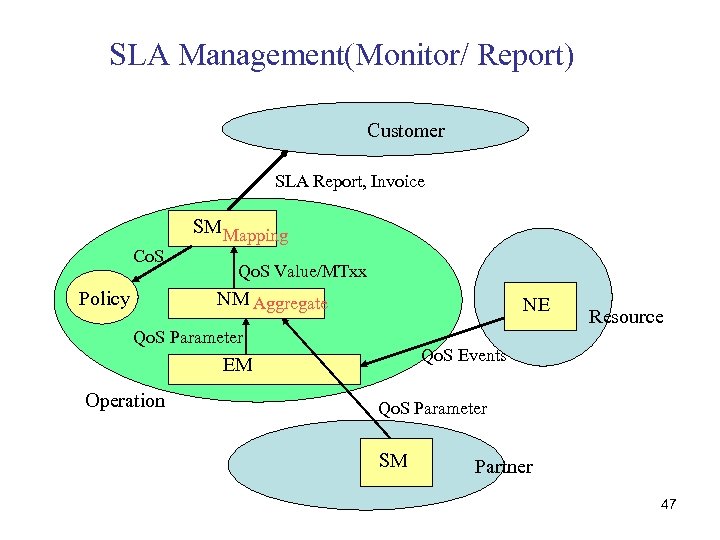

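SLA Management (Monitor/Report) (diagram, bottom-up flow)
• EM (element management): collects NE QoS parameters and QoS events
• NM (network management): aggregates them into QoS values / MTxx figures
• SM (service management): maps the QoS values against the CoS policy and issues the SLA report and invoice to the Customer
• Partner SM: supplies QoS parameters of operation resources
47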
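The bottom-up monitoring path (EM collects NE parameters, NM aggregates, SM maps against the CoS policy) can be sketched in a few functions. This is a minimal sketch, not the lecture's method: it assumes hypothetical per-NE delay and drop counts, assumes the NEs are traversed in series, and uses made-up CoS targets (`COS_POLICY`, `NeSample`, `aggregate`, `sla_report` are all illustrative names).

```python
from dataclasses import dataclass

# Hypothetical CoS policy held by SM: target values per class of service.
COS_POLICY = {
    "gold": {"max_delay_ms": 50.0, "max_loss_ratio": 1e-3},
    "silver": {"max_delay_ms": 100.0, "max_loss_ratio": 1e-2},
}


@dataclass
class NeSample:
    """QoS parameters that EM collects from one network element."""
    ne_id: str
    delay_ms: float  # delay contributed by this NE
    sent: int        # packets offered to this NE
    lost: int        # packets this NE dropped


def aggregate(samples):
    """NM: combine NE parameters into one end-to-end QoS value.

    Assumes the NEs sit in series on the path, so delays add and the
    per-NE delivery probabilities multiply.
    """
    delay = sum(s.delay_ms for s in samples)
    delivered = 1.0
    for s in samples:
        delivered *= 1.0 - s.lost / s.sent
    return {"delay_ms": delay, "loss_ratio": 1.0 - delivered}


def sla_report(cos, qos):
    """SM: map the aggregated QoS value onto the CoS policy for the report."""
    target = COS_POLICY[cos]
    violations = []
    if qos["delay_ms"] > target["max_delay_ms"]:
        violations.append("delay")
    if qos["loss_ratio"] > target["max_loss_ratio"]:
        violations.append("loss")
    return {"cos": cos, "qos": qos, "violations": violations, "met": not violations}
```

For example, two NEs reporting 10 ms and 20 ms, with 2 and 3 drops per 10,000 packets, aggregate to 30 ms and a loss ratio of about 5e-4, which meets the hypothetical gold targets.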


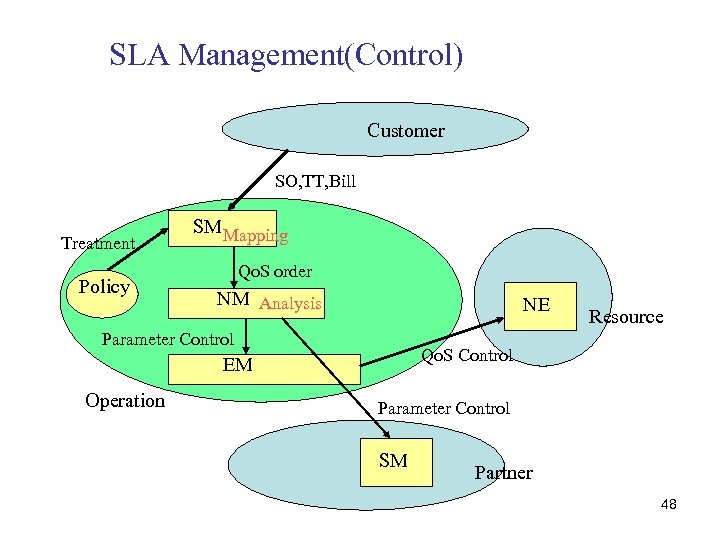

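SLA Management (Control) (diagram, top-down flow)
• Customer: service orders (SO), trouble tickets (TT), billing
• SM: applies the treatment policy and maps requests into QoS orders
• NM: analysis and QoS control
• EM: NE parameter control
• Partner SM: operation resource parameter control
48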
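The control path runs the other way: SM applies a treatment policy to an analysis result and hands QoS orders down to NM. A minimal sketch, assuming a hypothetical treatment policy (the action names and the three-period threshold for raising a trouble ticket are illustrative, not from the slide):

```python
from dataclasses import dataclass, field

# Hypothetical treatment policy: violated parameter -> corrective QoS order for NM.
TREATMENT_POLICY = {
    "delay": "raise-queue-priority",
    "loss": "reroute-around-congestion",
}


@dataclass
class ControlPlan:
    qos_orders: list = field(default_factory=list)       # passed down to NM
    trouble_tickets: list = field(default_factory=list)  # TT raised toward the customer


def treat(service_id, violations, consecutive_periods):
    """SM: turn an SLA analysis result into control actions.

    Each violated parameter yields a QoS order; a trouble ticket is raised
    only once the violation has persisted (threshold of 3 periods is hypothetical).
    """
    plan = ControlPlan()
    for v in violations:
        plan.qos_orders.append((service_id, TREATMENT_POLICY.get(v, "investigate")))
    if violations and consecutive_periods >= 3:
        plan.trouble_tickets.append(f"TT:{service_id}:{'+'.join(violations)}")
    return plan
```

Keeping the policy as data rather than code mirrors the slide's split between the treatment policy (SM) and the mechanisms that execute it (NM, EM).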


SLA and OLA Overviews (diagram)
• Customer (e-Business) and Service Provider, via SLA negotiation: Operations Services SLA, Content Delivery Services SLA, Transport Services SLA
• Service Provider and Partners (Operations Resource Supporters, Communications Resource Suppliers), via OLA negotiation: Transport Services OLA, Operations Support OLA, Resource Provisioning OLA
• Resources involved: Content Delivery Resource, Transport Resource
49


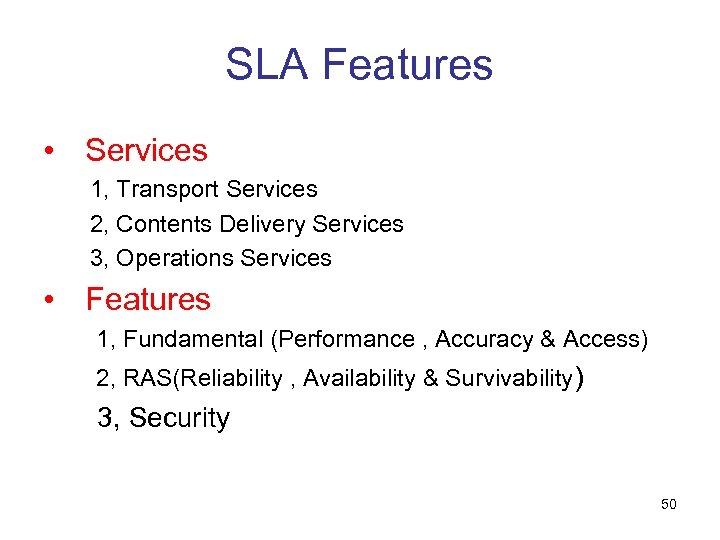

SLA Features
• Services
  1. Transport services
  2. Content delivery services
  3. Operations services
• Features
  1. Fundamental (performance, accuracy and access)
  2. RAS (reliability, availability and survivability)
  3. Security
50


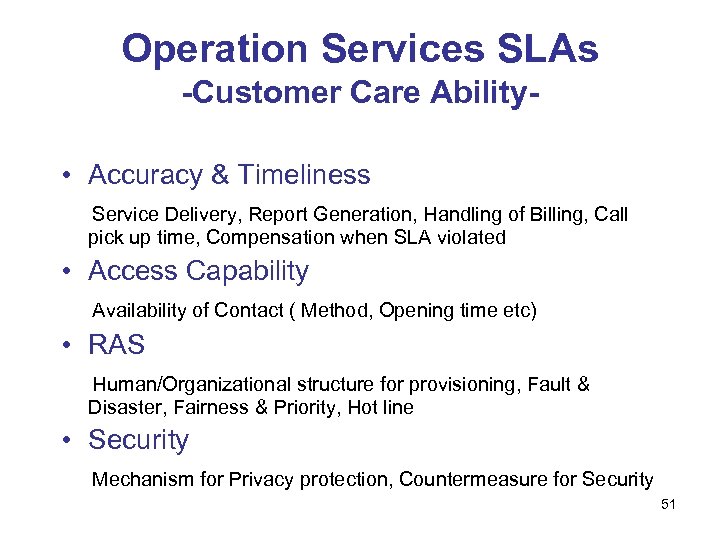

Operations Services SLAs: Customer Care Ability
• Accuracy & timeliness: service delivery, report generation, handling of billing, call pick-up time, compensation when the SLA is violated
• Access capability: availability of contact (method, opening times, etc.)
• RAS: human/organizational structure for provisioning, fault & disaster handling, fairness & priority, hot line
• Security: mechanisms for privacy protection, security countermeasures
51


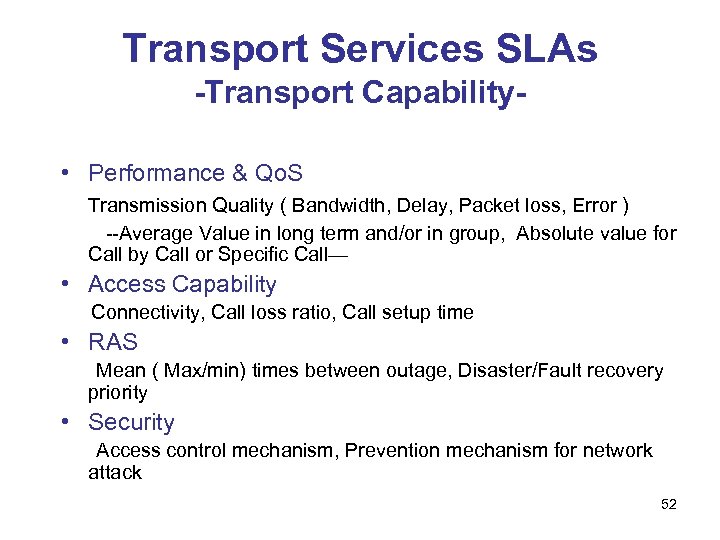

Transport Services SLAs: Transport Capability
• Performance & QoS: transmission quality (bandwidth, delay, packet loss, errors), stated either as an average value over the long term and/or over a group, or as an absolute value call by call or for a specific call
• Access capability: connectivity, call loss ratio, call setup time
• RAS: mean (max/min) time between outages, disaster/fault recovery priority
• Security: access control mechanism, prevention mechanism for network attacks
52
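The two ways of stating transmission quality can give different verdicts on the same measurements: an average commitment can be met while individual calls violate an absolute bound. A minimal sketch, assuming hypothetical per-call delay values and thresholds:

```python
from statistics import fmean


def average_commitment_met(call_delays_ms, max_avg_ms):
    """Long-term / group commitment: only the mean delay must stay within target."""
    return fmean(call_delays_ms) <= max_avg_ms


def per_call_violations(call_delays_ms, max_each_ms):
    """Call-by-call commitment: indices of calls whose delay exceeded the bound."""
    return [i for i, d in enumerate(call_delays_ms) if d > max_each_ms]


delays = [40.0, 45.0, 50.0, 120.0, 42.0]  # hypothetical per-call delays in ms
print(average_commitment_met(delays, 60.0))  # True (mean is 59.4 ms)
print(per_call_violations(delays, 100.0))    # [3]
```

Here the group average passes, yet call 3 would breach a per-call absolute guarantee of 100 ms.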


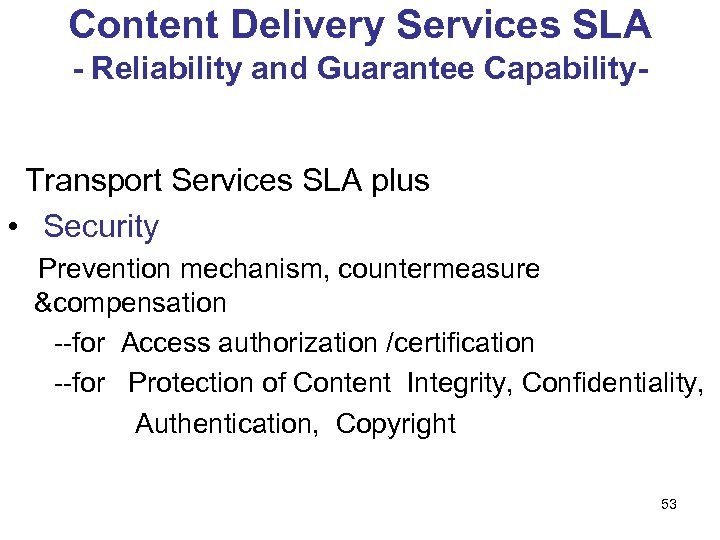

Content Delivery Services SLA: Reliability and Guarantee Capability
Transport Services SLA, plus
• Security: prevention mechanisms, countermeasures & compensation
  - for access authorization/certification
  - for protection of content integrity, confidentiality, authentication, copyright
53


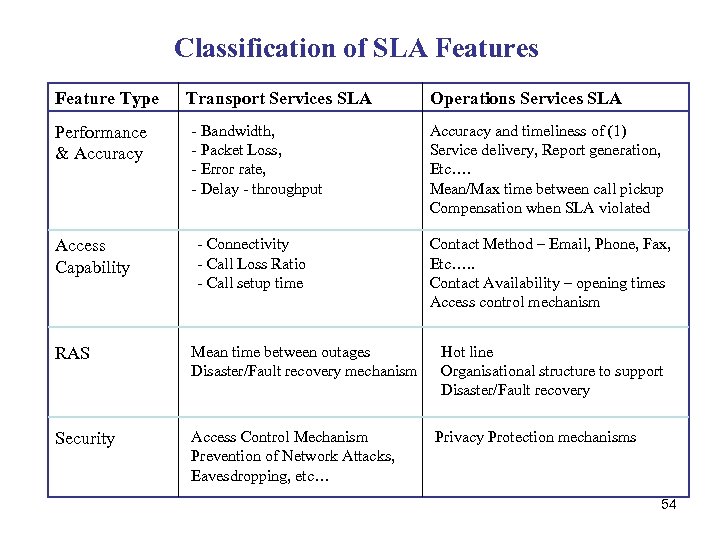

Classification of SLA Features

| Feature type | Transport Services SLA | Operations Services SLA |
|---|---|---|
| Performance & accuracy | Bandwidth, packet loss, error rate, delay, throughput | Accuracy and timeliness of service delivery, report generation, etc.; mean/max call pick-up time; compensation when the SLA is violated |
| Access capability | Connectivity, call loss ratio, call setup time | Contact method (email, phone, fax, etc.); contact availability (opening times); access control mechanism |
| RAS | Mean time between outages; disaster/fault recovery mechanism | Hot line; organisational structure to support disaster/fault recovery |
| Security | Access control mechanism; prevention of network attacks, eavesdropping, etc. | Privacy protection mechanisms |

54


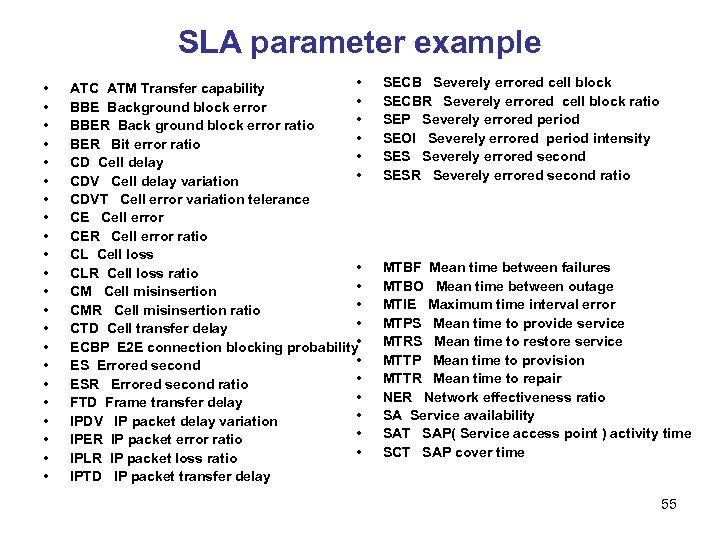

SLA Parameter Examples
• ATC: ATM transfer capability
• BBE: Background block error
• BBER: Background block error ratio
• BER: Bit error ratio
• CD: Cell delay
• CDV: Cell delay variation
• CDVT: Cell delay variation tolerance
• CE: Cell error
• CER: Cell error ratio
• CL: Cell loss
• CLR: Cell loss ratio
• CM: Cell misinsertion
• CMR: Cell misinsertion ratio
• CTD: Cell transfer delay
• ECBP: End-to-end connection blocking probability
• ES: Errored second
• ESR: Errored second ratio
• FTD: Frame transfer delay
• IPDV: IP packet delay variation
• IPER: IP packet error ratio
• IPLR: IP packet loss ratio
• IPTD: IP packet transfer delay
• SECB: Severely errored cell block
• SECBR: Severely errored cell block ratio
• SEP: Severely errored period
• SEOI: Severely errored period intensity
• SES: Severely errored second
• SESR: Severely errored second ratio
• MTBF: Mean time between failures
• MTBO: Mean time between outages
• MTIE: Maximum time interval error
• MTPS: Mean time to provide service
• MTRS: Mean time to restore service
• MTTP: Mean time to provision
• MTTR: Mean time to repair
• NER: Network effectiveness ratio
• SA: Service availability
• SAT: SAP (service access point) activity time
• SCT: SAP cover time
55
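A few of the IP parameters above can be computed directly from per-packet measurements. A minimal sketch, with stated assumptions: a packet whose `received_at` is `None` is counted as lost (standing in for Y.1540's loss timeout); IPDV uses the minimum observed delay as its reference, which is one choice among those the recommendation discusses, so check the reference the SLA actually adopts; and steady-state availability is computed as MTBF/(MTBF + MTTR), treating MTBF as mean up time between failures. All names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PacketRecord:
    """One packet seen at the ingress MP and, if delivered, at the egress MP."""
    sent_at: float                # seconds
    received_at: Optional[float]  # None means the packet was lost


def ip_metrics(packets):
    """Compute IPLR, mean IPTD and maximum IPDV for one measurement interval."""
    if not packets:
        raise ValueError("no packets in the measurement interval")
    delays = [p.received_at - p.sent_at for p in packets if p.received_at is not None]
    iplr = (len(packets) - len(delays)) / len(packets)
    if not delays:
        return {"IPLR": iplr, "IPTD_mean": None, "IPDV_max": None}
    iptd_mean = sum(delays) / len(delays)
    reference = min(delays)  # assumption: minimum delay as the IPDV reference
    ipdv_max = max(d - reference for d in delays)
    return {"IPLR": iplr, "IPTD_mean": iptd_mean, "IPDV_max": ipdv_max}


def steady_state_availability(mtbf_hours, mttr_hours):
    """SA from MTBF and MTTR, treating MTBF as mean up time between failures."""
    return mtbf_hours / (mtbf_hours + mttr_hours)
```

With four packets, one lost and delays of 20, 30 and 25 ms, this yields IPLR = 0.25, a mean IPTD of 25 ms and a maximum IPDV of 10 ms.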


56


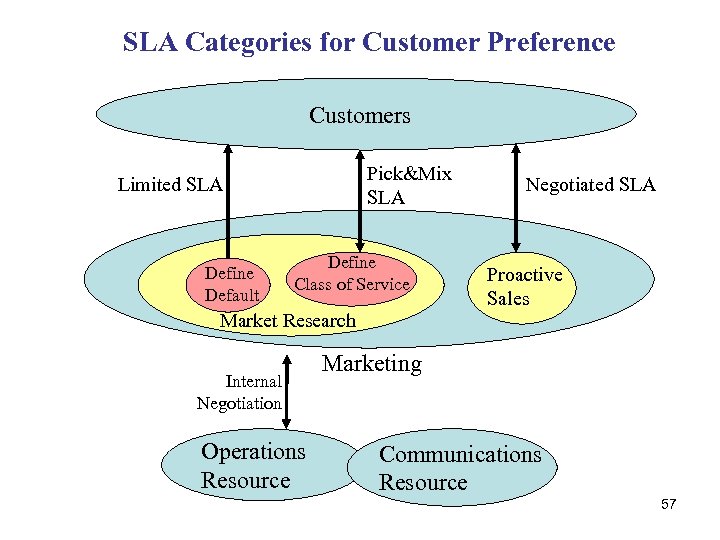

SLA Categories for Customer Preference (diagram)
• Limited SLA: a default SLA defined by the provider
• Pick & Mix SLA: customers choose from defined classes of service
• Negotiated SLA: agreed individually, driven by proactive sales
• Inputs shown: market research, marketing, internal negotiation, operations resources, communications resources
57


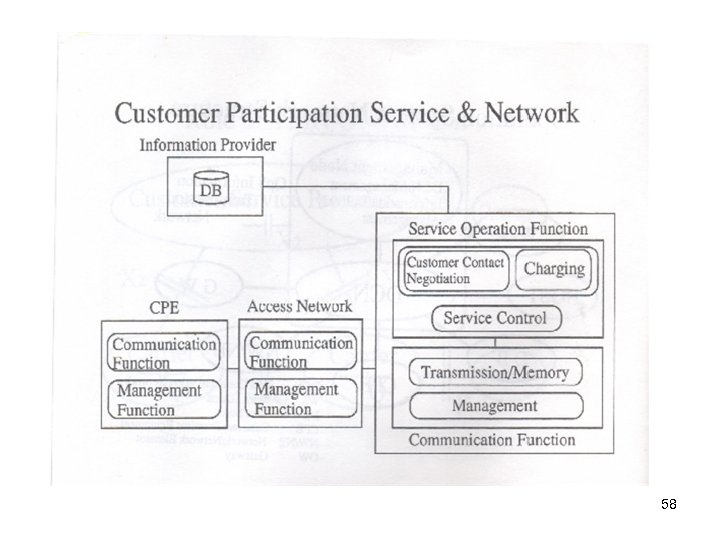

58


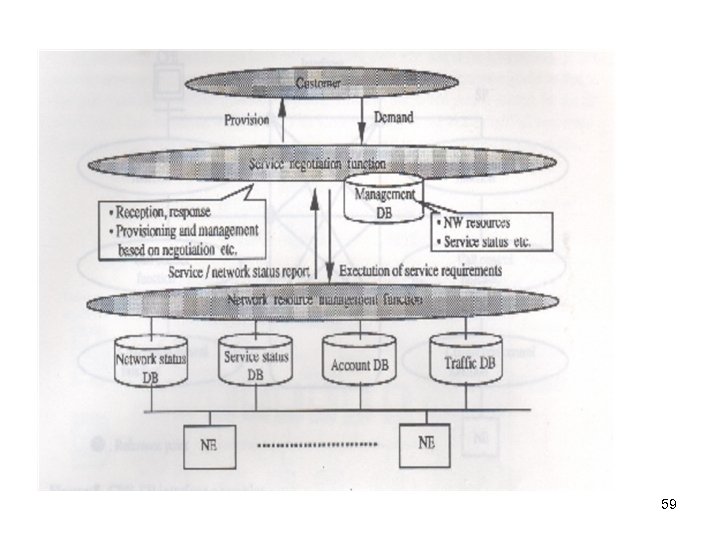

59


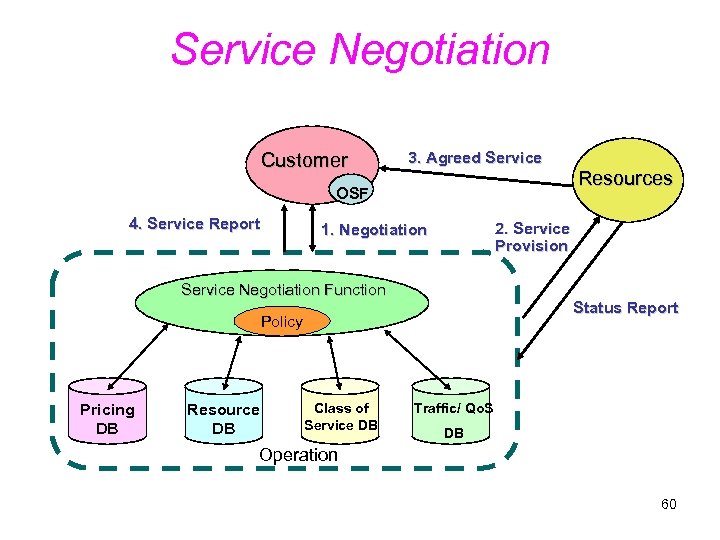

Service Negotiation (diagram)
• Flow between the Customer, the Service Negotiation Function and the resources OSF: 1. Negotiation, 2. Service Provision, 3. Agreed Service, 4. Service Report (with status reports)
• Databases: Policy, Pricing, Resource, Class of Service, Traffic/QoS; Operation
60
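Steps 1 and 2 of the negotiation can be sketched as a function that consults the class-of-service, resource and pricing databases before agreeing, followed by a provisioning step that reserves the agreed capacity. A minimal sketch: the in-memory dictionaries stand in for the DBs on the slide, and all names, capacities and prices are hypothetical. Steps 3 and 4 (service delivery and reporting) are not modeled.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# In-memory stand-ins for the DBs on the slide (all values hypothetical).
COS_DB = {"gold": {"max_delay_ms": 50}, "bronze": {"max_delay_ms": 200}}
PRICING_DB = {"gold": 10.0, "bronze": 2.0}  # price per Mbit/s per month
RESOURCE_DB = {"gold": 100, "bronze": 500}  # free capacity in Mbit/s


@dataclass
class Request:
    customer: str
    cos: str
    bandwidth_mbps: int
    budget: float  # maximum monthly price the customer accepts


@dataclass
class Agreement:
    customer: str
    cos: str
    bandwidth_mbps: int
    monthly_price: float


def negotiate(req: Request) -> Tuple[Optional[Agreement], str]:
    """Step 1: check the CoS, resource and pricing DBs; return an agreement or a reason."""
    if req.cos not in COS_DB:
        return None, "unknown class of service"
    if RESOURCE_DB[req.cos] < req.bandwidth_mbps:
        return None, "insufficient resources"
    price = PRICING_DB[req.cos] * req.bandwidth_mbps
    if price > req.budget:
        return None, f"price {price:.2f} exceeds budget"
    return Agreement(req.customer, req.cos, req.bandwidth_mbps, price), "agreed"


def provision(agreement: Agreement) -> None:
    """Step 2: reserve the agreed capacity so later negotiations see it as used."""
    RESOURCE_DB[agreement.cos] -= agreement.bandwidth_mbps
```

Returning a reason alongside a refusal gives the customer something to renegotiate against, for example a lower bandwidth or a cheaper class.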


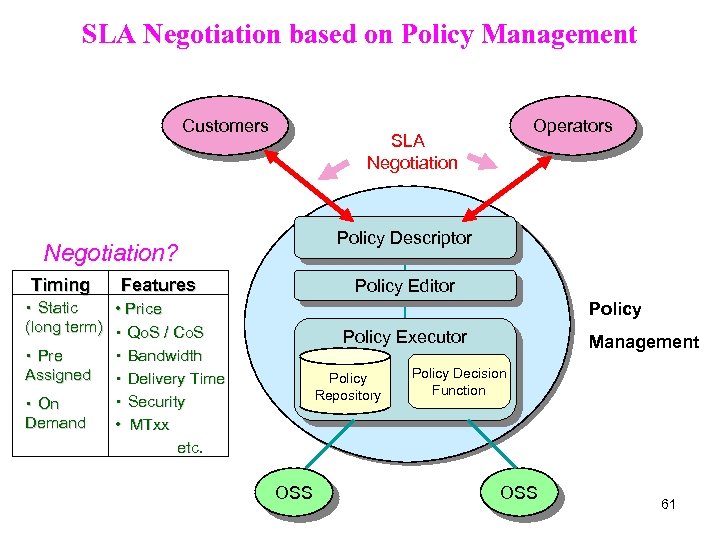

SLA Negotiation based on Policy Management (diagram)
• Negotiation timing: static (long term), pre-assigned, on demand
• SLA negotiation features: price, QoS/CoS, bandwidth, delivery time, security, MTxx, etc.
• Components: customers' policy descriptor; operators' policy editor; policy repository; policy decision function; policy executor; OSS management
61
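One way to picture the policy components is an ordered list of condition/action rules: the policy editor writes them into a repository, and the decision function evaluates a negotiation request against them, first match wins. A minimal sketch; the slide does not specify a policy language, so the rule format and the three example policies are hypothetical. The policy executor (passing the decision on to the OSS) is not modeled.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Policy:
    name: str
    condition: Callable[[Dict], bool]  # evaluated against a negotiation request
    action: str                        # "accept", "reject" or "refer"


class PolicyRepository:
    """Ordered store written by the policy editor and read by the decision function."""

    def __init__(self) -> None:
        self._policies: List[Policy] = []

    def add(self, policy: Policy) -> None:
        self._policies.append(policy)

    def __iter__(self):
        return iter(self._policies)


def decide(repo: PolicyRepository, request: Dict, default: str = "refer") -> Tuple[str, str]:
    """Policy decision function: the first matching policy wins, else the default."""
    for policy in repo:
        if policy.condition(request):
            return policy.action, policy.name
    return default, "default"


# Hypothetical operator policies keyed on the negotiation timings from the slide.
repo = PolicyRepository()
repo.add(Policy("cap-on-demand-gold",
                lambda r: r["timing"] == "on_demand" and r["cos"] == "gold" and r["bandwidth"] > 50,
                "reject"))
repo.add(Policy("accept-static", lambda r: r["timing"] == "static", "accept"))
repo.add(Policy("accept-on-demand", lambda r: r["timing"] == "on_demand", "accept"))
```

Requests that no rule covers (here, pre-assigned ones) fall through to "refer", leaving them for a human operator rather than guessing.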


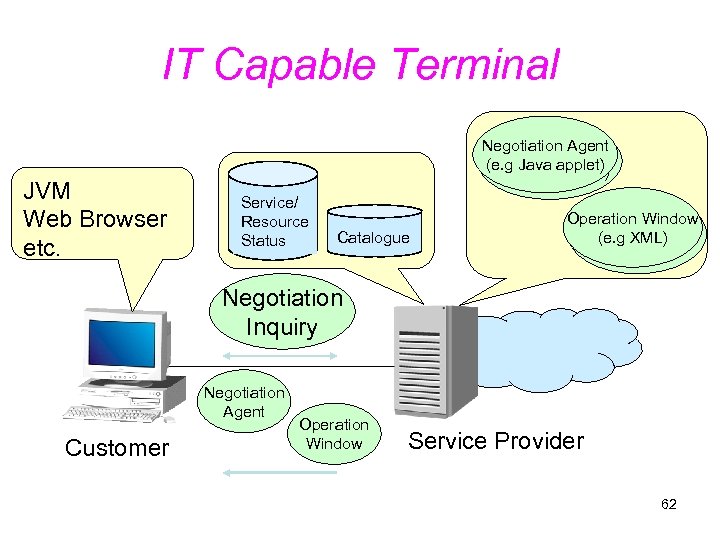

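Negotiation Agents (diagram)
• Customer side: IT-capable terminal (JVM, web browser, etc.) running a negotiation agent (e.g. a Java applet) in its operation window
• Service provider side: operation window publishing the service/resource status catalogue (e.g. in XML), and a negotiation agent
• Exchange: negotiation inquiries between the customer's and the service provider's operation windows
62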
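To make the XML catalogue idea concrete, a customer-side agent could parse the provider's catalogue, shortlist offers, and serialize an inquiry. A minimal sketch using Python's standard `xml.etree.ElementTree`; the element and attribute names (`catalogue`, `service`, `available`, `inquiry`, ...) are hypothetical, not a standardized schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical catalogue published by the service provider's operation window.
CATALOGUE_XML = """<catalogue provider="example-sp">
  <service id="vpn-gold" cos="gold" available="true">
    <bandwidth unit="Mbps">100</bandwidth>
    <price currency="USD" period="month">1000</price>
  </service>
  <service id="vpn-bronze" cos="bronze" available="false">
    <bandwidth unit="Mbps">20</bandwidth>
    <price currency="USD" period="month">50</price>
  </service>
</catalogue>"""


def offers(xml_text, min_bandwidth):
    """Negotiation agent: list available services meeting a bandwidth floor, cheapest first."""
    root = ET.fromstring(xml_text)
    result = []
    for svc in root.findall("service"):
        if svc.get("available") != "true":
            continue
        bandwidth = int(svc.findtext("bandwidth"))
        if bandwidth >= min_bandwidth:
            result.append({
                "id": svc.get("id"),
                "cos": svc.get("cos"),
                "bandwidth": bandwidth,
                "price": float(svc.findtext("price")),
            })
    return sorted(result, key=lambda o: o["price"])


def inquiry(offer, bandwidth):
    """Serialize a negotiation inquiry for the chosen offer (hypothetical format)."""
    req = ET.Element("inquiry", service=offer["id"])
    ET.SubElement(req, "bandwidth", unit="Mbps").text = str(bandwidth)
    return ET.tostring(req, encoding="unicode")
```

A plain-text format like this lets the agent run on any terminal with a parser, which is the point of separating the catalogue from the agent that reads it.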


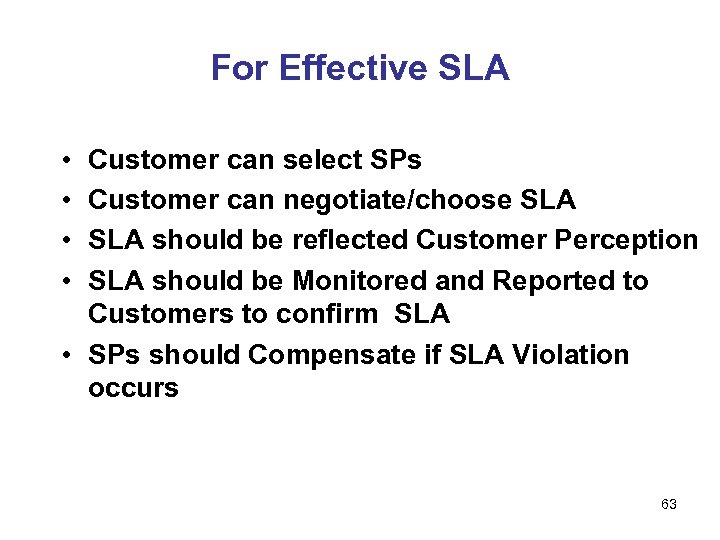

For an Effective SLA
• Customers can select SPs
• Customers can negotiate/choose SLAs
• The SLA should reflect customer perception
• The SLA should be monitored and reported to customers so they can confirm it is met
• SPs should compensate customers if an SLA violation occurs
63
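Compensation on violation is often expressed as a service credit computed from the monitored value. A minimal sketch, assuming a hypothetical availability-based credit schedule; real terms (thresholds, percentages, reporting period) are whatever the SLA specifies.

```python
# Hypothetical credit schedule, worst tier first:
# (availability below this threshold, credit as a fraction of the monthly fee).
CREDIT_TIERS = [(0.99, 0.25), (0.995, 0.10), (0.999, 0.05)]


def measured_availability(period_minutes, outage_minutes):
    """Fraction of the reporting period during which the service was available."""
    return (period_minutes - outage_minutes) / period_minutes


def service_credit(monthly_fee, availability):
    """Return the compensation owed for one reporting period."""
    for threshold, fraction in CREDIT_TIERS:
        if availability < threshold:
            return monthly_fee * fraction
    return 0.0


MONTH_MINUTES = 30 * 24 * 60  # 43,200 minutes in a 30-day month
```

For instance, 60 minutes of outage in a 30-day month gives about 99.86% availability, which falls in the 5% tier under this schedule.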


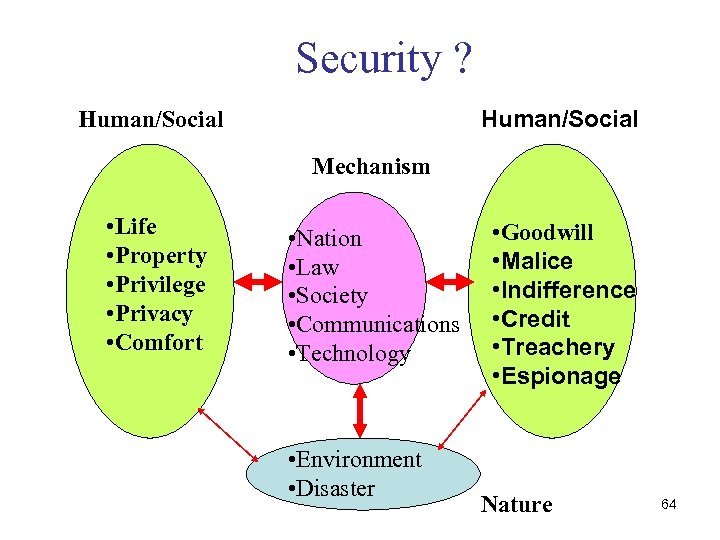

Security? (diagram: Human/Social, Mechanism, Nature)
Life • Property • Privilege • Privacy • Comfort • Nation • Law • Society • Communications • Technology • Environment • Disaster • Goodwill • Malice • Indifference • Credit • Treachery • Espionage
64


Security Management
• Management of human/society: morals, ethics and education; law, regulation, community, privacy; vigilance?
• Management of information distribution/exchange: safe, secure, accurate and comfortable ICT networks/services
• Management of the environment: prognosis, disaster prevention, environmental preservation
65


IP/e-Business Security Management
• Network security
  - RAS
  - Privacy (tapping, fairness, secrecy of communications, customer information)
  - Attacks: physical, logical
• Information distribution security
  - Integrity of contents and delivery
  - Human verification, certification, justification
• e-Business security
  - Ensuring real and virtual money: forgery (fakes), fraud, robbery with/without violence, credibility, confidence
  - Privacy (anonymity, private information leakage)
66


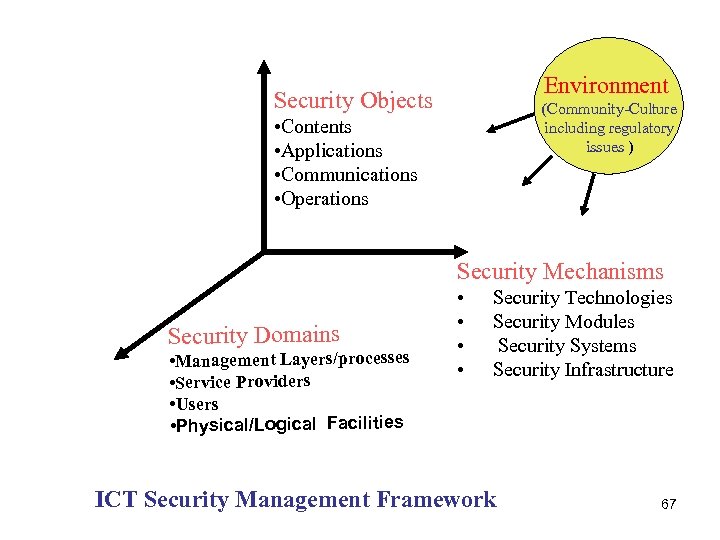

ICT Security Management Framework (diagram)
• Environment: community/culture, including regulatory issues
• Security objects: contents, applications, communications, operations
• Security domains: management layers/processes, service providers, users, physical/logical facilities
• Security mechanisms: security technologies, security modules, security systems, security infrastructure
67


Security Objects
• Contents: completeness (integrity), secrecy, certification, copyright
• Applications: viruses, worms, destruction, falsification, phishing
• Communications: access/admission, routing, AAA (Authentication, Authorization and Accounting), tapping, pretence, espionage, IP spoofing; attacks (intrusion, denial of service, service degradation, jamming, etc.)
• Operations: privacy, leakage, risk
68


Security Domains
• Management layers/processes: OSI 7-layer services, TMN logical layers, FAB (fulfillment, assurance and billing)
• Service providers and partners: contracted SP, virtual SP, ASP, CSP, management SP, network operator, VMNO, service/operation agent
• Users: enterprise customers, consumers, end users, customer representatives, shareholders
• Physical/logical facilities: terminals, CPE/CPN, transmission, service nodes, storage, data centers, call centers, addresses/phone numbers, routing tables, domain name servers
69


Security Mechanisms/Technologies
• Security technologies: encryption, cryptography, authentication, firewalls, IPsec (Security Architecture for IP)
• Security modules: SOCKS, digital signatures, secure protocols (e.g. IKE: Internet Key Exchange protocol), biometrics, intrusion detection/blocking, anti-virus, IC cards, electronic cash
• Security systems and infrastructure: PKI (Public Key Infrastructure), PKI authorities, KES (Key Escrowed System), certification authorities, SET (Secure Electronic Transaction), standardization, regulation, legal and administrative protection, penalties
70
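As one small, concrete instance of protecting content integrity and authenticity, a message authentication code lets a receiver detect altered content. A minimal sketch using Python's standard `hmac`, `hashlib` and `secrets` modules; note this is a symmetric, shared-key technique swapped in for illustration, whereas the digital signatures and PKI on the slide rely on asymmetric key pairs and certificates. How the shared key is distributed is left out of scope.

```python
import hashlib
import hmac
import secrets


def make_tag(key: bytes, content: bytes) -> str:
    """Sender: compute an HMAC-SHA256 tag over the delivered content."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify(key: bytes, content: bytes, received_tag: str) -> bool:
    """Receiver: recompute the tag and compare in constant time."""
    return hmac.compare_digest(make_tag(key, content), received_tag)


key = secrets.token_bytes(32)  # shared secret; key distribution is out of scope here
```

Any change to the content, or use of a different key, makes verification fail.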
