d2c1253dcac8fd28392429d227c50f16.ppt

- Number of slides: 34

Pip: Detecting the Unexpected in Distributed Systems

Patrick Reynolds, reynolds@cs.duke.edu

Collaborators: Janet Wiener, Charles Killian, Jeffrey Mogul, Amin Vahdat, Mehul Shah (UCSD, HP Labs)

http://issg.cs.duke.edu/pip/

Introduction
- Distributed systems are essential to the Internet
- Distributed systems are complex
  - Harder to debug than centralized systems
- Pip uses causal paths to find bugs
  - Characterize system behavior
  - Deviations from expected behavior often indicate bugs
- Experimental results show that Pip is effective
  - Found 19 bugs in 6 test systems

NSDI - May 8th, 2006

Challenges of debugging distributed systems
- Distributed systems have more bugs
  - Parallelism is hard
  - New sources of failure
    - More components = more faults
    - Network errors
    - Security breaches
- Finding bugs is harder
  - More nodes, events, and messages to keep track of
  - Applications may cross administrative domains
  - Bugs on one node may be caused by events on another

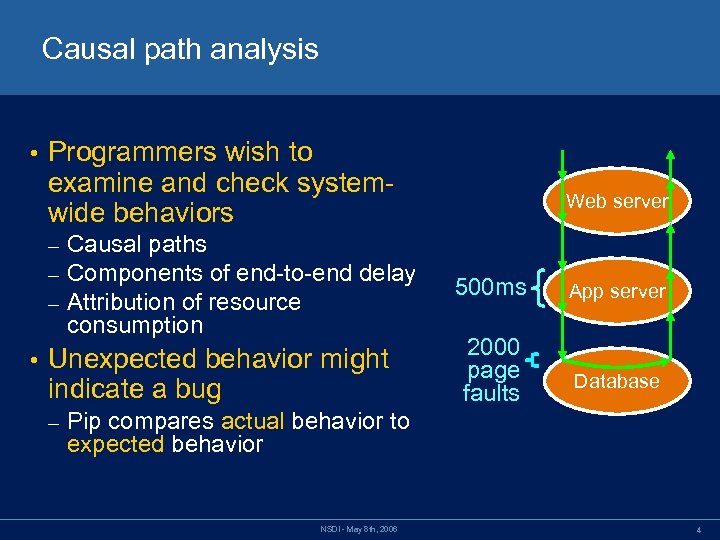

Causal path analysis
- Programmers wish to examine and check system-wide behaviors
  - Causal paths
  - Components of end-to-end delay
  - Attribution of resource consumption
- Unexpected behavior might indicate a bug
- Pip compares actual behavior to expected behavior

(Figure: a request path through Web server, App server, and Database, annotated “500 ms” and “2000 page faults”.)

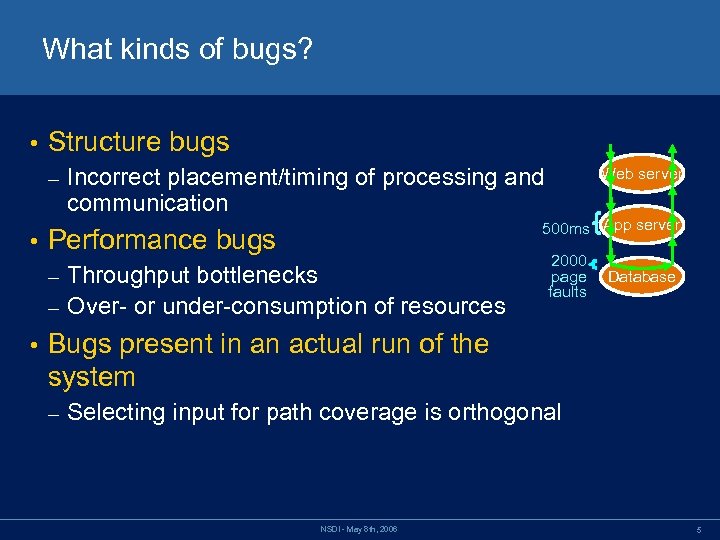

What kinds of bugs?
- Structure bugs
  - Incorrect placement or timing of processing and communication
- Performance bugs
  - Throughput bottlenecks
  - Over- or under-consumption of resources
- Bugs present in an actual run of the system
  - Selecting inputs that achieve good path coverage is an orthogonal problem

(Figure: a request path through Web server, App server, and Database, annotated “500 ms” and “2000 page faults”.)

Three target audiences
- Primary programmer
  - Debugging or optimizing his/her own system
- Secondary programmer
  - Inheriting a project or joining a programming team
  - Discovery: learning how the system behaves
- Operator
  - Monitoring a running system for unexpected behavior
  - Performing regression tests after a change

Outline
- Introduction
- Pip
  - Expressing expected behavior
  - Exploring application behavior
- Results
- Conclusions

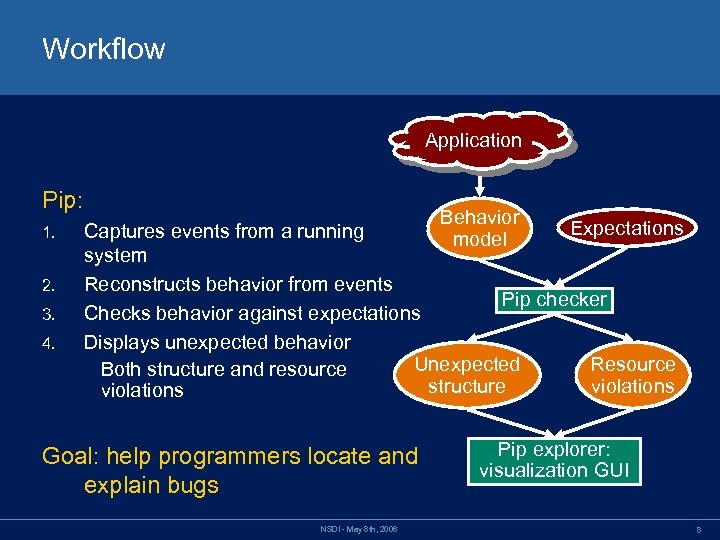

Workflow

Pip:
1. Captures events from a running system
2. Reconstructs behavior from events
3. Checks behavior against expectations
4. Displays unexpected behavior
   - Both structure and resource violations

Goal: help programmers locate and explain bugs

(Figure labels: Application, Behavior model, Expectations, Pip checker, Unexpected structure, Resource violations, Pip explorer: visualization GUI.)

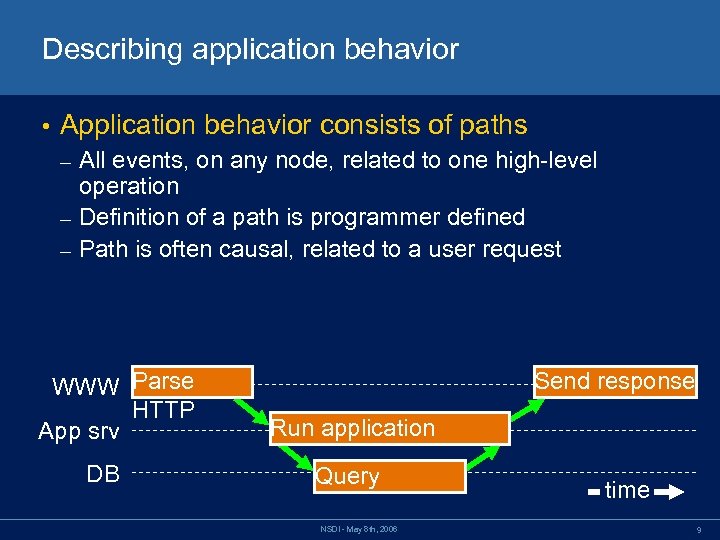

Describing application behavior
- Application behavior consists of paths
  - All events, on any node, related to one high-level operation
  - What constitutes a path is defined by the programmer
  - A path is often causal, related to a user request

(Figure: timeline of one path across hosts WWW, App srv, and DB, with tasks Parse HTTP, Run application, Query, and Send response; horizontal axis is time.)

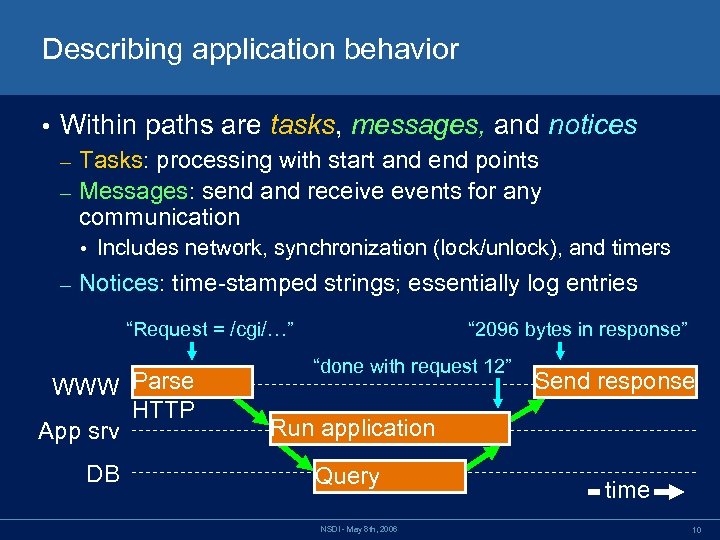

Describing application behavior
- Within paths are tasks, messages, and notices
  - Tasks: processing with start and end points
  - Messages: send and receive events for any communication
    - Includes network, synchronization (lock/unlock), and timers
  - Notices: time-stamped strings; essentially log entries

(Figure: the WWW / App srv / DB timeline with tasks Parse HTTP, Run application, Query, and Send response, and notices “Request = /cgi/…”, “2096 bytes in response”, and “done with request 12”.)
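The path model above can be sketched as a small data structure. This is a minimal illustration assuming a hypothetical in-memory representation: the class names (`Task`, `Message`, `Notice`, `Path`) and their fields are assumptions for this example, not Pip's actual trace format.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Task:
    # Processing with explicit start and end timestamps (seconds).
    name: str
    host: str
    start: float
    end: float

@dataclass
class Message:
    # One communication: a send event and (once observed) a receive event.
    msg_id: str
    src: str
    dst: str
    sent_at: float
    received_at: Optional[float] = None

@dataclass
class Notice:
    # A time-stamped string, essentially a log entry.
    host: str
    at: float
    text: str

@dataclass
class Path:
    # All events, on any node, related to one high-level operation.
    path_id: str
    tasks: List[Task] = field(default_factory=list)
    messages: List[Message] = field(default_factory=list)
    notices: List[Notice] = field(default_factory=list)

    def hosts(self) -> set:
        # Every host that ran a task, logged a notice, or sent/received.
        hs = {t.host for t in self.tasks} | {n.host for n in self.notices}
        for m in self.messages:
            hs.update((m.src, m.dst))
        return hs

# A hypothetical path loosely following the WWW / App srv / DB figure.
p = Path("req-12")
p.tasks.append(Task("Parse HTTP", "WWW", 0.000, 0.002))
p.messages.append(Message("m1", "WWW", "AppSrv", 0.002, 0.003))
p.tasks.append(Task("Run application", "AppSrv", 0.003, 0.040))
p.messages.append(Message("m2", "AppSrv", "DB", 0.010, 0.011))
p.tasks.append(Task("Query", "DB", 0.011, 0.030))
p.notices.append(Notice("WWW", 0.041, "2096 bytes in response"))
print(sorted(p.hosts()))
```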


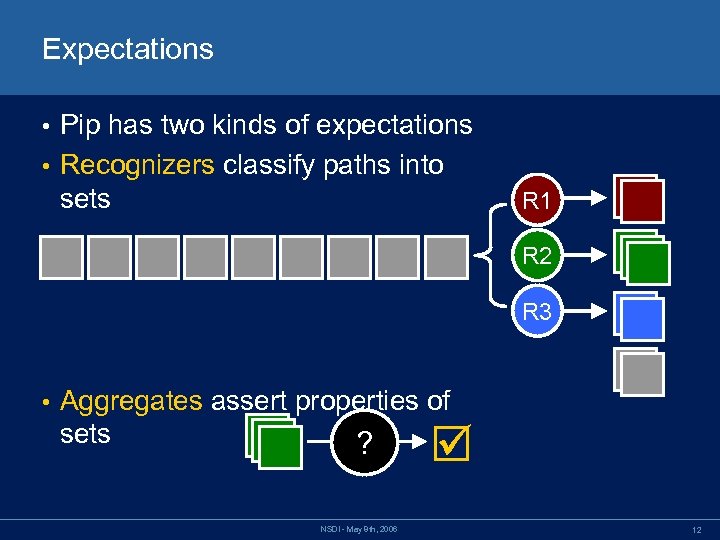

Expectations
- Pip has two kinds of expectations
  - Recognizers classify paths into sets
  - Aggregates assert properties of sets

(Figure labels: R1, R2, R3, ?)

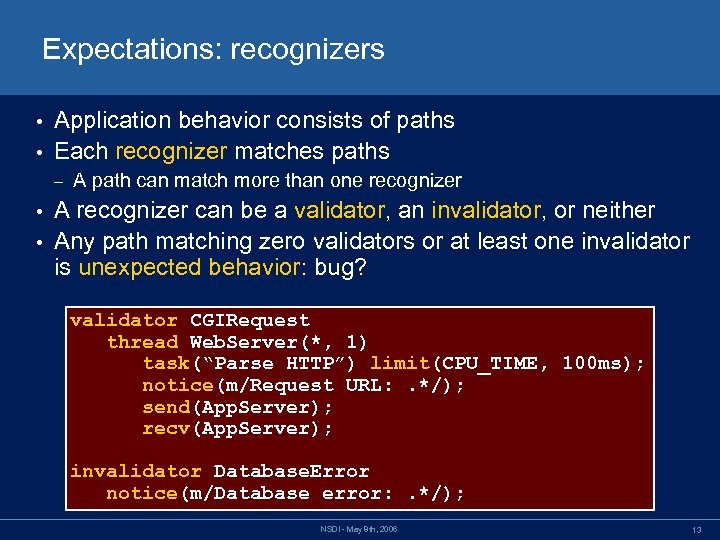

Expectations: recognizers
- Application behavior consists of paths
- Each recognizer matches paths
  - A path can match more than one recognizer
- A recognizer can be a validator, an invalidator, or neither
- Any path matching zero validators or at least one invalidator is unexpected behavior (a possible bug)

```
validator CGIRequest
  thread WebServer(*, 1)
    task("Parse HTTP") limit(CPU_TIME, 100 ms);
    notice(m/Request URL: .*/);
    send(AppServer);
    recv(AppServer);

invalidator DatabaseError
  notice(m/Database error: .*/);
```
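The classification rule above (a path is unexpected if it matches zero validators or at least one invalidator) can be sketched in a few lines. This assumes each recognizer is reduced to a plain predicate over a simplified list of `(kind, value)` events; `classify` and `has_notice` are hypothetical helpers, and the two recognizers are heavily simplified stand-ins for `CGIRequest` and `DatabaseError`, not a translation of Pip's matcher.

```python
import re
from typing import Callable, Dict, List, Tuple

# Simplified event and recognizer types (assumptions for this sketch).
Event = Tuple[str, str]
Recognizer = Callable[[List[Event]], bool]

def classify(path: List[Event],
             validators: Dict[str, Recognizer],
             invalidators: Dict[str, Recognizer]) -> Tuple[bool, List[str]]:
    """Return (expected?, names of every recognizer the path matched)."""
    valid = [n for n, r in validators.items() if r(path)]
    invalid = [n for n, r in invalidators.items() if r(path)]
    # Unexpected: zero validators matched OR at least one invalidator matched.
    expected = bool(valid) and not invalid
    return expected, valid + invalid

def has_notice(pattern: str) -> Recognizer:
    # Matches a path containing a notice whose text matches the regex.
    rx = re.compile(pattern)
    return lambda path: any(k == "notice" and rx.search(v) for k, v in path)

validators = {"CGIRequest": has_notice(r"^Request URL: ")}
invalidators = {"DatabaseError": has_notice(r"^Database error: ")}

ok = [("task", "Parse HTTP"), ("notice", "Request URL: /index")]
bad = ok + [("notice", "Database error: timeout")]
print(classify(ok, validators, invalidators))   # (True, ['CGIRequest'])
print(classify(bad, validators, invalidators))  # (False, ['CGIRequest', 'DatabaseError'])
```

A path can match several recognizers at once, which is why `classify` reports every match rather than the first one.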

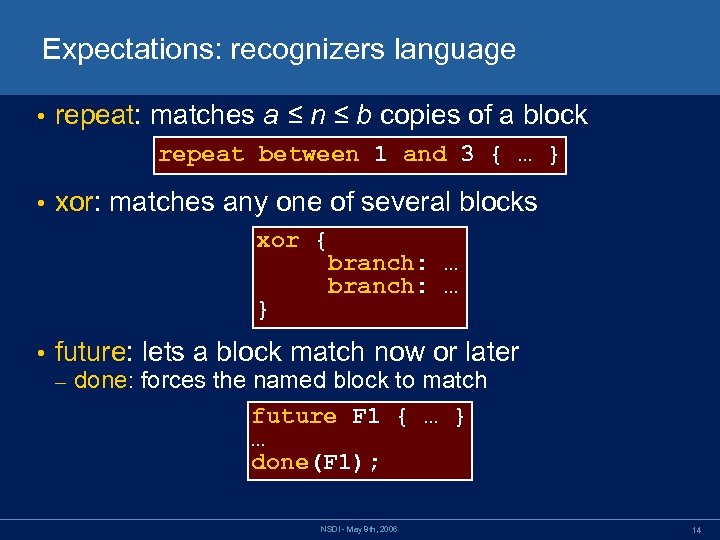

Expectations: recognizer language
- repeat: matches a ≤ n ≤ b copies of a block
- xor: matches any one of several blocks
- future: lets a block match now or later
  - done: forces the named block to match

```
repeat between 1 and 3 { … }

xor { branch: … }

future F1 { … }
…
done(F1);
```
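To show what `repeat` and `xor` mean operationally, here is a small backtracking matcher over sequences of event labels, in the style of parser combinators. It is an illustrative sketch under our own assumptions: the combinator names (`ev`, `seq_of`, `xor`, `repeat`, `matches`) are hypothetical, only flat label sequences are matched, and `future`/`done` are not modeled.

```python
from typing import Callable, Iterator, List

# A pattern maps (events, start index) to every index where a match could end.
Pattern = Callable[[List[str], int], Iterator[int]]

def ev(label: str) -> Pattern:
    # Match exactly one event with the given label.
    def m(seq, i):
        if i < len(seq) and seq[i] == label:
            yield i + 1
    return m

def seq_of(*parts: Pattern) -> Pattern:
    # Match the parts one after another, backtracking over alternatives.
    def m(seq, i):
        if not parts:
            yield i
            return
        rest = seq_of(*parts[1:])
        for j in parts[0](seq, i):
            yield from rest(seq, j)
    return m

def xor(*branches: Pattern) -> Pattern:
    # Match any one of several blocks.
    def m(seq, i):
        for b in branches:
            yield from b(seq, i)
    return m

def repeat(a: int, b: int, block: Pattern) -> Pattern:
    # Match n consecutive copies of block, for a <= n <= b.
    def m(seq, i, n=0):
        if n >= a:
            yield i
        if n < b:
            for j in block(seq, i):
                if j > i:  # guard against zero-length loops
                    yield from m(seq, j, n + 1)
    return m

def matches(p: Pattern, seq: List[str]) -> bool:
    # The whole event sequence must be consumed.
    return any(end == len(seq) for end in p(seq, 0))

rec = seq_of(ev("recv"), repeat(1, 3, xor(ev("read"), ev("write"))), ev("send"))
print(matches(rec, ["recv", "read", "write", "send"]))                 # True
print(matches(rec, ["recv", "send"]))                                  # False: zero copies
print(matches(rec, ["recv", "read", "read", "read", "read", "send"]))  # False: four copies
```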

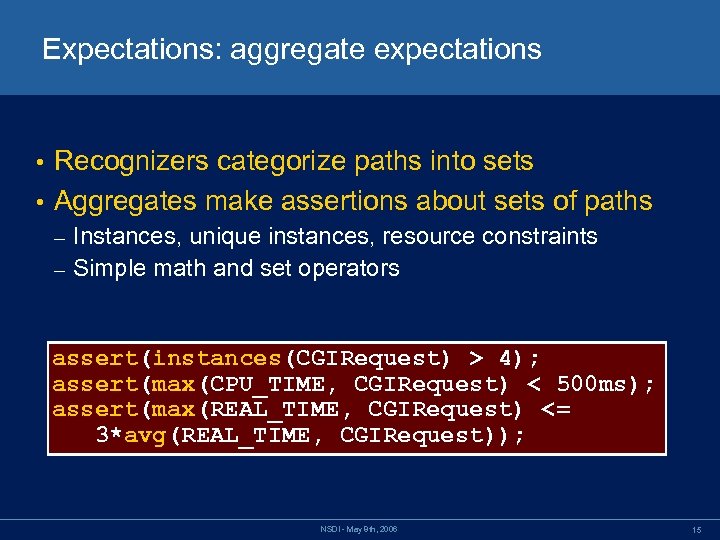

Expectations: aggregate expectations
- Recognizers categorize paths into sets
- Aggregates make assertions about sets of paths
  - Instances, unique instances, resource constraints
  - Simple math and set operators

```
assert(instances(CGIRequest) > 4);
assert(max(CPU_TIME, CGIRequest) < 500 ms);
assert(max(REAL_TIME, CGIRequest) <= 3*avg(REAL_TIME, CGIRequest));
```
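The three assertions above can be mirrored in Python to make their semantics concrete. This sketch assumes the recognizer stage has already produced, for each recognizer, a list of per-path metric values; the metric values and the helper names (`instances`, `agg_max`, `agg_avg`) are hypothetical, and all times are in milliseconds.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical per-path metrics for the paths matched by each recognizer (ms).
matched: Dict[str, List[Dict[str, float]]] = {
    "CGIRequest": [
        {"CPU_TIME": 40, "REAL_TIME": 120},
        {"CPU_TIME": 55, "REAL_TIME": 150},
        {"CPU_TIME": 38, "REAL_TIME": 110},
        {"CPU_TIME": 61, "REAL_TIME": 200},
        {"CPU_TIME": 47, "REAL_TIME": 140},
    ],
}

def instances(rec: str) -> int:
    # Number of paths the recognizer matched.
    return len(matched[rec])

def agg_max(metric: str, rec: str) -> float:
    return max(p[metric] for p in matched[rec])

def agg_avg(metric: str, rec: str) -> float:
    return mean(p[metric] for p in matched[rec])

# Equivalents of the three aggregate assertions (times in ms).
checks = {
    "instances > 4": instances("CGIRequest") > 4,
    "max CPU_TIME < 500 ms": agg_max("CPU_TIME", "CGIRequest") < 500,
    "max REAL_TIME <= 3*avg REAL_TIME":
        agg_max("REAL_TIME", "CGIRequest") <= 3 * agg_avg("REAL_TIME", "CGIRequest"),
}
for name, ok in checks.items():
    print(f"{name}: {'pass' if ok else 'FAIL'}")
```

Unlike a recognizer, which judges one path at a time, each check here looks at the whole set, so a single slow path can only fail the third assertion relative to its peers.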

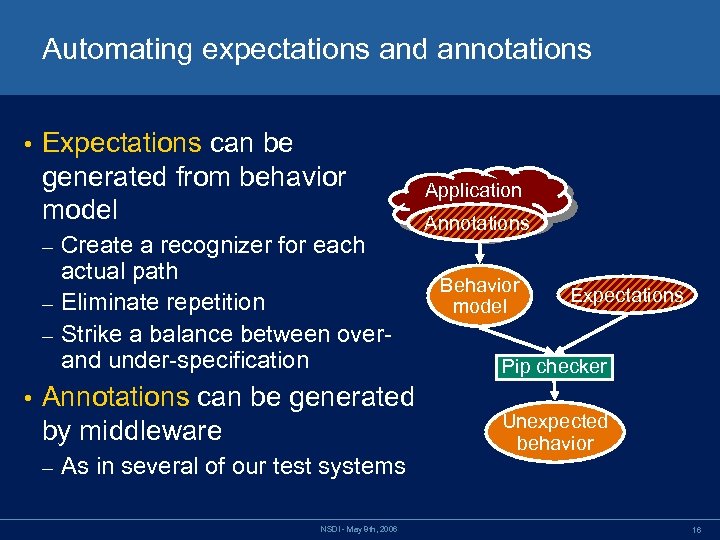

Automating expectations and annotations
- Expectations can be generated from a behavior model
  - Create a recognizer for each actual path
  - Eliminate repetition
  - Strike a balance between over- and under-specification
- Annotations can be generated by middleware
  - As in several of our test systems

(Figure labels: Application, Annotations, Behavior model, Expectations, Pip checker, Unexpected behavior.)
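One way to read "create a recognizer for each actual path, eliminate repetition" is sketched below: collapse runs of identical events, group traces that share the same event order, and widen each run into a `repeat between lo and hi` range. This is a hypothetical heuristic for illustration, not Pip's generator; `generalize` and `infer` are assumed names. Using exactly the observed minimum and maximum leans toward over-specification, which is the balance the slide mentions.

```python
from itertools import groupby
from typing import Dict, List, Tuple

def generalize(path: List[str]) -> Tuple[Tuple[str, int], ...]:
    # Collapse each run of identical consecutive events into (event, run length).
    return tuple((label, len(list(run))) for label, run in groupby(path))

def infer(paths: List[List[str]]) -> List[str]:
    # Group traces by event order (ignoring run lengths), then widen each run
    # to the smallest and largest count observed at that position.
    shapes: Dict[Tuple[str, ...], List[List[int]]] = {}
    for p in paths:
        g = generalize(p)
        key = tuple(label for label, _ in g)
        counts = [c for _, c in g]
        if key not in shapes:
            shapes[key] = [[c, c] for c in counts]
        else:
            for bounds, c in zip(shapes[key], counts):
                bounds[0], bounds[1] = min(bounds[0], c), max(bounds[1], c)
    recognizers = []
    for key, bounds in shapes.items():
        parts = [label if lo == hi == 1
                 else f"repeat between {lo} and {hi} {{ {label} }}"
                 for label, (lo, hi) in zip(key, bounds)]
        recognizers.append("; ".join(parts))
    return recognizers

traces = [
    ["recv", "read", "read", "send"],
    ["recv", "read", "send"],
    ["recv", "read", "read", "read", "send"],
]
for r in infer(traces):
    print(r)  # recv; repeat between 1 and 3 { read }; send
```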

Exploring behavior
- The expectations checker generates lists of valid and invalid paths
- Explore both sets
  - Why did invalid paths occur?
  - Is any unexpected behavior misclassified as valid?
    - Insufficiently constrained expectations
    - Pip may be unable to express all expectations

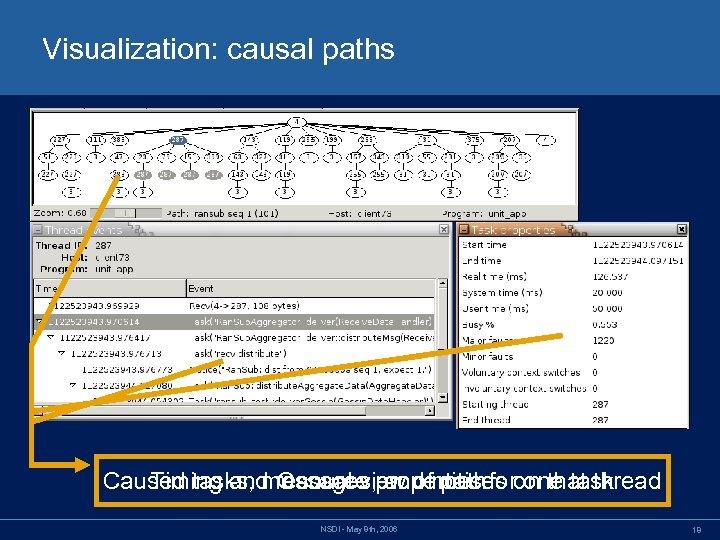

Visualization: causal paths
- Causal view of path
- Caused tasks, messages, and notices on that thread
- Timing and resource properties for one task

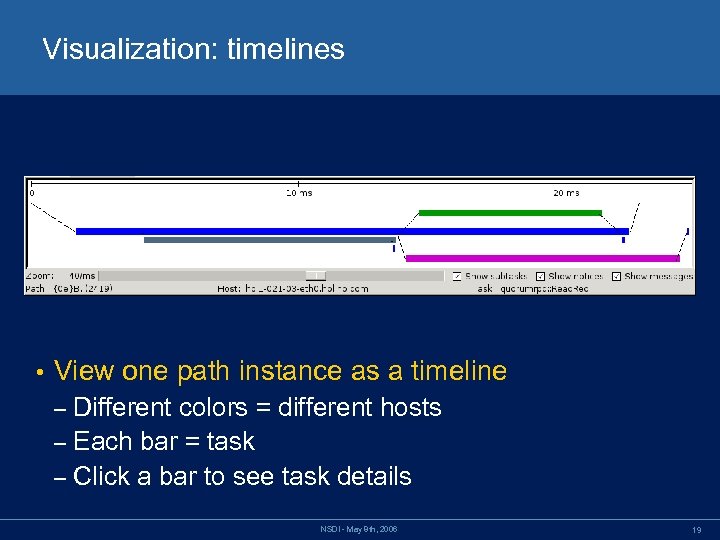

Visualization: timelines
- View one path instance as a timeline
  - Different colors = different hosts
  - Each bar = task
  - Click a bar to see task details

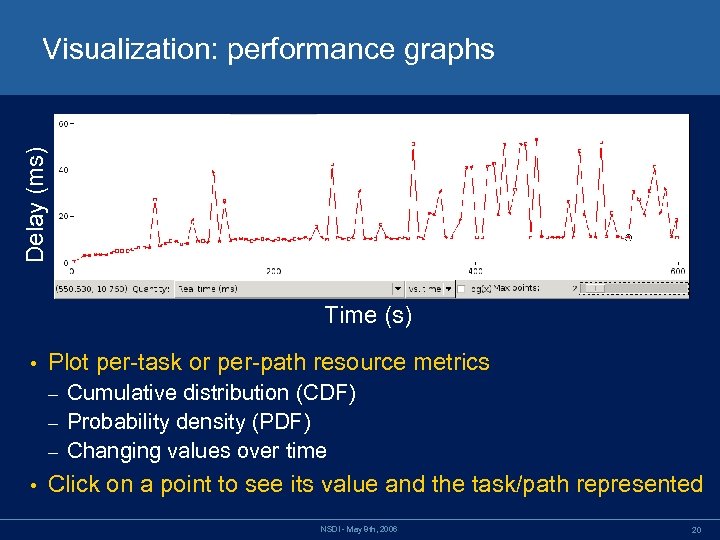

Visualization: performance graphs
- Plot per-task or per-path resource metrics
  - Cumulative distribution (CDF)
  - Probability density (PDF)
  - Changing values over time
- Click on a point to see its value and the task/path represented

(Figure: example plot of Delay (ms) against Time (s).)
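The CDF view plots, for each observed value, the fraction of samples at or below it. A minimal sketch of computing those points from per-path delays; the function name `empirical_cdf` and the sample values are hypothetical.

```python
from bisect import bisect_right
from typing import List, Tuple

def empirical_cdf(samples: List[float]) -> List[Tuple[float, float]]:
    # For each distinct value x, the fraction of samples that are <= x.
    xs = sorted(samples)
    n = len(xs)
    return [(x, bisect_right(xs, x) / n) for x in sorted(set(xs))]

delays_ms = [12.0, 15.5, 12.0, 40.2, 18.3]  # hypothetical per-path delays
for value, frac in empirical_cdf(delays_ms):
    print(f"{value:6.1f} ms  {frac:.2f}")
```

A long flat stretch before the last step, as with 40.2 ms here, is the kind of outlier the graph makes easy to click on and inspect.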

Results
- We have applied Pip to several distributed systems:
  - FAB: distributed block store
  - SplitStream: DHT-based multicast protocol
  - Others: RanSub, Bullet, SWORD, Oracle of Bacon
- We have found unexpected behavior in each system
- We have fixed bugs in some systems
  - … and used Pip to verify that the behavior was fixed

Results: SplitStream
- DHT-based multicast protocol
  - 13 bugs found, 12 fixed
    - 11 found using expectations, 2 found using GUI
- Structural bug: some nodes have up to 25 children when they should have at most 18
  - This bug was fixed and later recurred
  - Root cause #1: variable shadowing
  - Root cause #2: failed to register a callback
- How discovered: first in the explorer GUI, confirmed with automated checking

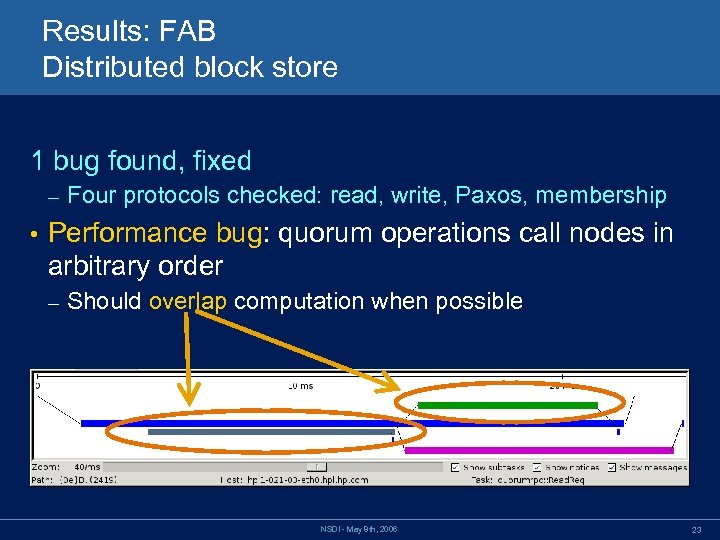

Results: FAB
- Distributed block store
  - 1 bug found, fixed
- Four protocols checked: read, write, Paxos, membership
- Performance bug: quorum operations call nodes in arbitrary order
  - Should overlap computation when possible

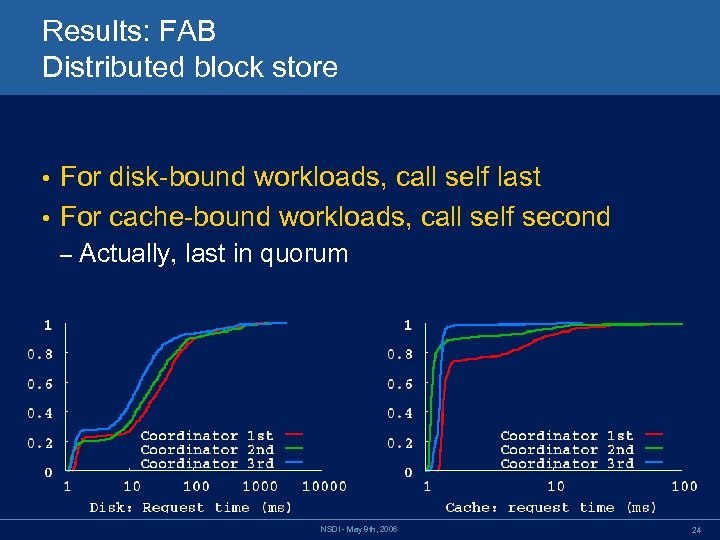

Results: FAB
- Distributed block store
- For disk-bound workloads, call self last
- For cache-bound workloads, call self second
  - Actually, last in quorum

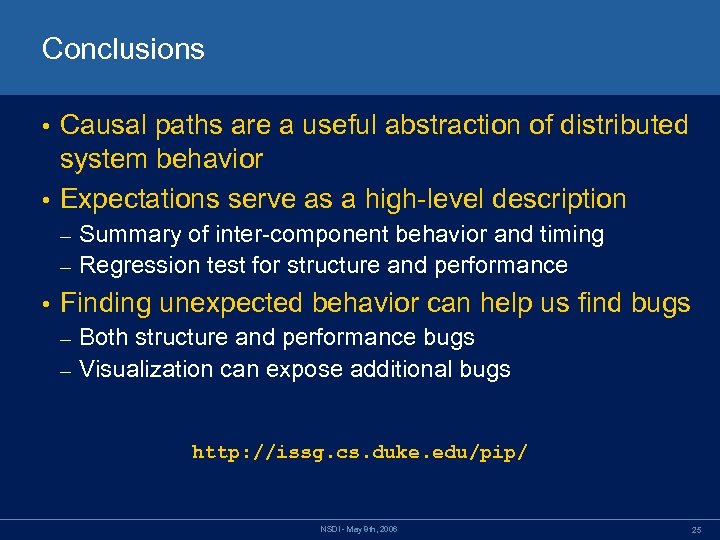

Conclusions
- Causal paths are a useful abstraction of distributed system behavior
- Expectations serve as a high-level description
  - Summary of inter-component behavior and timing
  - Regression test for structure and performance
- Finding unexpected behavior can help us find bugs
  - Both structure and performance bugs
  - Visualization can expose additional bugs

http://issg.cs.duke.edu/pip/

Extra slides
- Related work
- Pip results: RanSub
- Pip vs. printf
- Pip resource metrics
- Building a behavior model
- Annotations
- Checking expectations
- Visualization: communication graph

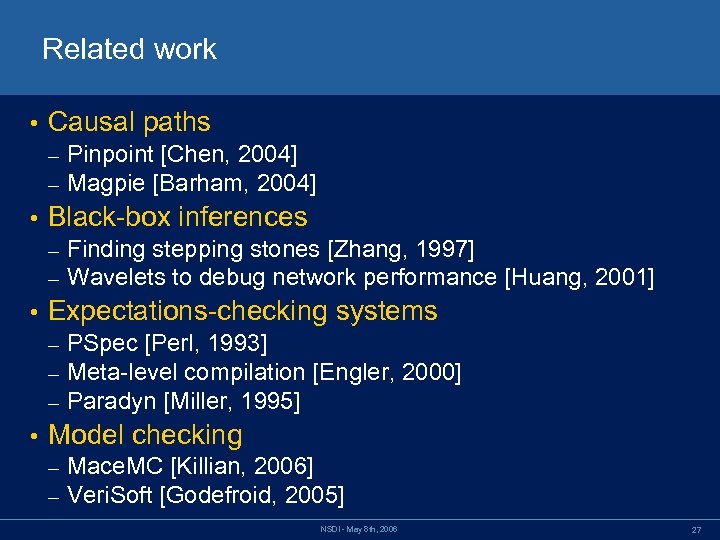

Related work
- Causal paths
  - Pinpoint [Chen, 2004]
  - Magpie [Barham, 2004]
- Black-box inferences
  - Finding stepping stones [Zhang, 1997]
  - Wavelets to debug network performance [Huang, 2001]
- Expectations-checking systems
  - PSpec [Perl, 1993]
  - Meta-level compilation [Engler, 2000]
  - Paradyn [Miller, 1995]
- Model checking
  - MaceMC [Killian, 2006]
  - VeriSoft [Godefroid, 2005]

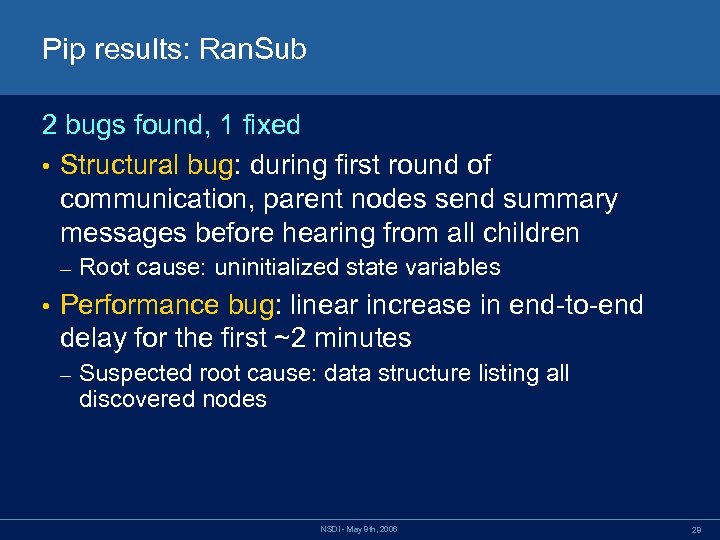

Pip results: RanSub
- 2 bugs found, 1 fixed
- Structural bug: during the first round of communication, parent nodes send summary messages before hearing from all children
  - Root cause: uninitialized state variables
- Performance bug: linear increase in end-to-end delay for the first ~2 minutes
  - Suspected root cause: data structure listing all discovered nodes

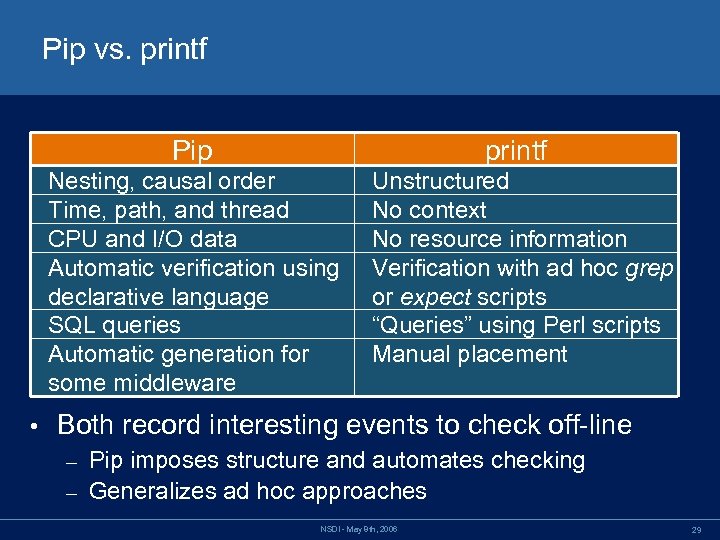

Pip vs. printf

| Pip | printf |
|---|---|
| Nesting, causal order | Unstructured |
| Time, path, and thread | No context |
| CPU and I/O data | No resource information |
| Automatic verification using declarative language | Verification with ad hoc grep or expect scripts |
| SQL queries | “Queries” using Perl scripts |
| Automatic generation for some middleware | Manual placement |

- Both record interesting events to check off-line
- Pip imposes structure and automates checking
  - Generalizes ad hoc approaches

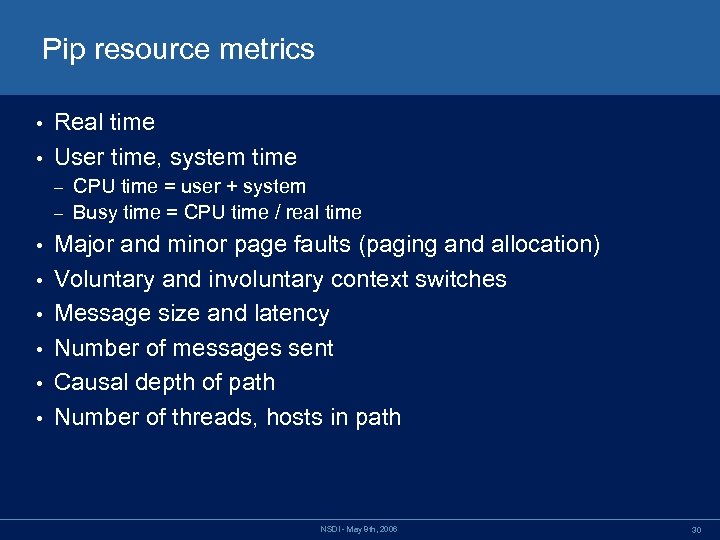

Pip resource metrics
- Real time
- User time, system time
  - CPU time = user + system
  - Busy time = CPU time / real time
- Major and minor page faults (paging and allocation)
- Voluntary and involuntary context switches
- Message size and latency
- Number of messages sent
- Causal depth of path
- Number of threads, hosts in path
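Several of these metrics are exposed by the Unix `getrusage` call, which Python wraps in the standard-library `resource` module (Unix only). The sketch below measures one function call and derives CPU time and busy time using the definitions on this slide. It is an assumption-laden illustration: `getrusage(RUSAGE_SELF)` reports process-wide totals, whereas Pip attributes resources per task, and `measure` and `busy_fraction` are hypothetical helper names.

```python
import resource  # Unix-only standard-library wrapper around getrusage(2)
import time
from typing import Callable, Dict

def busy_fraction(user_s: float, system_s: float, real_s: float) -> float:
    # CPU time = user + system; busy time = CPU time / real time.
    return (user_s + system_s) / real_s if real_s > 0 else 0.0

def measure(fn: Callable[[], object]) -> Dict[str, float]:
    # Snapshot process-wide rusage and wall-clock time around fn(); report deltas.
    r0, t0 = resource.getrusage(resource.RUSAGE_SELF), time.perf_counter()
    fn()
    r1, t1 = resource.getrusage(resource.RUSAGE_SELF), time.perf_counter()
    user, system = r1.ru_utime - r0.ru_utime, r1.ru_stime - r0.ru_stime
    real = t1 - t0
    return {
        "real_s": real,
        "cpu_s": user + system,
        "busy": busy_fraction(user, system, real),
        "major_faults": r1.ru_majflt - r0.ru_majflt,
        "minor_faults": r1.ru_minflt - r0.ru_minflt,
        "voluntary_ctx_switches": r1.ru_nvcsw - r0.ru_nvcsw,
        "involuntary_ctx_switches": r1.ru_nivcsw - r0.ru_nivcsw,
    }

print(measure(lambda: sum(i * i for i in range(200_000))))
```

Because the rusage counters and the wall clock tick at different granularities, busy time for very short calls can be noisy.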

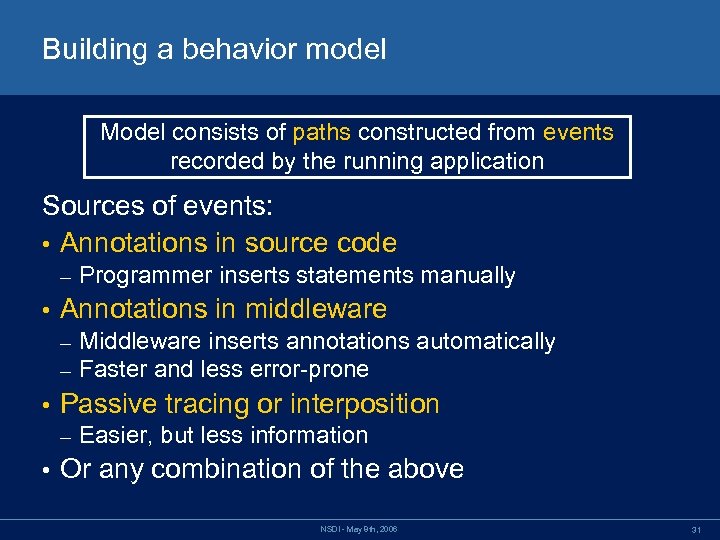

Building a behavior model
- Model consists of paths constructed from events recorded by the running application
- Sources of events:
  - Annotations in source code
    - Programmer inserts statements manually
  - Annotations in middleware
    - Middleware inserts annotations automatically
    - Faster and less error-prone
  - Passive tracing or interposition
    - Easier, but less information
  - Or any combination of the above

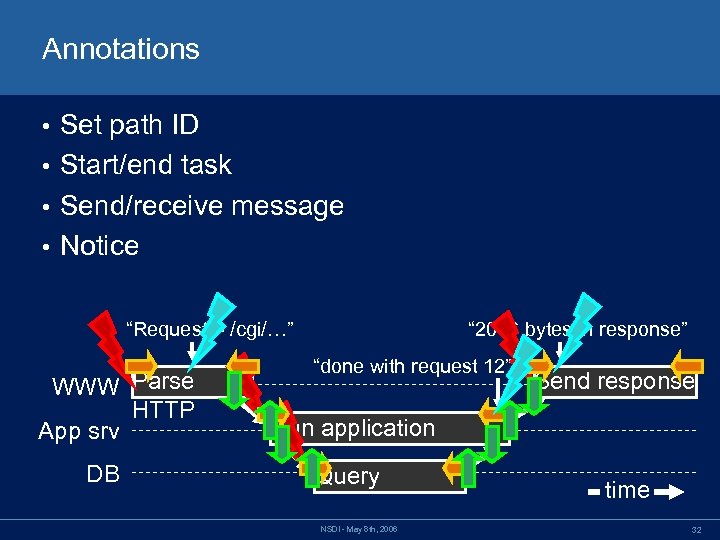

Annotations
- Set path ID
- Start/end task
- Send/receive message
- Notice

(Figure: the WWW / App srv / DB timeline with tasks Parse HTTP, Run application, Query, and Send response, and notices “Request = /cgi/…”, “2096 bytes in response”, and “done with request 12”.)
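The four annotation types can be sketched as a tiny tracing API. All function names here (`set_path_id`, `start_task`, `end_task`, `send`, `recv`, `notice`) are hypothetical and chosen to mirror the slide; Pip's real annotation library may differ. Events go to an in-memory list and are tagged with the current path ID, thread, and timestamp.

```python
import threading
import time
from typing import Any, Dict, List

# Hypothetical annotation API mirroring the slide; not Pip's actual library.
_local = threading.local()
TRACE: List[Dict[str, Any]] = []  # stand-in for a per-host trace file

def _emit(kind: str, **fields: Any) -> None:
    # Tag every event with the current path ID, thread, and timestamp.
    TRACE.append({"kind": kind, "path": getattr(_local, "path_id", None),
                  "thread": threading.get_ident(), "ts": time.time(), **fields})

def set_path_id(path_id: str) -> None:
    # Subsequent events on this thread belong to path_id.
    _local.path_id = path_id

def start_task(name: str) -> None:
    _emit("task_start", name=name)

def end_task(name: str) -> None:
    _emit("task_end", name=name)

def send(msg_id: str, size: int) -> None:
    _emit("send", msg_id=msg_id, size=size)

def recv(msg_id: str) -> None:
    _emit("recv", msg_id=msg_id)

def notice(text: str) -> None:
    _emit("notice", text=text)

# Instrumenting one request on the web server.
set_path_id("req-12")
start_task("Parse HTTP")
send("m1", size=512)
end_task("Parse HTTP")
notice("done with request 12")
print([e["kind"] for e in TRACE])
```

Keeping the path ID in thread-local state means individual annotations need not repeat it, which is one reason middleware can insert them automatically.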

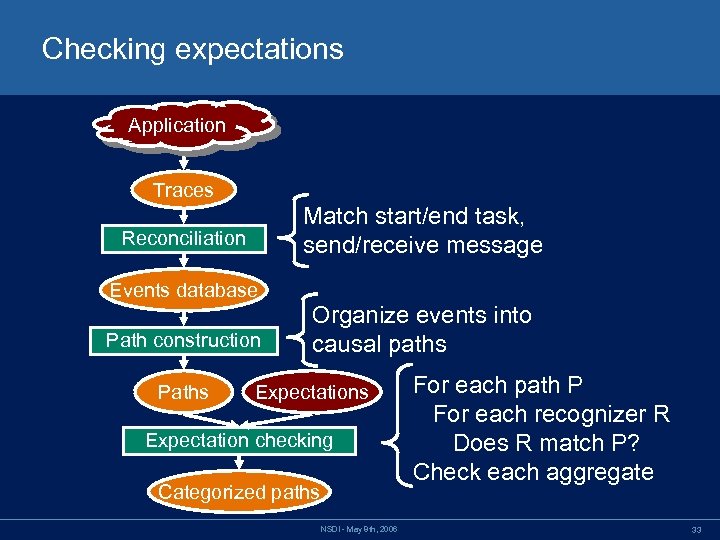

Checking expectations

(Figure: Application → Traces → Reconciliation → Events database → Path construction → Paths → Expectation checking → Categorized paths.)

- Reconciliation: match start/end task, send/receive message
- Path construction: organize events into causal paths
- Expectation checking:
  - For each path P
    - For each recognizer R
      - Does R match P?
  - Check each aggregate
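The reconciliation, path-construction, and checking stages can be sketched as three small functions. This is a simplified illustration under stated assumptions: events are dictionaries, sends and receives are paired by a hypothetical `msg` field, and paths are built simply by grouping on the path ID each event carries, whereas Pip builds causal paths across threads and hosts.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = Dict[str, str]

def reconcile(events: List[Event]) -> Dict[str, Dict[str, Event]]:
    # Reconciliation: match each send with its receive by message ID.
    pairs: Dict[str, Dict[str, Event]] = defaultdict(dict)
    for e in events:
        if e["kind"] in ("send", "recv"):
            pairs[e["msg"]][e["kind"]] = e
    return pairs

def build_paths(events: List[Event]) -> Dict[str, List[Event]]:
    # Path construction (simplified): group events by the path ID they carry.
    paths: Dict[str, List[Event]] = defaultdict(list)
    for e in events:
        paths[e["path"]].append(e)
    return paths

def check(paths: Dict[str, List[Event]],
          recognizers: Dict[str, Callable[[List[Event]], bool]]) -> Dict[str, List[str]]:
    # For each path P, for each recognizer R: does R match P?
    matched: Dict[str, List[str]] = defaultdict(list)
    for pid, p in paths.items():
        for name, r in recognizers.items():
            if r(p):
                matched[name].append(pid)
    return matched  # aggregates would then be evaluated over these sets

events = [
    {"path": "a", "kind": "send", "msg": "m1", "host": "WWW"},
    {"path": "a", "kind": "recv", "msg": "m1", "host": "AppSrv"},
    {"path": "b", "kind": "send", "msg": "m2", "host": "WWW"},  # never received
]
pairs = reconcile(events)
unmatched = sorted(m for m, ends in pairs.items() if set(ends) != {"send", "recv"})
recs = {"RoundTrip": lambda p: any(e["kind"] == "recv" for e in p)}
print(unmatched, dict(check(build_paths(events), recs)))  # ['m2'] {'RoundTrip': ['a']}
```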

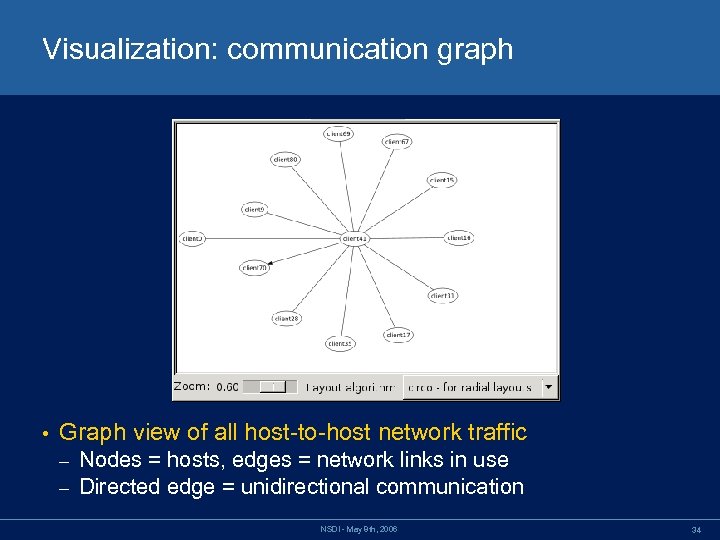

Visualization: communication graph
- Graph view of all host-to-host network traffic
  - Nodes = hosts, edges = network links in use
  - Directed edge = unidirectional communication
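A communication graph can be derived from reconciled messages by counting `(source host, destination host)` pairs; an edge with no reverse edge indicates one-way communication. The helper name `comm_graph` and the message list are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple

def comm_graph(messages: Iterable[Tuple[str, str]]) -> Counter:
    # Directed edge (src, dst), weighted by the number of messages src -> dst.
    return Counter(messages)

msgs = [("WWW", "AppSrv"), ("AppSrv", "DB"), ("DB", "AppSrv"),
        ("AppSrv", "WWW"), ("WWW", "AppSrv"), ("WWW", "Log")]
g = comm_graph(msgs)
hosts = sorted({h for edge in g for h in edge})       # graph nodes
one_way = sorted(e for e in g if (e[1], e[0]) not in g)  # unidirectional edges
print(hosts)                 # ['AppSrv', 'DB', 'Log', 'WWW']
print(g[("WWW", "AppSrv")])  # 2
print(one_way)               # [('WWW', 'Log')]
```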
