76448bc0d0d68ab7bee2b0c85624ac5e.ppt

- Number of slides: 39

Phrase Based Machine Translation Professor Sivaji Bandyopadhyay Computer Science & Engineering Department Jadavpur University Kolkata, INDIA.

Objectives • What is Phrase based Machine Translation (PBMT) • Development of a PBMT System • Research directions in PBMT

Machine Translation • Machine translation (MT) is the application of computers to the task of translating texts from one natural language to another. • Speech-to-speech machine translation – e.g., ATR, CMU, DFKI, NEC, Matsushita, Hitachi • High-quality translation is achieved by – carefully domain-targeted systems – controlled-language based systems

Machine Translation approaches • Translation Memory • Machine Translation – Rule based MT – Corpus based MT / Data Driven MT • Example Based MT (EBMT) • Statistical MT (SMT) – Data Driven MT is nowadays the most prevalent trend in MT

Processes in an MT System • The core process converts elements (entities, structures, words, etc.) of the input source-language (SL) text into equivalent elements for the output target-language (TL) text, where the output text means the same as (or is functionally equivalent to) the input text. • The analysis process precedes this core conversion (or ‘transfer’). • The synthesis (or generation) process succeeds conversion.

RBMT • The core process is mediated by bilingual dictionaries and rules for converting SL structures into TL structures, and/or by dictionaries and rules for deriving ‘intermediary representations’ from which output can be generated. • The preceding stage of analysis interprets (surface) input SL strings into appropriate ‘translation units’ (e.g. canonical noun and verb forms) and relations (e.g. dependencies and syntactic units). • The succeeding stage of synthesis (or generation) derives TL texts from the TL structures or representations produced by the core ‘transfer’ (or ‘interlingual’) process.

SMT • The core process involves a ‘translation model’ which takes as input SL words or word sequences (‘phrases’) and produces as output TL words or word sequences. • The following stage involves a ‘language model’ which synthesises the sets of TL words into ‘meaningful’ strings which are intended to be equivalent to the input sentences. • The preceding ‘analysis’ stage is represented by the (trivial) process of matching individual words or word sequences of input SL text against entries in the translation model. • More important is the essential preparatory stage of aligning SL and TL texts from a corpus and deriving the statistical frequency data for the ‘translation model’ (or adding statistical data from a corpus to a pre-existing ‘translation model’). • The monolingual ‘language model’ may or may not be derived from the same corpus as the ‘translation model’.

EBMT • The core process is the selection and extraction of TL fragments corresponding to SL fragments. • It is preceded by an ‘analysis’ stage for the decomposition of input sentences into appropriate fragments (or templates with variables) and their matching against SL fragments (in a database). – Whether the ‘matching’ involves precompiled fragments (templates derived from the corpus), whether the fragments are derived at ‘runtime’, and whether the fragments (chunks) contain variables or not are all secondary factors. • The succeeding stage of synthesis (or ‘recombination’) adapts the extracted TL fragments and combines them into TL (output) sentences. • As in SMT, there are essential preparatory stages which align SL and TL sentences in the bilingual database and which derive any templates or patterns used in the processes of matching and extracting.

Mixing Approaches to Machine Translation • The boundaries between the three principal approaches to MT are becoming narrower – Phrase based SMT models are incorporating morphology, syntax and semantics into their systems – Rule based systems are using parallel corpora to enrich their lexicons and grammars and to create new methods for disambiguation – Probabilistic models for EBMT search for the combination of translation examples that has the highest probability

Web-Enabled Indian MT systems • MAchiNe assisted TRAnslation tool (MANTRA) – English to Hindi – MANTRA uses the Lexicalized Tree Adjoining Grammar (LTAG) formalism to represent the English as well as the Hindi grammar. – http://www.cdac.in/html/aai/mantra.asp • AnglaHindi – English to Hindi – pattern-directed rule-based system with a CFG-like structure for English. – http://anglahindi.iitk.ac.in

Web-Enabled Indian MT systems • Shakti – English to (Hindi, Marathi, Telugu) – combines a rule-based approach with a statistical approach. – http://shakti.iiit.net/ • UNL Based MT System – English to Hindi – Interlingual approach – http://www.cfilt.iitb.ac.in/machinetranslation/eng-hindi-mt/

SMT involving Indian Languages • English-Hindi SMT – IBM India Research Laboratory, IJCNLP 2004 – CDAC Mumbai & IIT Bombay, IJCNLP 2008

SMT at CDAC, Mumbai • The parallel corpus is word-aligned bidirectionally, and using various heuristics (see (Koehn et al., 2003) for details) phrase correspondences are established. • Given the set of collected phrase pairs, the phrase translation probability is calculated by relative frequency. • Lexical weighting, which measures how well words within phrase pairs translate to each other, validates the phrase translation and addresses the problem of data sparsity. • The language model p(e) used in our baseline system is a trigram model with modified Kneser-Ney smoothing. • Reordering is penalized according to a simple exponential distortion model. • The weights for the various components of the model (phrase translation model, language model, distortion model etc.) are set by minimum error rate training (Och, 2003).
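
The two estimates named above (relative-frequency phrase translation probability and lexical weighting) can be sketched in a few lines of Python. This is a minimal illustration, not the CDAC implementation: it assumes the phrase pairs have already been extracted from the bidirectional word alignment (the extraction heuristics are not shown), it follows the per-word averaging form of lexical weighting from Koehn et al. (2003), and the function names, the word-translation table `w` and all data are hypothetical toy values.

```python
from collections import Counter

def phrase_translation_probs(phrase_pairs):
    """Relative frequency: phi(f|e) = count(f, e) / sum over f' of count(f', e).

    phrase_pairs: list of (foreign_phrase, english_phrase) occurrences,
    one entry per extracted instance (duplicates are meaningful).
    """
    pair_counts = Counter(phrase_pairs)
    e_counts = Counter(e for _, e in phrase_pairs)
    return {(f, e): c / e_counts[e] for (f, e), c in pair_counts.items()}

def lexical_weight(f_words, e_words, alignment, w):
    """Lexical weight p_w(f|e, a) of one phrase pair.

    alignment: set of (foreign_index, english_index) links inside the pair.
    Each foreign word contributes the average of w(f_i|e_j) over the English
    words it is linked to; an unaligned foreign word is scored against NULL.
    """
    score = 1.0
    for i, f in enumerate(f_words):
        links = [j for (fi, j) in alignment if fi == i]
        if links:
            score *= sum(w.get((f, e_words[j]), 0.0) for j in links) / len(links)
        else:
            score *= w.get((f, "NULL"), 0.0)
    return score

# Hypothetical toy data.
pairs = [("das Haus", "the house"), ("das Haus", "the house"),
         ("Haus", "the house"), ("das Haus", "the building")]
phi = phrase_translation_probs(pairs)
w = {("das", "the"): 0.7, ("Haus", "house"): 0.8, ("das", "NULL"): 0.1}
```

Counting every extracted instance (rather than distinct pairs) is what makes the relative frequency reflect how often a phrase pair was observed; the lexical weight then gives a second opinion built from word-level probabilities, which is more reliable for rare phrase pairs.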

MT Consortiums in India • Indian Language to Indian Languages Machine Translation Systems (IL-ILMT) • English to Indian Languages Machine Translation Systems (EILMT) – EILMT (1) – EILMT (2)

MT approaches • Anal-Gen based MT • TAG based MT • EBMT • SMT

Background • Statistical Machine Translation as a research area started in the late 1980s with the Candide project at IBM. IBM's original approach maps individual words to words and allows for deletion and insertion of words. • Lately, various researchers have shown better translation quality with the use of phrase translation. Phrase-based MT can be traced back to Och's alignment template model, which can be re-framed as a phrase translation system. Other researchers augmented their systems with phrase translation, such as Yamada and Knight, who use phrase translation in a syntax-based model. Marcu introduced a joint-probability model for phrase translation. • At this point, most competitive statistical machine translation systems use phrase translation, such as the CMU, IBM, ISI, and Google systems, to name just a few. Phrase-based systems came out ahead at a recent international machine translation competition (DARPA TIDES Machine Translation Evaluation 2003–2006 on Chinese-English and Arabic-English).

Background • Of course, there are other ways to do machine translation. Most commercial systems use transfer rules and a rich translation lexicon. • There are also other ways to do statistical machine translation. There is some effort in building syntax-based models that either use real syntax trees generated by syntactic parsers, or tree transfer methods motivated by syntactic reordering patterns. • The phrase-based statistical machine translation model we present here was defined by [Koehn/Och/Marcu, 2003]. See also the description by [Zens, 2002]. The alternative phrase-based methods differ in the way the phrase translation table is created, which we discuss in detail below.

IBM Word Based Models • Fundamental Equation of Machine Translation: $\hat{e} = \arg\max_e \Pr(e)\,\Pr(f \mid e)$. • In Model 1 we assume all connections for each French position to be equally likely. • Therefore, the order of the words in e and f does not affect $\Pr(f \mid e)$.
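
To make the Model 1 assumption concrete, here is a small sketch of expectation-maximization (EM) training of the Model 1 translation table t(f|e). It assumes a toy German-English corpus, uniform initialisation and an extra NULL English word (the empty word of Brown et al., 1993); the function name `train_model1` is hypothetical. Because every connection is equally likely a priori, the posterior weight of linking a foreign word to an English word depends only on t(f|e).

```python
from collections import defaultdict

def train_model1(corpus, iterations=10):
    """EM training of the IBM Model 1 word-translation table t(f|e).

    corpus: list of (foreign_tokens, english_tokens) sentence pairs.
    A NULL token is prepended to every English sentence so that foreign
    words may also be generated from 'nothing'.
    """
    f_vocab = {f for fs, _ in corpus for f in fs}
    uniform = 1.0 / len(f_vocab)
    t = defaultdict(lambda: uniform)  # t[(f, e)], uniform start
    for _ in range(iterations):
        count = defaultdict(float)  # expected link counts c(f, e)
        total = defaultdict(float)  # expected counts c(e)
        for fs, es in corpus:
            es = ["NULL"] + es
            for f in fs:
                # E-step: all positions are equally likely a priori, so the
                # posterior of linking f to e is proportional to t(f|e).
                norm = sum(t[(f, e)] for e in es)
                for e in es:
                    delta = t[(f, e)] / norm
                    count[(f, e)] += delta
                    total[e] += delta
        # M-step: renormalise the expected counts for each English word.
        t = defaultdict(float, {(f, e): c / total[e] for (f, e), c in count.items()})
    return t

corpus = [
    ("das Haus".split(), "the house".split()),
    ("das Buch".split(), "the book".split()),
    ("ein Buch".split(), "a book".split()),
]
t = train_model1(corpus)
```

After a few iterations the co-occurrence evidence sharpens the table: "Haus" becomes the preferred translation of "house" and "das" of "the", even though no word alignment was ever given.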

• In Model 2 we make the more realistic assumption that the probability of a connection depends on the positions it connects and on the lengths of the two strings. Therefore, for Model 2, Pr(f |e) does depend on the order of the words in e and f.
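
The contrast between the two models can be checked numerically. The sketch below scores the same German sentence against two orderings of the same English words, using the standard forms $\Pr(f \mid e) = \frac{\epsilon}{(l+1)^m}\prod_j \sum_i t(f_j \mid e_i)$ for Model 1 and $\Pr(f \mid e) = \epsilon \prod_j \sum_i t(f_j \mid e_i)\,a(i \mid j, l, m)$ for Model 2. The translation table, the diagonal-preferring distribution `diagonal_alignment` and ε = 1 are illustrative assumptions, not values from a trained system.

```python
def model1_likelihood(f, e, t, epsilon=1.0):
    """Pr(f|e) under IBM Model 1: every English position (incl. NULL) equally likely."""
    es = ["NULL"] + e
    l, m = len(e), len(f)
    p = epsilon / (l + 1) ** m
    for fj in f:
        p *= sum(t.get((fj, ei), 0.0) for ei in es)
    return p

def model2_likelihood(f, e, t, a, epsilon=1.0):
    """Pr(f|e) under IBM Model 2: link probability a(i | j, l, m) depends on
    the positions it connects and on the two sentence lengths."""
    es = ["NULL"] + e
    l, m = len(e), len(f)
    p = epsilon
    for j, fj in enumerate(f, start=1):
        p *= sum(t.get((fj, ei), 0.0) * a(i, j, l, m) for i, ei in enumerate(es))
    return p

def diagonal_alignment(i, j, l, m):
    """Hypothetical a(i|j,l,m): favours English positions near the diagonal
    j*l/m; position 0 (NULL) gets a small fixed weight. Sums to 1 over i."""
    weights = [0.1 if k == 0 else 2.0 ** -abs(k - j * l / m) for k in range(l + 1)]
    return weights[i] / sum(weights)

# Hypothetical translation table.
t = {("das", "the"): 0.9, ("das", "house"): 0.1,
     ("Haus", "house"): 0.9, ("Haus", "the"): 0.1}
f = ["das", "Haus"]
```

Model 1 gives "the house" and "house the" exactly the same score, while Model 2 with this alignment distribution prefers the ordering that keeps linked words near the diagonal.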

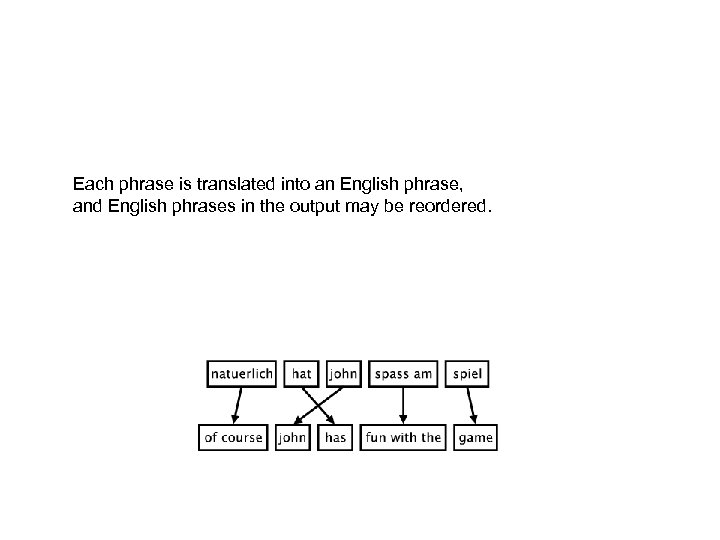

Each phrase is translated into an English phrase, and English phrases in the output may be reordered.

Phrase based Translation Model • The phrase translation model is based on the noisy channel model. • We use Bayes' rule to reformulate the translation probability for translating a foreign sentence f into English e as – $\arg\max_e p(e \mid f) = \arg\max_e p(f \mid e)\,p(e)$ • This allows for a language model $p(e)$ and a separate translation model $p(f \mid e)$. • During decoding, the foreign input sentence f is segmented into a sequence of I phrases $\bar{f}_1^I$. We assume a uniform probability distribution over all possible segmentations. • Each foreign phrase $\bar{f}_i$ in $\bar{f}_1^I$ is translated into an English phrase $\bar{e}_i$. The English phrases may be reordered. Phrase translation is modeled by a probability distribution $\phi(\bar{f}_i \mid \bar{e}_i)$.
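
Under the uniform-segmentation assumption, every split of the input into contiguous phrases is equally likely. A short recursive generator (the helper name `segmentations` is hypothetical) enumerates them; an n-word sentence has 2^(n-1) segmentations, so each receives probability 1/2^(n-1).

```python
def segmentations(words):
    """Yield every split of `words` into contiguous, non-empty phrases (tuples)."""
    if not words:
        yield []
        return
    for k in range(1, len(words) + 1):
        head = tuple(words[:k])  # first phrase: the first k words
        for rest in segmentations(words[k:]):
            yield [head] + rest

sentence = "Morgen fliege ich nach Kanada zur Konferenz".split()
segs = list(segmentations(sentence))
uniform_prob = 1 / len(segs)  # 1 / 2**(n-1) under the uniform assumption
```

Even this seven-word sentence has 64 segmentations, which is why decoders typically build the segmentation incrementally during search rather than enumerating it up front.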

Phrase based Translation Model • Reordering of the English output phrases is modeled by a relative distortion probability distribution $d(\text{start}_i, \text{end}_{i-1})$, where $\text{start}_i$ denotes the start position of the foreign phrase that was translated into the $i$th English phrase, and $\text{end}_{i-1}$ denotes the end position of the foreign phrase that was translated into the $(i-1)$th English phrase. • We use a simple distortion model $d(\text{start}_i, \text{end}_{i-1}) = \alpha^{|\text{start}_i - \text{end}_{i-1} - 1|}$ with an appropriate value for the parameter $\alpha$. • In order to calibrate the output length, we introduce a factor $\omega$ (called word cost) for each generated English word in addition to the trigram language model $p_{LM}$. This is a simple means to optimize performance. Usually, this factor is larger than 1, biasing toward longer output. • In summary, the best English output sentence $e_{best}$ given a foreign input sentence f according to our model is – $e_{best} = \arg\max_e p(e \mid f) = \arg\max_e p(f \mid e)\,p_{LM}(e)\,\omega^{\text{length}(e)}$ where $p(f \mid e)$ is decomposed into $p(\bar{f}_1^I \mid \bar{e}_1^I) = \prod_{i=1}^{I} \phi(\bar{f}_i \mid \bar{e}_i)\,d(\text{start}_i, \text{end}_{i-1})$
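
The summary formula can be turned into a small function that computes the log of the model score for one fully specified hypothesis. This is a sketch under assumptions: the phrase table `phi`, the language-model callable `lm_prob` and the values α = 0.5 and ω = 1.1 are hypothetical; foreign positions are 1-based with end_0 = 0, so a first phrase starting at position 1 incurs no distortion penalty. Working in log space avoids numerical underflow when many small probabilities are multiplied.

```python
import math

def distortion(start_i, end_prev, alpha):
    """d(start_i, end_{i-1}) = alpha ** |start_i - end_{i-1} - 1| (1-based positions)."""
    return alpha ** abs(start_i - end_prev - 1)

def score_hypothesis(phrases, phi, lm_prob, alpha=0.5, omega=1.1):
    """Log model score of one complete hypothesis.

    phrases: (start, end, foreign_phrase, english_phrase) tuples listed in
    English output order; start/end are 1-based foreign positions.
    Returns log[ prod_i phi(f_i|e_i) d(start_i, end_{i-1}) * p_LM(e) * omega**length(e) ].
    """
    log_score, end_prev, english = 0.0, 0, []  # end_0 = 0
    for start, end, f, e in phrases:
        log_score += math.log(phi[(f, e)])                         # phrase translation
        log_score += math.log(distortion(start, end_prev, alpha))  # reordering
        end_prev = end
        english.extend(e.split())
    log_score += math.log(lm_prob(english))      # language model p_LM(e)
    log_score += len(english) * math.log(omega)  # word cost omega^length(e)
    return log_score

# Hypothetical toy phrase table and a constant stand-in for the trigram LM.
phi = {("Morgen", "Tomorrow"): 0.8, ("fliege ich", "I will fly"): 0.5}
hyp = [(1, 1, "Morgen", "Tomorrow"), (2, 3, "fliege ich", "I will fly")]
s = score_hypothesis(hyp, phi, lambda words: 0.01)
```

A monotone hypothesis like this one pays no distortion cost; jumping from the end of one phrase to a foreign position two words further on would multiply the score by α² = 0.25.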

Decoder • Phrase based Decoder – Beam search algorithm – A sequence of untranslated foreign words and a possible English phrase translation for them is selected – The English phrase is attached to the existing English output sequence
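
The beam search steps above can be sketched as a toy stack decoder: hypotheses are grouped into stacks by how many foreign words they cover, each expansion picks an untranslated span and one of its phrase translations, and the English phrase is attached to the end of the output so far. This is a deliberately simplified sketch, not the Pharaoh or CDAC decoder: it assumes a bigram language model instead of a trigram, uses histogram pruning only, and omits future-cost estimation, hypothesis recombination and the end-of-sentence LM term. The phrase table, LM probabilities and parameter values are hypothetical.

```python
import heapq
import math

def decode(f_words, phrase_table, bigram_lm, alpha=0.5, beam_size=10, max_phrase_len=3):
    """Toy stack decoder: stacks[k] holds hypotheses that cover k foreign words.

    A hypothesis is (log score, English words so far, covered foreign positions,
    end position of the last translated foreign phrase). Positions are 0-based.
    """
    n = len(f_words)
    stacks = [[] for _ in range(n + 1)]
    stacks[0].append((0.0, ("<s>",), frozenset(), -1))
    for k in range(n):
        # Histogram pruning: only the beam_size best hypotheses are expanded.
        for score, english, covered, last_end in heapq.nlargest(
                beam_size, stacks[k], key=lambda h: h[0]):
            for start in range(n):
                for end in range(start, min(start + max_phrase_len, n)):
                    if end in covered:
                        break  # this span, and every longer one, overlaps
                    f_phrase = tuple(f_words[start:end + 1])
                    for e_phrase, prob in phrase_table.get(f_phrase, []):
                        new = score + math.log(prob)
                        # Distortion alpha^|start - last_end - 1|.
                        new += abs(start - last_end - 1) * math.log(alpha)
                        prev = english[-1]
                        for w in e_phrase:  # attach the phrase, scoring it with the LM
                            new += math.log(bigram_lm(prev, w))
                            prev = w
                        span = frozenset(range(start, end + 1))
                        stacks[k + len(span)].append(
                            (new, english + e_phrase, covered | span, end))
    if not stacks[n]:
        return None  # no hypothesis covers the whole input
    best = max(stacks[n], key=lambda h: h[0])
    return " ".join(best[1][1:])  # drop the <s> marker

# Hypothetical toy phrase table and bigram LM (unseen bigrams get 0.01).
table = {
    ("Morgen",): [(("Tomorrow",), 0.9)],
    ("fliege",): [(("fly",), 0.5)],
    ("ich",): [(("I",), 0.9)],
    ("fliege", "ich"): [(("I", "will", "fly"), 0.6), (("fly", "I"), 0.4)],
}
bigrams = {("<s>", "Tomorrow"): 0.5, ("Tomorrow", "I"): 0.4,
           ("I", "will"): 0.5, ("will", "fly"): 0.6}
lm = lambda prev, w: bigrams.get((prev, w), 0.01)
print(decode("Morgen fliege ich".split(), table, lm))  # prints: Tomorrow I will fly
```

Grouping hypotheses by the number of covered words means that pruning only compares hypotheses that have done the same amount of work; without a future-cost estimate, however, hypotheses that translated the "easy" words first can still be unfairly favoured, which is why full decoders add one.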

Hierarchical Phrase based SMT Model • A Hierarchical Phrase-Based Model for Statistical Machine Translation, David Chiang, ACL 05

Phrase-based translation • Example: Morgen fliege ich nach Kanada zur Konferenz → Tomorrow I will fly to the conference in Canada • Foreign input is segmented into phrases – any sequence of words, not necessarily linguistically motivated • Each phrase is translated into English • Phrases are reordered

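Phrase-based translation model • Major components of the phrase-based model – phrase translation model $\phi(f \mid e)$ – reordering model $d$, together with the word-cost factor $\omega^{\text{length}(e)}$ – language model $p_{LM}(e)$ • Bayes' rule: $\arg\max_e p(e \mid f) = \arg\max_e p(f \mid e)\,p(e) = \arg\max_e \phi(f \mid e)\,p_{LM}(e)\,\omega^{\text{length}(e)}$ • Sentence f is decomposed into I phrases $\bar{f}_1^I = \bar{f}_1, \ldots, \bar{f}_I$ • Decomposition of $\phi(f \mid e)$: $\phi(\bar{f}_1^I \mid \bar{e}_1^I) = \prod_{i=1}^{I} \phi(\bar{f}_i \mid \bar{e}_i)\,d(a_i - b_{i-1})$, where $a_i$ is the start position of the foreign phrase translated into the $i$th English phrase, $b_{i-1}$ is the end position of the foreign phrase translated into the $(i-1)$th English phrase, and $d(x) = \alpha^{|x - 1|}$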

Syntax based Statistical Translation Model • Yamada and Knight (2001)

Syntax based SMT • One criticism of PBMT is that it does not model structural or syntactic aspects of the language. • Syntax based SMT transforms a source-language parse tree into a target-language string by applying stochastic operations at each node. • These operations capture linguistic differences such as word order and case marking.

Syntax based SMT • The input sentence is preprocessed by a syntactic parser. • The channel performs operations on each node of the parse tree. The operations are reordering child nodes, inserting extra words at each node, and translating leaf words. • Parsing is only needed on the channel input side.

Syntax based SMT • The reorder operation is intended to model translation between languages with different word orders, such as SVO languages (English or Chinese) and SOV languages (Japanese or Turkish). • The word-insertion operation is intended to capture linguistic differences in specifying syntactic cases. E.g., English and French use structural position to specify case, while Japanese and Korean use case-marker particles.

Reorder operation • Child nodes on each internal node are stochastically reordered. A node with N children has N! possible reorderings. • The probability of taking a specific reordering is given by the model’s r-table.
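
A node's N! reorderings and their r-table probabilities can be enumerated directly. In this sketch the r-table is a plain dictionary keyed by (original child-label sequence, reordered sequence) with made-up probabilities, and reorderings missing from the table get probability 0; the function names are hypothetical, and child labels are assumed to be distinct (as in VB1, VB2).

```python
from itertools import permutations

def reorder_distribution(children, r_table):
    """Map each of the N! reorderings of a node's children to its r-table probability.

    r_table: {(original child-label sequence, reordered sequence): probability}.
    Reorderings missing from the table get probability 0.
    """
    original = tuple(children)
    return {perm: r_table.get((original, perm), 0.0) for perm in permutations(original)}

def most_likely_reordering(children, r_table):
    """Return the reordering with the highest r-table probability."""
    dist = reorder_distribution(children, r_table)
    return max(dist, key=dist.get)

# Hypothetical r-table entries for a node with children PRP VB1 VB2.
r = {
    (("PRP", "VB1", "VB2"), ("PRP", "VB2", "VB1")): 0.7,
    (("PRP", "VB1", "VB2"), ("PRP", "VB1", "VB2")): 0.2,
    (("PRP", "VB1", "VB2"), ("VB2", "PRP", "VB1")): 0.1,
}
dist = reorder_distribution(["PRP", "VB1", "VB2"], r)
```

Here the preferred order moves VB2 before VB1, the kind of verb-final reordering needed when going from an SVO language to an SOV language.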

R-table

N-table • An extra word is stochastically inserted at each node • A word can be inserted either to the left of the node, to the right of the node, or nowhere. • The insertion probability is determined by the n-table.

N-table • The n-table is split into two – a table for insert positions • the node’s label and its parent’s label are used to index the table for insert positions – probabilities of no insertion, left insertion and right insertion for each index condition – a table for words to be inserted • the probability of inserting a word depends only on the word itself

T-table • We apply the translate operation to each leaf. We assume that this operation is dependent only on the word itself and that no context is consulted. • The model’s t-table specifies the probability for all cases.
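
Putting the three tables together, the probability of one fixed sequence of channel operations on a parse tree is the product of the r-table, n-table and t-table entries used at each node; summing such products over all operation sequences that yield a given foreign string gives the model's translation probability. The sketch below scores a single derivation only. The `Node` class, the table layouts, the simplification that a preterminal node carries its word directly, and all probabilities are hypothetical illustrations.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    label: str
    children: list = field(default_factory=list)
    word: str = ""                  # English word (leaves only)
    order: tuple = ()               # chosen order of child labels (internal nodes)
    insert: tuple = ("none", None)  # ("left" | "right" | "none", inserted word)
    translation: str = ""           # chosen translation of the leaf word

def derivation_prob(node, parent_label, r_table, n_pos, n_word, t_table):
    """Probability of one fixed set of channel operations on a subtree:
    the product of r-table, n-table and t-table entries over all its nodes."""
    position, inserted = node.insert
    # n-table, part 1: insert position conditioned on (node label, parent label)
    p = n_pos.get((node.label, parent_label, position), 0.0)
    if position != "none":
        # n-table, part 2: the inserted word depends only on the word itself
        p *= n_word.get(inserted, 0.0)
    if node.children:
        # r-table: reordering conditioned on the sequence of child labels
        labels = tuple(c.label for c in node.children)
        p *= r_table.get((labels, node.order), 0.0)
        for child in node.children:
            p *= derivation_prob(child, node.label, r_table, n_pos, n_word, t_table)
    else:
        # t-table: translation depends only on the leaf word, no context
        p *= t_table.get((node.word, node.translation), 0.0)
    return p

# Hypothetical derivation: "he adores" -> "kare ha daisuki" (particle "ha" inserted).
tree = Node("VB", order=("PRP", "VB1"), children=[
    Node("PRP", word="he", translation="kare", insert=("right", "ha")),
    Node("VB1", word="adores", translation="daisuki"),
])
p = derivation_prob(
    tree, "TOP",
    r_table={(("PRP", "VB1"), ("PRP", "VB1")): 0.6},
    n_pos={("VB", "TOP", "none"): 0.8, ("PRP", "VB", "right"): 0.3,
           ("VB1", "VB", "none"): 0.9},
    n_word={"ha": 0.4},
    t_table={("he", "kare"): 0.95, ("adores", "daisuki"): 0.5},
)
```

Because each operation is conditioned only on local information (child labels, node and parent labels, or the word itself), the derivation probability factorises cleanly over nodes, which is what makes EM training of the three tables tractable.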

• Syntax-light alignment models such as the five IBM models (Brown et al., 1993) and their relatives have proved to be very successful and robust at producing word-level alignments, especially for closely related languages with similar word order and mostly local reorderings, which can be captured via simple models of relative word distortion.

• However, these models have been less successful at modeling syntactic distortions with longer-distance movement. • In contrast, more syntactically informed approaches have been constrained by the often weak syntactic correspondences typical of real-world parallel texts, and by the difficulty of finding or inducing syntactic parsers for any but a few of the world’s most studied languages.

• Elliott Franco Drábek, David Yarowsky; ACL 2004 Proceedings of the interactive poster and demonstration sessions, 21–26 July 2004, Barcelona, Spain. – An improved method for automated word alignment of parallel texts which takes advantage of knowledge of syntactic divergences, while avoiding the need for syntactic analysis of the less resource-rich language, and retaining the robustness of
