86f9db9f2b5ec5ea24a5daf4b58897af.ppt

- Number of slides: 25

PHENIX Computing
Sangsu Ryu

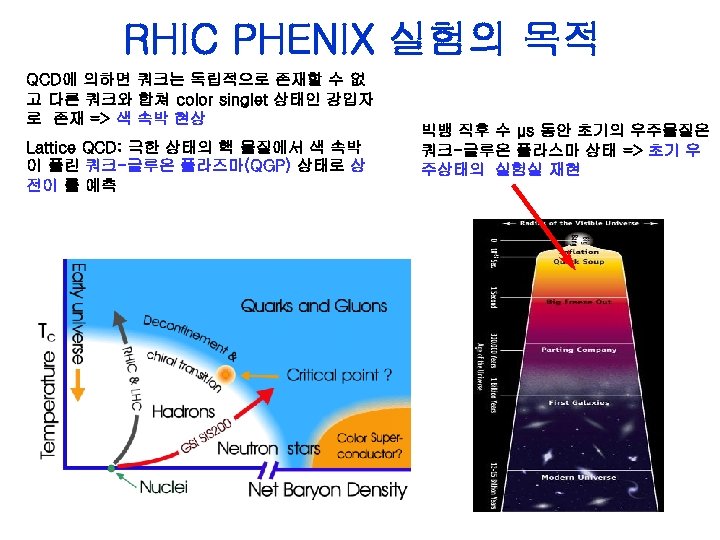

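Goals of the RHIC PHENIX Experiment
- According to QCD, quarks cannot exist in isolation. They bind with other quarks into color-singlet hadrons (color confinement).
- Lattice QCD predicts that nuclear matter under extreme conditions undergoes a phase transition to a deconfined quark-gluon plasma (QGP).
- For a few μs after the Big Bang, the matter of the early universe was in a quark-gluon plasma state, so the experiment recreates the early universe in the laboratory.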

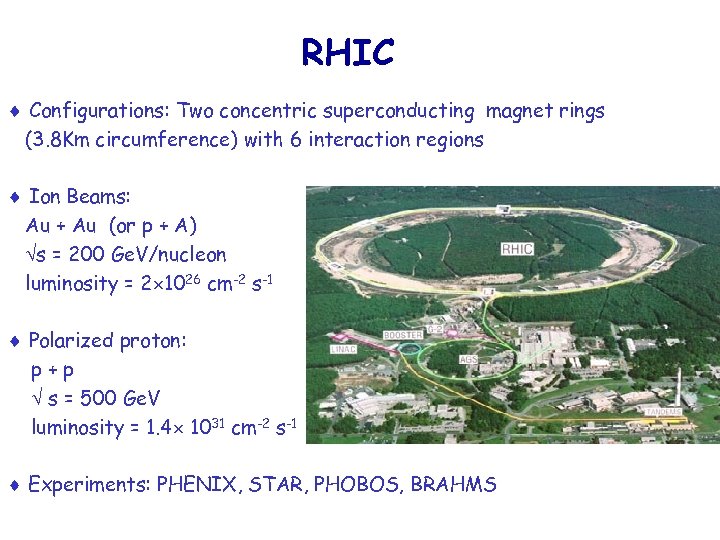

RHIC
- Configuration: two concentric superconducting magnet rings (3.8 km circumference) with 6 interaction regions
- Ion beams: Au + Au (or p + A), $\sqrt{s_{NN}} = 200$ GeV per nucleon pair, luminosity $= 2 \times 10^{26}\ \mathrm{cm^{-2}\,s^{-1}}$
- Polarized protons: p + p, $\sqrt{s} = 500$ GeV, luminosity $= 1.4 \times 10^{31}\ \mathrm{cm^{-2}\,s^{-1}}$
- Experiments: PHENIX, STAR, PHOBOS, BRAHMS
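As a rough cross-check, the interaction rate follows from $R = \mathcal{L}\,\sigma$. The sketch below assumes an Au+Au inelastic cross section of about 7 b, a value that is not on the slide; combined with the design luminosity it gives roughly 1400 Hz, in line with the raw minimum-bias rate quoted on the PHENIX Data Size slide.

```python
# Interaction-rate estimate R = L * sigma (an illustrative sketch, not a PHENIX tool).
BARN_CM2 = 1e-24  # 1 barn = 1e-24 cm^2


def collision_rate_hz(luminosity_cm2_s: float, cross_section_barn: float) -> float:
    """Interaction rate in Hz for a luminosity in cm^-2 s^-1 and a cross section in barn."""
    return luminosity_cm2_s * cross_section_barn * BARN_CM2


# Design Au+Au luminosity from the slide; ASSUMPTION: sigma_inel(Au+Au) ~ 7 b.
au_au_rate = collision_rate_hz(2e26, 7.0)
print(f"Au+Au interaction rate ~ {au_au_rate:.0f} Hz")
```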

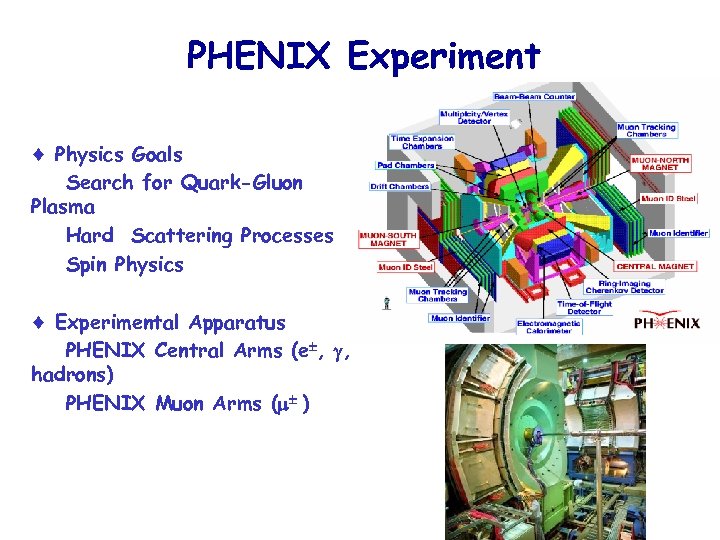

PHENIX Experiment
- Physics goals
  - Search for the quark-gluon plasma
  - Hard scattering processes
  - Spin physics
- Experimental apparatus
  - PHENIX central arms (e±, γ, hadrons)
  - PHENIX muon arms (μ±)

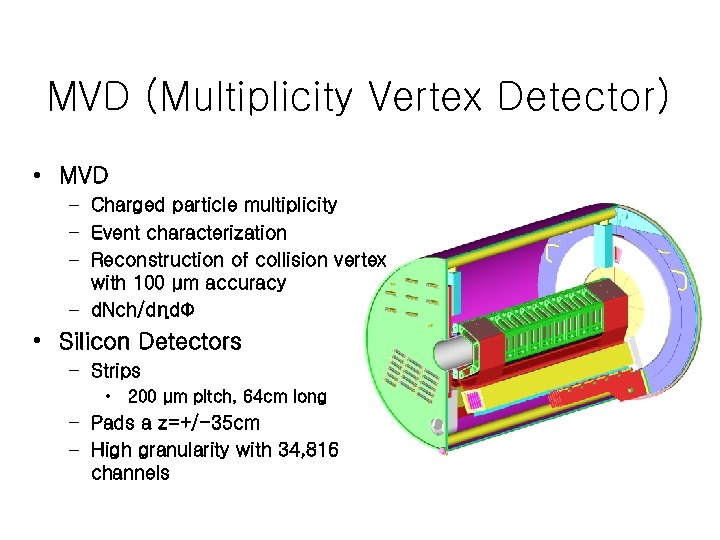

MVD (Multiplicity Vertex Detector)
- MVD
  - Charged-particle multiplicity
  - Event characterization
  - Reconstruction of the collision vertex with 100 μm accuracy
  - $dN_{ch}/d\eta\,d\phi$
- Silicon detectors
  - Strips: 200 μm pitch, 64 cm long
  - Pads at z = ±35 cm
  - High granularity with 34,816 channels
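The multiplicity distribution $dN_{ch}/d\eta$ is built from the pseudorapidity $\eta = -\ln\tan(\theta/2)$ of each hit, with the polar angle θ measured from the event vertex. A minimal sketch, assuming each silicon hit at cylindrical coordinates (r, z) in cm counts as one primary charged particle and using a hypothetical bin width; a real analysis would also correct for secondaries, noise, and acceptance.

```python
import math
from collections import Counter


def pseudorapidity(r_cm: float, z_hit_cm: float, z_vertex_cm: float) -> float:
    """eta = -ln(tan(theta / 2)), theta = polar angle of the hit seen from the vertex."""
    theta = math.atan2(r_cm, z_hit_cm - z_vertex_cm)
    return -math.log(math.tan(theta / 2.0))


def dn_deta(hits, z_vertex_cm: float, bin_width: float = 0.5) -> dict:
    """Hits per eta bin divided by the bin width; keys are lower bin edges.

    ASSUMPTION: every (r, z) hit is one primary charged particle (no corrections).
    """
    counts = Counter(
        math.floor(pseudorapidity(r, z, z_vertex_cm) / bin_width) for r, z in hits
    )
    return {b * bin_width: n / bin_width for b, n in sorted(counts.items())}
```

Equivalently, $\eta = \sinh^{-1}\!\big((z - z_{vtx})/r\big)$, which is a convenient check on the geometry.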

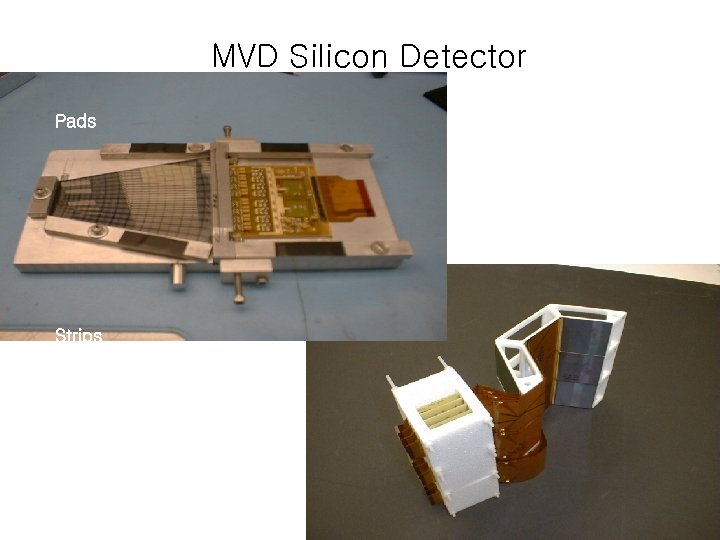

MVD Silicon Detector
(Figure: silicon pads and strips.)

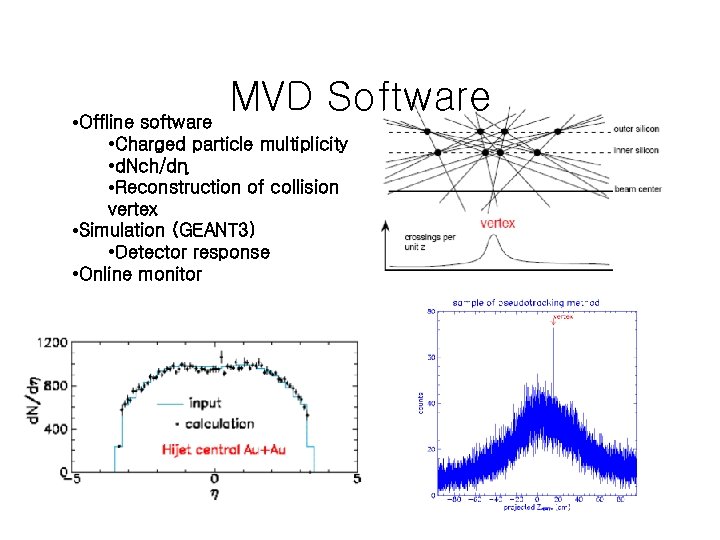

MVD Software
- Offline software
  - Charged-particle multiplicity ($dN_{ch}/d\eta$)
  - Reconstruction of the collision vertex
- Simulation (GEANT 3)
  - Detector response
- Online monitor
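Vertex reconstruction with a silicon barrel can be illustrated by pairing hits from two radial layers, extrapolating each pair along a straight line in the r-z plane to the beam axis, and taking the most populated z bin. This is a hypothetical sketch (two layers, straight tracks, invented helper names and bin size), not the actual MVD offline algorithm.

```python
import math
from collections import defaultdict


def z_at_beam(r1: float, z1: float, r2: float, z2: float) -> float:
    """Straight-line extrapolation of hits (r1, z1) and (r2, z2) to r = 0."""
    return z1 - r1 * (z2 - z1) / (r2 - r1)


def find_vertex(inner_hits, outer_hits, bin_cm: float = 0.5):
    """Mean z of the most populated bin of all inner/outer pair extrapolations.

    Wrong pairings spread out over z; true pairings pile up at the vertex.
    Returns None when there are no pairs.
    """
    bins = defaultdict(list)
    for r1, z1 in inner_hits:
        for r2, z2 in outer_hits:
            z0 = z_at_beam(r1, z1, r2, z2)
            bins[math.floor(z0 / bin_cm)].append(z0)
    if not bins:
        return None
    best = max(bins.values(), key=len)
    return sum(best) / len(best)
```

For example, three straight tracks from z = 2 cm crossing layers at r = 5 cm and r = 10 cm give three pair extrapolations at exactly 2 cm, while the six wrong pairings land in separate bins.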

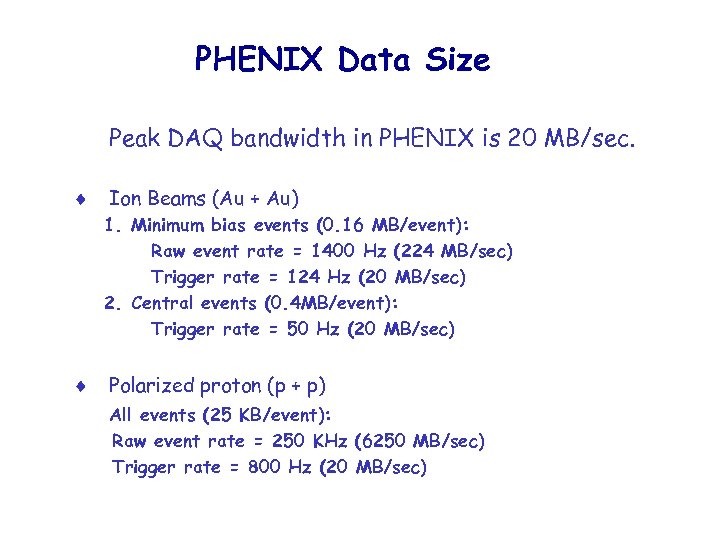

PHENIX Data Size
Peak DAQ bandwidth in PHENIX is 20 MB/s.
- Ion beams (Au + Au)
  1. Minimum-bias events (0.16 MB/event): raw event rate = 1400 Hz (224 MB/s); trigger rate = 124 Hz (20 MB/s)
  2. Central events (0.4 MB/event): trigger rate = 50 Hz (20 MB/s)
- Polarized protons (p + p)
  - All events (25 kB/event): raw event rate = 250 kHz (6250 MB/s); trigger rate = 800 Hz (20 MB/s)
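Each bandwidth figure above is event size × rate, and the 20 MB/s DAQ ceiling fixes the highest trigger rate that can be recorded for a given event size. The sketch below reproduces that arithmetic with illustrative helper names; for minimum-bias Au+Au it gives a 125 Hz ceiling, consistent with the 124 Hz quoted, and it shows how much stronger the rejection must be for p+p.

```python
PEAK_DAQ_MB_S = 20.0  # peak PHENIX DAQ bandwidth quoted on the slide


def bandwidth_mb_s(event_size_mb: float, rate_hz: float) -> float:
    """Data rate in MB/s for a given event size and event rate."""
    return event_size_mb * rate_hz


def max_trigger_rate_hz(event_size_mb: float, limit_mb_s: float = PEAK_DAQ_MB_S) -> float:
    """Highest event rate the DAQ can record for this event size."""
    return limit_mb_s / event_size_mb


# (event size in MB, raw event rate in Hz), taken from the slide
cases = {
    "Au+Au minimum bias": (0.16, 1400.0),
    "p+p": (0.025, 250_000.0),
}
for name, (size_mb, raw_hz) in cases.items():
    raw = bandwidth_mb_s(size_mb, raw_hz)
    ceiling = max_trigger_rate_hz(size_mb)
    print(f"{name}: raw {raw:.0f} MB/s, DAQ ceiling {ceiling:.0f} Hz, "
          f"trigger keeps ~1 in {raw_hz / ceiling:.0f} events")
```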

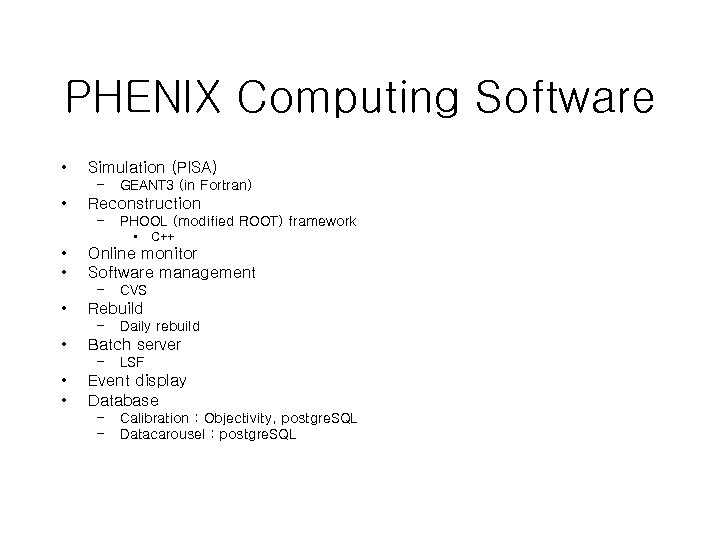

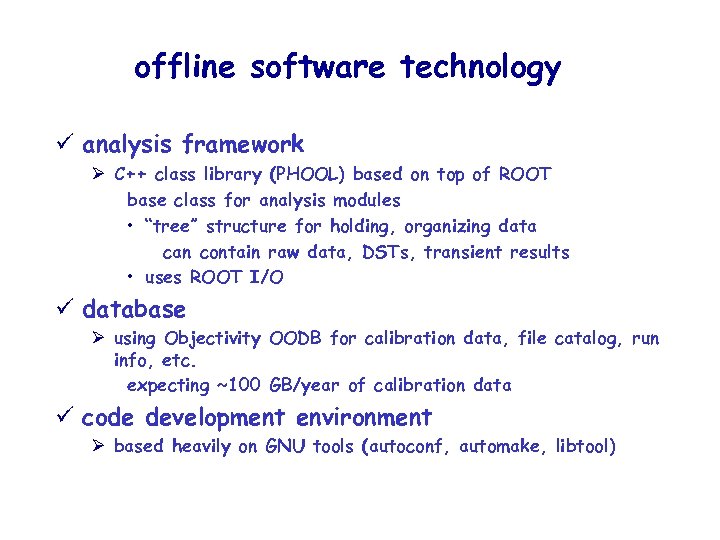

PHENIX Computing Software
- Simulation (PISA): GEANT 3 (in Fortran)
- Reconstruction: C++, PHOOL (modified ROOT) framework
- Online monitor
- Event display
- Software management: CVS, daily rebuild
- Batch server: LSF
- Database
  - Calibration: Objectivity, PostgreSQL
  - Data carousel: PostgreSQL

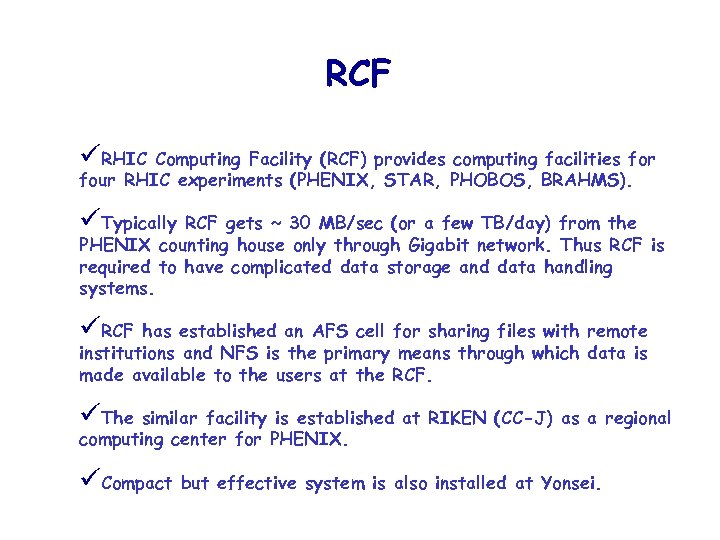

RCF
- The RHIC Computing Facility (RCF) provides computing resources for the four RHIC experiments (PHENIX, STAR, PHOBOS, BRAHMS).
- RCF typically receives ~30 MB/s (a few TB/day) from the PHENIX counting house, solely over a Gigabit network link, so it needs sophisticated data storage and data-handling systems.
- RCF has established an AFS cell for sharing files with remote institutions; NFS is the primary means through which data is made available to users at RCF.
- A similar facility has been established at RIKEN (CC-J) as a regional computing center for PHENIX.
- A compact but effective system is also installed at Yonsei.
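The quoted ~30 MB/s and "a few TB/day" agree: a sustained rate times 86,400 s/day gives the daily volume. A small sketch, assuming decimal units (1 TB = 10^6 MB) and taking 1 Gbps as 125 MB/s with protocol overhead ignored; the duty-factor parameter is a hypothetical knob for periods when data does not flow all day.

```python
SECONDS_PER_DAY = 86_400
GIGABIT_MB_S = 125.0  # 1 Gbps = 1e9 bit/s = 125 MB/s, ignoring protocol overhead


def daily_volume_tb(rate_mb_s: float, duty_factor: float = 1.0) -> float:
    """Data volume per day in TB (decimal units) for a sustained rate in MB/s.

    duty_factor (hypothetical) is the fraction of the day that data actually flows.
    """
    return rate_mb_s * SECONDS_PER_DAY * duty_factor / 1e6


def link_utilisation(rate_mb_s: float, link_mb_s: float = GIGABIT_MB_S) -> float:
    """Fraction of the link capacity used by a sustained rate."""
    return rate_mb_s / link_mb_s


print(f"{daily_volume_tb(30):.2f} TB/day, {link_utilisation(30):.0%} of a Gigabit link")
```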

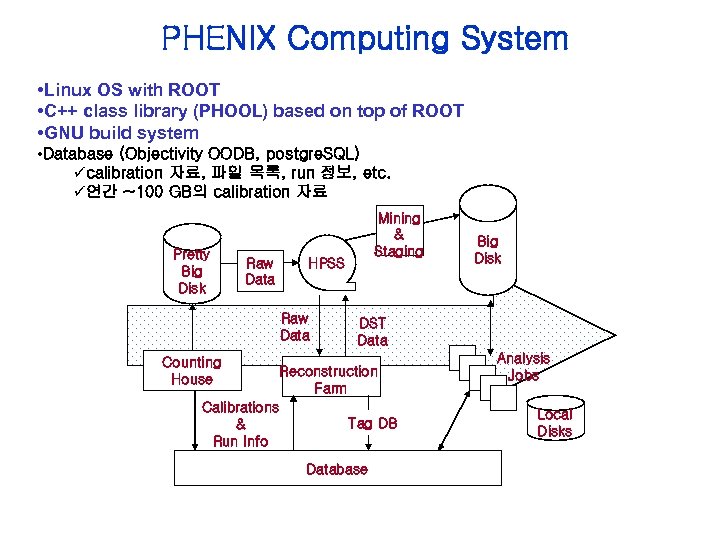

PHENIX Computing System
- Linux OS with ROOT
- C++ class library (PHOOL) built on top of ROOT
- GNU build system
- Database (Objectivity OODB, PostgreSQL)
  - Calibration data, file catalog, run information, etc.
  - ~100 GB of calibration data per year

(Figure: data-flow diagram with Counting House, Raw Data, HPSS, Pretty Big Disk, Mining & Staging, Reconstruction Farm, Calibrations & Run Info, Tag DB, Database, DST Data, Big Disk, Analysis Jobs, and Local Disks.)

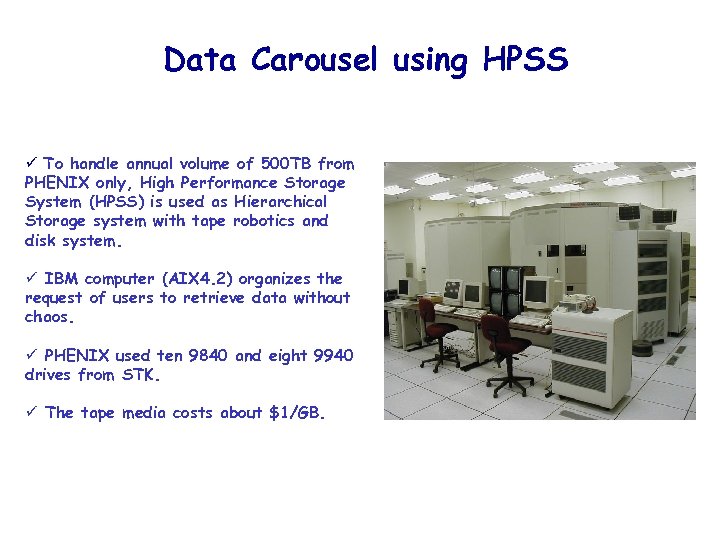

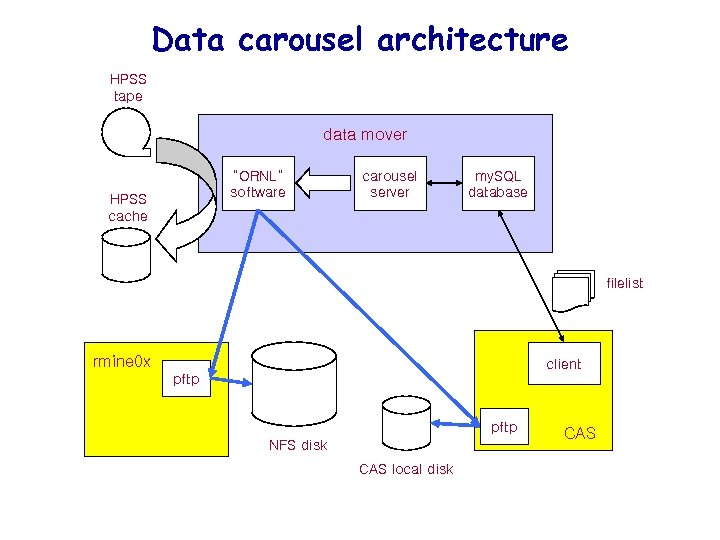

Data Carousel using HPSS
- To handle an annual volume of 500 TB from PHENIX alone, the High Performance Storage System (HPSS) is used as a hierarchical storage system combining tape robotics and disk.
- An IBM computer (AIX 4.2) organizes users' data-retrieval requests so that tape access stays orderly.
- PHENIX used ten 9840 and eight 9940 tape drives from STK (StorageTek).
- Tape media cost about $1/GB.
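The media price gives a quick budget estimate: at about $1/GB, one year of PHENIX data (500 TB) needs on the order of $500k of tape. The sketch also counts cartridges; the per-cartridge capacity is a parameter, and the 60 GB in the example is an assumption, not a figure from the slides.

```python
import math


def media_cost_usd(volume_tb: float, usd_per_gb: float = 1.0) -> float:
    """Tape media cost for a data volume, using decimal units (1 TB = 1000 GB)."""
    return volume_tb * 1000 * usd_per_gb


def cartridges_needed(volume_tb: float, capacity_gb: float) -> int:
    """Whole cartridges needed to hold the volume (no compression, no spares)."""
    return math.ceil(volume_tb * 1000 / capacity_gb)


annual_tb = 500  # PHENIX annual volume quoted on the slide
print(media_cost_usd(annual_tb))            # dollars per year at $1/GB
print(cartridges_needed(annual_tb, 60.0))   # ASSUMPTION: 60 GB per cartridge
```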

Data carousel architecture
(Figure: HPSS tape, data mover, "ORNL" software, HPSS cache, carousel server, MySQL database (file list), rmine0x client, pftp, NFS disk, CAS local disk, CAS.)
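The slides do not spell out the carousel's scheduling policy. As a hypothetical illustration of why a carousel keeps retrieval "without chaos", the sketch below batches pending file requests by tape and orders each batch by position on the tape, so each tape is mounted once and read front to back. The request fields and tape identifiers are invented for the example; the actual pftp transfer is not shown.

```python
from collections import defaultdict
from typing import NamedTuple


class Request(NamedTuple):
    user: str
    path: str
    tape: str       # hypothetical: tape volume that holds the file
    position: int   # hypothetical: file sequence number on that tape


def schedule(requests):
    """Group pending requests by tape and order each group by on-tape position.

    Tapes with the most pending files are served first; duplicate requests
    for the same path are merged so a file is staged only once.
    """
    by_tape = defaultdict(dict)
    for req in requests:
        by_tape[req.tape].setdefault(req.path, req)
    order = sorted(by_tape, key=lambda tape: (-len(by_tape[tape]), tape))
    return [
        (tape, sorted(by_tape[tape].values(), key=lambda r: r.position))
        for tape in order
    ]
```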

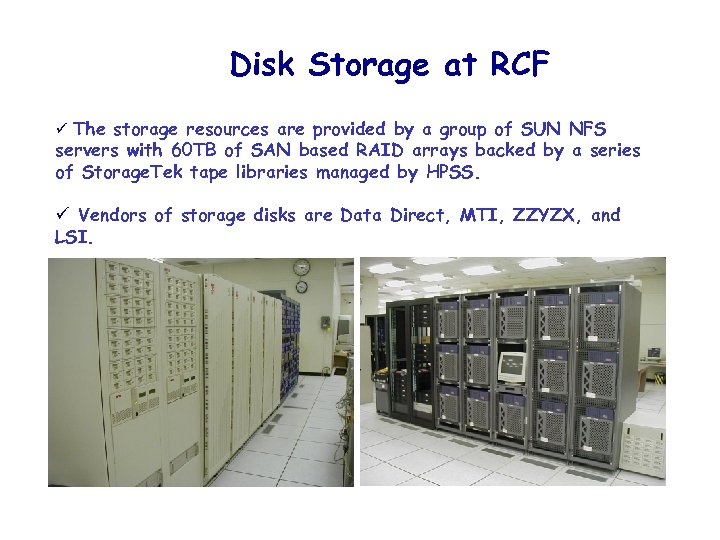

Disk Storage at RCF
- Storage is provided by a group of Sun NFS servers with 60 TB of SAN-based RAID arrays, backed by a series of StorageTek tape libraries managed by HPSS.
- Storage disk vendors are Data Direct, MTI, ZZYZX, and LSI.

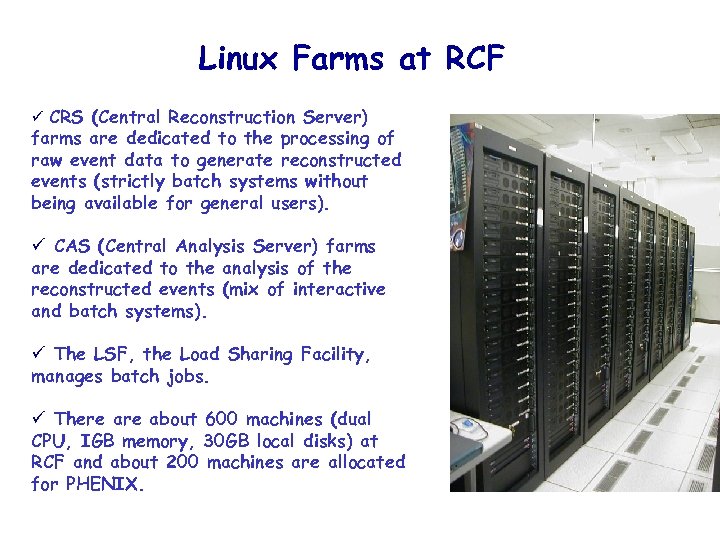

Linux Farms at RCF
- CRS (Central Reconstruction Server) farms are dedicated to processing raw event data into reconstructed events (strictly batch systems, not available to general users).
- CAS (Central Analysis Server) farms are dedicated to analyzing the reconstructed events (a mix of interactive and batch systems).
- LSF (Load Sharing Facility) manages batch jobs.
- There are about 600 machines at RCF (dual CPU, 1 GB memory, 30 GB local disk), of which about 200 are allocated to PHENIX.
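For scale, about 200 dual-CPU machines means roughly 400 CPUs for PHENIX. LSF's real scheduling policies are configurable and much richer than this; as a hypothetical illustration of the load-sharing idea only, the sketch below places batch jobs greedily on the least-loaded dual-CPU node, longest jobs first. Job names, runtime estimates, and node names are invented.

```python
import heapq


def dispatch(jobs, hosts, slots_per_host: int = 2):
    """Greedy least-loaded placement of (name, estimated_hours) jobs.

    Load is tracked as estimated hours per CPU slot on each host. Longest
    jobs are placed first (LPT rule), which usually balances greedy placement
    better. Returns {host: [job names in placement order]}.
    """
    heap = [(0.0, host) for host in hosts]
    heapq.heapify(heap)
    placement = {host: [] for host in hosts}
    for name, est_hours in sorted(jobs, key=lambda job: -job[1]):
        load, host = heapq.heappop(heap)
        placement[host].append(name)
        heapq.heappush(heap, (load + est_hours / slots_per_host, host))
    return placement
```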

Offline software technology
- Analysis framework
  - C++ class library (PHOOL) built on top of ROOT
    - Base class for analysis modules
    - "Tree" structure for holding and organizing data; can contain raw data, DSTs, and transient results
    - Uses ROOT I/O
- Database
  - Objectivity OODB for calibration data, file catalog, run info, etc.
  - ~100 GB/year of calibration data expected
- Code development environment
  - Based heavily on GNU tools (autoconf, automake, libtool)
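To make the "tree" idea concrete, here is a minimal Python sketch of a node tree that holds data, plus a base class for analysis modules that read from and write to it. The class and method names are hypothetical and do not reproduce the PHOOL C++ API; ROOT I/O is omitted.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any


@dataclass
class Node:
    """Hypothetical tree node: holds an optional payload and named children."""
    name: str
    payload: Any = None
    children: dict[str, Node] = field(default_factory=dict)

    def add(self, child: Node) -> Node:
        self.children[child.name] = child
        return child

    def find(self, path: str) -> Node | None:
        """Look up a descendant by a slash-separated path such as 'DST/MvdHits'."""
        node = self
        for part in path.split("/"):
            node = node.children.get(part)
            if node is None:
                return None
        return node


class AnalysisModule:
    """Hypothetical base class: subclasses override process_event()."""

    def process_event(self, top: Node) -> None:
        raise NotImplementedError


class MultiplicityModule(AnalysisModule):
    """Stores the hit count from DST/MvdHits as a transient result."""

    def process_event(self, top: Node) -> None:
        hits = top.find("DST/MvdHits")
        count = len(hits.payload) if hits is not None else 0
        top.add(Node("Multiplicity", payload=count))
```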

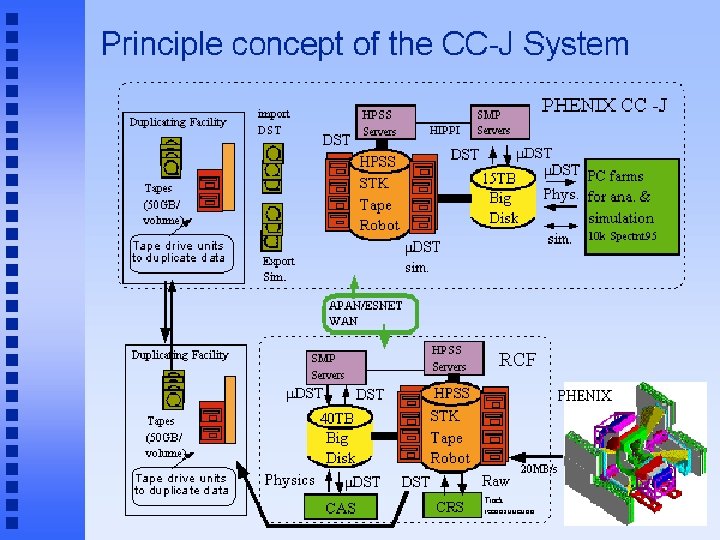

PHENIX CC-J
- The PHENIX CC-J at RIKEN is intended to serve as the main computing site for PHENIX simulations, a regional Asian computing center for PHENIX, and a center for spin physics analysis.
- Exchanging data between RCF and CC-J requires adequate WAN bandwidth between the two sites.
- CC-J has CPU farms totaling 10k SPECint95, 100 TB of tape storage, 15 TB of disk storage, 100 MB/s tape I/O, 600 MB/s disk I/O, and six Sun SMP data server units.

Situation at Yonsei
- A comparable mirror image of the remote system at Yonsei, built by "explicit" copying
- Use of local cluster machines with a similar operating environment (same OS, similar hardware specs)
  1. Disk sharing through NFS: the analysis library is installed once and shared by the other machines
  2. Easy upgrades and management
- Local clustering
  - Uncontended network bandwidth between the cluster machines over a 100 Mbps LAN
  - Current cluster: 2 machines (2 CPUs) + 2 machines used as RAID storage
- File transfers from RCF
  - Software updates by copying the shared libraries (once a week, taking under about 1 hour)
  - Raw data copied with scp or BBFTP (~1 GB/day)
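The weekly library update amounts to copying only what changed on the remote side. A hypothetical sketch of that decision, comparing (size, modification time) manifests of two directory trees; the slides do not say how changed files were selected, and the transfer itself (for example with scp) is not shown.

```python
import os


def manifest(root: str) -> dict:
    """Map relative path -> (size, integer mtime) for every file under root."""
    result = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            full = os.path.join(dirpath, name)
            st = os.stat(full)
            result[os.path.relpath(full, root)] = (st.st_size, int(st.st_mtime))
    return result


def files_to_copy(remote: dict, local: dict) -> list:
    """Paths that are new on the remote side or whose size/mtime differ."""
    return sorted(path for path, meta in remote.items() if local.get(path) != meta)
```

Usage would be `files_to_copy(remote_manifest, manifest(local_lib_dir))`, where the remote manifest is produced by running `manifest` on the source machine.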

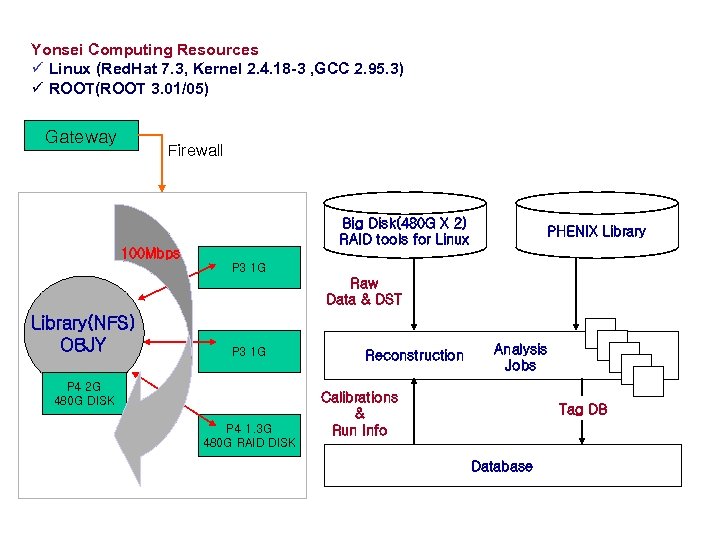

Yonsei Computing Resources
Yonsei Linux boxes for PHENIX analysis
- 4 desktop boxes behind a firewall (Pentium III/IV)
  - Linux (Red Hat 7.3, kernel 2.4.18-3, GCC 2.95.3)
  - ROOT 3.01/05
- One machine has all the software required for PHENIX analysis
  - Event generation, reconstruction, analysis
- The remaining desktops share one library directory via NFS
- 2 large RAID disk boxes with several IDE HDDs (~500 GB × 2), plus several small disks (~500 GB total) in 2 desktops
- A compact but effective system for a small user group

Yonsei Computing Resources
- Linux (Red Hat 7.3, kernel 2.4.18-3, GCC 2.95.3)
- ROOT 3.01/05

(Figure: Yonsei cluster behind a gateway/firewall on a 100 Mbps network with machines labeled P3 1G (×2), P4 2G, and P4 1.3G; Big Disk (480 GB × 2) using RAID tools for Linux; 480 GB disk and 480 GB RAID disk; PHENIX library shared over NFS; raw data & DST; OBJY; reconstruction, analysis jobs, calibrations & run info, tag DB, database.)

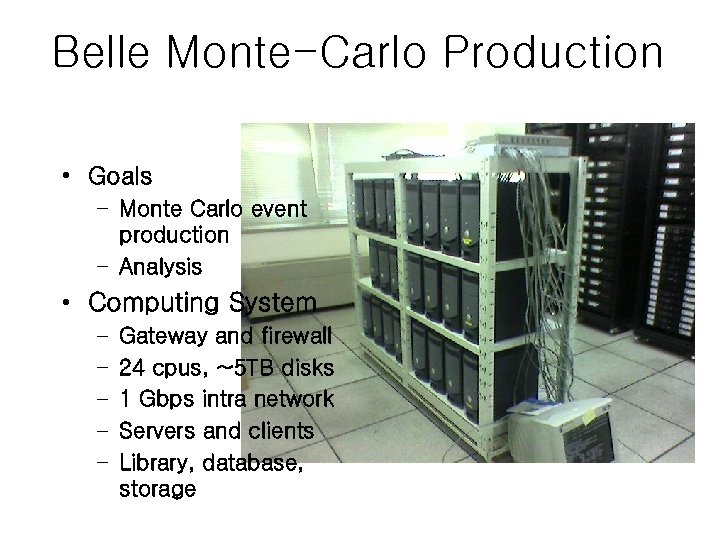

Belle Monte-Carlo Production
- Goals
  - Monte Carlo event production
  - Analysis
- Computing system
  - Gateway and firewall
  - 24 CPUs, ~5 TB of disk
  - 1 Gbps internal network
  - Servers and clients: library, database, storage

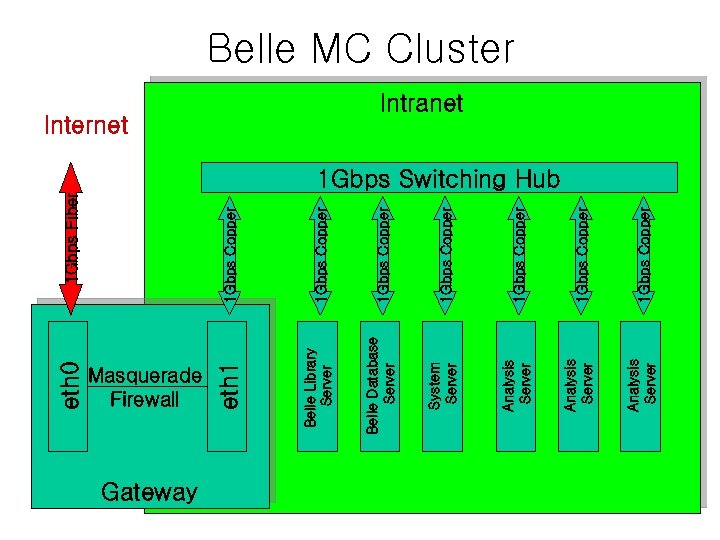

(Figure: Belle MC cluster network. A gateway with interfaces eth0 and eth1 acts as a masquerade firewall between the Internet and the intranet; links are 1 Gbps fiber and 1 Gbps copper; a 1 Gbps switching hub connects the Belle library server, Belle database server, system server, analysis server, and the Belle MC cluster.)

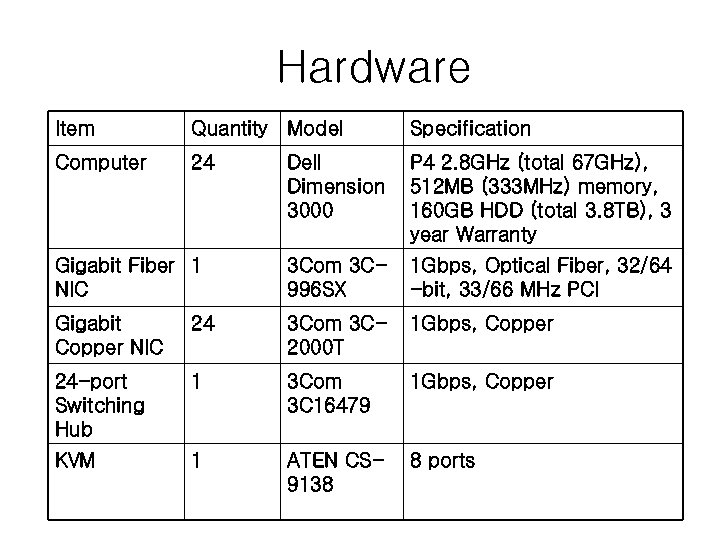

Hardware

| Item | Quantity | Model | Specification |
|---|---|---|---|
| Computer | 24 | Dell Dimension 3000 | P4 2.8 GHz (total 67 GHz), 512 MB (333 MHz) memory, 160 GB HDD (total 3.8 TB), 3-year warranty |
| Gigabit fiber NIC | 1 | 3Com 3C996SX | 1 Gbps, optical fiber, 32/64-bit, 33/66 MHz PCI |
| Gigabit copper NIC | 24 | 3Com 3C2000T | 1 Gbps, copper |
| 24-port switching hub | 1 | 3Com 3C16479 | 1 Gbps, copper |
| KVM | 1 | ATEN CS9138 | 8 ports |
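The totals in the table follow from the per-node specifications. A quick check in decimal units; the figures come from the table and the script is only illustrative.

```python
NODES = 24
CPU_GHZ = 2.8   # P4 clock per node
DISK_GB = 160   # local HDD per node

total_ghz = NODES * CPU_GHZ             # aggregate clock, summed as in the table
total_disk_tb = NODES * DISK_GB / 1000  # decimal units: 1 TB = 1000 GB

print(f"aggregate clock {total_ghz:.1f} GHz, total disk {total_disk_tb:.2f} TB")
```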

Belle Cluster Servers
- Automated OS installation
  - DHCP/BOOTP
  - TFTP
  - NFS or FTP
- System administration
  - NIS for user management
  - NFS for file sharing
- Belle library: shared via NFS
- Data files: shared via NFS
- Belle database: PostgreSQL server
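Automated installation over DHCP/BOOTP and TFTP usually starts with a DHCP server that gives each node a fixed address, the TFTP server address (`next-server`), and a boot file name. The sketch below renders ISC dhcpd `host` declarations from a node list; the host names, MAC and IP addresses, and the `pxelinux.0` boot file are assumptions for illustration, not the Belle cluster's actual configuration.

```python
def dhcpd_host_entries(nodes, tftp_server: str, boot_file: str = "pxelinux.0") -> str:
    """Render ISC dhcpd 'host' declarations for (name, mac, ip) tuples.

    Each node gets a fixed address and is told which TFTP server (next-server)
    and boot file to fetch. All values passed in are hypothetical examples.
    """
    blocks = []
    for name, mac, ip in nodes:
        blocks.append(
            f"host {name} {{\n"
            f"  hardware ethernet {mac};\n"
            f"  fixed-address {ip};\n"
            f"  next-server {tftp_server};\n"
            f'  filename "{boot_file}";\n'
            f"}}"
        )
    return "\n".join(blocks)


# Hypothetical node list; real MAC/IP values would come from the cluster inventory.
print(dhcpd_host_entries([("bmc01", "00:11:22:33:44:55", "192.168.10.101")], "192.168.10.1"))
```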
