- Number of slides: 46

Ph.D. Thesis Proposal: Feature Selection in Video Classification

- Yan Liu, Computer Science, Columbia University
- Advisor: John R. Kender

Outline

1. Introduction
2. Research progress
3. Proposed work
4. Conclusion and schedule

Outline

1. Introduction
   - Motivation of feature selection in video classification
   - Definition of feature selection
   - Feature selection algorithm design and evaluation
   - Applications of feature selection
2. Research progress
3. Proposed work
4. Conclusion and schedule

Motivation of feature selection in video classification

- Efficient video data management is an important problem
  - “Semantic gap”: machine learning methods, such as classification, can close it
  - Efficiency: reducing the dimensionality of the data prior to processing is necessary
- Feature selection in video classification is not well explored
  - So far, mostly based on researchers’ intuition [A. Vailaya 2001]
  - Goal: select representative features automatically

Definition of feature selection

- Feature selection focuses on
  - Finding a feature subset that has the most discriminative information from the original feature space
  - Objective: [Guyon 2003]
    - Improve the prediction performance
    - Provide a more cost-effective predictor
    - Provide a better understanding of the data
- Two major approaches define feature selection [Blum 1997]
  - Filter methods: emphasize the discovery of relevant relationships between features and high-level concepts
  - Wrapper methods: seek a feature subset that minimizes prediction error of classifying the high-level concept

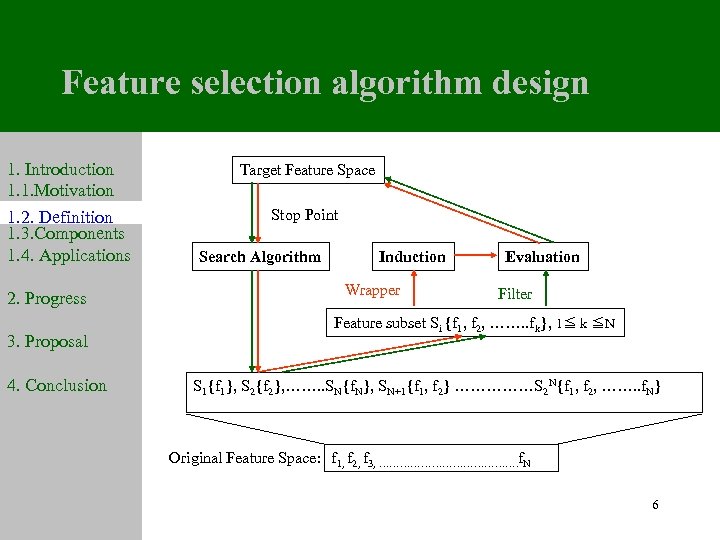

Feature selection algorithm design

Diagram labels (image 1): Original Feature Space; Search Algorithm; Feature subset; Evaluation; Filter; Wrapper; Induction; Stop Point; Target Feature Space.

- Original feature space: $f_1, f_2, f_3, \ldots, f_N$
- Candidate feature subsets: $S_1 = \{f_1\}$, $S_2 = \{f_2\}$, …, $S_N = \{f_N\}$, $S_{N+1} = \{f_1, f_2\}$, …, $S_{2^N} = \{f_1, f_2, \ldots, f_N\}$
- Feature subset: $S_i = \{f_1, f_2, \ldots, f_k\}$, $1 \le k \le N$
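
To make the size of this search space concrete, the following minimal sketch enumerates every non-empty feature subset for a toy feature list. The function name `all_feature_subsets` and the four feature labels are assumptions made for illustration; nothing here comes from the thesis itself. Counting only non-empty subsets gives $2^N - 1$ candidates, which is why exhaustive search is impractical for hundreds of features.

```python
from itertools import combinations


def all_feature_subsets(features):
    """Yield every non-empty subset of `features`, smallest subsets first."""
    for k in range(1, len(features) + 1):
        for subset in combinations(features, k):
            yield subset


# Toy feature space with N = 4 (hypothetical labels).
features = ["f1", "f2", "f3", "f4"]
subsets = list(all_feature_subsets(features))

# The number of non-empty subsets is 2**N - 1.
print(len(subsets), 2 ** len(features) - 1)
```

A search algorithm such as forward selection or the sort-merge trees described later visits only a small fraction of these candidates.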

Three components of feature selection algorithms

- Search algorithm
  - Forward selection [Singh 1995]
  - Backward elimination [Koller 1996]
  - Genetic algorithm [Oliveira 2001]
- Induction algorithm
  - SVM [Bi 2003], BN [Singh 1995], kNN [Abe 2002], NN [Oliveira 2001], Boosting [Das 2001]
  - Classifier-specific [Weston 2000] and classifier-independent feature selection [Abe 2002]
- Evaluation metric
  - Distance measure [H. Liu 2002], dependence measure, consistency measure [Dash 2000], information measure [Koller 1996]
  - Predictive accuracy measure (for most wrapper methods)

Applying feature selection to video classification

- Current applications with large data sets
  - Text categorization [Forman 2003]
  - Genetic microarray [Xing 2001]
  - Handwritten digit recognition [Oliveira 2001]
  - Web classification [Coetzee 2001]
- Applying existing feature selection algorithms to video data
  - Similar need: massive data, high dimensionality, complex hypotheses
  - Difficulty: stricter requirements on time cost
  - Some existing work in video classification [Jaimes 2000]

Outline

1. Introduction
2. Research progress
   - BSMT: Basic Sort-Merge Tree [Liu 2002]
   - CSMT: Complement Sort-Merge Tree [Liu 2003]
   - FSMT: Fast-converging Sort-Merge Tree [Liu 2004]
   - MLFS: Multi-Level Feature Selection [Liu 2003]
   - Fast video retrieval system [Liu 2003]
3. Proposed work
4. Conclusion and schedule

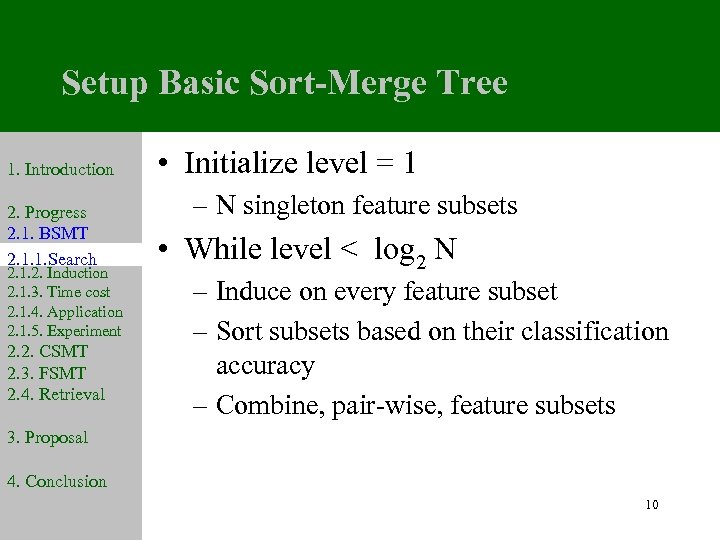

Setup: Basic Sort-Merge Tree

- Initialize level = 1
  - N singleton feature subsets
- While level < $\log_2 N$
  - Induce on every feature subset
  - Sort subsets based on their classification accuracy
  - Combine, pair-wise, feature subsets
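
The loop above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the thesis implementation: the names `basic_sort_merge_tree`, `evaluate`, and `target_size` are hypothetical, the number of features is assumed to be a power of two, neighbours in the sorted order are assumed to be the pairs that get merged, and the toy `evaluate` function (a sum of made-up per-feature weights) stands in for a real induction step that would train and score a classifier.

```python
def basic_sort_merge_tree(features, evaluate, target_size):
    """Sketch of a sort-merge search over feature subsets.

    features:    list of feature ids (length assumed to be a power of two)
    evaluate:    callable scoring a tuple of features, higher is better
                 (stand-in for inducing a classifier and measuring accuracy)
    target_size: desired subset size r (assumed to be a power of two)
    """
    subsets = [(f,) for f in features]  # level 1: N singleton subsets
    while True:
        # Induce on every subset, then sort by score (best first).
        ranked = sorted(subsets, key=evaluate, reverse=True)
        if len(ranked[0]) >= target_size or len(ranked) == 1:
            return ranked[0]
        # Combine pair-wise: neighbours in the sorted order are merged.
        subsets = [ranked[i] + ranked[i + 1] for i in range(0, len(ranked) - 1, 2)]


# Toy scores for eight hypothetical features.
weights = {0: 0.1, 1: 0.9, 2: 0.3, 3: 0.8, 4: 0.2, 5: 0.7, 6: 0.4, 7: 0.6}


def toy_score(subset):
    return sum(weights[f] for f in subset)


best_pair = basic_sort_merge_tree(list(weights), toy_score, target_size=2)
print(best_pair)  # -> (1, 3)
```

Each level evaluates every surviving subset exactly once, so the number of evaluations halves from one level to the next.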

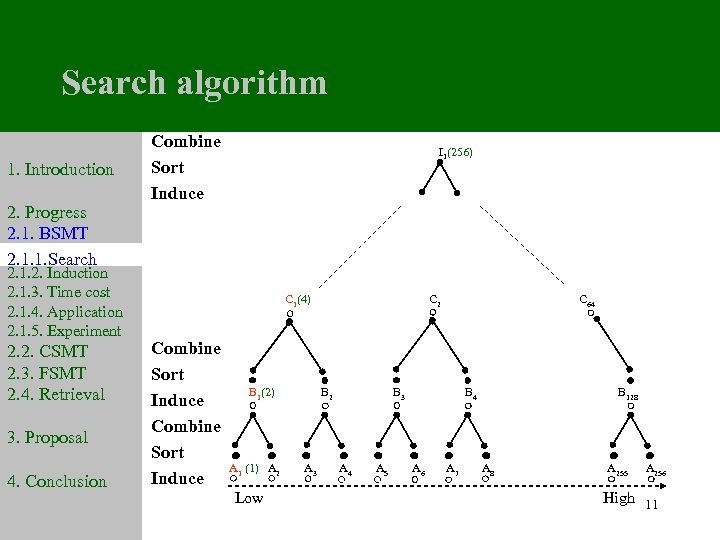

Search algorithm

Figure (image 3): BSMT search tree. Leaves A1 to A256 are singleton subsets (1); each level repeats Induce, Sort, Combine, producing B1 to B128 (2), C1 to C64 (4), and so on up to the root I1 (256). The horizontal axis runs from Low to High.

Advantages

- To achieve better performance
  - Avoids local optima of forward selection and backward elimination
  - Avoids heuristic randomness of genetic algorithms
- To achieve lower time cost
  - Search algorithm is linear in the number of features
  - Enables the straightforward creation of near-optimal feature subsets with little additional work [Liu 2003]
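
The linear-cost claim can be checked with a short count. The derivation below assumes that $N$ is a power of two and that each level of the tree holds half as many subsets as the level beneath it, as in the $N = 256$ illustration; it counts inductions only and says nothing about the cost of each one.

```latex
\[
  \sum_{\ell=0}^{\log_2 N} \frac{N}{2^{\ell}}
  \;=\; N + \frac{N}{2} + \frac{N}{4} + \cdots + 1
  \;=\; 2N - 1 .
\]
% Example: for N = 256 the levels hold 256, 128, 64, 32, 16, 8, 4, 2, 1 subsets,
% so the total is 2 * 256 - 1 = 511 inductions.
```

Under these assumptions the number of inductions grows linearly with $N$, in contrast to the exponential number of candidate subsets.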

Induction algorithm

- Novel combination of Fastmap and Mahalanobis likelihood
- Fastmap for dimensionality reduction [Faloutsos 1995]
  - Feature extraction algorithm approximates PCA with linear time cost
  - Reduces the dimensionality of feature subsets to a prespecified small number
- Mahalanobis maximum likelihood for classification [Duda 2000]
  - Computes the likelihood that a point belongs to a distribution that is modeled as a multidimensional Gaussian with arbitrary covariance
  - Works well for video domain
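
As a rough illustration of the classification step only (Fastmap is not shown), the sketch below fits one Gaussian with a full covariance matrix per class and assigns a point to the class with the highest log-likelihood. It is deliberately restricted to two dimensions so that the covariance inverse can be written in closed form without external libraries. The function names, the class labels used as dictionary keys, and the training points are all made up for the example.

```python
import math


def fit_gaussian_2d(points):
    """Return (mean, covariance) for a list of (x, y) points; full 2x2 covariance."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    return (mx, my), ((sxx, sxy), (sxy, syy))


def log_likelihood(point, model):
    """Log density of a 2-D Gaussian at `point`."""
    (mx, my), ((sxx, sxy), (_, syy)) = model
    det = sxx * syy - sxy * sxy
    dx, dy = point[0] - mx, point[1] - my
    # Squared Mahalanobis distance, using the closed-form inverse of a 2x2 matrix.
    d2 = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return -0.5 * (d2 + math.log(det)) - math.log(2 * math.pi)


def classify(point, models):
    """Return the label whose Gaussian gives `point` the highest likelihood."""
    return max(models, key=lambda label: log_likelihood(point, models[label]))


# Hypothetical 2-D training points for two frame categories.
models = {
    "handwriting": fit_gaussian_2d([(0.0, 0.1), (0.2, -0.1), (-0.1, 0.3), (0.3, 0.2), (-0.2, -0.2)]),
    "demo": fit_gaussian_2d([(5.0, 5.2), (5.3, 4.8), (4.7, 5.1), (5.2, 5.4), (4.9, 4.6)]),
}
print(classify((0.1, 0.0), models), classify((5.1, 5.0), models))
```

Because each class keeps its own covariance, the decision depends on the Mahalanobis distance to each class mean rather than on plain Euclidean distance.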

Applications to instructional video frame categorization

- Pre-processing
  - Temporally subsample: every other I frame (one frame/sec)
  - Spatially subsample: six DC terms of each macroblock
- Feature selection
  - From 300 six-dimensional features to r features
- Video segmentation and retrieval
  - Classify frames or segments in the usual way using the resulting feature subset

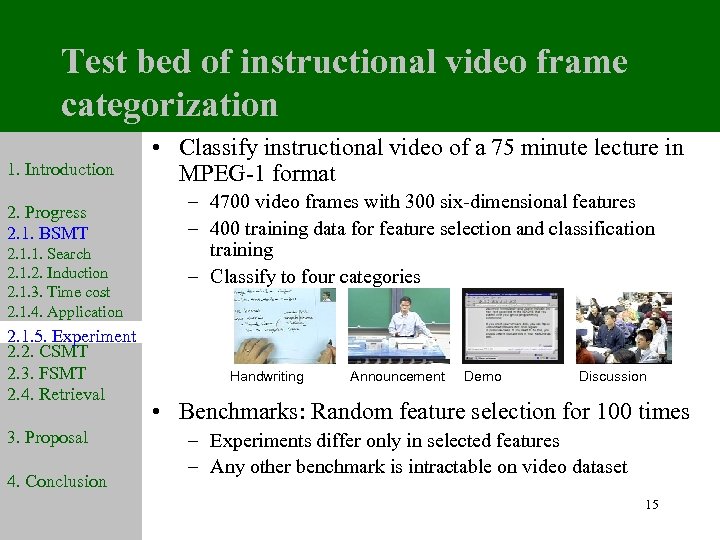

Test bed of instructional video frame categorization

- Classify instructional video of a 75 minute lecture in MPEG-1 format
  - 4700 video frames with 300 six-dimensional features
  - 400 training data for feature selection and classification training
  - Classify into four categories: Handwriting, Announcement, Demo, Discussion
- Benchmark: random feature selection, repeated 100 times
  - Experiments differ only in selected features
  - Any other benchmark is intractable on video dataset

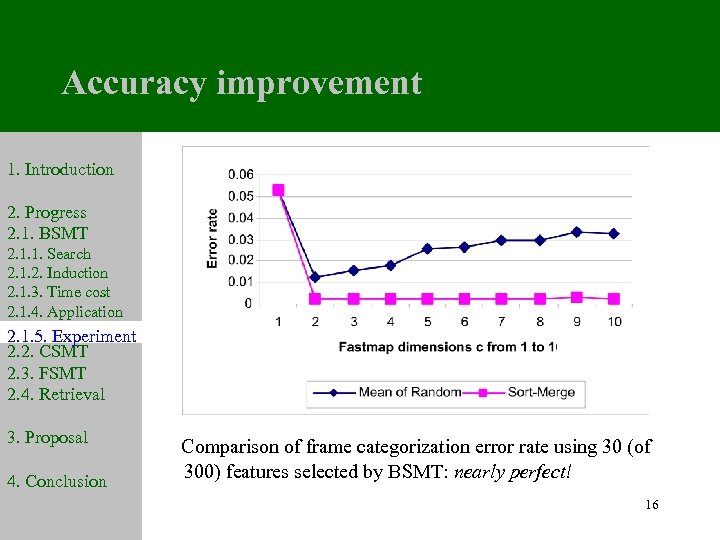

Accuracy improvement

Comparison of frame categorization error rate using 30 (of 300) features selected by BSMT: nearly perfect!

Test bed of sports video retrieval

- Retrieve “pitching” frames from an entire video
  - Sampled more finely: every I frame
  - 3600 frames for half an hour
- First task: binary classify 3600 video frames
- Second task: retrieve 45 “pitching” segments from 182 pre-segmented video segments

Figure (image 6) labels: Pitching part; Competing image types.

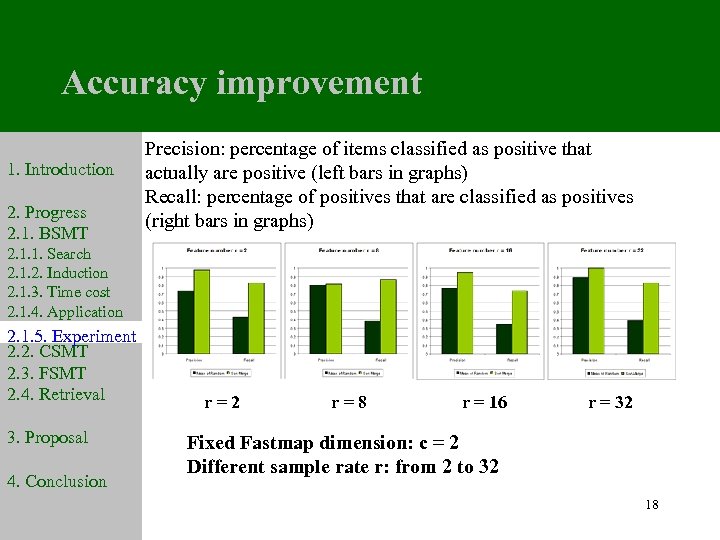

Accuracy improvement

- Precision: percentage of items classified as positive that actually are positive (left bars in graphs)
- Recall: percentage of positives that are classified as positives (right bars in graphs)
- Fixed Fastmap dimension: c = 2
- Different sample rate r: from 2 to 32

Graph panels: r = 2, r = 8, r = 16, r = 32.
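
The two measures defined above can be computed as follows. This is a generic sketch: the function name `precision_recall` and the six-frame toy labels are assumptions for illustration and are not results from the experiments.

```python
def precision_recall(predicted, actual):
    """Return (precision, recall) for two equal-length sequences of 0/1 labels."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)        # classified positive, truly positive
    fp = sum(1 for p, a in pairs if p and not a)    # classified positive, truly negative
    fn = sum(1 for p, a in pairs if not p and a)    # classified negative, truly positive
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy example: 1 marks a "pitching" frame (made-up labels).
predicted = [1, 1, 1, 0, 0, 0]
actual = [1, 1, 0, 1, 0, 0]
precision, recall = precision_recall(predicted, actual)
print(precision, recall)
```

In this toy case two of the three frames flagged as positive are correct, and two of the three true positives are found, so both measures equal 2/3.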

Adapting to sparse training data

- Difficulties of feature selection in video, as in genetic microarray data
  - Huge feature sets with 7130 dimensions
  - Small training data sets with 38 training data
- Renders some feature selection algorithms ineffective [Liu 2003]
  - More coarsely quantized prediction error
  - Randomness accumulates with the choice of each feature, influencing the choice of its successors
- Existing feature selection for microarray data is a model for video [Xing 2001]
  - Forward search based on information gain: 7130 features reduced to 360
  - Backward elimination based on Markov Blanket: 360 features reduced to 100 or less
  - Leave-one-out cross-validation decides the best size of the feature subset

Complement Sort-Merge Tree for video retrieval

- Focuses on sparse and noisy training data in video retrieval
- Combines the performance guarantees of a wrapper method with the logical organization of a filter method
  - Outer wrapper model for high accuracy
  - Inner filter method to merge the feature subsets based on “complement” requirement, addressing the limitation of sparse training data

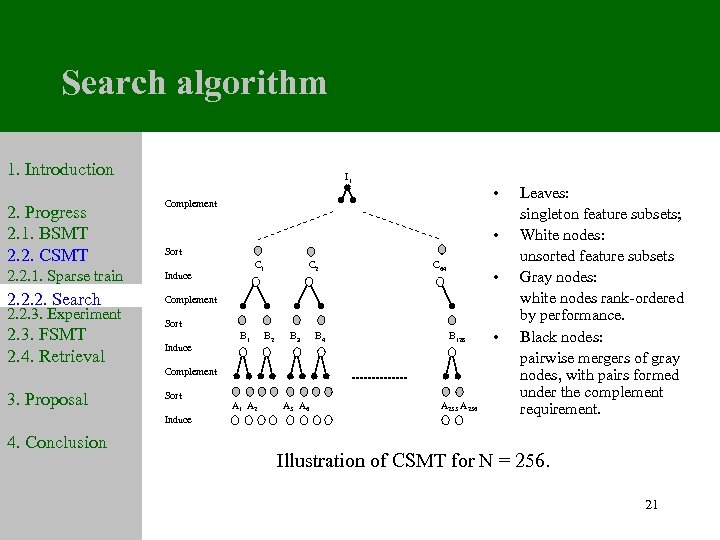

Search algorithm

Figure (image 8): illustration of CSMT for N = 256. Each level repeats Induce, Sort, Complement, from the leaves A1 to A256 through B1 to B128 and C1 to C64 up to the root I1.

- Leaves: singleton feature subsets
- White nodes: unsorted feature subsets
- Gray nodes: white nodes rank-ordered by performance
- Black nodes: pairwise mergers of gray nodes, with pairs formed under the complement requirement

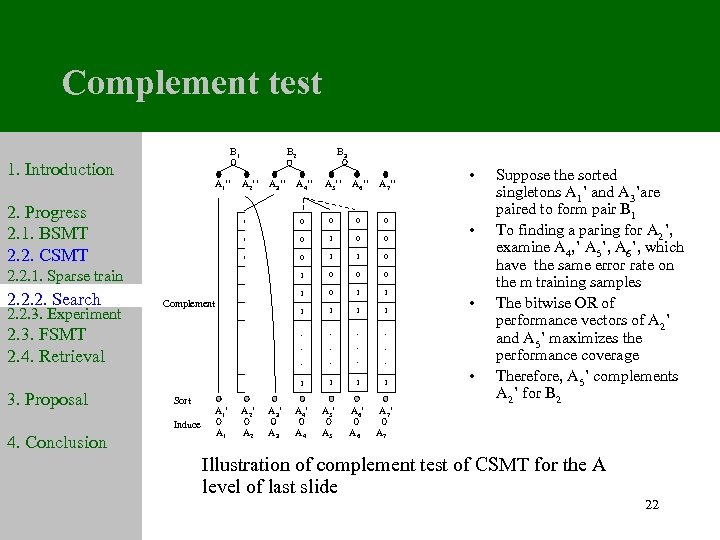

Complement test

Figure (image 9): illustration of the complement test of CSMT for the A level of the previous slide. The rows A1 to A7, A1′ to A7′, and A1″ to A7″ correspond to the Induce, Sort, and Complement steps; each subset carries a 0/1 performance vector over the training samples, and the pairs form B1, B2, B3.

- Suppose the sorted singletons A1′ and A3′ are paired to form B1
- To find a pairing for A2′, examine A4′, A5′, A6′, which have the same error rate on the m training samples
- The bitwise OR of the performance vectors of A2′ and A5′ maximizes the performance coverage
- Therefore, A5′ complements A2′ for B2
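
The complement test can be sketched with integers used as bit vectors. This is a minimal illustration under assumptions: each bit is taken to mean "this training sample was classified correctly" (the slide does not state the bit convention), the function names are hypothetical, and the 8-sample bit patterns are invented so that the outcome mirrors the A2′ and A5′ example above.

```python
def coverage(vec_a, vec_b):
    """Count training samples handled correctly by at least one of two subsets."""
    return bin(vec_a | vec_b).count("1")


def best_complement(target, candidates):
    """Return the candidate name whose bitwise OR with `target` covers the most samples."""
    return max(candidates, key=lambda name: coverage(target, candidates[name]))


# Made-up performance vectors over m = 8 training samples (1 = correct).
a2 = 0b11110000
candidates = {
    "A4'": 0b11100001,  # same error rate as the others (four correct samples)
    "A5'": 0b00001111,
    "A6'": 0b11001100,
}
print(best_complement(a2, candidates))  # -> A5'
```

All three candidates have the same individual error rate, so accuracy alone cannot separate them; the OR coverage prefers the one whose correct samples overlap least with those of A2′.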

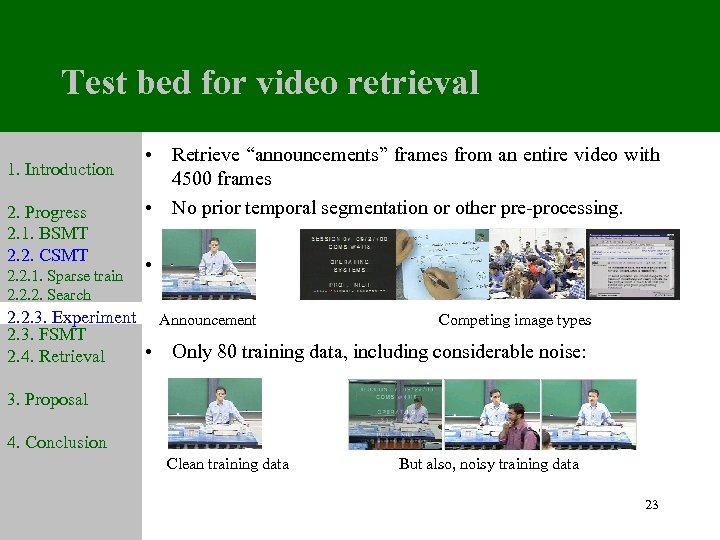

Test bed for video retrieval

- Retrieve “announcements” frames from an entire video with 4500 frames
- No prior temporal segmentation or other pre-processing
- Only 80 training data, including considerable noise

Figure (image 10) labels: Announcement; Competing image types; Clean training data; But also, noisy training data.

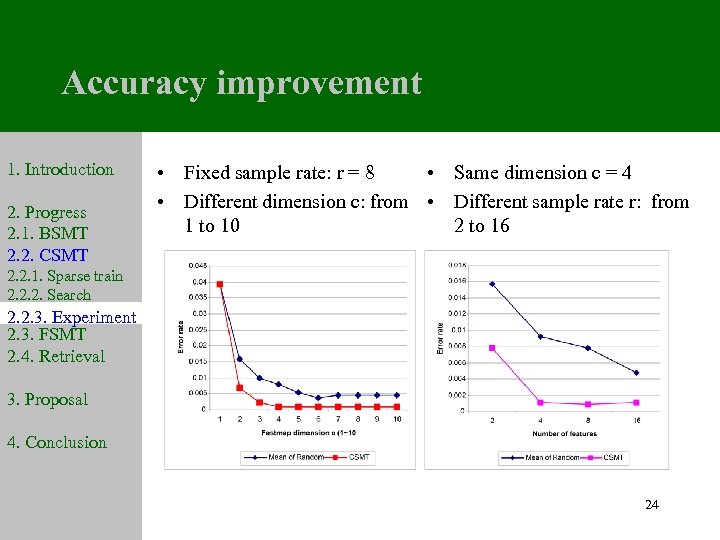

Accuracy improvement

- Fixed sample rate: r = 8
  - Different dimension c: from 1 to 10
- Same dimension: c = 4
  - Different sample rate r: from 2 to 16

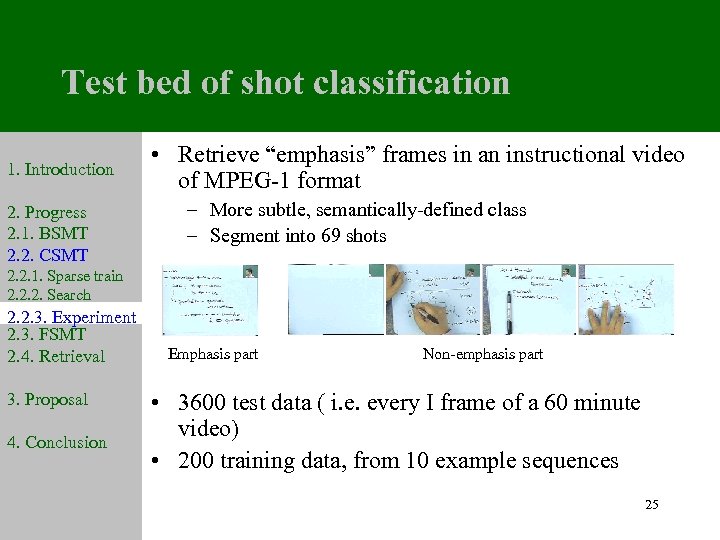

Test bed of shot classification

- Retrieve “emphasis” frames in an instructional video of MPEG-1 format
  - More subtle, semantically-defined class
  - Segment into 69 shots
- 3600 test data (i.e. every I frame of a 60 minute video)
- 200 training data, from 10 example sequences

Figure (image 12) labels: Emphasis part; Non-emphasis part.

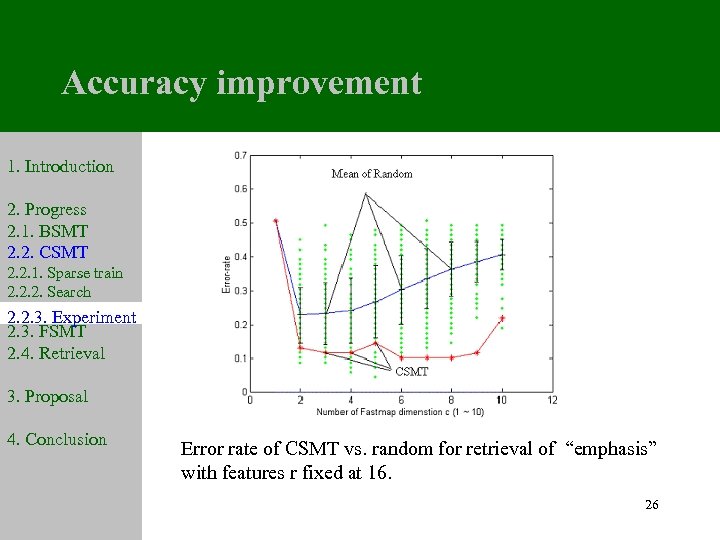

Accuracy improvement

Error rate of CSMT vs. random feature selection for retrieval of “emphasis”, with the number of features r fixed at 16.

Fast-converging Sort-Merge Tree

- Focuses on the problem of
  - Over-learning data sets
  - On-line retrieval requirement
- Sets up selected parts of the feature selection tree to save time, without sacrificing accuracy
- Uses information gain as an evaluation metric, instead of prediction error in BSMT
- Controls the convergence speed (amount of pruning at each level) based on user’s requirement
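
For readers unfamiliar with the evaluation metric, here is a minimal sketch of information gain for a single discrete feature. It uses the textbook definition (label entropy minus the expected entropy after splitting on the feature) and assumes feature values have already been discretized; how the thesis discretizes video features is not stated, and the function names and toy data are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a sequence of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(feature_values, labels):
    """Entropy of the labels minus the expected entropy after splitting on the feature."""
    total = len(labels)
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


# Toy data: four frames, two classes (made-up values).
labels = [1, 1, 0, 0]
informative = [1, 1, 0, 0]    # splits the classes perfectly
uninformative = [1, 0, 1, 0]  # tells nothing about the class
print(information_gain(informative, labels), information_gain(uninformative, labels))
```

Unlike prediction error, this score needs no classifier training, which is one reason a filter-style metric can be cheaper to evaluate.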

Search algorithm

- Initialize level = 0
  - N singleton feature subsets
  - Calculate R: number of features retained at each level, based on the desired convergence rate and the goal of r features at conclusion
- While level < $\log_2 r + 1$
  - Induce on every feature subset
  - Sort subsets based on information gain
  - Prune the level based on R
  - Combine, pair-wise, feature subsets from those remaining

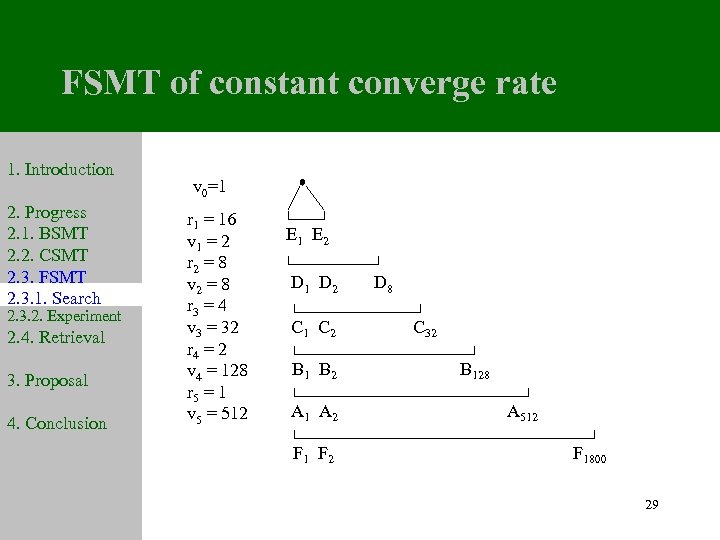

FSMT of constant convergence rate

Figure (image 14) labels, from root to leaves:

- Root: $v_0 = 1$
- E1, E2: $r_1 = 16$, $v_1 = 2$
- D1 to D8: $r_2 = 8$, $v_2 = 8$
- C1 to C32: $r_3 = 4$, $v_3 = 32$
- B1 to B128: $r_4 = 2$, $v_4 = 128$
- A1 to A512: $r_5 = 1$, $v_5 = 512$
- F1 to F1800: leaves

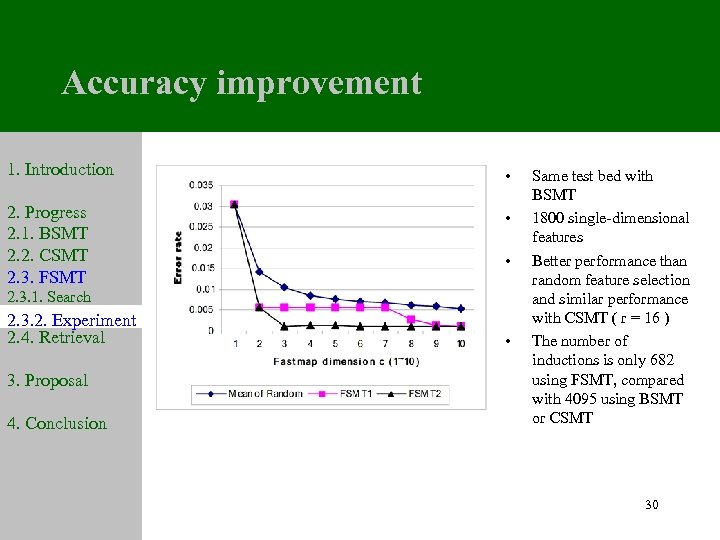

Accuracy improvement

- Same test bed as BSMT
- 1800 single-dimensional features
- Better performance than random feature selection and similar performance to CSMT (r = 16)
- The number of inductions is only 682 using FSMT, compared with 4095 using BSMT or CSMT
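
The two induction counts can be cross-checked with simple arithmetic. What follows is one possible reading, not a derivation given in the slides: it assumes the FSMT count is the sum of the node counts $v_1$ to $v_5$ shown in the constant-rate FSMT figure, and it observes that 4095 equals the node count of a full binary merge tree with 2048 leaves. Whether the 1800 features were padded to 2048 is not stated in the source.

```python
# Subsets retained per level, read from the constant-rate FSMT figure (v1..v5).
retained_per_level = [2, 8, 32, 128, 512]
fsmt_inductions = sum(retained_per_level)

# Node count of a full binary merge tree with 2048 leaves: 2 * 2048 - 1.
# The choice of 2048 leaves is an assumption, picked because it reproduces 4095.
full_tree_nodes = 2 * 2048 - 1

print(fsmt_inductions, full_tree_nodes)  # -> 682 4095
```

Under this reading, pruning cuts the number of inductions to roughly one sixth of the full tree.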

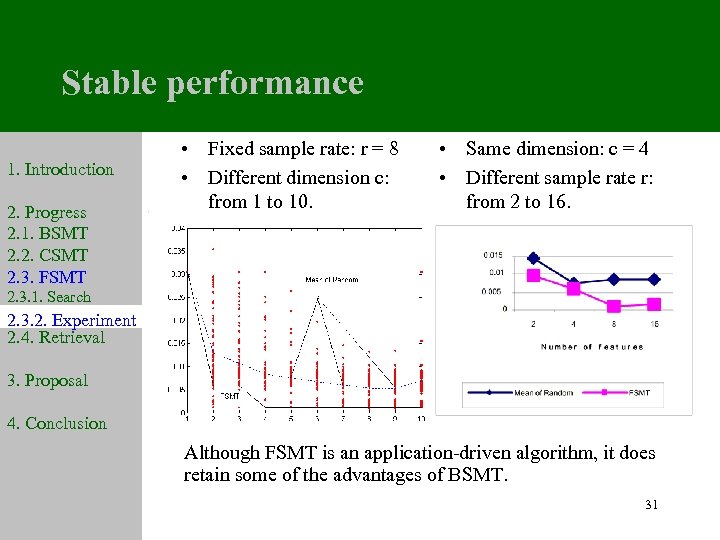

Stable performance

- Fixed sample rate: r = 8
  - Different dimension c: from 1 to 10
- Same dimension: c = 4
  - Different sample rate r: from 2 to 16

Although FSMT is an application-driven algorithm, it does retain some of the advantages of BSMT.

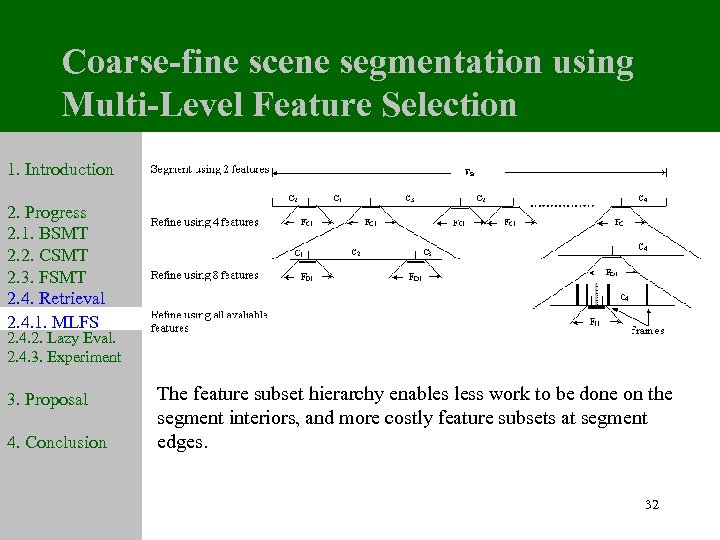

Coarse-fine scene segmentation using Multi-Level Feature Selection

The feature subset hierarchy allows less work to be done on segment interiors, reserving the more costly feature subsets for segment edges.

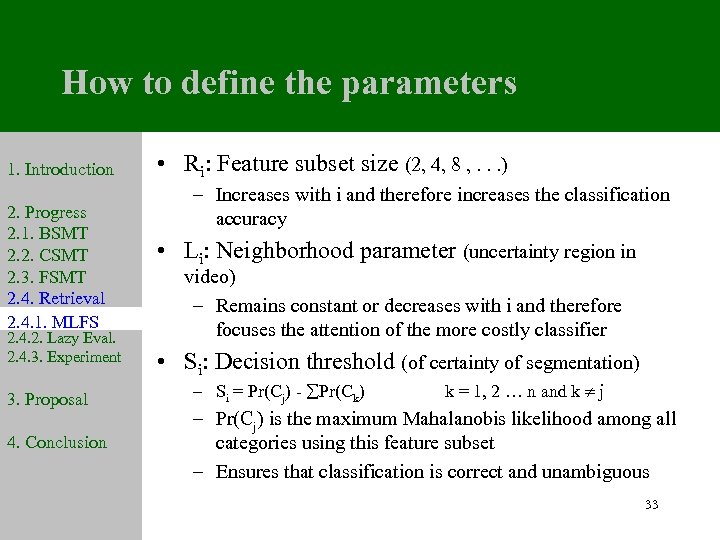

How to define the parameters

- $R_i$: Feature subset size (2, 4, 8, ...)
  - Increases with i and therefore increases the classification accuracy
- $L_i$: Neighborhood parameter (uncertainty region in video)
  - Remains constant or decreases with i and therefore focuses the attention of the more costly classifier
- $S_i$: Decision threshold (of certainty of segmentation)
  - $S_i = \Pr(C_j) - \Pr(C_k)$, for $k = 1, 2, \ldots, n$ and $k \neq j$
  - $\Pr(C_j)$ is the maximum Mahalanobis likelihood among all categories using this feature subset
  - Ensures that classification is correct and unambiguous
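
The role of the decision threshold can be sketched as a coarse-to-fine cascade: a cheap classifier built on a small feature subset is tried first, and a more costly one is consulted only when the margin between the two most likely categories falls below the threshold. This is a hedged illustration rather than the MLFS implementation: the names `classify_coarse_to_fine`, `levels`, and `score_fn` are hypothetical, the likelihood values are invented, and the neighborhood parameter $L_i$ is left out.

```python
def classify_coarse_to_fine(frame, levels):
    """Return (label, level_index) for `frame`.

    levels: list of (score_fn, threshold) pairs ordered from cheapest to most
            costly; score_fn(frame) returns a dict mapping label -> likelihood.
    """
    for depth, (score_fn, threshold) in enumerate(levels):
        scores = score_fn(frame)
        ranked = sorted(scores.values(), reverse=True)
        margin = ranked[0] - ranked[1]  # best likelihood minus the runner-up
        best = max(scores, key=scores.get)
        # Accept when the decision is unambiguous, or when no finer level is left.
        if margin >= threshold or depth == len(levels) - 1:
            return best, depth


def cheap(frame):
    # Stand-in for a classifier on a small feature subset (made-up likelihoods).
    if frame == "ambiguous":
        return {"handwriting": 0.55, "demo": 0.45}
    return {"handwriting": 0.9, "demo": 0.1}


def costly(frame):
    # Stand-in for a classifier on a larger feature subset (made-up likelihoods).
    return {"handwriting": 0.2, "demo": 0.8}


levels = [(cheap, 0.3), (costly, 0.3)]
print(classify_coarse_to_fine("clear", levels))      # -> ('handwriting', 0)
print(classify_coarse_to_fine("ambiguous", levels))  # -> ('demo', 1)
```

Frames that are easy to classify never reach the costly level, which is how a small average number of features per frame can be achieved.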

How to define the parameters 1. Introduction 2. Progress 2. 1. BSMT 2. 2. CSMT 2. 3. FSMT 2. 4. Retrieval 2. 4. 1. MLFS 2. 4. 2. Lazy Eval. 2. 4. 3. Experiment 3. Proposal 4. Conclusion • Ri: Feature subset size (2, 4, 8 , . . . ) – Increases with i and therefore increases the classification accuracy • Li: Neighborhood parameter (uncertainty region in video) – Remains constant or decreases with i and therefore focuses the attention of the more costly classifier • Si: Decision threshold (of certainty of segmentation) – Si = Pr(Cj) - Pr(Ck) k = 1, 2 … n and k j – Pr(Cj) is the maximum Mahalanobis likelihood among all categories using this feature subset – Ensures that classification is correct and unambiguous 33

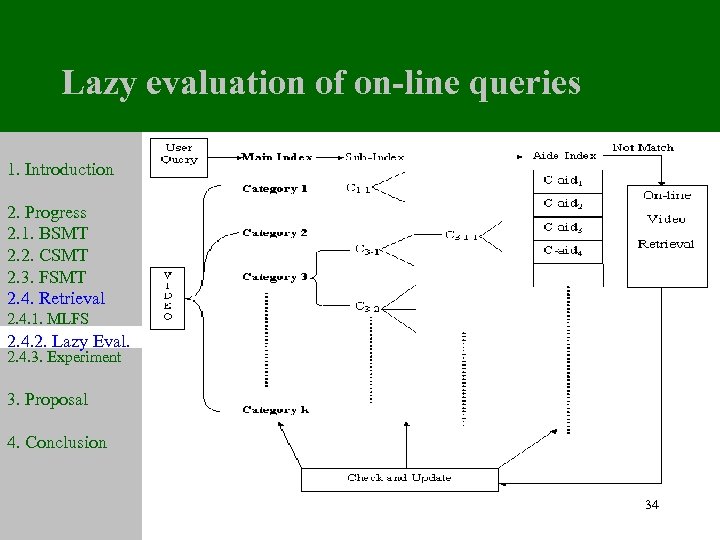
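A minimal sketch of how the feature subset size Ri and the decision threshold Si might interact, assuming each level i supplies a scoring function that returns one likelihood per category (at least two categories). The names `margin`, `classify_coarse_to_fine`, `levels`, and `thresholds` are hypothetical, and the neighborhood parameter Li is left out to keep the example short. This is an illustration of the idea, not the implementation used in the experiments.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical type: maps a frame to one likelihood per category,
# computed with the feature subset of size R_i for that level.
Scorer = Callable[[object], Sequence[float]]


def margin(likelihoods: Sequence[float]) -> Tuple[int, float]:
    """Return the best category j and the gap Pr(Cj) - Pr(Ck) to the runner-up."""
    order = sorted(range(len(likelihoods)), key=lambda k: likelihoods[k], reverse=True)
    best, runner_up = order[0], order[1]
    return best, likelihoods[best] - likelihoods[runner_up]


def classify_coarse_to_fine(
    frame: object, levels: List[Scorer], thresholds: List[float]
) -> Tuple[int, int]:
    """Try cheap feature subsets first; escalate only while the margin is below S_i.

    Returns (category, index of the level that made the decision).
    """
    best = -1
    for i, (score, s_i) in enumerate(zip(levels, thresholds)):
        best, gap = margin(score(frame))
        if gap >= s_i:  # unambiguous at this level, so stop early
            return best, i
    return best, len(levels) - 1  # fall back to the most costly level's answer
```

With such a cascade, frames in segment interiors would typically be decided at level 0, while frames near segment edges fall through to the larger feature subsets.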

Lazy evaluation of on-line queries

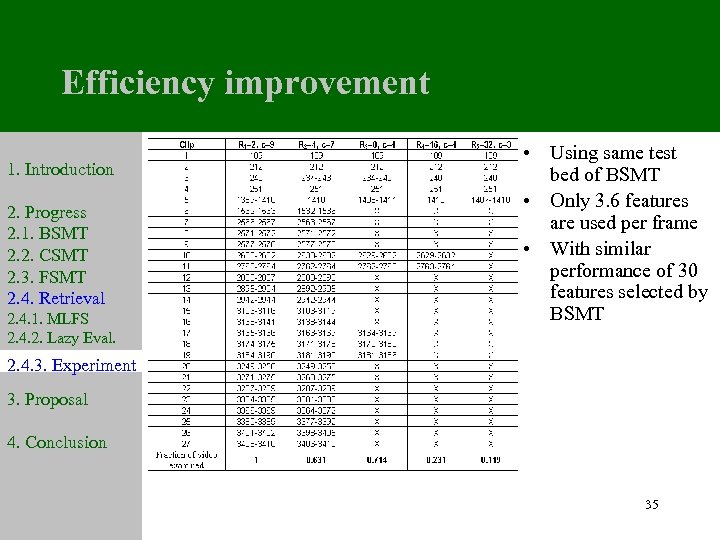

Efficiency improvement
• Uses the same test bed as BSMT
• Only 3.6 features are used per frame
• Performance is similar to that of the 30 features selected by BSMT

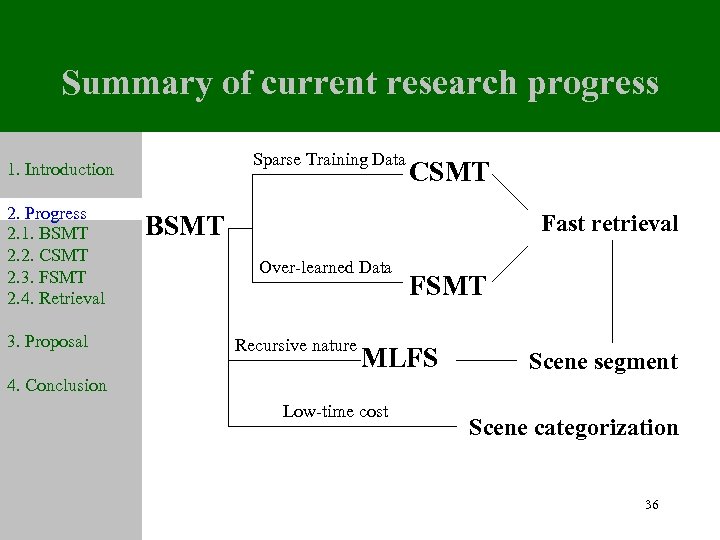

Summary of current research progress
(Diagram relating BSMT, CSMT, FSMT, and MLFS. Labels: sparse training data, over-learned data, recursive nature, low time cost, fast retrieval, scene segment, scene categorization.)

Outline
• Introduction
• Research progress
• Proposed work
  – Improve current feature selection algorithm
  – Algorithm evaluation
  – New applications
  – Size of training data
  – Theoretical analysis
• Conclusion and schedule

Improvements to current algorithms
• Search algorithm
  – Set up the bottom of the Sort-Merge Tree more efficiently
• Induction algorithm of feature selection
  – Explore SVM: powerful for binary classification and sparse training data
  – Explore HMM: good performance in temporal analysis
• Evaluation metric
  – Explore filter evaluation metric in the wrapper method

Algorithm evaluation
• Accuracy
  – Classification evaluation: Error rate, Balanced Error Rate (BER), Receiver Operating Characteristic (ROC) curve, Area Under Curve (AUC)
  – Video analysis evaluation: Precision, Recall, F-measure
• Efficiency
  – Selected feature subset size: Fraction of Features (FF), best size of feature subset
  – Time cost: of search algorithm, of induction algorithm; stopping point
• Dependence
  – How to choose a proper classifier
  – How to compare feature selection algorithms in certain applications

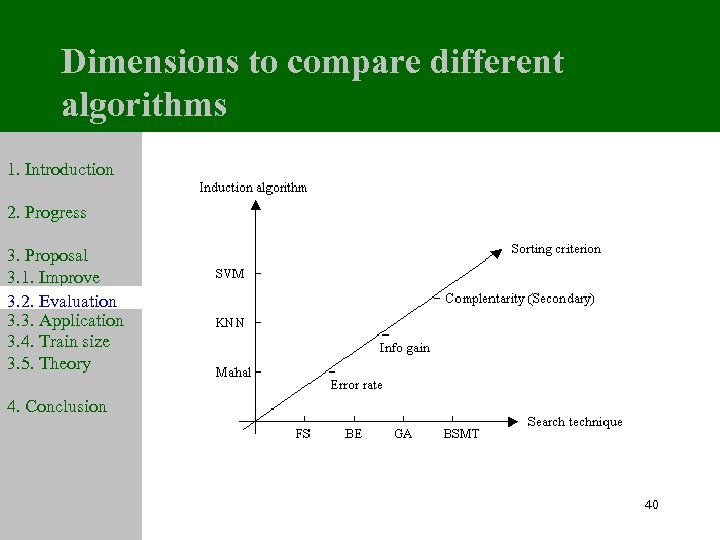
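For reference, several of the accuracy measures listed above can be computed from a binary confusion matrix. The sketch below assumes labels encoded as 1 (positive) and 0 (negative); the function name `binary_metrics` is hypothetical, and BER is taken to be the average of the per-class error rates.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Error rate, BER, precision, recall, and F-measure for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num: float, den: float) -> float:
        # Define 0/0 as 0 so that empty classes do not raise an exception.
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "error_rate": ratio(fp + fn, len(pairs)),
        # Average of the positive-class and negative-class error rates.
        "ber": 0.5 * (ratio(fn, tp + fn) + ratio(fp, tn + fp)),
        "precision": precision,
        "recall": recall,
        "f_measure": ratio(2 * precision * recall, precision + recall),
    }
```

On heavily unbalanced data the plain error rate can look good even when the positive class is mostly missed, which is one reason to report BER alongside it.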

Dimensions to compare different algorithms

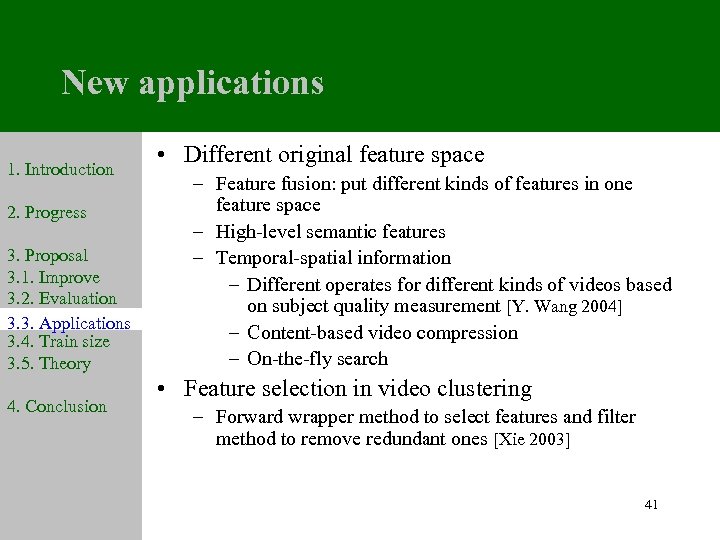

New applications
• Different original feature space
  – Feature fusion: put different kinds of features in one feature space
  – High-level semantic features
  – Temporal-spatial information
  – Different operations for different kinds of videos based on subject quality measurement [Y. Wang 2004]
  – Content-based video compression
  – On-the-fly search
• Feature selection in video clustering
  – Forward wrapper method to select features and filter method to remove redundant ones [Xie 2003]

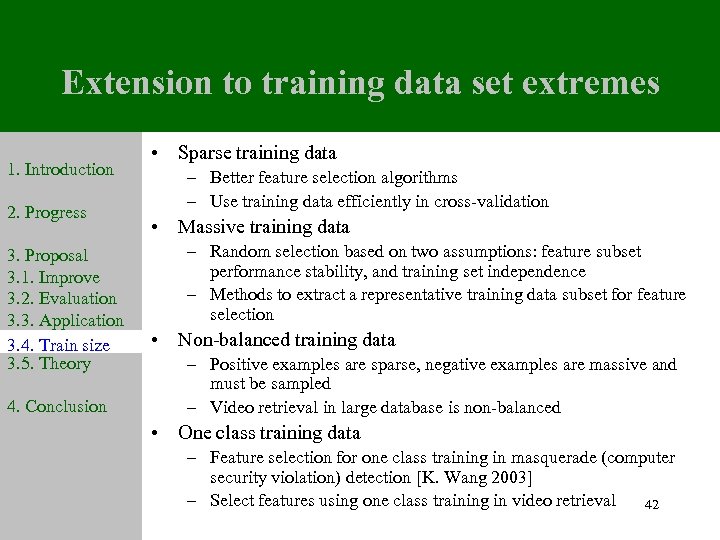
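The wrapper-then-filter idea mentioned for video clustering can be illustrated generically. The sketch below is not taken from [Xie 2003]; it assumes a caller-supplied `evaluate` function that scores a candidate feature subset (for example, by running the induction algorithm) and a caller-supplied `similarity` function between two features. All names are hypothetical.

```python
from typing import Callable, List


def forward_select(
    n_features: int, evaluate: Callable[[List[int]], float], max_size: int
) -> List[int]:
    """Greedy forward wrapper: add the feature that most improves the score."""
    selected: List[int] = []
    best_score = float("-inf")
    while len(selected) < max_size:
        candidates = [f for f in range(n_features) if f not in selected]
        if not candidates:
            break
        score, feat = max((evaluate(selected + [f]), f) for f in candidates)
        if score <= best_score:  # no candidate improves the subset, so stop
            break
        selected.append(feat)
        best_score = score
    return selected


def drop_redundant(
    features: List[int], similarity: Callable[[int, int], float], limit: float
) -> List[int]:
    """Filter step: keep a feature only if it is not too similar to one already kept."""
    kept: List[int] = []
    for f in features:
        if all(similarity(f, g) < limit for g in kept):
            kept.append(f)
    return kept
```

The wrapper step is the expensive part, since each call to `evaluate` may train a classifier; the filter step only needs pairwise feature statistics.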

Extension to training data set extremes
• Sparse training data
  – Better feature selection algorithms
  – Use training data efficiently in cross-validation
• Massive training data
  – Random selection based on two assumptions: feature subset performance stability, and training set independence
  – Methods to extract a representative training data subset for feature selection
• Non-balanced training data
  – Positive examples are sparse, negative examples are massive and must be sampled
  – Video retrieval in a large database is non-balanced
• One-class training data
  – Feature selection for one-class training in masquerade (computer security violation) detection [K. Wang 2003]
  – Select features using one-class training in video retrieval

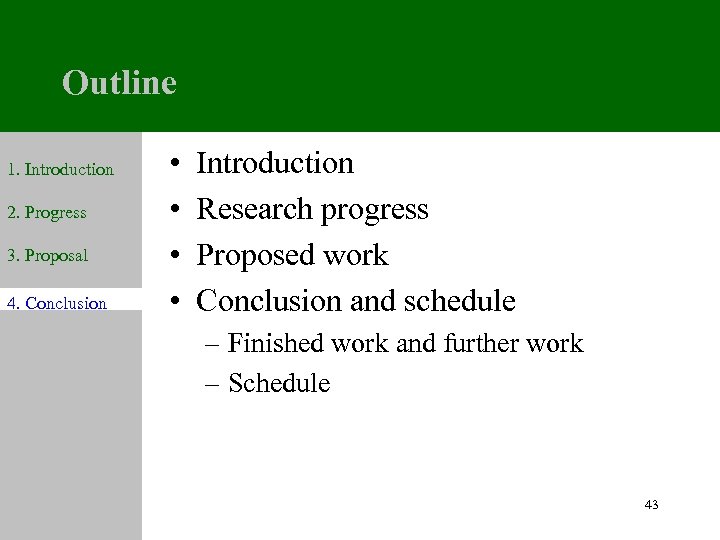
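For the non-balanced case, one simple way to sample the negatives is to keep every positive example and draw a random subset of negatives at a chosen ratio. The sketch below assumes labels encoded as 1 (positive) and anything else (negative); the function name `subsample_negatives` and the `neg_per_pos` parameter are hypothetical, and uniform random sampling is only one of several possible strategies.

```python
import random
from typing import List, Sequence, Tuple


def subsample_negatives(
    examples: Sequence[object],
    labels: Sequence[int],
    neg_per_pos: float = 1.0,
    seed: int = 0,
) -> Tuple[List[object], List[int]]:
    """Keep all positives and a random sample of negatives (original order preserved)."""
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y != 1]
    # Never request more negatives than are available.
    k = min(len(neg), int(round(neg_per_pos * len(pos))))
    keep = sorted(pos + rng.sample(neg, k))
    return [examples[i] for i in keep], [labels[i] for i in keep]
```

Repeating the draw with different seeds and comparing the selected feature subsets gives a rough check of the stability assumption listed under massive training data.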

Outline
• Introduction
• Research progress
• Proposed work
• Conclusion and schedule
  – Finished work and further work
  – Schedule

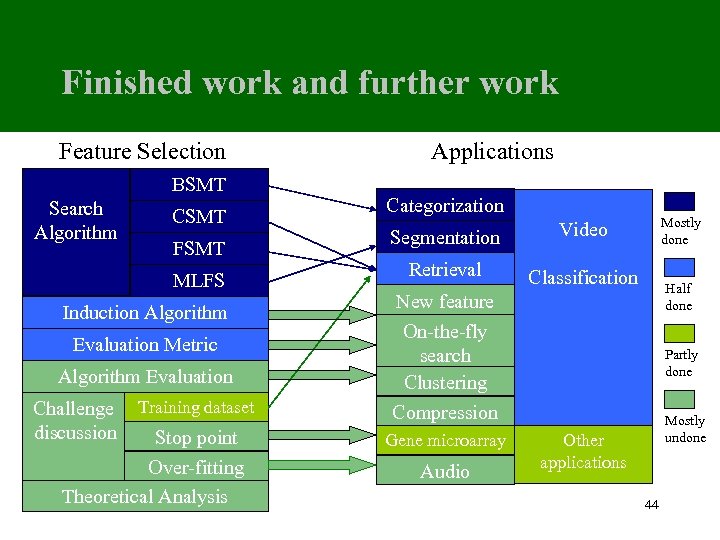

Finished work and further work
(Status diagram. Feature selection: search algorithm (BSMT, CSMT, FSMT, MLFS), induction algorithm, evaluation metric, algorithm evaluation. Challenge discussion: training dataset, stop point, over-fitting, theoretical analysis. Applications: video classification (categorization, segmentation, retrieval, new feature set, on-the-fly search, clustering, compression) and other applications (gene microarray, audio). Legend: mostly done, half done, partly done, mostly undone.)

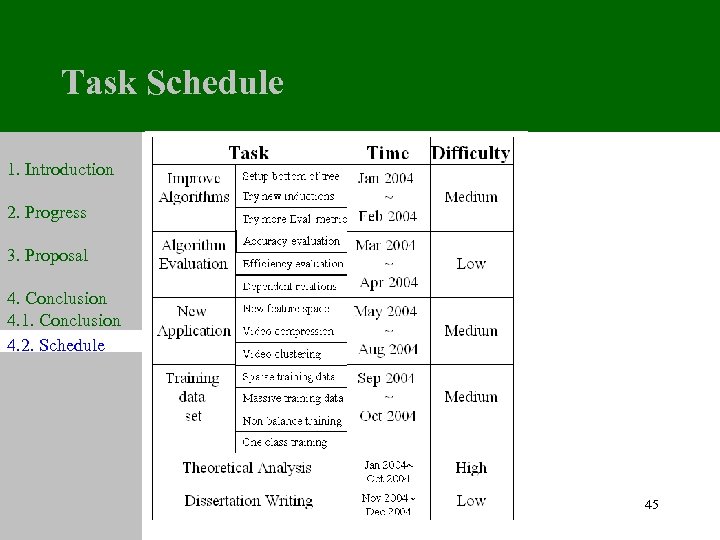

Task Schedule

Ph.D. Thesis Proposal
Thank you!