- Number of slides: 19

Perspectives on LHC Computing
José M. Hernández (CIEMAT, Madrid)
On behalf of the Spanish LHC Computing community
Jornadas CPAN 2013, Santiago de Compostela

The LHC Computing Challenge
§ In Run 1 (2010-2012) the Large Hadron Collider (LHC) delivered billions of recorded collisions to the experiments
  § ~100 PB of data stored on tape at CERN
§ The Worldwide LHC Computing Grid (WLCG) provides compute and storage resources for data processing, simulation and analysis
  § ~300k cores, ~200 PB disk, ~200 PB tape
§ The computing challenge was met with great success
  § Unprecedented data volume analyzed in record time, delivering great scientific results (e.g. Higgs boson discovery)
José Hernández LHC Computing Perspectives 2

Global effort, global success

Computing is part of the global effort
[Figure label: Computing]
José Hernández CMS Computing Upgrade and Evolution 28 October 2013, Seoul, Korea 4

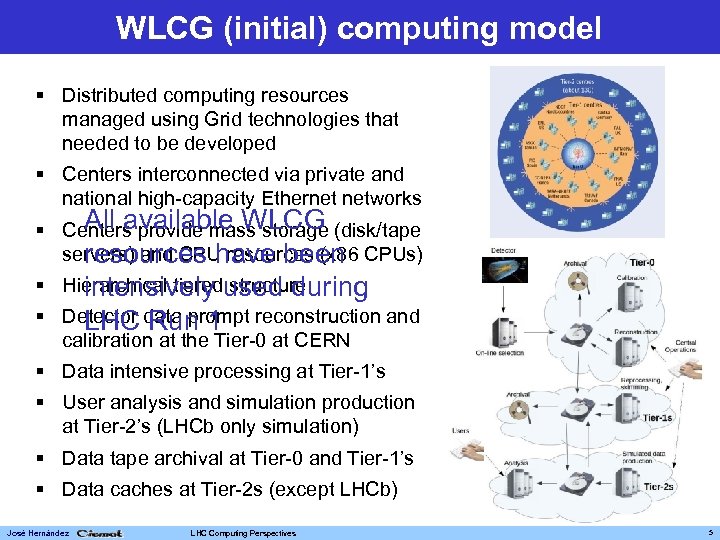

WLCG (initial) computing model
§ Distributed computing resources managed using Grid technologies that needed to be developed
§ Centers interconnected via private and national high-capacity Ethernet networks
§ Centers provide mass storage (disk/tape servers) and CPU resources (x86 CPUs)
§ Hierarchical tiered structure
  § Prompt reconstruction and calibration of detector data at the Tier-0 at CERN
  § Data-intensive processing at Tier-1s
  § User analysis and simulation production at Tier-2s (LHCb: simulation only)
  § Data tape archival at Tier-0 and Tier-1s
  § Data caches at Tier-2s (except LHCb)
[Callout: All available WLCG resources have been intensively used during LHC Run 1]
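The tier roles listed on this slide can be written down as a small lookup table. The sketch below is only an illustration: the role names, `TIER_ROLES`, `roles_for` and `tiers_for` are hypothetical labels chosen here, not part of any WLCG software.

```python
# Hypothetical encoding of the tier roles listed on the slide.
TIER_ROLES = {
    "Tier-0": {"prompt_reconstruction", "calibration", "tape_archival"},
    "Tier-1": {"data_intensive_processing", "tape_archival"},
    "Tier-2": {"user_analysis", "simulation", "data_cache"},
}

# LHCb exception from the slide: its Tier-2s run simulation only
# and do not host data caches.
LHCB_TIER2_ROLES = {"simulation"}


def roles_for(tier, experiment):
    """Return the set of roles a tier plays for a given experiment."""
    if experiment == "LHCb" and tier == "Tier-2":
        return set(LHCB_TIER2_ROLES)
    return set(TIER_ROLES[tier])


def tiers_for(role, experiment):
    """Return the tiers (in Tier-0, Tier-1, Tier-2 order) that play a role."""
    return [tier for tier in TIER_ROLES if role in roles_for(tier, experiment)]


print(tiers_for("tape_archival", "CMS"))
print(tiers_for("user_analysis", "LHCb"))
```

Keeping the LHCb exception in one place makes it easy to see how the initial model differs between experiments.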

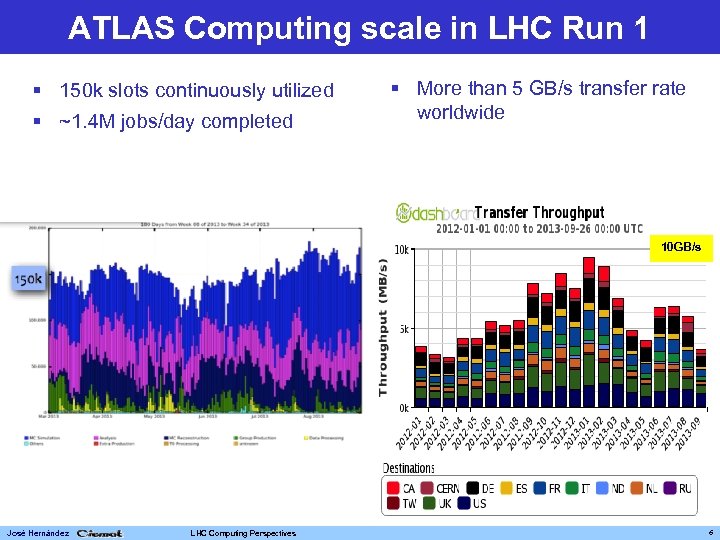

ATLAS Computing scale in LHC Run 1
§ 150k slots continuously utilized
§ ~1.4M jobs/day completed
§ More than 5 GB/s transfer rate worldwide
[Plot label: 10 GB/s]

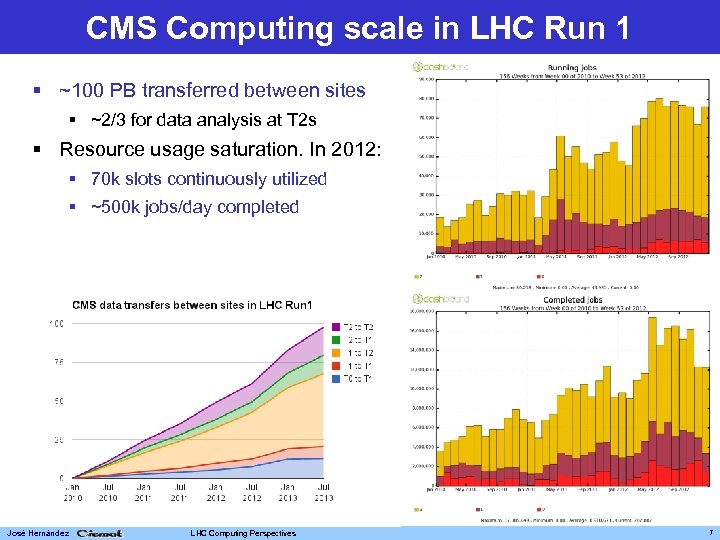

CMS Computing scale in LHC Run 1
§ ~100 PB transferred between sites
  § ~2/3 for data analysis at Tier-2s
§ Resource usage saturation. In 2012:
  § 70k slots continuously utilized
  § ~500k jobs/day completed
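The slot and job-rate figures quoted for ATLAS and CMS imply a typical job length. As a rough sketch, assuming steady state, fully occupied slots and one slot per job (assumptions made here, not statements from the slides), Little's law gives the mean time a job occupies a slot as slots divided by job completion rate. The function name `mean_job_hours` is hypothetical.

```python
def mean_job_hours(slots, jobs_per_day):
    """Mean wall-clock hours per job from Little's law (W = L / lambda).

    Assumes all slots are busy all day and each job uses one slot.
    """
    return slots * 24.0 / jobs_per_day


# Figures quoted on the slides (ATLAS for Run 1, CMS for 2012).
atlas_hours = mean_job_hours(slots=150_000, jobs_per_day=1.4e6)
cms_hours = mean_job_hours(slots=70_000, jobs_per_day=500_000)

print(f"ATLAS: ~{atlas_hours:.1f} h per job")
print(f"CMS:   ~{cms_hours:.1f} h per job")
```

Under these assumptions both experiments come out at a few hours per job, which is only an average over very different workflows.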

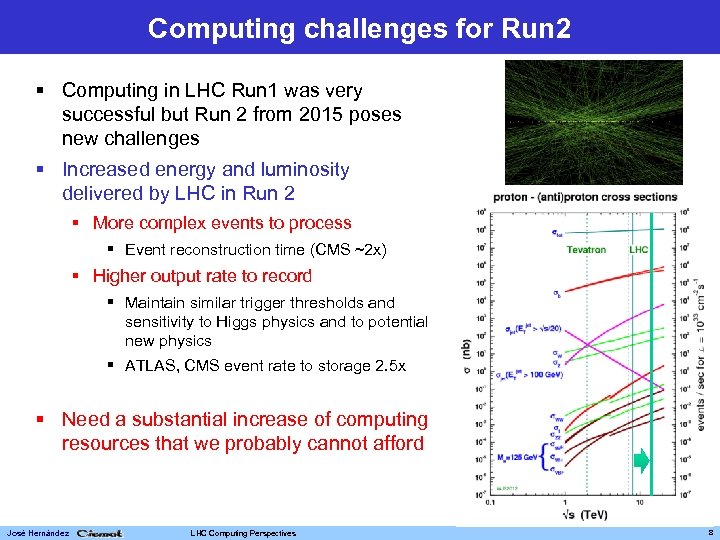

Computing challenges for Run 2
§ Computing in LHC Run 1 was very successful, but Run 2, starting in 2015, poses new challenges
§ Increased energy and luminosity delivered by the LHC in Run 2
  § More complex events to process
  § Longer event reconstruction time (CMS: ~2x)
§ Higher output rate to record
  § Maintain similar trigger thresholds and sensitivity to Higgs physics and to potential new physics
  § ATLAS, CMS event rate to storage: 2.5x
§ Needs a substantial increase of computing resources that we probably cannot afford
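A back-of-the-envelope reading of the two factors on this slide: if the CPU needed for prompt reconstruction scales as (time per event) times (events per second), the two increases multiply. This multiplication and the unchanged event size are assumptions made for illustration; the slide quotes only the individual factors. Function names are hypothetical.

```python
# Scale factors quoted on the slide (Run 2 relative to Run 1).
RECO_TIME_FACTOR = 2.0   # CMS event reconstruction time
EVENT_RATE_FACTOR = 2.5  # ATLAS/CMS event rate to storage


def prompt_reco_cpu_factor(time_factor, rate_factor):
    """CPU need for prompt reconstruction, assuming it scales as time x rate."""
    return time_factor * rate_factor


def raw_storage_factor(rate_factor, event_size_factor=1.0):
    """Storage need for recorded data; event size assumed unchanged by default."""
    return rate_factor * event_size_factor


print(prompt_reco_cpu_factor(RECO_TIME_FACTOR, EVENT_RATE_FACTOR))  # 5.0
print(raw_storage_factor(EVENT_RATE_FACTOR))  # 2.5
```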

Upgrading LHC Computing in LS1
§ The shutdown period is a valuable opportunity to assess
  § Lessons and operational experiences of Run 1
  § Computing demands of Run 2
  § The technical and cost evolution of computing
§ Undertake intensive planning and development to prepare LHC Computing for 2015 and beyond
  § While sustaining steady-state, full-scale operations
  § With an assumption of constrained funding
§ This has been happening internally in the experiments and collaboratively with CERN IT, WLCG, and common software and computing projects
§ Upgrade in parallel with accelerator and detector upgrades to push the frontiers of HEP

Computing strategy for Run 2
§ Increase resources in WLCG as much as possible
  § Try to conform to the constrained budget situation
§ Make more efficient and flexible use of the available resources
§ Reduce CPU and storage needs
  § Fewer reprocessing passes, fewer simulated events, more compact data format, reduced data replication factor
§ Intelligent dynamic data placement
  § Automatic replication of hot data and deletion of cold data
§ Break down the boundaries between the computing tiers
  § Run reconstruction, simulation and analysis at Tier-1s and Tier-2s interchangeably
  § Tier-1s as an extension of the Tier-0
  § Keep higher service level and custodial tape storage at Tier-1s
§ Centralized production of group analysis datasets
  § Shrink 'chaotic analysis' to only what is really user-specific
  § Remove redundancies in processing and storage, reducing operational workloads while improving turnaround for users
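The "replicate hot data, delete cold data" idea can be sketched as a simple popularity rule. This is a minimal illustration, not the policy of any experiment: the `Dataset` fields, the thresholds and the rule of never deleting the last replica are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    accesses_last_week: int  # hypothetical popularity metric
    replicas: int            # current number of disk replicas


def plan_placement(datasets, hot_threshold=100, cold_threshold=0,
                   min_replicas=1, max_replicas=5):
    """Return (action, dataset name) pairs for one placement cycle.

    Hot datasets gain a replica (up to max_replicas); cold datasets
    lose one (never going below min_replicas).
    """
    actions = []
    for ds in datasets:
        if ds.accesses_last_week >= hot_threshold and ds.replicas < max_replicas:
            actions.append(("replicate", ds.name))
        elif ds.accesses_last_week <= cold_threshold and ds.replicas > min_replicas:
            actions.append(("delete_replica", ds.name))
    return actions


catalog = [
    Dataset("hot_sample", accesses_last_week=500, replicas=2),
    Dataset("cold_sample", accesses_last_week=0, replicas=3),
    Dataset("warm_sample", accesses_last_week=10, replicas=2),
    Dataset("last_copy", accesses_last_week=0, replicas=1),
]
print(plan_placement(catalog))
```

Running the planner periodically would let the disk replica count follow demand instead of being fixed in advance.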

Access to new resources for Run 2
§ Access to opportunistic resources
  § HPC clusters, academic or commercial clouds, volunteer computing
  § Significant increase in capacity at low cost (satisfy capacity peaks)
§ Use the HLT farm for offline data processing
  § A significant resource (>10k slots)
  § During extended periods with no data taking and even inter-fill periods
§ Adopt advanced architectures
  § Processing in Run 1 was done under Enterprise Linux on x86 CPUs
  § Many-core processors, low-power CPUs, GPU environments
  § Challenging heterogeneous environment
  § Parallelization of processing applications will be key

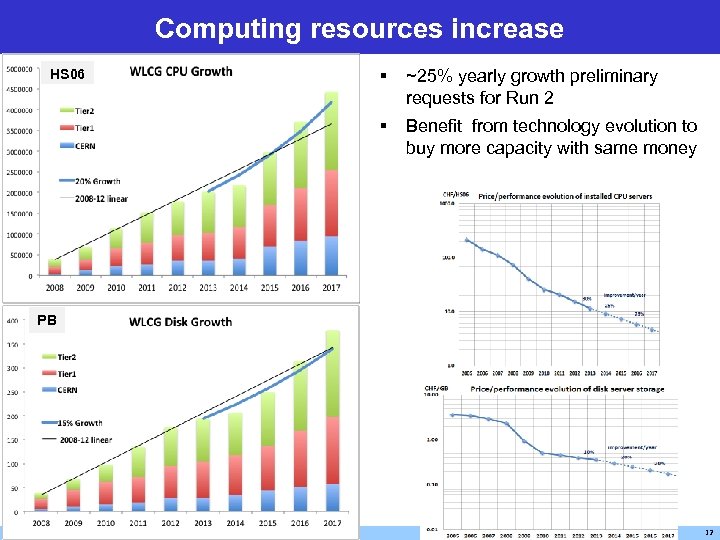

Computing resources increase
§ Preliminary requests for Run 2: ~25% yearly growth
§ Benefit from technology evolution to buy more capacity with the same money
[Plot axis labels: HS06 (CPU), PB (storage)]
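To put the ~25% yearly growth in perspective, the compounding can be worked out directly. The sketch below assumes constant growth every year; the target factor of 5 in the example is an illustrative number chosen here, not a figure from this slide.

```python
import math


def capacity_after(years, yearly_growth=0.25):
    """Capacity relative to today after compounding yearly growth."""
    return (1.0 + yearly_growth) ** years


def years_to_reach(factor, yearly_growth=0.25):
    """Years of constant yearly growth needed to multiply capacity by factor."""
    return math.log(factor) / math.log(1.0 + yearly_growth)


print(f"After 3 years: x{capacity_after(3):.2f}")
print(f"Years to reach x5: {years_to_reach(5):.1f}")
```

With these assumptions three years of growth roughly doubles capacity, while a five-fold increase would take about seven years, which is why growth alone is paired with efficiency gains in the strategy.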

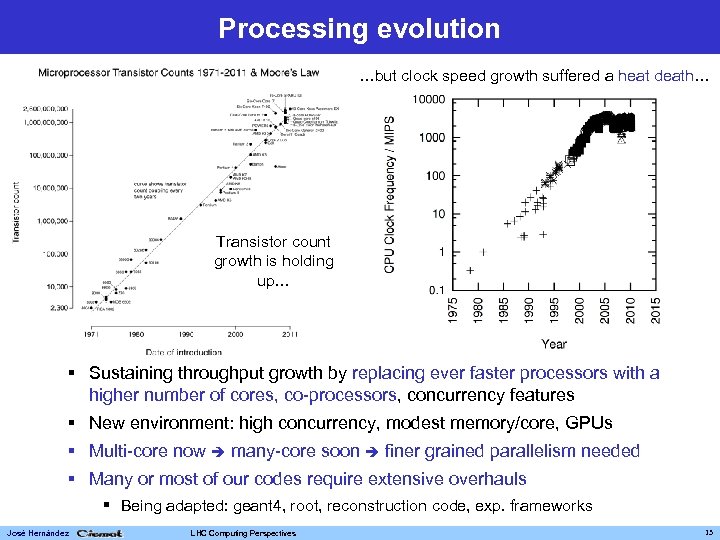

Processing evolution
Transistor count growth is holding up… but clock speed growth suffered a heat death…
§ Sustaining throughput growth by replacing ever-faster processors with a higher number of cores, co-processors and concurrency features
§ New environment: high concurrency, modest memory/core, GPUs
§ Multi-core now, many-core soon: finer-grained parallelism needed
§ Many or most of our codes require extensive overhauls
§ Being adapted: Geant4, ROOT, reconstruction code, experiment frameworks
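The simplest form of the parallelism mentioned here is event-level: collision events are independent, so a pool of workers can process them concurrently. The sketch below shows only the structure. `reconstruct` is a hypothetical placeholder, and Python threads do not speed up CPU-bound work; the frameworks named on the slide are not written this way.

```python
from concurrent.futures import ThreadPoolExecutor


def reconstruct(event):
    """Placeholder per-event work: sum the hit energies of one event."""
    return sum(event)


def process_events(events, workers=4):
    """Process independent events concurrently, preserving input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(reconstruct, events))


# Three toy events, each a list of hit energies.
events = [[1.0, 2.0], [3.0], [4.0, 5.0, 6.0]]
print(process_events(events))  # [3.0, 3.0, 15.0]
```

Finer-grained parallelism, as called for on the slide, would additionally split the work inside a single event, which is much harder to retrofit into existing code.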

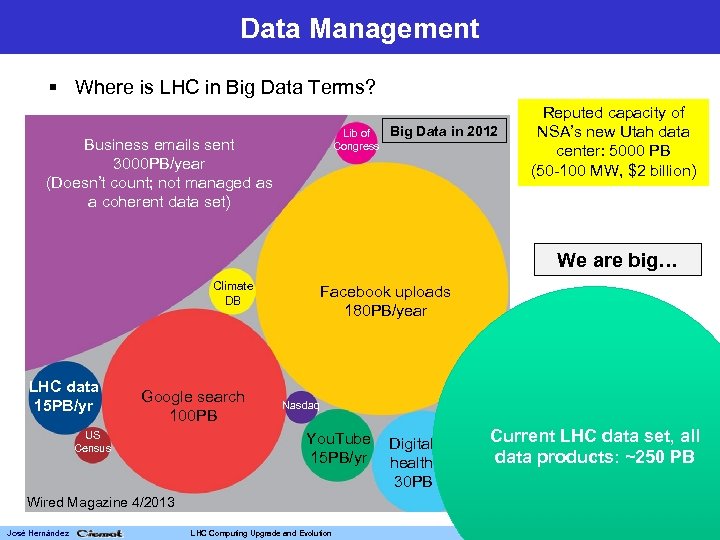

Data Management
§ Where is LHC in Big Data terms?
Big Data in 2012 (source: Wired Magazine 4/2013)
§ Business emails sent: 3000 PB/year (doesn't count; not managed as a coherent data set)
§ Reputed capacity of NSA's new Utah data center: 5000 PB (50-100 MW, $2 billion)
§ Facebook uploads: 180 PB/year
§ Google search: 100 PB
§ Digital health: 30 PB
§ LHC data: 15 PB/yr
§ YouTube: 15 PB/yr
§ Also shown, without figures in the extracted text: Library of Congress, Climate DB, US Census, Nasdaq
§ Current LHC data set, all data products: ~250 PB
We are big…
José Hernández LHC Computing Upgrade and Evolution 14

Data Management evolution
§ Data access model during LHC Run 1
  § Pre-place and replicate data at sites, send jobs to the data
§ We need more efficient distributed data handling, lower disk storage demands and better use of available CPU resources
§ The network has been very reliable and has experienced a large increase in bandwidth
§ (Aspire to) send only the data you need, only where you need it (and cache it when it arrives)
§ Towards transparent distributed data access enabled by the network
§ Industry has used this approach for years in content delivery networks
§ Already successful approaches during Run 1…

Data Management evolution in Run 1
§ Scalable access to conditions data
  § Frontier for scalable distributed DB access
  § Caching web proxies provide hierarchical, highly scalable, cache-based data access
§ Experiment software provisioning to the worker nodes
  § CernVM File System (CVMFS)
§ Evolve towards a distributed data federation…
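The hierarchical caching idea behind this slide can be sketched with a chain of in-memory caches: a request goes to the nearest proxy, and only a miss travels further up towards the central database. This is a toy model under stated assumptions. `CachingProxy` and `origin` are hypothetical names, and real deployments use HTTP proxy servers rather than Python objects.

```python
class CachingProxy:
    """Toy cache that forwards misses to an upstream proxy or origin."""

    def __init__(self, upstream):
        # upstream is either another CachingProxy or a callable origin server.
        self.upstream = upstream
        self.cache = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.cache:
            self.hits += 1
            return self.cache[key]
        self.misses += 1
        if isinstance(self.upstream, CachingProxy):
            value = self.upstream.get(key)
        else:
            value = self.upstream(key)
        self.cache[key] = value
        return value


origin_requests = []


def origin(key):
    """Stand-in for the central conditions database."""
    origin_requests.append(key)
    return f"conditions for {key}"


regional = CachingProxy(origin)   # shared by several sites
site_a = CachingProxy(regional)   # one proxy per site
site_b = CachingProxy(regional)

site_a.get("run-1")  # misses at site and regional level, reaches the origin
site_a.get("run-1")  # served by the site cache
site_b.get("run-1")  # served by the regional cache
print(len(origin_requests))  # 1
```

However many worker nodes ask for the same conditions payload, the central database sees each distinct request only once per cache hierarchy.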

Data Management evolution
§ Distributed data federation
  § A collection of disparate storage resources transparently accessible across a wide area via a common namespace (CMS AAA, ATLAS FAX)
  § Needs efficient remote I/O
  § CMS has invested heavily in I/O optimizations within the application to allow efficient reading of the data over the (long-latency) network using the xrootd technology while maintaining a high CPU efficiency
  § Extending initial use cases: fallback on local access failure, overflow from busy sites, interactive access to data, use of diskless sites
§ Interesting approach: ATLAS event service
  § Ask for exactly what you need, have it delivered by a service that knows how to get it to you efficiently
  § Return the outputs in a ~steady stream, such that a worker node (WN) can be lost with little lost processing
  § Well suited to transient opportunistic resources and volunteer computing, where preemption cannot be avoided
  § Well suited for high-CPU, low-I/O workflows
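The "fallback on local access failure" use case can be sketched as a try-local-then-remote pattern. Everything below is a stand-in: `LocalStorageError`, `open_with_fallback` and the two dictionary-backed openers are hypothetical names for illustration, and no real xrootd client call is shown.

```python
class LocalStorageError(Exception):
    """Raised by the stand-in local opener when a file is unavailable."""


def open_with_fallback(lfn, local_open, federation_open):
    """Try the site's own storage first, then the federation.

    Returns (data, source), where source is "local" or "federation".
    """
    try:
        return local_open(lfn), "local"
    except LocalStorageError:
        return federation_open(lfn), "federation"


# In-memory stand-ins for a site's storage and the wider federation.
LOCAL_FILES = {"/store/data/a.root": b"local copy of a"}
FEDERATED_FILES = {
    "/store/data/a.root": b"remote copy of a",
    "/store/data/b.root": b"remote copy of b",
}


def local_open(lfn):
    if lfn not in LOCAL_FILES:
        raise LocalStorageError(lfn)
    return LOCAL_FILES[lfn]


def federation_open(lfn):
    # A KeyError here means the file is in neither place.
    return FEDERATED_FILES[lfn]


print(open_with_fallback("/store/data/a.root", local_open, federation_open)[1])
print(open_with_fallback("/store/data/b.root", local_open, federation_open)[1])
```

Because both openers take the same logical file name, the job does not need to know where the data ends up being read from, which is the point of the common namespace.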

From Grid to Clouds
§ Turning computing into a utility providing infrastructure as a service
§ Clouds evolve, complement and extend the Grid
§ Decrease heterogeneity seen by the user (hardware virtualization)
  § VMs provide a uniform user interface to resources
  § Integrate diverse resources manageably
  § Isolate software from physical hardware
§ Dynamic provisioning of resources
  § New resources (commercial, research clouds)
§ Huge community behind Cloud software
§ Grid of clouds already used by the LHC experiments
  § Several sites provide a Cloud interface
  § ATLAS: ~450k production jobs run on Google resources over a few weeks
  § Tests with Amazon EC2 spot pricing: roughly economically viable

Conclusions
§ LHC computing performed extremely well at all levels in Run 1
  § We know how to deliver, adapting where necessary
  § Excellent networks, flexible and adaptable computing models and software systems paid off in exploiting resources
§ LHC computing needs to face new challenges for LHC Run 2
  § Large increase of computing resources required from 2015
  § Live within constrained budgets
  § Use the resources we own as fully and efficiently as possible
  § Support the major development program required
  § Access opportunistic and cloud resources, explore new computer and processing architectures
  § Evolve towards dynamic data access and distributed parallel computing
§ Explosive growth in data and (highly granular) processors in the wider world gives us solid ground for success in our evolution path
§ Evolve towards a more dynamic, efficient and flexible system
