- Number of slides: 56

Personalizing Information Search: Understanding Users and their Interests
Diane Kelly
School of Information & Library Science
University of North Carolina
dianek@email.unc.edu
IPAM | 04 October 2007

Background: IR and TREC
§ What is IR?
§ Who works on problems in IR?
§ Where can I find the most recent work in IR?
§ A TREC primer

Background: Personalization
§ Personalization is a process in which retrieval is customized to the individual (not one-size-fits-all searching)
§ Hans Peter Luhn was one of the first people to personalize IR, through selective dissemination of information (SDI), now called ‘filtering’
§ Profiles and user models are often employed to ‘house’ data about users and represent their interests
§ Figuring out how to populate and maintain the profile or user model is a hard problem
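
The SDI idea can be illustrated as matching each incoming document against a stored interest profile and passing on only the documents that score well. This is a minimal sketch, not Luhn’s original method: it assumes a profile of weighted terms, a crude tokenizer, and a hypothetical score threshold; `score` and `disseminate` are illustrative names.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphabetic tokens (a deliberately crude tokenizer)."""
    return re.findall(r"[a-z]+", text.lower())


def score(profile: dict[str, float], document: str) -> float:
    """Sum of each profile weight times how often that term occurs in the document."""
    counts = Counter(tokenize(document))
    return sum(weight * counts[term] for term, weight in profile.items())


def disseminate(profile: dict[str, float], documents: list[str], threshold: float) -> list[str]:
    """Keep only the documents whose profile score reaches the (hypothetical) threshold."""
    return [doc for doc in documents if score(profile, doc) >= threshold]


profile = {"retrieval": 1.0, "feedback": 2.0}  # hypothetical interest profile
incoming = ["Relevance feedback improves retrieval", "Weather forecast for Chapel Hill"]
print(disseminate(profile, incoming, threshold=2.0))  # ['Relevance feedback improves retrieval']
```

The first document scores 2.0 + 1.0 = 3.0 and is passed on; the weather page scores 0. Keeping this profile accurate as interests drift is exactly the "hard problem" the slide mentions.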

Major Approaches
§ Explicit Feedback
§ Implicit Feedback
§ User’s desktop

Explicit Feedback

Explicit Feedback

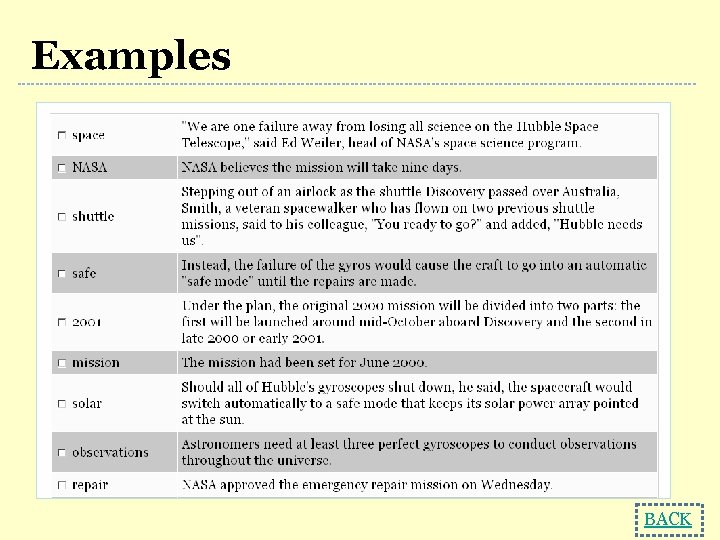

Explicit Feedback
§ Term relevance feedback is one of the most widely used and studied explicit feedback techniques
§ Typical relevance feedback scenarios (examples)
§ Systems-centered research has found that relevance feedback works (including pseudo-relevance feedback)
§ User-centered research has found mixed results about its effectiveness
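
The slide does not name a specific algorithm. A classic way to turn relevance judgments into a revised query is the Rocchio formula, which moves the query vector toward the centroid of documents marked relevant and away from the centroid of those marked non-relevant. This is a minimal sketch, assuming sparse term-weight vectors stored as dictionaries and the commonly cited weights alpha = 1.0, beta = 0.75, gamma = 0.15 (illustrative defaults, not values from the talk). Pseudo-relevance feedback applies the same idea with the top-ranked documents assumed relevant and no non-relevant set.

```python
from collections import defaultdict

Vector = dict[str, float]  # sparse term -> weight


def rocchio(query: Vector, relevant: list[Vector], nonrelevant: list[Vector],
            alpha: float = 1.0, beta: float = 0.75, gamma: float = 0.15) -> Vector:
    """Return q' = alpha*q + beta*centroid(relevant) - gamma*centroid(nonrelevant)."""
    updated: defaultdict = defaultdict(float)
    for term, weight in query.items():
        updated[term] += alpha * weight
    # Adding each document's weights divided by the set size adds the set's centroid.
    for docs, coef in ((relevant, beta), (nonrelevant, -gamma)):
        for doc in docs:
            for term, weight in doc.items():
                updated[term] += coef * weight / len(docs)
    # Terms pushed to zero or below are dropped, a common convention.
    return {term: weight for term, weight in updated.items() if weight > 0}


new_query = rocchio({"fever": 1.0},
                     relevant=[{"fever": 1.0, "tick": 1.0}],
                     nonrelevant=[{"weather": 1.0}])
print(new_query)  # {'fever': 1.75, 'tick': 0.75}
```

The user only has to mark documents; the new term ("tick") enters the query automatically, which is also why poor suggested terms or unclear context (next slide) can undermine the technique.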

Explicit Feedback
§ Terms are not presented in context, so it may be hard for users to understand how they can help
§ The quality of suggested terms is not always good
§ Users don’t have the additional cognitive resources to engage in explicit feedback
§ Users are too lazy to provide feedback
§ There are questions about the sustainability of explicit feedback for long-term modeling

Explicit Feedback § Terms are not presented in context so it may be hard for users to understand how they can help § Quality of terms suggested is not always good § Users don’t have the additional cognitive resources to engage in explicit feedback § Users are too lazy to provide feedback § Questions about the sustainability of explicit feedback for long-term modeling

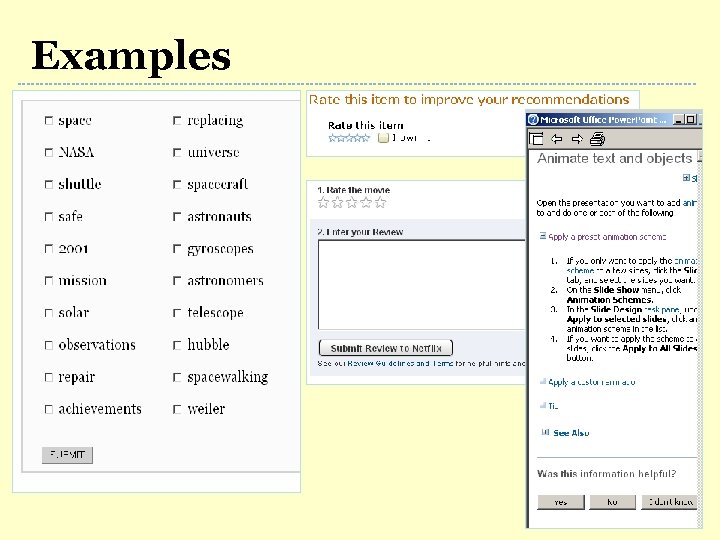

Examples

Examples

Examples BACK

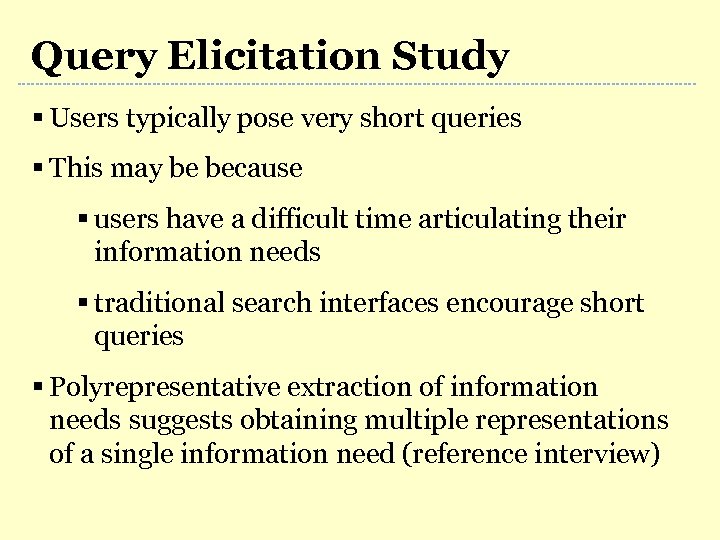

Query Elicitation Study
§ Users typically pose very short queries
§ This may be because:
  § users have a difficult time articulating their information needs
  § traditional search interfaces encourage short queries
§ Polyrepresentative extraction of information needs suggests obtaining multiple representations of a single information need (reference interview)

Query Elicitation Study § Users typically pose very short queries § This may be because § users have a difficult time articulating their information needs § traditional search interfaces encourage short queries § Polyrepresentative extraction of information needs suggests obtaining multiple representations of a single information need (reference interview)

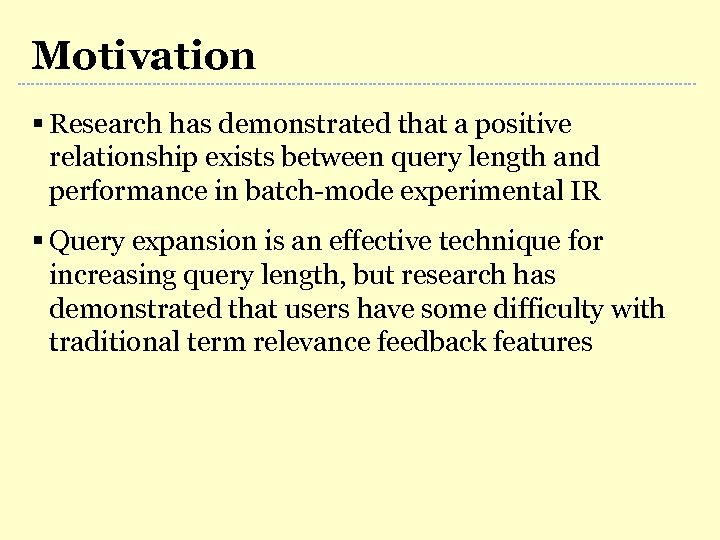

Motivation
§ Research has demonstrated that a positive relationship exists between query length and performance in batch-mode experimental IR
§ Query expansion is an effective technique for increasing query length, but research has demonstrated that users have some difficulty with traditional term relevance feedback features

Elicitation Form: [Already Know] [Why Know] [Keywords]

Elicitation Form [Already Know] [Why Know] [Keywords]

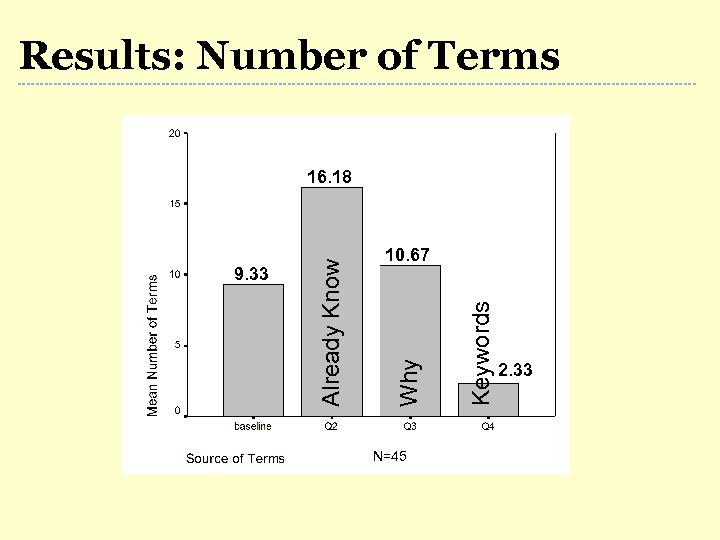

Results: Number of Terms (N=45): Keywords 10.67, Why 9.33, Already Know 16.18, 2.33

Results: Number of Terms N=45 Keywords 10. 67 Why 9. 33 Already Know 16. 18 2. 33

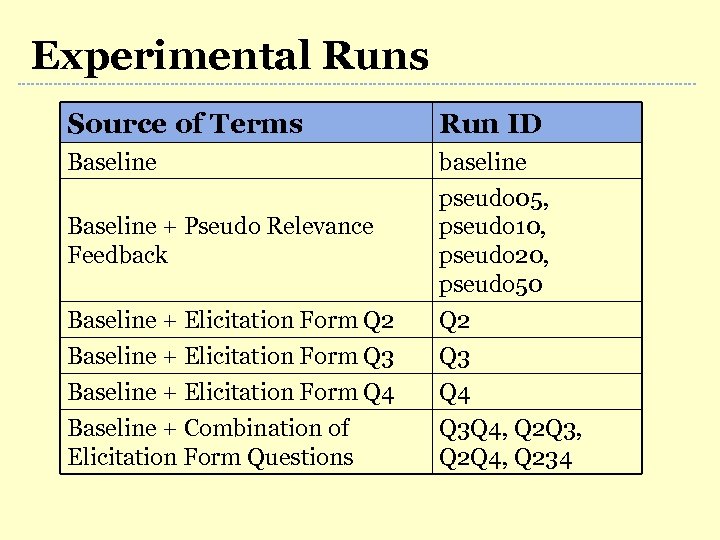

Experimental Runs

| Source of Terms | Run ID |
|---|---|
| Baseline | baseline |
| Baseline + Pseudo Relevance Feedback | pseudo05, pseudo10, pseudo20, pseudo50 |
| Baseline + Elicitation Form Q2 | Q2 |
| Baseline + Elicitation Form Q3 | Q3 |
| Baseline + Elicitation Form Q4 | Q4 |
| Baseline + Combination of Elicitation Form Questions | Q3Q4, Q2Q3, Q2Q4, Q234 |
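
The pseudo05 to pseudo50 runs vary a pseudo-relevance-feedback parameter, but the slide does not say whether the number counts feedback documents or expansion terms. The sketch below assumes it is the number of expansion terms, takes them from the top-ranked documents of the baseline run by raw frequency, and is only an illustration; real systems usually weight candidate terms (for example by tf-idf) rather than simply counting them. `pseudo_relevance_expand` is a hypothetical name.

```python
from collections import Counter


def pseudo_relevance_expand(query_terms: list[str], ranked_docs: list[list[str]],
                            k_docs: int = 10, n_terms: int = 10) -> list[str]:
    """Append the n_terms most frequent new terms from the top k_docs documents.

    The top-ranked documents of the baseline run are *assumed* relevant; no
    user judgment is involved, which is what makes the feedback "pseudo".
    """
    counts: Counter = Counter()
    for doc in ranked_docs[:k_docs]:
        counts.update(doc)
    seen = set(query_terms)
    # most_common() sorts by count; ties keep first-encountered order.
    candidates = [term for term, _ in counts.most_common() if term not in seen]
    return query_terms + candidates[:n_terms]


ranked = [["fever", "tick", "bite"], ["tick", "rash"], ["weather"]]
print(pseudo_relevance_expand(["fever"], ranked, k_docs=2, n_terms=1))  # ['fever', 'tick']
```

Under this assumption, the four pseudo runs would correspond to n_terms = 5, 10, 20, and 50, while the Q runs append a subject’s own elicitation-form responses instead of automatically chosen terms.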

Experimental Runs Source of Terms Run ID Baseline baseline pseudo 05, pseudo 10, pseudo 20, pseudo 50 Baseline + Pseudo Relevance Feedback Baseline + Elicitation Form Q 2 Baseline + Elicitation Form Q 3 Baseline + Elicitation Form Q 4 Baseline + Combination of Elicitation Form Questions Q 2 Q 3 Q 4 Q 3 Q 4, Q 2 Q 3, Q 2 Q 4, Q 234

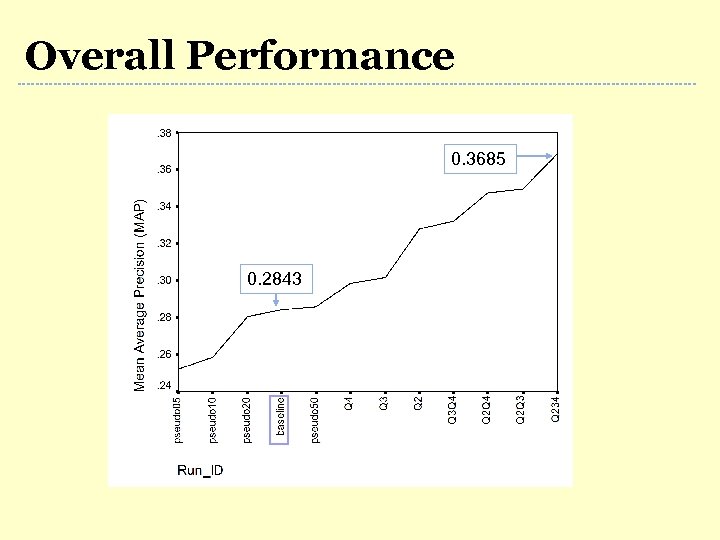

Overall Performance: 0.3685, 0.2843

Overall Performance 0. 3685 0. 2843

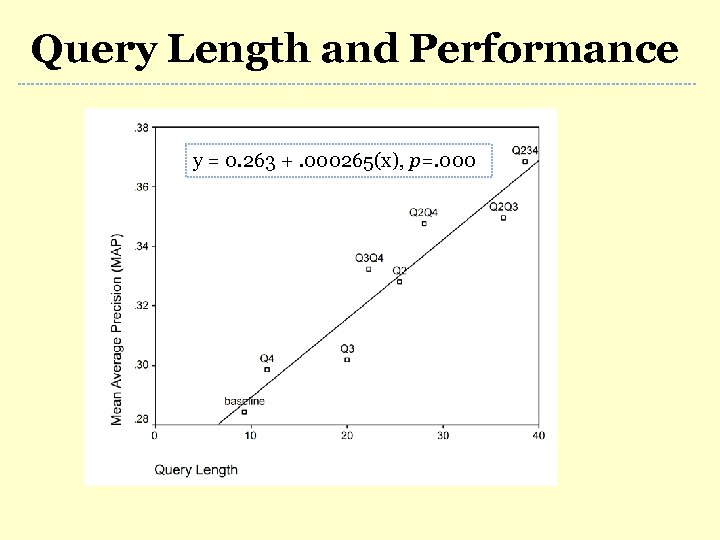

Query Length and Performance: y = 0.263 + 0.000265x, p < .001
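
The regression on this slide has the form y = a + bx, with performance as y and query length as x. Below is a minimal ordinary-least-squares sketch of how such an intercept and slope are estimated. It does not compute the p-value, and the example points are synthetic, not the study’s data.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = a + b*x; returns (intercept a, slope b)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Synthetic points lying exactly on the slide's line, so the fit recovers it.
lengths = [10.0, 100.0, 500.0, 1000.0]
scores = [0.263 + 0.000265 * x for x in lengths]
a, b = fit_line(lengths, scores)
print(f"y = {a:.3f} + {b:.6f}x")  # y = 0.263 + 0.000265x
```

Read literally, the fitted slope means that 1,000 additional units of query length raise predicted performance by about 0.265.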

Query Length and Performance y = 0. 263 +. 000265(x), p=. 000

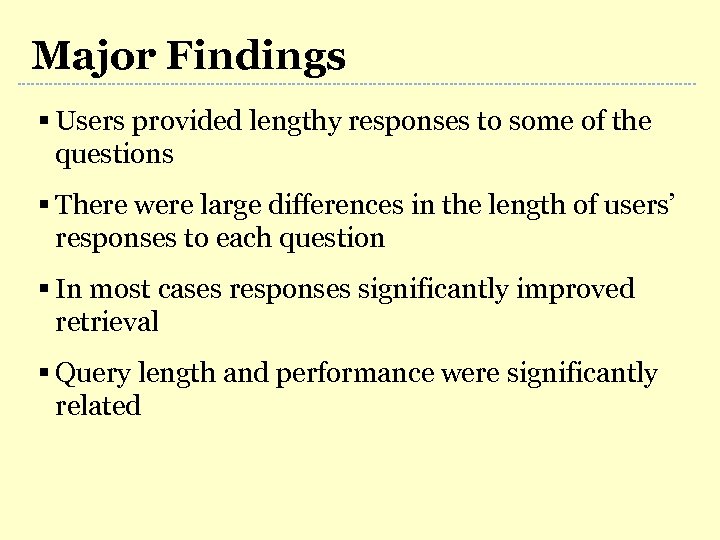

Major Findings
§ Users provided lengthy responses to some of the questions
§ There were large differences in the length of users’ responses to each question
§ In most cases, responses significantly improved retrieval
§ Query length and performance were significantly related

Implicit Feedback

Implicit Feedback
§ What is it? Information about users, their needs and document preferences that can be obtained unobtrusively, by watching users’ interactions and behaviors with systems
§ What are some examples?
  § Examine: Select, View, Listen, Scroll, Find, Query, Cumulative measures
  § Retain: Print, Save, Bookmark, Purchase, Email
  § Reference: Link, Cite
  § Annotate/Create: Mark up, Type, Edit, Organize, Label

Implicit Feedback
§ Why is it important?
  § It is generally believed that users are unwilling to engage in explicit relevance feedback
  § It is unlikely that users can maintain their profiles over time
  § Users generate large amounts of data each time they engage in online information-seeking activities, and evidence of what they are ‘interested’ in is somewhere in this data

Implicit Feedback
§ What do we “know” about it?
  § There seems to be a positive correlation between selection (click-through) and relevance
  § There seems to be a positive correlation between display time and relevance
§ What is problematic about it?
  § Much of the research has been based on incomplete data and general behavior
  § It has not considered the impact of contextual variables, such as task and a user’s familiarity with a topic, on behaviors
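
Claims such as “a positive correlation between display time and relevance” usually rest on a correlation coefficient. Below is a minimal sketch of Pearson’s r, assuming paired lists such as per-document display times and usefulness ratings. The inputs in the usage lines are made up. As the slide warns, a correlation pooled over users and tasks can hide very different per-user patterns.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two paired samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)


# Made-up display times (seconds) and usefulness ratings for five pages.
display_times = [5.0, 12.0, 30.0, 45.0, 90.0]
usefulness = [2.0, 3.0, 5.0, 4.0, 7.0]
print(round(pearson_r(display_times, usefulness), 2))
```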

Implicit Feedback Study
§ To investigate:
  § the relationship between behaviors and relevance
  § the relationship between behaviors and context
§ To develop a method for studying and measuring behaviors, context and relevance in a natural setting, over time

Method
§ Approach: naturalistic and longitudinal, but with some control
§ Subjects/Cases: 7 Ph.D. students
§ Study period: 14 weeks
§ Compensation: new laptops and printers

Method § Approach: naturalistic and longitudinal, but some control § Subjects/Cases: 7 Ph. D. students § Study period: 14 weeks § Compensation: new laptops and printers

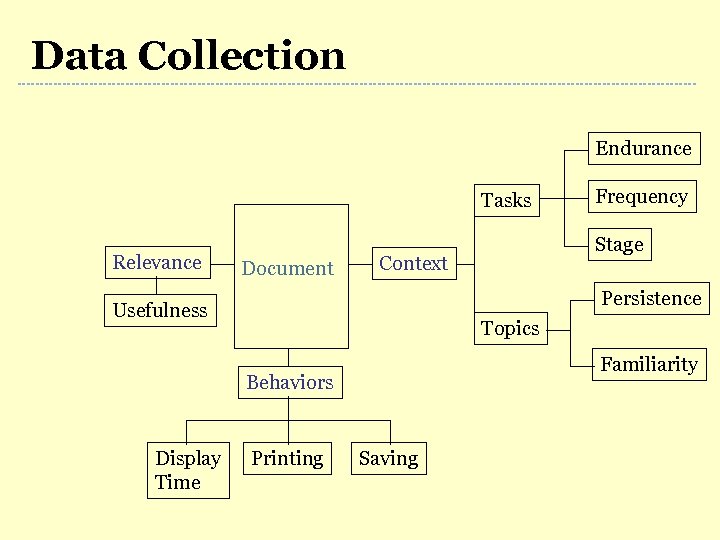

Data Collection
§ Context
  § Tasks: Endurance, Frequency, Stage
  § Topics: Persistence, Familiarity
§ Relevance: Document Usefulness
§ Behaviors: Display Time, Printing, Saving

Data Collection Endurance Tasks Relevance Document Stage Context Persistence Usefulness Topics Familiarity Behaviors Display Time Frequency Printing Saving

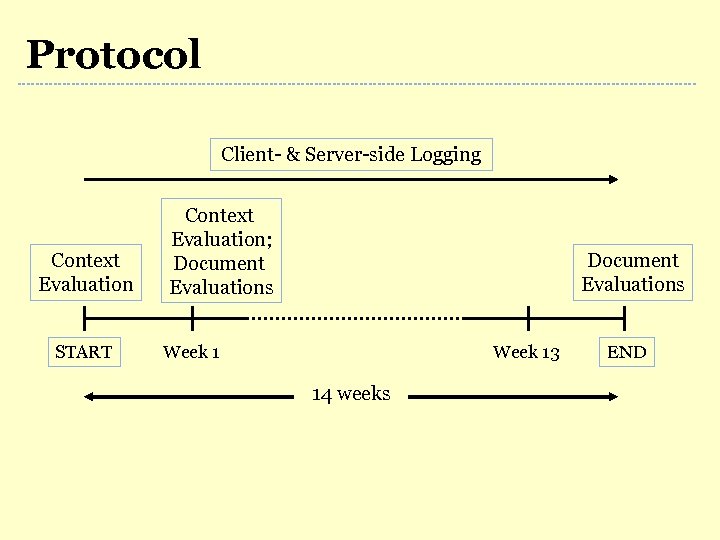

Protocol (timeline figure: START to END over 14 weeks, with Week 13 marked): client- & server-side logging; context evaluation; context evaluation and document evaluations

Protocol Client- & Server-side Logging Context Evaluation START Context Evaluation; Document Evaluations Week 13 14 weeks END

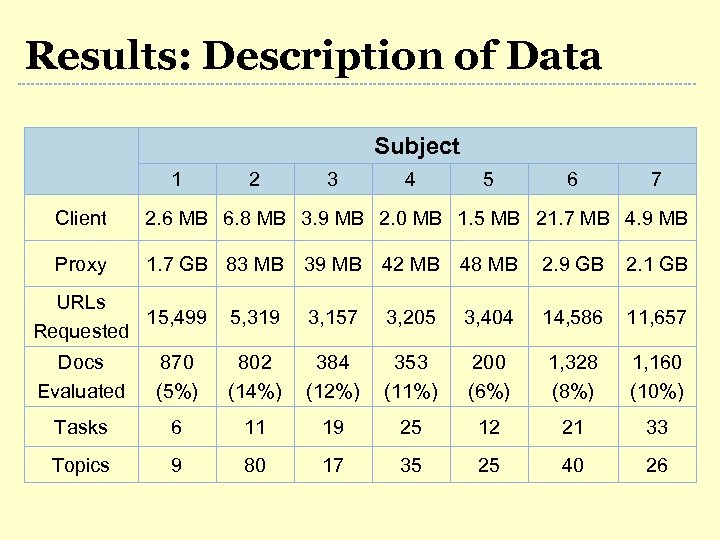

Results: Description of Data

| Subject | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Client | 2.6 MB | 6.8 MB | 3.9 MB | 2.0 MB | 1.5 MB | 21.7 MB | 4.9 MB |
| Proxy | 1.7 GB | 83 MB | 39 MB | 42 MB | 48 MB | 2.9 GB | 2.1 GB |
| URLs Requested | 15,499 | 5,319 | 3,157 | 3,205 | 3,404 | 14,586 | 11,657 |
| Docs Evaluated | 870 (5%) | 802 (14%) | 384 (12%) | 353 (11%) | 200 (6%) | 1,328 (8%) | 1,160 (10%) |
| Tasks | 6 | 11 | 19 | 25 | 12 | 21 | 33 |
| Topics | 9 | 80 | 17 | 35 | 25 | 40 | 26 |

Results: Description of Data Subject 1 2 3 4 5 6 7 Client 2. 6 MB 6. 8 MB 3. 9 MB 2. 0 MB 1. 5 MB 21. 7 MB 4. 9 MB Proxy 1. 7 GB 83 MB 39 MB 42 MB 48 MB 2. 9 GB 2. 1 GB URLs 15, 499 Requested 5, 319 3, 157 3, 205 3, 404 14, 586 11, 657 Docs Evaluated 870 (5%) 802 (14%) 384 (12%) 353 (11%) 200 (6%) 1, 328 (8%) 1, 160 (10%) Tasks 6 11 19 25 12 21 33 Topics 9 80 17 35 25 40 26

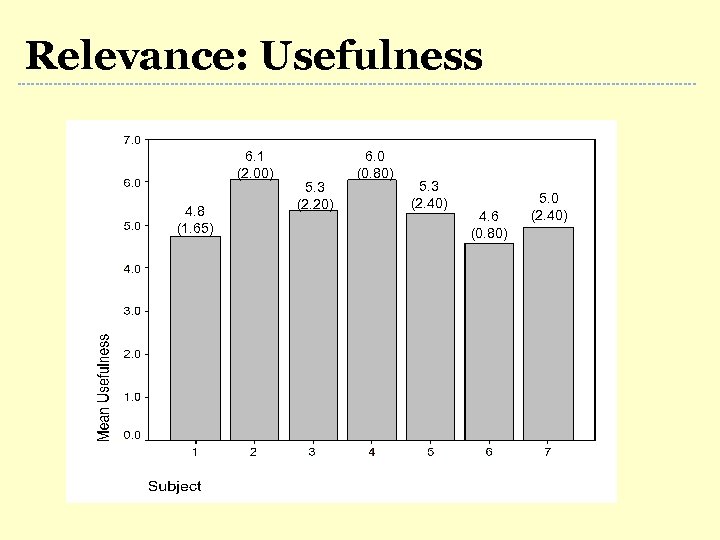

Relevance: Usefulness

| Subject | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Usefulness | 6.1 (2.00) | 4.8 (1.65) | 5.3 (2.20) | 6.0 (0.80) | 5.3 (2.40) | 4.6 (0.80) | 5.0 (2.40) |

Relevance: Usefulness 6. 1 (2. 00) 4. 8 (1. 65) 5. 3 (2. 20) 6. 0 (0. 80) 5. 3 (2. 40) 4. 6 (0. 80) 5. 0 (2. 40)

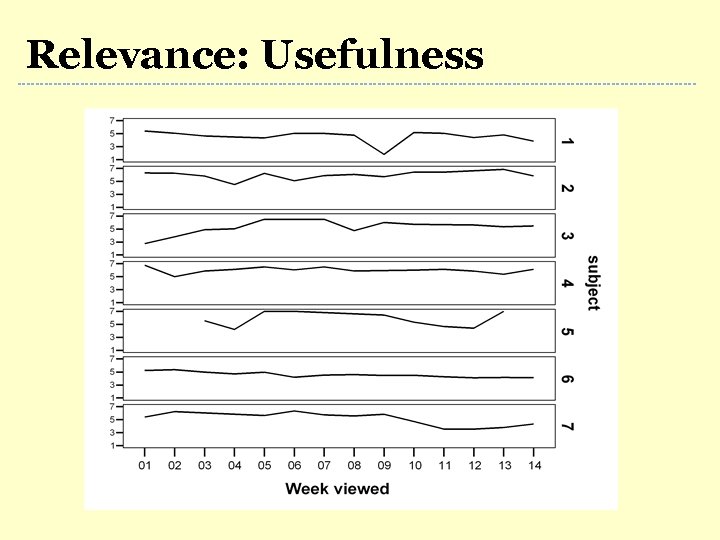

Relevance: Usefulness

Relevance: Usefulness

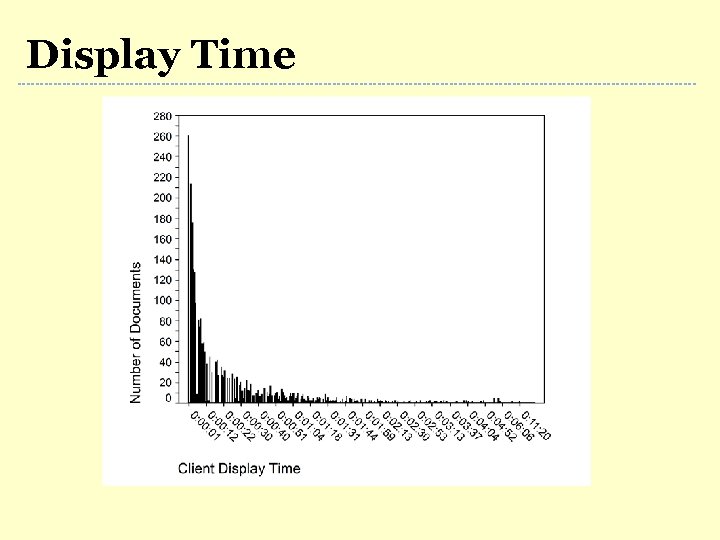

Display Time

Display Time

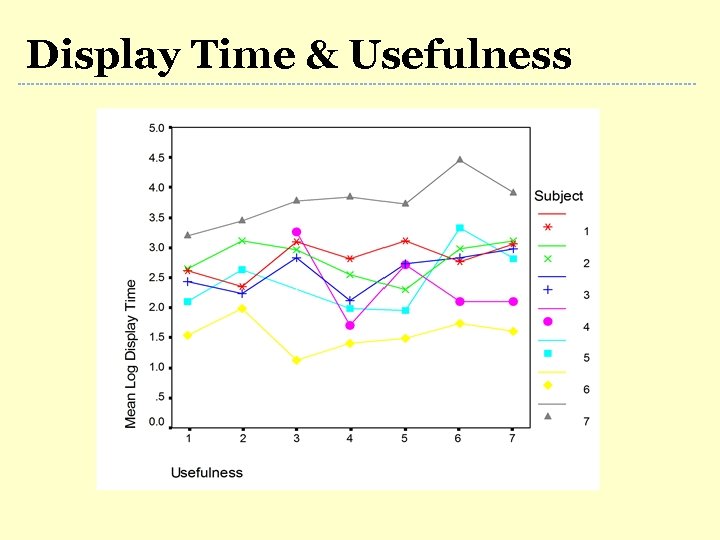

Display Time & Usefulness

Display Time & Usefulness

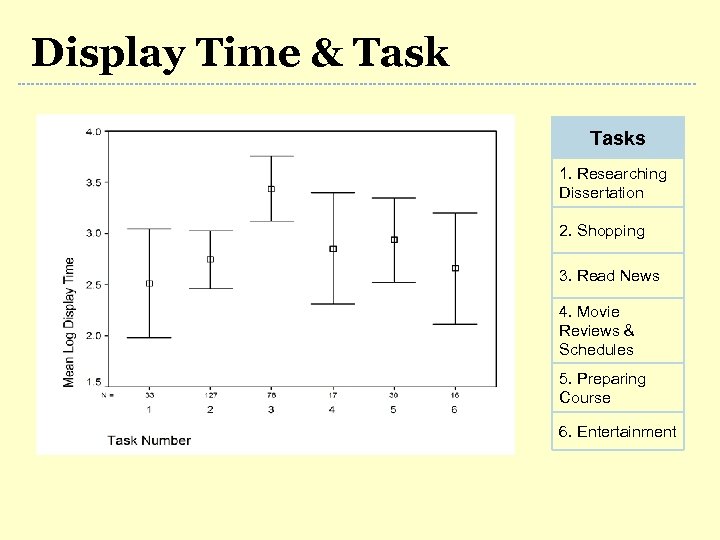

Display Time & Tasks
1. Researching Dissertation
2. Shopping
3. Read News
4. Movie Reviews & Schedules
5. Preparing Course
6. Entertainment

Display Time & Tasks 1. Researching Dissertation 2. Shopping 3. Read News 4. Movie Reviews & Schedules 5. Preparing Course 6. Entertainment

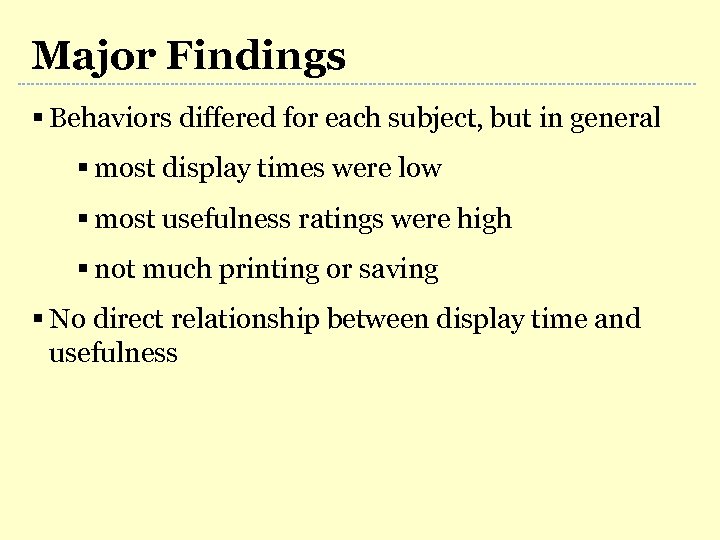

Major Findings
§ Behaviors differed for each subject, but in general:
  § most display times were low
  § most usefulness ratings were high
  § there was not much printing or saving
§ No direct relationship between display time and usefulness

Major Findings
§ Main effects of all contextual variables on display time:
  § Task (5 subjects)
  § Topic (6 subjects)
  § Familiarity (5 subjects)
    → Lower levels of familiarity were associated with higher display times
§ No clear interaction effects among behaviors, context and relevance

Personalizing Search
§ Using the display time, task and relevance information from the study, we evaluated the effectiveness of a set of personalized retrieval algorithms
§ Four algorithms for using display time as implicit feedback were tested:
  1. User
  2. Task
  3. User + Task
  4. General
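
The slide names four ways of setting display-time thresholds (User, Task, User + Task, General), but not how a threshold is computed. The sketch below assumes a hypothetical rule, the median display time within each group, and treats a document as implicitly relevant if it was displayed at least that long. `Visit`, `learn_thresholds`, and `implicitly_relevant` are illustrative names, not the study’s code.

```python
from statistics import median
from typing import NamedTuple


class Visit(NamedTuple):
    user: str
    task: str
    display_time: float  # seconds the document was displayed


def group_key(visit: Visit, scheme: str) -> tuple[str, ...]:
    """Which visits share one threshold under each scheme."""
    if scheme == "general":
        return ()
    if scheme == "user":
        return (visit.user,)
    if scheme == "task":
        return (visit.task,)
    if scheme == "user+task":
        return (visit.user, visit.task)
    raise ValueError(f"unknown scheme: {scheme}")


def learn_thresholds(history: list[Visit], scheme: str) -> dict[tuple[str, ...], float]:
    """Hypothetical rule: a group's threshold is its median display time."""
    groups: dict = {}
    for visit in history:
        groups.setdefault(group_key(visit, scheme), []).append(visit.display_time)
    return {key: median(times) for key, times in groups.items()}


def implicitly_relevant(visit: Visit, thresholds: dict, scheme: str) -> bool:
    """Infer relevance if the page was displayed at least as long as its group's threshold."""
    return visit.display_time >= thresholds[group_key(visit, scheme)]


history = [Visit("s1", "shopping", 10.0), Visit("s1", "shopping", 30.0),
           Visit("s1", "dissertation", 100.0), Visit("s1", "dissertation", 200.0)]
by_task = learn_thresholds(history, "task")    # {('shopping',): 20.0, ('dissertation',): 150.0}
general = learn_thresholds(history, "general")  # {(): 65.0}
page = Visit("s1", "shopping", 25.0)
print(implicitly_relevant(page, by_task, "task"), implicitly_relevant(page, general, "general"))  # True False
```

With a task-specific threshold, a 25-second shopping page counts as relevant. A single general threshold, pulled up by long dissertation reading, rejects it. This is one plausible reason why task-tailored thresholds could help.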

Personalizing Search § Using the display time, task and relevance information from the study, we evaluated the effectiveness of a set of personalized retrieval algorithms § Four algorithms for using display time as implicit feedback were tested: 1. User 2. Task 3. User + Task 4. General

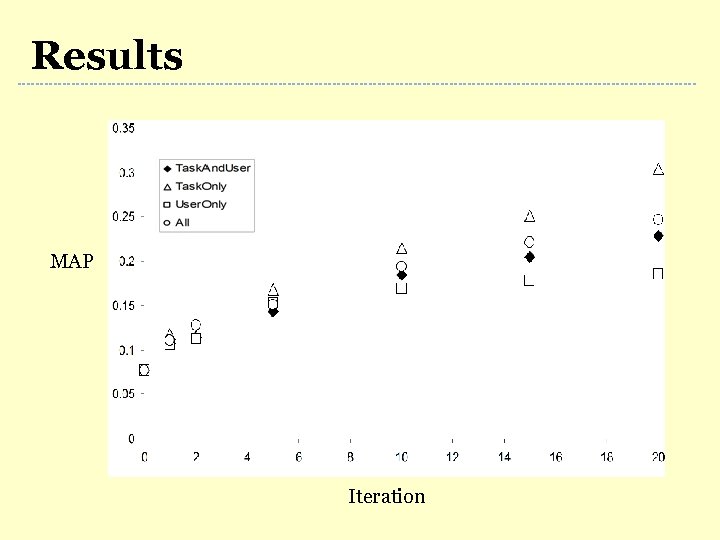

Results (figure: MAP by iteration)

Major Findings
§ Tailoring display time thresholds based on task information improved performance, but doing so based on user information did not
§ There was a lot of variability between subjects, with the user-centered algorithms performing well for some and poorly for others
§ The effectiveness of most of the algorithms increased with time (and more data)

Some Problems

Relevance
§ What are we modeling? Does click = relevance?
§ Relevance is multi-dimensional and dynamic
§ A single measure does not adequately reflect ‘relevance’
§ Most pages are likely to be rated as useful, even if the value or importance of the information differs

Relevance § What are we modeling? Does click = relevance? § Relevance is multi-dimensional and dynamic § A single measure does to adequately reflect ‘relevance’ § Most pages are likely to be rated as useful, even if the value or importance of the information differs

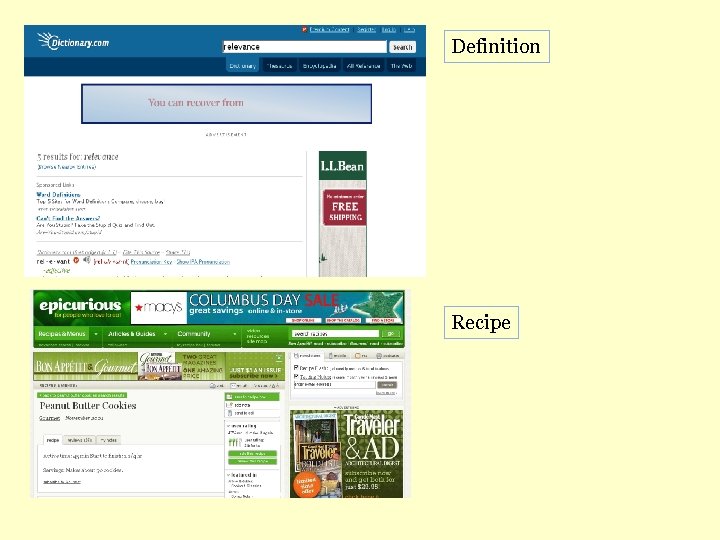

Definition / Recipe

Definition Recipe

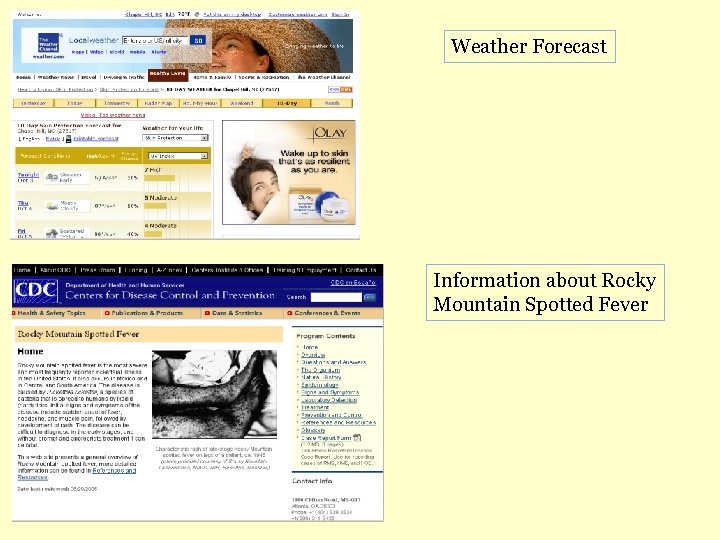

Weather Forecast / Information about Rocky Mountain Spotted Fever

Paper about Personalization

Page Structure
§ Some behaviors are more likely to occur on some types of pages
§ A more ‘intelligent’ modeling function would know when and what to observe and expect
§ The structure of a page encourages or inhibits certain behaviors
§ Not all pages are equally useful for modeling a user’s interests

Page Structure § Some behaviors are more likely to occur on some types of pages § A more ‘intelligent’ modeling function would know when and what to observe and expect § The structure of pages encourage/inhibit certain behaviors § Not all pages are equally as useful for modeling a user’s interests

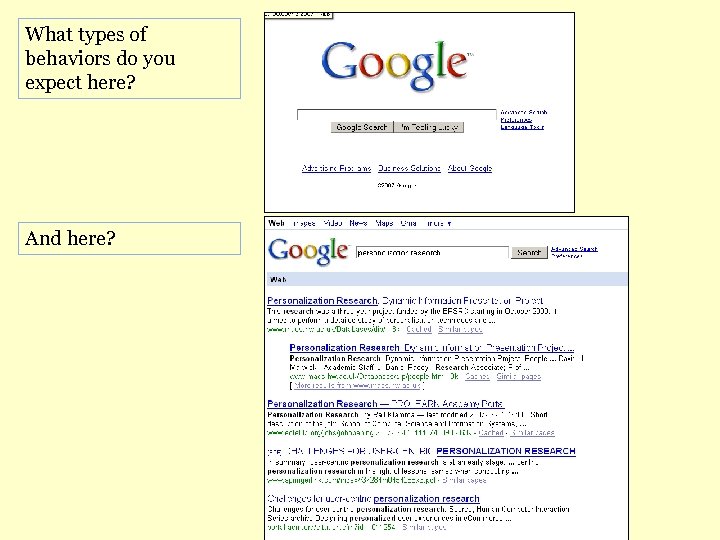

What types of behaviors do you expect here? And here?

What types of behaviors do you expect here? And here?

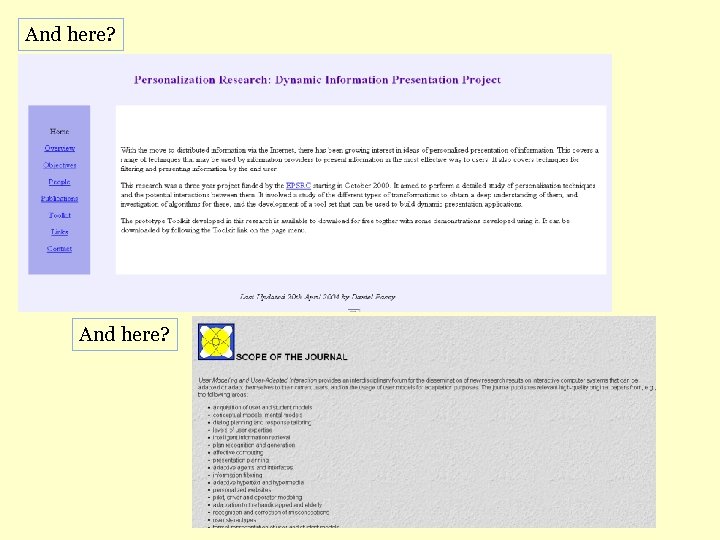

And here?

The Future

Future
§ New interaction styles and systems create new opportunities for explicit and implicit feedback
  § Collaborative search features and query recommendation
  § Features/systems that support the entire search process (e.g., saving, organizing)
  § QA systems
§ New types of feedback
  § Negative
  § Physiological

Thank You
Diane Kelly (dianek@email.unc.edu)
WEB: http://ils.unc.edu/~dianek/research.html
Collaborators: Nick Belkin, Xin Fu, Vijay Dollu, Ryen White

TREC [Text REtrieval Conference]: It’s not this …

TREC [Text REtrieval Conference] It’s not this …

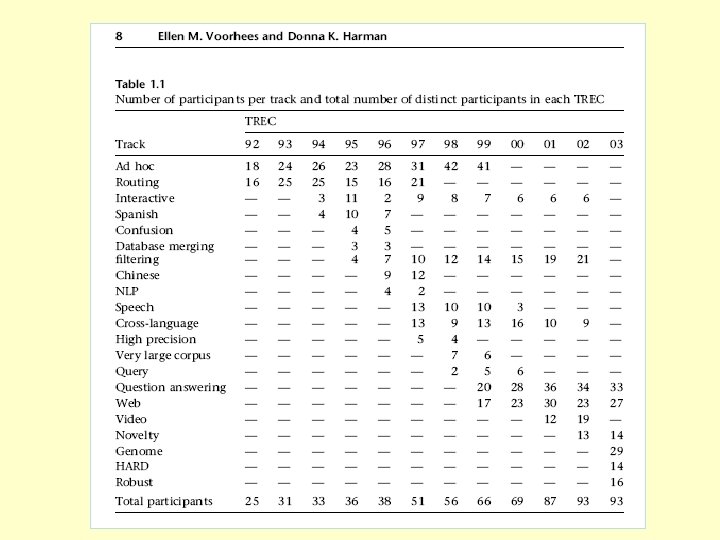

What is TREC?
§ TREC is a workshop series sponsored by the National Institute of Standards and Technology (NIST) and the US Department of Defense.
§ Its purpose is to build infrastructure for large-scale evaluation of text retrieval technology.
§ TREC collections and evaluation measures are the de facto standard for evaluation in IR.
§ TREC consists of different tracks, each of which focuses on different issues (e.g., question answering, filtering).

What is TREC? § TREC is a workshop series sponsored by the National Institute of Standards and Technology (NIST) and the US Department of Defense. § It’s purpose is to build infrastructure for large-scale evaluation of text retrieval technology. § TREC collections and evaluation measures are the de facto standard for evaluation in IR. § TREC is comprised of different tracks each of which focuses on different issues (e. g. , question answering, filtering).

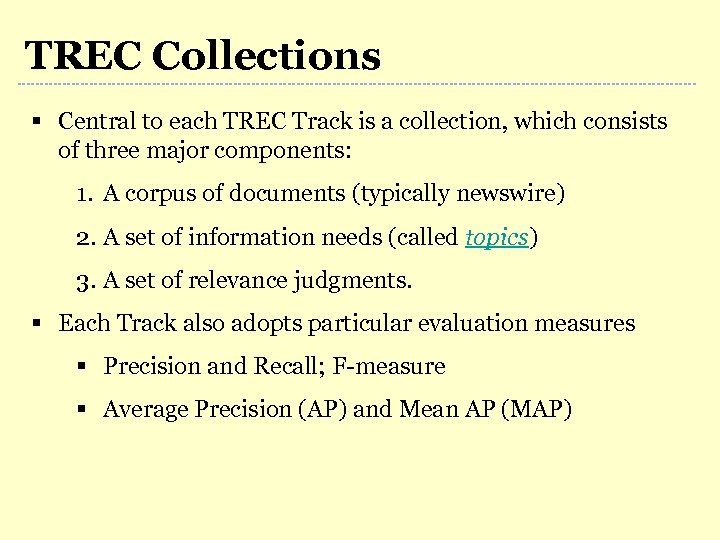

TREC Collections
§ Central to each TREC track is a collection, which consists of three major components:
  1. A corpus of documents (typically newswire)
  2. A set of information needs (called topics)
  3. A set of relevance judgments
§ Each track also adopts particular evaluation measures:
  § Precision and Recall; F-measure
  § Average Precision (AP) and Mean AP (MAP)
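
The set-based measures named here can be computed directly from the retrieved set and the relevance judgments for a topic. Below is a minimal sketch, assuming document IDs as strings and the standard F-measure weighted by beta, where beta = 1 gives the harmonic mean of precision and recall. The document IDs in the usage lines are made up.

```python
def precision_recall_f(retrieved: set[str], relevant: set[str],
                       beta: float = 1.0) -> tuple[float, float, float]:
    """Set-based precision, recall and F-beta for one topic."""
    hits = len(retrieved & relevant)  # retrieved documents judged relevant
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    b2 = beta ** 2
    f = (1 + b2) * precision * recall / (b2 * precision + recall)
    return precision, recall, f


p, r, f = precision_recall_f({"d1", "d2", "d3", "d4"}, {"d1", "d2", "d5"})
print(p, round(r, 3), round(f, 3))  # 0.5 0.667 0.571
```

These measures ignore the order of the results, which is why TREC also reports rank-sensitive measures such as AP and MAP.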

TREC Collections § Central to each TREC Track is a collection, which consists of three major components: 1. A corpus of documents (typically newswire) 2. A set of information needs (called topics) 3. A set of relevance judgments. § Each Track also adopts particular evaluation measures § Precision and Recall; F-measure § Average Precision (AP) and Mean AP (MAP)

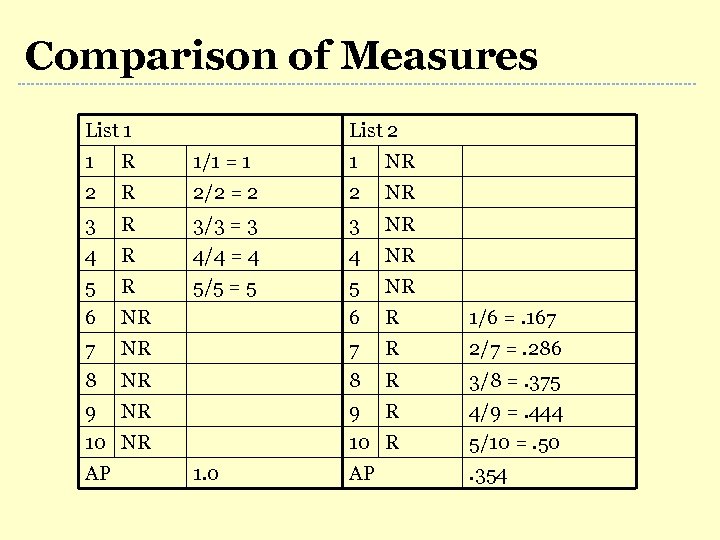

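Comparison of Measures (R = relevant, NR = not relevant; precision shown at each relevant document)

| Rank | List 1 | Precision | List 2 | Precision |
|---|---|---|---|---|
| 1 | R | 1/1 = 1 | NR | |
| 2 | R | 2/2 = 1 | NR | |
| 3 | R | 3/3 = 1 | NR | |
| 4 | R | 4/4 = 1 | NR | |
| 5 | R | 5/5 = 1 | NR | |
| 6 | NR | | R | 1/6 = .167 |
| 7 | NR | | R | 2/7 = .286 |
| 8 | NR | | R | 3/8 = .375 |
| 9 | NR | | R | 4/9 = .444 |
| 10 | NR | | R | 5/10 = .50 |
| AP | | 1.0 | | .354 |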
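
The AP values in this comparison can be reproduced by averaging the precision at each rank where a relevant document appears. Below is a minimal sketch, assuming ranked relevance judgments given as booleans. By default it divides by the number of relevant documents retrieved. That matches the comparison, because each list contains all five relevant documents. The optional `total_relevant` argument makes unretrieved relevant documents count as zero, as in TREC scoring. MAP is then the mean of AP over topics.

```python
from typing import Optional


def average_precision(judgments: list[bool], total_relevant: Optional[int] = None) -> float:
    """Mean of the precision values at the ranks of relevant documents.

    judgments[i] is True if the document at rank i + 1 is relevant. If
    total_relevant is given, relevant documents that were never retrieved
    contribute zero; otherwise only retrieved relevant documents count.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(judgments, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    denominator = hits if total_relevant is None else total_relevant
    return precision_sum / denominator if denominator else 0.0


def mean_average_precision(runs: list[list[bool]]) -> float:
    """MAP: the mean of AP across topics (one ranked list per topic)."""
    if not runs:
        raise ValueError("need at least one topic")
    return sum(average_precision(run) for run in runs) / len(runs)


list_1 = [True] * 5 + [False] * 5  # relevant documents at ranks 1-5
list_2 = [False] * 5 + [True] * 5  # relevant documents at ranks 6-10
print(average_precision(list_1))            # 1.0
print(round(average_precision(list_2), 3))  # 0.354
```

Both lists have a precision of 0.5 at rank 10, but AP rewards List 1 for placing its relevant documents first.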

Learn more about TREC
§ http://trec.nist.gov
§ Voorhees, E. M., & Harman, D. K. (2005). TREC: Experiment and Evaluation in Information Retrieval. Cambridge, MA: MIT Press.

Learn more about TREC § http: //trec. nist. gov § Voorhees, E. M. , & Harman, D. K. (2005). TREC: Experiment and Evaluation in Information Retrieval, Cambridge, MA: MIT Press. BACK

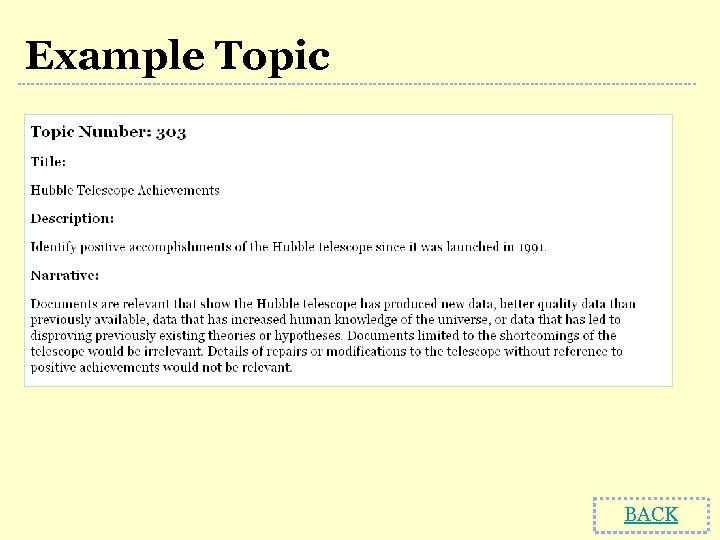

Example Topic

Learn more about IR
§ ACM SIGIR Conference
§ Sparck Jones, K., & Willett, P. (1997). Readings in Information Retrieval. Morgan Kaufmann.
§ Baeza-Yates, R., & Ribeiro-Neto, B. (1999). Modern Information Retrieval. New York, NY: ACM Press.
§ Grossman, D. A., & Frieder, O. (2004). Information Retrieval: Algorithms and Heuristics. The Netherlands: Springer.
