a91b75429a9a414a620e0be30baa911b.ppt

- Number of slides: 57

Performance Technology for Productive, High-End Parallel Computing

Allen D. Malony
malony@cs.uoregon.edu
Department of Computer and Information Science
Performance Research Laboratory
NeuroInformatics Center
University of Oregon

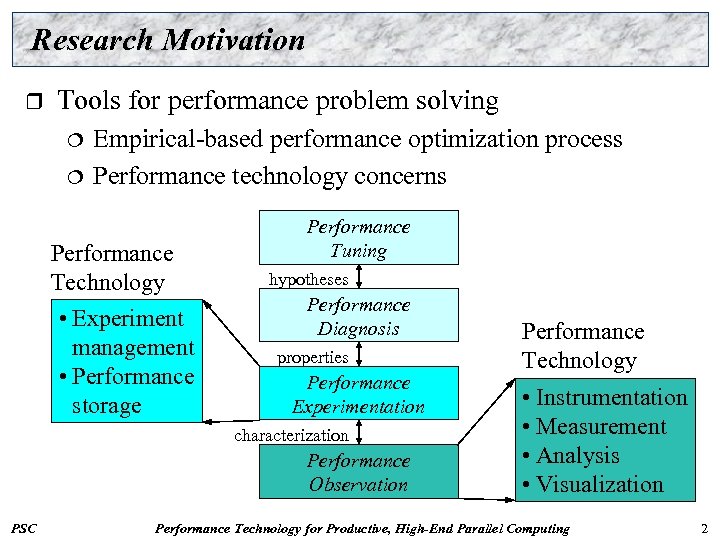

Research Motivation

- Tools for performance problem solving
  - Empirical-based performance optimization process
  - Performance technology concerns

Diagram labels:
- Process stages: Performance Tuning (hypotheses), Performance Diagnosis (properties), Performance Experimentation (characterization), Performance Observation
- Performance Technology: experiment management, performance storage
- Performance Technology: instrumentation, measurement, analysis, visualization

Footer: PSC, Performance Technology for Productive, High-End Parallel Computing

Challenges in Performance Problem Solving

- How to make the process more effective (productive)?
- Process may depend on scale of parallel system
- What are the important events and performance metrics?
  - Tied to application structure and computational model
  - Tied to application domain and algorithms
- Process and tools can/must be more application-aware
  - Tools have poor support for application-specific aspects
- What are the significant issues that will affect the technology used to support the process?
- Enhance application development and benchmarking
- New paradigm in performance process and technology

Large Scale Performance Problem Solving

- How does our view of this process change when we consider very large-scale parallel systems?
- What are the significant issues that will affect the technology used to support the process?
- Parallel performance observation is clearly needed
- In general, there is the concern for intrusion
  - Seen as a tradeoff with performance diagnosis accuracy
- Scaling complicates observation and analysis
  - Performance data size becomes a concern
  - Analysis complexity increases
- Nature of application development may change

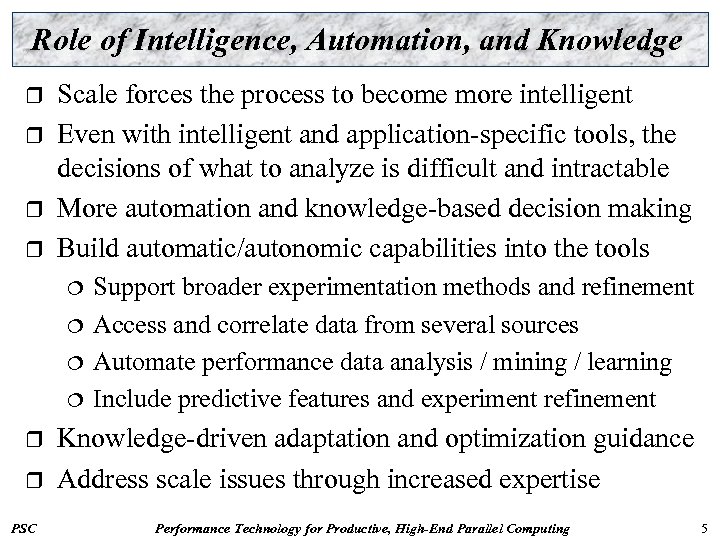

Role of Intelligence, Automation, and Knowledge

- Scale forces the process to become more intelligent
- Even with intelligent and application-specific tools, the decision of what to analyze is difficult and intractable
  - More automation and knowledge-based decision making
- Build automatic/autonomic capabilities into the tools
  - Support broader experimentation methods and refinement
  - Access and correlate data from several sources
  - Automate performance data analysis / mining / learning
  - Include predictive features and experiment refinement
- Knowledge-driven adaptation and optimization guidance
- Address scale issues through increased expertise

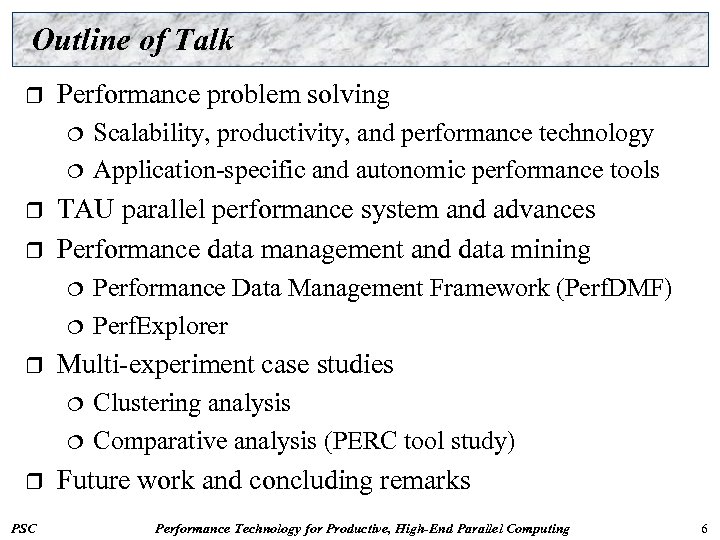

Outline of Talk

- Performance problem solving
  - Scalability, productivity, and performance technology
  - Application-specific and autonomic performance tools
- TAU parallel performance system and advances
- Performance data management and data mining
  - Performance Data Management Framework (PerfDMF)
  - PerfExplorer
- Multi-experiment case studies
  - Clustering analysis
  - Comparative analysis (PERC tool study)
- Future work and concluding remarks

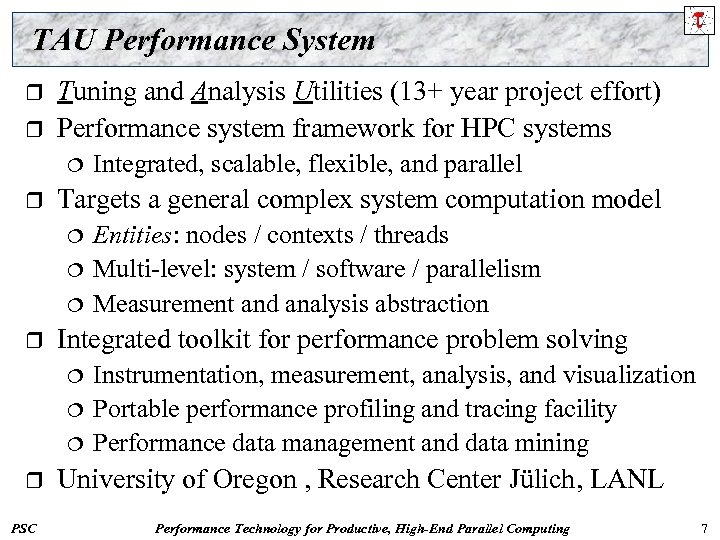

TAU Performance System

- Tuning and Analysis Utilities (13+ year project effort)
- Performance system framework for HPC systems
  - Integrated, scalable, flexible, and parallel
- Targets a general complex system computation model
  - Entities: nodes / contexts / threads
  - Multi-level: system / software / parallelism
  - Measurement and analysis abstraction
- Integrated toolkit for performance problem solving
  - Instrumentation, measurement, analysis, and visualization
  - Portable performance profiling and tracing facility
  - Performance data management and data mining
- University of Oregon, Research Center Jülich, LANL

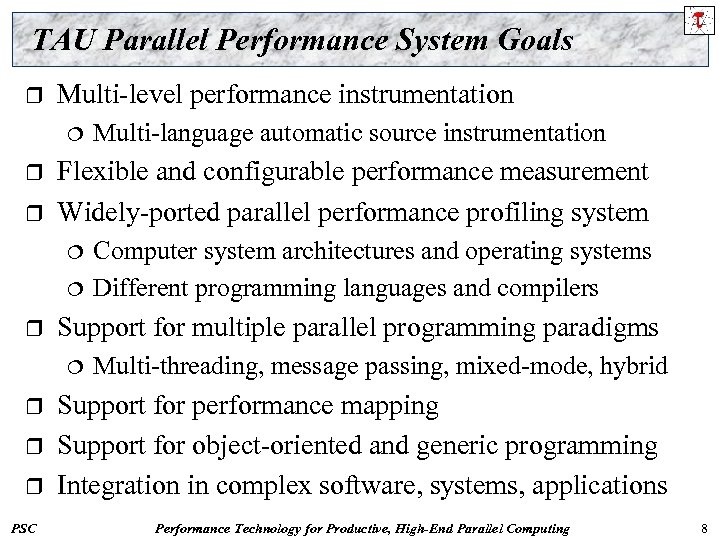

TAU Parallel Performance System Goals

- Multi-level performance instrumentation
  - Multi-language automatic source instrumentation
- Flexible and configurable performance measurement
- Widely-ported parallel performance profiling system
  - Computer system architectures and operating systems
  - Different programming languages and compilers
- Support for multiple parallel programming paradigms
  - Multi-threading, message passing, mixed-mode, hybrid
- Support for performance mapping
- Support for object-oriented and generic programming
- Integration in complex software, systems, applications

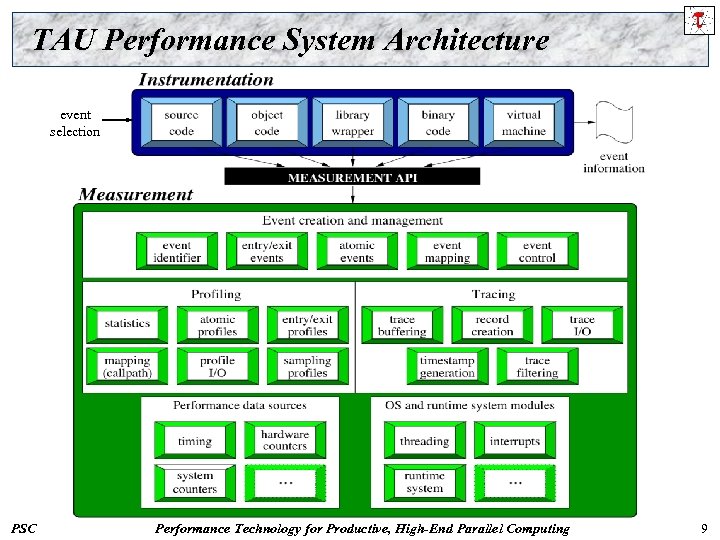

TAU Performance System Architecture

Diagram label: event selection

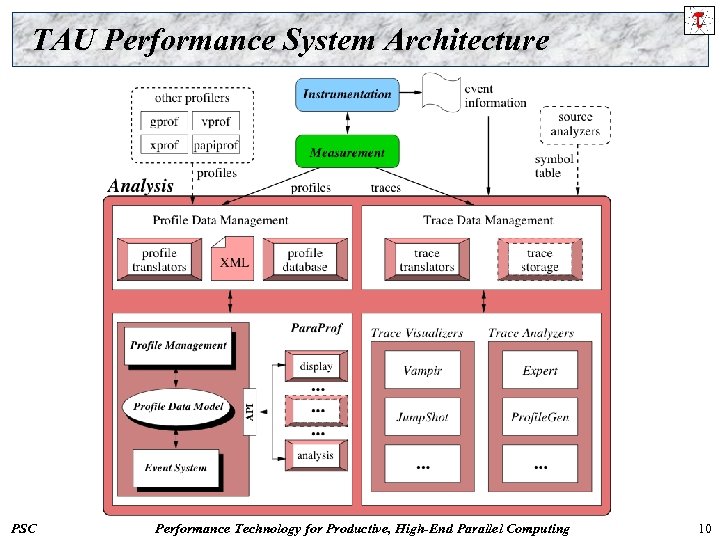

TAU Performance System Architecture

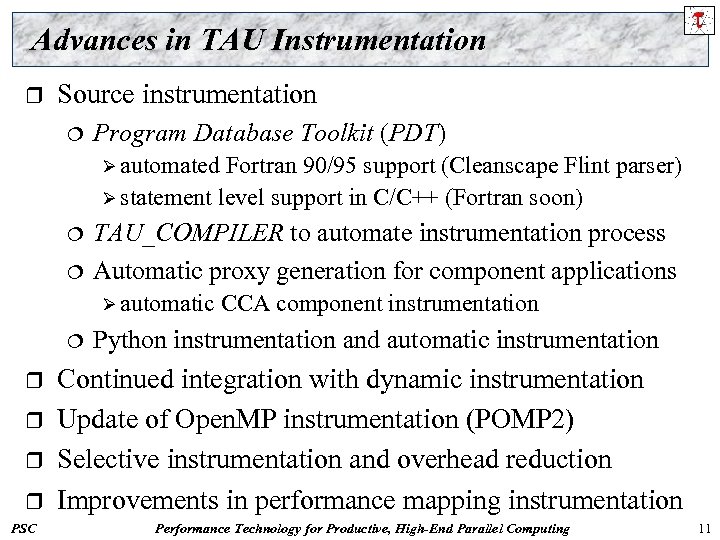

Advances in TAU Instrumentation

- Source instrumentation
  - Program Database Toolkit (PDT)
    - automated Fortran 90/95 support (Cleanscape Flint parser)
    - statement level support in C/C++ (Fortran soon)
  - TAU_COMPILER to automate instrumentation process
  - Automatic proxy generation for component applications
    - automatic CCA component instrumentation
  - Python instrumentation and automatic instrumentation
- Continued integration with dynamic instrumentation
- Update of OpenMP instrumentation (POMP2)
- Selective instrumentation and overhead reduction
- Improvements in performance mapping instrumentation

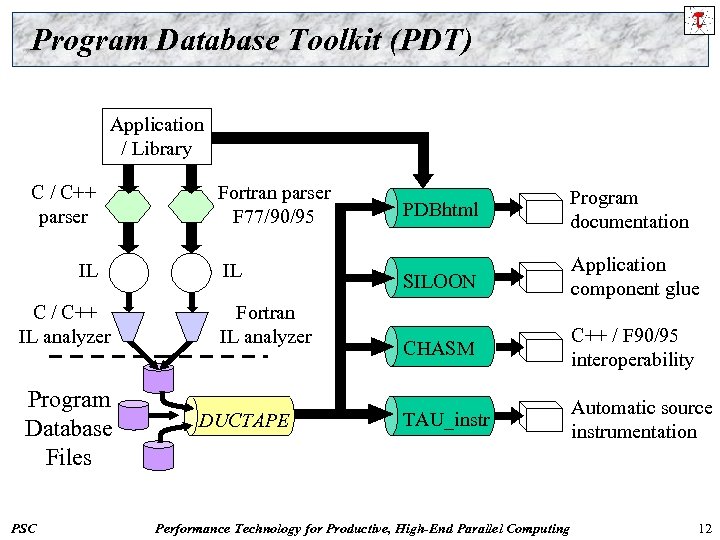

Program Database Toolkit (PDT)

Diagram labels:
- Application / Library
- C / C++ parser, IL, C / C++ IL analyzer
- Fortran parser (F77/90/95), IL, Fortran IL analyzer
- Program Database Files
- DUCTAPE
  - PDBhtml: program documentation
  - SILOON: application component glue
  - CHASM: C++ / F90/95 interoperability
  - TAU_instr: automatic source instrumentation

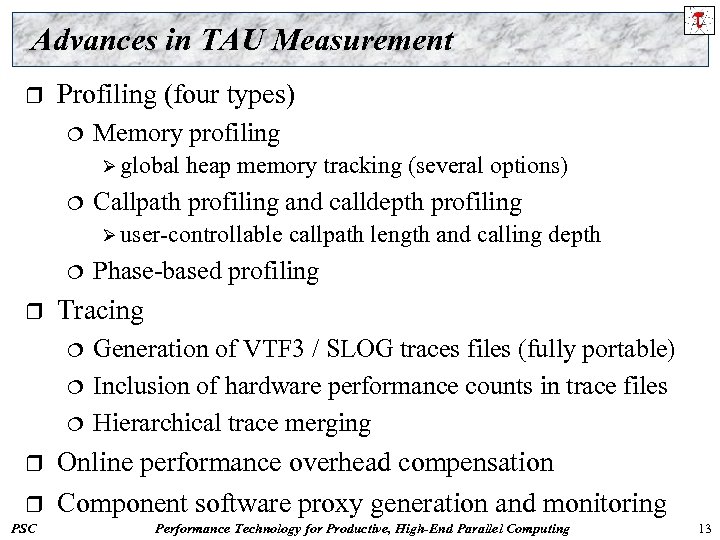

Advances in TAU Measurement

- Profiling (four types)
  - Memory profiling
    - global heap memory tracking (several options)
  - Callpath profiling and calldepth profiling
    - user-controllable callpath length and calling depth
  - Phase-based profiling
- Tracing
  - Generation of VTF3 / SLOG trace files (fully portable)
  - Inclusion of hardware performance counts in trace files
  - Hierarchical trace merging
- Online performance overhead compensation
- Component software proxy generation and monitoring

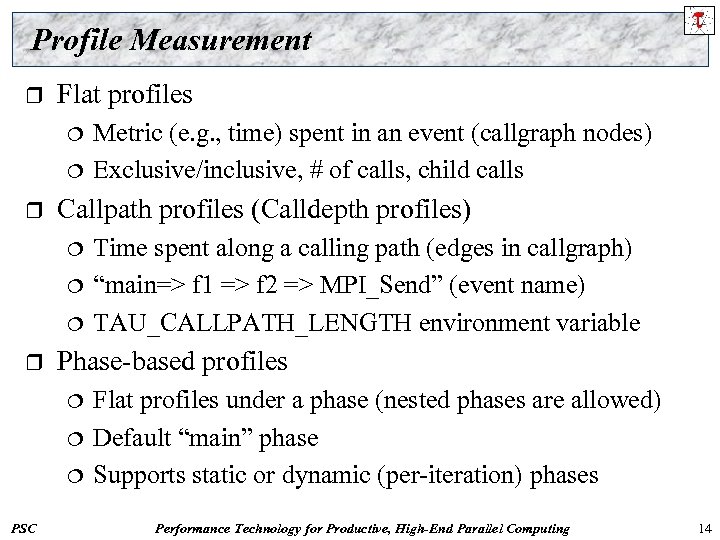

Profile Measurement

- Flat profiles
  - Metric (e.g., time) spent in an event (callgraph nodes)
  - Exclusive/inclusive, # of calls, child calls
- Callpath profiles (Calldepth profiles)
  - Time spent along a calling path (edges in callgraph)
  - "main => f1 => f2 => MPI_Send" (event name)
  - TAU_CALLPATH_LENGTH environment variable
- Phase-based profiles
  - Flat profiles under a phase (nested phases are allowed)
  - Default "main" phase
  - Supports static or dynamic (per-iteration) phases
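
As a rough illustration of the flat and callpath profile types on this slide, the following Python sketch derives both from a toy stream of enter/exit events. It is not TAU code: the `build_profiles` function, the event tuple layout, and the `callpath_length` parameter (loosely modeled on the idea behind `TAU_CALLPATH_LENGTH`) are assumptions made for this example, and the timestamps are made up.

```python
from collections import defaultdict


def build_profiles(events, callpath_length=2):
    """Build flat and callpath profiles from (kind, name, time) events.

    Hypothetical helper for illustration only; kind is "enter" or "exit"
    and callpath_length is assumed to be at least 1.
    Recursive calls are not treated specially in this sketch.
    """
    stack = []  # each frame: [event name, enter time, time spent in children]
    flat = defaultdict(lambda: {"calls": 0, "inclusive": 0.0, "exclusive": 0.0})
    callpaths = defaultdict(float)

    for kind, name, t in events:
        if kind == "enter":
            stack.append([name, t, 0.0])
            continue
        frame_name, start, child_time = stack.pop()
        if frame_name != name:
            raise ValueError(f"unbalanced exit for {name!r}")
        inclusive = t - start
        exclusive = inclusive - child_time
        flat[name]["calls"] += 1
        flat[name]["inclusive"] += inclusive
        flat[name]["exclusive"] += exclusive
        if stack:
            stack[-1][2] += inclusive  # charge this call to its parent
        # Callpath event name: the last `callpath_length` names on the path.
        path = [frame[0] for frame in stack] + [name]
        callpaths[" => ".join(path[-callpath_length:])] += exclusive
    return dict(flat), dict(callpaths)


# Made-up timestamps (seconds) for one call chain.
events = [
    ("enter", "main", 0.0),
    ("enter", "f1", 1.0),
    ("enter", "f2", 2.0),
    ("enter", "MPI_Send", 3.0),
    ("exit", "MPI_Send", 5.0),
    ("exit", "f2", 6.0),
    ("exit", "f1", 8.0),
    ("exit", "main", 10.0),
]

flat, paths = build_profiles(events, callpath_length=4)
print(flat["f1"])
print(paths)
```

With a shorter `callpath_length`, the same exclusive time is attributed to a truncated path name such as `f2 => MPI_Send`, which keeps the number of distinct callpath events smaller.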

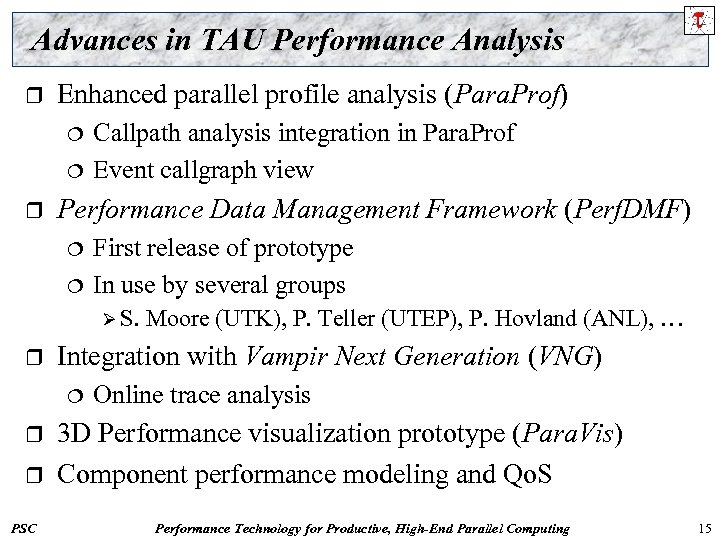

Advances in TAU Performance Analysis

- Enhanced parallel profile analysis (ParaProf)
  - Callpath analysis integration in ParaProf
  - Event callgraph view
- Performance Data Management Framework (PerfDMF)
  - First release of prototype
  - In use by several groups
    - S. Moore (UTK), P. Teller (UTEP), P. Hovland (ANL), …
- Integration with Vampir Next Generation (VNG)
  - Online trace analysis
- 3D performance visualization prototype (ParaVis)
- Component performance modeling and QoS

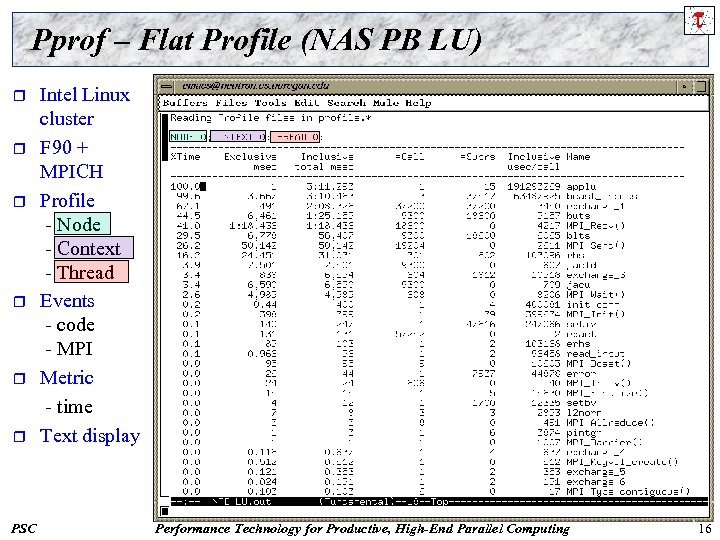

Pprof – Flat Profile (NAS PB LU)

- Intel Linux cluster
- F90 + MPICH
- Profile: node, context, thread
- Events: code, MPI
- Metric: time
- Text display

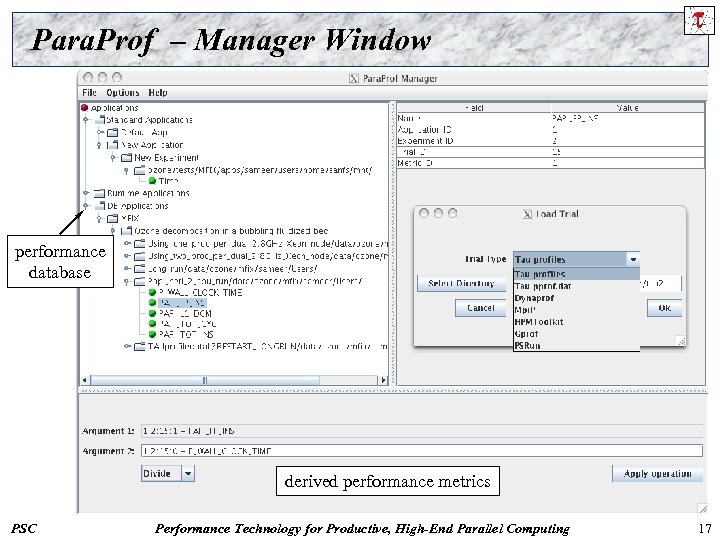

ParaProf – Manager Window

Callouts: performance database; derived performance metrics

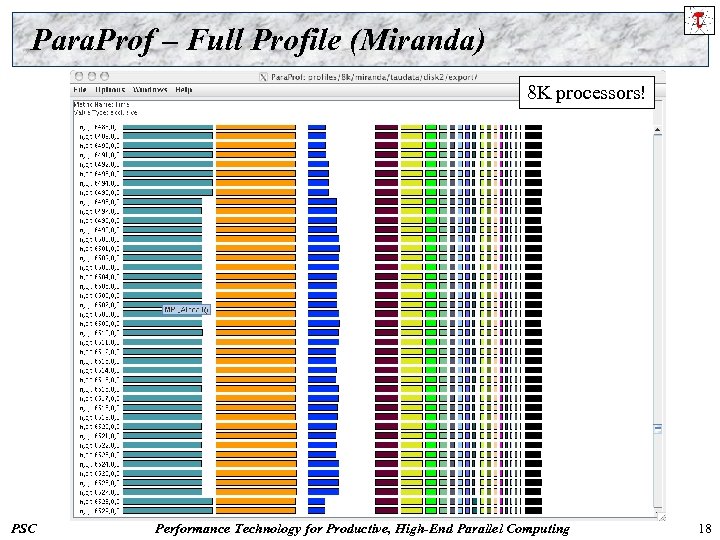

ParaProf – Full Profile (Miranda)

Callout: 8K processors!

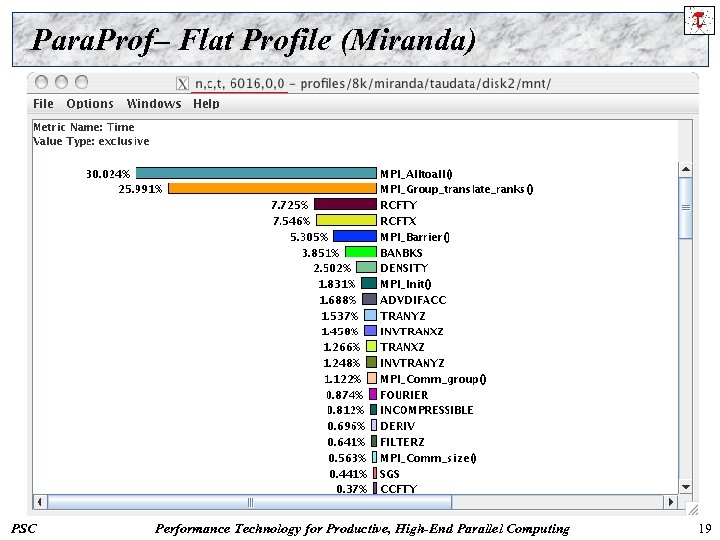

ParaProf – Flat Profile (Miranda)

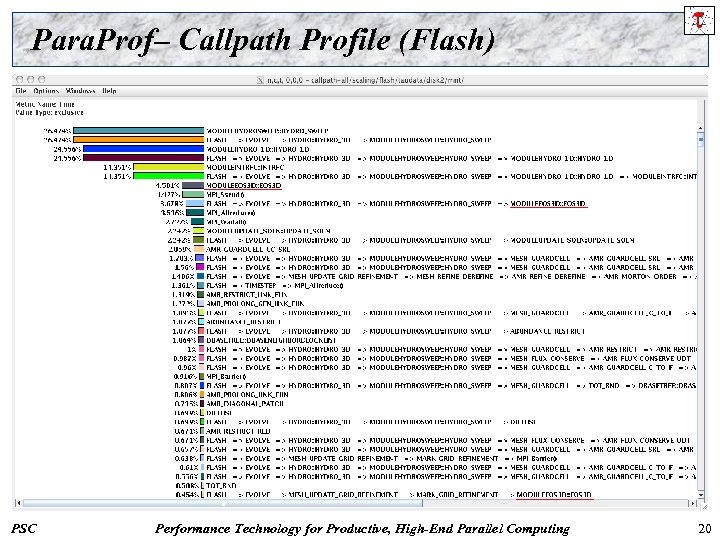

ParaProf – Callpath Profile (Flash)

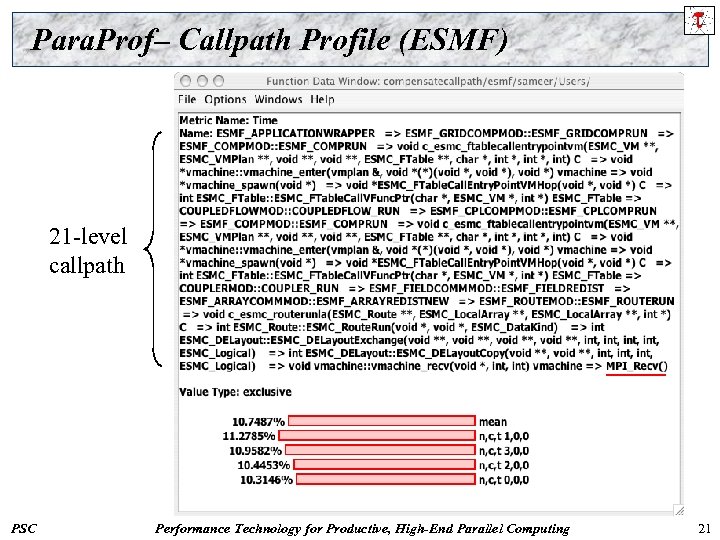

ParaProf – Callpath Profile (ESMF)

Callout: 21-level callpath

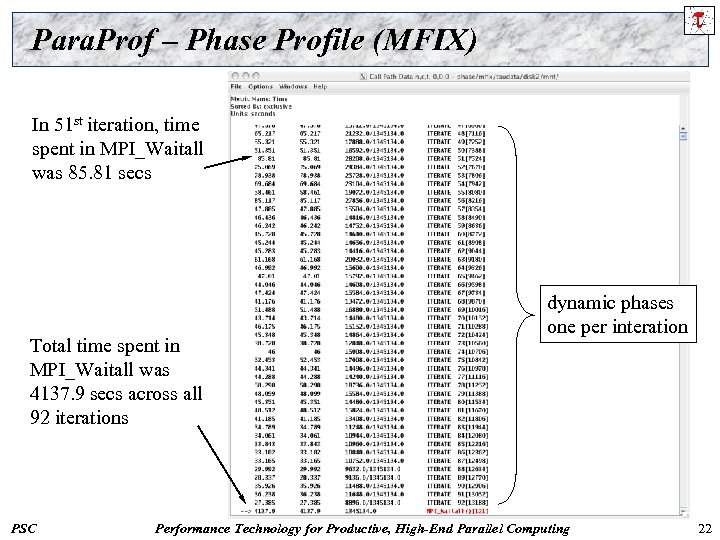

ParaProf – Phase Profile (MFIX)

- Dynamic phases, one per iteration
- In the 51st iteration, time spent in MPI_Waitall was 85.81 secs
- Total time spent in MPI_Waitall was 4137.9 secs across all 92 iterations
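
The per-iteration view on this slide can be pictured as one flat profile per phase, so the same event can be inspected inside a single iteration or summed over all of them. The sketch below illustrates that idea only; the `PhaseProfile` class and its methods are hypothetical, not TAU's data structures, and the timings are made-up values rather than MFIX measurements.

```python
from collections import defaultdict


class PhaseProfile:
    """Toy per-phase flat profile (hypothetical, not TAU's implementation)."""

    def __init__(self):
        # phase name -> event name -> accumulated time in seconds
        self.by_phase = defaultdict(lambda: defaultdict(float))

    def record(self, phase, event, seconds):
        self.by_phase[phase][event] += seconds

    def time_in_phase(self, phase, event):
        # Time for one event inside one phase (0.0 if never recorded).
        return self.by_phase.get(phase, {}).get(event, 0.0)

    def total_time(self, event):
        # Time for one event summed over every phase.
        return sum(events.get(event, 0.0) for events in self.by_phase.values())


profile = PhaseProfile()
# Made-up timings: each loop iteration becomes its own dynamic phase.
for iteration, wait_seconds in enumerate([1.5, 2.0, 0.5], start=1):
    phase = f"iteration {iteration}"
    profile.record(phase, "MPI_Waitall", wait_seconds)
    profile.record(phase, "compute", 4.0)

print(profile.time_in_phase("iteration 2", "MPI_Waitall"))
print(profile.total_time("MPI_Waitall"))
```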

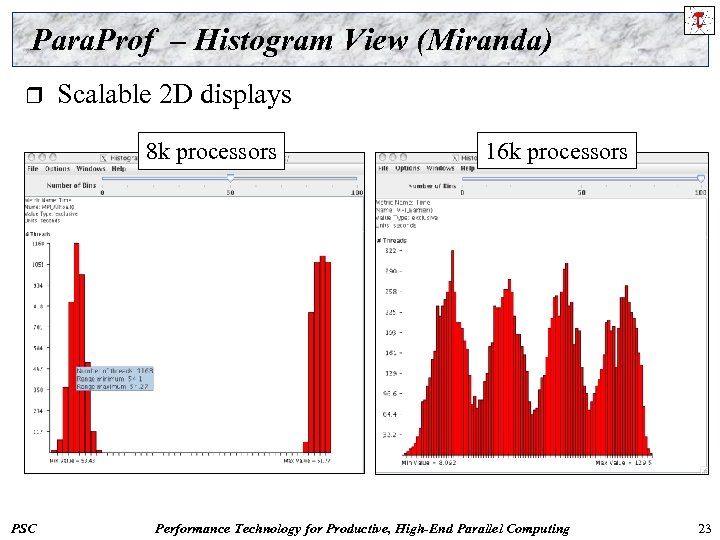

ParaProf – Histogram View (Miranda)

- Scalable 2D displays

Panel labels: 8K processors; 16K processors

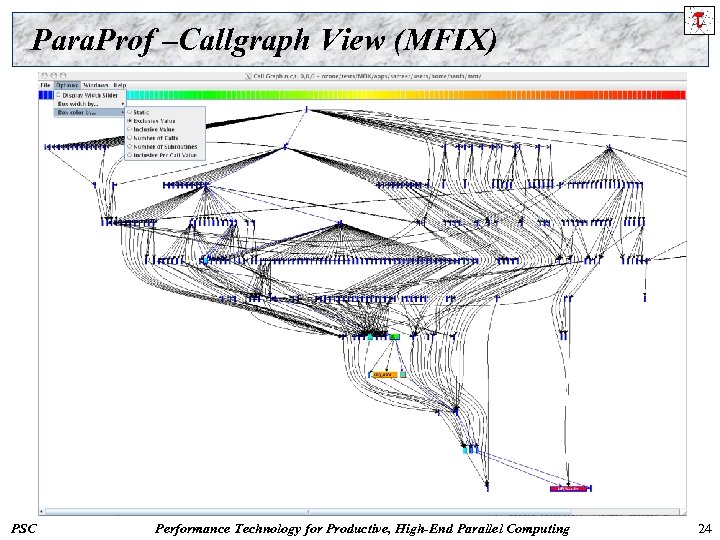

ParaProf – Callgraph View (MFIX)

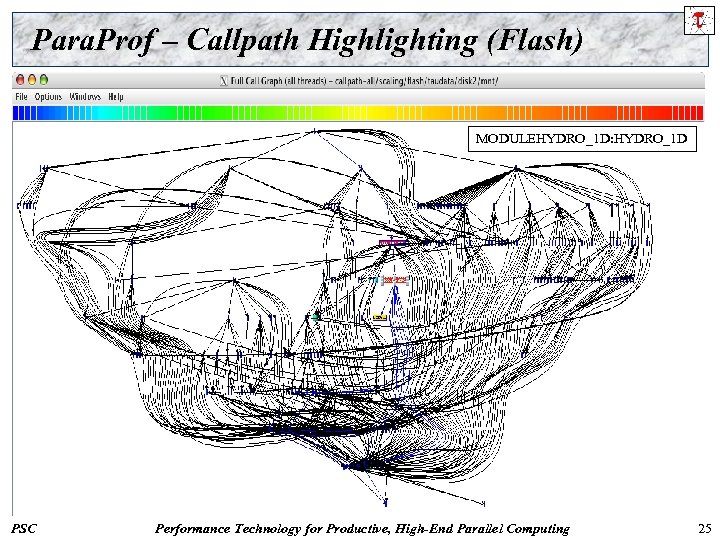

ParaProf – Callpath Highlighting (Flash)

Callout: MODULEHYDRO_1D:HYDRO_1D

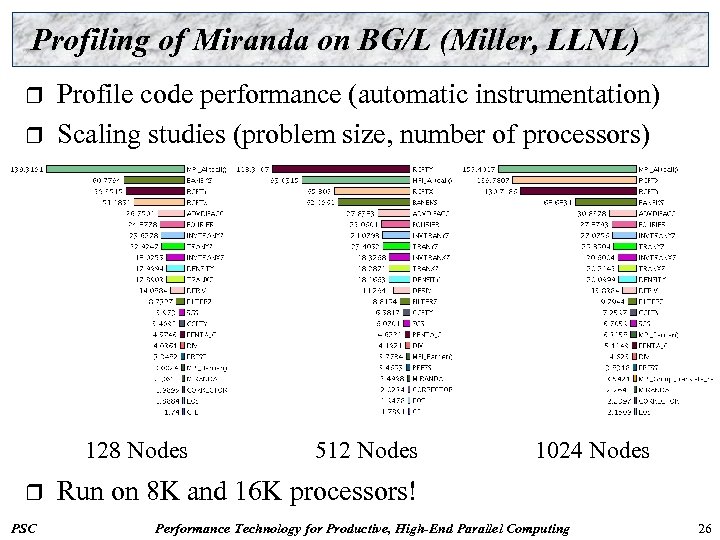

Profiling of Miranda on BG/L (Miller, LLNL)

- Profile code performance (automatic instrumentation)
- Scaling studies (problem size, number of processors)
- Run on 8K and 16K processors!

Panel labels: 128 nodes; 512 nodes; 1024 nodes

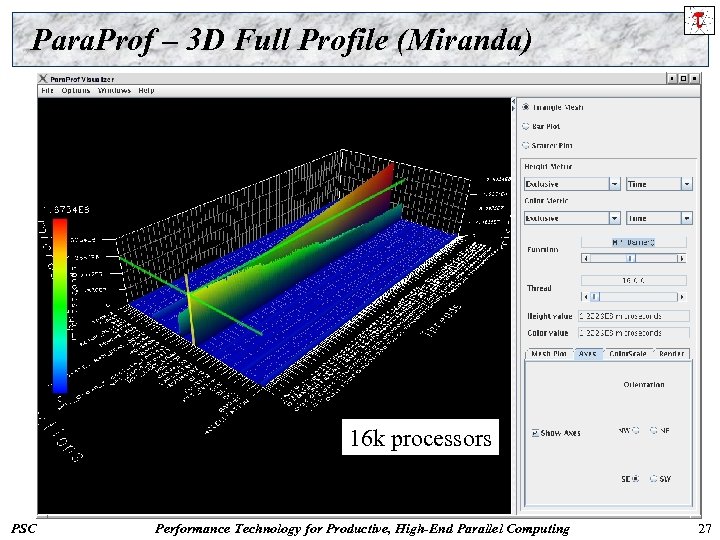

ParaProf – 3D Full Profile (Miranda)

Callout: 16K processors

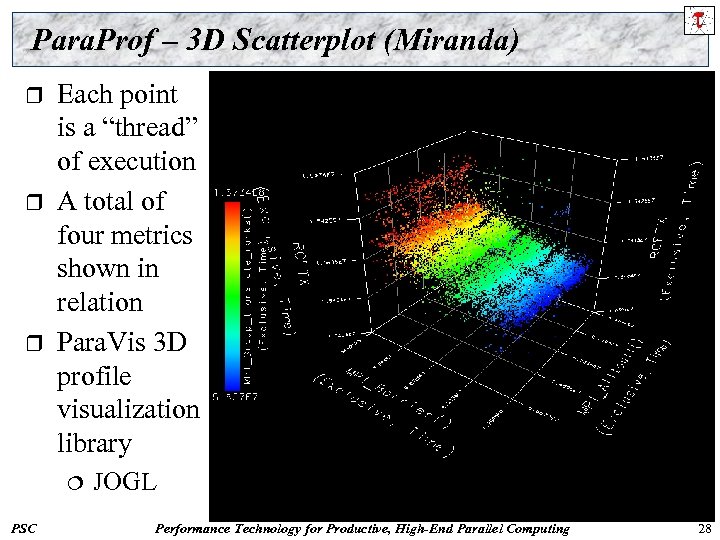

ParaProf – 3D Scatterplot (Miranda)

- Each point is a "thread" of execution
- A total of four metrics shown in relation
- ParaVis 3D profile visualization library
  - JOGL

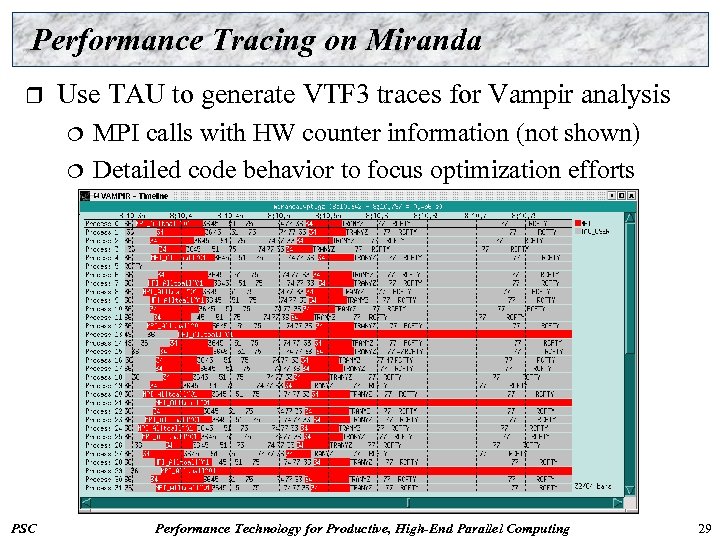

Performance Tracing on Miranda

- Use TAU to generate VTF3 traces for Vampir analysis
  - MPI calls with HW counter information (not shown)
  - Detailed code behavior to focus optimization efforts

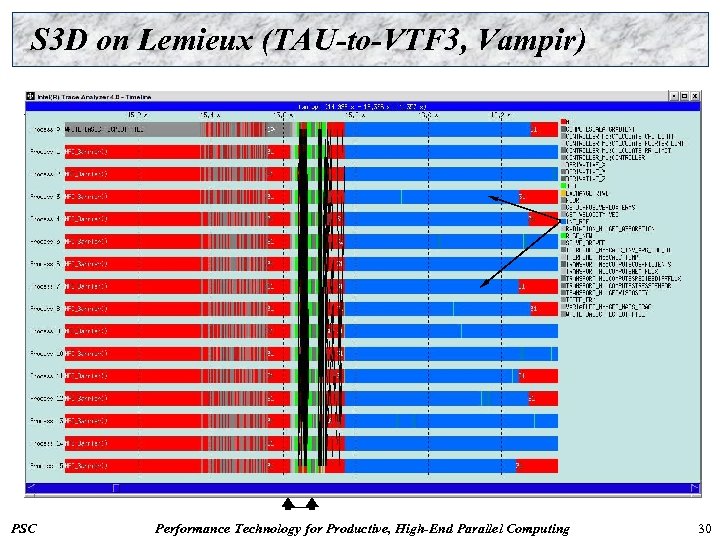

S3D on Lemieux (TAU-to-VTF3, Vampir)

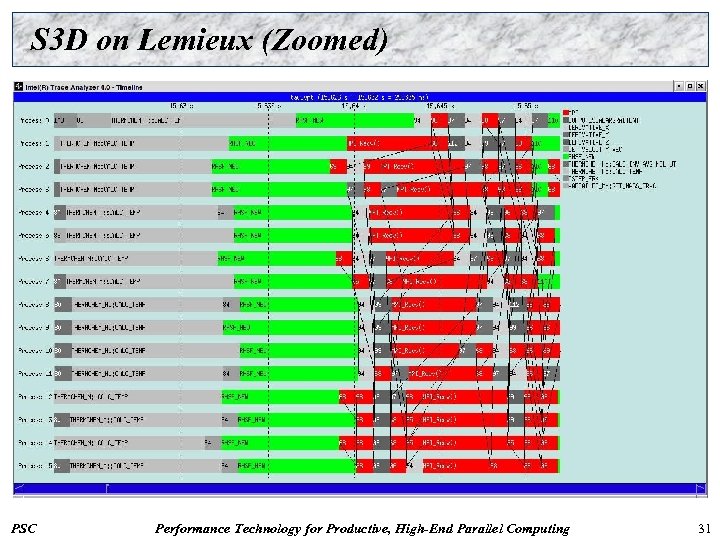

S3D on Lemieux (Zoomed)

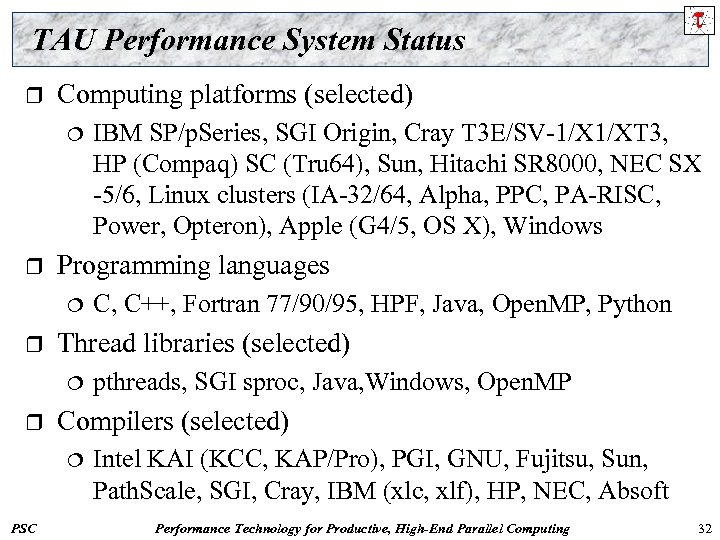

TAU Performance System Status

- Computing platforms (selected)
  - IBM SP/pSeries, SGI Origin, Cray T3E/SV-1/X1/XT3, HP (Compaq) SC (Tru64), Sun, Hitachi SR8000, NEC SX-5/6, Linux clusters (IA-32/64, Alpha, PPC, PA-RISC, Power, Opteron), Apple (G4/5, OS X), Windows
- Programming languages
  - C, C++, Fortran 77/90/95, HPF, Java, OpenMP, Python
- Thread libraries (selected)
  - pthreads, SGI sproc, Java, Windows, OpenMP
- Compilers (selected)
  - Intel KAI (KCC, KAP/Pro), PGI, GNU, Fujitsu, Sun, PathScale, SGI, Cray, IBM (xlc, xlf), HP, NEC, Absoft

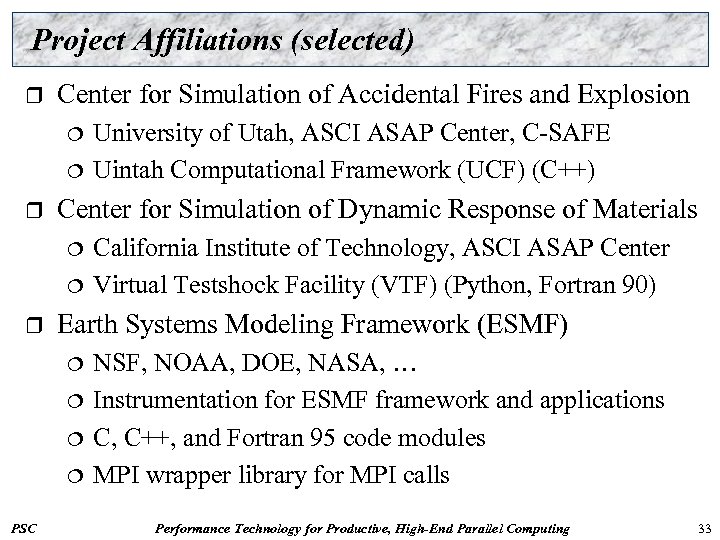

Project Affiliations (selected)

- Center for Simulation of Accidental Fires and Explosion
  - University of Utah, ASCI ASAP Center, C-SAFE
  - Uintah Computational Framework (UCF) (C++)
- Center for Simulation of Dynamic Response of Materials
  - California Institute of Technology, ASCI ASAP Center
  - Virtual Testshock Facility (VTF) (Python, Fortran 90)
- Earth Systems Modeling Framework (ESMF)
  - NSF, NOAA, DOE, NASA, …
  - Instrumentation for ESMF framework and applications
    - C, C++, and Fortran 95 code modules
    - MPI wrapper library for MPI calls

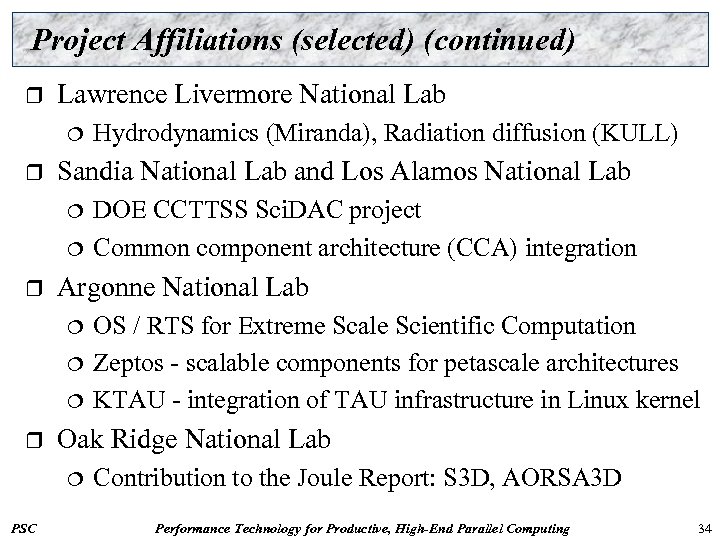

Project Affiliations (selected) (continued)

- Lawrence Livermore National Lab
  - Hydrodynamics (Miranda), Radiation diffusion (KULL)
- Sandia National Lab and Los Alamos National Lab
  - DOE CCTTSS SciDAC project
  - Common component architecture (CCA) integration
- Argonne National Lab
  - OS / RTS for Extreme Scale Scientific Computation
  - Zeptos - scalable components for petascale architectures
  - KTAU - integration of TAU infrastructure in Linux kernel
- Oak Ridge National Lab
  - Contribution to the Joule Report: S3D, AORSA3D

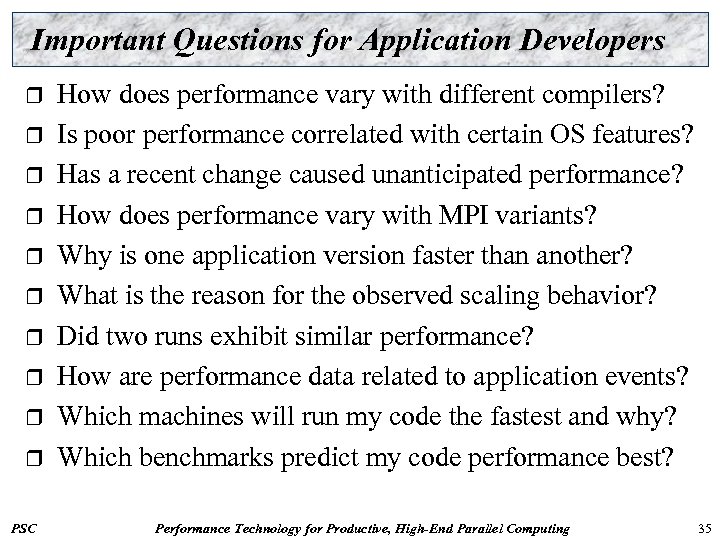

Important Questions for Application Developers

- How does performance vary with different compilers?
- Is poor performance correlated with certain OS features?
- Has a recent change caused unanticipated performance?
- How does performance vary with MPI variants?
- Why is one application version faster than another?
- What is the reason for the observed scaling behavior?
- Did two runs exhibit similar performance?
- How are performance data related to application events?
- Which machines will run my code the fastest and why?
- Which benchmarks predict my code performance best?

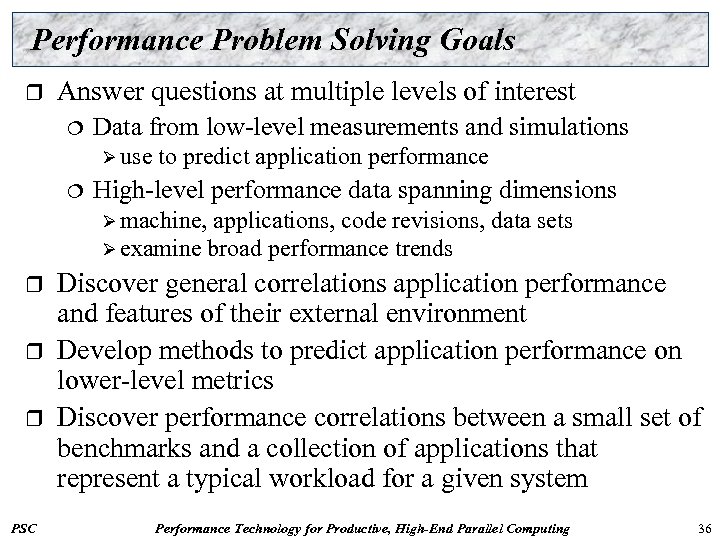

Performance Problem Solving Goals

- Answer questions at multiple levels of interest
  - Data from low-level measurements and simulations
    - use to predict application performance
  - High-level performance data spanning dimensions
    - machine, applications, code revisions, data sets
    - examine broad performance trends
- Discover general correlations between application performance and features of their external environment
- Develop methods to predict application performance from lower-level metrics
- Discover performance correlations between a small set of benchmarks and a collection of applications that represent a typical workload for a given system
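
One simple way to picture the last goal is to rank benchmarks by how strongly their runtimes across machines correlate with an application's runtimes. The sketch below uses a plain Pearson correlation for that purpose; it is an illustrative assumption, not a method named in the slides, and the benchmark names and timings are invented.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences.

    Assumes both sequences have non-zero variance.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Invented runtimes (seconds) on four hypothetical machines.
benchmark_times = {
    "bench_a": [10.0, 20.0, 30.0, 40.0],
    "bench_b": [40.0, 10.0, 30.0, 20.0],
}
application_times = [21.0, 41.0, 61.0, 81.0]

scores = {
    name: pearson(times, application_times)
    for name, times in benchmark_times.items()
}
# The benchmark with the largest absolute correlation tracks the application best.
best = max(scores, key=lambda name: abs(scores[name]))
print(best, scores)
```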

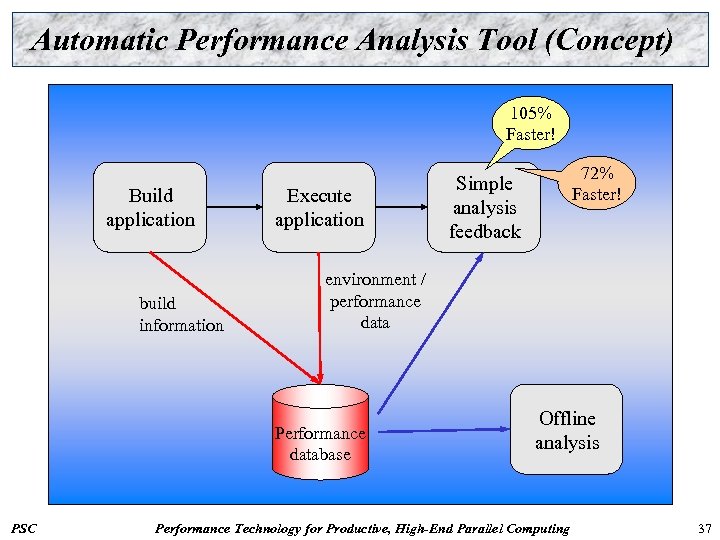

Automatic Performance Analysis Tool (Concept)

Diagram labels:
- Build application: build information
- Execute application: environment / performance data
- Performance database
- Offline analysis
- Simple analysis feedback ("105% Faster!", "72% Faster!")

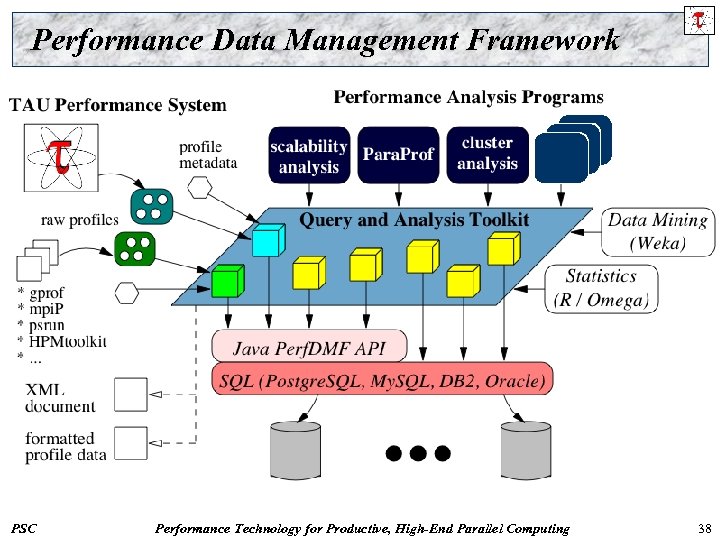

Performance Data Management Framework

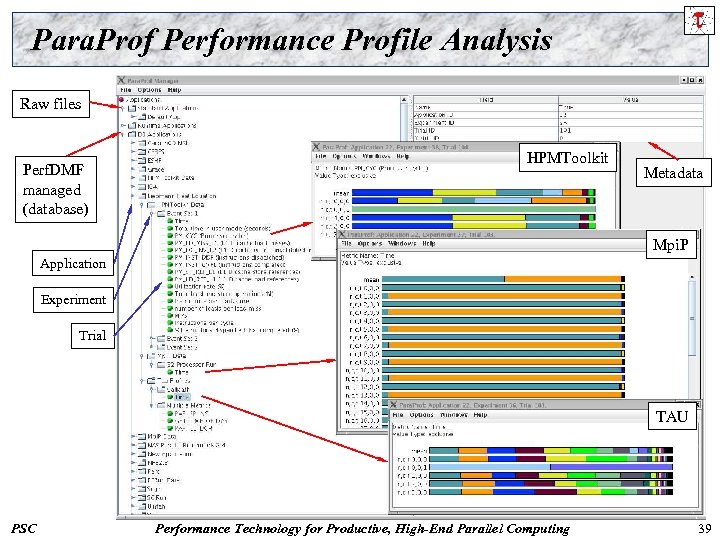

ParaProf Performance Profile Analysis

Diagram labels:
- Raw files: HPMToolkit, mpiP, TAU
- PerfDMF managed (database): Metadata, Application, Experiment, Trial
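
The Application / Experiment / Trial labels suggest a three-level hierarchy for organizing profiles from different tools. The sketch below models that hierarchy with plain Python dataclasses; the class fields, the `trials_from` helper, and the sample names are assumptions for illustration and do not reflect PerfDMF's actual schema or API.

```python
from dataclasses import dataclass, field


@dataclass
class Trial:
    """One measured run; source_format names the tool that produced it."""
    name: str
    source_format: str  # e.g. "TAU", "mpiP", "HPMToolkit"
    metadata: dict = field(default_factory=dict)


@dataclass
class Experiment:
    """A group of related trials, such as a scaling study."""
    name: str
    trials: list = field(default_factory=list)


@dataclass
class Application:
    """Top of the hierarchy: an application and all of its experiments."""
    name: str
    experiments: list = field(default_factory=list)

    def trials_from(self, source_format):
        # Collect every trial, in any experiment, produced by one tool.
        return [
            trial
            for experiment in self.experiments
            for trial in experiment.trials
            if trial.source_format == source_format
        ]


app = Application("example_app")  # hypothetical application name
scaling = Experiment("scaling study")
scaling.trials.append(Trial("64 processes", "TAU", {"processes": 64}))
scaling.trials.append(Trial("128 processes", "mpiP", {"processes": 128}))
app.experiments.append(scaling)

print([trial.name for trial in app.trials_from("TAU")])
```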

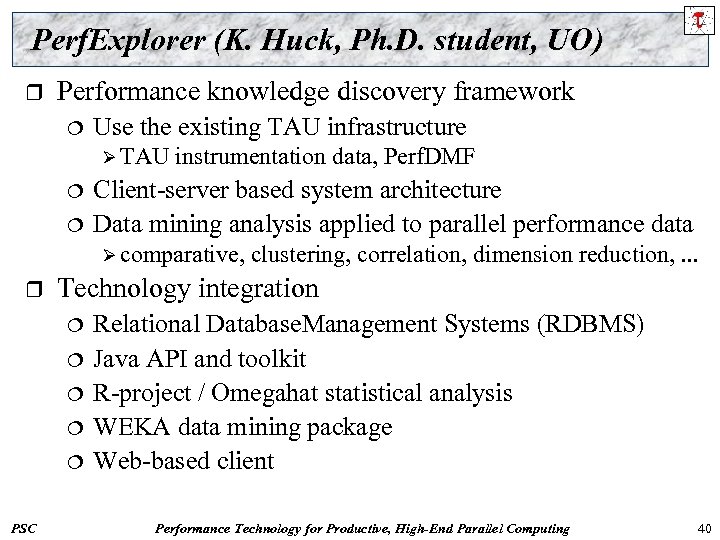

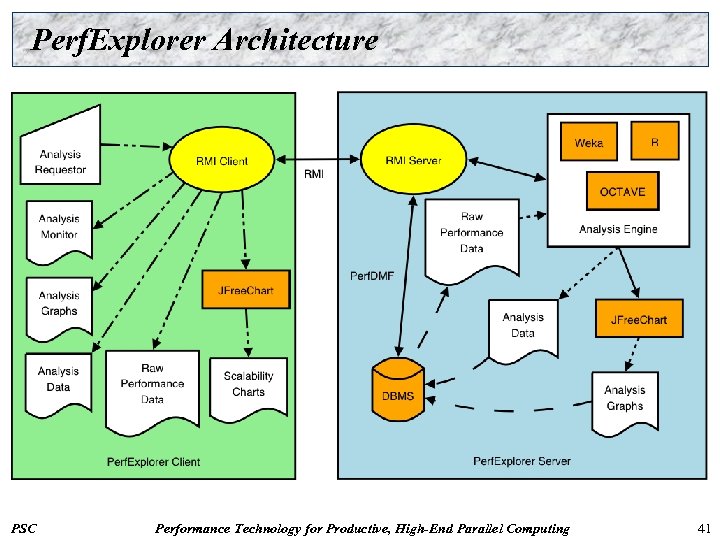

PerfExplorer (K. Huck, Ph.D. student, UO)

- Performance knowledge discovery framework
  - Use the existing TAU infrastructure
    - TAU instrumentation data, PerfDMF
  - Client-server based system architecture
  - Data mining analysis applied to parallel performance data
    - comparative, clustering, correlation, dimension reduction, ...
- Technology integration
  - Relational Database Management Systems (RDBMS)
  - Java API and toolkit
  - R-project / Omegahat statistical analysis
  - WEKA data mining package
  - Web-based client

PerfExplorer Architecture

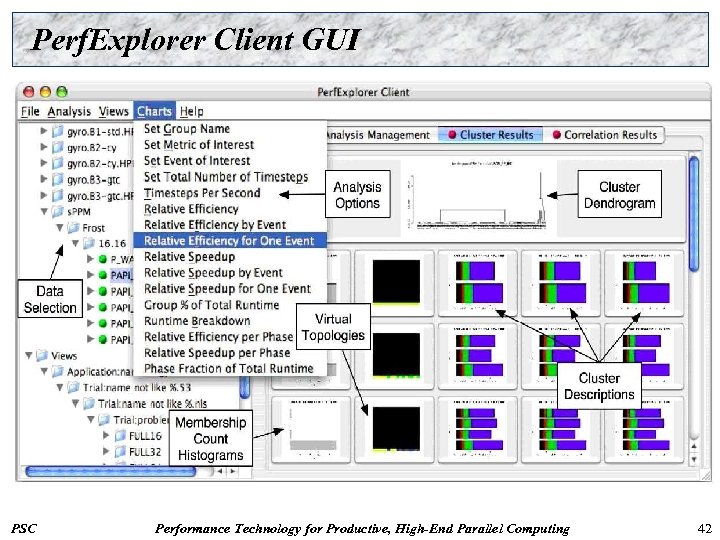

PerfExplorer Client GUI

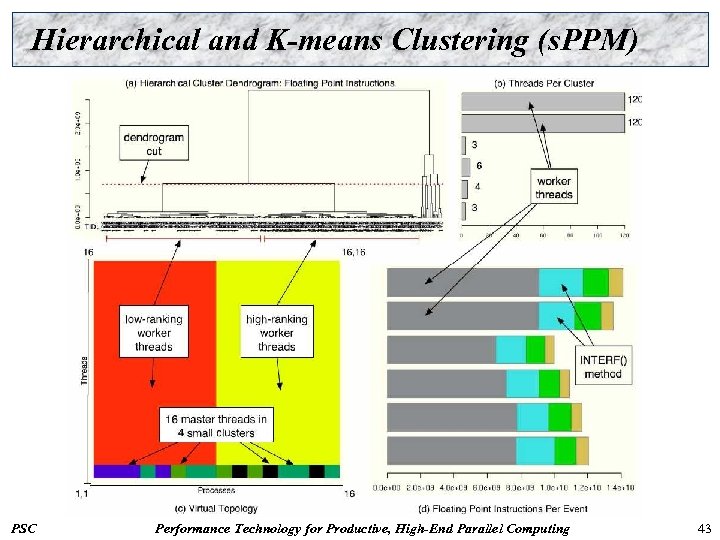

Hierarchical and K-means Clustering (sPPM)
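
To make the k-means idea concrete, the sketch below groups per-thread profile vectors with a small pure-Python version of Lloyd's algorithm. It is a stand-in for illustration, not PerfExplorer's implementation; the `kmeans` function, its choice of the first `k` points as starting centroids, and the thread timings are all assumptions.

```python
def kmeans(points, k, iterations=20):
    """Cluster points with Lloyd's algorithm.

    Simplification for this sketch: the first k points seed the centroids.
    Returns (cluster label per point, list of centroids).
    """
    centroids = [list(point) for point in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        for i, point in enumerate(points):
            labels[i] = min(
                range(k),
                key=lambda c: sum(
                    (a - b) ** 2 for a, b in zip(point, centroids[c])
                ),
            )
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Invented per-thread vectors: (compute seconds, MPI seconds).
threads = [
    (9.0, 1.0), (2.0, 8.0), (8.5, 1.5),
    (1.5, 8.5), (9.5, 0.5), (2.5, 7.5),
]
labels, centers = kmeans(threads, k=2)
print(labels)
print(centers)
```

Threads that land in the same cluster behave similarly, so a display for thousands of processors can summarize each cluster instead of drawing every thread.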

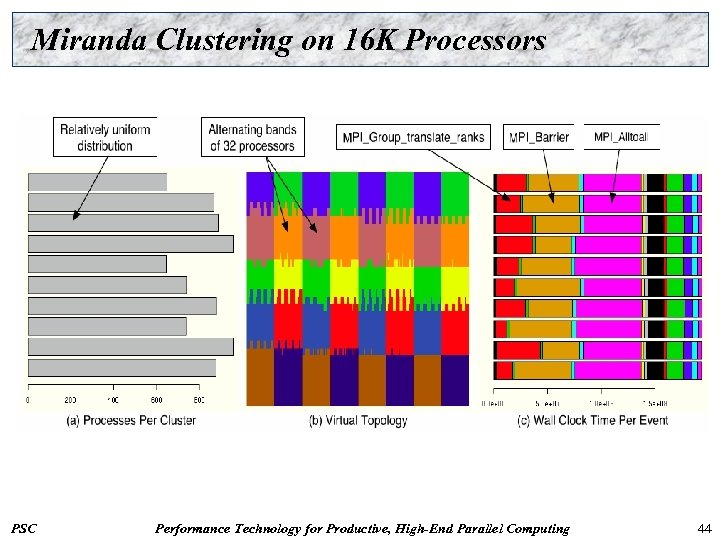

Miranda Clustering on 16K Processors

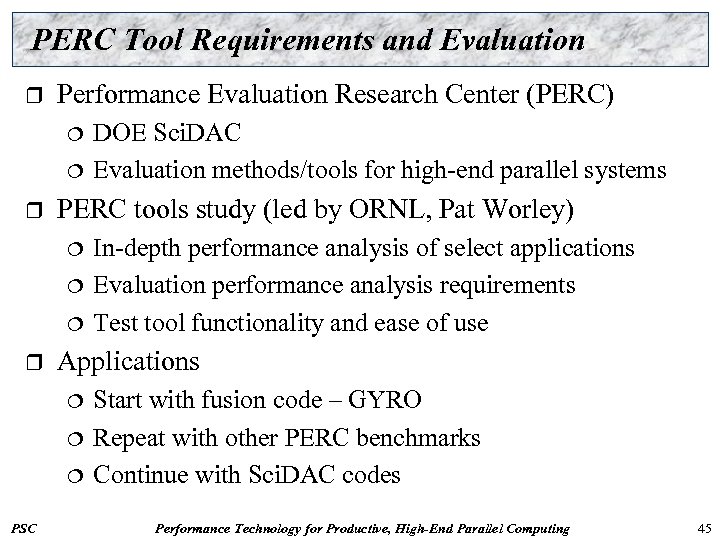

PERC Tool Requirements and Evaluation r Performance Evaluation Research Center (PERC) ¦ ¦ r PERC tools study (led by ORNL, Pat Worley) ¦ ¦ ¦ r In-depth performance analysis of select applications Evaluation performance analysis requirements Test tool functionality and ease of use Applications ¦ ¦ ¦ PSC DOE Sci. DAC Evaluation methods/tools for high-end parallel systems Start with fusion code – GYRO Repeat with other PERC benchmarks Continue with Sci. DAC codes Performance Technology for Productive, High-End Parallel Computing 45

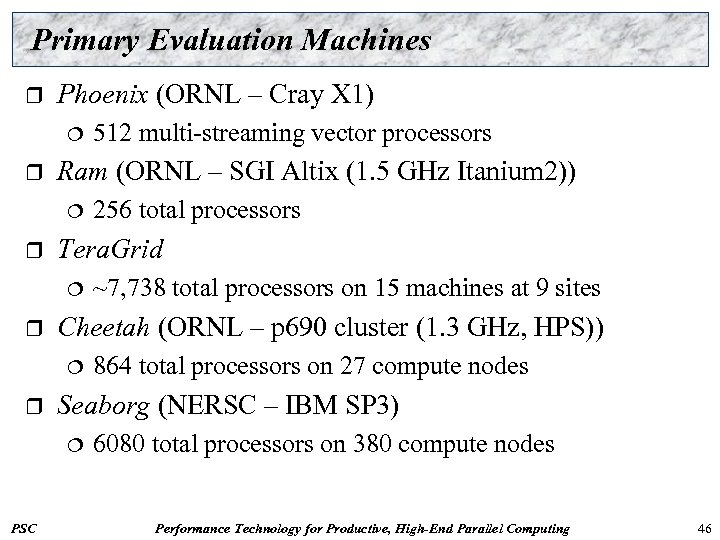

Primary Evaluation Machines
- Phoenix (ORNL, Cray X1)
  - 512 multi-streaming vector processors
- Ram (ORNL, SGI Altix (1.5 GHz Itanium 2))
  - 256 total processors
- TeraGrid
  - ~7,738 total processors on 15 machines at 9 sites
- Cheetah (ORNL, p690 cluster (1.3 GHz, HPS))
  - 864 total processors on 27 compute nodes
- Seaborg (NERSC, IBM SP3)
  - 6080 total processors on 380 compute nodes

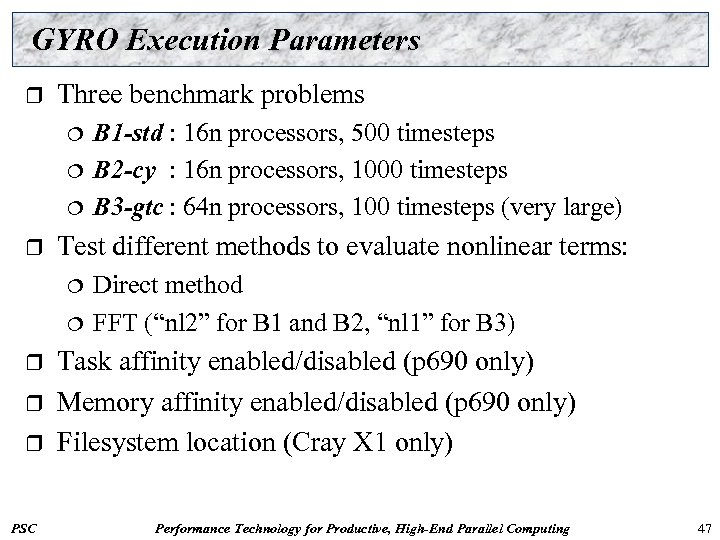

GYRO Execution Parameters
- Three benchmark problems
  - B1-std: 16n processors, 500 timesteps
  - B2-cy: 16n processors, 1000 timesteps
  - B3-gtc: 64n processors, 100 timesteps (very large)
- Test different methods to evaluate nonlinear terms:
  - Direct method
  - FFT ("nl2" for B1 and B2, "nl1" for B3)
- Task affinity enabled/disabled (p690 only)
- Memory affinity enabled/disabled (p690 only)
- Filesystem location (Cray X1 only)

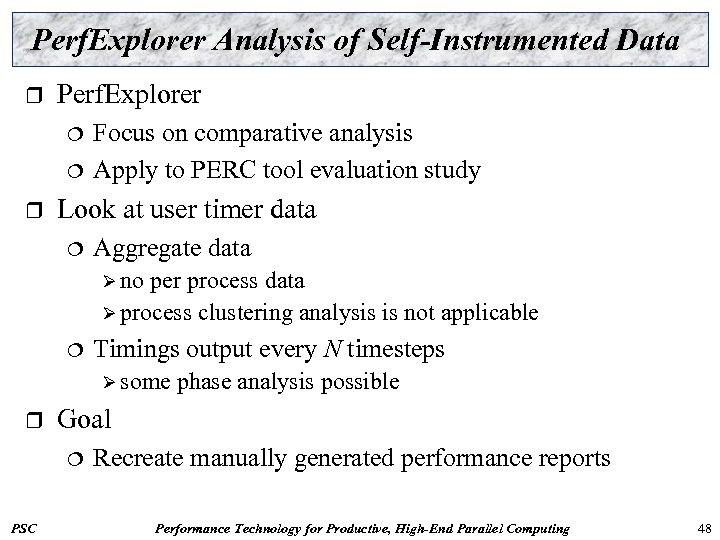

PerfExplorer Analysis of Self-Instrumented Data
- PerfExplorer
  - Focus on comparative analysis
  - Apply to PERC tool evaluation study
- Look at user timer data
  - Aggregate data
    - no per-process data
    - process clustering analysis is not applicable
  - Timings output every N timesteps
    - some phase analysis possible
- Goal
  - Recreate manually generated performance reports

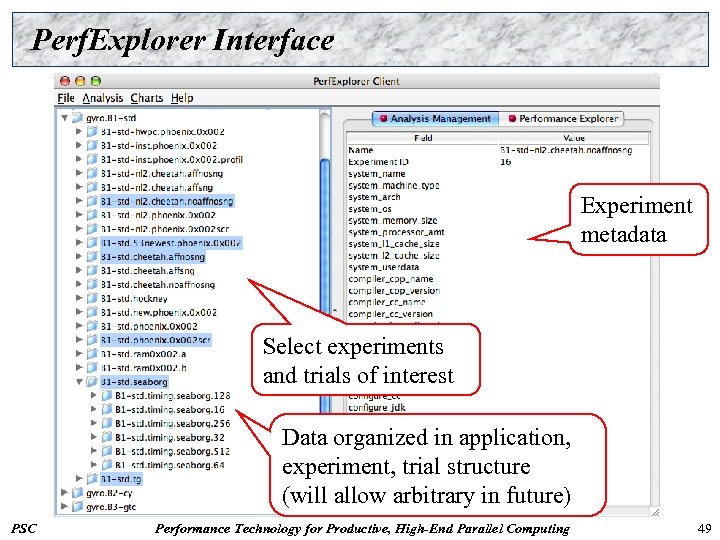

PerfExplorer Interface
- Experiment metadata
- Select experiments and trials of interest
- Data organized in application, experiment, trial structure (arbitrary structures will be allowed in future)

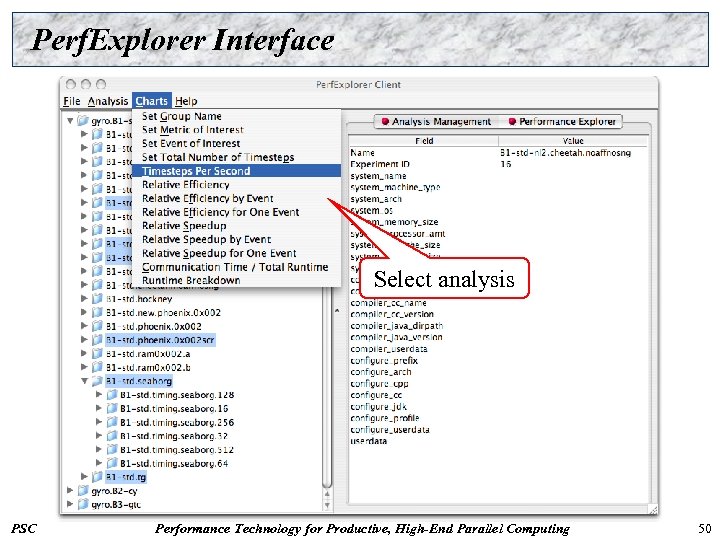
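
The application, experiment, trial organization described on this slide can be pictured as a small hierarchy. The sketch below is only an illustration of that shape. The class names, fields, and the `select_trials` helper are hypothetical and do not reflect the actual PerfDMF schema or PerfExplorer API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Trial:
    """One measured run (hypothetical fields)."""
    name: str
    processors: int
    metadata: Dict[str, str] = field(default_factory=dict)


@dataclass
class Experiment:
    """A group of related trials, e.g. one benchmark problem."""
    name: str
    trials: List[Trial] = field(default_factory=list)


@dataclass
class Application:
    """Top of the hierarchy: one application with its experiments."""
    name: str
    experiments: List[Experiment] = field(default_factory=list)


def select_trials(app: Application, experiment_names: Set[str],
                  min_processors: int = 0) -> List[Trial]:
    """Return trials from the named experiments at or above a processor count."""
    return [trial
            for experiment in app.experiments
            if experiment.name in experiment_names
            for trial in experiment.trials
            if trial.processors >= min_processors]


# Illustrative data only; trial names and machine metadata are made up.
gyro = Application("GYRO", [
    Experiment("B1-std", [Trial("p16", 16, {"machine": "Cheetah"}),
                          Trial("p32", 32, {"machine": "Cheetah"})]),
    Experiment("B3-gtc", [Trial("p64", 64, {"machine": "Phoenix"})]),
])
selected = select_trials(gyro, {"B1-std"}, min_processors=32)
print([trial.name for trial in selected])
```

Selecting by experiment name and then filtering trials mirrors the "select experiments and trials of interest" step before an analysis is chosen.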

PerfExplorer Interface
- Select analysis

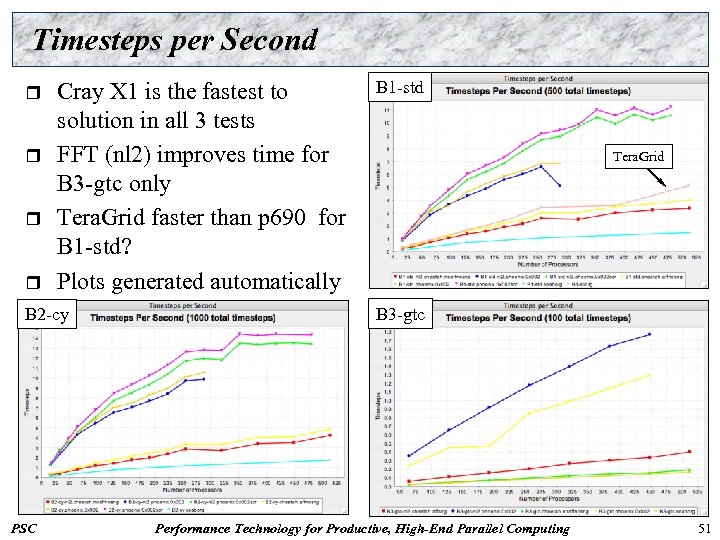

Timesteps per Second
- Cray X1 is the fastest to solution in all 3 tests
- FFT (nl2) improves time for B3-gtc only
- TeraGrid faster than p690 for B1-std?
- Plots generated automatically

Plot labels: B1-std, B2-cy, B3-gtc, TeraGrid

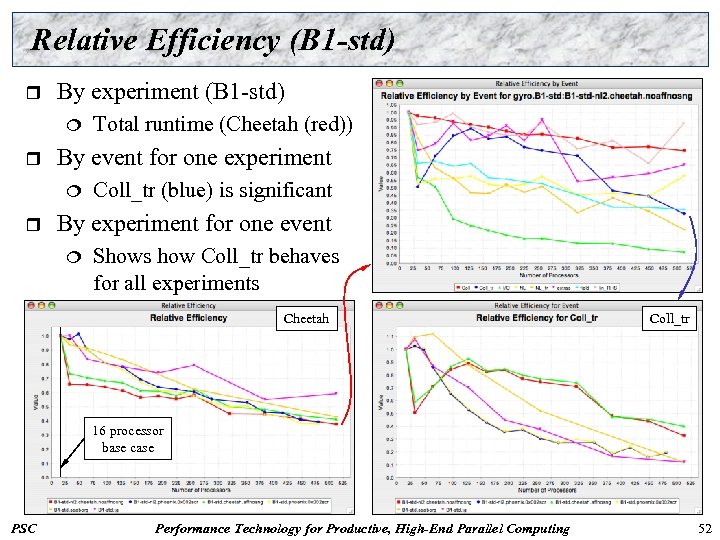
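
The metric in this slide's title is a simple rate: timesteps completed divided by wall-clock seconds. The sketch below shows the arithmetic using the timestep counts listed on the GYRO Execution Parameters slide. The function name and the wall-clock value are hypothetical, and no measured result from the study is reproduced here.

```python
# Timestep counts per benchmark, as listed on the GYRO Execution Parameters slide.
TIMESTEPS = {"B1-std": 500, "B2-cy": 1000, "B3-gtc": 100}


def timesteps_per_second(benchmark: str, wall_seconds: float) -> float:
    """Throughput for one run: timesteps completed per second of wall-clock time."""
    if wall_seconds <= 0:
        raise ValueError("wall_seconds must be positive")
    return TIMESTEPS[benchmark] / wall_seconds


# Hypothetical wall-clock time, chosen only to make the arithmetic easy to follow.
rate = timesteps_per_second("B1-std", wall_seconds=250.0)
print(rate)
```

Because the three benchmarks run different numbers of timesteps, a rate is easier to compare across problems and machines than raw runtime.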

Relative Efficiency (B1-std)
- By experiment (B1-std)
  - Total runtime (Cheetah (red))
- By event for one experiment
  - Coll_tr (blue) is significant
- By experiment for one event
  - Shows how Coll_tr behaves for all experiments

Plot labels: Cheetah, Coll_tr, 16 processor base case

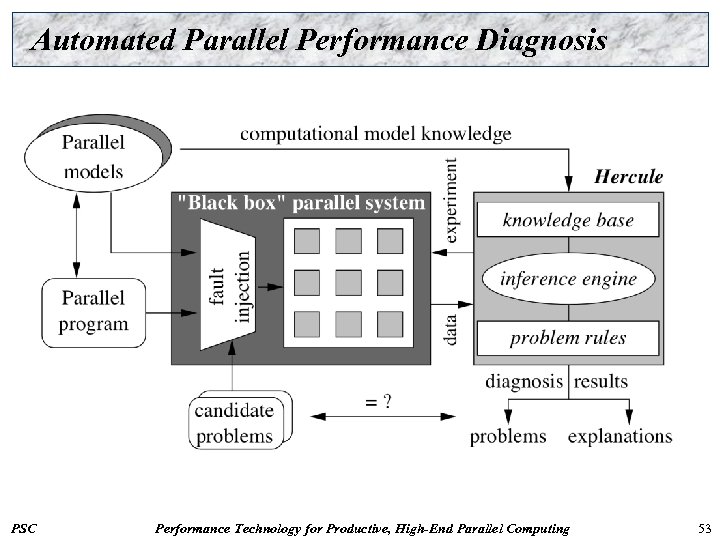
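
The slide does not state how relative efficiency is computed. The sketch below assumes the common definition against a base case, E(p) = (T_base × p_base) / (T_p × p), with the 16-processor run as the base, as the plot label suggests. The function name and all timing values are hypothetical.

```python
def relative_efficiency(base_procs: int, base_time: float,
                        procs: int, time: float) -> float:
    """Efficiency relative to a base run: 1.0 means perfect scaling from the base."""
    return (base_time * base_procs) / (time * procs)


# Hypothetical total runtimes in seconds, keyed by processor count.
timings = {16: 400.0, 32: 210.0, 64: 120.0}
base = 16

efficiency = {
    procs: relative_efficiency(base, timings[base], procs, seconds)
    for procs, seconds in sorted(timings.items())
}
for procs, value in efficiency.items():
    print(f"{procs:3d} processors: {value:.3f}")
```

The same function applies to a single event by substituting that event's time for total runtime, which corresponds to the per-event view the slide shows for Coll_tr.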

Automated Parallel Performance Diagnosis

Current and Future Work
- ParaProf
  - Developing phase-based performance displays
- PerfDMF
  - Adding new database backends and distributed support
  - Building support for user-created tables
- PerfExplorer
  - Extending comparative and clustering analysis
  - Adding new data mining capabilities
  - Building in scripting support
- Performance regression testing tool (PerfRegress)
- Integrate in Eclipse Parallel Tools Platform (PTP)

Concluding Discussion
- Performance tools must be used effectively
- More intelligent performance systems for productive use
  - Evolve to application-specific performance technology
  - Deal with scale by "full range" performance exploration
  - Autonomic and integrated tools
  - Knowledge-based and knowledge-driven process
- Performance observation methods do not necessarily need to change in a fundamental sense
  - More automatically controlled and efficiently used
- Develop next-generation tools and deliver to community
- Open source with support by ParaTools, Inc.

Support Acknowledgements
- Department of Energy (DOE)
  - Office of Science contracts
  - University of Utah ASCI Level 1 sub-contract
  - ASC/NNSA Level 3 contract
- NSF
  - High-End Computing Grant
- Research Centre Juelich
  - John von Neumann Institute
  - Dr. Bernd Mohr
- Los Alamos National Laboratory
