- Number of slides: 50

Performance Engineering Research Institute (DOE SciDAC)

Presented by Katherine Yelick, LBNL and UC Berkeley

LACSI '06, Performance Engineering Research Institute, www.peri-scidac.org

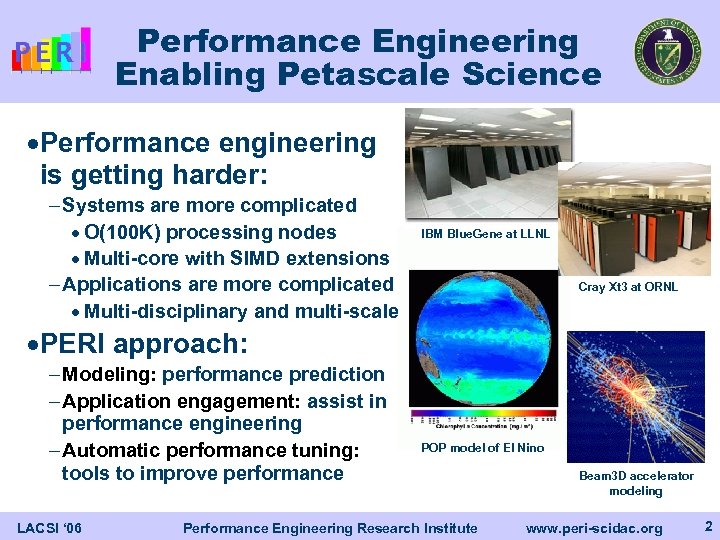

Performance Engineering Enabling Petascale Science

- Performance engineering is getting harder:
  - Systems are more complicated
    - O(100K) processing nodes
    - Multi-core with SIMD extensions
  - Applications are more complicated
    - Multi-disciplinary and multi-scale
- PERI approach:
  - Modeling: performance prediction
  - Application engagement: assist in performance engineering
  - Automatic performance tuning: tools to improve performance

Figure captions: IBM BlueGene at LLNL; Cray XT3 at ORNL; POP model of El Nino; Beam3D accelerator modeling

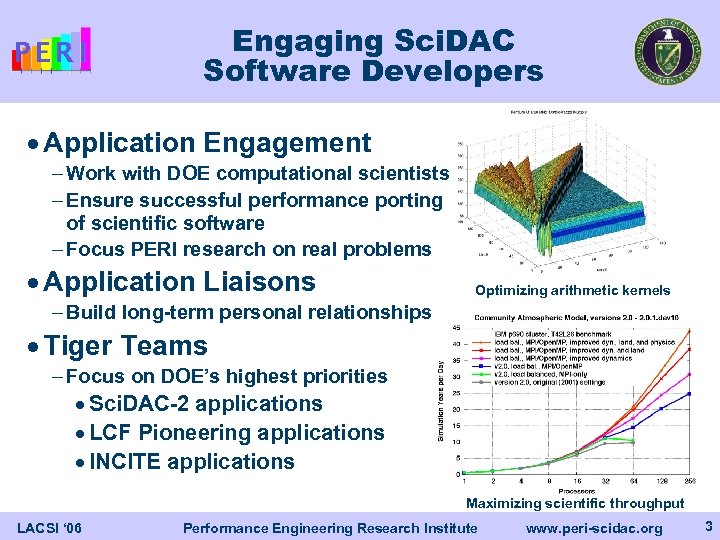

Engaging SciDAC Software Developers

- Application Engagement
  - Work with DOE computational scientists
  - Ensure successful performance porting of scientific software
  - Focus PERI research on real problems
- Application Liaisons
  - Build long-term personal relationships
- Tiger Teams
  - Focus on DOE's highest priorities
    - SciDAC-2 applications
    - LCF Pioneering applications
    - INCITE applications

Figure captions: Optimizing arithmetic kernels; Maximizing scientific throughput

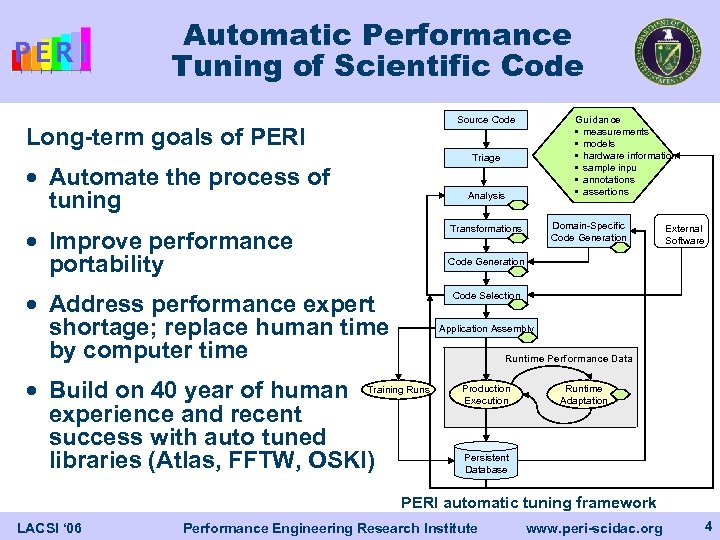

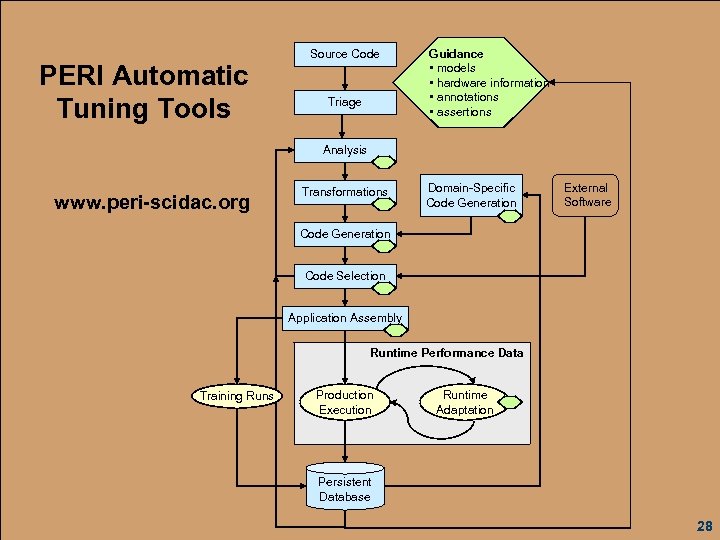

Automatic Performance Tuning of Scientific Code

Long-term goals of PERI:

- Automate the process of tuning
- Improve performance portability
- Address performance expert shortage; replace human time by computer time
- Build on 40 years of human experience and recent success with auto-tuned libraries (ATLAS, FFTW, OSKI)

Diagram (PERI automatic tuning framework): Source Code, Triage, Analysis, Transformations, Domain-Specific Code Generation, External Software, Code Generation, Code Selection, Application Assembly, Training Runs, Production Execution, Runtime Adaptation, Runtime Performance Data, Persistent Database

Guidance inputs: measurements, models, hardware information, sample input, annotations, assertions

Participating Institutions

Lead PI: Bob Lucas

Institutions:

- Argonne National Laboratory
- Lawrence Berkeley National Laboratory
- Lawrence Livermore National Laboratory
- Oak Ridge National Laboratory
- Rice University
- University of California at San Diego
- University of Maryland
- University of North Carolina
- University of Southern California
- University of Tennessee

Major Tuning Activities in PERI

- Triage: discover tuning targets
  - Identifying bottlenecks (HPCToolkit)
  - Use hardware events (PAPI)
- Library-based tuning
  - Dense linear algebra (ATLAS)
  - Sparse linear algebra (OSKI)
  - Stencil operations
- Application-based tuning
  - Parameterized applications (Active Harmony)
  - Automatic source-based tuning (ROSE and CG)

Triage Tools: HPCToolkit

Goal: Discover tuning opportunities (Rice)

Features of HPCToolkit:

- Ease of use
  - No manual code instrumentation; handles large multi-lingual codes
- Perform detailed measurements
  - Both communication and computation
  - Many granularities: node, core, procedure, loop, and statement
- Identify inefficiencies in code:
  - Parallel inefficiencies: load imbalance, communication overhead, etc.
  - Computation inefficiencies: pipeline stalls, memory bottlenecks, etc.

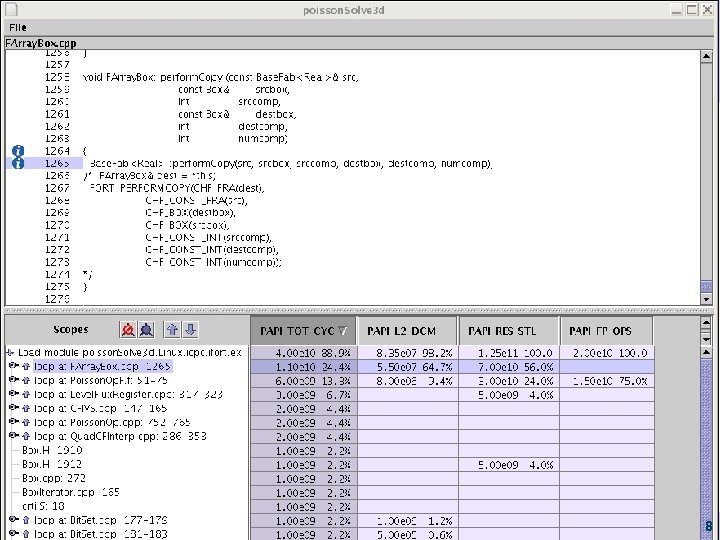

On-line Hardware Monitoring: PAPI

Goal: machine-independent Performance API (UTK)

- Multi-substrate support recently added to PAPI
- Enables simultaneous monitoring of
  - On-processor counters
  - Off-processor counters (e.g., network counters)
  - Temperature sensors
  - Heterogeneous multi-core hybrid systems
- Online monitoring will help enable runtime tuning

Major Tuning Activities in PERI

- Triage: discover tuning targets
  - Identifying bottlenecks (HPCToolkit)
  - Use hardware events (PAPI)
- Library-based tuning
  - Dense linear algebra (ATLAS)
  - Sparse linear algebra (OSKI)
  - Stencil operations
- Application-based tuning
  - Parameterized applications (Active Harmony)
  - Automatic source-based tuning (ROSE and CG)

Dense Linear Algebra: ATLAS

Goal: Auto-tuning for dense linear algebra (UTK)

ATLAS features and plans:

- Performance portability across processors
- Massively multi-threaded and multi-core architectures, which require
  - Asynchrony (e.g., lookahead)
  - Modern vectorization (SIMD extensions)
  - Hiding of memory latency
  - Overlap of communication with computation
- Hand techniques being automated
- Better search algorithms and parallel search
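
The kind of tunable kernel that an empirical search explores can be sketched as a tiled matrix multiply whose tile size is a parameter. This is a minimal illustration under assumed names, not ATLAS code: `matmul_tiled`, `min_sz`, and the parameter `nb` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Smaller of two sizes; used to clip tiles at the matrix edge. */
static size_t min_sz(size_t a, size_t b) { return a < b ? a : b; }

/* C += A * B for row-major n x n matrices, processed in nb x nb tiles.
 * nb is the tuning parameter an empirical search would vary
 * (hypothetical name; not part of any library API). */
void matmul_tiled(size_t n, size_t nb,
                  const double *A, const double *B, double *C)
{
    assert(nb > 0);
    for (size_t ii = 0; ii < n; ii += nb)
        for (size_t kk = 0; kk < n; kk += nb)
            for (size_t jj = 0; jj < n; jj += nb)
                for (size_t i = ii; i < min_sz(ii + nb, n); i++)
                    for (size_t k = kk; k < min_sz(kk + nb, n); k++) {
                        double a = A[i * n + k];
                        /* Unit-stride inner loop over one row of the tile. */
                        for (size_t j = jj; j < min_sz(jj + nb, n); j++)
                            C[i * n + j] += a * B[k * n + j];
                    }
}
```

An auto-tuner would time this routine for several values of `nb` on the target machine and keep the fastest.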

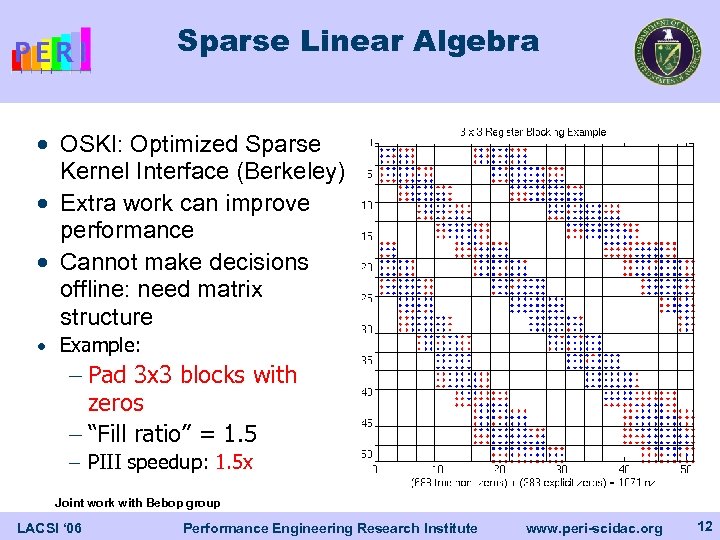

Sparse Linear Algebra

- OSKI: Optimized Sparse Kernel Interface (Berkeley)
- Extra work can improve performance
- Cannot make decisions offline: need matrix structure
- Example:
  - Pad 3x3 blocks with zeros
  - "Fill ratio" = 1.5
  - PIII speedup: 1.5x

Joint work with the BeBOP group
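
The fill ratio in the example above is the number of stored entries after padding divided by the number of true non-zeros. A minimal sketch of how it could be computed for a CSR matrix follows; `fill_ratio` and its argument layout are assumptions for illustration, not OSKI's interface.

```c
#include <assert.h>
#include <stdlib.h>

/* Fill ratio of r x c register blocking for a CSR matrix:
 * (stored entries, including padded zeros) / (true non-zeros).
 * Hypothetical helper; returns -1.0 if memory allocation fails. */
double fill_ratio(int nrows, int ncols,
                  const int *rowptr, const int *colind, int r, int c)
{
    assert(nrows >= 0 && ncols > 0 && r > 0 && c > 0);
    int nbcols = (ncols + c - 1) / c;
    int *seen = malloc((size_t)nbcols * sizeof *seen);
    if (!seen) return -1.0;
    for (int j = 0; j < nbcols; j++) seen[j] = -1;

    long nblocks = 0;
    for (int i = 0; i < nrows; i++) {
        int brow = i / r;                 /* block row containing row i */
        for (int k = rowptr[i]; k < rowptr[i + 1]; k++) {
            int bcol = colind[k] / c;     /* block column of this entry */
            if (seen[bcol] != brow) {     /* first entry in this block */
                seen[bcol] = brow;
                nblocks++;
            }
        }
    }
    free(seen);

    int nnz = rowptr[nrows];
    return nnz > 0 ? (double)(nblocks * r * c) / nnz : 1.0;
}
```

For a 3x3 matrix with 6 non-zeros stored as a single 3x3 block, this gives 9/6 = 1.5, matching the figure on the slide.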

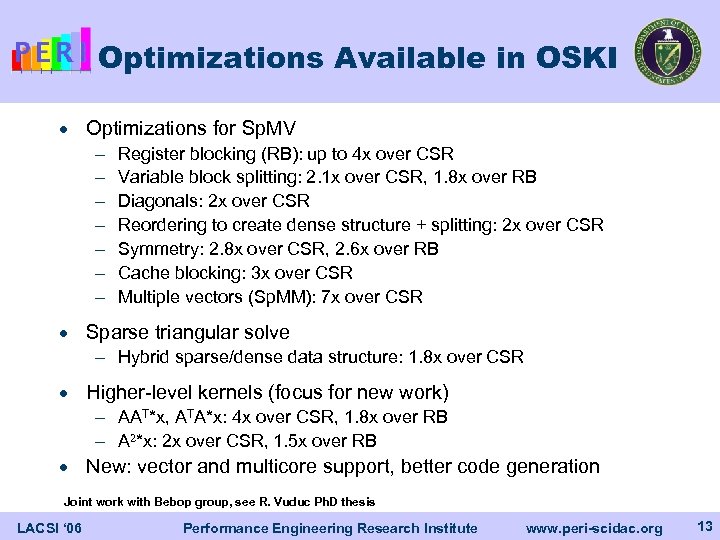

Optimizations Available in OSKI

- Optimizations for SpMV
  - Register blocking (RB): up to 4x over CSR
  - Variable block splitting: 2.1x over CSR, 1.8x over RB
  - Diagonals: 2x over CSR
  - Reordering to create dense structure + splitting: 2x over CSR
  - Symmetry: 2.8x over CSR, 2.6x over RB
  - Cache blocking: 3x over CSR
  - Multiple vectors (SpMM): 7x over CSR
- Sparse triangular solve
  - Hybrid sparse/dense data structure: 1.8x over CSR
- Higher-level kernels (focus for new work)
  - A*A^T*x, A^T*A*x: 4x over CSR, 1.8x over RB
  - A^k*x: 2x over CSR, 1.5x over RB
- New: vector and multicore support, better code generation

Joint work with the BeBOP group; see R. Vuduc's Ph.D. thesis
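
To make the CSR baseline and register blocking concrete, here is a minimal sketch of both SpMV kernels for a fixed 2x2 block size. The function names and array layouts are assumptions for illustration and are not taken from OSKI's generated code.

```c
#include <assert.h>

/* y += A*x with A in CSR (the baseline the speedups are measured against). */
void spmv_csr(int nrows, const int *rowptr, const int *colind,
              const double *val, const double *x, double *y)
{
    assert(nrows >= 0);
    for (int i = 0; i < nrows; i++) {
        double sum = y[i];
        for (int k = rowptr[i]; k < rowptr[i + 1]; k++)
            sum += val[k] * x[colind[k]];
        y[i] = sum;
    }
}

/* y += A*x with A in 2x2 block CSR. Each block is stored row-major as
 * 4 doubles (padded with explicit zeros where needed); bcolind holds
 * block-column indices. One column index is read per 4 values, and the
 * two partial sums stay in registers across the inner loop. */
void spmv_bcsr_2x2(int nbrows, const int *browptr, const int *bcolind,
                   const double *bval, const double *x, double *y)
{
    assert(nbrows >= 0);
    for (int I = 0; I < nbrows; I++) {
        double y0 = y[2 * I], y1 = y[2 * I + 1];
        for (int k = browptr[I]; k < browptr[I + 1]; k++) {
            const double *b = bval + 4 * k;
            double x0 = x[2 * bcolind[k]];
            double x1 = x[2 * bcolind[k] + 1];
            y0 += b[0] * x0 + b[1] * x1;
            y1 += b[2] * x0 + b[3] * x1;
        }
        y[2 * I] = y0;
        y[2 * I + 1] = y1;
    }
}
```

The blocked kernel does extra floating-point work on the padded zeros but reads fewer indices and has a fully unrolled block body, which is where the reported gains over CSR come from.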

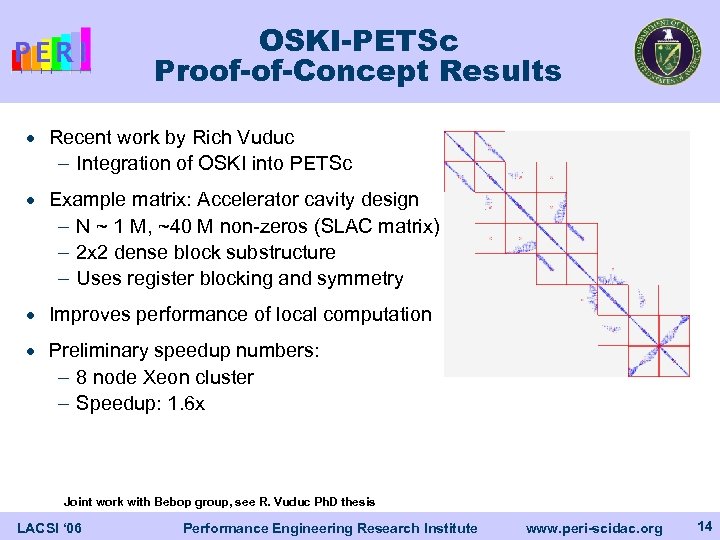

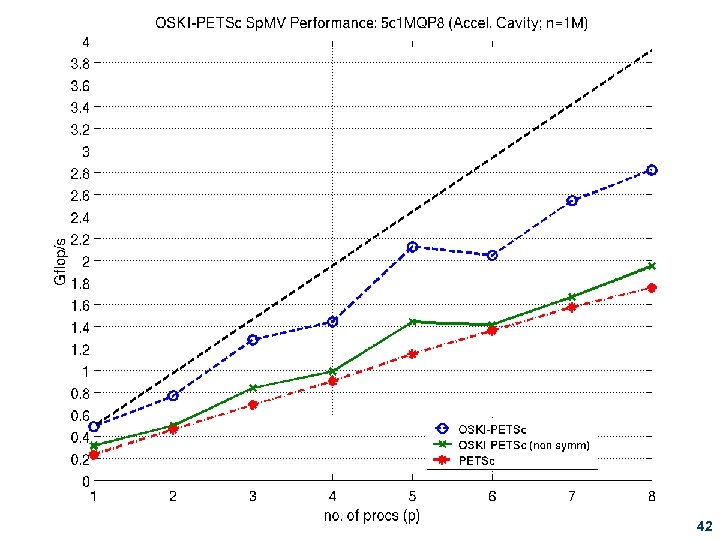

OSKI-PETSc Proof-of-Concept Results

- Recent work by Rich Vuduc
  - Integration of OSKI into PETSc
- Example matrix: accelerator cavity design
  - N ~ 1M, ~40M non-zeros (SLAC matrix)
  - 2x2 dense block substructure
  - Uses register blocking and symmetry
- Improves performance of local computation
- Preliminary speedup numbers:
  - 8-node Xeon cluster
  - Speedup: 1.6x

Joint work with the BeBOP group; see R. Vuduc's Ph.D. thesis

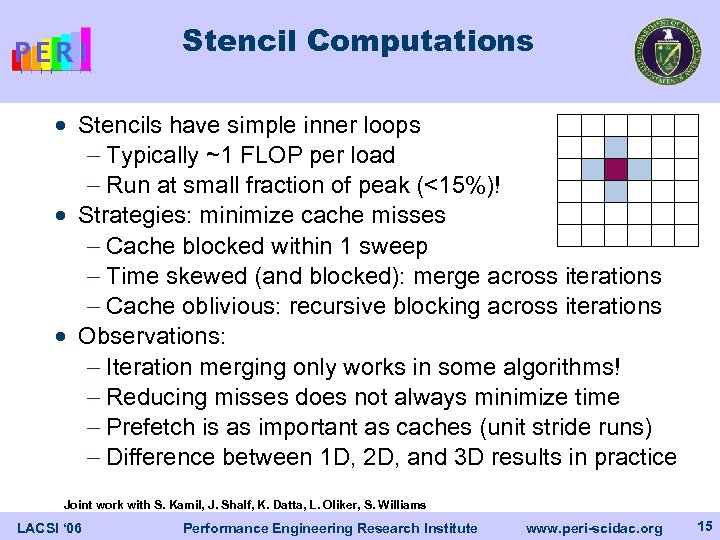

Stencil Computations

- Stencils have simple inner loops
  - Typically ~1 FLOP per load
  - Run at a small fraction of peak (<15%)!
- Strategies: minimize cache misses
  - Cache blocked within 1 sweep
  - Time skewed (and blocked): merge across iterations
  - Cache oblivious: recursive blocking across iterations
- Observations:
  - Iteration merging only works in some algorithms!
  - Reducing misses does not always minimize time
  - Prefetch is as important as caches (unit-stride runs)
  - Differences between 1D, 2D, and 3D results in practice

Joint work with S. Kamil, J. Shalf, K. Datta, L. Oliker, S. Williams
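
The first strategy, cache blocking within one sweep, can be sketched for a 2D 5-point Jacobi stencil as below. This is an illustrative example, not code from the study; the function names and the tile parameters `bi` and `bj` are assumptions.

```c
#include <assert.h>
#include <stddef.h>

/* One Jacobi sweep of a 5-point stencil over the interior of a
 * row-major n x n grid: roughly one flop per load. */
void sweep_naive(size_t n, const double *in, double *out)
{
    for (size_t i = 1; i + 1 < n; i++)
        for (size_t j = 1; j + 1 < n; j++)
            out[i * n + j] = 0.25 * (in[(i - 1) * n + j] + in[(i + 1) * n + j]
                                   + in[i * n + j - 1] + in[i * n + j + 1]);
}

/* The same sweep traversed in bi x bj tiles. The j loop stays innermost
 * so each tile row is a unit-stride run; very small bj shortens those
 * runs, which can hurt hardware prefetching. */
void sweep_blocked(size_t n, size_t bi, size_t bj,
                   const double *in, double *out)
{
    assert(bi > 0 && bj > 0);
    for (size_t ii = 1; ii + 1 < n; ii += bi)
        for (size_t jj = 1; jj + 1 < n; jj += bj) {
            size_t imax = ii + bi < n - 1 ? ii + bi : n - 1;
            size_t jmax = jj + bj < n - 1 ? jj + bj : n - 1;
            for (size_t i = ii; i < imax; i++)
                for (size_t j = jj; j < jmax; j++)
                    out[i * n + j] =
                        0.25 * (in[(i - 1) * n + j] + in[(i + 1) * n + j]
                              + in[i * n + j - 1] + in[i * n + j + 1]);
        }
}
```

Both routines compute identical results; only the traversal order differs, so the choice of `bi` and `bj` is purely a performance parameter.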

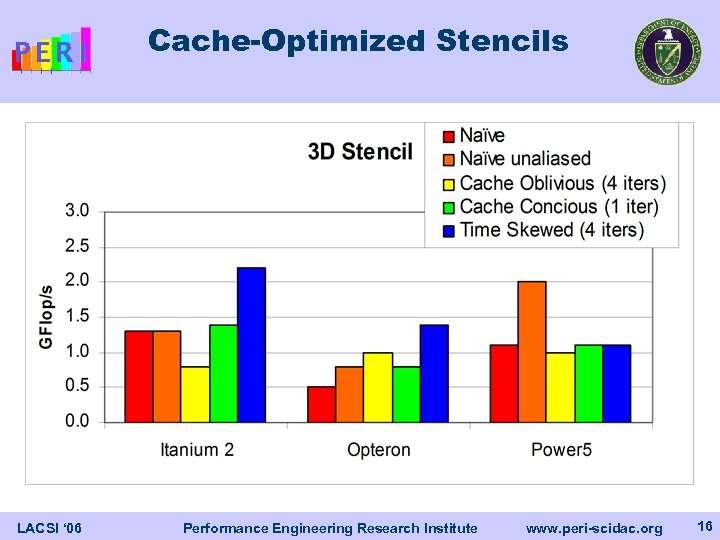

Cache-Optimized Stencils

Tuning for the Cell Architecture

- Cell will be used in the PS3: high volume
- Current system problems:
  - Off-chip bandwidth and power
  - Double-precision floating-point interface
    - ~14x slower than single precision
    - Problem for computationally intensive kernels (BLAS3)
    - Consider a variation called Cell+ that fixes this
- Memory system
  - Software-controlled memory (like explicit out of core)
  - Improves bandwidth and power usage
  - But increases programming complexity

Joint work with S. Williams, J. Shalf, L. Oliker, P. Husbands, S. Kamil

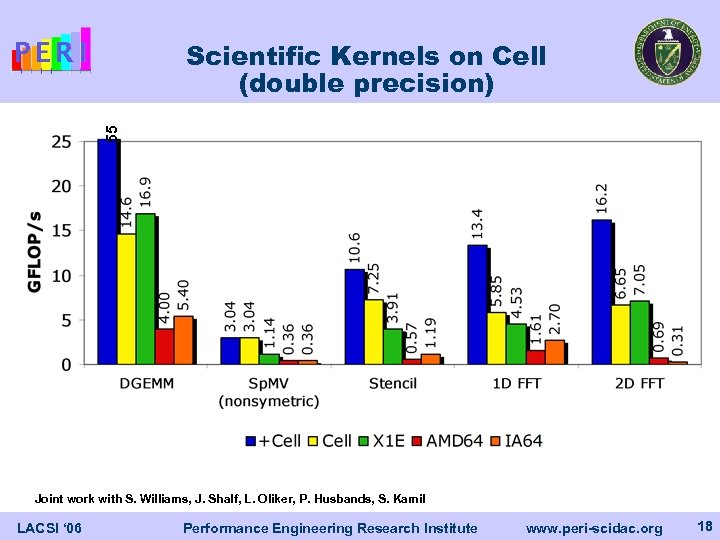

Scientific Kernels on Cell (double precision)

Joint work with S. Williams, J. Shalf, L. Oliker, P. Husbands, S. Kamil

Major Tuning Activities in PERI

- Triage: discover tuning targets
  - Identifying bottlenecks (HPCToolkit)
  - Use hardware events (PAPI)
- Library-based tuning
  - Dense linear algebra (ATLAS)
  - Sparse linear algebra (OSKI)
  - Stencil operations
- Application-based tuning
  - Parameterized applications (Active Harmony)
  - Automatic source-based tuning (ROSE and CG)

User-Assisted Runtime Performance Optimization

- Active Harmony: runtime optimization (UMD)
  - Automatic library selection (code)
    - Monitor library performance
    - Switch library if necessary
  - Automatic performance tuning (parameter)
    - Monitor system performance
    - Adjust runtime parameters
  - Results
    - Cluster-based web service: up to 16% improvement
    - POP: up to 17% improvement
    - GS2: up to 3.4x faster
  - New: improved search algorithms
- Tuning of component-based software (ANL)
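
The "monitor, then adjust runtime parameters" loop can be sketched as a simple local search over one integer parameter. This is a generic illustration and does not use the Active Harmony API; `tune_param`, `cost_fn`, and `quadratic_cost` are hypothetical names, and the cost function here is a synthetic stand-in for a measured run time.

```c
#include <assert.h>

/* Cost callback: in practice this would run the application with the
 * given parameter and return the measured time (assumed interface). */
typedef double (*cost_fn)(int param, void *ctx);

/* Hill-climb over an integer parameter in [lo, hi], moving by `step`
 * whenever a neighbor has strictly lower cost. Returns the parameter
 * value at which no neighbor improves. */
int tune_param(cost_fn cost, void *ctx, int lo, int hi, int step, int start)
{
    assert(lo <= start && start <= hi && step > 0);
    int best = start;
    double best_cost = cost(best, ctx);
    for (;;) {
        int cand[2] = { best - step, best + step };
        int moved = 0;
        for (int k = 0; k < 2; k++) {
            if (cand[k] < lo || cand[k] > hi) continue;
            double c = cost(cand[k], ctx);
            if (c < best_cost) {
                best_cost = c;
                best = cand[k];
                moved = 1;
                break;
            }
        }
        if (!moved) return best;
    }
}

/* Synthetic cost with a single minimum at *ctx, for demonstration only. */
double quadratic_cost(int param, void *ctx)
{
    int target = *(const int *)ctx;
    double d = (double)(param - target);
    return d * d;
}
```

Real measurements are noisy and may have several local minima, which is one reason improved search algorithms matter for this kind of tuning.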

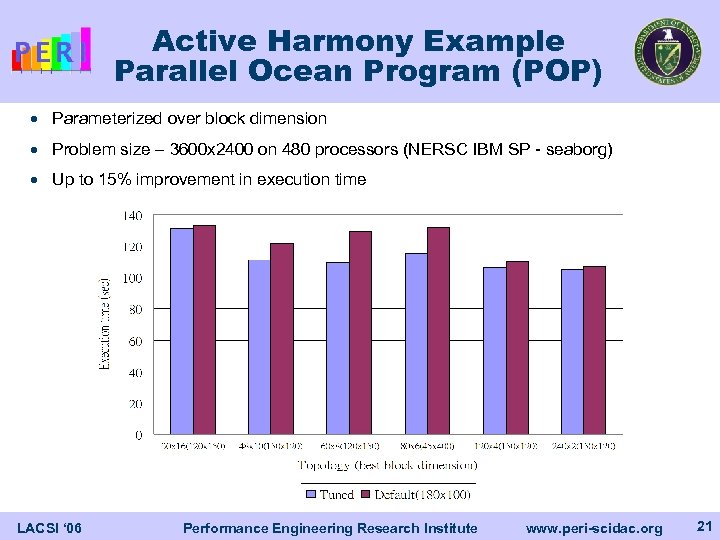

Active Harmony Example: Parallel Ocean Program (POP)

- Parameterized over block dimension
- Problem size: 3600 x 2400 on 480 processors (NERSC IBM SP, seaborg)
- Up to 15% improvement in execution time

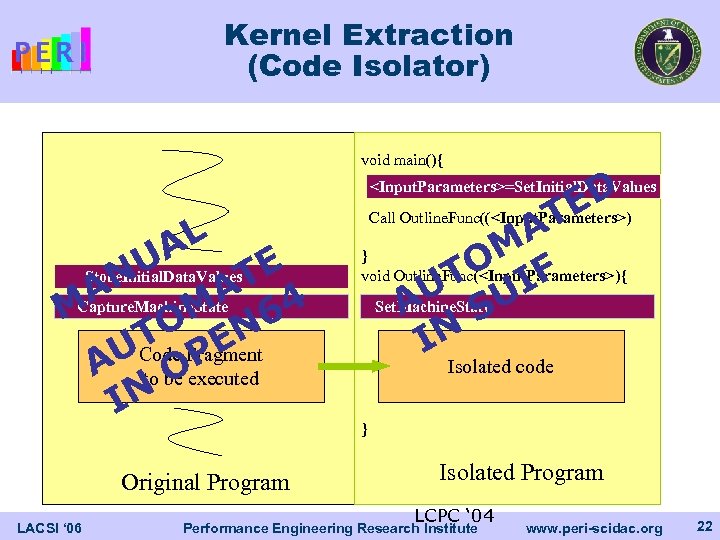

Kernel Extraction (Code Isolator) void main(){ ED Call Outline. Func((

ROSE Project

- Software analysis and optimization for scientific applications
- Tool for building source-to-source translators
- Support for C and C++
- F90 in development
- Loop optimizations
- Performance analysis
- Software engineering
- Lab, academic, and industry use
- Domain-specific analysis and optimizations
- Development of new optimization approaches
- Optimization of object-oriented abstractions

Source-Based Optimizations in ROSE

Robustness (handles real lab applications):

- Kull (ASC), ALE3D (ASC), Ares (ASC, in progress), hyper, IRS (ASC, benchmark), Khola (ASC, benchmark), CHOMBO (LBL AMR framework), ROSE (compiles itself, in progress)
- Ten separate million-line applications (current work)

Custom Application Analysis:

- Analysis to find and remove static class initialization
- Custom loop classification for Kull loop structures
- Analysis for combined procedure inlining, code motion, and loop fusion for Kull loop structures (optimization done by hand in one loop by Brian Miller; automating the search for other opportunities in Kull is current work)

Optimization:

- Demonstrated data structure splitting for Kull (2X improvement on a Kull benchmark; implemented by hand now in Kull)

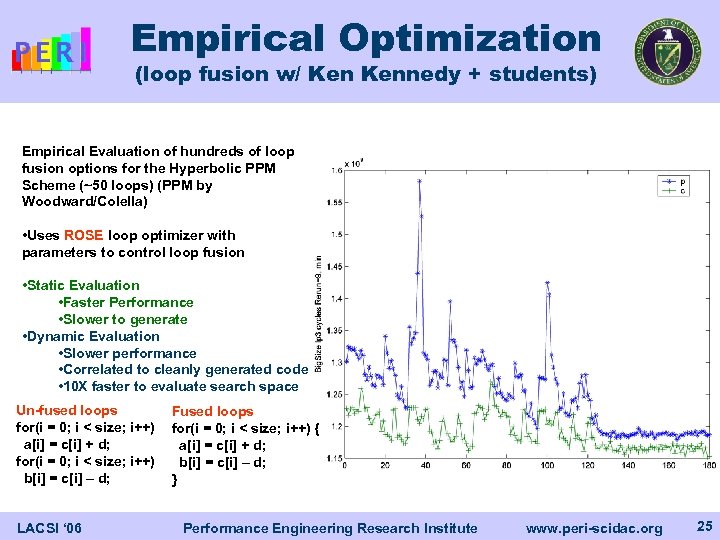

Empirical Optimization (loop fusion w/ Kennedy + students)

Empirical evaluation of hundreds of loop fusion options for the hyperbolic PPM scheme (~50 loops) (PPM by Woodward/Colella)

- Uses ROSE loop optimizer with parameters to control loop fusion
- Static evaluation
  - Faster performance
  - Slower to generate
- Dynamic evaluation
  - Slower performance
  - Correlated to cleanly generated code
  - 10X faster to evaluate search space

Un-fused loops:

    for(i = 0; i < size; i++) a[i] = c[i] + d;
    for(i = 0; i < size; i++) b[i] = c[i] - d;

Fused loops:

    for(i = 0; i < size; i++) { a[i] = c[i] + d; b[i] = c[i] - d; }
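
To evaluate fusion choices empirically, each variant can be wrapped in a function with a common signature and timed. The sketch below is an assumed harness for illustration, not the ROSE-based tooling described on the slide; `variant_fn` and `time_variant` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <time.h>

/* Common signature so variants can be swapped under one timer. */
typedef void (*variant_fn)(size_t size, const double *c, double d,
                           double *a, double *b);

/* Two separate passes over c. */
void unfused(size_t size, const double *c, double d, double *a, double *b)
{
    for (size_t i = 0; i < size; i++) a[i] = c[i] + d;
    for (size_t i = 0; i < size; i++) b[i] = c[i] - d;
}

/* One pass: each c[i] is loaded once and used for both results. */
void fused(size_t size, const double *c, double d, double *a, double *b)
{
    for (size_t i = 0; i < size; i++) {
        a[i] = c[i] + d;
        b[i] = c[i] - d;
    }
}

/* CPU seconds spent in `reps` calls of one variant. */
double time_variant(variant_fn f, size_t size, const double *c, double d,
                    double *a, double *b, int reps)
{
    assert(reps > 0);
    clock_t t0 = clock();
    for (int r = 0; r < reps; r++)
        f(size, c, d, a, b);
    return (double)(clock() - t0) / CLOCKS_PER_SEC;
}
```

With ~50 loops the number of legal fusion combinations grows quickly, so a search would call something like `time_variant` on each generated candidate rather than on two hand-written ones.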

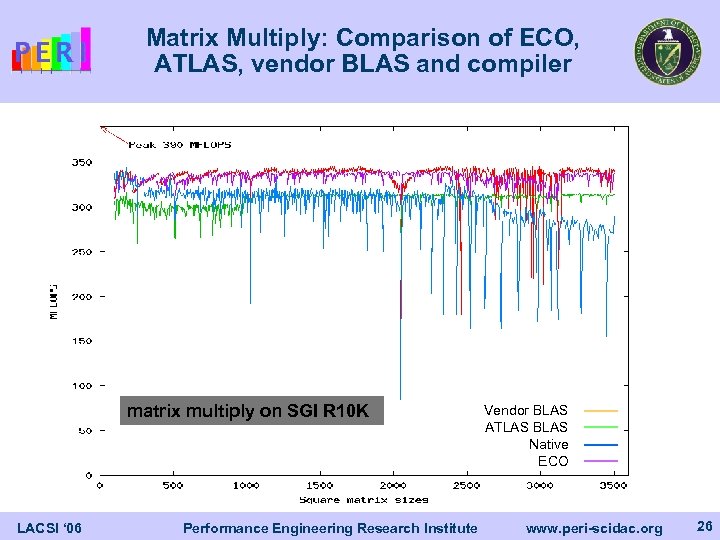

Matrix Multiply: Comparison of ECO, ATLAS, vendor BLAS, and compiler matrix multiply on SGI R10K

Plot legend: Vendor BLAS, ATLAS BLAS, Native, ECO

Summary

- Many solved and open problems in automatic tuning
- Berkeley-specific activities
  - OSKI: extra floating-point work can save time
  - Stencil tuning: beware of prefetch
  - New architectures: vectors, Cell, integration with PETSc for clusters
- PERI
  - Basic auto-tuning framework
    - Library and application level tuning; online and offline
    - Source transformations and domain-specific generators
    - Many forms of "guidance" to control optimizations
  - Performance modeling and application engagement too
  - Opportunities to collaborate

PERI Automatic Tuning Tools

Diagram: Source Code, Triage, Analysis, Transformations, Domain-Specific Code Generation, External Software, Code Generation, Code Selection, Application Assembly, Training Runs, Production Execution, Runtime Adaptation, Runtime Performance Data, Persistent Database

Guidance inputs: models, hardware information, annotations, assertions

Runtime Tuning with Components

Tuning of component-based software (Norris & Hovland, ANL)

- Initial implementation of intra-component performance analysis for CQoS (FY08, Q1)
- Intra-component analysis for generating performance models of single components (FY08, Q4)
- Define specification for CQoS support for component SciDAC apps (FY09, Q1)

Source-Based Empirical Optimization

Source-based optimization (Quinlan/LLNL, Hall/ISI)

- Combine model-guided and empirical optimization
  - Compiler models prune unprofitable solutions
  - Empirical data provide accurate measure of optimization impact
- Supporting framework
  - Kernel extraction tools (code isolator)
    - Prototypes for C/C++ and F90
  - Experience base to maintain previous results (later years)
- More talks on these projects later today
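
The combination of model-guided pruning and empirical measurement can be sketched as a two-stage selection. The model used here (three tiles of doubles must fit in cache) and all names (`model_accepts`, `select_tile`, `fake_measure`) are assumptions chosen for illustration, not the models used by the projects named above.

```c
#include <assert.h>
#include <stddef.h>

/* Empirical measurement callback: returns run time for tile size nb.
 * In practice this would time a generated code variant. */
typedef double (*measure_fn)(int nb, void *ctx);

/* Stage 1, model: keep a tile size only if three nb x nb tiles of
 * doubles fit in the given cache capacity (assumed, simplified model). */
int model_accepts(int nb, size_t cache_bytes)
{
    return 3u * (size_t)nb * (size_t)nb * sizeof(double) <= cache_bytes;
}

/* Stage 2, empirical: measure only the candidates the model kept and
 * return the fastest, or -1 if none survive. *n_measured reports how
 * many empirical runs were actually needed. */
int select_tile(const int *cands, int ncands, size_t cache_bytes,
                measure_fn measure, void *ctx, int *n_measured)
{
    assert(ncands >= 0 && n_measured != 0);
    int best = -1;
    double best_t = 0.0;
    *n_measured = 0;
    for (int k = 0; k < ncands; k++) {
        if (!model_accepts(cands[k], cache_bytes)) continue; /* pruned */
        double t = measure(cands[k], ctx);
        ++*n_measured;
        if (best < 0 || t < best_t) {
            best = cands[k];
            best_t = t;
        }
    }
    return best;
}

/* Stand-in measurement for demonstration: pretends larger tiles are
 * faster. A real harness would execute and time the kernel. */
double fake_measure(int nb, void *ctx)
{
    (void)ctx;
    return 1.0 / nb;
}
```

The pruning stage reduces the number of expensive timed runs, while the measurement stage corrects for whatever the model gets wrong.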

FY07 Plan for Source Tuning (USC)

- 1. From proposal:
  - "Develop optimizations for imperfectly nested loops"
  - STATUS: New transformation framework underway, uses Omega
- 2. Nearer-term milestone for out-year deliverable
  - Frontend to kernel extraction tool in Open64
  - PLAN: Instrument original application code to collect loop bounds, control flow, and input data
- 3. New!
  - Targeting locality + multimedia extension architectures (AltiVec and SSE3)
  - STATUS: Preliminary MM results on AltiVec, working on SSE3

Source-Based Tuning (LLNL)

- Develop optimizations that span multiple kernels (FY08 Q2)
- Initial integration of analysis and transformation engine (FY08 Q3)
- Deliver kernel extraction tool for C/C++ to PERC-3 portal (FY09 Q4)

Tuning (UNC)

- Develop triage tools for power usage and optimization (FY07 Q4)
- Develop tools to characterize energy and running time (FY07 Q4)
- Initial integration of power consumption analysis (FY08 Q3)
- Develop adaptive algorithms for optimizing energy and running time (FY08 Q4)

Pop Quiz

- What are:
  - HPCToolkit
  - ROSE
  - BeBOP
  - Active Harmony
  - PAPI
  - ATLAS
  - ECO
  - OSKI
- Should we have an index for the PERI portal?
  - 1-sentence description of each tool and relationship to PERI (if any)

Challenges

- Technical challenges
  - Multicore, etc.:
    - This is under control (modulo inability to control SMPs)
    - Would do well to target key application kernels
  - Scaling, communication, load imbalance:
    - Less experience here, but some results for communication tuning
    - Load imbalance is likely to be an app-level problem
- Management challenges
  - Tuning core community is as described
    - Minor: Mary and Dan need to work closely
  - Lots of "outer circle" tuning activities

PERI Tuning
· Motivation:
  - Hand-tuning is too time-consuming and is not robust
  - Especially as we move towards petascale
    · Topology matters, multi-core memory systems are complicated, and memory and network latency are not getting better
· Solution: automatic performance tuning
  - Use tools to identify tuning opportunities
  - Build apps to be auto-tunable by parameters + tool
  - Use auto-tuned libraries in applications
  - Tune full applications using source-to-source transforms
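
To make "auto-tunable by parameters + tool" concrete, here is a minimal sketch of an empirical parameter search. It is not a PERI tool: `autotune`, `blocked_sum`, and the candidate block sizes are hypothetical names and values chosen for illustration. The application exposes one tunable parameter, and a small driver times each candidate and keeps the fastest.

```python
import time


def autotune(kernel, candidates, trials=3, timer=time.perf_counter):
    """Time kernel(param) for each candidate and return (best_param, timings).

    timings maps each candidate to its fastest observed time over `trials` runs.
    """
    timings = {}
    for param in candidates:
        fastest = float("inf")
        for _ in range(trials):
            start = timer()
            kernel(param)
            fastest = min(fastest, timer() - start)
        timings[param] = fastest
    best_param = min(timings, key=timings.get)
    return best_param, timings


def blocked_sum(data, block):
    """Toy tunable kernel (hypothetical): sum `data` in chunks of `block` elements."""
    total = 0.0
    for lo in range(0, len(data), block):
        total += sum(data[lo:lo + block])
    return total


# Usage: expose `block` as the tuning parameter and let the search pick it.
data = [1.0] * 100_000
best_block, timings = autotune(lambda b: blocked_sum(data, b), [16, 256, 4096])
print("fastest block size on this machine:", best_block)
```

The winning value depends on the machine and the run, which is the point of tuning empirically rather than by hand.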

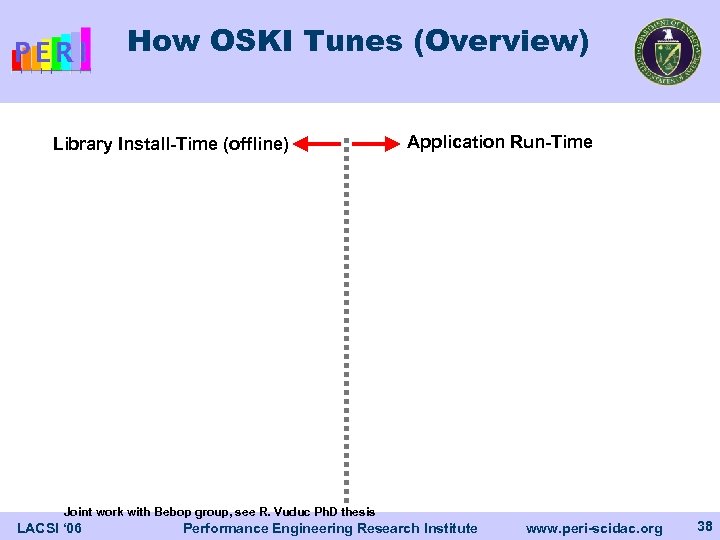

How OSKI Tunes (Overview)
· Library Install-Time (offline)
· Application Run-Time
Joint work with BeBOP group; see R. Vuduc Ph.D. thesis

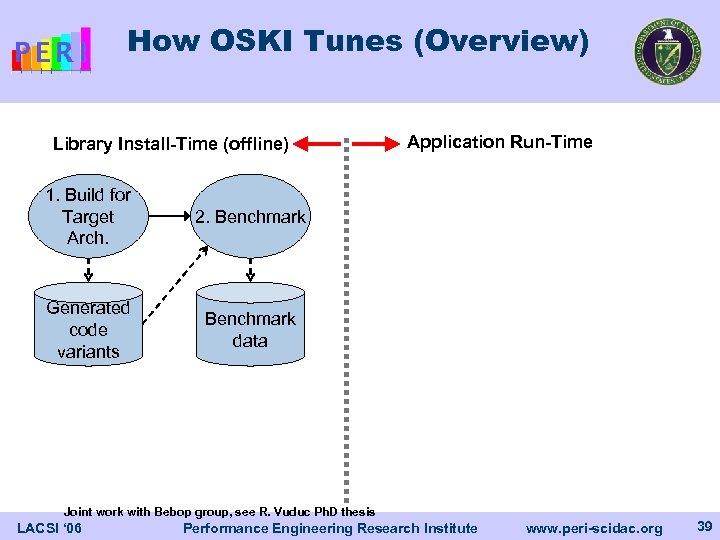

How OSKI Tunes (Overview)
· Library Install-Time (offline)
  1. Build for target arch.
  2. Benchmark
  - Outputs: generated code variants, benchmark data
· Application Run-Time
Joint work with BeBOP group; see R. Vuduc Ph.D. thesis

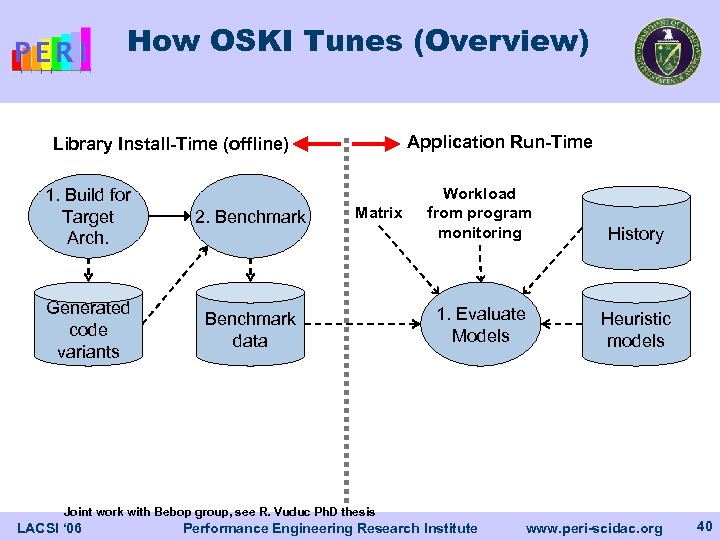

How OSKI Tunes (Overview)
· Library Install-Time (offline)
  1. Build for target arch.
  2. Benchmark
  - Outputs: generated code variants, benchmark data
· Application Run-Time
  - Inputs: matrix, workload from program monitoring, history
  1. Evaluate models (heuristic models)
Joint work with BeBOP group; see R. Vuduc Ph.D. thesis

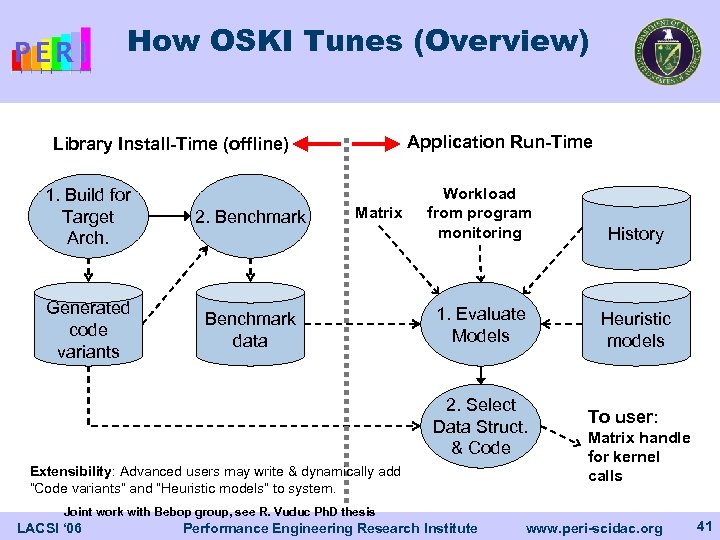

How OSKI Tunes (Overview)
· Library Install-Time (offline)
  1. Build for target arch.
  2. Benchmark
  - Outputs: generated code variants, benchmark data
· Application Run-Time
  - Inputs: matrix, workload from program monitoring, history
  1. Evaluate models (heuristic models)
  2. Select data struct. & code
  - To user: matrix handle for kernel calls
· Extensibility: advanced users may write and dynamically add “code variants” and “heuristic models” to the system
Joint work with BeBOP group; see R. Vuduc Ph.D. thesis
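
The two phases above can be sketched in a few lines. This is not OSKI's code or API. The slide does not say which heuristic models are used, so the sketch assumes a fill-ratio model for register blocking: install-time benchmark data gives a speed per r x c block size, and at run time the library picks the block size that maximizes benchmarked speed divided by the matrix's fill ratio. The function names and the numbers in `BENCH` are hypothetical.

```python
def fill_ratio(coords, r, c):
    """Stored entries after r x c blocking (explicit zeros included) / true nonzeros."""
    blocks = {(i // r, j // c) for i, j in coords}
    return len(blocks) * r * c / len(coords)


def select_blocking(coords, bench_mflops):
    """Run-time step: evaluate the model for each variant and return the best (r, c)."""
    def estimated_speed(rc):
        r, c = rc
        return bench_mflops[rc] / fill_ratio(coords, r, c)
    return max(bench_mflops, key=estimated_speed)


# Install-time step stand-in: hypothetical benchmark data (Mflop/s), not measured.
BENCH = {(1, 1): 100.0, (2, 2): 180.0, (4, 4): 240.0}

# Example matrix: four dense 2x2 blocks on the diagonal of an 8x8 matrix,
# given as a list of (row, col) positions of its nonzeros.
coords = [(2 * b + di, 2 * b + dj)
          for b in range(4) for di in range(2) for dj in range(2)]

print(select_blocking(coords, BENCH))
```

Here 4x4 blocking has the highest raw benchmark speed but doubles the stored entries, so the model prefers 2x2. A production library would estimate the fill ratio from a sample of the matrix rather than scanning every nonzero.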

OSKI-PETSc Performance: Accel. Cavity

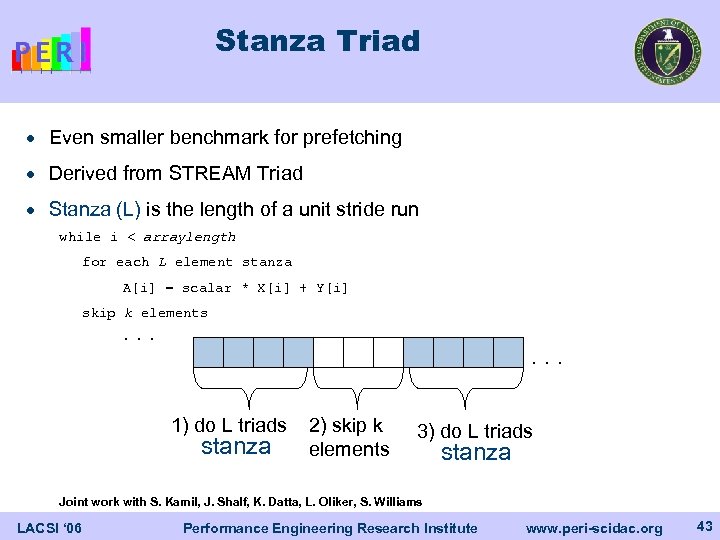

Stanza Triad
· Even smaller benchmark for prefetching
· Derived from STREAM Triad
· Stanza (L) is the length of a unit-stride run

    while i < arraylength
        for each L-element stanza
            A[i] = scalar * X[i] + Y[i]
        skip k elements

· Access pattern: 1) do L triads (stanza), 2) skip k elements, 3) do L triads (stanza), ...
Joint work with S. Kamil, J. Shalf, K. Datta, L. Oliker, S. Williams
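
The pseudocode above can be written out as a small runnable function. This is a sketch of the access pattern only, with a hypothetical function name and return value. Python will not expose hardware prefetch behavior, so a real measurement would use a compiled language and large arrays.

```python
def stanza_triad(a, x, y, scalar, L, k):
    """Run triads over stanzas of L consecutive elements, skipping k elements
    between stanzas. Updates `a` in place and returns how many elements were written."""
    n = len(a)
    i = 0
    updated = 0
    while i < n:
        stop = min(i + L, n)          # the last stanza may be cut short by the array end
        for j in range(i, stop):      # unit-stride run of up to L triads
            a[j] = scalar * x[j] + y[j]
        updated += stop - i
        i = stop + k                  # skip k elements before the next stanza
    return updated


# Usage: 10 elements, stanzas of 3, skipping 2 between stanzas.
a = [0.0] * 10
count = stanza_triad(a, [1.0] * 10, [2.0] * 10, 3.0, L=3, k=2)
print(count, a)
```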

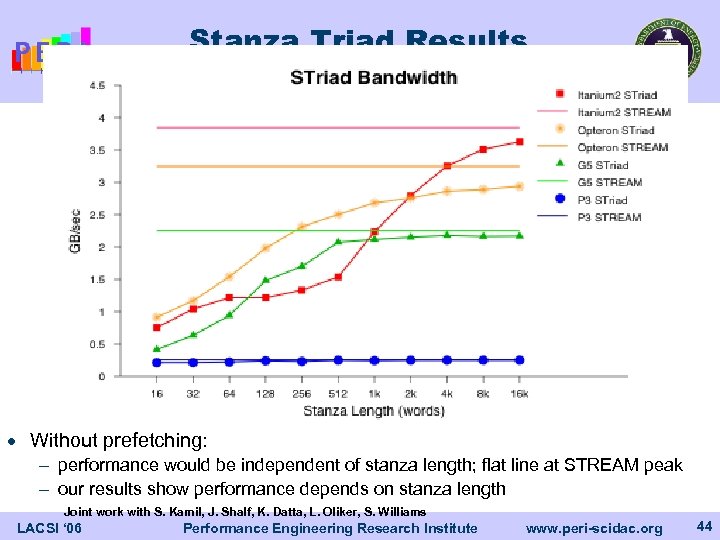

Stanza Triad Results
· Without prefetching:
  - performance would be independent of stanza length; flat line at STREAM peak
  - our results show performance depends on stanza length
Joint work with S. Kamil, J. Shalf, K. Datta, L. Oliker, S. Williams

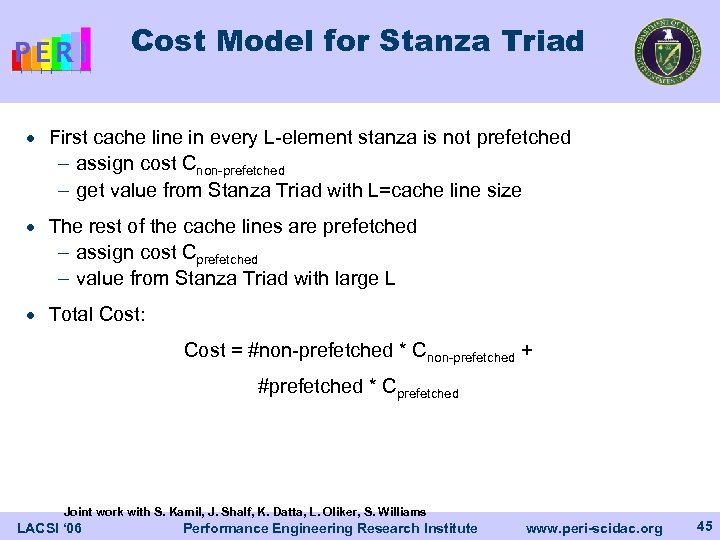

Cost Model for Stanza Triad
· First cache line in every L-element stanza is not prefetched
  - assign cost C_non-prefetched
  - get value from Stanza Triad with L = cache line size
· The rest of the cache lines are prefetched
  - assign cost C_prefetched
  - get value from Stanza Triad with large L
· Total cost:
  Cost = #non-prefetched * C_non-prefetched + #prefetched * C_prefetched
Joint work with S. Kamil, J. Shalf, K. Datta, L. Oliker, S. Williams
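
As a worked instance of the total-cost formula, the sketch below counts cache lines per stanza and applies the two per-line costs. It assumes each stanza starts on a cache-line boundary and counts lines for a single array. The function name, the parameter names, and the cost values in the usage lines are hypothetical, not measurements from the talk.

```python
import math


def stanza_cost(L, line_elems, c_nonprefetched, c_prefetched, num_stanzas=1):
    """Cost = #non-prefetched * C_non-prefetched + #prefetched * C_prefetched.

    L           stanza length in elements
    line_elems  elements per cache line
    """
    lines_per_stanza = math.ceil(L / line_elems)
    non_prefetched = num_stanzas                        # first line of each stanza
    prefetched = num_stanzas * (lines_per_stanza - 1)   # remaining lines
    return non_prefetched * c_nonprefetched + prefetched * c_prefetched


# Usage with made-up costs: 16 doubles per 128-byte line, 100 units for a
# non-prefetched line, 20 units for a prefetched one.
print(stanza_cost(16, 16, 100.0, 20.0))   # one line per stanza, never prefetched
print(stanza_cost(64, 16, 100.0, 20.0))   # four lines: 1 non-prefetched + 3 prefetched
```

As L grows, the per-line average approaches C_prefetched, which matches the observation that performance depends on stanza length.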

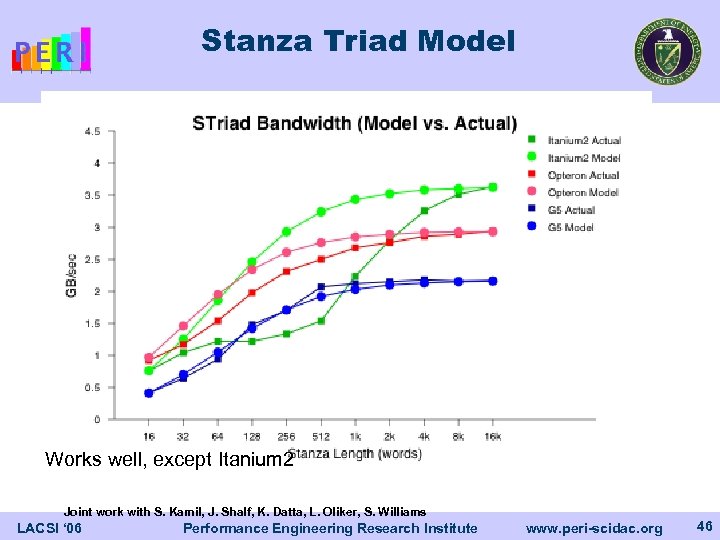

Stanza Triad Model
· Works well, except on Itanium 2
Joint work with S. Kamil, J. Shalf, K. Datta, L. Oliker, S. Williams

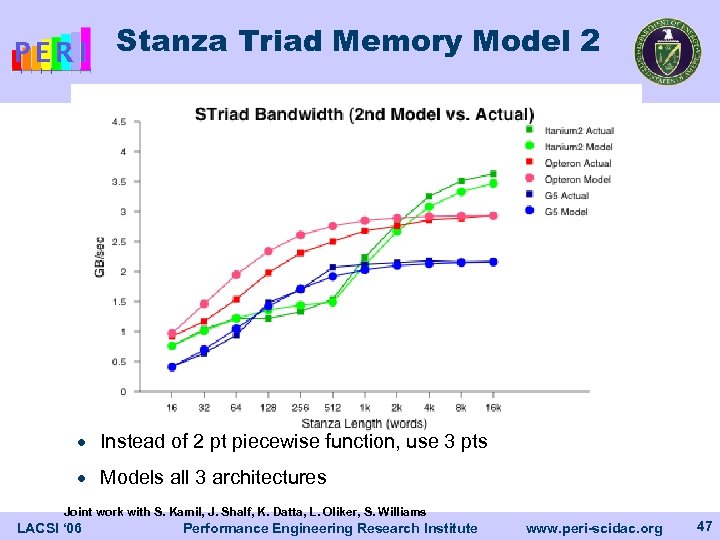

Stanza Triad Memory Model 2
· Instead of a 2-point piecewise function, use 3 points
· Models all 3 architectures
Joint work with S. Kamil, J. Shalf, K. Datta, L. Oliker, S. Williams

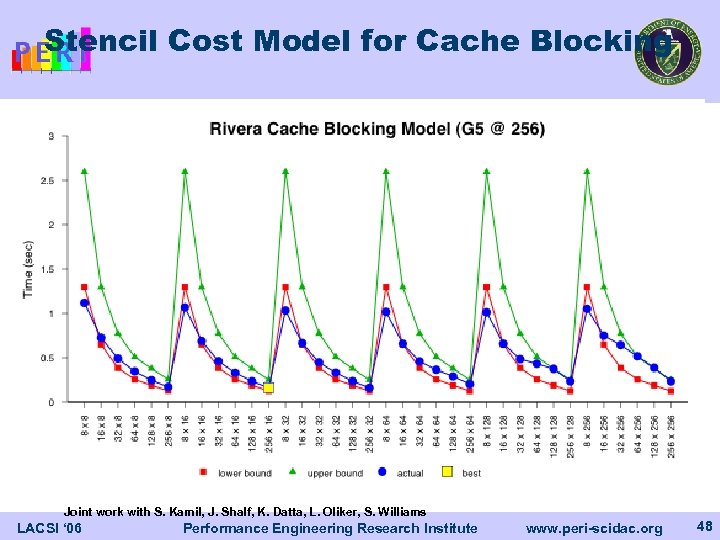

Stencil Cost Model for Cache Blocking
Joint work with S. Kamil, J. Shalf, K. Datta, L. Oliker, S. Williams

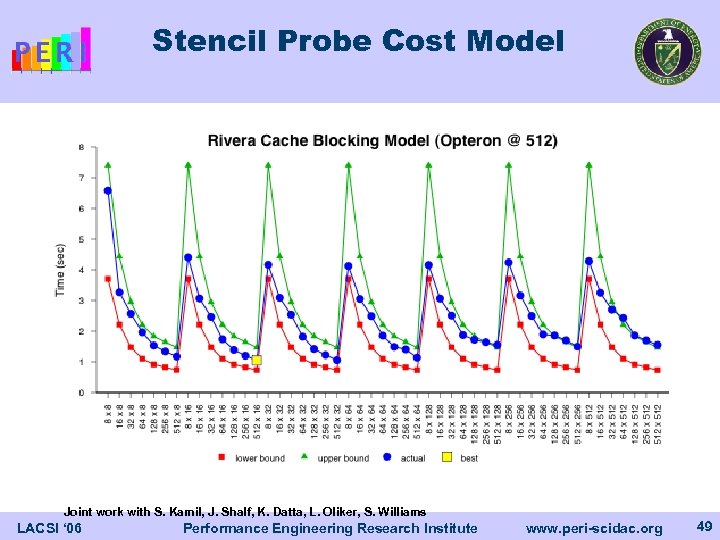

Stencil Probe Cost Model
Joint work with S. Kamil, J. Shalf, K. Datta, L. Oliker, S. Williams

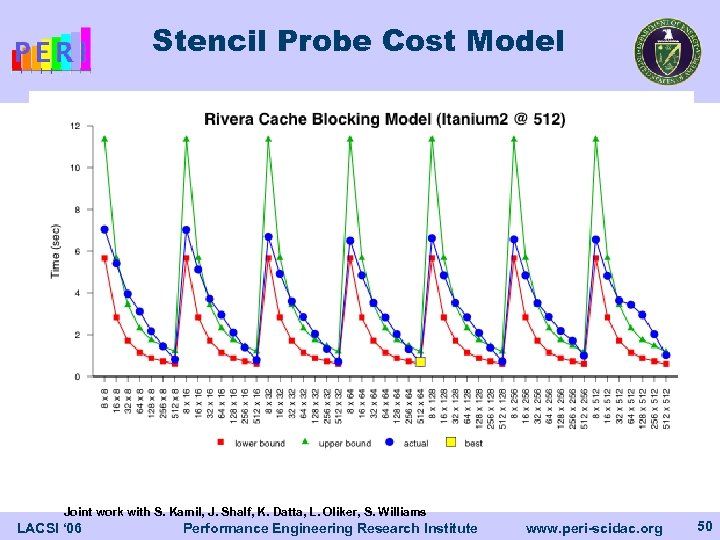

Stencil Probe Cost Model
Joint work with S. Kamil, J. Shalf, K. Datta, L. Oliker, S. Williams

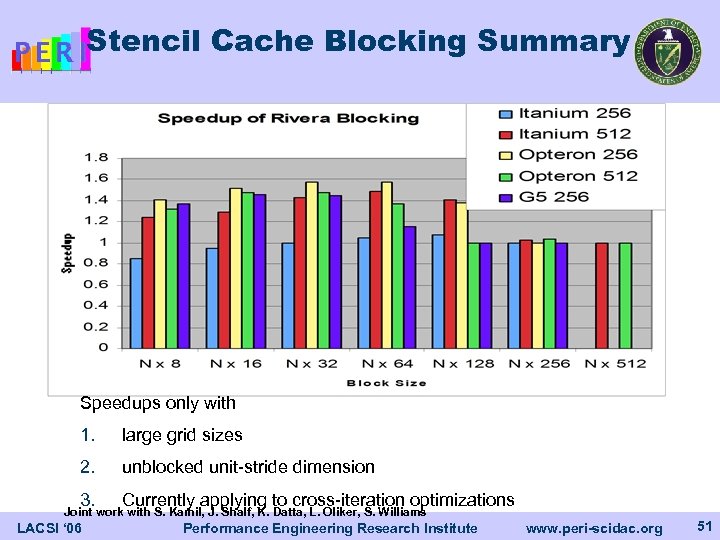

Stencil Cache Blocking Summary
Speedups only with
1. large grid sizes
2. unblocked unit-stride dimension
3. Currently applying to cross-iteration optimizations
Joint work with S. Kamil, J. Shalf, K. Datta, L. Oliker, S. Williams
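
To show what leaving the unit-stride dimension unblocked looks like, here is a minimal sketch of a 7-point 3D stencil with and without cache blocking. The slides do not give the blocking scheme, so the layout (index i is unit-stride), the choice to block only j, and all function names are assumptions for illustration. Python will not show the speedup. The sketch only demonstrates the loop structure and that the blocked sweep computes the same result.

```python
def update_point(src, dst, c, nx, ny):
    """7-point stencil at flat index c (i is unit-stride, then j, then k)."""
    dst[c] = (src[c - 1] + src[c + 1]
              + src[c - nx] + src[c + nx]
              + src[c - nx * ny] + src[c + nx * ny]
              - 6.0 * src[c])


def stencil_naive(src, nx, ny, nz):
    dst = src[:]                                   # boundary values are copied through
    for k in range(1, nz - 1):
        for j in range(1, ny - 1):
            for i in range(1, nx - 1):
                update_point(src, dst, i + nx * (j + ny * k), nx, ny)
    return dst


def stencil_blocked(src, nx, ny, nz, bj):
    """Same sweep, blocked in j only; the unit-stride i loop runs its full length."""
    dst = src[:]
    for jj in range(1, ny - 1, bj):                # loop over j-blocks
        j_end = min(jj + bj, ny - 1)
        for k in range(1, nz - 1):
            for j in range(jj, j_end):
                for i in range(1, nx - 1):         # unblocked unit-stride dimension
                    update_point(src, dst, i + nx * (j + ny * k), nx, ny)
    return dst


# Usage: the two sweeps agree on a small test grid.
nx = ny = nz = 6
grid = [float((7 * n) % 13) for n in range(nx * ny * nz)]
print(stencil_blocked(grid, nx, ny, nz, bj=2) == stencil_naive(grid, nx, ny, nz))
```

Keeping the i loop long preserves the long unit-stride runs that hardware prefetchers reward, which is consistent with the Stanza Triad results earlier in the talk.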
