d69c910642e1a9b152c74e029d760508.ppt

- Number of slides: 43

Peer-to-Peer Support for Massively Multiplayer Games
Bjorn Knutsson, Honghui Lu, Wei Xu, Bryan Hopkins
Presented by Mohammed Alam (Shahed)

Outline
• Introduction
• Overview of MMG
• Peer-to-Peer Infrastructure
• Distributed Game Design
• Game on P2P overlay
• Experimental Results
• Future Work and Discussion

Introduction
• Proposes the use of P2P overlays to support massively multiplayer games (MMGs)
• Primary contributions of the paper:
  – Architectural (P2P for MMGs)
  – Evaluative

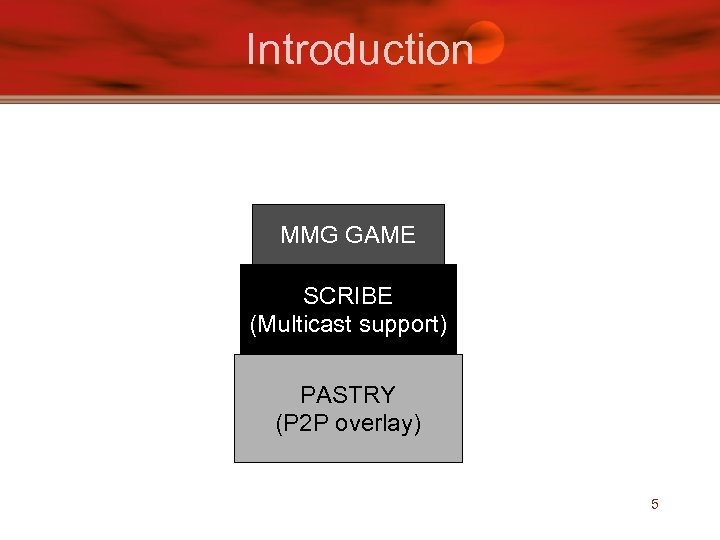

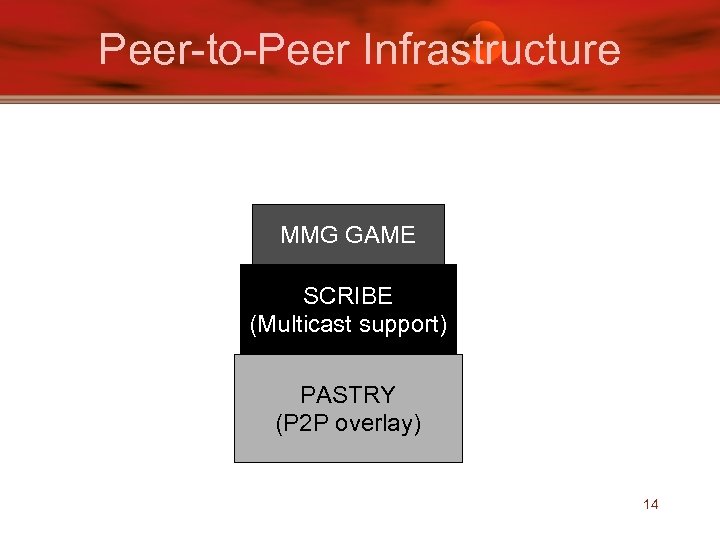

Introduction
Layered architecture (top to bottom):
• MMG GAME
• SCRIBE (multicast support)
• PASTRY (P2P overlay)

Introduction
• Players contribute memory, CPU cycles, and bandwidth to host the shared game state
• Three potential problems:
  – Performance
  – Availability
  – Security

Overview of MMG
• Thousands of players coexist in the same game world
• Most MMGs are role-playing games (RPGs), real-time strategy (RTS) games, or hybrids
• Examples: EverQuest, Ultima Online, The Sims Online

Overview of MMG
• Game state: the world is made up of
  – Immutable landscape information (terrain)
  – Characters controlled by players
  – Mutable objects (food, tools, weapons)
  – Non-player characters (NPCs) controlled by automated algorithms

Overview of MMG
• Game state (contd.)
  – The game world is divided into connected regions
  – Regions are hosted on different servers
    • Regions are further divided to keep the in-memory data small

Overview of MMG
• Existing system support
  – Client-server architecture
    • The server is responsible for
      – Maintaining and disseminating game state
      – Account management and authentication
    • Scalability is achieved by
      – Dedicated servers
      – Clustering servers
        » on a LAN or a computing grid

Overview of MMG
• Latency tolerance varies by genre
  – Guiding 'avatars' tolerates more latency
  – First-person shooter games: 180 ms maximum latency
  – Real-time strategy games: several seconds

Peer-to-Peer Infrastructure
Layered architecture (top to bottom):
• MMG GAME
• SCRIBE (multicast support)
• PASTRY (P2P overlay)

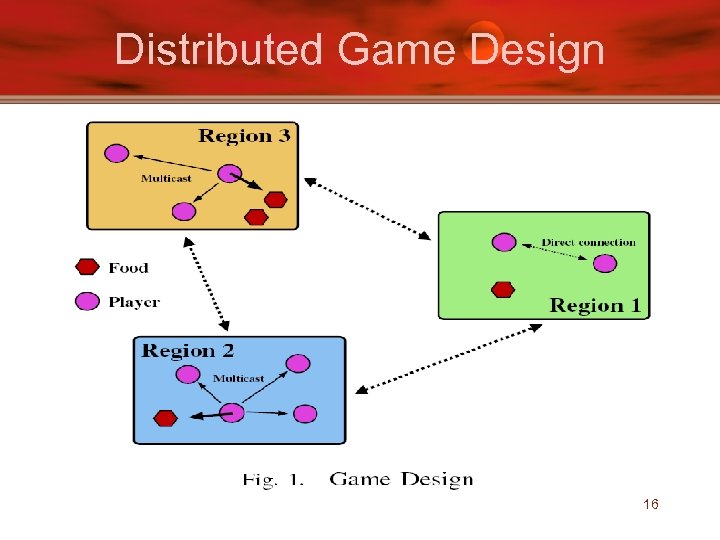

Distributed Game Design

Distributed Game Design
• Persistent user state is centralized
  – Example: payment information, characters
• This allows the central server to delegate bandwidth- and processing-intensive game state to peers

Distributed Game Design
• Game design is based on the facts that:
  – Players have limited movement speed
  – Players have limited sensing capability
  – Hence game data shows temporal and spatial locality
  – Use interest management
    • Limit the amount of state each player has access to

Distributed Game Design
• Players in the same region form an interest group
• State updates relevant to a group are disseminated only within that group
• A player changes groups when moving from one region to another
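
The interest-group idea on the last two slides can be sketched in a few lines: map each player's position to a grid region and deliver updates only to that region's members. This is a minimal illustration that assumes a square world split into a uniform grid. `REGION_SIZE`, `region_of`, and `InterestGroups` are hypothetical names and are not part of SimMud. A real deployment would use one SCRIBE multicast group per region rather than an in-memory set.

```python
from collections import defaultdict

REGION_SIZE = 100.0  # hypothetical side length of a square region, in world units


def region_of(x: float, y: float) -> tuple[int, int]:
    """Map a world position to the (column, row) of its grid region."""
    return (int(x // REGION_SIZE), int(y // REGION_SIZE))


class InterestGroups:
    """Tracks which players belong to which region's interest group."""

    def __init__(self) -> None:
        self.members: dict[tuple[int, int], set[str]] = defaultdict(set)
        self.location: dict[str, tuple[int, int]] = {}

    def move(self, player: str, x: float, y: float) -> bool:
        """Record a new position; return True if the player changed groups."""
        new_region = region_of(x, y)
        old_region = self.location.get(player)
        if old_region == new_region:
            return False
        if old_region is not None:
            self.members[old_region].discard(player)  # leave the old group
        self.members[new_region].add(player)          # join the new group
        self.location[player] = new_region
        return True

    def recipients(self, player: str) -> set[str]:
        """Players who should receive this player's updates (same region, minus self)."""
        return self.members[self.location[player]] - {player}
```

For example, after `move("alice", 10, 10)` and `move("bob", 50, 90)`, both players are in region `(0, 0)` and `recipients("alice")` is `{"bob"}`. Once Alice moves to `(150, 10)`, she is in region `(1, 0)` and Bob stops receiving her updates.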

Distributed Game Design
• Game state consistency
  – Game state must be consistent among players in a region
  – Basic approach: employ coordinators to resolve update conflicts
  – Split game state management into three classes to handle update conflicts:
    • Player state
    • Object state
    • The map

Distributed Game Design
• Player state
  – Single writer, multiple readers
  – Player-player interaction affects only the two players involved
  – Position change is the most common event
    • Use best-effort multicast to players in the same region
    • Use dead reckoning to handle loss or delay
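
Dead reckoning lets a receiver keep moving a remote player's avatar between position updates, or after one is lost, by extrapolating from the last known position and velocity. The sketch below uses simple linear extrapolation and assumes each update carries a timestamp, position, and velocity. `PositionUpdate`, `dead_reckon`, and `RemotePlayer` are hypothetical; the slides do not specify SimMud's message format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PositionUpdate:
    """A position update multicast by a player (hypothetical message format)."""
    t: float   # send time in seconds
    x: float
    y: float
    vx: float  # velocity in world units per second
    vy: float


def dead_reckon(last: PositionUpdate, now: float) -> tuple[float, float]:
    """Extrapolate linearly from the most recent update received."""
    dt = max(0.0, now - last.t)  # never extrapolate backwards in time
    return (last.x + last.vx * dt, last.y + last.vy * dt)


class RemotePlayer:
    """Receiver-side view of another player in the same region."""

    def __init__(self) -> None:
        self.last: Optional[PositionUpdate] = None

    def on_update(self, upd: PositionUpdate) -> None:
        # Best-effort multicast may reorder messages, so drop anything
        # older than the update we already hold.
        if self.last is None or upd.t > self.last.t:
            self.last = upd

    def position(self, now: float) -> tuple[float, float]:
        # Between updates, or after a lost one, keep the avatar moving.
        if self.last is None:
            raise ValueError("no position update received yet")
        return dead_reckon(self.last, now)
```

Because player state has a single writer, the receiver never has to resolve conflicts. It only needs to discard stale updates and fill the gaps.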

Distributed Game Design
• Object state
  – Use a coordinator-based mechanism for shared objects
  – Each object is assigned a coordinator
  – The coordinator resolves conflicting updates and keeps the current value
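
A coordinator serializes all updates to a shared object. Conflicting requests, such as two players grabbing the last piece of food, are therefore resolved in one place. The following is a minimal sketch that assumes a first-come, first-served rule and a version counter. `SharedObject`, `ObjectCoordinator`, and `request_take` are hypothetical, and the slides do not prescribe this particular conflict rule.

```python
from dataclasses import dataclass


@dataclass
class SharedObject:
    name: str
    quantity: int
    version: int = 0
    last_writer: str = ""


class ObjectCoordinator:
    """Holds the authoritative value of the shared objects in one region."""

    def __init__(self) -> None:
        self.objects: dict[str, SharedObject] = {}

    def add(self, name: str, quantity: int) -> None:
        self.objects[name] = SharedObject(name, quantity)

    def request_take(self, player: str, name: str, amount: int = 1) -> bool:
        """Apply one player's request to consume part of an object.

        Requests are processed one at a time in arrival order, so two players
        racing for the last unit cannot both succeed.
        """
        obj = self.objects[name]
        if obj.quantity < amount:
            return False  # conflicting request: already consumed by someone else
        obj.quantity -= amount
        obj.version += 1
        obj.last_writer = player
        # In the full system the new value would now be multicast to the
        # region and sent to the coordinator's replica; omitted here.
        return True
```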

Game on P2P overlay
• Map game state to players
  – Group players and objects by region
  – Map regions to peers using Pastry keys
    • Each region is assigned an ID
    • The live node whose ID is closest to the region's ID becomes its coordinator
    • Random mapping reduces the chance that the coordinator is a member of the region (reduces cheating)
    • Currently all objects in a region are coordinated by one node
    • Could assign a separate coordinator to each object
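
The region-to-coordinator mapping can be sketched as follows. Hash the region's name into the 128-bit Pastry identifier space, then pick the live node whose ID is numerically closest on the circular ID ring. This simplified sketch computes the closest node directly from a full membership list instead of routing hop by hop through Pastry. The SHA-1-based `key_for` and the region naming scheme are assumptions for illustration.

```python
import hashlib

ID_BITS = 128        # Pastry node IDs and keys are 128-bit
RING = 1 << ID_BITS  # size of the circular ID space


def key_for(name: str) -> int:
    """Hash a name into the 128-bit ID space (assumed: SHA-1, truncated to 16 bytes)."""
    digest = hashlib.sha1(name.encode("utf-8")).digest()
    return int.from_bytes(digest[:16], "big")


def ring_distance(a: int, b: int) -> int:
    """Numeric distance between two IDs on the circular ID space."""
    d = abs(a - b)
    return min(d, RING - d)


def coordinator_for(region: str, live_nodes: list[int]) -> int:
    """Return the live node ID closest to the region's key (ties go to the lower ID)."""
    k = key_for(region)
    return min(live_nodes, key=lambda n: (ring_distance(n, k), n))
```

Because the key comes from a hash of the region name, the chosen coordinator has no relation to which players happen to be in that region. This is the property the slide relies on to make cheating harder.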

Game on P2P overlay
• Shared state replication
  – Lightweight primary-backup scheme to handle failures
  – Failures are detected using regular game events
  – The coordinator is dynamically replicated when a failure is detected
  – At least one replica is kept at all times
  – Relies on a P2P routing property: a message with key K is routed to the node N whose ID is closest to K

Game on P2P overlay
• Shared state replication (contd.)
  – The replica is kept at node M, whose ID is the next closest to the key K of the message or object
  – If a new node joins whose ID is closer to K than the coordinator's, the new node
    • Forwards messages for K to the current coordinator
    • Updates its own copy of the state
    • Takes over as coordinator
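
The primary-backup placement and the handoff on join can be sketched on top of the same closest-ID rule. The coordinator is the live node closest to key K, and the replica is the next closest. When membership changes, both copies are re-placed from a surviving copy of the state. This is a hypothetical single-process sketch: `closest`, `ReplicatedRegion`, and its methods are invented for illustration. The forwarding step is collapsed into a direct state copy, whereas real Pastry/SCRIBE code would exchange routed messages.

```python
from typing import Any

RING = 1 << 128  # size of Pastry's circular 128-bit ID space


def ring_distance(a: int, b: int) -> int:
    d = abs(a - b)
    return min(d, RING - d)


def closest(key: int, nodes: set[int], n: int) -> list[int]:
    """The n live node IDs closest to key, nearest first."""
    return sorted(nodes, key=lambda x: (ring_distance(x, key), x))[:n]


class ReplicatedRegion:
    """Coordinator plus one backup replica for the region state stored under key K."""

    def __init__(self, key: int, nodes: set[int]) -> None:
        self.key = key
        self.nodes = set(nodes)
        self.copies: dict[int, dict[str, Any]] = {}  # node ID -> its copy of the state
        self._place({})

    def _place(self, state: dict[str, Any]) -> None:
        """Put the coordinator and the replica on the two closest live nodes."""
        self.coordinator, self.replica = closest(self.key, self.nodes, 2)
        self.copies = {n: dict(state) for n in (self.coordinator, self.replica)}

    def update(self, field: str, value: Any) -> None:
        # The coordinator applies the update, then pushes it to the replica.
        self.copies[self.coordinator][field] = value
        self.copies[self.replica] = dict(self.copies[self.coordinator])

    def node_joined(self, node: int) -> None:
        # A new node closer to K gets the state from the current coordinator
        # and takes over; the old coordinator becomes the replica.
        self.nodes.add(node)
        self._place(self.copies[self.coordinator])

    def node_failed(self, node: int) -> None:
        # Re-replicate from the surviving copy so one replica always exists.
        self.nodes.discard(node)
        survivor = self.replica if node == self.coordinator else self.coordinator
        self._place(self.copies[survivor])
```

In this sketch, a failed coordinator's replica is automatically the new closest node, so it takes over and a fresh replica is created at the next-closest ID. Only the loss of both copies at once, which is the catastrophic case, cannot be handled here.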

Game on P2P overlay
• Catastrophic failure
  – Both the coordinator and its replica fail
  – Recovered using cached information from nodes interested in the region
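
Recovery after a catastrophic failure can be sketched as rebuilding the region state from the freshest copy cached by the interested nodes. This assumes each cached copy carries a version number. That is an assumption: the slide says only that cached information is used. `recover_region` is a hypothetical helper.

```python
from typing import Any, Optional


def recover_region(cached: dict[str, tuple[int, dict[str, Any]]]) -> Optional[dict[str, Any]]:
    """Rebuild region state from the highest-version copy cached by interested nodes.

    `cached` maps a node name to the (version, state) pair that node last saw.
    Returns None if no interested node had a copy.
    """
    if not cached:
        return None
    _version, state = max(cached.values(), key=lambda vs: vs[0])
    return dict(state)
```

Any updates made after the newest cached copy are lost. This is one reason the recovery is reserved for the rare case where both the coordinator and the replica fail.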

Experimental Results
• Prototype implementation: "SimMud"
• Uses FreePastry (open source)
• Maximum simulation size constrained by memory to 4000 virtual nodes
• Players eat and fight every 20 seconds
• Players remain in a region for 40 seconds
• Position updates are multicast every 150 ms

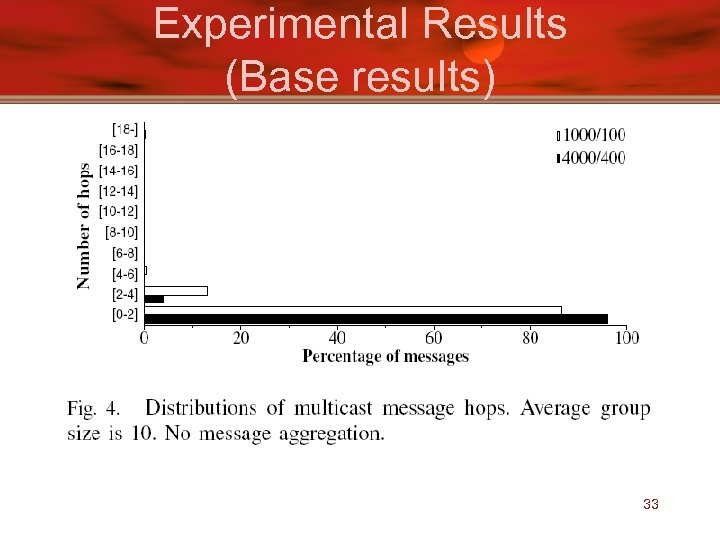

Experimental Results
• Base results
  – No players join or leave
  – 300 seconds of game play
  – Average of 10 players per region
  – Links between nodes have a random delay of 3 to 100 ms to simulate network delay

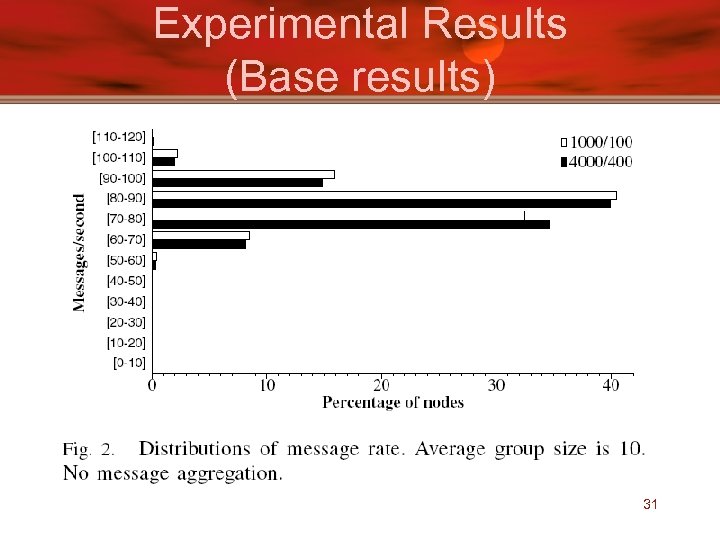

Experimental Results (Base results)

Experimental Results (Base results)
• 1000 to 4000 players in 100 to 400 regions
• Each node receives 50 to 120 messages
• 70 update messages per second
  – 10 players × 7 position updates
• Unicast and multicast messages take around 6 hops
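
The figure of 70 updates per second follows from the 150 ms position-update interval given for SimMud. Each player sends about 6.7 updates per second, which the slide rounds to 7. A node in an average 10-player region therefore receives roughly:

```latex
r_{\text{pos}} = \frac{1}{0.150\ \text{s}} \approx 6.7\ \text{updates/s} \approx 7\ \text{updates/s},
\qquad
10\ \text{players} \times 7\ \text{updates/s} = 70\ \text{updates/s}
```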

Experimental Results (Base results)

Experimental Results
• Breakdown of message types
  – 99% of messages are position updates
  – Region changes take the most bandwidth
  – The message rate of object updates is higher than that of player-player updates
    • Object updates are multicast to the region
    • Object updates are also sent to the replica
    • Player-player interactions affect only the players involved

Experimental Results
• Effect of population growth
  – As long as average density remains the same, population growth makes no difference
• Effect of population density
  – Ran with 1000 players in 25 regions
  – Position updates per node increase linearly with density
  – Non-uniform player distribution hurts performance
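
A back-of-the-envelope model shows why per-node position-update traffic grows linearly with density and why hot spots hurt. If every node receives each update multicast by the players in its region, load scales with the number of players per region. This is an illustrative model, not the paper's measured data. It assumes the roughly 7 updates/s per player used on the base-results slide and, for `position_updates_per_node`, a uniform player distribution. Both function names are hypothetical.

```python
UPDATES_PER_PLAYER_PER_S = 7  # about 1 / 0.150 s, rounded as on the base-results slide


def position_updates_per_node(players: int, regions: int) -> float:
    """Expected position updates/s received per node, assuming players spread uniformly."""
    players_per_region = players / regions
    return players_per_region * UPDATES_PER_PLAYER_PER_S


def busiest_region_load(players_in_each_region: list[int]) -> int:
    """Updates/s received per node in the most crowded region (non-uniform case)."""
    return max(players_in_each_region) * UPDATES_PER_PLAYER_PER_S
```

Under this model, `position_updates_per_node(1000, 100)` gives the base-case 70.0. Packing the same 1000 players into 25 regions quadruples the density and the per-node load, giving 280.0. With a non-uniform distribution, the busiest region sets the load that its members must handle, even when the average stays low.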

Experimental Results
• Three ways to deal with the population density problem
  – Cap the number of players in a region
  – Give different regions different sizes
  – Have the system dynamically repartition regions as the number of players grows
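
The third option, dynamic repartitioning, can be sketched as a quadtree-style split. When a region exceeds a player cap, it is divided into four quadrants and its players are reassigned. The cap, the quadtree scheme, and the `Region`/`repartition` names are all assumptions, since the slide does not say how repartitioning would be done.

```python
from dataclasses import dataclass, field

MAX_PLAYERS = 4  # hypothetical per-region player cap


@dataclass
class Region:
    x0: float
    y0: float
    size: float  # regions are squares with this side length
    players: dict[str, tuple[float, float]] = field(default_factory=dict)

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x < self.x0 + self.size and self.y0 <= y < self.y0 + self.size


def repartition(region: Region, max_players: int = MAX_PLAYERS,
                min_size: float = 1.0) -> list[Region]:
    """Recursively split an overcrowded square region into four quadrants."""
    if len(region.players) <= max_players or region.size / 2 < min_size:
        return [region]
    half = region.size / 2
    quads = [Region(region.x0 + dx, region.y0 + dy, half)
             for dx in (0.0, half) for dy in (0.0, half)]
    for name, (x, y) in region.players.items():
        # Each player lands in exactly one quadrant of the parent square.
        next(q for q in quads if q.contains(x, y)).players[name] = (x, y)
    result: list[Region] = []
    for q in quads:
        result.extend(repartition(q, max_players, min_size))
    return result
```

Each resulting region would then get its own key and coordinator. The `min_size` floor stops a tight cluster of players from splitting regions indefinitely.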

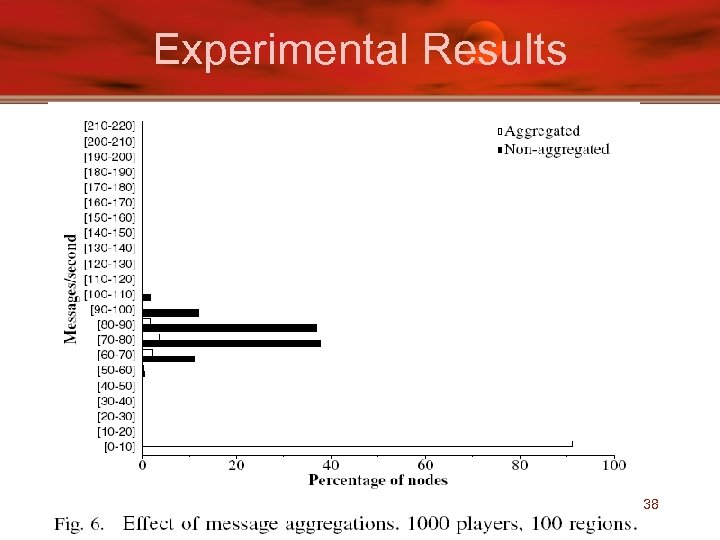

Experimental Results
• Effect of message aggregation
  – Since updates are multicast, aggregate them at the multicast root
  – Position updates from all players are aggregated before transmission
  – Cuts the bandwidth requirement by half
  – Nodes receive fewer messages
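
Aggregation at the multicast root can be sketched as buffering the position updates that arrive during one update interval and sending them as a single multicast message. The tick-based batching, the choice to keep only each player's latest position within an interval, and the `Aggregator` name are assumptions for illustration. Waiting for the tick adds up to one interval of latency, which is the concern raised under Discussion.

```python
from typing import Callable

Batch = list[tuple[str, float, float]]  # (player, x, y) entries in one message


class Aggregator:
    """Buffers position updates at the multicast root and flushes them as one message."""

    def __init__(self, send: Callable[[Batch], None]) -> None:
        self.send = send  # multicasts one batched message to the region
        self.pending: dict[str, tuple[float, float]] = {}

    def on_update(self, player: str, x: float, y: float) -> None:
        # Assumption: only the latest position per player in an interval matters.
        self.pending[player] = (x, y)

    def flush(self) -> None:
        """Call once per update interval, e.g. every 150 ms."""
        if self.pending:
            self.send([(p, x, y) for p, (x, y) in self.pending.items()])
            self.pending.clear()
```

For example, with `sent = []` and `agg = Aggregator(sent.append)`, three updates from two players followed by one `flush()` produce a single message containing two entries rather than three separate multicasts.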

Experimental Results

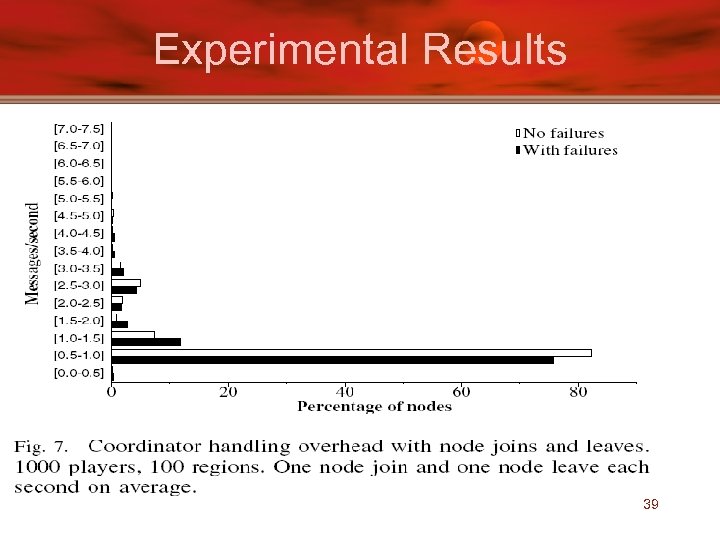

Experimental Results

Experimental Results
• Effect of network dynamics
  – Nodes join and depart at regular intervals
  – Simulate one random node join and one random node departure per second
  – Per-node failure rate of 0.06 per minute
  – Average session length of 16.7 minutes (close to an 18-minute half-life)
  – Average message rate increased from 24.12 to 24.52
  – Catastrophic failure every 20 hours
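
The churn figures are mutually consistent. One departure per second is 60 departures per minute, and spreading these over N nodes gives a per-node failure rate of 60/N per minute. The calculation below takes N = 1000. That value is an assumption, chosen because it is the population that reproduces the 0.06 figure. With a constant per-node failure rate (exponentially distributed sessions), the mean session length is the reciprocal of that rate, which matches the 16.7 minutes on the slide:

```latex
\lambda = \frac{60\ \text{departures/min}}{1000\ \text{nodes}} = 0.06\ \text{min}^{-1},
\qquad
\bar{T}_{\text{session}} = \frac{1}{\lambda} = \frac{1}{0.06}\ \text{min} \approx 16.7\ \text{min}
```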

Future Work
• Currently assumes uniform latency
• Test games with more state, and on globally distributed network platforms
• Prevent cheating through detection

Discussion
• Assigning random coordinators could hurt in P2P, where peer links vary widely (modem vs. high-speed)
• How closely would results from a simulation on a single machine match a real deployment?
• Bandwidth ranges from 7.2 KB/s to 22.34 KB/s for a simple game; what about games with more state?
• How could aggregating messages be bad?
  – In their scheme, does the root wait for all messages to arrive before sending? Latency issues?
