- Number of slides: 32

PDS Data Movement and Storage Planning (PMWG)

PDS MC F2F, UCLA
Dan Crichton
November 28-29, 2012

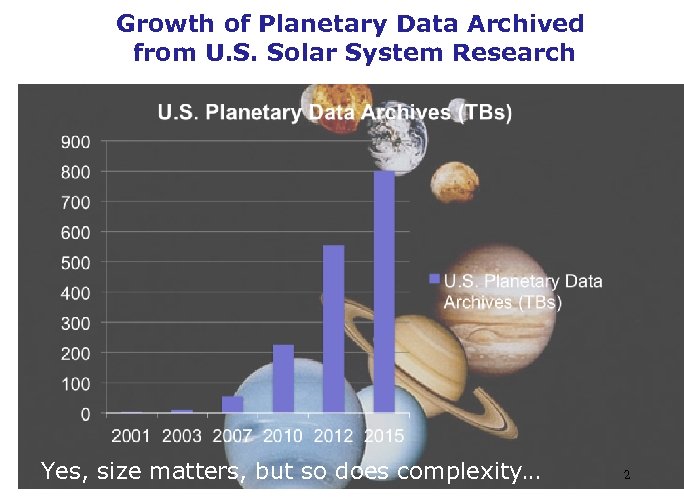

Growth of Planetary Data Archived from U.S. Solar System Research

Yes, size matters, but so does complexity…

Big Data Challenges

- Storage
- Computation
- Movement of Data
- Heterogeneity
- Distribution

…can affect how we generate, manage, and analyze science data.

…commodity computing can help, if architected correctly.

Big Data Technologies

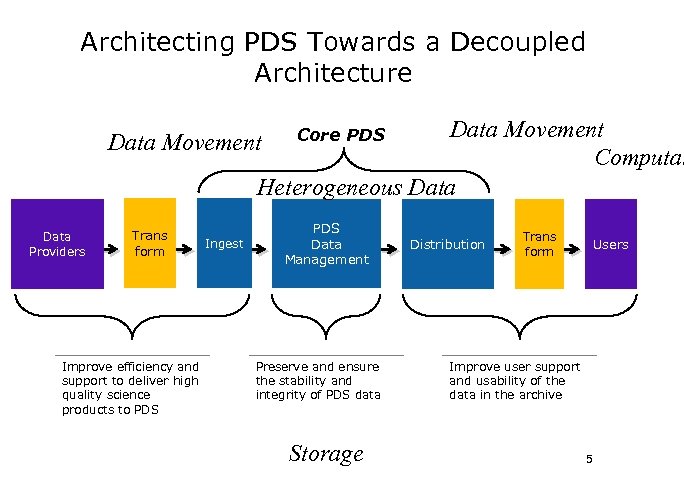

Architecting PDS Towards a Decoupled Architecture

[Diagram: Data Providers, Transform, Ingest, Core PDS (Data Management, Storage), Distribution, Transform, Users; annotated with Data Movement, Computation, and Heterogeneous Data]

- Data Providers: Improve efficiency and support to deliver high quality science products to PDS
- Core PDS: Preserve and ensure the stability and integrity of PDS data
- Users: Improve user support and usability of the data in the archive

Big Data Challenges

- Storage
- Computation
- Movement of Data
- Heterogeneity
- Distribution

…can affect how we generate, manage, and analyze science data.

Storage Eye Chart

- Direct Attached Storage (DAS)
  - DAS-based storage (usually disk or tape) is directly attached to an internal server (point-to-point).
- Network Attached Storage (NAS)
  - A NAS unit or “appliance” is a dedicated storage server connected to an Ethernet network that provides file-based data storage services to other devices on the network. NAS units remove the responsibility of file serving from other servers on the network.
- Storage Area Network (SAN)
  - A SAN is an architecture to connect detached storage devices, such as disk arrays, tape libraries, and optical jukeboxes, to servers in a way that the devices appear as local resources.
- Redundant Array of Inexpensive Disks (RAID)
  - The concept of RAID is to combine multiple inexpensive disk drives into an array of disk drives which performs (usually) better than a single disk drive. The RAID array will appear as a single drive to the connected server. RAID technology is typically employed in a DAS, NAS, or SAN solution.
- Cloud Storage
  - Cloud storage involves storage capacity that is accessed through the Internet or a wide area network (WAN); storage is usually purchased on an as-needed basis. Users can expand capacity on the fly. Providers operate a highly scalable storage infrastructure, often in physically dispersed locations.
- Solid State Drive Storage
  - Solid State Drive storage technology is evolving to a point where SSDs can, in some cases, start to supplant traditional storage. SSDs that use DRAM-based technology (volatile memory) cannot survive a power loss, but flash-based SSDs (non-volatile), although slower than DRAM-based SSDs, do not require a battery backup and have therefore become acceptable in the enterprise. It has recently been announced that 1 TB SSDs are available for industrial applications such as military and medical uses. SSD technology is…

Storage Architectural Concepts

- Decentralized
  - In-house storage, locally attached
  - Resources managed (procured, backed up, maintained, replenished) locally
- Centralized
  - Common storage at a central remote location
  - But not necessarily a separation of data, catalog, and services
- Cloud
  - Storage as a virtual cloud infrastructure resourced over the Internet
  - Resources managed by a third party / organization

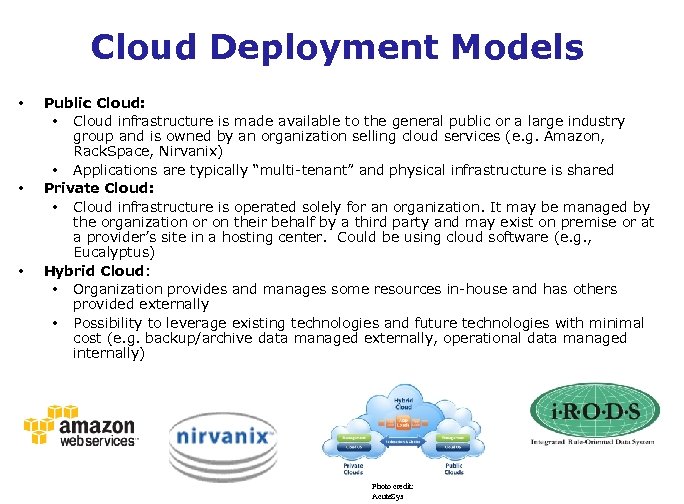

Cloud Deployment Models

- Public Cloud:
  - Cloud infrastructure is made available to the general public or a large industry group and is owned by an organization selling cloud services (e.g., Amazon, Rackspace, Nirvanix)
  - Applications are typically “multi-tenant” and physical infrastructure is shared
- Private Cloud:
  - Cloud infrastructure is operated solely for an organization. It may be managed by the organization or on its behalf by a third party, and may exist on premises or at a provider’s site in a hosting center. It may use cloud software (e.g., Eucalyptus)
- Hybrid Cloud:
  - The organization provides and manages some resources in-house and has others provided externally
  - Possibility to leverage existing and future technologies with minimal cost (e.g., backup/archive data managed externally, operational data managed internally)

Photo credit: AcuteSys

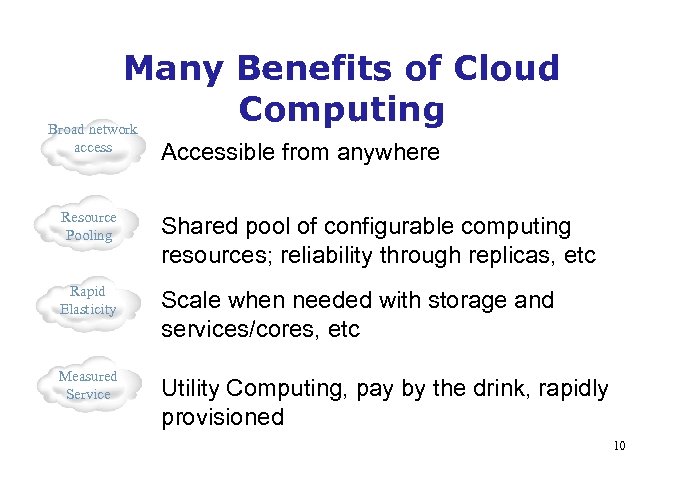

Many Benefits of Cloud Computing

- Broad network access: accessible from anywhere
- Resource pooling: shared pool of configurable computing resources; reliability through replicas, etc.
- Rapid elasticity: scale when needed with storage and services/cores, etc.
- Measured service: utility computing, pay by the drink, rapidly provisioned

Challenges of Cloud Storage

- Data Integrity
- Ownership (local control, etc.)
- Security
- ITAR
- Data movement to/from cloud
- Procurement
- Cost arrangements

The Planetary Cloud Experiment

- Utility to PDS
  - How does it fit the PDS4 architecture
  - APIs
  - Decoupled storage and services
  - Data movement challenges?
- Cloud storage tested as a secondary storage option
  - iRODS @ SDSC, Amazon (S3), Nirvanix

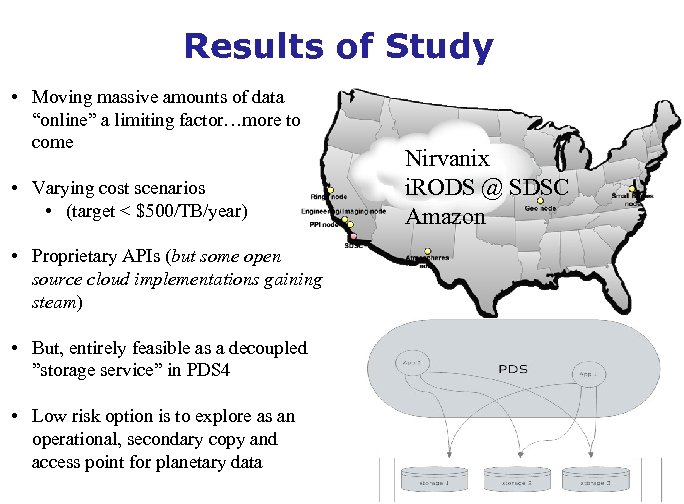

Results of Study

- Moving massive amounts of data “online” is a limiting factor… more to come
- Varying cost scenarios
  - (target < $500/TB/year)
- Proprietary APIs (but some open source cloud implementations are gaining steam)
- But entirely feasible as a decoupled “storage service” in PDS4
- Low risk option is to explore as an operational, secondary copy and access point for planetary data

Nirvanix, iRODS @ SDSC, Amazon

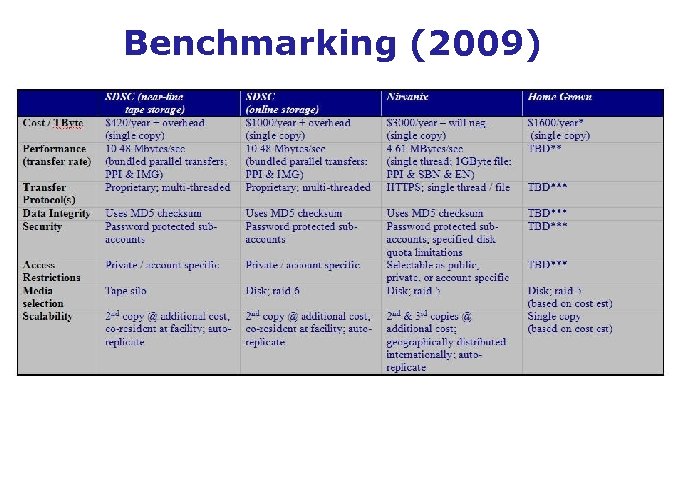

Benchmarking (2009)

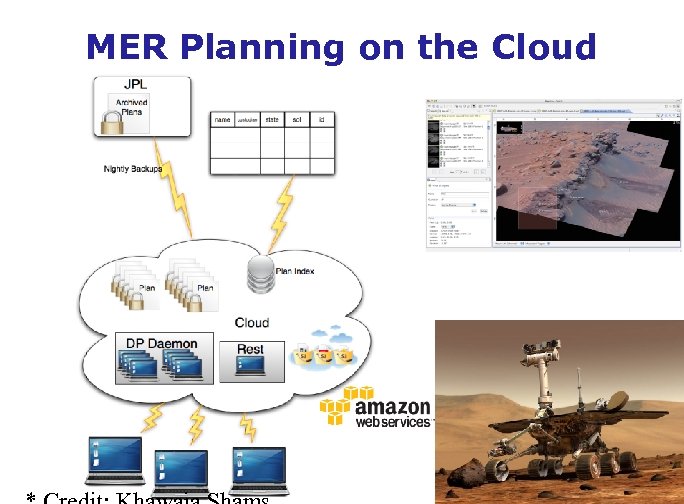

MER Planning on the Cloud

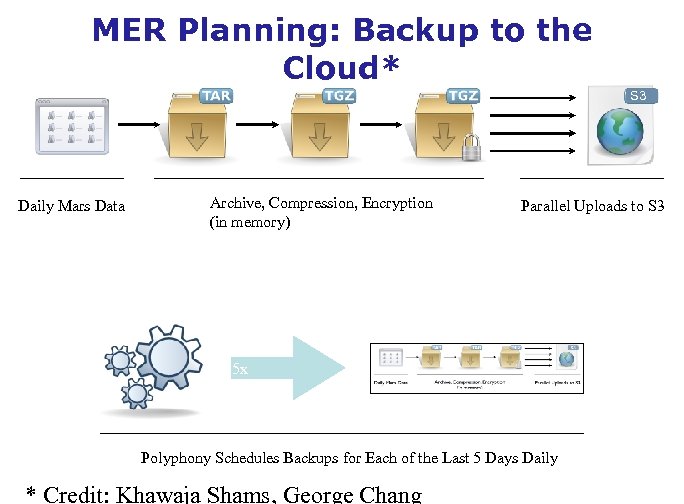

MER Planning: Backup to the Cloud*

- Daily Mars data: archive, compression, encryption (in memory)
- Parallel uploads to S3 (5x)
- Polyphony schedules backups for each of the last 5 days, daily

* Credit: Khawaja Shams, George Chang
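
The backup flow on this slide (archive and compress in memory, then upload in parallel) can be illustrated with a minimal Python sketch. It is not the MER or Polyphony implementation: `build_archive`, `split_parts`, `upload_parallel`, and the `upload_part` callback are hypothetical names, the default of five workers is an arbitrary choice, and the encryption step is left out because the Python standard library has no suitable cipher.

```python
import io
import tarfile
from concurrent.futures import ThreadPoolExecutor


def build_archive(files):
    """Pack {name: bytes} into a gzip-compressed tar archive held entirely in memory."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as tar:
        for name, data in sorted(files.items()):
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buffer.getvalue()


def split_parts(blob, part_size):
    """Cut the archive into fixed-size parts that can be uploaded independently."""
    return [blob[i:i + part_size] for i in range(0, len(blob), part_size)]


def upload_parallel(parts, upload_part, workers=5):
    """Upload all parts concurrently.

    upload_part(index, data) is a hypothetical stand-in for a real storage client call.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(upload_part, i, part) for i, part in enumerate(parts)]
        return [future.result() for future in futures]
```

In a real deployment the callback would wrap the storage provider's client, and the archive would be encrypted before it is split into parts.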

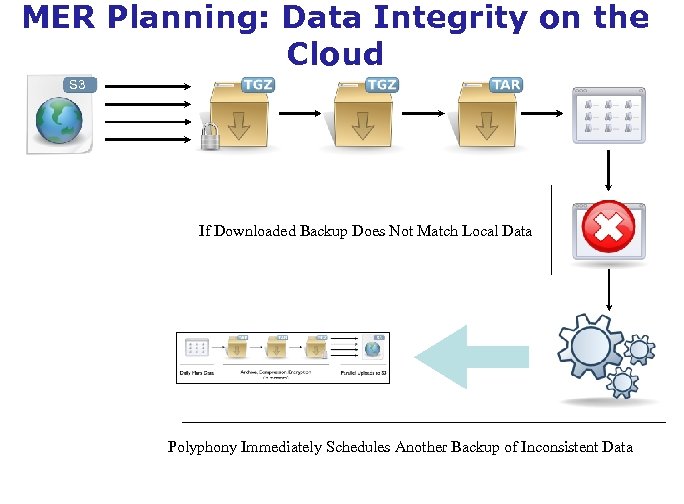

MER Planning: Data Integrity on the Cloud

- If the backup downloaded from S3 does not match the local data, Polyphony immediately schedules another backup of the inconsistent data
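
The integrity rule on this slide can be sketched as a checksum comparison. This is a minimal illustration under stated assumptions, not Polyphony's actual logic: the function names and the `schedule_backup` callback are hypothetical, and SHA-256 is an assumed choice of digest.

```python
import hashlib


def sha256_hex(data):
    """Hex digest used to compare a local item with its downloaded backup."""
    return hashlib.sha256(data).hexdigest()


def find_inconsistent(local, downloaded):
    """Return names whose downloaded backup is missing or differs from the local data."""
    bad = []
    for name, data in sorted(local.items()):
        copy = downloaded.get(name)
        if copy is None or sha256_hex(copy) != sha256_hex(data):
            bad.append(name)
    return bad


def verify_and_reschedule(local, downloaded, schedule_backup):
    """Call schedule_backup(name) for every inconsistent item and return those names.

    schedule_backup is a hypothetical hook into whatever scheduler is in use.
    """
    bad = find_inconsistent(local, downloaded)
    for name in bad:
        schedule_backup(name)
    return bad
```

Comparing digests rather than raw bytes means the local side only needs to keep a small checksum manifest once the data itself has been rotated out.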

Big Data Challenges

- Storage
- Computation
- Movement of Data
- Heterogeneity
- Distribution

…can affect how we generate, manage, and analyze science data.

Cloud Computing and Computation

- On-demand computation (scaling to a massive number of cores)
  - Amazon EC2, one of the most popular
- Commoditizing super-computing
- Again, architecting systems to decouple “processing” and “computation” so they can be executed on the cloud is key… two examples
  - LMMP example (to come)
  - Airborne data processing (to come)
- Coupled with computational frameworks (e.g., Apache Hadoop)
  - Open source implementation of Map-Reduce

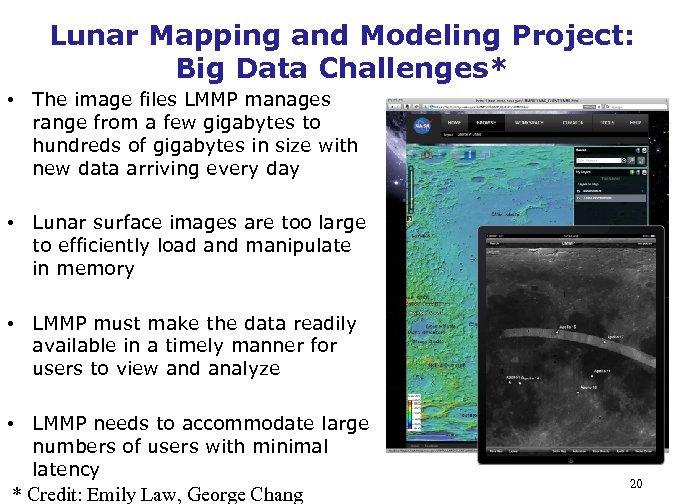

Lunar Mapping and Modeling Project: Big Data Challenges*

- The image files LMMP manages range from a few gigabytes to hundreds of gigabytes in size, with new data arriving every day
- Lunar surface images are too large to efficiently load and manipulate in memory
- LMMP must make the data readily available in a timely manner for users to view and analyze
- LMMP needs to accommodate large numbers of users with minimal latency

* Credit: Emily Law, George Chang

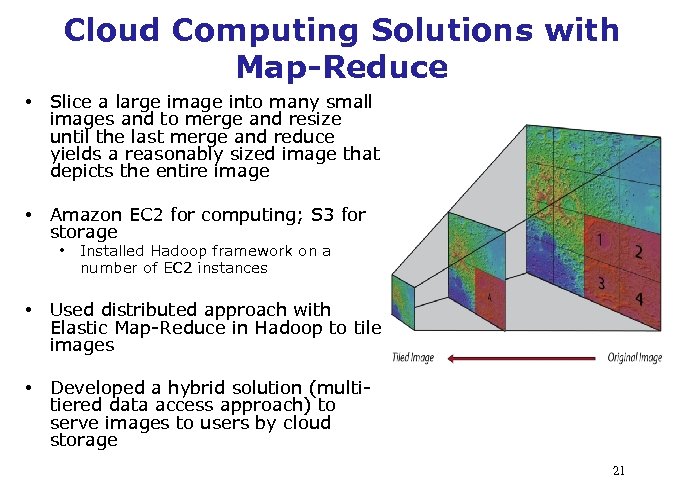

Cloud Computing Solutions with Map-Reduce

- Slice a large image into many small images, then merge and resize until the last merge and reduce yields a reasonably sized image that depicts the entire image
- Amazon EC2 for computing; S3 for storage
- Installed the Hadoop framework on a number of EC2 instances
- Used a distributed approach with Elastic Map-Reduce in Hadoop to tile images
- Developed a hybrid solution (multitiered data access approach) to serve images to users from cloud storage
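
The slice, merge, and resize idea can be shown with a small single-process Python sketch. It is not the LMMP Hadoop job: the function names are hypothetical, the image is a plain 2D list of pixel values, and the sketch assumes even dimensions at every level (for example a square image whose side is a power of two). In a Map-Reduce setting the slicing would be the map step and the merge-and-shrink would be the reduce step.

```python
def slice_tiles(image, tile):
    """Cut a 2D grid of pixel values into tile x tile blocks keyed by (row, col)."""
    tiles = {}
    for r in range(0, len(image), tile):
        for c in range(0, len(image[0]), tile):
            tiles[(r // tile, c // tile)] = [row[c:c + tile] for row in image[r:r + tile]]
    return tiles


def downsample(image):
    """Halve width and height by averaging each 2x2 block of pixels."""
    return [
        [
            (image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]) / 4
            for c in range(0, len(image[0]), 2)
        ]
        for r in range(0, len(image), 2)
    ]


def build_pyramid(image, tile):
    """Tile each level, then shrink, until the whole image fits in a single tile."""
    levels = []
    while True:
        levels.append(slice_tiles(image, tile))
        if len(image) <= tile and len(image[0]) <= tile:
            return levels
        image = downsample(image)
```

The first level holds the full-resolution tiles and the last level holds one tile that depicts the entire image, so a viewer can fetch only the tiles needed for the current zoom level.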

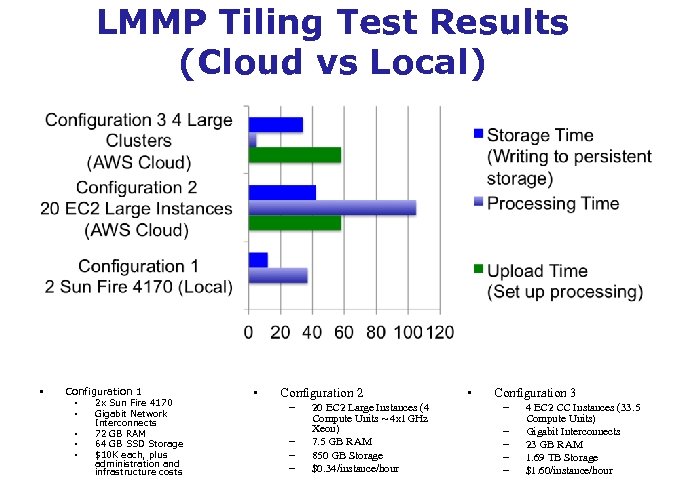

LMMP Tiling Test Results (Cloud vs Local)

- Configuration 1
  - 2 x Sun Fire 4170
  - Gigabit network interconnects
  - 72 GB RAM
  - 64 GB SSD storage
  - $10K each, plus administration and infrastructure costs
- Configuration 2
  - 20 EC2 Large instances (4 Compute Units ~ 4 x 1 GHz Xeon)
  - 7.5 GB RAM
  - 850 GB storage
  - $0.34/instance/hour
- Configuration 3
  - 4 EC2 CC instances (33.5 Compute Units)
  - Gigabit interconnects
  - 23 GB RAM
  - 1.69 TB storage
  - $1.60/instance/hour
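
As a rough way to compare the prices listed for the three configurations, the following Python sketch computes how many hours of cloud use would cost as much as buying the two local servers. It uses only the prices on this slide and deliberately ignores administration and infrastructure costs, storage and data transfer fees, and any difference in run time between configurations, so it is an illustration rather than a cost model. Amounts are kept in integer cents to avoid floating-point rounding; the constant and function names are hypothetical.

```python
# Prices taken from the slide, expressed in cents.
LOCAL_PURCHASE_CENTS = 2 * 10_000 * 100    # Configuration 1: two servers at $10K each
EC2_LARGE_CENTS_PER_HOUR = 20 * 34         # Configuration 2: 20 instances at $0.34/hour
EC2_CC_CENTS_PER_HOUR = 4 * 160            # Configuration 3: 4 instances at $1.60/hour


def break_even_hours(purchase_cents, hourly_cents):
    """Whole hours of cloud use whose cost first reaches the purchase price."""
    return -(-purchase_cents // hourly_cents)  # ceiling division on integers


large_hours = break_even_hours(LOCAL_PURCHASE_CENTS, EC2_LARGE_CENTS_PER_HOUR)
cc_hours = break_even_hours(LOCAL_PURCHASE_CENTS, EC2_CC_CENTS_PER_HOUR)
print(f"Configuration 2: ${EC2_LARGE_CENTS_PER_HOUR / 100:.2f}/hour, break-even after {large_hours} hours")
print(f"Configuration 3: ${EC2_CC_CENTS_PER_HOUR / 100:.2f}/hour, break-even after {cc_hours} hours")
```

Under these assumptions the cloud configurations cost $6.80 and $6.40 per hour of cluster time, so the comparison hinges on how many hours per year the tiling workload actually runs.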

Cloud Computing: Addressing Challenges

- Cloud has shown very promising results, but there are challenges
  - Proprietary APIs
  - Support for ITAR-sensitive data
  - Data transfer rates to the commercial cloud
  - Firewall issues
  - Procurement
  - Costs for long term storage
- More work ahead
  - Amazon EC2/S3 reported an “ITAR Region” available
  - Continued benchmarking and optimization have demonstrated increased data transfer rates, particularly using Internet2
  - JPL is developing a “Virtual Private Cloud” connection to Amazon, causing EC2 nodes to appear inside the JPL firewall
  - Improved procurement process to allow JPL projects to use AWS

Big Data Challenges

- Storage
- Computation
- Movement of Data
- Heterogeneity
- Distribution

…can affect how we generate, manage, and analyze science data.

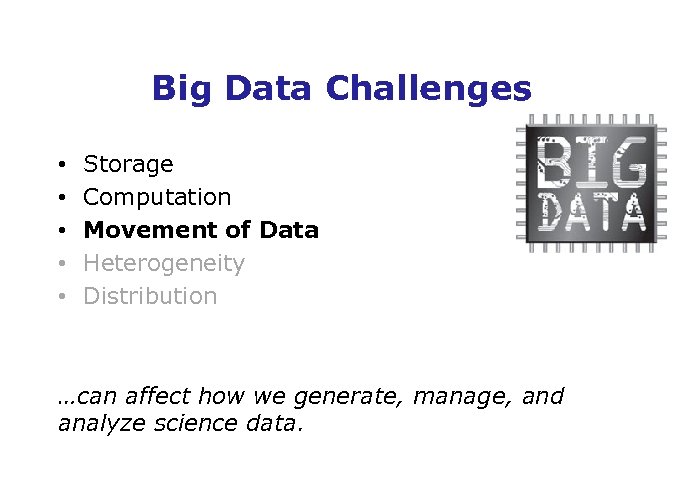

The Planetary Data Movement Experiment

- Online data movement has been a limiting factor for embracing big data technologies
- Conducted in 2006*, 2009 and 2012
- Evaluate trade-offs for moving data
  - to PDS
  - between Nodes
  - to NSSDC/deep archive
  - to Cloud

* C. Mattmann, S. Kelly, D. Crichton, J. S. Hughes, S. Hardman, R. Joyner and P. Ramirez. A Classification and Evaluation of Data Movement Technologies for the Delivery of Highly Voluminous Scientific Data Products. In Proceedings of the NASA/IEEE Conference on Mass Storage Systems and Technologies (MSST 2006), pp. 131-135, College Park, Maryland, May 15-18, 2006.

Data Xfer Technologies Evaluated

- FTP uses a single connection for transferring files; in general it is ubiquitous and, where possible, the simplest way for PDS to transfer data electronically
- bbFTP uses multiple threads/connections to improve data transfer. It works well as long as the number of connections is kept to a reasonable limit
- GridFTP uses multiple threads/connections. It is part of the Globus project and is used by the climate research community to move models. In general, tests have shown that it is more difficult to set up due to the security infrastructure, etc.
- iRODS uses multiple threads/connections to improve data transfer. It works well as long as the number of connections is kept to a reasonable limit
- FDT uses multiple threads/connections to improve data transfer. It works well as long as the number of connections is kept to a reasonable limit
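
The multi-connection idea shared by bbFTP, GridFTP, iRODS, and FDT can be illustrated with a local Python sketch in which each worker thread copies one byte range of a file, standing in for one network connection. This is not how any of those tools is implemented; `copy_range` and `parallel_copy` are hypothetical names, and a local file copy replaces the network transfer so the example stays self-contained.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def copy_range(src, dst, offset, length):
    """Copy one byte range; each worker opens its own handles, like one connection."""
    with open(src, "rb") as fin, open(dst, "r+b") as fout:
        fin.seek(offset)
        fout.seek(offset)
        fout.write(fin.read(length))
    return length


def parallel_copy(src, dst, streams=4):
    """Split src into up to `streams` contiguous ranges and copy them concurrently."""
    size = os.path.getsize(src)
    with open(dst, "wb") as fout:
        fout.truncate(size)  # pre-size the destination so ranges can land at any offset
    chunk = max(1, -(-size // streams))  # ceiling division
    ranges = [(off, min(chunk, size - off)) for off in range(0, size, chunk)]
    with ThreadPoolExecutor(max_workers=streams) as pool:
        copied = pool.map(lambda r: copy_range(src, dst, *r), ranges)
        return sum(copied)
```

The `streams` parameter corresponds to the number of connections, which the slide advises keeping to a reasonable limit.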

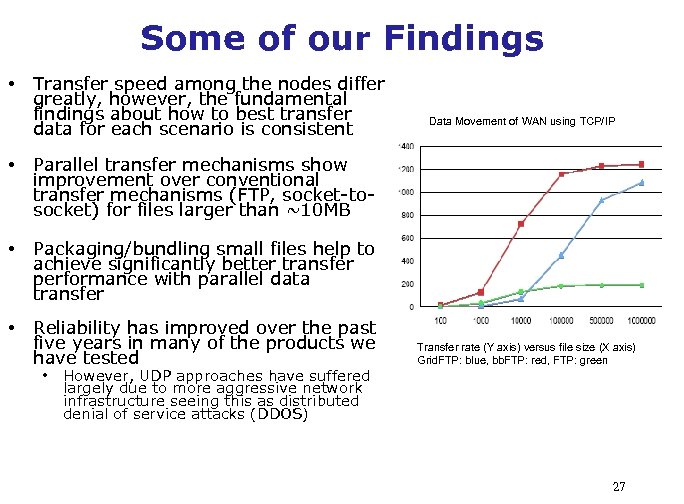

Some of our Findings

- Transfer speeds among the nodes differ greatly; however, the fundamental findings about how best to transfer data for each scenario are consistent
- Parallel transfer mechanisms show improvement over conventional transfer mechanisms (FTP, socket-to-socket) for files larger than ~10 MB
- Packaging/bundling small files helps to achieve significantly better transfer performance with parallel data transfer
- Reliability has improved over the past five years in many of the products we have tested
  - However, UDP approaches have suffered, largely due to more aggressive network infrastructure seeing this traffic as distributed denial of service (DDoS) attacks

Figure: Data movement over WAN using TCP/IP. Transfer rate (Y axis) versus file size (X axis). GridFTP: blue, bbFTP: red, FTP: green.
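
The bundling finding can be sketched as a simple planner that groups small files until each bundle reaches a target size. This is an illustrative sketch only: `plan_bundles` is a hypothetical helper, and the 10 MiB target is an assumption drawn from the ~10 MB threshold mentioned above, not a PDS recommendation.

```python
TARGET_BUNDLE_BYTES = 10 * 1024 * 1024  # assumed target, based on the ~10 MB finding


def plan_bundles(files, target=TARGET_BUNDLE_BYTES):
    """Group (name, size) pairs into bundles of at least `target` bytes where possible.

    Files already at or above the target are sent on their own.
    """
    bundles, current, current_size = [], [], 0
    for name, size in files:
        if size >= target:
            bundles.append([name])
            continue
        current.append(name)
        current_size += size
        if current_size >= target:
            bundles.append(current)
            current, current_size = [], 0
    if current:
        bundles.append(current)  # leftover small files form a final, smaller bundle
    return bundles
```

Each bundle would then be packaged (for example as a tar archive) and handed to a parallel transfer tool as a single large file.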

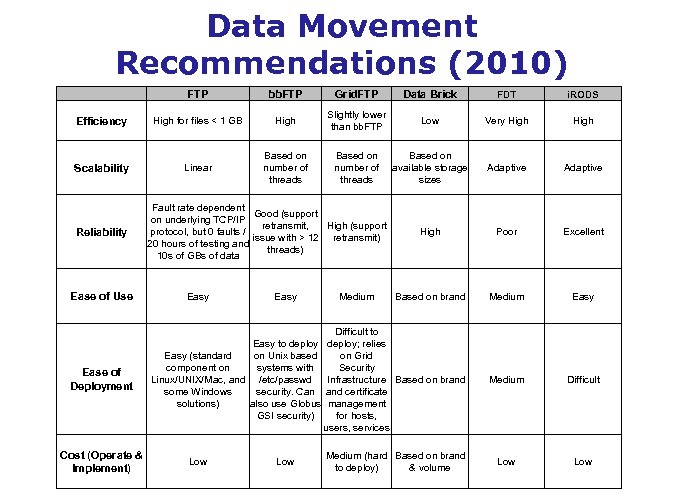

Data Movement Recommendations (2010)

Comparison table of FTP, bbFTP, GridFTP, Data Brick, FDT, and iRODS on Efficiency, Scalability, Reliability, Ease of Use, Ease of Deployment, and Cost (Operate & Implement). Recoverable cell values, in source order:

- Efficiency: High for files < 1 GB; High; Slightly lower than bbFTP; Low; Very High
- Scalability: Linear; Based on number of threads; Based on available storage sizes; Adaptive; High
- Remaining cells (Reliability, Ease of Use, Ease of Deployment, Cost):
  - Fault rate dependent on underlying TCP/IP protocol, but 0 faults / 20 hours of testing and 10s of GBs of data
  - Good (support retransmit, issue with > 12 threads)
  - High (support retransmit)
  - Easy (standard component on Linux/UNIX/Mac, and some Windows solutions)
  - Easy to deploy on Unix based systems with /etc/passwd security. Can also use Globus GSI security
  - Difficult to deploy; relies on Grid Security Infrastructure certificate management for hosts, users, services
  - Medium (hard to deploy)
  - Based on brand & volume
  - Poor; Excellent; Based on brand; Medium; Easy; Based on brand; Medium; Difficult; Low; Low; Medium

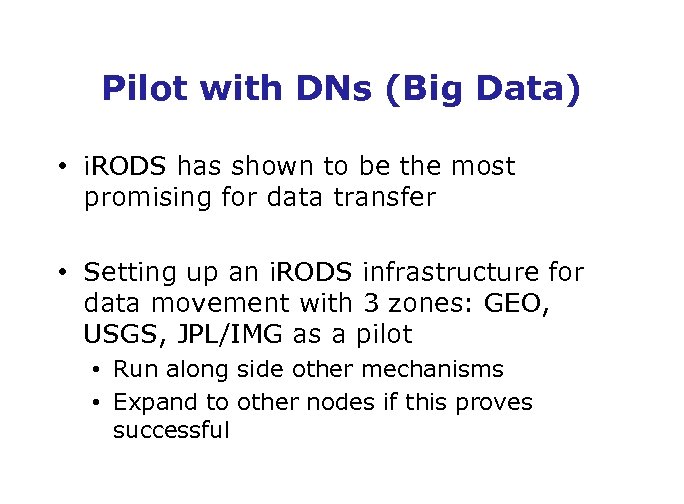

Pilot with DNs (Big Data)

- iRODS has been shown to be the most promising for data transfer
- Setting up an iRODS infrastructure for data movement with 3 zones (GEO, USGS, JPL/IMG) as a pilot
- Run alongside other mechanisms
- Expand to other nodes if this proves successful

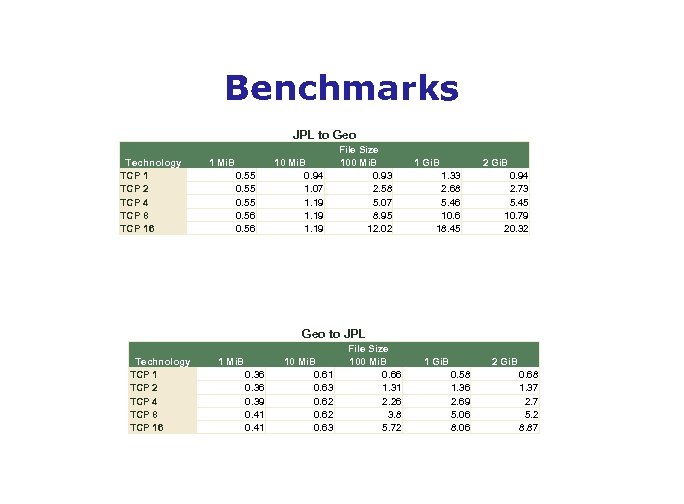

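Benchmarks

JPL to Geo (rows: file size; columns: technology)

| File Size | TCP 1 | TCP 2 | TCP 4 | TCP 8 | TCP 16 |
|---|---|---|---|---|---|
| 100 MiB | 0.93 | 2.58 | 5.07 | 8.95 | 12.02 |
| 1 GiB | 1.33 | 2.68 | 5.46 | 10.6 | 18.45 |
| 2 GiB | 0.94 | 2.73 | 5.45 | 10.79 | 20.32 |

File sizes with fewer than five surviving values (in source order): 1 MiB: 0.55, 0.56; 10 MiB: 0.94, 1.07, 1.19

Geo to JPL (rows: file size; columns: technology)

| File Size | TCP 1 | TCP 2 | TCP 4 | TCP 8 | TCP 16 |
|---|---|---|---|---|---|
| 100 MiB | 0.66 | 1.31 | 2.26 | 3.8 | 5.72 |
| 1 GiB | 0.58 | 1.36 | 2.69 | 5.06 | 8.06 |
| 2 GiB | 0.68 | 1.37 | 2.7 | 5.2 | 8.87 |

File sizes with fewer than five surviving values (in source order): 1 MiB: 0.36, 0.39, 0.41; 10 MiB: 0.61, 0.63, 0.62, 0.63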
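
To read the JPL to Geo benchmark in terms of scaling, the following Python sketch computes the speedup relative to the first column for the three file sizes that have a full set of five values. It assumes that "TCP n" means n parallel TCP streams and that the values are transfer rates (the slide text does not state units, and the ratios do not depend on them); the function names are hypothetical.

```python
# File size -> values for TCP 1, 2, 4, 8, 16, copied from the JPL to Geo benchmark.
JPL_TO_GEO = {
    "100 MiB": [0.93, 2.58, 5.07, 8.95, 12.02],
    "1 GiB": [1.33, 2.68, 5.46, 10.6, 18.45],
    "2 GiB": [0.94, 2.73, 5.45, 10.79, 20.32],
}
STREAMS = [1, 2, 4, 8, 16]  # assumed meaning of the TCP 1 ... TCP 16 labels


def speedups(rates):
    """Value at each stream count divided by the single-stream value."""
    return [round(rate / rates[0], 2) for rate in rates]


def parallel_efficiency(rates):
    """Speedup divided by stream count; 1.0 would mean perfectly linear scaling."""
    return [round(s / n, 2) for s, n in zip(speedups(rates), STREAMS)]


for size, rates in JPL_TO_GEO.items():
    print(size, speedups(rates), parallel_efficiency(rates))
```

Under that reading, going from 1 to 16 streams raises the measured value by roughly 13x for 100 MiB and 1 GiB files and by about 22x for 2 GiB files; an efficiency above 1.0 suggests the single-stream baseline for that file size was unusually low.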

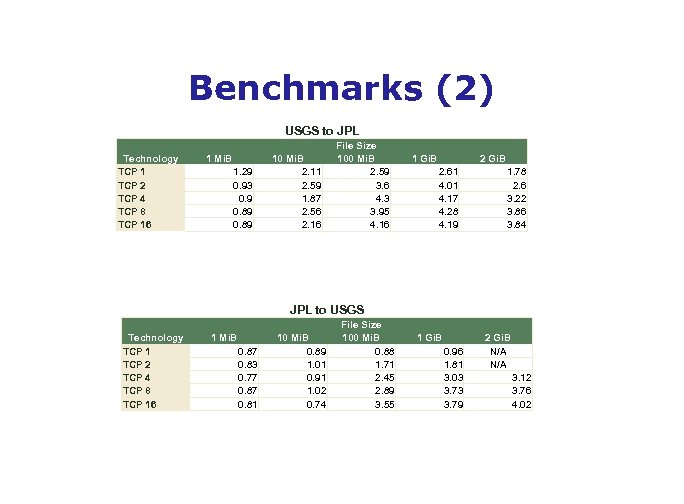

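Benchmarks (2)

USGS to JPL (rows: file size; columns: technology)

| File Size | TCP 1 | TCP 2 | TCP 4 | TCP 8 | TCP 16 |
|---|---|---|---|---|---|
| 10 MiB | 2.11 | 2.59 | 1.87 | 2.56 | 2.16 |
| 100 MiB | 2.59 | 3.6 | 4.3 | 3.95 | 4.16 |
| 1 GiB | 2.61 | 4.01 | 4.17 | 4.28 | 4.19 |
| (unlabeled, likely 2 GiB) | 1.78 | 2.6 | 3.22 | 3.86 | 3.84 |

File sizes with fewer than five surviving values (in source order): 1 MiB: 1.29, 0.93, 0.9, 0.89

JPL to USGS (rows: file size; columns: technology)

| File Size | TCP 1 | TCP 2 | TCP 4 | TCP 8 | TCP 16 |
|---|---|---|---|---|---|
| 10 MiB | 0.89 | 1.01 | 0.91 | 1.02 | 0.74 |
| 100 MiB | 0.88 | 1.71 | 2.45 | 2.89 | 3.55 |

File sizes with fewer than five surviving values (in source order): 1 MiB: 0.87, 0.83, 0.77, 0.81; 1 GiB: 0.96, 1.81, 3.03, 3.79; 2 GiB: N/A, 3.12, 3.76, 4.02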

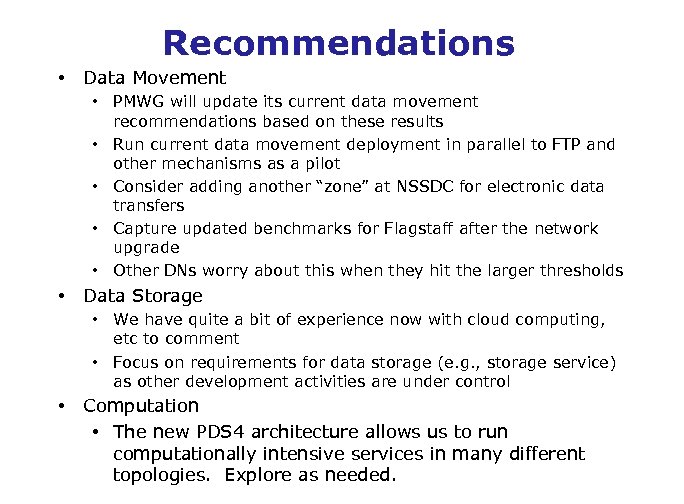

Recommendations

- Data Movement
  - PMWG will update its current data movement recommendations based on these results
  - Run the current data movement deployment in parallel to FTP and other mechanisms as a pilot
  - Consider adding another “zone” at NSSDC for electronic data transfers
  - Capture updated benchmarks for Flagstaff after the network upgrade
  - Other DNs should worry about this when they hit the larger thresholds
- Data Storage
  - We now have quite a bit of experience with cloud computing, etc., to comment
  - Focus on requirements for data storage (e.g., storage service), as other development activities are under control
- Computation
  - The new PDS4 architecture allows us to run computationally intensive services in many different topologies. Explore as needed.
