9ce378094b32b1543318b8a02ca46373.ppt

- Number of slides: 15

Parallelization of CPAIMD using Charm++

Parallel Programming Lab

CPAIMD

- Collaboration with Glenn Martyna and Mark Tuckerman
- MPI code: PINY
  - Scalability problems when #procs >= #orbitals
- Charm++ approach
  - Better scalability using virtualization
  - Further divide orbitals

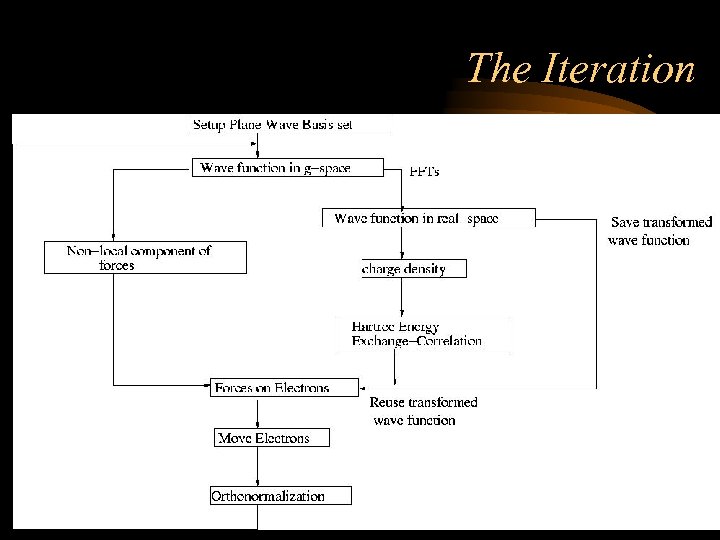
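The scalability limit above comes from the unit of work: if each orbital is a single object, processors beyond the number of orbitals have nothing to do. A minimal sketch of that count, assuming simple round-robin placement and a hypothetical 32 planes per state (the slides do not give a plane count):

```python
def busy_processors(num_objects, num_procs):
    """Processors that own at least one object under round-robin placement."""
    return min(num_objects, num_procs)


def decompose(num_states, planes_per_state):
    """Enumerate hypothetical virtual processors, one per (state, plane) pair."""
    return [(s, p) for s in range(num_states) for p in range(planes_per_state)]


NUM_STATES = 128  # from the slides
PLANES = 32       # assumed plane count per state, for illustration only

for procs in (128, 256, 1024):
    orbital_only = busy_processors(NUM_STATES, procs)
    virtualized = busy_processors(len(decompose(NUM_STATES, PLANES)), procs)
    print(f"{procs:5d} procs: orbital-only keeps {orbital_only} busy, "
          f"(state, plane) objects keep {virtualized} busy")
```

In this toy count, orbital-only decomposition stays at 128 busy processors, while splitting orbitals into planes yields enough objects to occupy all 1024.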

The Iteration

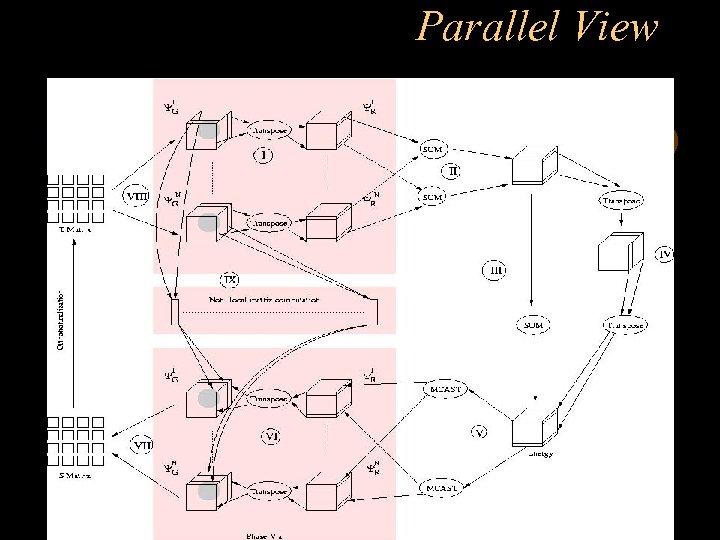

The Iteration (contd.)

- Start with 128 “states”
  - State: spatial representation of an electron
- FFT each of the 128 states
  - In parallel
  - Planar decomposition => transpose
- Compute densities (DFT)
  - Compute energies using the density
- Compute forces and move electrons
- Orthonormalize states
- Start over
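The "compute densities" step can be illustrated on a tiny grid: the density at each grid point is the sum over states of |psi(r)|^2. A minimal sketch, assuming the states have already been transformed to real space and ignoring occupation numbers (each state gets weight 1); the grid values are made up:

```python
def density(states):
    """Sum |psi_i(r)|^2 over all states, point by point on the grid."""
    npts = len(states[0])
    rho = [0.0] * npts
    for psi in states:
        for r, value in enumerate(psi):
            rho[r] += abs(value) ** 2
    return rho


# Two hypothetical states sampled at three grid points.
psi_a = [1 + 0j, 0j, 1j]
psi_b = [0j, 2 + 0j, 1 + 0j]
print(density([psi_a, psi_b]))  # [1.0, 4.0, 2.0]
```

In the real iteration this sum runs over all 128 states, which is why the density step needs data from every state.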

Parallel View

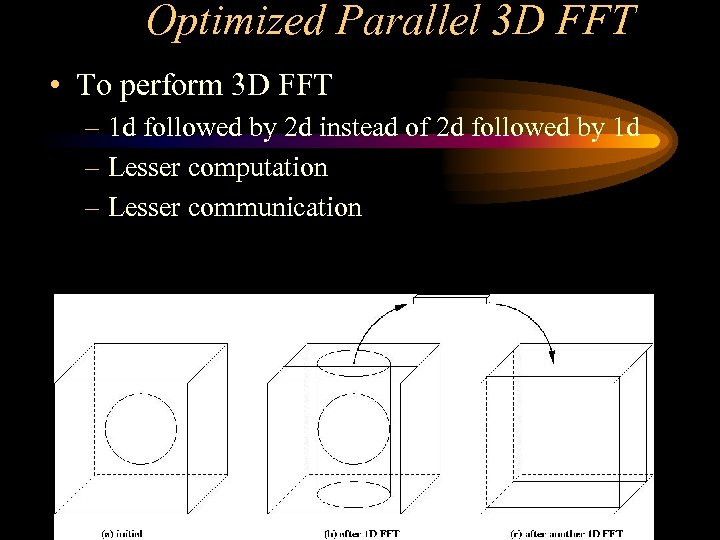

Optimized Parallel 3D FFT

- To perform a 3D FFT, do a 1D FFT followed by a 2D FFT instead of a 2D FFT followed by a 1D FFT
  - Less computation
  - Less communication

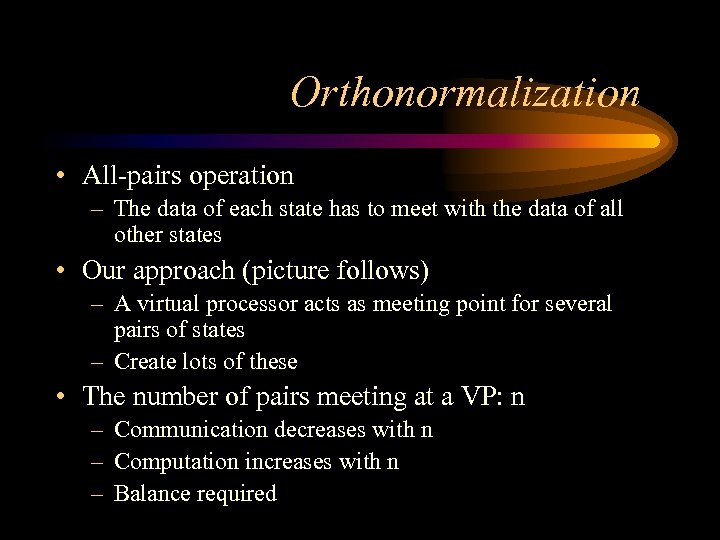

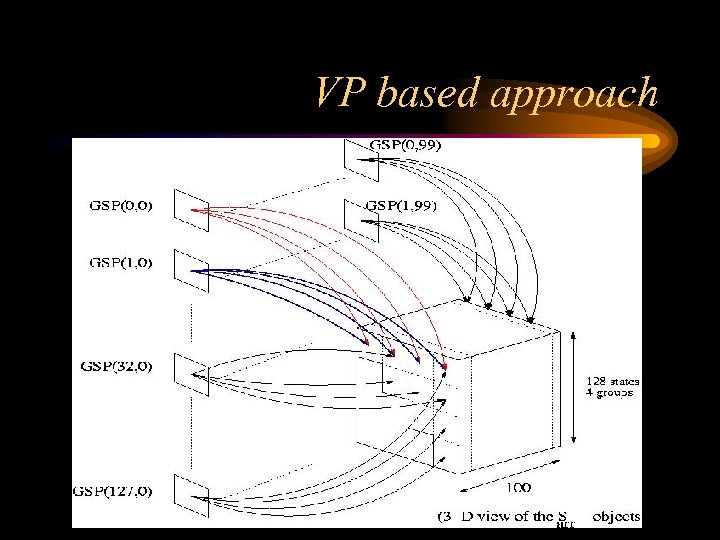
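The 1D-then-2D ordering is possible because the 3D transform is separable: transforming every z-line first and then every xy-plane gives the same result as the direct 3D sum. The sketch below checks this on a small grid, using a naive O(N^2) DFT in place of a real FFT library; the regrouping between the two stages is the step that becomes the transpose in a planar decomposition.

```python
import cmath
import itertools


def dft1d(seq):
    """Naive 1D DFT (stands in for an FFT)."""
    n = len(seq)
    return [sum(seq[j] * cmath.exp(-2j * cmath.pi * j * k / n) for j in range(n))
            for k in range(n)]


def dft2d(plane):
    """2D DFT of plane[x][y]: 1D along y, then 1D along x."""
    nx, ny = len(plane), len(plane[0])
    rows = [dft1d(row) for row in plane]                                  # rows[x][ky]
    cols = [dft1d([rows[x][ky] for x in range(nx)]) for ky in range(ny)]  # cols[ky][kx]
    return [[cols[ky][kx] for ky in range(ny)] for kx in range(nx)]


def dft3d_direct(a):
    """Reference: direct triple sum over a[x][y][z]."""
    nx, ny, nz = len(a), len(a[0]), len(a[0][0])
    out = [[[0j] * nz for _ in range(ny)] for _ in range(nx)]
    for kx, ky, kz in itertools.product(range(nx), range(ny), range(nz)):
        out[kx][ky][kz] = sum(
            a[x][y][z] * cmath.exp(-2j * cmath.pi * (x * kx / nx + y * ky / ny + z * kz / nz))
            for x, y, z in itertools.product(range(nx), range(ny), range(nz)))
    return out


def dft3d_1d_then_2d(a):
    """Stage 1: 1D DFT along z for each (x, y) line. Stage 2: 2D DFT of each kz-plane."""
    nx, ny, nz = len(a), len(a[0]), len(a[0][0])
    lines = [[dft1d(a[x][y]) for y in range(ny)] for x in range(nx)]
    out = [[[0j] * nz for _ in range(ny)] for _ in range(nx)]
    for kz in range(nz):
        # Regroup ("transpose"): gather the kz-th coefficient of every line into one plane.
        plane = [[lines[x][y][kz] for y in range(ny)] for x in range(nx)]
        result = dft2d(plane)
        for kx in range(nx):
            for ky in range(ny):
                out[kx][ky][kz] = result[kx][ky]
    return out


grid = [[[complex(x + 2 * y, z - y) for z in range(4)] for y in range(3)] for x in range(2)]
direct = dft3d_direct(grid)
staged = dft3d_1d_then_2d(grid)
max_err = max(abs(direct[i][j][k] - staged[i][j][k])
              for i in range(2) for j in range(3) for k in range(4))
print(f"max difference: {max_err:.2e}")
```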

Orthonormalization

- All-pairs operation
  - The data of each state has to meet the data of every other state
- Our approach (picture follows)
  - A virtual processor (VP) acts as the meeting point for several pairs of states
  - Create many such VPs
- n: the number of pairs meeting at a VP
  - Total communication decreases with n
  - Computation per VP increases with n
  - A balance is required

VP-based approach

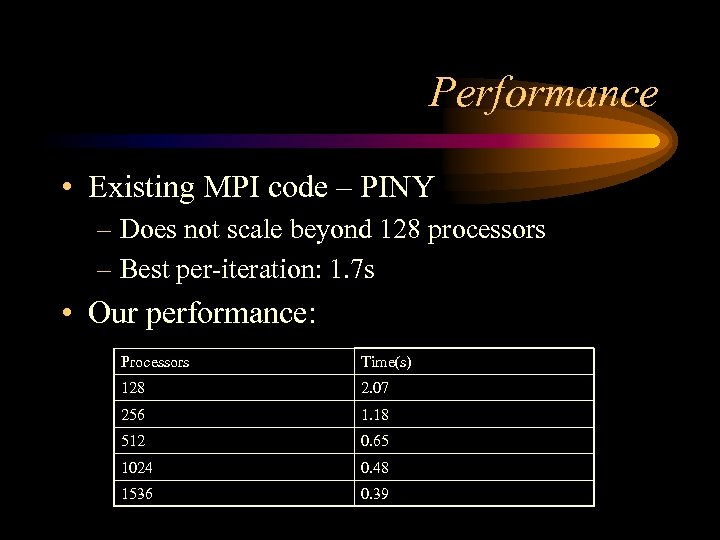
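One way to realize the meeting-point idea is to split the states into groups of g and give each VP one pair of groups, so that n = g^2 state pairs meet at an off-diagonal VP. This grouping, and the cost model below (counting states delivered to VPs rather than bytes), are assumptions for illustration, not the decomposition used in the actual code:

```python
def tile_vps(num_states, g):
    """One hypothetical VP per pair of state groups (i, j) with i <= j."""
    groups = [range(s, min(s + g, num_states)) for s in range(0, num_states, g)]
    return [(groups[i], groups[j])
            for i in range(len(groups)) for j in range(i, len(groups))]


def costs(num_states, g):
    """Return (#VPs, total states delivered to VPs, largest number of pairs at one VP)."""
    vps = tile_vps(num_states, g)
    # A diagonal VP (same group twice) needs that group's states only once.
    delivered = sum(len(a) + (len(b) if a != b else 0) for a, b in vps)
    largest = max(len(a) * len(b) for a, b in vps)
    return len(vps), delivered, largest


for g in (1, 4, 16):
    print(g, costs(128, g))
```

With 128 states, the total number of states delivered falls as 128^2/g while the work at the busiest VP grows as g^2, which is the trade-off the slide describes.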

Performance

- Existing MPI code: PINY
  - Does not scale beyond 128 processors
  - Best per-iteration time: 1.7 s
- Our performance:

| Processors | Time (s) |
|---|---|
| 128 | 2.07 |
| 256 | 1.18 |
| 512 | 0.65 |
| 1024 | 0.48 |
| 1536 | 0.39 |
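Since no single-processor time is given, speedup and parallel efficiency can only be computed relative to the 128-processor run. The times below are copied from the performance slide; the derived numbers are simple arithmetic, not additional measurements.

```python
# Per-iteration times from the performance slide, in seconds.
times = {128: 2.07, 256: 1.18, 512: 0.65, 1024: 0.48, 1536: 0.39}
BASE_PROCS = 128
BASE_TIME = times[BASE_PROCS]


def speedup(procs):
    """Speedup relative to the 128-processor run."""
    return BASE_TIME / times[procs]


def efficiency(procs):
    """Achieved speedup divided by the ideal speedup procs / 128."""
    return speedup(procs) / (procs / BASE_PROCS)


for p in sorted(times):
    print(f"{p:5d}  speedup {speedup(p):5.2f}  efficiency {efficiency(p):6.1%}")
```

By this measure the 1536-processor run is about 5.3 times faster than the 128-processor run, roughly 44% efficiency relative to that baseline.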

Load balancing

- Load imbalance due to the distribution of data in orbitals
  - Planes are sections of a sphere, so planes through the middle of the sphere contain more points than planes near its edge
  - Hence imbalance
- Computation: more points
- Communication: more data to send

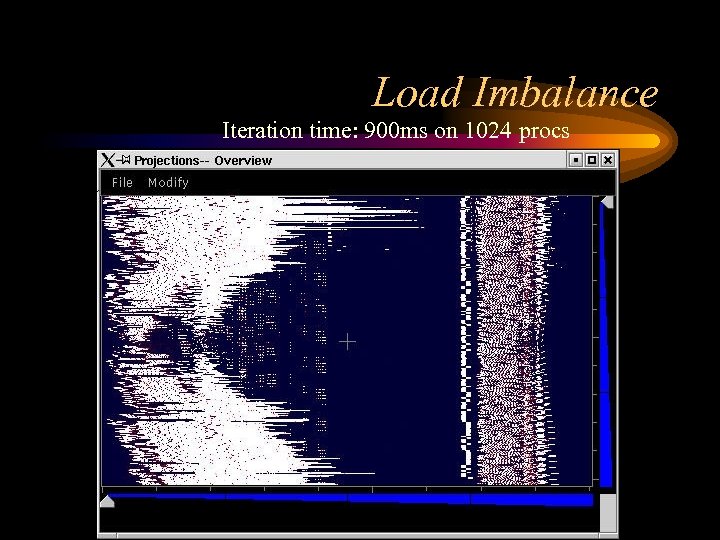
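The imbalance can be seen by counting grid points per plane of a sphere. A minimal sketch, assuming each state's data is the set of integer points inside a sphere of a hypothetical radius and that planes are cut along z:

```python
def points_per_plane(radius):
    """Number of integer points (x, y, z) with x^2 + y^2 + z^2 <= radius^2 in each z-plane."""
    r2 = radius * radius
    return [sum(1 for x in range(-radius, radius + 1)
                for y in range(-radius, radius + 1)
                if x * x + y * y + z * z <= r2)
            for z in range(-radius, radius + 1)]


print(points_per_plane(2))  # [1, 9, 13, 9, 1]
counts = points_per_plane(8)
print("heaviest plane:", max(counts), "lightest plane:", min(counts))
```

The middle plane holds far more points than the planes at the poles, so whichever processor owns it has more to compute and more to send.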

Load Imbalance

Iteration time: 900 ms on 1024 processors

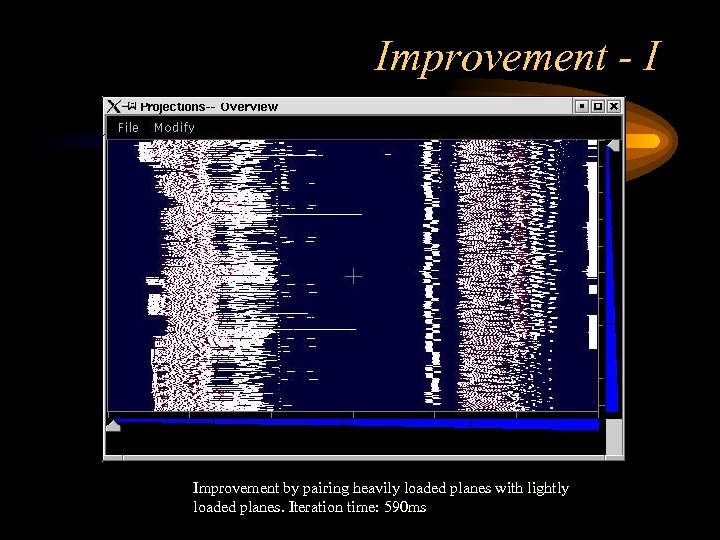

Improvement I

Improvement by pairing heavily loaded planes with lightly loaded planes. Iteration time: 590 ms

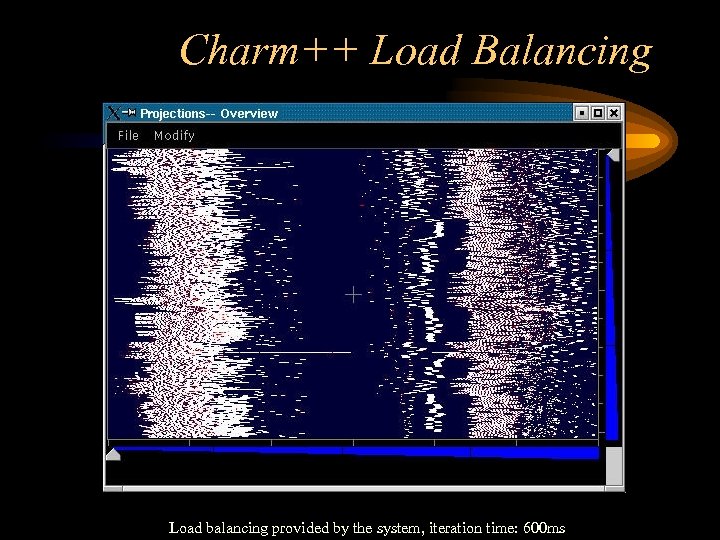
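Pairing heavy with light planes can be sketched as sorting planes by load and matching the heaviest remaining plane with the lightest. The per-plane loads here are hypothetical, and the baseline (adjacent planes paired in order) is an assumption for comparison:

```python
def pair_planes(loads):
    """Pair the heaviest remaining plane with the lightest remaining plane."""
    order = sorted(range(len(loads)), key=lambda i: loads[i])
    pairs = []
    lo, hi = 0, len(order) - 1
    while lo < hi:
        pairs.append((order[hi], order[lo]))
        lo += 1
        hi -= 1
    if lo == hi:  # odd number of planes: the median plane stays alone
        pairs.append((order[lo],))
    return pairs


def max_load(loads, groups):
    """Load of the busiest group (e.g. the planes placed on one processor)."""
    return max(sum(loads[i] for i in group) for group in groups)


loads = [5, 21, 37, 45, 45, 37, 21, 5]  # hypothetical per-plane loads
naive = [(i, i + 1) for i in range(0, len(loads), 2)]
paired = pair_planes(loads)
print(max_load(loads, naive), max_load(loads, paired))  # 82 58
```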

Charm++ Load Balancing

Load balancing provided by the Charm++ runtime system. Iteration time: 600 ms

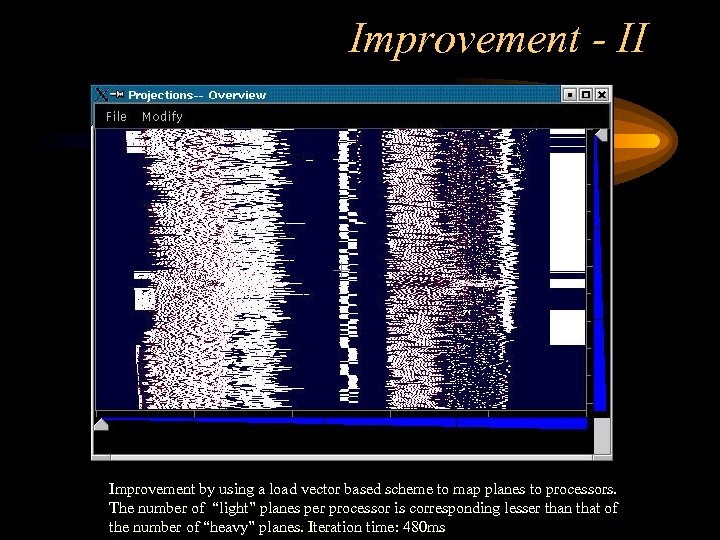

Improvement II

Improvement by using a load-vector-based scheme to map planes to processors. The number of “light” planes per processor is correspondingly lower than the number of “heavy” planes. Iteration time: 480 ms
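The slides do not spell out the load-vector scheme. As a stand-in, the sketch below uses a plain greedy longest-processing-time-first (LPT) mapping: planes are taken heaviest first, and each goes to the processor whose entry in the load vector is currently smallest. Plane loads and processor count are hypothetical:

```python
import heapq


def greedy_map(loads, num_procs):
    """LPT greedy: heaviest plane first, onto the currently least-loaded processor."""
    heap = [(0, p) for p in range(num_procs)]  # (current load, processor id)
    heapq.heapify(heap)
    assignment = {}
    for plane in sorted(range(len(loads)), key=lambda i: -loads[i]):
        load, proc = heapq.heappop(heap)
        assignment[plane] = proc
        heapq.heappush(heap, (load + loads[plane], proc))
    # Rebuild the per-processor load vector from the final assignment.
    load_vector = [0] * num_procs
    for plane, proc in assignment.items():
        load_vector[proc] += loads[plane]
    return assignment, load_vector


loads = [5, 21, 37, 45, 45, 37, 21, 5]  # hypothetical per-plane loads
assignment, load_vector = greedy_map(loads, 3)
print(load_vector)  # [71, 71, 74]
```

In this run the processor that receives two heavy planes ends up with fewer planes overall, while the light planes fill in on the other processors.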

Scope for Improvement

- Load balancing
  - The Charm++ load balancer shows encouraging results on 512 PEs (processing elements)
  - Combine automated and manual load balancing
- Avoid copying when sending messages
  - In FFTs
  - When sending large read-only messages
- Make FFTs more efficient
  - Use double packing
  - Make assumptions about the data distribution when performing FFTs
- Alternative implementation of orthonormalization
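The slide does not define "double packing". One likely reading, assumed here, is transforming two real-valued sequences with a single complex FFT: one sequence goes into the real part, the other into the imaginary part, and conjugate symmetry separates the two results. A sketch with a naive DFT standing in for the FFT; the input sequences are arbitrary:

```python
import cmath


def dft(seq):
    """Naive 1D DFT (stands in for an FFT)."""
    n = len(seq)
    return [sum(seq[j] * cmath.exp(-2j * cmath.pi * j * k / n) for j in range(n))
            for k in range(n)]


def two_real_dfts(a, b):
    """Transform real sequences a and b with one complex DFT of z = a + i*b."""
    n = len(a)
    z = dft([complex(x, y) for x, y in zip(a, b)])
    fa, fb = [], []
    for k in range(n):
        zc = z[(-k) % n].conjugate()  # conj(Z[N - k])
        fa.append((z[k] + zc) / 2)    # A[k] = (Z[k] + conj(Z[N-k])) / 2
        fb.append((z[k] - zc) / 2j)   # B[k] = (Z[k] - conj(Z[N-k])) / (2i)
    return fa, fb


a = [1.0, 2.0, 0.5, -1.0]
b = [0.0, 3.0, -2.0, 4.0]
fa, fb = two_real_dfts(a, b)
err = max(abs(x - y) for x, y in zip(fa + fb, dft(a) + dft(b)))
print(f"max difference from two separate DFTs: {err:.2e}")
```

The saving is one complex transform instead of two, which only works when both inputs are real-valued.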
