- Number of slides: 21

Parallel Computing 1: Motivation & Contexts

Ondřej Jakl, Institute of Geonics, Academy of Sciences of the Czech Republic

Outline of the lecture
- Motivation for parallel processing
  - Recent impulses
- Parallel versus distributed computing
  - XXX-Computing
- Levels of parallelism
  - Implicit parallelism

Why parallel processing? (1)
- Despite their increasing power, (sequential) computers are not powerful enough, at least in their users' opinion
- Interest in parallel processing dates back to the very beginnings of computing
  - R. Feynman's “parallel” calculations on electro-mechanical tabulators in the Manhattan Project, which developed the atomic bomb during WW2
  - ILLIAC IV (1964-1982) had 64 processors [next lecture]
- In fact, working in parallel is a natural way to cope with demanding work in everyday life
  - house construction, call centre, assembly line in manufacturing, etc.
  - stacking/reshelving a set of library books
  - digging a well?

[Figure: ILLIAC IV]

Why parallel processing? (2)
- The idea: let us divide the computing load among several co-operating processing elements. Then the task can be larger and the computation will finish sooner.
- In other words, parallel processing promises increases in
  - performance and capacity, but also
  - performance/cost ratio
  - reliability
  - programming productivity
  - etc.
- A parallel program
  - gives rise to at least two simultaneous and cooperating tasks (processes, threads), usually running on different processing elements
  - the tasks cooperate through synchronization and/or data exchange based on interprocess communication/interaction (message passing, data sharing)
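
The sketch below shows the idea of dividing the load among cooperating tasks that exchange data by message passing. It is a minimal illustration under stated assumptions, not the lecture's own code. The names `worker` and `parallel_sum_of_squares` are hypothetical. The data are split into contiguous chunks (data decomposition), and each task sends its partial result as a message through a `queue.Queue`. In CPython the global interpreter lock keeps these threads from executing Python bytecode truly in parallel, so the sketch shows the structure of a parallel program rather than a real speedup.

```python
import queue
import threading


def worker(rank: int, chunk: list[int], results: "queue.Queue[tuple[int, int]]") -> None:
    """One cooperating task: process its own chunk, then report by message."""
    partial = sum(x * x for x in chunk)
    # Message passing: send (task id, partial result) instead of writing shared state.
    results.put((rank, partial))


def parallel_sum_of_squares(data: list[int], n_tasks: int = 4) -> int:
    """Hypothetical example: sum of squares split across n_tasks threads."""
    results: "queue.Queue[tuple[int, int]]" = queue.Queue()
    # Data decomposition: contiguous chunks of (roughly) equal size.
    size = max(1, -(-len(data) // n_tasks))  # ceiling division, at least 1
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    tasks = [
        threading.Thread(target=worker, args=(rank, chunk, results))
        for rank, chunk in enumerate(chunks)
    ]
    for t in tasks:
        t.start()
    # Synchronization: wait until every task has finished.
    for t in tasks:
        t.join()
    # Combine the partial results received as messages.
    return sum(results.get()[1] for _ in tasks)


print(parallel_sum_of_squares(list(range(1, 101))))  # 338350
```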

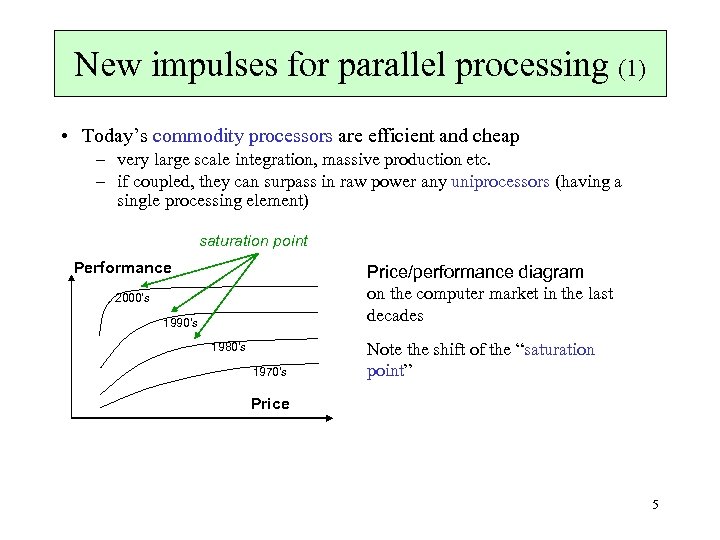

New impulses for parallel processing (1)
- Today's commodity processors are efficient and cheap
  - very large scale integration, mass production, etc.
  - if coupled, they can surpass in raw power any uniprocessor (a computer having a single processing element)

[Figure: price/performance diagram of the computer market over the last decades (1970s, 1980s, 1990s, 2000s); axes: price, performance. Note the shift of the “saturation point”.]

New impulses for parallel processing (2)
- The sequential (von Neumann) architecture seems to be approaching physical limits
  - given by the speed of light
  - heat effects
  - nevertheless, manufacturers keep increasing processor performance thanks to innovative approaches
    - including low-level parallelism, multicore architectures, etc.
    - Moore's Law
- Computer networks (LAN, WAN) are faster (Gbit/s), more reliable and suitable for network (distributed) computing
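
Moore's Law is usually stated as the number of transistors on a chip doubling roughly every two years. The two-year period is the commonly quoted figure, not a value taken from this lecture. Written as a formula, with $N(t)$ the transistor count in year $t$:

```latex
N(t) \approx N(t_0) \cdot 2^{(t - t_0)/2}, \qquad t \text{ in years}
```

For example, over ten years this gives a factor of $2^{10/2} = 2^5 = 32$. As the slide notes, these extra transistors are increasingly spent on parallelism (low-level parallelism, multiple cores) rather than on a faster single sequential engine.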

New impulses for parallel processing (3)
- New (commercial) applications drive the demand for computing power
  - data-intensive: videoconferencing, virtual reality, data mining, speech recognition, etc.
  - computing-intensive: scientific applications
  - numerical simulation of complex processes: manufacturing, chemistry, etc.
  - merging of data-intensive and computing-intensive applications
- Grand Challenge problems, games and entertainment, etc.
- Ongoing standardization eases the development and maintenance of parallel software

Recent impulse: multi-core
- 2005-2006: advent of multi-core chips in PCs
  - greater transistor densities: more than one processing element (instruction execution engine) can be placed on a single chip
  - cheap; they are replacing processors with a single processing element
- End of the “free lunch”
  - software developers have enjoyed steadily improving performance for decades
  - programs that cannot exploit multi-core chips do not realize any performance improvements
    - which is, in general, the case for existing software
  - most programmers do not know how to write parallel programs
  - the era of programmers not caring about what is under the hood is over
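
The claim that programs unable to exploit multiple cores gain nothing from them can be quantified with Amdahl's law. This is a standard model that does not appear on the slide. If a fraction f of the run time can be parallelized over p cores (ideally, with no overhead), the speedup is S(p) = 1 / ((1 - f) + f/p). The following is a minimal Python sketch; the function name `amdahl_speedup` is hypothetical.

```python
def amdahl_speedup(f: float, p: int) -> float:
    """Ideal speedup on p cores when a fraction f of the work is parallelizable (Amdahl's law)."""
    if not 0.0 <= f <= 1.0 or p < 1:
        raise ValueError("need 0 <= f <= 1 and p >= 1")
    # Sequential part (1 - f) is unchanged; parallel part f is divided by p.
    return 1.0 / ((1.0 - f) + f / p)


for f in (0.0, 0.5, 0.9, 1.0):
    print(f, round(amdahl_speedup(f, 4), 2))
```

A purely sequential program (f = 0) gets a speedup of 1.0 on four cores, which means no improvement at all. Even with f = 0.9, four cores give only about 3.08.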

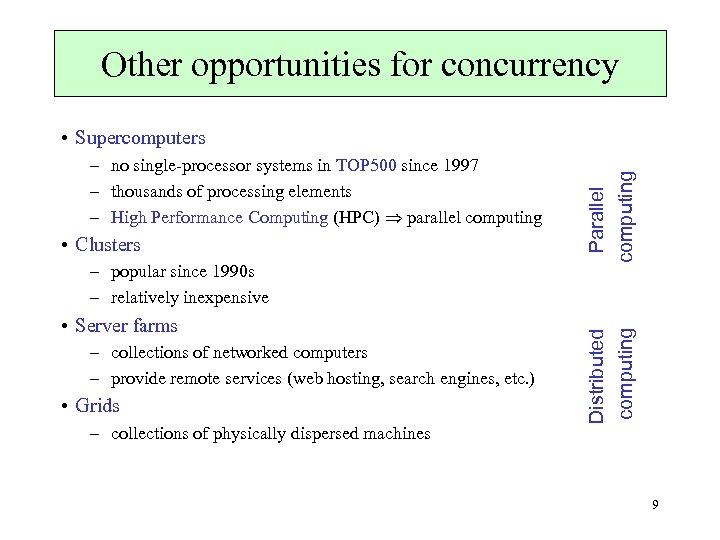

Other opportunities for concurrency
- Clusters
  - popular since the 1990s
  - relatively inexpensive
- Server farms
  - collections of networked computers
  - provide remote services (web hosting, search engines, etc.)
- Grids
  - collections of physically dispersed machines
- Supercomputers
  - no single-processor systems in the TOP500 since 1997
  - thousands of processing elements
  - High Performance Computing (HPC)

[Figure labels: Parallel computing; Distributed computing]

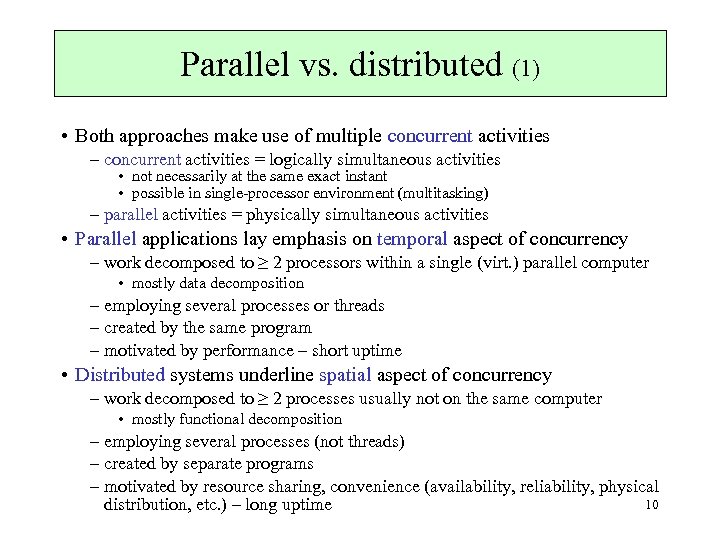

Parallel vs. distributed (1)
- Both approaches make use of multiple concurrent activities
  - concurrent activities = logically simultaneous activities
    - not necessarily at exactly the same instant
    - possible in a single-processor environment (multitasking)
  - parallel activities = physically simultaneous activities
- Parallel applications emphasize the temporal aspect of concurrency
  - work decomposed among ≥ 2 processors within a single (virtual) parallel computer
    - mostly data decomposition
  - employing several processes or threads
  - created by the same program
  - motivated by performance
  - short uptime
- Distributed systems emphasize the spatial aspect of concurrency
  - work decomposed among ≥ 2 processes, usually not on the same computer
    - mostly functional decomposition
  - employing several processes (not threads)
  - created by separate programs
  - motivated by resource sharing and convenience (availability, reliability, physical distribution, etc.)
  - long uptime
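
The distinction between concurrent (logically simultaneous) and parallel (physically simultaneous) activities shows up in a small Python sketch using `asyncio`. Two hypothetical tasks, `A` and `B`, interleave their steps on a single thread. They are therefore concurrent but never run at the same instant. This illustrates only the single-processor multitasking case from the slide; it is not a parallel program.

```python
import asyncio
import threading


async def worker(name: str, steps: int, log: list[str], thread_ids: set[int]) -> None:
    for i in range(steps):
        log.append(f"{name}{i}")
        thread_ids.add(threading.get_ident())  # record which OS thread ran this step
        # Yield control to the event loop so the other task can take its turn.
        await asyncio.sleep(0)


async def main() -> tuple[list[str], set[int]]:
    log: list[str] = []
    thread_ids: set[int] = set()
    # Two concurrent activities scheduled on one event loop (one thread).
    await asyncio.gather(worker("A", 3, log, thread_ids), worker("B", 3, log, thread_ids))
    return log, thread_ids


log, thread_ids = asyncio.run(main())
print(log)              # ['A0', 'B0', 'A1', 'B1', 'A2', 'B2']
print(len(thread_ids))  # 1
```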

![Parallel vs. distributed (2)](https://present5.com/presentation/398695dad0cdd8195d206f4dd1b60edf/image-11.jpg)

Parallel vs. distributed (2) [Hughes 04]: parallel = tightly coupled, distributed = loosely coupled. Another view: parallel = shared memory, distributed = private memory.
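
In the shared-memory view, all tasks see the same variables and must synchronize their access to them. In the private-memory (distributed) view, a task cannot touch another task's data and has to send it a message instead. The following is a minimal Python sketch of the shared-memory side. It assumes that threads within one process stand in for processors sharing memory, and `shared_memory_sum` is a hypothetical name.

```python
import threading


def shared_memory_sum(data: list[int], n_tasks: int = 4) -> int:
    """Threads add their partial sums into one shared variable, guarded by a lock."""
    total = 0                 # shared by all threads (same address space)
    lock = threading.Lock()   # synchronization primitive protecting `total`

    def worker(chunk: list[int]) -> None:
        nonlocal total
        partial = sum(chunk)  # private, per-thread computation
        with lock:            # the read-modify-write of shared data must not interleave
            total += partial

    size = max(1, -(-len(data) // n_tasks))  # ceiling division, at least 1
    threads = [
        threading.Thread(target=worker, args=(data[i:i + size],))
        for i in range(0, len(data), size)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


print(shared_memory_sum(list(range(1001))))  # 500500
```

Without the lock, `total += partial` is a read-modify-write sequence that two threads could interleave, losing an update.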

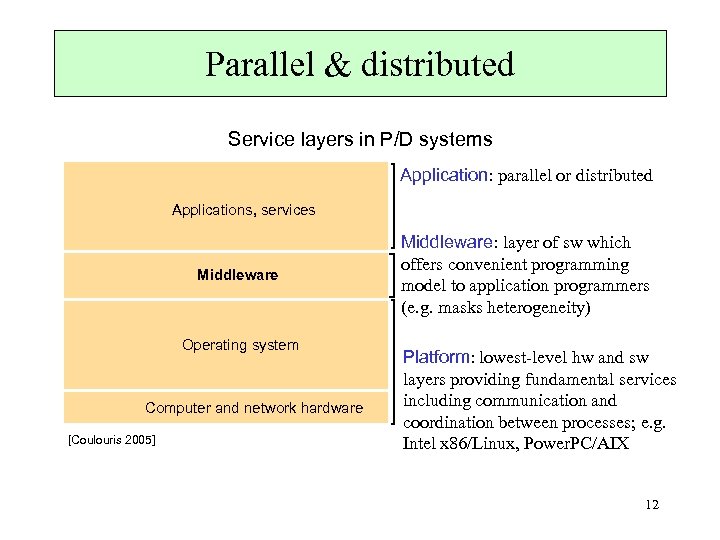

Parallel & distributed: service layers in P/D systems [Coulouris 2005]

Layers, from top to bottom:
- Applications, services (the parallel or distributed application)
- Middleware
- Operating system
- Computer and network hardware

Middleware: a layer of software which offers a convenient programming model to application programmers (e.g. it masks heterogeneity).

Platform: the lowest-level hardware and software layers (the operating system together with the computer and network hardware), providing fundamental services including communication and coordination between processes; e.g. Intel x86/Linux, PowerPC/AIX.

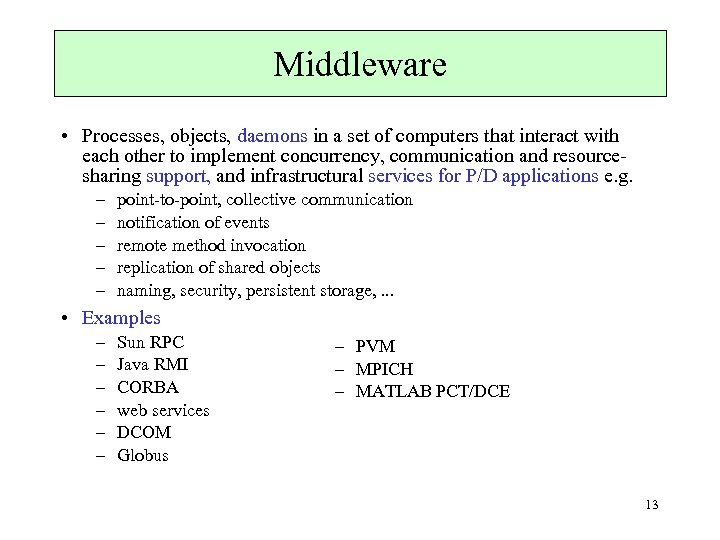

Middleware
- Processes, objects and daemons in a set of computers that interact with each other to implement concurrency, communication and resource-sharing support, and infrastructural services for P/D applications, e.g.
  - point-to-point and collective communication
  - notification of events
  - remote method invocation
  - replication of shared objects
  - naming, security, persistent storage, ...
- Examples
  - Sun RPC
  - Java RMI
  - CORBA
  - web services
  - DCOM
  - Globus
  - PVM
  - MPICH
  - MATLAB PCT/DCE
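
RPC-style middleware such as Sun RPC or Java RMI lets a program call a procedure on another machine as if it were local, hiding the communication underneath. Below, Python's standard-library XML-RPC modules are used as a stand-in; they are not one of the systems listed on the slide. Server and client run in one process on `127.0.0.1` only to keep the sketch self-contained, and the remote function `add` is hypothetical.

```python
import threading
import xmlrpc.client
from xmlrpc.server import SimpleXMLRPCServer


def add(a: int, b: int) -> int:
    """The 'remote' procedure offered by the server."""
    return a + b


# Server side: port 0 lets the operating system pick a free port.
server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
server.register_function(add, "add")
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

# Client side: the proxy turns a method call into a request/response over HTTP.
proxy = xmlrpc.client.ServerProxy(f"http://127.0.0.1:{port}/")
result = proxy.add(2, 3)
print(result)  # 5

server.shutdown()      # stop serve_forever() in the background thread
server.server_close()  # release the listening socket
```

The client never opens a socket or encodes a message itself. That work is done by the middleware layer, which is exactly the convenience the slide attributes to middleware.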

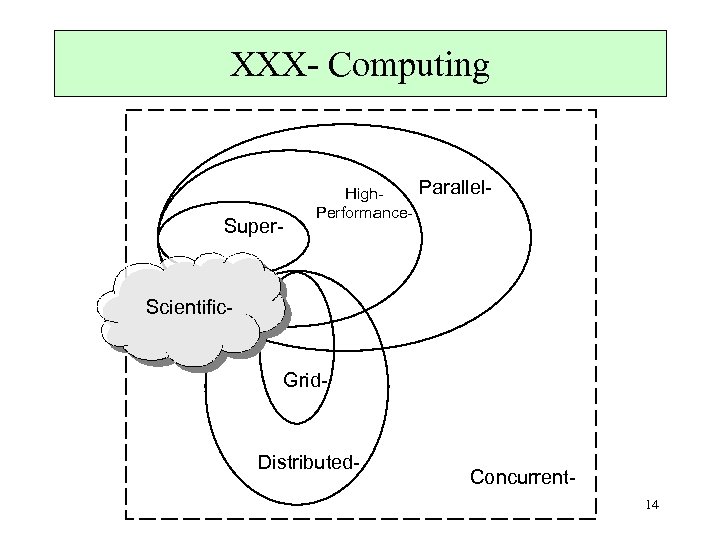

XXX-Computing, where XXX is Super, High-Performance, Parallel, Scientific, Grid, Distributed or Concurrent

Levels of parallelism
- Level of jobs
  - more processing units make it possible to process several mutually independent jobs simultaneously
  - the throughput of the system increases, but the run time of the individual jobs is not affected
- Level of tasks/threads
  - jobs are divided into cooperating processes/threads running in parallel
  - opportunity to decrease the execution time
- Level of machine instructions (next slide)
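
Idealized arithmetic makes the difference between the first two levels concrete. The sketch assumes identical jobs, no communication or scheduling overhead, and a job that splits perfectly into equal tasks. The function names are hypothetical.

```python
import math


def job_level(n_jobs: int, job_time: float, n_procs: int) -> tuple[float, float]:
    """Independent jobs run side by side: returns (time to finish all jobs, run time of one job)."""
    rounds = math.ceil(n_jobs / n_procs)  # batches of at most n_procs jobs
    return rounds * job_time, job_time


def task_level(job_time: float, n_procs: int) -> float:
    """One job split into n_procs equal cooperating tasks: returns its run time."""
    return job_time / n_procs


# Four 60-minute jobs on four processors:
print(job_level(4, 60.0, 4))  # (60.0, 60.0)
print(task_level(60.0, 4))    # 15.0
```

At the job level, all four jobs finish in 60 minutes instead of 240, so throughput quadruples, yet each job still takes 60 minutes. At the task level, a single job finishes in 15 minutes.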

Instruction-level parallelism
- The source of acceleration of processor chips
  - together with increasing clock speed
    - held back by limitations of memory technology
  - transparently available to sequential programs
  - hidden, implicit parallelism
  - preserves the illusion of sequential execution
  - seems to have reached the point of diminishing returns
- Modern processors are highly parallel systems
  - separate wires and caches for instructions and data
  - pipelined execution: several instructions processed at once, each in a different stage [next slide]
  - superscalar execution: several (arithmetic) instructions executed at once on replicated execution units [next slide]
  - out-of-order execution, speculative execution, etc.

![Pipelined execution](https://present5.com/presentation/398695dad0cdd8195d206f4dd1b60edf/image-17.jpg)

Pipelined execution [Amarasinghe]

![Superscalar execution](https://present5.com/presentation/398695dad0cdd8195d206f4dd1b60edf/image-18.jpg)

Superscalar execution [Amarasinghe]
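
The gain from pipelining and superscalar issue is often estimated with an idealized cycle count. With k pipeline stages, n instructions take n·k cycles without overlap but only k + n - 1 cycles when pipelined, because once the pipeline is full one instruction completes per cycle. The sketch below also models a superscalar pipeline of width w that issues up to w instructions per cycle. It assumes independent instructions and no stalls (no hazards, cache misses or branch mispredictions), which real processors never quite achieve. The function names are hypothetical.

```python
import math


def cycles_unpipelined(n: int, k: int) -> int:
    """Each instruction passes through all k stages before the next one starts."""
    return n * k


def cycles_pipelined(n: int, k: int) -> int:
    """k cycles to fill the pipeline, then one instruction completes per cycle."""
    return k + n - 1 if n > 0 else 0


def cycles_superscalar(n: int, k: int, width: int) -> int:
    """Like the pipeline, but up to `width` instructions enter each stage per cycle."""
    groups = math.ceil(n / width)
    return k + groups - 1 if n > 0 else 0


n, k = 100, 5
print(cycles_unpipelined(n, k))     # 500
print(cycles_pipelined(n, k))       # 104
print(cycles_superscalar(n, k, 2))  # 54
```

In this model, pipelining gives a speedup of 500/104 ≈ 4.8, which approaches the number of stages k as n grows.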

Further study
- Books with a unified view of both parallel and distributed processing:
  - [Andrews 2000] Foundations of Multithreaded, Parallel, and Distributed Programming
  - [Hughes 2004] Parallel and Distributed Programming Using C++
- [Dowd 1998] High Performance Computing: deals with many contexts of HPC, not only parallelism

Other contexts
- GPU computing
