- Number of slides: 35

Parallel CC & Petaflop Applications

Ryan Olson, Cray, Inc.

Did you know …
- Teraflop: current
- Petaflop: imminent
- What's next?
  - Exaflop
  - Zettaflop
  - YOTTAflop!

Outline (Sanibel Symposium)

This talk:
- Programming Models
- Distributed Data Interface
- Parallel CC Implementations
- GAMESS MP-CCSD(T)
- Benchmarks
- O vs. V
- Petascale Applications
- Local & Many-Body Methods

Programming Models: The Distributed Data Interface (DDI)
- A programming interface, not a programming model
- Choose the key functionality from the best programming models and provide:
  - A common interface
  - Simple and portable
  - A general implementation
- Provide an interface to:
  - SPMD: TCGMSG, MPI
  - AMOs: SHMEM, GA
  - SMPs: OpenMP, pThreads
  - SIMD: GPUs, vector directives, SSE, etc.
- Use the best models for the underlying hardware.

Programming Models The Distributed Data Interface (DDI) Z Programming Interface, not Programming Model Z Choose the key functionality from the best programming models and provide: Z Common Interface Z Simple and Portable Z General Implementation Z Provide an interface to: Z Z SPMD: TCGMSG, MPI AMOs: SHMEM, GA SMPs: Open. MP, p. Threads SIMD: GPUs, Vector directives, SSE, etc. Z Use the best models for the underlying hardware.

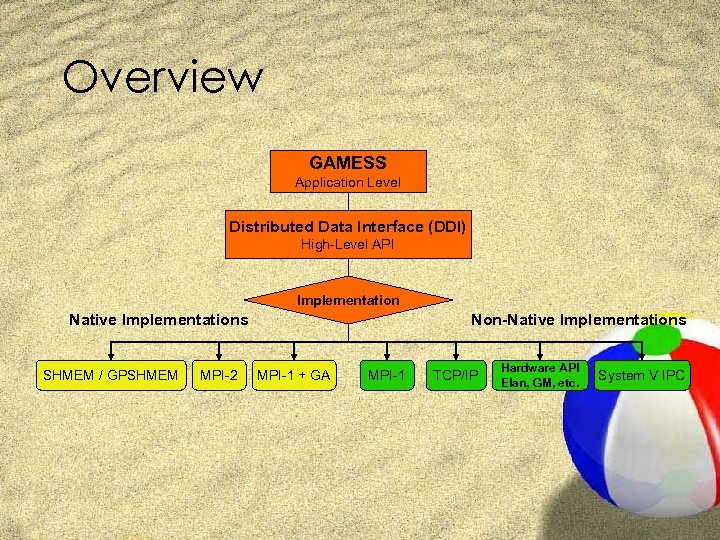

Overview
- Application level: GAMESS
- Distributed Data Interface (DDI): high-level API
- Implementation
  - Native implementations: SHMEM / GPSHMEM, MPI-2
  - Non-native implementations: MPI-1 + GA, MPI-1, TCP/IP
- Hardware API: Elan, GM, etc.; System V IPC

Overview GAMESS Application Level Distributed Data Interface (DDI) High-Level API Implementation Native Implementations SHMEM / GPSHMEM MPI-2 Non-Native Implementations MPI-1 + GA MPI-1 TCP/IP Hardware API Elan, GM, etc. System V IPC

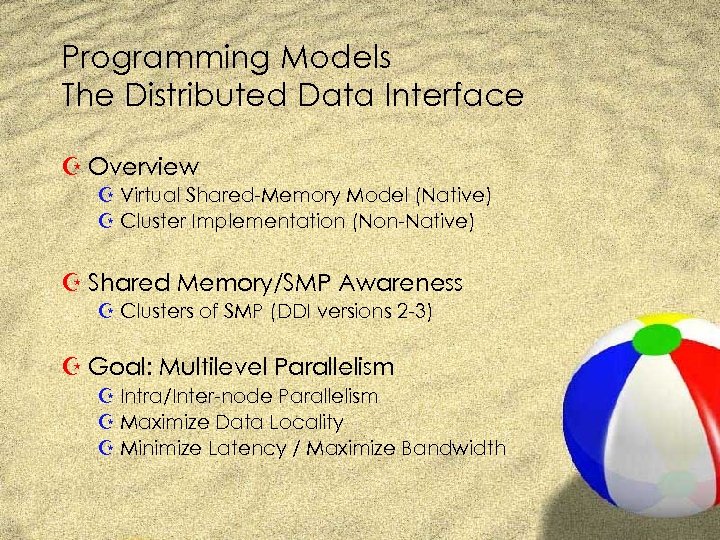

Programming Models: The Distributed Data Interface
- Overview
  - Virtual shared-memory model (native)
  - Cluster implementation (non-native)
- Shared memory/SMP awareness
  - Clusters of SMPs (DDI versions 2-3)
- Goal: multilevel parallelism
  - Intra-/inter-node parallelism
  - Maximize data locality
  - Minimize latency / maximize bandwidth

Programming Models The Distributed Data Interface Z Overview Z Virtual Shared-Memory Model (Native) Z Cluster Implementation (Non-Native) Z Shared Memory/SMP Awareness Z Clusters of SMP (DDI versions 2 -3) Z Goal: Multilevel Parallelism Z Intra/Inter-node Parallelism Z Maximize Data Locality Z Minimize Latency / Maximize Bandwidth

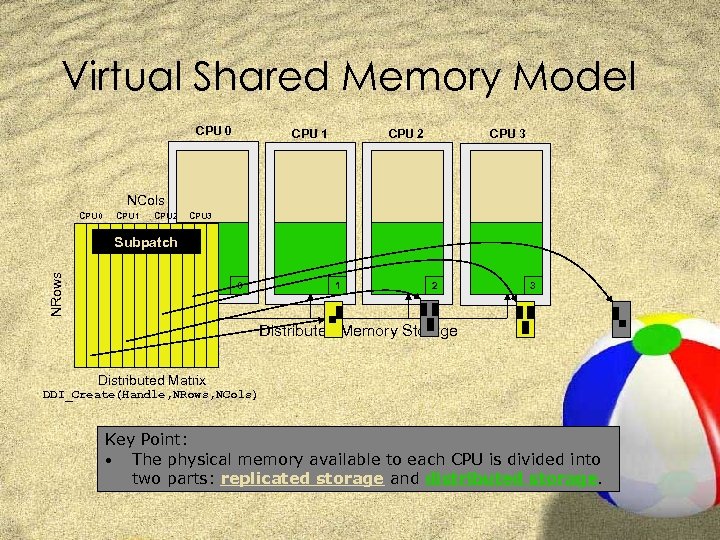

Virtual Shared Memory Model

[Figure: a distributed matrix of NRows × NCols, created with DDI_Create(Handle, NRows, NCols), split into subpatches 0-3 held in the distributed memory storage of CPUs 0-3.]

Key point: the physical memory available to each CPU is divided into two parts: replicated storage and distributed storage.
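
To make the subpatch picture concrete, the Fortran sketch below computes which columns of an NRows × NCols distributed matrix each CPU would own. It assumes a contiguous, near-even column split with any remainder going to the lowest ranks; `column_range` is a hypothetical helper, not part of the DDI API, and DDI's actual layout may differ.

```fortran
! Hypothetical helper (not DDI API): columns owned by one CPU when NCols
! columns are split into contiguous, near-even subpatches.
module ddi_layout_sketch
  implicit none
contains
  subroutine column_range(ncols, nproc, rank, ilo, ihi)
    integer, intent(in)  :: ncols, nproc, rank  ! rank is 0-based, as in the figure
    integer, intent(out) :: ilo, ihi            ! first and last owned column (1-based)
    integer :: base, extra
    base  = ncols / nproc        ! every CPU gets at least this many columns
    extra = mod(ncols, nproc)    ! the first "extra" CPUs get one more
    ilo = rank*base + min(rank, extra) + 1
    ihi = ilo + base - 1
    if (rank < extra) ihi = ihi + 1
  end subroutine column_range
end module ddi_layout_sketch
```

With NCols = 10 on 4 CPUs, this assigns columns 1-3, 4-6, 7-8 and 9-10 to CPUs 0-3.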

Virtual Shared Memory Model CPU 0 CPU 1 CPU 3 CPU 2 NCols CPU 0 CPU 1 CPU 2 CPU 3 NRows Subpatch 0 1 2 3 Distributed Memory Storage Distributed Matrix DDI_Create(Handle, NRows, NCols) Key Point: • The physical memory available to each CPU is divided into two parts: replicated storage and distributed storage.

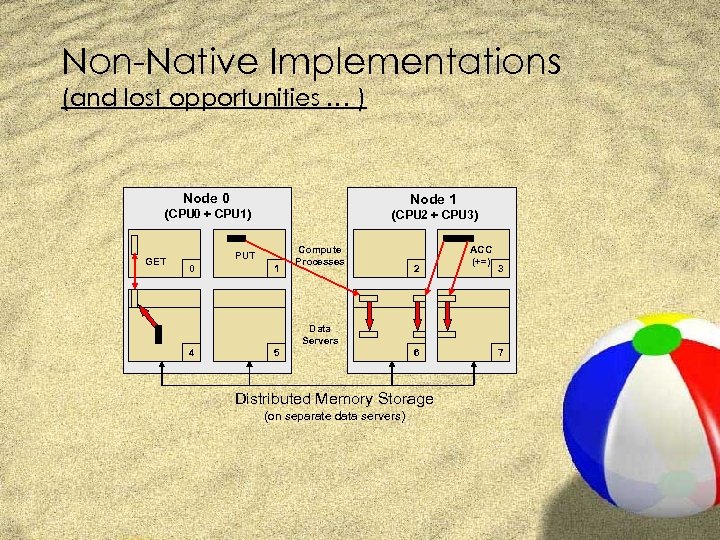

Non-Native Implementations (and lost opportunities …)

[Figure: Node 0 (CPU 0 + CPU 1) and Node 1 (CPU 2 + CPU 3); compute processes 0-3 issue GET, PUT and ACC (+=) requests to data servers 4-7, which hold the distributed memory storage.]
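
The sketch below only illustrates what the three one-sided operations do to the data, with a single in-process array standing in for the memory held by the data servers. The names `sim_create`, `sim_get`, `sim_put` and `sim_acc` are hypothetical; in the non-native implementation each call would instead become a request to the data server that owns the patch.

```fortran
! Serial stand-in for one-sided GET / PUT / ACC on a distributed matrix.
! "store" plays the role of the data servers' memory; all names are hypothetical.
module one_sided_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
  real(dp), allocatable :: store(:,:)
contains
  subroutine sim_create(nrows, ncols)
    integer, intent(in) :: nrows, ncols
    if (allocated(store)) deallocate(store)
    allocate(store(nrows, ncols))
    store = 0.0_dp
  end subroutine sim_create

  subroutine sim_get(ilo, ihi, jlo, jhi, buff)   ! GET: copy a patch out
    integer, intent(in) :: ilo, ihi, jlo, jhi
    real(dp), intent(out) :: buff(ihi-ilo+1, jhi-jlo+1)
    buff = store(ilo:ihi, jlo:jhi)
  end subroutine sim_get

  subroutine sim_put(ilo, ihi, jlo, jhi, buff)   ! PUT: overwrite a patch
    integer, intent(in) :: ilo, ihi, jlo, jhi
    real(dp), intent(in) :: buff(ihi-ilo+1, jhi-jlo+1)
    store(ilo:ihi, jlo:jhi) = buff
  end subroutine sim_put

  subroutine sim_acc(ilo, ihi, jlo, jhi, buff)   ! ACC: patch += buff
    integer, intent(in) :: ilo, ihi, jlo, jhi
    real(dp), intent(in) :: buff(ihi-ilo+1, jhi-jlo+1)
    store(ilo:ihi, jlo:jhi) = store(ilo:ihi, jlo:jhi) + buff
  end subroutine sim_acc
end module one_sided_sketch
```

ACC differs from PUT in that concurrent contributions to the same patch add up instead of overwriting each other, which is why it is listed separately.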

DDI till 2003 …

DDI till 2003 …

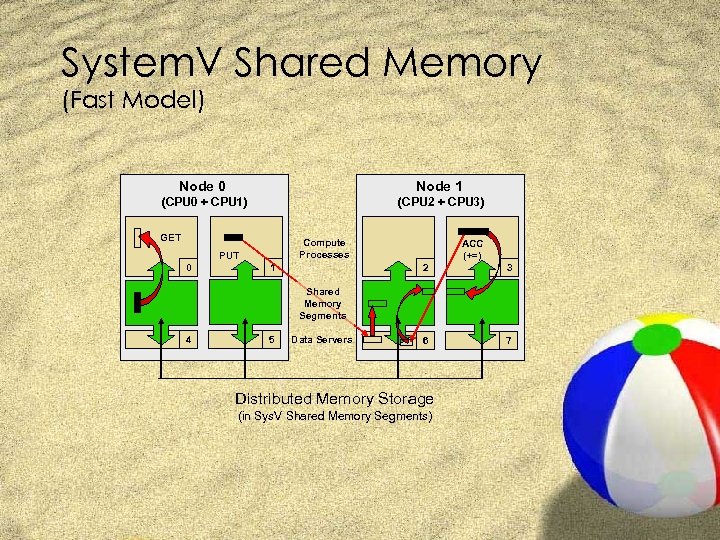

System V Shared Memory (Fast Model)

[Figure: Node 0 (CPU 0 + CPU 1) and Node 1 (CPU 2 + CPU 3); compute processes 0-3 and data servers 4-7 with GET, PUT and ACC (+=); the distributed memory storage is held in System V shared-memory segments.]

System. V Shared Memory (Fast Model) Node 0 Node 1 (CPU 0 + CPU 1) (CPU 2 + CPU 3) GET Compute Processes PUT 0 1 ACC (+=) 2 3 6 7 Shared Memory Segments 4 5 Data Servers Distributed Memory Storage (in Sys. V Shared Memory Segments)

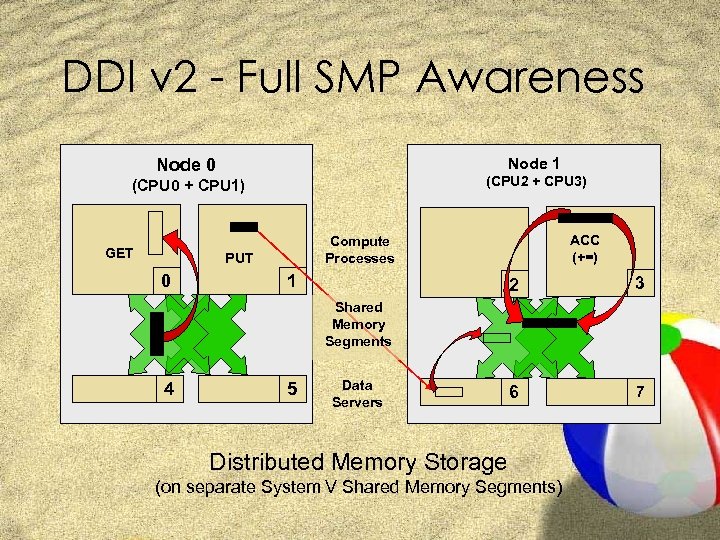

DDI v2: Full SMP Awareness

[Figure: Node 0 (CPU 0 + CPU 1) and Node 1 (CPU 2 + CPU 3); compute processes 0-3 and data servers 4-7 with GET, PUT and ACC (+=); the distributed memory storage is held on separate System V shared-memory segments.]
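
A minimal sketch of the decision an SMP-aware library makes for each access, assuming ranks are placed on nodes in contiguous blocks of `ppn` compute processes (so CPUs 0 and 1 share node 0). `node_of` and `access_path` are hypothetical helpers, not DDI routines.

```fortran
! Hypothetical routing rule: same-node data is read straight from the node's
! shared-memory segment; anything else goes through the remote data server.
module smp_aware_sketch
  implicit none
  integer, parameter :: PATH_SHARED = 1, PATH_REMOTE = 2
contains
  pure integer function node_of(rank, ppn)
    integer, intent(in) :: rank, ppn   ! ppn = compute processes per node
    node_of = rank / ppn               ! assumes block placement of ranks
  end function node_of

  pure integer function access_path(me, owner, ppn)
    integer, intent(in) :: me, owner, ppn
    if (node_of(me, ppn) == node_of(owner, ppn)) then
      access_path = PATH_SHARED        ! direct copy, no message needed
    else
      access_path = PATH_REMOTE        ! request to the owner's data server
    end if
  end function access_path
end module smp_aware_sketch
```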

DDI v 2 - Full SMP Awareness Node 1 Node 0 (CPU 2 + CPU 3) (CPU 0 + CPU 1) GET PUT 0 ACC (+=) Compute Processes 1 2 3 6 7 Shared Memory Segments 4 5 Data Servers Distributed Memory Storage (on separate System V Shared Memory Segments)

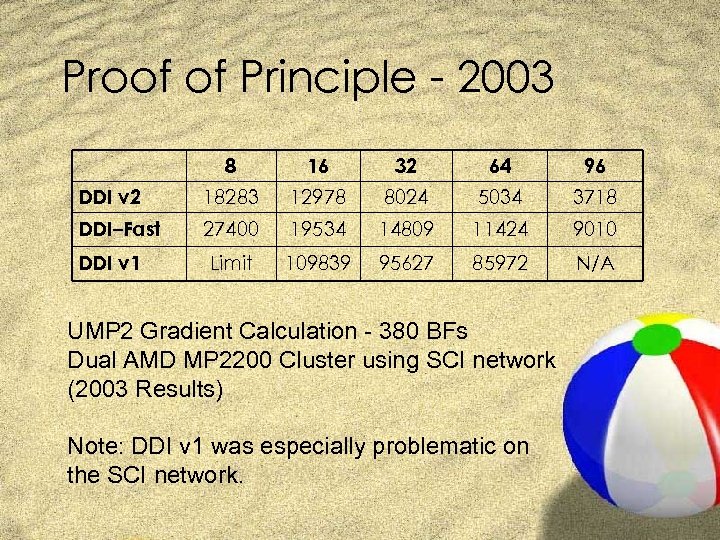

Proof of Principle (2003)

UMP2 gradient calculation, 380 basis functions; dual AMD MP 2200 cluster using an SCI network (2003 results).

| CPUs | 8 | 16 | 32 | 64 | 96 |
|---|---|---|---|---|---|
| DDI v2 | 18283 | 12978 | 8024 | 5034 | 3718 |
| DDI-Fast | 27400 | 19534 | 14809 | 11424 | 9010 |

DDI v1 (Limit): 109839, 95627, 85972, N/A

Note: DDI v1 was especially problematic on the SCI network.
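
Assuming these values are wall-clock times, the relative speedup of DDI v2 from 8 to 96 CPUs is 18283 / 3718 ≈ 4.92 against an ideal 96 / 8 = 12, i.e. roughly 41% efficiency relative to the 8-CPU run; DDI-Fast gives 27400 / 9010 ≈ 3.04. The small module below does this arithmetic; the function names are illustrative.

```fortran
! Relative speedup and parallel efficiency against a baseline run,
! assuming the inputs are wall-clock times. Names are illustrative.
module speedup_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains
  pure real(dp) function rel_speedup(t_base, t)
    real(dp), intent(in) :: t_base, t     ! baseline time, time on more CPUs
    rel_speedup = t_base / t
  end function rel_speedup

  pure real(dp) function rel_efficiency(t_base, p_base, t, p)
    real(dp), intent(in) :: t_base, t
    integer,  intent(in) :: p_base, p      ! CPU counts of the two runs
    ! achieved speedup divided by the ideal speedup p / p_base
    rel_efficiency = rel_speedup(t_base, t) / (real(p, dp) / real(p_base, dp))
  end function rel_efficiency
end module speedup_sketch
```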

DDI v2
- The DDI library is SMP aware.
- It offers new interfaces to make applications SMP aware.
- DDI programs inherit improvements in the library.
- DDI programs do not automatically become SMP aware unless they use the new interface.

Parallel CC and Threads (Shared-Memory Parallelism)
- Bentz and Kendall
  - Parallel BLAS 3
  - WOMPAT '05
- OpenMP
  - Parallelized remaining terms
  - Proof of principle

Results
- Au4 ==> GOOD
  - CCSD = (T)
  - No disk I/O problems
  - Both CCSD and (T) scale well
- Au+(C3H6) ==> POOR/AVERAGE
  - CCSD scales poorly because of the I/O vs. FLOP balance
  - (T) scales well but is overshadowed by the poor CCSD performance
- Au8 ==> GOOD
  - CCSD scales reasonably (greater FLOP count, about equal I/O)
  - The N^7 (T) step dominates the relatively small CCSD time
  - (T) scales well, so the overall performance is good

Detailed Speedups …

Detailed Speedups …

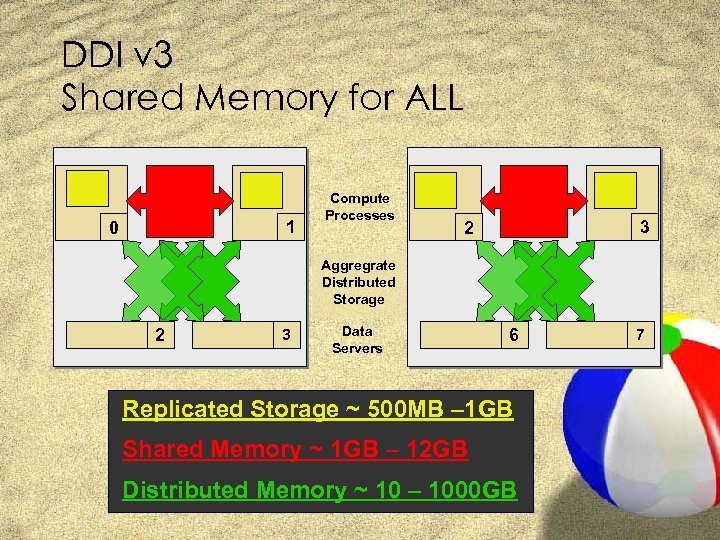

DDI v3: Shared Memory for ALL

[Figure: compute processes and data servers on each node, backed by an aggregate distributed storage.]
- Replicated storage: ~500 MB – 1 GB
- Shared memory: ~1 GB – 12 GB
- Distributed memory: ~10 – 1000 GB

DDI v 3 Shared Memory for ALL 1 0 Compute Processes 3 2 Aggregrate Distributed Storage 2 3 Data Servers 6 Replicated Storage ~ 500 MB – 1 GB Shared Memory ~ 1 GB – 12 GB Distributed Memory ~ 10 – 1000 GB 7

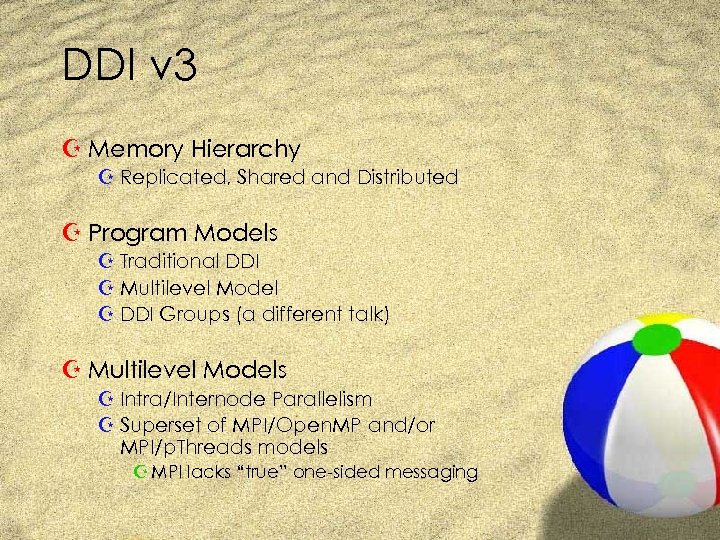

DDI v3
- Memory hierarchy
  - Replicated, shared and distributed
- Programming models
  - Traditional DDI
  - Multilevel model
  - DDI groups (a different talk)
- Multilevel models
  - Intra-/inter-node parallelism
  - Superset of the MPI/OpenMP and/or MPI/pThreads models
  - MPI lacks "true" one-sided messaging

DDI v 3 Z Memory Hierarchy Z Replicated, Shared and Distributed Z Program Models Z Traditional DDI Z Multilevel Model Z DDI Groups (a different talk) Z Multilevel Models Z Intra/Internode Parallelism Z Superset of MPI/Open. MP and/or MPI/p. Threads models Z MPI lacks “true” one-sided messaging

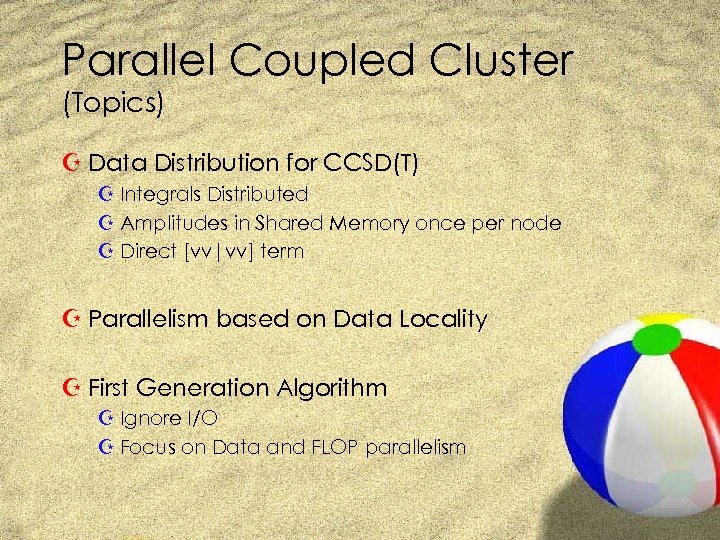

Parallel Coupled Cluster (Topics)
- Data distribution for CCSD(T)
  - Integrals distributed
  - Amplitudes in shared memory, once per node
  - Direct [vv|vv] term
- Parallelism based on data locality
- First-generation algorithm
  - Ignore I/O
  - Focus on data and FLOP parallelism

Important Array Sizes (in GB)

[Figure: [vv|oo], [vo|vo] and T2 drawn as blocks with o and v edges (o²v² elements each), and [vv|vo] drawn with o and v edges (ov³ elements).]
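
The sizes follow from the index types: [vv|oo], [vo|vo] and T2 each hold o²v² elements, [vv|vo] holds ov³, and the [vv|vv] integrals (the term handled directly) would hold v⁴. The sketch below converts those counts to GB for 8-byte reals, taking 1 GB = 2^30 bytes; it ignores permutational symmetry, so treat it as an upper-bound estimate rather than what GAMESS actually allocates.

```fortran
! Sizes in GB (taken as 2**30 bytes) of 8-byte real CC arrays, ignoring
! permutational symmetry; no = occupied orbitals, nv = virtual orbitals.
module cc_array_sizes
  implicit none
  integer, parameter :: dp = kind(1.0d0)
  real(dp), parameter :: bytes_per_gb = 1024.0_dp**3
contains
  pure real(dp) function gb_o2v2(no, nv)   ! [vv|oo], [vo|vo], T2
    integer, intent(in) :: no, nv
    gb_o2v2 = 8.0_dp * real(no, dp)**2 * real(nv, dp)**2 / bytes_per_gb
  end function gb_o2v2

  pure real(dp) function gb_ov3(no, nv)    ! [vv|vo]
    integer, intent(in) :: no, nv
    gb_ov3 = 8.0_dp * real(no, dp) * real(nv, dp)**3 / bytes_per_gb
  end function gb_ov3

  pure real(dp) function gb_v4(nv)         ! [vv|vv], the term done directly
    integer, intent(in) :: nv
    gb_v4 = 8.0_dp * real(nv, dp)**4 / bytes_per_gb
  end function gb_v4
end module cc_array_sizes
```

For o = 50 and v = 500, this gives about 4.66 GB for each o²v² array, 46.6 GB for [vv|vo] and 466 GB for [vv|vv], which is why the [vv|vv] term is the one computed directly.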

Important Array Sizes (in GB) v [vv|oo] [vo|vo] T 2 o v [vv|vo] o

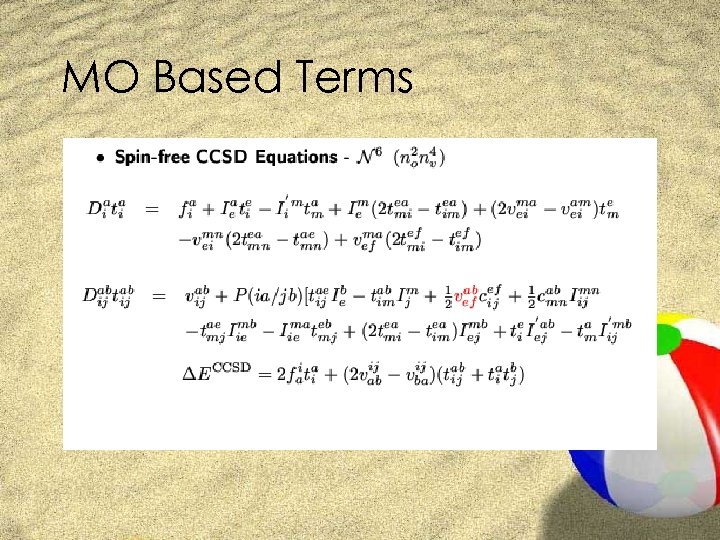

MO Based Terms

MO Based Terms

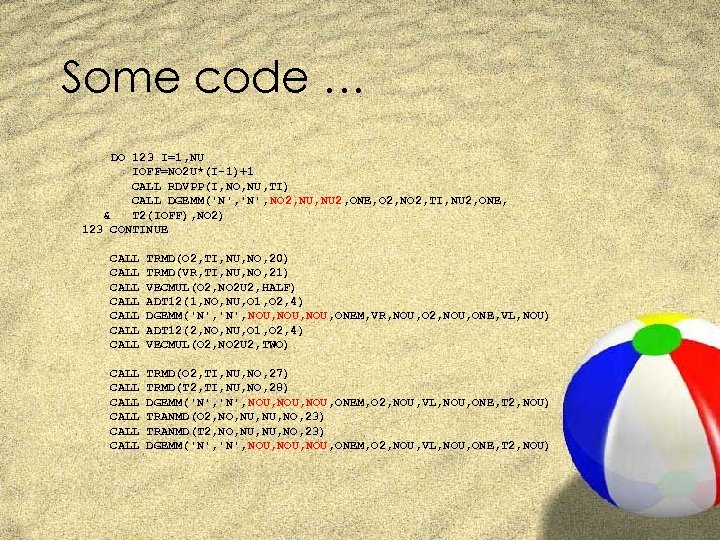

Some code …

    C     Standard BLAS DGEMM argument order:
    C     (TRANSA,TRANSB,M,N,K,ALPHA,A,LDA,B,LDB,BETA,C,LDC)
          DO 123 I=1,NU
             IOFF=NO2U*(I-1)+1
             CALL RDVPP(I,NO,NU,TI)
             CALL DGEMM('N','N',NO2,NU,NU2,ONE,O2,NO2,TI,NU2,ONE,
         &              T2(IOFF),NO2)
      123 CONTINUE
          CALL TRMD(O2,TI,NU,NO,20)
          CALL TRMD(VR,TI,NU,NO,21)
          CALL VECMUL(O2,NO2U2,HALF)
          CALL ADT12(1,NO,NU,O1,O2,4)
          CALL DGEMM('N','N',NOU,NOU,NOU,ONEM,VR,NOU,O2,NOU,ONE,VL,NOU)
          CALL ADT12(2,NO,NU,O1,O2,4)
          CALL VECMUL(O2,NO2U2,TWO)
          CALL TRMD(O2,TI,NU,NO,27)
          CALL TRMD(T2,TI,NU,NO,28)
          CALL DGEMM('N','N',NOU,NOU,NOU,ONEM,O2,NOU,VL,NOU,ONE,T2,NOU)
          CALL TRANMD(O2,NO,NU,NO,23)
          CALL TRANMD(T2,NO,NU,NO,23)
          CALL DGEMM('N','N',NOU,NOU,NOU,ONEM,O2,NOU,VL,NOU,ONE,T2,NOU)

MO Parallelization

[Figure: processes 0, 1 and processes 2, 3, each pair with a T2 solution and the integral blocks [vo*|vo*], [vv|o*o*] and [vv|v*o*].]

Goal: disjoint updates to the solution matrix. Avoid locking/critical sections whenever possible.
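
The disjoint-update goal can be illustrated with OpenMP: if each thread writes only its own columns of the solution matrix, no lock or critical section is needed. In this sketch the update is a generic C += A·B done column by column; `gemm_by_columns` is a hypothetical routine, not a GAMESS one, and when compiled without OpenMP the directive is ignored and the loop runs serially.

```fortran
! Disjoint updates: each column of c is written by exactly one thread, so
! no lock or critical section is needed. Routine name is hypothetical.
module disjoint_update_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains
  subroutine gemm_by_columns(a, b, c)
    real(dp), intent(in)    :: a(:,:), b(:,:)   ! a: m x k, b: k x n
    real(dp), intent(inout) :: c(:,:)           ! c: m x n, c += a*b
    integer :: j
    !$omp parallel do schedule(static)
    do j = 1, size(c, 2)
      ! The thread that owns iteration j reads shared a and b(:, j)
      ! and writes only c(:, j).
      c(:, j) = c(:, j) + matmul(a, b(:, j))
    end do
    !$omp end parallel do
  end subroutine gemm_by_columns
end module disjoint_update_sketch
```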

Direct [VV|VV] Term

    do … = 1, nshell
      do … = 1, …
        do … = 1, nshell
          compute: …
          transform: …
        end do
        transform: …
        contract: …
        PUT … and … for i, j
      end do
    end do
    synchronize
    for each "local" ij column do
      GET
      reorder: shell --> AO order
      transform: …
      STORE in "local" solution vector
    end do

[Figure: a matrix indexed by occupied pairs ij (11, 21, 22, …; (No·No)* of them) and atomic-orbital index pairs (11, 12, 13, …; Nbf² of them), whose ij columns are distributed over processes 0, 1, …, P-1 and accessed with PUT and GET.]

(T) Parallelism
- Trivial, in theory
- [vv|vo] distributed
- v³ work arrays
  - at large v, stored in shared memory
- Disjoint updates where both quantities are shared
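
One simple way to hand out (T) work, shown here as an assumption rather than the GAMESS scheme, is to deal the occupied triples i ≥ j ≥ k round-robin over the ranks, each triple then needing its own v³ work arrays. The hypothetical function below counts how many triples a given rank would receive.

```fortran
! Hypothetical round-robin distribution of (T) occupied triples i >= j >= k.
module triples_sketch
  implicit none
contains
  pure integer function my_triples(no, nproc, me)
    integer, intent(in) :: no, nproc, me   ! me is a 0-based rank
    integer :: i, j, k, t
    my_triples = 0
    t = 0                                  ! global triple counter
    do i = 1, no
      do j = 1, i
        do k = 1, j
          ! triple t goes to rank mod(t, nproc)
          if (mod(t, nproc) == me) my_triples = my_triples + 1
          t = t + 1
        end do
      end do
    end do
  end function my_triples
end module triples_sketch
```

For o = 4 there are 20 triples; with 3 ranks, ranks 0 and 1 get 7 each and rank 2 gets 6.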

Timings …

(H2O)6 prism, aug'-cc-pVTZ

Fastest timing: < 6 hours on 8 x 8 POWER5

Improvements …
- Semi-direct [vv|vv] term (IKCUT)
- Concurrent MO terms
- Generalized amplitude storage

Semi-Direct [VV|VV] Term

    do … = 1, nshell          ! I-SHELL
      do … = 1, …             ! K-SHELL
        do … = 1, nshell
          compute: …
          transform: …
        end do
        transform: …
        contract: …
        PUT … and … for i, j
      end do
    end do

- Define IKCUT
- Store if: LEN(I)+LEN(K) > IKCUT
- Automatic contention avoidance
- Adjustable: fully direct to fully conventional
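
The storage rule above can be sketched directly. Taking LEN(I) as the number of basis functions in shell I and looping over pairs with K ≤ I (both assumptions), the function below counts how many (I, K) shell pairs would have their integrals stored rather than recomputed for a given IKCUT. A large IKCUT stores nothing (fully direct) and IKCUT = 0 stores every pair (fully conventional).

```fortran
! Semi-direct rule from the slide: store a shell pair's integrals if
! LEN(I) + LEN(K) > IKCUT, otherwise recompute them. shell_len(s) is assumed
! to be the number of basis functions in shell s; pairs are taken with K <= I.
module ikcut_sketch
  implicit none
contains
  pure integer function n_stored_pairs(shell_len, ikcut)
    integer, intent(in) :: shell_len(:), ikcut
    integer :: i, k
    n_stored_pairs = 0
    do i = 1, size(shell_len)
      do k = 1, i
        if (shell_len(i) + shell_len(k) > ikcut) then
          n_stored_pairs = n_stored_pairs + 1   ! stored (conventional)
        end if                                  ! else: recomputed (direct)
      end do
    end do
  end function n_stored_pairs
end module ikcut_sketch
```

With shell lengths 1, 3 and 6 (s, p and Cartesian d), IKCUT = 6 stores 3 of the 6 pairs, the ones involving the d shell.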

Semi-Direct [vv|vv] Timings

Water tetramer / aug'-cc-pVTZ. Storage: a shared NFS mount (a bad example). Local disk or a higher-quality parallel file system (Lustre, etc.) should perform better. However, GPUs generate AO integrals much faster than they can be read off the disk.

Concurrency
- Everything N-ways parallel? NO.
- Biggest mistake: parallelizing every MO term over all cores.
- Fix: concurrency.

Concurrent MO Terms

[Figure: nodes split between the [vv|vv] term and the MO terms.]

MO terms are parallelized over the minimum number of nodes that is still efficient and fast. When the MO nodes finish, they join the [vv|vv] term already in progress, giving dynamic load balancing.
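
A toy model of joining the [vv|vv] term already in progress: the [vv|vv] work is split into equal unit tasks drawn from a shared pool, `nvv` nodes start on it at step 0, and `nmo` MO nodes join once they finish the MO terms at step `tmo`. All parameters are hypothetical and the model ignores communication cost; it only shows why letting finished MO nodes pull from the same pool shortens the run.

```fortran
! Toy dynamic-load-balancing model; names and parameters are hypothetical.
module join_in_progress_sketch
  implicit none
contains
  ! Steps needed to drain ntask unit [vv|vv] tasks from a shared pool when nvv
  ! nodes start at step 0 and nmo MO nodes join from step tmo onward.
  ! Returns -1 if no node ever works on the pool.
  pure integer function steps_to_finish(ntask, nvv, nmo, tmo)
    integer, intent(in) :: ntask, nvv, nmo, tmo
    integer :: left, workers
    if (nvv <= 0 .and. nmo <= 0) then
      steps_to_finish = -1
      return
    end if
    left = ntask
    steps_to_finish = 0
    do while (left > 0)
      workers = max(nvv, 0)
      if (steps_to_finish >= tmo) workers = workers + max(nmo, 0)
      left = left - workers                 ! each worker takes one task per step
      steps_to_finish = steps_to_finish + 1
    end do
  end function steps_to_finish
end module join_in_progress_sketch
```

With 100 tasks, 4 [vv|vv] nodes and 4 MO nodes that join at step 5, the pool empties after 15 steps, versus 25 if the MO nodes never joined.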

Adaptive Computing
- Self-adjusting / self-tuning
  - Concurrent MO terms
  - Value of IKCUT
- Use the iterations to improve the calculation:
  - Adjust initial node assignments
  - Increase IKCUT
- Monte Carlo approach to tuning parameters

Conclusions …
- Good first start …
  - [vv|vv] scales perfectly with node count
  - Multilevel parallelism
  - Adjustable I/O usage
- A lot to do …
  - Improve intra-node memory bottlenecks
  - Concurrent MO terms
  - Generalized amplitude storage
  - Adaptive computing
- Use the knowledge from these hand-coded methods to refine the CS structure in automated methods.

Acknowledgements

People:
- Mark Gordon
- Mike Schmidt
- Jonathan Bentz
- Ricky Kendall
- Alistair Rendell

Funding:
- DoE SciDAC
- SCL (Ames Lab)
- APAC / ANU
- NSF
- MSI

Petaflop Applications (benchmarks, too)
- Petaflop = ~125,000 2.2 GHz AMD Opteron cores
- O vs. V
  - Small O, big V ==> CBS limit
  - Big O ==> see below
- Local and many-body methods
  - FMO, EE-MB, etc.: use existing parallel methods
- Sampling
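
A quick check of the ~125,000-core figure, assuming 4 double-precision floating-point operations per cycle per core (as on quad-core "Barcelona"-class Opterons): 125,000 × 2.2 × 10⁹ × 4 ≈ 1.1 × 10¹⁵ FLOP/s, i.e. about one petaflop of theoretical peak. The module below just encodes that product; the function name is illustrative.

```fortran
! Theoretical peak = cores x clock x floating-point operations per cycle.
! flops_per_cycle = 4 is an assumption (quad-core Opteron, double precision).
module peak_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains
  pure real(dp) function peak_flops(ncores, ghz, flops_per_cycle)
    integer,  intent(in) :: ncores, flops_per_cycle
    real(dp), intent(in) :: ghz
    peak_flops = real(ncores, dp) * ghz * 1.0e9_dp * real(flops_per_cycle, dp)
  end function peak_flops
end module peak_sketch
```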
