
- Number of slides: 31

PAPI Directions Dan Terpstra Innovative Computing Lab University of Tennessee

PAPI Directions: Overview
- What’s PAPI?
- What’s New?
  - Features
  - Platforms
- What’s Next?
  - Network PAPI
  - Thermal PAPI
- When?
  - PAPI release roadmap
- What’s ICL?
  - (a word from our sponsor)

IBM Petascale Workshop 2006

What’s PAPI?
- A software layer (library) designed to provide the tool developer and application engineer with a consistent interface and methodology for use of the performance counter hardware found in most major microprocessors.
- Countable events are defined in two ways:
  - Platform-neutral Preset Events
  - Platform-dependent Native Events
- Preset Events can be derived from multiple Native Events
- All events are referenced by name and collected in EventSets for sampling
- Events can be multiplexed if counters are limited
- Statistical sampling is implemented by:
  - Software overflow with timer-driven sampling
  - Hardware overflow if supported by the platform
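Multiplexing time-slices the physical counters among more events than fit at once, so each event is observed for only part of the run and its total has to be extrapolated. The following is a minimal sketch of that scaling, assuming simple linear extrapolation. `scale_multiplexed_count` and its parameters are hypothetical names for illustration, not PAPI API.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper, not part of PAPI: extrapolate a full-run count
 * from an event that owned a hardware counter for only part of the run.
 *   raw_count - events seen while the event was actually being counted
 *   active_us - microseconds the event was live on a counter
 *   total_us  - microseconds the whole measurement lasted */
uint64_t scale_multiplexed_count(uint64_t raw_count,
                                 uint64_t active_us,
                                 uint64_t total_us)
{
    assert(active_us <= total_us);
    if (active_us == 0) {
        return 0; /* never scheduled: nothing to extrapolate from */
    }
    /* Linear extrapolation, rounded to the nearest whole event. */
    long double scaled = (long double)raw_count * total_us / active_us;
    return (uint64_t)(scaled + 0.5L);
}
```

The estimate is only as good as the assumption that the event rate is steady across time slices; short or bursty runs can scale badly.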

Where’s PAPI
- PAPI runs on most modern processors and operating systems of interest to HPC:
  - IBM POWER3, 4, 5 / AIX
  - POWER4, 5 / Linux
  - PowerPC-32 and -64 / Linux
  - Blue Gene
  - Intel Pentium II, III, 4, M, EM64T, etc. / Linux
  - Intel Itanium
  - AMD Athlon, Opteron / Linux
  - Cray T3E, X1, XD3, XT3 Catamount
  - Altix, Sparc, …
- NOTE: All Linux implementations require the perfctr kernel patch.
  - Except Itanium, which uses the built-in perfmon interface
  - Perfmon2 development is underway to replace perfctr and be pre-installed in the kernel: NO PATCHES NEEDED!

Extending PAPI beyond the CPU
- PAPI has historically targeted on-processor performance counters
- Several categories of off-processor counters exist
  - Network interfaces: Myrinet, Infiniband, GigE
  - Memory interfaces: Cray X1
  - Thermal and power interfaces: ACPI
- CHALLENGE:
  - Extend the PAPI interface to address multiple counter domains
  - Preserve the PAPI calling semantics, ease of use, and platform independence for existing applications

Multi-Substrate PAPI
- Goals:
  - Isolate hardware-dependent code in a separable ‘substrate’ module
  - Extend platform-independent code to support multiple simultaneous substrates
  - Add or modify API calls to support access to any of several substrates
  - Modify build environment for easy selection and configuration of multiple available substrates
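One common way to meet the first two goals is a table of function pointers, one entry per substrate, that the portable layer dispatches through by index. The sketch below assumes that design. Every name in it (`toy_substrate`, `toy_read_counter`, the stub readers) is hypothetical and is not taken from the PAPI source.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical sketch, not PAPI source: every substrate exposes the same
 * small set of entry points, so portable code never calls
 * hardware-specific functions directly. */
typedef struct {
    const char *name;                   /* label for this substrate */
    int (*num_counters)(void);          /* counters this substrate offers */
    long long (*read_counter)(int idx); /* read one counter by index */
} toy_substrate;

/* Stub implementations standing in for real hardware access. */
int toy_cpu_num_counters(void) { return 4; }
long long toy_cpu_read(int idx) { return 1000LL * (idx + 1); }
int toy_acpi_num_counters(void) { return 1; }
long long toy_acpi_read(int idx) { (void)idx; return 42; /* placeholder value */ }

/* Substrates chosen at build time; putting the CPU at index 0 is an
 * assumption of this sketch. */
const toy_substrate toy_substrates[] = {
    { "cpu",  toy_cpu_num_counters,  toy_cpu_read  },
    { "acpi", toy_acpi_num_counters, toy_acpi_read },
};

int toy_num_substrates(void)
{
    return (int)(sizeof toy_substrates / sizeof toy_substrates[0]);
}

/* Portable-layer call: validate the indices, then dispatch via the table. */
long long toy_read_counter(int substrate, int idx)
{
    assert(substrate >= 0 && substrate < toy_num_substrates());
    assert(idx >= 0 && idx < toy_substrates[substrate].num_counters());
    return toy_substrates[substrate].read_counter(idx);
}
```

Adding a new counter domain then means adding one table entry and its two functions, with no change to callers.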

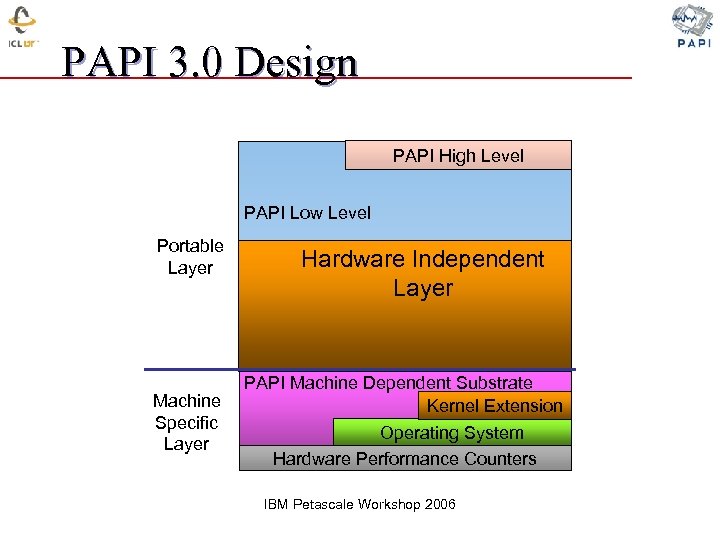

PAPI 3.0 Design (diagram)
- Portable Layer: PAPI High Level; PAPI Low Level; Hardware Independent Layer
- Machine Specific Layer: PAPI Machine Dependent Substrate; Kernel Extension; Operating System; Hardware Performance Counters

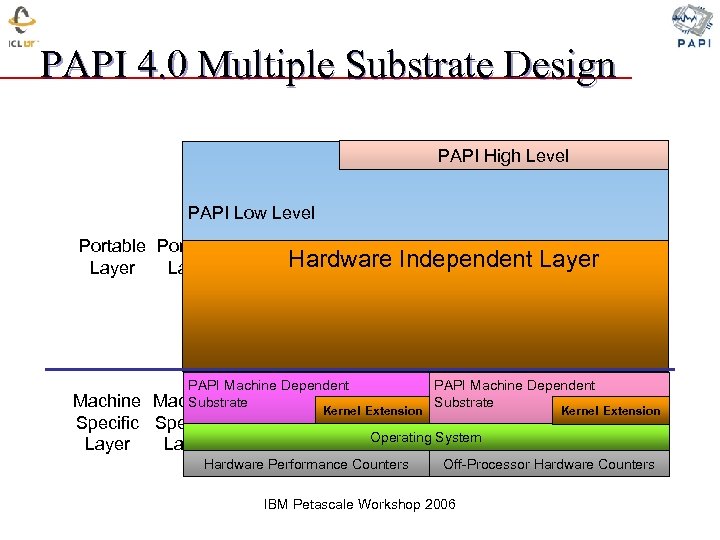

PAPI 4.0 Multiple Substrate Design (diagram)
- Portable Layer: PAPI High Level; PAPI Low Level; Hardware Independent Layer
- Machine Specific Layer: several PAPI Machine Dependent Substrates; Kernel Extension; Operating System
- Counters: Hardware Performance Counters; Off-Processor Hardware Counters

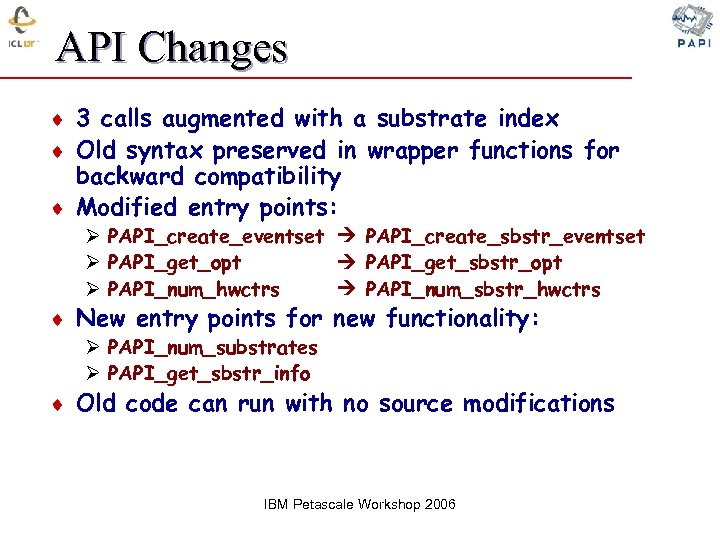

API Changes
- 3 calls augmented with a substrate index
- Old syntax preserved in wrapper functions for backward compatibility
- Modified entry points:
  - PAPI_create_eventset → PAPI_create_sbstr_eventset
  - PAPI_get_opt → PAPI_get_sbstr_opt
  - PAPI_num_hwctrs → PAPI_num_sbstr_hwctrs
- New entry points for new functionality:
  - PAPI_num_substrates
  - PAPI_get_sbstr_info
- Old code can run with no source modifications
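The wrapper pattern described above can be sketched as follows. This is a toy model rather than the PAPI implementation: the `demo_` functions are hypothetical stand-ins, their signatures are not the real PAPI ones, and the choice of substrate index 0 as the default CPU substrate is an assumption.

```c
#include <assert.h>
#include <stddef.h>

#define DEMO_MAX_SETS 8
#define DEMO_OK 0
#define DEMO_ERR (-1)
#define DEMO_CPU_SUBSTRATE 0 /* assumed default for legacy callers */

static int demo_set_substrate[DEMO_MAX_SETS]; /* substrate owning each set */
static int demo_num_sets = 0;

/* New-style entry point: the caller names the substrate explicitly. */
int demo_create_sbstr_eventset(int *event_set, int substrate_index)
{
    assert(event_set != NULL);
    if (substrate_index < 0 || demo_num_sets >= DEMO_MAX_SETS) {
        return DEMO_ERR;
    }
    demo_set_substrate[demo_num_sets] = substrate_index;
    *event_set = demo_num_sets++;
    return DEMO_OK;
}

/* Old-style entry point kept as a thin wrapper, so existing source
 * compiles unchanged and lands on the CPU substrate. */
int demo_create_eventset(int *event_set)
{
    return demo_create_sbstr_eventset(event_set, DEMO_CPU_SUBSTRATE);
}

/* Helper for inspection: which substrate does an event set belong to? */
int demo_eventset_substrate(int event_set)
{
    assert(event_set >= 0 && event_set < demo_num_sets);
    return demo_set_substrate[event_set];
}
```

Keeping the old name as a one-line forwarder means only one code path has to be maintained and tested.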

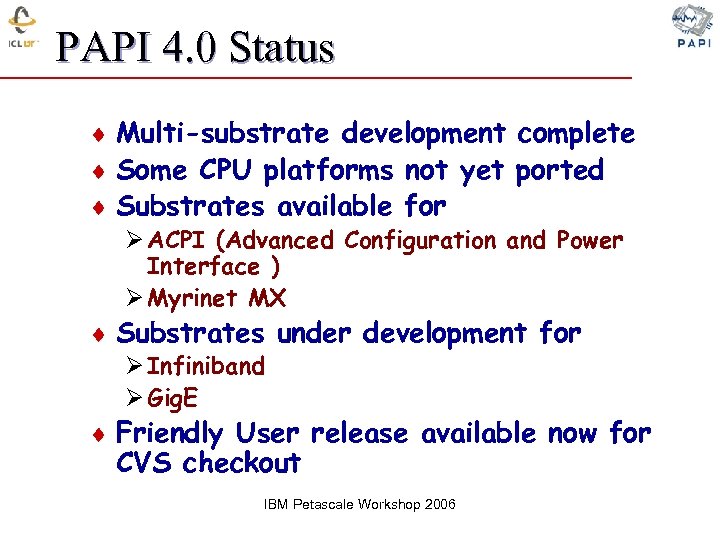

PAPI 4.0 Status
- Multi-substrate development complete
- Some CPU platforms not yet ported
- Substrates available for
  - ACPI (Advanced Configuration and Power Interface)
  - Myrinet MX
- Substrates under development for
  - Infiniband
  - GigE
- Friendly User release available now for CVS checkout

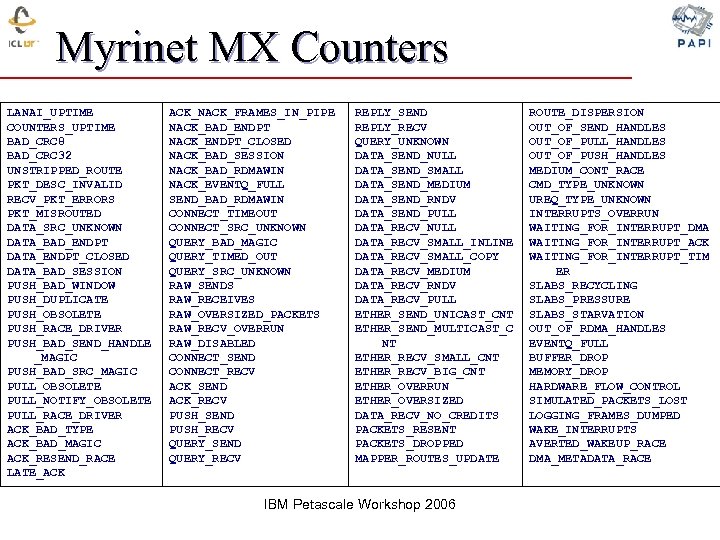

Myrinet MX Counters

LANAI_UPTIME COUNTERS_UPTIME BAD_CRC8 BAD_CRC32 UNSTRIPPED_ROUTE PKT_DESC_INVALID RECV_PKT_ERRORS PKT_MISROUTED
DATA_SRC_UNKNOWN DATA_BAD_ENDPT DATA_ENDPT_CLOSED DATA_BAD_SESSION
PUSH_BAD_WINDOW PUSH_DUPLICATE PUSH_OBSOLETE PUSH_RACE_DRIVER PUSH_BAD_SEND_HANDLE_MAGIC PUSH_BAD_SRC_MAGIC
PULL_OBSOLETE PULL_NOTIFY_OBSOLETE PULL_RACE_DRIVER
ACK_BAD_TYPE ACK_BAD_MAGIC ACK_RESEND_RACE LATE_ACK ACK_NACK_FRAMES_IN_PIPE
NACK_BAD_ENDPT NACK_ENDPT_CLOSED NACK_BAD_SESSION NACK_BAD_RDMAWIN NACK_EVENTQ_FULL
SEND_BAD_RDMAWIN CONNECT_TIMEOUT CONNECT_SRC_UNKNOWN
QUERY_BAD_MAGIC QUERY_TIMED_OUT QUERY_SRC_UNKNOWN
RAW_SENDS RAW_RECEIVES RAW_OVERSIZED_PACKETS RAW_RECV_OVERRUN RAW_DISABLED
CONNECT_SEND CONNECT_RECV ACK_SEND ACK_RECV PUSH_SEND PUSH_RECV QUERY_SEND QUERY_RECV REPLY_SEND REPLY_RECV QUERY_UNKNOWN
DATA_SEND_NULL DATA_SEND_SMALL DATA_SEND_MEDIUM DATA_SEND_RNDV DATA_SEND_PULL
DATA_RECV_NULL DATA_RECV_SMALL_INLINE DATA_RECV_SMALL_COPY DATA_RECV_MEDIUM DATA_RECV_RNDV DATA_RECV_PULL
ETHER_SEND_UNICAST_CNT ETHER_SEND_MULTICAST_CNT ETHER_RECV_SMALL_CNT ETHER_RECV_BIG_CNT ETHER_OVERRUN ETHER_OVERSIZED
DATA_RECV_NO_CREDITS PACKETS_RESENT PACKETS_DROPPED MAPPER_ROUTES_UPDATE ROUTE_DISPERSION
OUT_OF_SEND_HANDLES OUT_OF_PULL_HANDLES OUT_OF_PUSH_HANDLES
MEDIUM_CONT_RACE CMD_TYPE_UNKNOWN UREQ_TYPE_UNKNOWN INTERRUPTS_OVERRUN
WAITING_FOR_INTERRUPT_DMA WAITING_FOR_INTERRUPT_ACK WAITING_FOR_INTERRUPT_TIMER
SLABS_RECYCLING SLABS_PRESSURE SLABS_STARVATION OUT_OF_RDMA_HANDLES
EVENTQ_FULL BUFFER_DROP MEMORY_DROP HARDWARE_FLOW_CONTROL SIMULATED_PACKETS_LOST
LOGGING_FRAMES_DUMPED WAKE_INTERRUPTS AVERTED_WAKEUP_RACE DMA_METADATA_RACE

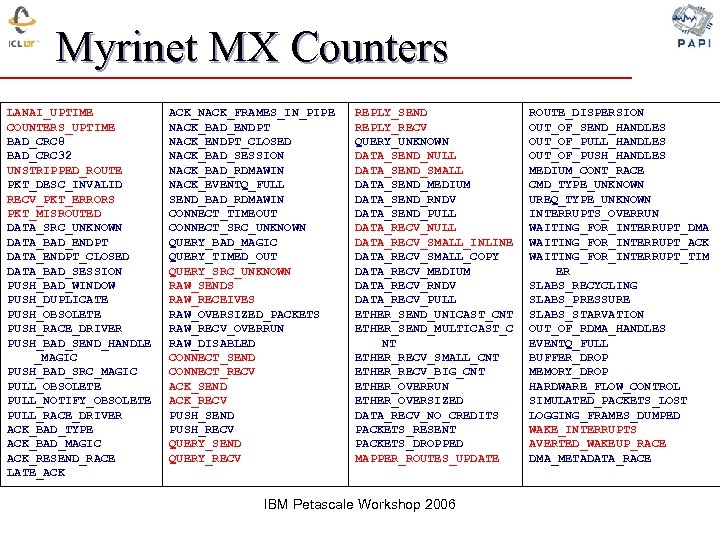


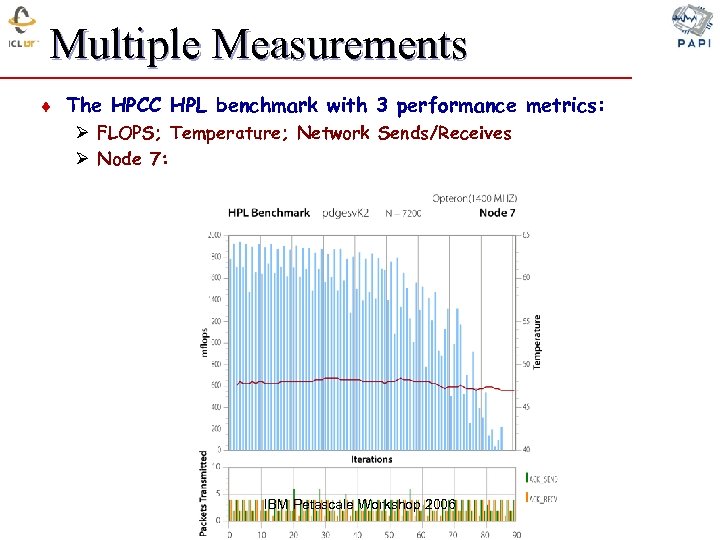

Multiple Measurements
- The HPCC HPL benchmark with 3 performance metrics:
  - FLOPS; Temperature; Network Sends/Receives
  - Node 7:

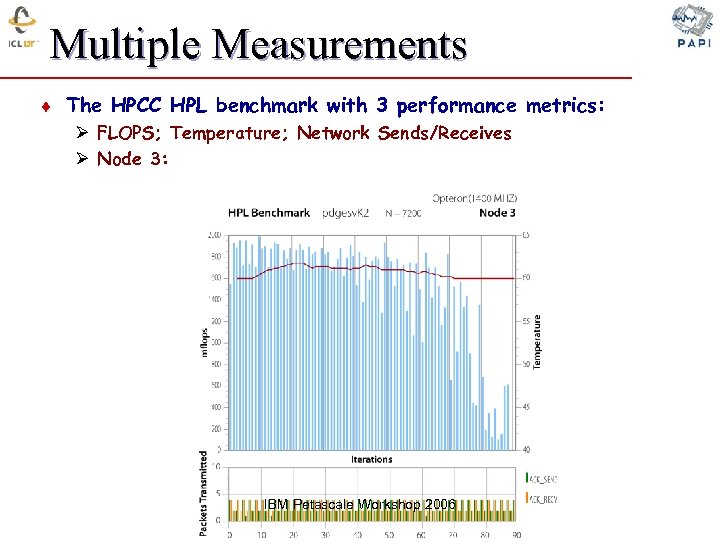

Multiple Measurements
- The HPCC HPL benchmark with 3 performance metrics:
  - FLOPS; Temperature; Network Sends/Receives
  - Node 3:

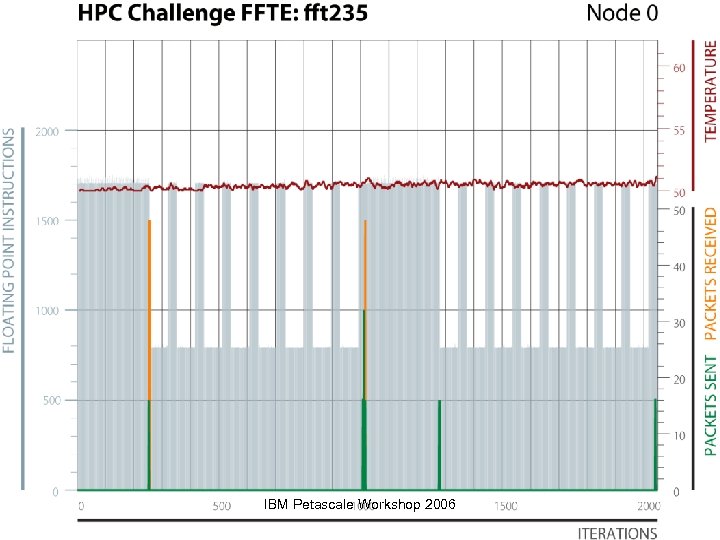


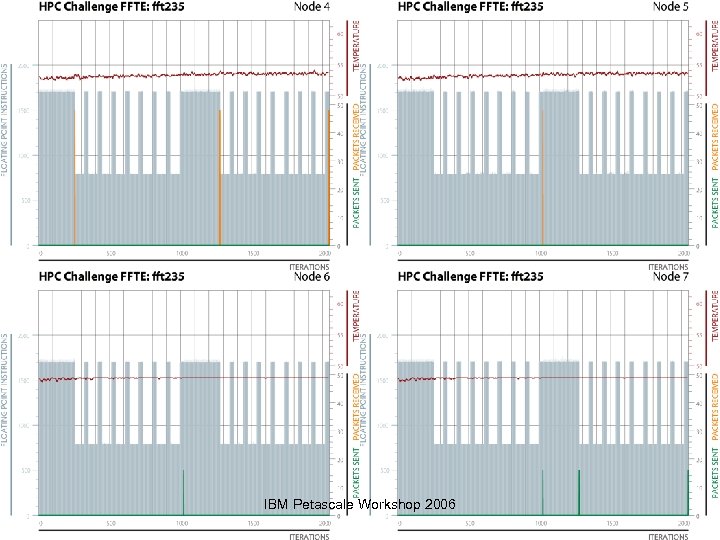


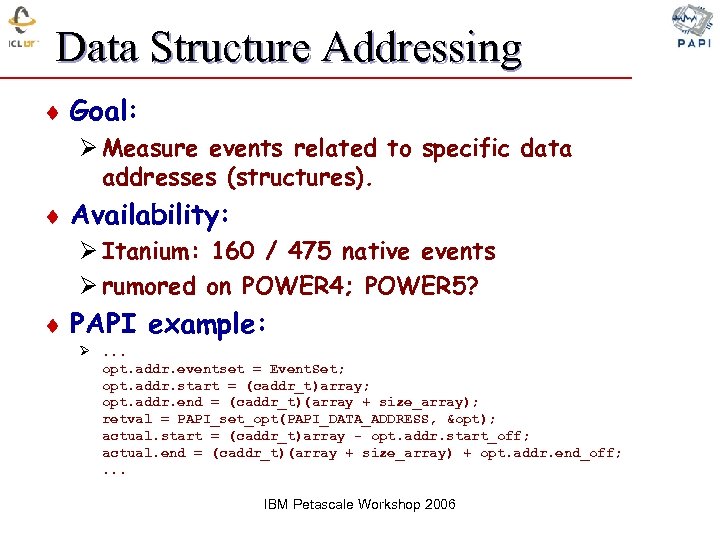

Data Structure Addressing
- Goal:
  - Measure events related to specific data addresses (structures).
- Availability:
  - Itanium: 160 / 475 native events
  - Rumored on POWER4; POWER5?

PAPI example:

    ...
    opt.addr.eventset = EventSet;
    opt.addr.start = (caddr_t)array;
    opt.addr.end = (caddr_t)(array + size_array);
    retval = PAPI_set_opt(PAPI_DATA_ADDRESS, &opt);
    actual.start = (caddr_t)array - opt.addr.start_off;
    actual.end = (caddr_t)(array + size_array) + opt.addr.end_off;
    ...
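The `start_off` and `end_off` fields in the example imply that the hardware may monitor a somewhat wider range than the one requested. As a simplified illustration of where such offsets could come from, the sketch below assumes the hardware can only match whole blocks aligned to a power-of-two granule. That rule, and the names `range_offsets` and `widen_to_granule`, are assumptions for illustration and do not describe PAPI or Itanium internals.

```c
#include <assert.h>
#include <stdint.h>

typedef struct {
    uintptr_t start_off; /* bytes the covered range begins before the request */
    uintptr_t end_off;   /* bytes the covered range extends past the request */
} range_offsets;

/* Hypothetical rule: widen [start, end) outward to multiples of granule,
 * which must be a power of two, and report how far each edge moved.
 * Addresses within one granule of UINTPTR_MAX are not handled. */
range_offsets widen_to_granule(uintptr_t start, uintptr_t end, uintptr_t granule)
{
    assert(granule != 0 && (granule & (granule - 1)) == 0);
    assert(start <= end);
    uintptr_t lo = start & ~(granule - 1);               /* round down */
    uintptr_t hi = (end + granule - 1) & ~(granule - 1); /* round up */
    range_offsets off = { start - lo, hi - end };
    return off;
}
```

With offsets defined this way, the covered range is `start - start_off` through `end + end_off`, which matches the arithmetic in the slide's last two statements.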

Rensselaer to Build and House $100 Million Supercomputer
NY Times, May 11, 2006

Rensselaer Polytechnic Institute announced yesterday that it was combining forces with New York State and I.B.M. to build a $100 million supercomputer that will be among the 10 most powerful in the world. The computer, a type of I.B.M. system known as Blue Gene, will be on Rensselaer's campus in Troy, N.Y., and will have the power to perform more than 70 trillion calculations per second. It will mainly be used to help researchers make smaller, faster semiconductor devices and for nanotechnology research.

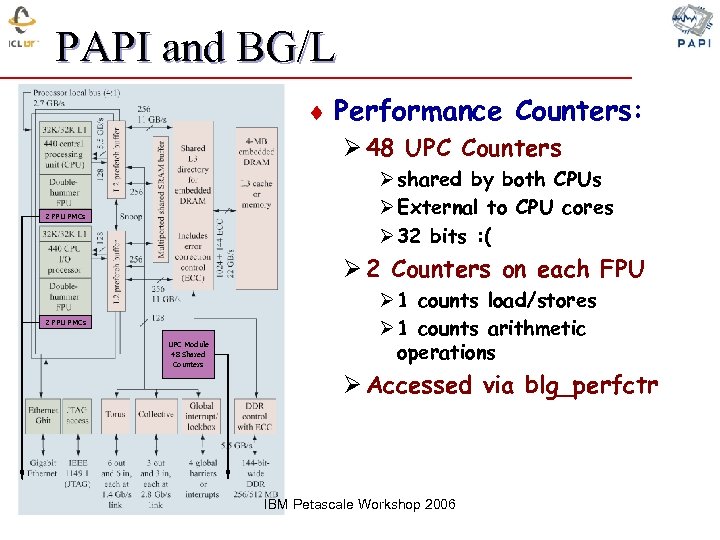

PAPI and BG/L
- Performance Counters:
  - 48 UPC Counters
    - Shared by both CPUs
    - External to CPU cores
    - 32 bits :(
  - 2 Counters on each FPU
    - 1 counts load/stores
    - 1 counts arithmetic operations
  - Accessed via bgl_perfctr

Diagram labels: 2 FPU PMCs (shown twice); UPC Module, 48 Shared Counters
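The drawback of 32-bit counters is that they wrap quickly: a counter that ticked once per cycle at 700 MHz would roll over roughly every 6 seconds. A usual workaround is to poll more often than the wrap period and accumulate the deltas into a 64-bit total. The sketch below shows that technique in isolation. The `wide_counter` type and its functions are hypothetical and are not part of PAPI or bgl_perfctr.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint32_t last_raw; /* previous raw 32-bit reading */
    uint64_t total;    /* accumulated 64-bit count */
} wide_counter;

/* Start accumulating from the current raw hardware value. */
void wide_counter_init(wide_counter *c, uint32_t raw_now)
{
    assert(c != NULL);
    c->last_raw = raw_now;
    c->total = 0;
}

/* Fold a new raw reading into the 64-bit total and return it.
 * Correct only if the counter wrapped at most once since the last call. */
uint64_t wide_counter_update(wide_counter *c, uint32_t raw_now)
{
    assert(c != NULL);
    /* Storing the difference in a uint32_t reduces it modulo 2^32,
     * so the delta is right even across a single wrap. */
    uint32_t delta = raw_now - c->last_raw;
    c->last_raw = raw_now;
    c->total += delta;
    return c->total;
}
```

The polling interval is the design constraint here: if two wraps can occur between updates, the lost 2^32 counts cannot be recovered from the raw values alone.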

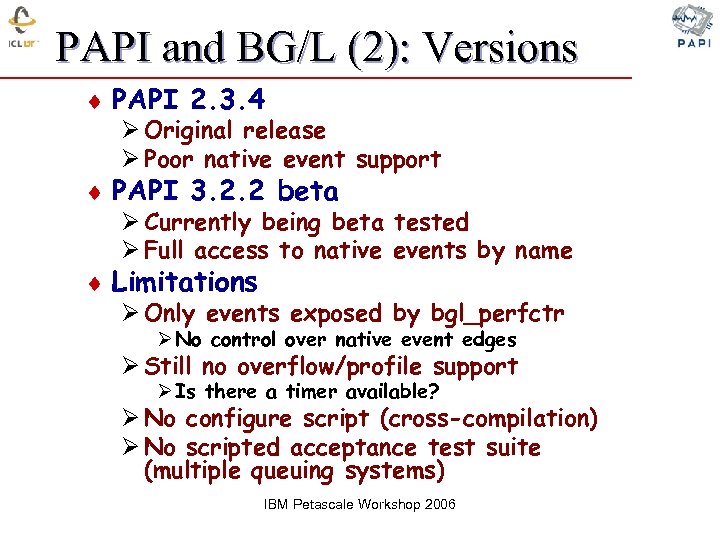

PAPI and BG/L (2): Versions
- PAPI 2.3.4
  - Original release
  - Poor native event support
- PAPI 3.2.2 beta
  - Currently being beta tested
  - Full access to native events by name
- Limitations
  - Only events exposed by bgl_perfctr
  - No control over native event edges
  - Still no overflow/profile support
    - Is there a timer available?
  - No configure script (cross-compilation)
  - No scripted acceptance test suite (multiple queuing systems)

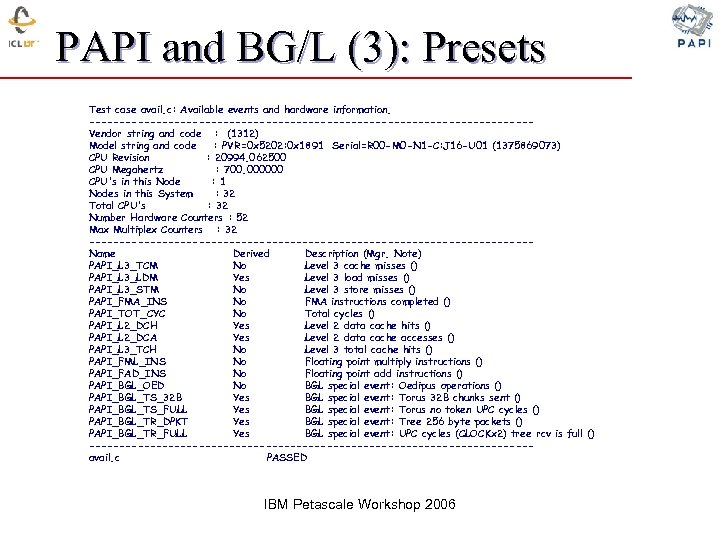

PAPI and BG/L (3): Presets

    Test case avail.c: Available events and hardware information.
    -------------------------------------
    Vendor string and code   : (1312)
    Model string and code    : PVR=0x5202:0x1891 Serial=R00-M0-N1-C:J16-U01 (1375869073)
    CPU Revision             : 20994.062500
    CPU Megahertz            : 700.000000
    CPU's in this Node       : 1
    Nodes in this System     : 32
    Total CPU's              : 32
    Number Hardware Counters : 52
    Max Multiplex Counters   : 32
    -------------------------------------
    Name              Derived  Description (Mgr. Note)
    PAPI_L3_TCM       No       Level 3 cache misses ()
    PAPI_L3_LDM       Yes      Level 3 load misses ()
    PAPI_L3_STM       No       Level 3 store misses ()
    PAPI_FMA_INS      No       FMA instructions completed ()
    PAPI_TOT_CYC      No       Total cycles ()
    PAPI_L2_DCH       Yes      Level 2 data cache hits ()
    PAPI_L2_DCA       Yes      Level 2 data cache accesses ()
    PAPI_L3_TCH       No       Level 3 total cache hits ()
    PAPI_FML_INS      No       Floating point multiply instructions ()
    PAPI_FAD_INS      No       Floating point add instructions ()
    PAPI_BGL_OED      No       BGL special event: Oedipus operations ()
    PAPI_BGL_TS_32B   Yes      BGL special event: Torus 32B chunks sent ()
    PAPI_BGL_TS_FULL  Yes      BGL special event: Torus no token UPC cycles ()
    PAPI_BGL_TR_DPKT  Yes      BGL special event: Tree 256 byte packets ()
    PAPI_BGL_TR_FULL  Yes      BGL special event: UPC cycles (CLOCKx2) tree rcv is full ()
    -------------------------------------
    avail.c PASSED

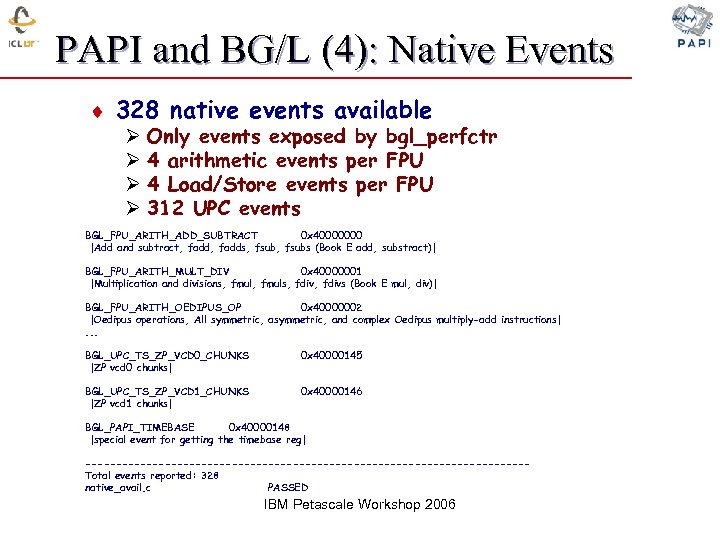

PAPI and BG/L (4): Native Events
- 328 native events available
  - Only events exposed by bgl_perfctr
  - 4 arithmetic events per FPU
  - 4 Load/Store events per FPU
  - 312 UPC events

Output of native_avail.c (abridged):

    BGL_FPU_ARITH_ADD_SUBTRACT  0x40000000  |Add and subtract, fadds, fsubs (Book E add, substract)|
    BGL_FPU_ARITH_MULT_DIV      0x40000001  |Multiplication and divisions, fmuls, fdivs (Book E mul, div)|
    BGL_FPU_ARITH_OEDIPUS_OP    0x40000002  |Oedipus operations, All symmetric, and complex Oedipus multiply-add instructions|
    ...
    BGL_UPC_TS_ZP_VCD0_CHUNKS   0x40000145  |ZP vcd 0 chunks|
    BGL_UPC_TS_ZP_VCD1_CHUNKS   0x40000146  |ZP vcd 1 chunks|
    BGL_PAPI_TIMEBASE           0x40000148  |special event for getting the timebase reg|
    -------------------------------------
    Total events reported: 328
    native_avail.c PASSED
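Every code in the listing starts at 0x40000000 and counts upward, which suggests a flag bit marking the event as native plus a small index in the low bits. The sketch below decodes codes under that reading. The macro and function names are hypothetical, and treating 0x80000000 as the flag for preset events is an assumption of this sketch rather than something the listing shows.

```c
#include <assert.h>
#include <stdint.h>

#define DEMO_NATIVE_BIT 0x40000000u /* prefix seen on every code in the listing */
#define DEMO_PRESET_BIT 0x80000000u /* assumed flag for preset events */

/* True when the code carries the native flag and not the preset flag. */
int demo_is_native_code(uint32_t code)
{
    return (code & DEMO_NATIVE_BIT) != 0 && (code & DEMO_PRESET_BIT) == 0;
}

/* Strip the flag bit to recover the event's position in the native table. */
uint32_t demo_native_index(uint32_t code)
{
    assert(demo_is_native_code(code));
    return code & ~DEMO_NATIVE_BIT;
}
```

Under this reading, `BGL_UPC_TS_ZP_VCD0_CHUNKS` (0x40000145) decodes to index 0x145, and the first FPU event decodes to index 0.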

XT3 and Catamount
The Oak Ridger, February 21, 2006

“The Cray XT3 Jaguar, the flagship computing system in ORNL's Leadership Computing Facility, was ranked tenth in the world in a November 2005 survey of supercomputers, delivering 20.5 trillion operations per second (teraflops).”

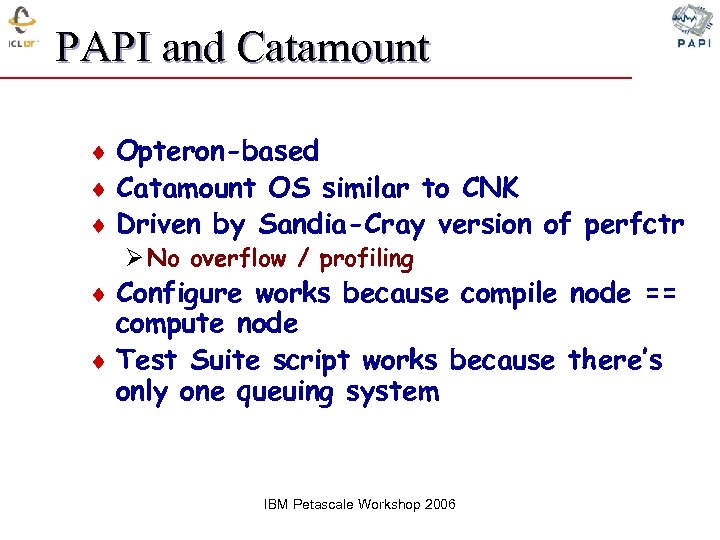

PAPI and Catamount
- Opteron-based
- Catamount OS similar to CNK
- Driven by Sandia-Cray version of perfctr
  - No overflow / profiling
- Configure works because compile node == compute node
- Test Suite script works because there’s only one queuing system

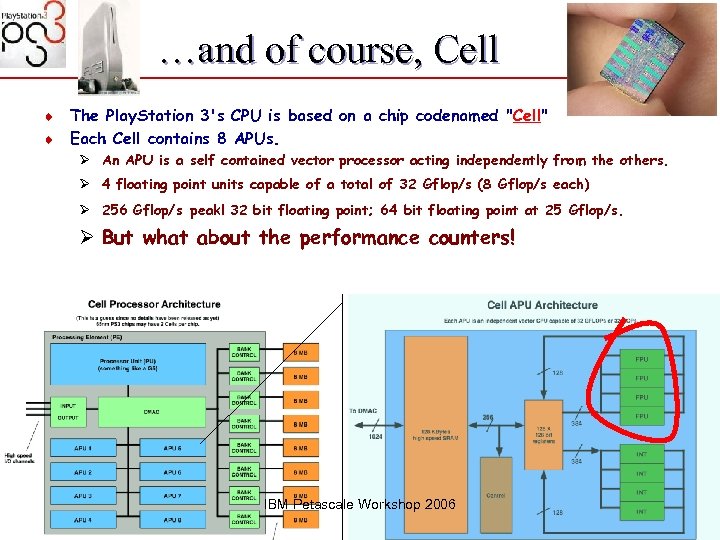

…and of course, Cell
- The PlayStation 3's CPU is based on a chip codenamed "Cell"
- Each Cell contains 8 APUs.
  - An APU is a self-contained vector processor acting independently from the others.
  - 4 floating point units capable of a total of 32 Gflop/s (8 Gflop/s each)
  - 256 Gflop/s peak! 32 bit floating point; 64 bit floating point at 25 Gflop/s.
  - But what about the performance counters!

When? PAPI Release Schedule
- PAPI 3.3.0: RealSoonNow™
  - BG/L in beta testing
  - Merging and deprecating PAPI 3.0.8.1
  - Regression testing on other platforms
- PAPI 4.0: Q2, 2006
  - Porting some substrates to Multi-substrate model
  - Developing additional non-CPU substrates
- Wanna Help? Distributed Testing…

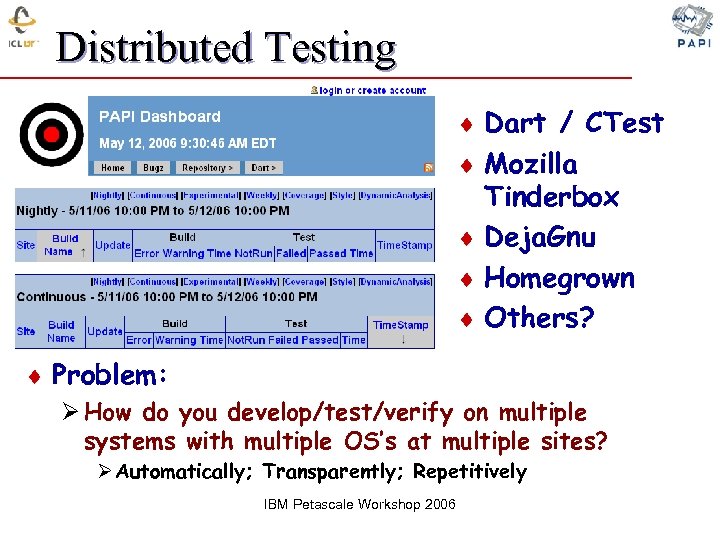

Distributed Testing
- Dart / CTest
- Mozilla Tinderbox
- DejaGnu
- Homegrown
- Others?
- Problem:
  - How do you develop/test/verify on multiple systems with multiple OS’s at multiple sites?
  - Automatically; Transparently; Repetitively

A Word from our Sponsor… Innovative Computing Laboratory
- Jack’s Research Group in the CS Department
- Size: about 45-50 people
  - 16 students; 19 scientific staff; 10 support staff; 1 visitor
- Funding
  - NSF
    - Supercomputer Centers (UCSD & NCSA)
    - Next Generation Software (NGS)
    - Info Tech Res. (ITR)
    - Middleware Init. (NMI)
  - DOE
    - Scientific Discovery through Advanced Computing (SciDAC)
    - Math in Comp Sci (MICS)
  - DARPA
    - High Productivity Computing Systems
  - DOD
    - Modernization
- Work with companies
  - AMD, Cray, Dolphin, Microsoft, MathWorks, Intel, Sun, Myricom, SGI, HP, IBM, Northrop-Grumman
- Ph.D. Dissertation, MS Project
- Equipment
  - A number of clusters
  - Desktop machines
  - Office setup
- Summer internships
  - Industry, ORNL, …
- Travel to meetings
- Participate in publications

ICL Class of 2005

Speculative Performance Positions
- PostDoc Positions Probably Available
  - PAPI
    - New Platforms (Cell?)
    - New Substrates (Infiniband?)
  - KOJAK
    - Automated Performance Analysis
  - ECLIPSE PTP & TAU Integration
- See me for brochures or more info

PAPI Directions Dan Terpstra Innovative Computing Lab University of Tennessee
