- Number of slides: 29

PanDA & Networking
Kaushik De, Univ. of Texas at Arlington
ANSE@Snowmass, UM, July 31, 2013

Introduction

- Background
  - PanDA is a distributed computing workload management system
  - PanDA relies on networking for workload data transfer/access
  - However, networking is assumed in PanDA – not integrated in workflow
  - Note: data transfer/access is done asynchronously: by DQ2 in ATLAS, PhEDEx in CMS, pandamover/FAX for special cases…
  - Data transfer/access systems can provide first level of network optimizations – PanDA will use these enhancements as available
- Ambitious goal for PanDA
  - Direct integration of networking with PanDA workflow – never attempted before for large scale automated WMS systems

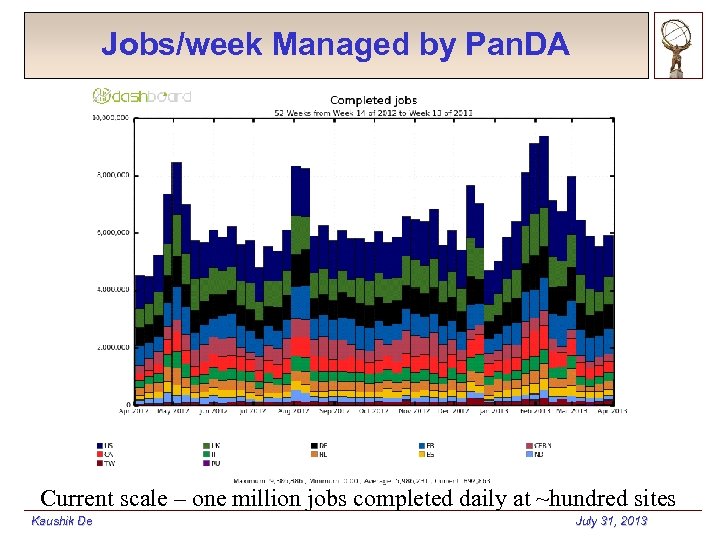

Jobs/week Managed by PanDA

- Current scale – one million jobs completed daily at ~hundred sites

Concept: Network as a Resource

- PanDA as workload manager
  - PanDA automatically chooses job execution site
    - Multi-level decision tree – task brokerage, job brokerage, dispatcher
    - Also manages predictive future workflows – at task definition, PD2P
    - Site selection is based on processing and storage requirements
  - Can we use network information in this decision?
  - Can we go even further – network provisioning?
  - Further – network knowledge used for all phases of job cycle?
- Network as resource
  - Optimal site selection should take network capability into account
    - We do this already – but indirectly using job completion metrics
  - Network as a resource should be managed (i.e. provisioning)
    - We also do this crudely – mostly through timeouts, self throttling

Scope of Effort

- Three parallel efforts to integrate networking in PanDA
  - US ATLAS funded – primarily to improve integration with FAX
  - ASCR funded – bigPanDA project, taking PanDA beyond LHC
    - Next Generation Workload Management and Analysis System for Big Data, DOE funded (BNL, U Texas Arlington)
  - ANSE funded – this project
- We are coordinating the three efforts to maximize results

First Steps

- Started the work on integrating network information into PanDA a few months ago, following community discussions
  - New hires funded by ASCR and ANSE (and PanDA core team)
- Step 1: Introduce network information into PanDA
  - Start with slowly varying (static) information from external probes
  - Populate various databases used by PanDA
- Step 2: Use network information for workload management
  - Start with simple use cases that lead to measurable improvements in workflow / user experience
- From the PanDA perspective we assume
  - Network measurements are available
  - Network information is reliable
  - We can examine these assumptions later – need to start somewhere

Sources of Network Information

- DDM Sonar measurements
  - ATLAS measures transfer rates for files between Tier 1 and Tier 2 sites (information used for site white/blacklisting)
  - Measurements available for small, medium, and large files
- perfSonar measurements
  - All WLCG sites are being instrumented with PS boxes
  - US sites are already instrumented and fully monitored
- FAX measurements
  - Read time for remote files is measured for pairs of sites
  - Standard PanDA test jobs (HammerCloud jobs) are used
- This is not an exclusive list – just a starting point

Data Repositories

- Native data repositories
  - Historical data stored from collectors
  - SSB – site status board for sonar and PS data (currently)
  - HC FAX data is kept independently and uploaded
- AGIS (ATLAS Grid Information System)
  - Most recent / processed data only – updated periodically
  - Mixture of push/pull – moving to JSON API (pushed only)
- schedConfigDB
  - Internal Oracle DB used by PanDA for fast access
  - Currently test cron updates – switching to standard collector
- All work is currently in ‘dev’ branch

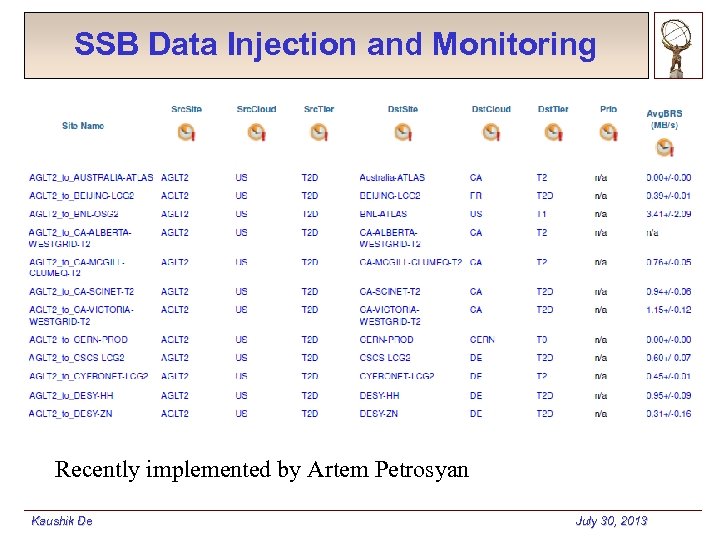

SSB Data Injection and Monitoring

- Recently implemented by Artem Petrosyan

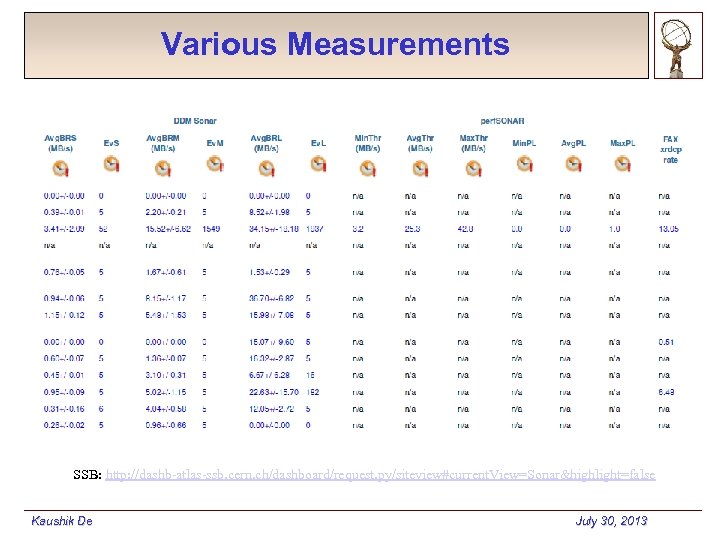

Various Measurements

- SSB: http://dashb-atlas-ssb.cern.ch/dashboard/request.py/siteview#currentView=Sonar&highlight=false

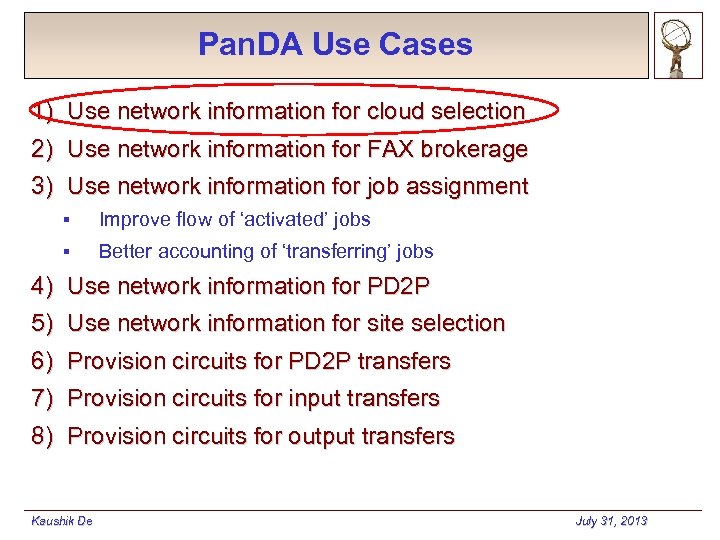

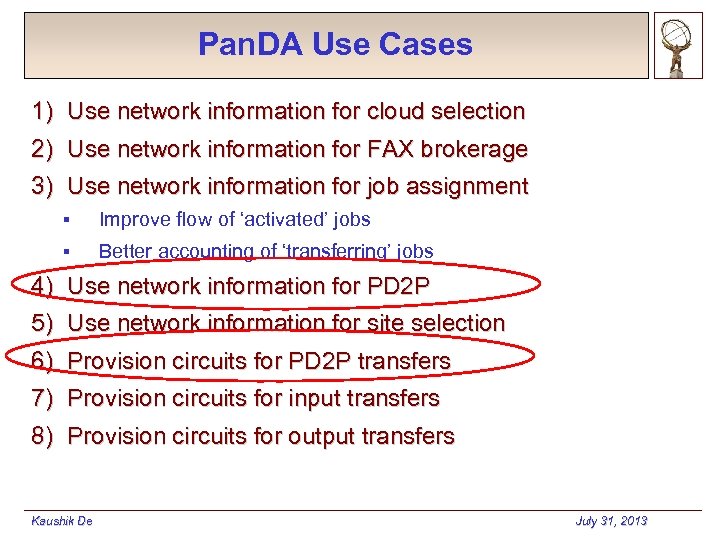

PanDA Use Cases

1) Use network information for cloud selection
2) Use network information for FAX brokerage
3) Use network information for job assignment
   - Improve flow of ‘activated’ jobs
   - Better accounting of ‘transferring’ jobs
4) Use network information for PD2P
5) Use network information for site selection
6) Provision circuits for PD2P transfers
7) Provision circuits for input transfers
8) Provision circuits for output transfers

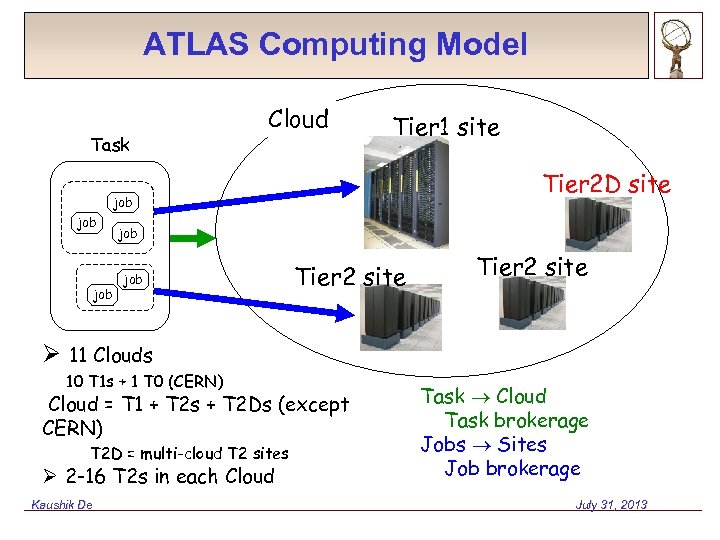

ATLAS Computing Model

- 11 Clouds: 10 T1s + 1 T0 (CERN)
- Cloud = T1 + T2s + T2Ds (except CERN)
- T2D = multi-cloud T2 sites
- 2-16 T2s in each Cloud
- Task → Cloud: task brokerage
- Jobs → Sites: job brokerage

[Diagram labels: Task, Cloud, Tier 1 site, Tier 2D site, Tier 2 site, job]

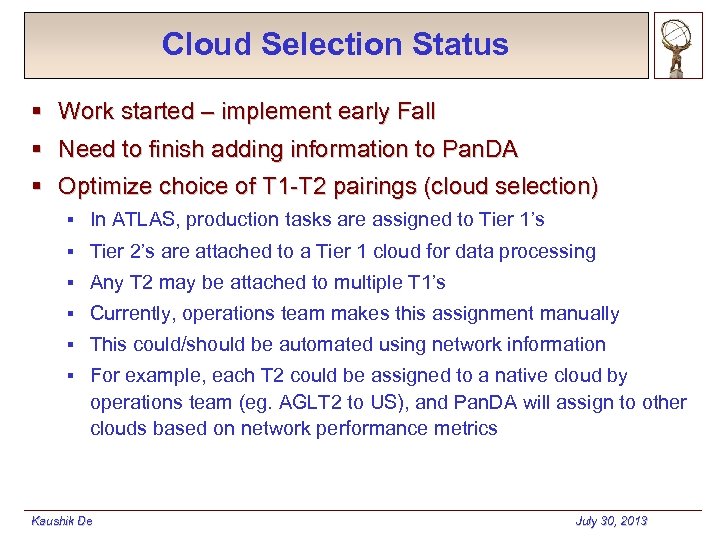

Cloud Selection

- Status
  - Work started – implement early Fall
  - Need to finish adding information to PanDA
- Optimize choice of T1-T2 pairings (cloud selection)
  - In ATLAS, production tasks are assigned to Tier 1s
  - Tier 2s are attached to a Tier 1 cloud for data processing
  - Any T2 may be attached to multiple T1s
  - Currently, operations team makes this assignment manually
  - This could/should be automated using network information
  - For example, each T2 could be assigned to a native cloud by operations team (e.g. AGLT2 to US), and PanDA will assign to other clouds based on network performance metrics
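
The automated pairing idea on this slide can be sketched roughly as follows. This is only an illustration under stated assumptions: the function name, the throughput table, the 50 Mbps cutoff and the limit of two extra clouds are all hypothetical and are not taken from PanDA.

```python
def assign_clouds(native_cloud, throughput_mbps, min_mbps=50.0, max_extra=2):
    """Return the clouds a T2 is attached to.

    native_cloud    -- cloud fixed manually by the operations team (always kept)
    throughput_mbps -- {cloud: measured T1<->T2 rate}; hypothetical input table
    min_mbps        -- illustrative cutoff, not a real PanDA setting
    max_extra       -- illustrative cap on additional clouds
    """
    # Keep only non-native clouds whose measured rate passes the cutoff.
    candidates = [
        (rate, cloud)
        for cloud, rate in throughput_mbps.items()
        if cloud != native_cloud and rate >= min_mbps
    ]
    # Best-connected clouds first.
    candidates.sort(reverse=True)
    return [native_cloud] + [cloud for _, cloud in candidates[:max_extra]]


# Example with made-up numbers for a T2 whose native cloud is US.
clouds = assign_clouds("US", {"US": 400.0, "CA": 120.0, "DE": 80.0, "FR": 30.0})
```

With these made-up rates the T2 stays in its native cloud and is additionally attached to the two best-connected clouds above the cutoff.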

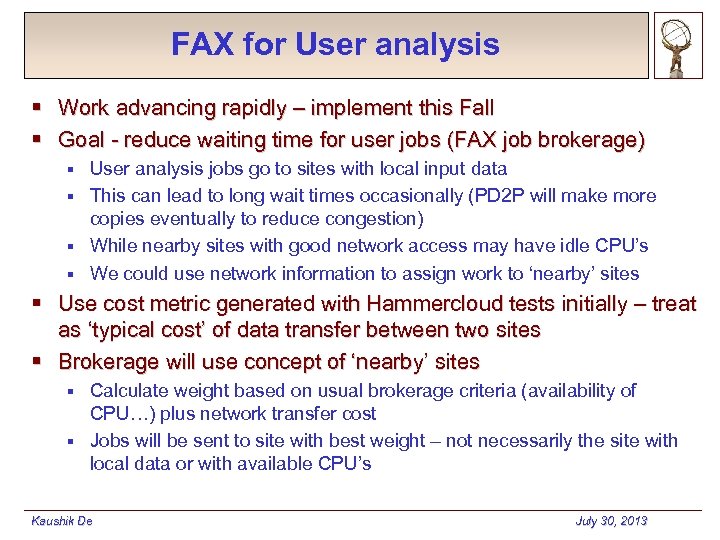

FAX for User Analysis

- Work advancing rapidly – implement this Fall
- Goal: reduce waiting time for user jobs (FAX job brokerage)
  - User analysis jobs go to sites with local input data
  - This can lead to long wait times occasionally (PD2P will make more copies eventually to reduce congestion)
  - While nearby sites with good network access may have idle CPUs
  - We could use network information to assign work to ‘nearby’ sites
- Use cost metric generated with HammerCloud tests initially – treat as ‘typical cost’ of data transfer between two sites
- Brokerage will use concept of ‘nearby’ sites
  - Calculate weight based on usual brokerage criteria (availability of CPU…) plus network transfer cost
  - Jobs will be sent to site with best weight – not necessarily the site with local data or with available CPUs
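
A minimal sketch of the "weight plus network transfer cost" idea, assuming a toy weight formula. The slide does not give the actual brokerage formula, so `site_weight`, the cost table and all numbers below are hypothetical.

```python
def site_weight(free_cpus, queued_jobs, transfer_cost):
    """Toy brokerage weight (higher is better).

    free_cpus / (queued_jobs + 1) stands in for the usual brokerage
    criteria; dividing by (1 + transfer_cost) penalises remote reads.
    Both choices are assumptions made for illustration.
    """
    base = free_cpus / (queued_jobs + 1)
    return base / (1.0 + transfer_cost)


def choose_site(sites, data_site, cost):
    """Pick the best site for a job whose input data lives at data_site.

    sites -- {name: (free_cpus, queued_jobs)}
    cost  -- {(data_site, name): typical transfer cost}, e.g. from HC tests
    """
    def weight(name):
        free_cpus, queued_jobs = sites[name]
        # Reading local data is treated as free.
        transfer_cost = 0.0 if name == data_site else cost[(data_site, name)]
        return site_weight(free_cpus, queued_jobs, transfer_cost)

    return max(sites, key=weight)


# Made-up example: A holds the data but is congested, C has the most CPUs
# but is far away, B is a 'nearby' site with idle CPUs.
sites = {"A": (10, 99), "B": (200, 0), "C": (300, 0)}
cost = {("A", "B"): 1.0, ("A", "C"): 9.0}
best = choose_site(sites, "A", cost)
```

In this example the job goes to B, which is neither the site with local data nor the site with the most free CPUs.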

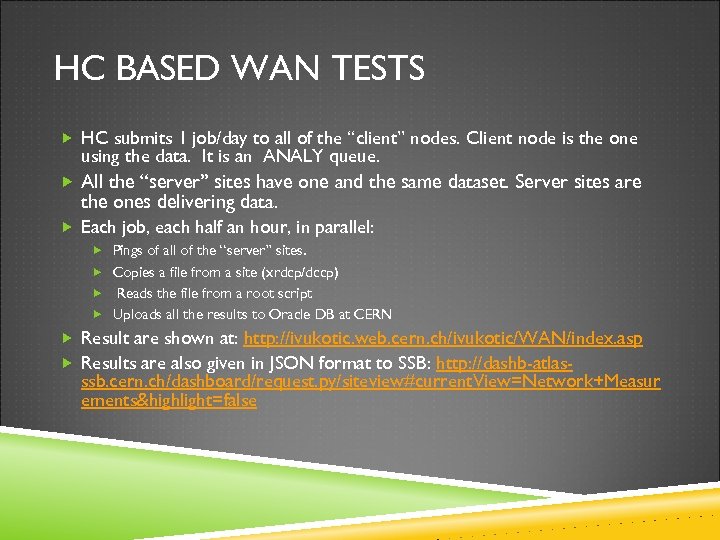

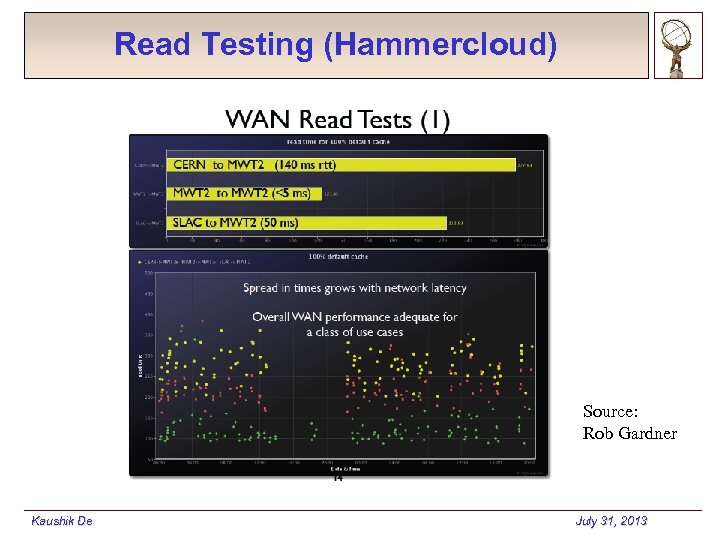

HC BASED WAN TESTS

- HC submits 1 job/day to all of the “client” nodes. The client node is the one using the data. It is an ANALY queue.
- All the “server” sites have one and the same dataset. Server sites are the ones delivering data.
- Each job, every half hour, in parallel:
  - Pings all of the “server” sites
  - Copies a file from a site (xrdcp/dccp)
  - Reads the file from a ROOT script
  - Uploads all the results to Oracle DB at CERN
- Results are shown at: http://ivukotic.web.cern.ch/ivukotic/WAN/index.asp
- Results are also given in JSON format to SSB: http://dashb-atlas-ssb.cern.ch/dashboard/request.py/siteview#currentView=Network+Measurements&highlight=false

Read Testing (Hammercloud)

- Source: Rob Gardner

Medium Term Plan

- After the Fall – improve based on tests
- Improve source of information
  - Reliability of network information
  - Dynamic network information
  - Internal measurements

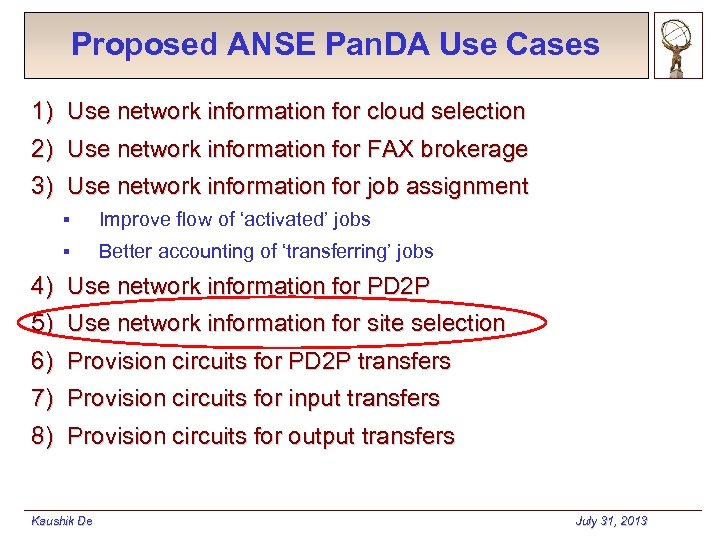

Proposed ANSE PanDA Use Cases

1) Use network information for cloud selection
2) Use network information for FAX brokerage
3) Use network information for job assignment
   - Improve flow of ‘activated’ jobs
   - Better accounting of ‘transferring’ jobs
4) Use network information for PD2P
5) Use network information for site selection
6) Provision circuits for PD2P transfers
7) Provision circuits for input transfers
8) Provision circuits for output transfers

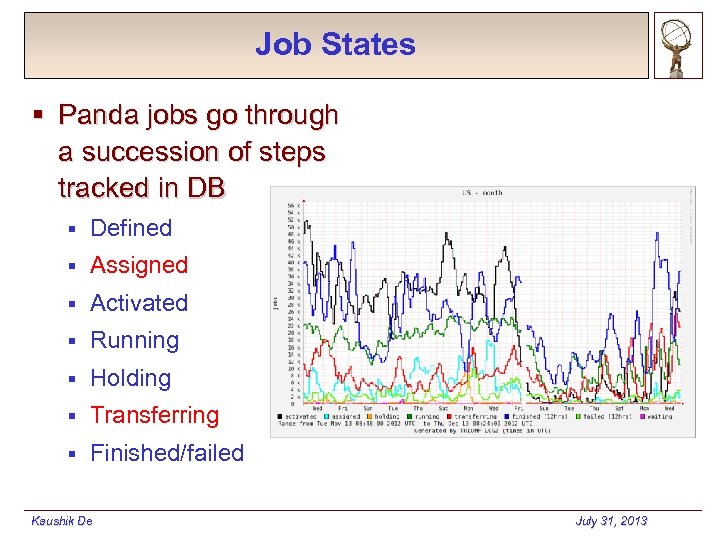

Job States

- PanDA jobs go through a succession of steps tracked in DB
  - Defined
  - Assigned
  - Activated
  - Running
  - Holding
  - Transferring
  - Finished/failed
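
The succession above can be written down as a small state table. This is a simplified sketch, not PanDA's actual state machine: it assumes the states are visited strictly in the order listed and that any non-terminal state may go to failed, neither of which is stated on the slide.

```python
from enum import Enum


class JobState(Enum):
    DEFINED = "defined"
    ASSIGNED = "assigned"
    ACTIVATED = "activated"
    RUNNING = "running"
    HOLDING = "holding"
    TRANSFERRING = "transferring"
    FINISHED = "finished"
    FAILED = "failed"


# Order as listed on the slide; the step after TRANSFERRING is FINISHED.
PIPELINE = [
    JobState.DEFINED,
    JobState.ASSIGNED,
    JobState.ACTIVATED,
    JobState.RUNNING,
    JobState.HOLDING,
    JobState.TRANSFERRING,
]
TERMINAL = {JobState.FINISHED, JobState.FAILED}


def next_states(state):
    """Return the states reachable in one step (assumed, simplified rules)."""
    if state in TERMINAL:
        return set()
    i = PIPELINE.index(state)
    forward = PIPELINE[i + 1] if i + 1 < len(PIPELINE) else JobState.FINISHED
    # Assumption: every non-terminal state can also end in FAILED.
    return {forward, JobState.FAILED}
```

The two transitions that depend on data movement, assigned to activated and transferring to finished, are the ones where network information is most relevant.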

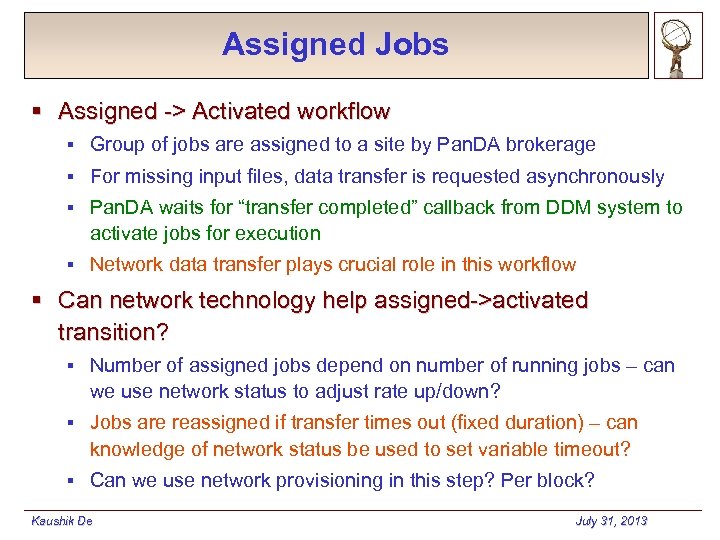

Assigned Jobs

- Assigned -> Activated workflow
  - Groups of jobs are assigned to a site by PanDA brokerage
  - For missing input files, data transfer is requested asynchronously
  - PanDA waits for “transfer completed” callback from DDM system to activate jobs for execution
  - Network data transfer plays a crucial role in this workflow
- Can network technology help the assigned->activated transition?
  - Number of assigned jobs depends on number of running jobs – can we use network status to adjust rate up/down?
  - Jobs are reassigned if transfer times out (fixed duration) – can knowledge of network status be used to set variable timeout?
  - Can we use network provisioning in this step? Per block?
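
One way the "variable timeout" question could be explored is to scale the timeout with the expected transfer time. The sketch below is an assumption-laden illustration: the safety factor, floor and cap are invented values, and the measured rate is assumed to come from one of the network information sources.

```python
def transfer_timeout(bytes_to_move, rate_bytes_per_s,
                     safety=3.0, floor_s=600.0, cap_s=86400.0):
    """Timeout in seconds for an input transfer.

    bytes_to_move     -- total size of the missing input files
    rate_bytes_per_s  -- measured rate between source and destination
    safety, floor_s, cap_s -- illustrative tuning knobs, not PanDA settings
    """
    if rate_bytes_per_s <= 0:
        # No usable measurement: fall back to the longest allowed timeout.
        return cap_s
    expected_s = bytes_to_move / rate_bytes_per_s
    # Allow several times the expected duration, within fixed bounds.
    return min(cap_s, max(floor_s, safety * expected_s))


# 100 GB over a link measured at 50 MB/s: expected 2000 s, timeout 6000 s.
timeout_s = transfer_timeout(100e9, 50e6)
```

The floor keeps tiny transfers from timing out on startup overhead, and the cap preserves today's behaviour of eventually reassigning stuck jobs.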

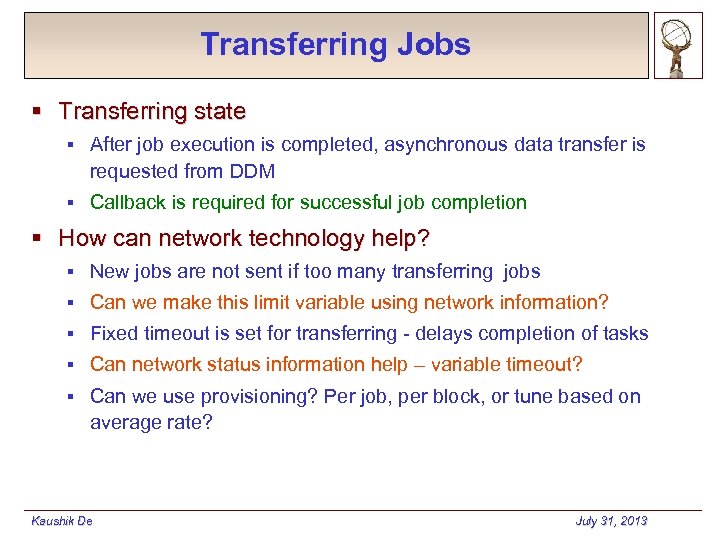

Transferring Jobs

- Transferring state
  - After job execution is completed, asynchronous data transfer is requested from DDM
  - Callback is required for successful job completion
- How can network technology help?
  - New jobs are not sent if too many transferring jobs
    - Can we make this limit variable using network information?
  - Fixed timeout is set for transferring – delays completion of tasks
    - Can network status information help – variable timeout?
  - Can we use provisioning? Per job, per block, or tune based on average rate?
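
As a rough sketch of a variable limit on transferring jobs, the limit could be tied to how many job outputs the link can drain in a chosen time window. The window length, the minimum and the function names are hypothetical; the slide only raises the question.

```python
def max_transferring_jobs(rate_bytes_per_s, avg_output_bytes,
                          drain_window_s=3600.0, minimum=10):
    """Number of jobs allowed in 'transferring' before dispatch is throttled.

    rate_bytes_per_s -- measured outbound rate from the site
    avg_output_bytes -- average output size per job
    drain_window_s   -- illustrative: outputs should drain within this window
    minimum          -- illustrative lower bound so slow sites still get work
    """
    if avg_output_bytes <= 0:
        raise ValueError("avg_output_bytes must be positive")
    drainable = int(rate_bytes_per_s * drain_window_s / avg_output_bytes)
    return max(minimum, drainable)


def can_dispatch(n_transferring, rate_bytes_per_s, avg_output_bytes):
    """True if a new job may be sent to the site."""
    return n_transferring < max_transferring_jobs(rate_bytes_per_s,
                                                  avg_output_bytes)


# 100 MB/s outbound, 2 GB of output per job: 180 jobs can drain per hour.
limit = max_transferring_jobs(100e6, 2e9)
```

A well-connected site is then allowed a larger backlog of transferring jobs than a poorly connected one, instead of both sharing one fixed number.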

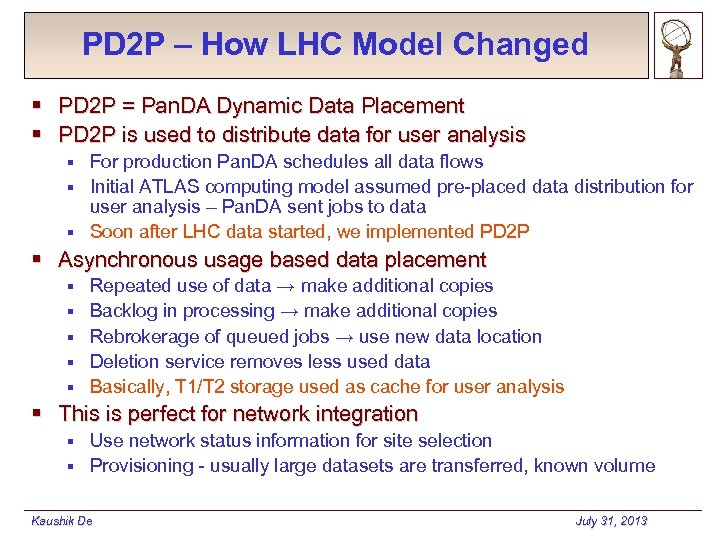

PD2P – How LHC Model Changed

- PD2P = PanDA Dynamic Data Placement
- PD2P is used to distribute data for user analysis
  - For production, PanDA schedules all data flows
  - Initial ATLAS computing model assumed pre-placed data distribution for user analysis – PanDA sent jobs to data
  - Soon after LHC data started, we implemented PD2P
- Asynchronous usage based data placement
  - Repeated use of data → make additional copies
  - Backlog in processing → make additional copies
  - Rebrokerage of queued jobs → use new data location
  - Deletion service removes less used data
  - Basically, T1/T2 storage used as cache for user analysis
- This is perfect for network integration
  - Use network status information for site selection
  - Provisioning – usually large datasets are transferred, known volume

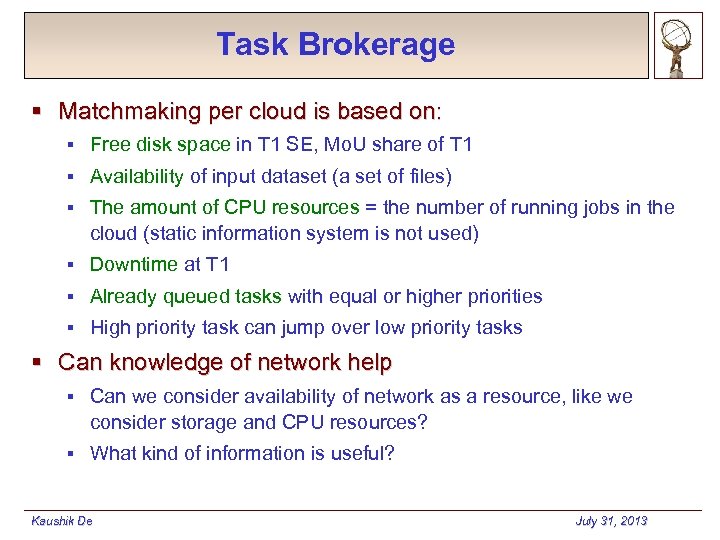

Task Brokerage

- Matchmaking per cloud is based on:
  - Free disk space in T1 SE, MoU share of T1
  - Availability of input dataset (a set of files)
  - The amount of CPU resources = the number of running jobs in the cloud (static information system is not used)
  - Downtime at T1
  - Already queued tasks with equal or higher priorities
  - High priority task can jump over low priority tasks
- Can knowledge of network help?
  - Can we consider availability of network as a resource, like we consider storage and CPU resources?
  - What kind of information is useful?

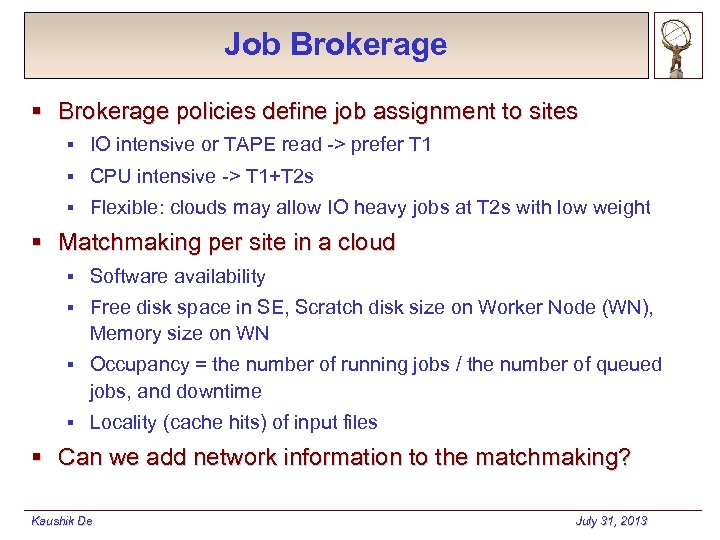

Job Brokerage

- Brokerage policies define job assignment to sites
  - IO intensive or TAPE read -> prefer T1
  - CPU intensive -> T1+T2s
  - Flexible: clouds may allow IO heavy jobs at T2s with low weight
- Matchmaking per site in a cloud
  - Software availability
  - Free disk space in SE, scratch disk size on Worker Node (WN), memory size on WN
  - Occupancy = the number of running jobs / the number of queued jobs, and downtime
  - Locality (cache hits) of input files
- Can we add network information to the matchmaking?
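
The per-site matchmaking criteria listed above can be sketched as a filter followed by a ranking. This is an illustrative reading only: the `Site` fields, the release strings, and the choice to rank by descending occupancy ratio are assumptions, and locality and network terms are left out.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    releases: frozenset      # software releases installed (hypothetical labels)
    free_disk_gb: float      # free disk space in the SE
    wn_memory_mb: int        # memory size on the worker node
    running: int
    queued: int
    in_downtime: bool = False


def occupancy(site):
    """Running / queued, as on the slide; max(..., 1) avoids dividing by zero."""
    return site.running / max(site.queued, 1)


def match(sites, release, disk_gb, memory_mb):
    """Filter sites on hard requirements, then rank by occupancy ratio.

    Assumption: a higher running/queued ratio means the queue drains
    faster, so such sites are preferred.
    """
    eligible = [
        s for s in sites
        if not s.in_downtime
        and release in s.releases
        and s.free_disk_gb >= disk_gb
        and s.wn_memory_mb >= memory_mb
    ]
    return sorted(eligible, key=occupancy, reverse=True)


# Made-up sites and release labels.
sites = [
    Site("T1_A", frozenset({"17.2"}), 500.0, 4000, running=900, queued=100),
    Site("T2_B", frozenset({"17.2"}), 50.0, 2000, running=200, queued=10),
    Site("T2_C", frozenset({"16.6"}), 80.0, 2000, running=100, queued=0),
    Site("T2_D", frozenset({"17.2"}), 5.0, 2000, running=50, queued=5),
]
ranked = match(sites, "17.2", disk_gb=10.0, memory_mb=2000)
```

A network term, such as a measured transfer cost to the site holding the input data, would enter as one more factor in the ranking key.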

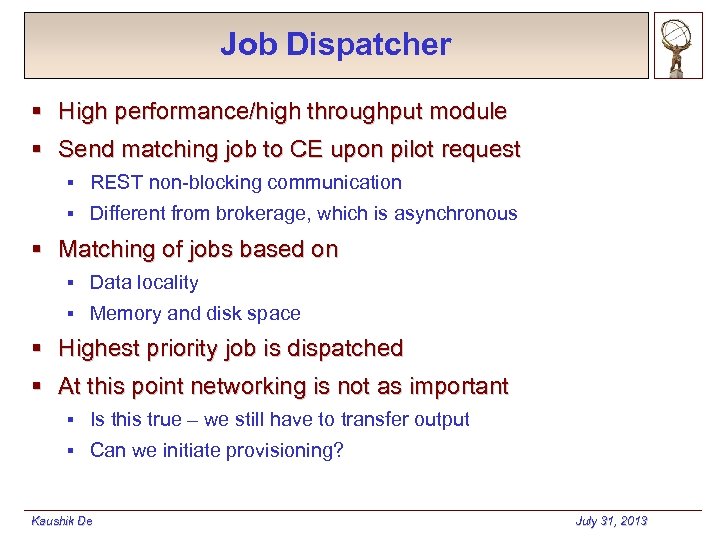

Job Dispatcher

- High performance/high throughput module
- Send matching job to CE upon pilot request
  - REST non-blocking communication
  - Different from brokerage, which is asynchronous
- Matching of jobs based on
  - Data locality
  - Memory and disk space
- Highest priority job is dispatched
- At this point networking is not as important
  - Is this true – we still have to transfer output
  - Can we initiate provisioning?

Summary

- Many different parts of PanDA can benefit from better integration with networking
- Development has started
- Stay tuned for results
