- Number of slides: 32

Overview of U.S. ATLAS Computing Facility

Michael Ernst, Brookhaven National Laboratory

BNL-FZK-IN2P3 Tier-1 Meeting, 22 May 2007

Participants from BNL Facilities Group

- Antonio Chan: Farms Architecture, Benchmarking, Condor, Virtualization, Facilities Operation, Procurement
- Dantong Yu: Networking, 3D Services
- Hironori Ito: DDM Operations
- John Hover: Grid Middleware, EGEE/OSG Interoperability
- Michael Ernst: Overview, Tier-1 - Tier-2 Relation
- Ofer Rind: dCache, Storage Evaluation Project
- Shigeki Misawa: Mass Storage (HPSS), Cyber Security

M. Ernst, 3 Tier-1 Meeting at IN2P3, 22-23 May 2007

U.S. in the ATLAS Computing Model

- Facilities Project as subproject under US ATLAS Software and Computing
  - Facility Manager responsible for Tier-1 and 5 Tier-2s
- BNL Tier-1 responsibilities (~10 Tier-1s total)
  - Archival shares of raw and reconstructed data, and associated calibration & reprocessing
  - Store and serve 100% of ATLAS reconstruction (ESD), analysis (AOD) and physics tag data (TAG)
  - Physics group level managed production/analysis
  - Resources dedicated to U.S. physicists: additional per-physicist capacity at 50% of the level managed centrally by ATLAS
    - Allow ~2x acceleration of analysis of one 20% data stream
    - Actual allocation of these resources will be done by U.S. ATLAS Resource Allocation Committee
  - U.S. Tier-2s have a complementary role
    - Bulk of simulation and end user analysis support
    - Store and serve 100% of AOD, TAG and subset of ESD
  - Tier-1 and Tier-2s both support institutional and individual users
    - Primarily end user analysis

U.S. ATLAS Tier-2 Centers

- North East
  - Boston University
  - Harvard
- Great Lakes
  - Michigan University Ann Arbor
  - Michigan State
- Mid West
  - University of Chicago
  - Indiana University
- South West
  - University of Texas Arlington
  - Oklahoma University
- SLAC

ATLAS Computing at BNL

- Principal objectives
  - Fulfill role as principal U.S. center (Tier-1) in the tiered ATLAS (and LHC) computing model
    - Supply capacity to ATLAS as agreed in the MOU (~23%)
    - Guarantee the capability and capacity needed by US ATLAS physics program
  - Establish a critical mass in computing and software to support ATLAS physics analysis at BNL and elsewhere in the U.S.
  - Contribute to ATLAS software most critical to enabling physics analysis at BNL and the U.S.
  - Provide to U.S. ATLAS physicists expertise and facilities and strengthen U.S. ATLAS physics
  - Leverage projects outside ATLAS, in particular Open Science Grid (OSG), where they can strengthen the BNL and U.S. ATLAS programs

Revision of Required Capacities

- Many adjustments in requirement estimates during past year
  - Change in LHC startup schedule
  - Identification and removal of Heavy Ion contribution from HEP requirement
  - Inclusion of efficiency factors for resource utilization
    - Programmatic CPU: 85%
    - Chaotic CPU: 60%
    - Disk: 70%
  - Adjustment in author-count-based calculation of contributions to Tier-1 and Tier-2s to reflect that CERN contributes Tier-0 and CAF but not otherwise
    - US 20% (~actual US author fraction) → 23% (US fraction of non-CERN authors)
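
The author-count adjustment can be checked with a little arithmetic. The sketch below assumes the 23% figure is simply the U.S. author fraction renormalized over non-CERN authors; the implied CERN author fraction it prints is inferred from the two slide figures and is not stated in the talk. The function names are illustrative.

```python
def share_of_non_cern(us_fraction, cern_fraction):
    """U.S. share once CERN authors are removed from the denominator."""
    return us_fraction / (1.0 - cern_fraction)


def implied_cern_fraction(us_fraction, us_share_non_cern):
    """CERN author fraction that would turn one share into the other."""
    return 1.0 - us_fraction / us_share_non_cern


# Slide figures: 20% of all authors, 23% of non-CERN authors.
cern = implied_cern_fraction(0.20, 0.23)
print(f"implied CERN author fraction: {cern:.3f}")
print(f"U.S. share of non-CERN authors: {share_of_non_cern(0.20, cern):.2f}")
```

Under this assumption the two percentages are consistent if CERN accounts for roughly one author in eight.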

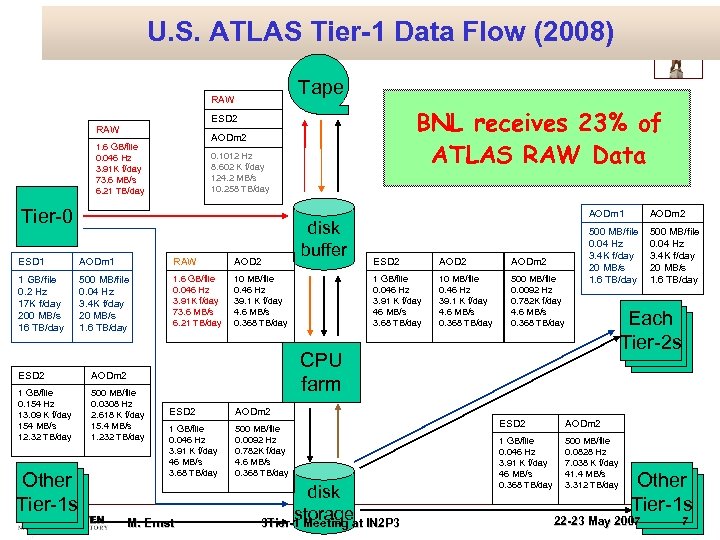

U.S. ATLAS Tier-1 Data Flow (2008)

[Diagram: data flows between Tier-0, the Tier-1 (tape, disk buffer, CPU farm, disk storage), other Tier-1s and Tier-2s for RAW, ESD1, ESD2, AOD2, AODm1 and AODm2 data. BNL receives 23% of ATLAS RAW data. Each flow is annotated with file size, file rate (Hz), files/day, MB/s and TB/day, e.g. 1.6 GB/file, 0.046 Hz, 3.91 K files/day, 73.6 MB/s, 6.21 TB/day.]
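
The per-flow figures on this slide are related by simple arithmetic, sketched below for the 1.6 GB/file flow. Two inputs are assumptions rather than statements from the talk: the ATLAS-wide file rate of 0.2 Hz (chosen because 23% of it reproduces the slide's 0.046 Hz) and a day of 85,000 seconds (inferred because it reproduces the slide's files/day values). The slide's TB/day column is not recomputed here.

```python
import math

# Inferred, not stated on the slide: 0.046 Hz * 85,000 s = 3,910 files/day.
SECONDS_PER_DAY_ON_SLIDE = 85_000


def flow(file_size_mb, rate_hz, seconds_per_day=SECONDS_PER_DAY_ON_SLIDE):
    """Return (files per day, MB/s) for a stream of fixed-size files."""
    files_per_day = rate_hz * seconds_per_day
    mb_per_s = file_size_mb * rate_hz
    return files_per_day, mb_per_s


# 1.6 GB files; BNL's 23% share of an assumed 0.2 Hz ATLAS-wide file rate.
bnl_rate_hz = 0.23 * 0.2
files_per_day, mb_per_s = flow(1600, bnl_rate_hz)
print(f"{bnl_rate_hz:.3f} Hz, {files_per_day:.0f} files/day, {mb_per_s:.1f} MB/s")
```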

Computing System Commissioning (CSC) 2006 Production

[Charts: Total Production; US Production on Open Science Grid; US Production. Label: BNL 46%]

Tier 1 Utilization

- BNL Tier 1 is the largest ATLAS Tier 1 and is delivering capacities consistent with this role

Tier 1 Facility Capacity Projection

Tier 1 Facility Evolution Plan for FY’07

- Addition of 5 new positions
  - Increase from 15 FTE in ’06 to 20 during ’07, which is the anticipated steady state
- Equipment upgrade
  - CPU: Linux Farm: 1.4 MSI2k → ~2.6 MSI2k
    - Details to be provided in Tony’s talk
  - Disk: 0.52 PB → ~1.5 PB
  - Modest Mass Storage Upgrade
    - No Tape Drives, Licenses (RTU) for existing slots in STK Robot, dCache Write Pools
    - Details to be provided in Shigeki’s talk
- Facility Expansion Plans
  - Shared physical infrastructure for RHIC & ATLAS Facility
  - Will run out of power and space
    - 2-phase expansion planned to add 4500 sq. ft. in 2008 and 2009
    - 2-phase UPS extension planned to add 2 MW in 2007 and 2010
    - Details to be provided in Tony’s talk

Tier 1 Resource Procurement Schedule

- Procurement for 480 new CPU cores (120 nodes) in progress
  - Delivery announced for last week in May
  - Adds 1.1 MSI2k, 2.5 MSI2k total usable for ATLAS in June
    - A bit more than we have pledged to WLCG for 2007 (to be delivered by 1 July)
  - Total job slots now at 1288, will be total of 1688 in June
  - 4.5 TB (gross) / 3 TB (net) disk space per worker node, 740 TB (net) total for ATLAS
    - Includes 70% efficiency factor as defined by ATLAS & WLCG
    - Decided to add 720 (gross) / 500 (net) TB using Sun Thumpers in July
- Work on tripling the disk capacity
  - Increasing imbalance between CPU power and disk capacity per worker node is forcing us to look into alternate storage architectures
  - Model using distributed dCache-managed disk space installed on compute nodes no longer seems viable or cost-effective
  - Have launched a storage evaluation project
    - Benchmark and analyze a spectrum of solutions
    - Well connected with similar activities through other labs (e.g. Fermilab) and HEPiX
    - Foundation for storage procurement
    - Details to be provided in Ofer’s talk
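
The capacity figures on this slide can be cross-checked with a short sketch. It assumes that net disk is gross disk multiplied by the 70% efficiency factor, and it takes the 1.4 MSI2k starting point from the FY’07 evolution plan; the helper name is illustrative. The slide's net values (3 TB and 500 TB) appear to be rounded versions of what the factor gives.

```python
DISK_EFFICIENCY = 0.70  # efficiency factor quoted on the slide


def net_disk_tb(gross_tb, efficiency=DISK_EFFICIENCY):
    """Usable disk after applying the efficiency factor (assumed model)."""
    return gross_tb * efficiency


# CPU: existing Linux farm plus the new procurement, in MSI2k.
cpu_before, cpu_added = 1.4, 1.1
print(f"CPU total: {cpu_before + cpu_added:.1f} MSI2k")

# Job slots: current count versus the June figure.
print(f"additional job slots: {1688 - 1288}")

# Disk: gross figures from the slide and the net value the factor implies.
for label, gross in [("per worker node", 4.5), ("Sun Thumper addition", 720)]:
    print(f"{label}: {gross} TB gross, {net_disk_tb(gross):.2f} TB net")
```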

Expected Computing Capacity Evolution

Expected Storage Capacity Evolution

SI2K/CPU Evolution (quad-core in FY’08)

SI2K/Watt Evolution (quad-core in FY’08)

Storage Evolution Scenarios

Rack Count

Note: Numbers include equipment replacement after 4 years

Projected Space Requirements

Projected Power Requirements

Infrastructure Planning at Tier-1

- Currently available space filled
- Soon running out of power

[Floor plan labels: "Now"; "2009: +4000 sq. ft. (planned)"]

More development in 2007, Given the LHC / ATLAS Milestones

- This year is all about moving from development/deployment into stable and continuous operations
  - Deployment of full-scale hardware by 4/1/08 as requested by LCG MB
    - Reasonable?
  - Operations
    - Monitoring and alarming framework in place to support 24x7
    - Significant number of detailed checks and automation
    - In the process of defining levels of support and rules to follow, depending on the issue/alarm (building on RHIC operations)
    - Details to be provided in Tony’s talk
  - Still more work to do on performance
    - Did we make good choices in site architecture? Does it all scale?
    - More than 75% use of resources through Grid interfaces
    - Data transfer has been/still is an issue
    - Storage system performance, including demonstrating higher data-serving rates to applications for ATLAS data analysis
      - Looking also into xrootd (have set up a testbed, working with U.S. ATLAS software developers and SLAC)

Transition to Operations

We need to run our sites with a reasonable amount of manpower! Stability is important, maybe more than performance.

- Define milestones for uptime and success rates as measured by Site Availability Monitoring tests and DDM data replication exercises
- The Tier-1 center at BNL is tightly coupled to the Tier-2s in the US
- Tier-2s soon to act more like the Tier-2s of the Computing Model
- Details about SLAs and procedures in Tony’s talk
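
A success-rate milestone of the kind mentioned here could be evaluated along the following lines. This is a minimal sketch under stated assumptions: the record layout, the site name, the sample results and the 90% target are all hypothetical, and nothing below reflects the actual Site Availability Monitoring or DDM data formats.

```python
from dataclasses import dataclass


@dataclass
class ProbeResult:
    """One pass/fail outcome of an availability test (hypothetical layout)."""
    site: str
    passed: bool


def success_rate(results, site):
    """Fraction of passed tests for a site, or None if it has no results."""
    site_results = [r for r in results if r.site == site]
    if not site_results:
        return None
    return sum(r.passed for r in site_results) / len(site_results)


def meets_milestone(results, site, target):
    """True when the site's success rate reaches the target fraction."""
    rate = success_rate(results, site)
    return rate is not None and rate >= target


# Hypothetical sample: nine passes and one failure for one site.
results = [ProbeResult("SITE_A", True) for _ in range(9)]
results.append(ProbeResult("SITE_A", False))

print(success_rate(results, "SITE_A"))
print(meets_milestone(results, "SITE_A", target=0.90))
```

Returning `None` for a site without results keeps "no data" distinct from "0% success", which matters when a site has simply not been tested yet.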

Issues (1/2)

- On the Critical Path
  - While (PanDA managed) Production is coming along very nicely, Data Transfer (and Storage?) and Data Replication are on the critical path
  - DDM/DQ2 (ATLAS Distributed Data Management) is vulnerable and apparently not up to the performance level required
  - Data Management and Transfers are the least transparent component / functionality
    - Dashboard monitoring is informative to some extent but not really helpful in case of problems
      - No transfers, or slowly moving: why? Trouble at source or destination? Nature / reason of trouble?
    - Details on Networking to be provided in Dantong’s talk
- Very complex situation: diagnosis is difficult and requires expert-level knowledge in multiple areas
  - Currently limited to few experts: not scalable and does not allow site admins to assess how their site is doing
  - Often requires access to distributed log files

Issues (2/2)

- (Still) on the Critical Path
  - Storage Systems and Tier-1 / Tier-2 Sites
    - No technology baseline in U.S. ATLAS
    - Impact on operational readiness and interoperability unclear
    - Significant number of technical problems at all levels (FTS, DDM/DQ2, SRM/dCache)

Securing the Facilities’ Readiness

- Towards ATLAS Milestones
  - Put an Integration Program in place which aims at building the Integrated Virtual Computing Facility that we need to support LHC analysis in the US
  - With exercises designed to verify sites’ readiness, stability and performance
  - Coordinated by U.S. ATLAS Facility Manager (M. Ernst / BNL)
    - In the process of visiting all U.S. ATLAS Tier-2 Centers
    - Organizing quarterly face-to-face meetings with Tier-2s, now including Tier-3s
- Exploit commonality and establish (technology) baseline whenever possible
  - Synergy allows bundling of resources (development and operations)
- Site Certification
  - Site admins are asked to install well-defined software packages and to make needed capacities available to the Collaboration
  - We will continuously run use-case oriented exercises and will document and archive the results
    - Heartbeat: data transfers on a basic level
    - Dataset replication based on high-level functionality (DDM/DQ2)
    - Processing (analysis job profile)
      - Grid job submission (PanDA): distribution based on data affinity
      - Local data access (from SE)
    - Monitor and archive results from exercises

Tier-1 Cyber Security

- Tier-1 is within BNL IT Division managed cyber security domain
  - Site Firewall: partitions BNL including Tier 1 from world
  - Tier 1 Firewall: similarly partitions Tier 1 / RHIC enclave from rest of BNL
  - Details to be provided in Shigeki’s talk
- ATLAS Tier 1 / RHIC facility is among most aggressive organizations at BNL in cyber security
  - Tom Throwe, facility deputy director, chaired Lab’s Cyber Security Advisory Comm. for last year & involved in site wide configuration auditing system
  - Tier 1 / RHIC first at BNL to establish firewall protected internal enclave
    - Affording protection against cyber security problems elsewhere within BNL
  - Moved this past year from simple ssh to 2 factor authentication
    - => ssh keys and/or one-time passwords (ssh keys phase out expected)
    - This action addresses only facility intrusions of last 2 years, which were a result of externally sniffed passwords

Site Cyber Access and Incident Handling

- ATLAS Login Account Granting
  - Requires BNL Guest Number and cyber security training
  - Currently granting temporary accounts with application for Guest Number and phone verification from known ATLAS sponsor
- Grid Access Does Not Require Local Account
  - Does require Grid certificate and proper Virtual Organization membership
- User Traceability Required
  - Grid users are mapped to unique local accounts
  - US ATLAS PanDA will use “glexec” (part of Condor startd) to allow job re-authentication as payload user
- Cyber Security Incident Response
  - WLCG/OSG security protocols exist to protect against damage and contain problems
  - Bob Cowles (SLAC) is U.S. ATLAS cyber security officer
  - BNL cyber security as well as BNL Tier-1 are involved in defining and acting when these protocols are invoked
  - BNL Tier-1, with local full ESD copy, has reduced sensitivity to disruption by an incident elsewhere in the WLCG
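
The traceability requirement (each Grid user mapped to a unique local account) can be illustrated with a small sketch. It is not the mechanism the facility used, which the talk does not describe; the class, the pool account names and the certificate subject names are hypothetical.

```python
class AccountMapper:
    """Assign each certificate DN its own local account from a fixed pool."""

    def __init__(self, pool):
        self._free = list(pool)
        self._dn_to_account = {}

    def account_for(self, dn):
        """Return the local account for a DN, allocating one on first use."""
        if dn not in self._dn_to_account:
            if not self._free:
                raise RuntimeError("local account pool exhausted")
            self._dn_to_account[dn] = self._free.pop(0)
        return self._dn_to_account[dn]

    def dn_for(self, account):
        """Trace a local account back to the DN that owns it, or None."""
        for dn, mapped in self._dn_to_account.items():
            if mapped == account:
                return dn
        return None


# Hypothetical pool accounts and certificate subjects.
mapper = AccountMapper(["grid001", "grid002"])
alice = "/DC=org/DC=example/CN=Alice Example"
bob = "/DC=org/DC=example/CN=Bob Example"

print(mapper.account_for(alice), mapper.account_for(bob))
print(mapper.dn_for("grid002"))
```

Because no two DNs ever share an account, activity logged under a local account can be traced back to exactly one certificate holder.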

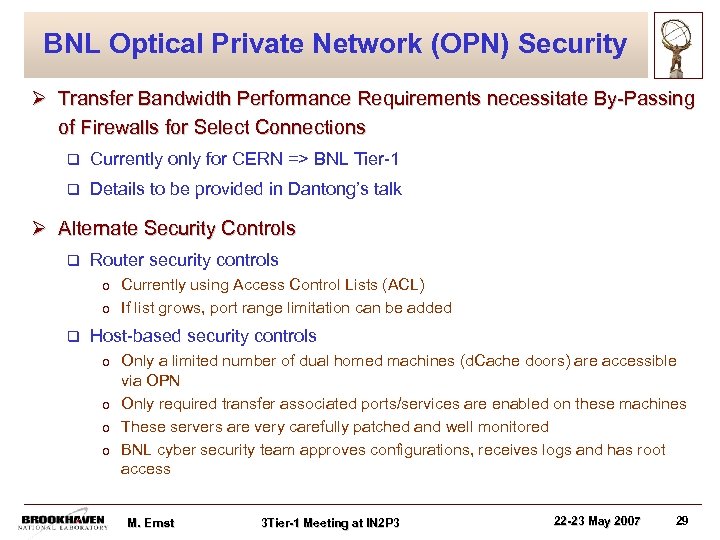

BNL Optical Private Network (OPN) Security

- Transfer bandwidth performance requirements necessitate bypassing of firewalls for select connections
  - Currently only for CERN => BNL Tier-1
  - Details to be provided in Dantong’s talk
- Alternate Security Controls
  - Router security controls
    - Currently using Access Control Lists (ACL)
    - If list grows, port range limitation can be added
  - Host-based security controls
    - Only a limited number of dual homed machines (dCache doors) are accessible via OPN
    - Only required transfer associated ports/services are enabled on these machines
    - These servers are very carefully patched and well monitored
    - BNL cyber security team approves configurations, receives logs and has root access
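
The router-level control described here (an access control list, optionally narrowed by a port range) amounts to an allowlist check. The sketch below illustrates that idea only; it is not router configuration, and the addresses (taken from the reserved documentation ranges) and the port range are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Permit traffic from a source network to one host on a port range."""
    source: ipaddress.IPv4Network
    dest: ipaddress.IPv4Address
    ports: range


def permitted(rules, src, dst, port):
    """True if any rule allows this source, destination and port."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    return any(
        src_ip in rule.source and dst_ip == rule.dest and port in rule.ports
        for rule in rules
    )


# Hypothetical rule: one remote network may reach one transfer door.
RULES = [
    Rule(
        source=ipaddress.ip_network("192.0.2.0/24"),
        dest=ipaddress.ip_address("198.51.100.10"),
        ports=range(20000, 25001),
    )
]

print(permitted(RULES, "192.0.2.7", "198.51.100.10", 20010))
print(permitted(RULES, "203.0.113.5", "198.51.100.10", 20010))
```

Anything not matched by a rule is denied, which mirrors the slide's point that only the required transfer ports on a few dual-homed hosts are reachable over the OPN.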

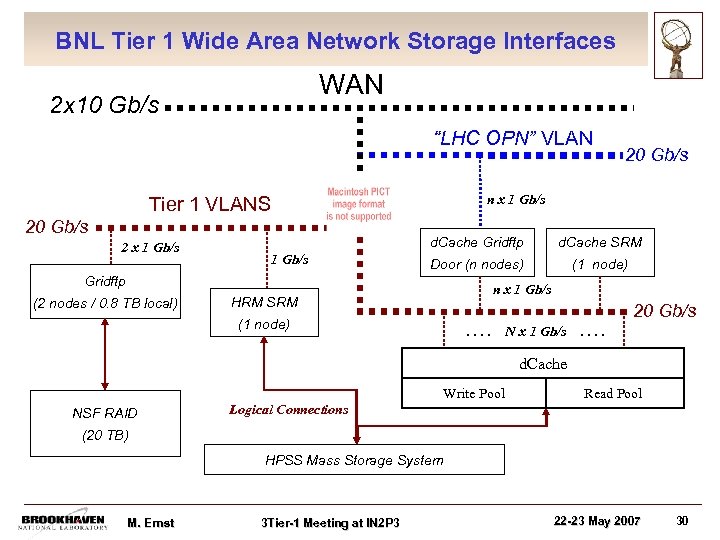

BNL Wide Area Network Storage Interfaces

[Diagram: Tier 1 WAN storage interfaces. Components shown: WAN (2 x 10 Gb/s), “LHC OPN” VLAN, Tier 1 VLANs, dCache GridFTP doors (n nodes), dCache SRM door (1 node), GridFTP servers (2 nodes / 0.8 TB local), HRM SRM (1 node), dCache write pool, read pool, NSF RAID (20 TB), HPSS Mass Storage System. Link labels include 20 Gb/s, n x 1 Gb/s, 2 x 1 Gb/s and 1 Gb/s; the legend distinguishes logical connections.]

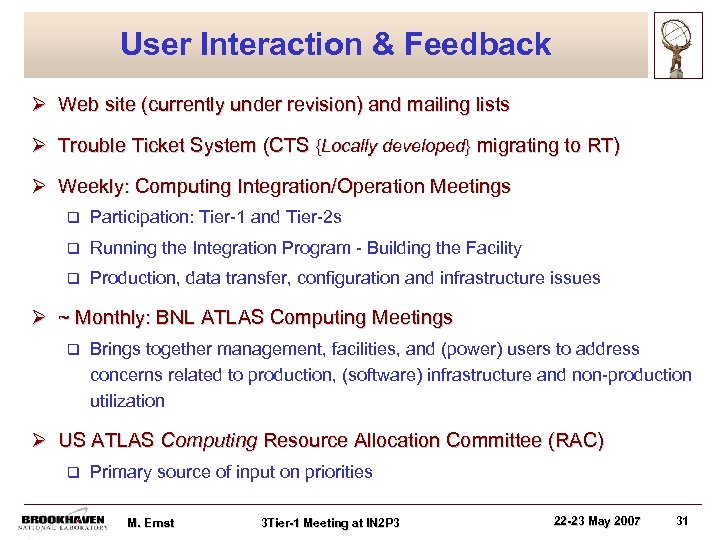

User Interaction & Feedback

- Web site (currently under revision) and mailing lists
- Trouble Ticket System (CTS, locally developed, migrating to RT)
- Weekly: Computing Integration/Operation Meetings
  - Participation: Tier-1 and Tier-2s
  - Running the Integration Program - Building the Facility
  - Production, data transfer, configuration and infrastructure issues
- ~Monthly: BNL ATLAS Computing Meetings
  - Brings together management, facilities, and (power) users to address concerns related to production, (software) infrastructure and non-production utilization
- US ATLAS Computing Resource Allocation Committee (RAC)
  - Primary source of input on priorities

Summary

- The BNL Tier-1 serves as the hub and principal center of the US community, with scale-up for data taking underway
- US ATLAS Tier-1 facility at BNL is on track to meet the performance and capacity requirements of the ATLAS computing model, augmented to supply appropriate additional support to US physicists
- The facilities, both Tier-1 and Tier-2s, have performed well in both ATLAS Computing System Commissioning and WLCG service challenges
  - An Integration Program is needed to ensure readiness in view of the complexity and the steep ramp-up
- Staffing pressure continues at the Tier-1 due to:
  - … rapid growth in capacity and usage, both programmatic and chaotic
  - … support role for Tier-2s (and Production development & operations)
  - … need for in-house expertise in growing number of critical areas
    - Database admin and usage optimization this year added to … data storage, management and transfer, user and access management, etc.
  - Staff growth to address this is critically important, so recruiting will continue to be a major activity this year
- Space, Power & Cooling at the Tier-1 center on the critical path
