f3ebc502531518140d1ef582426f065b.ppt

- Number of slides: 82

Overview of Our Sensors For Robotics

Machine vision • Computer vision • To recover useful information about a scene from its 2-D projections. • To take images as inputs and produce other types of outputs (object shape, object contour, etc.) • Geometry + Measurement + Interpretation • To create a model of the real world from images.

Topics • Computer vision system • Image enhancement • Image analysis • Pattern Classification

Related fields • Image processing – Transformation of images into other images – Image compression, image enhancement – Useful in early stages of a machine vision system • Computer graphics • Pattern recognition • Artificial intelligence • Psychophysics

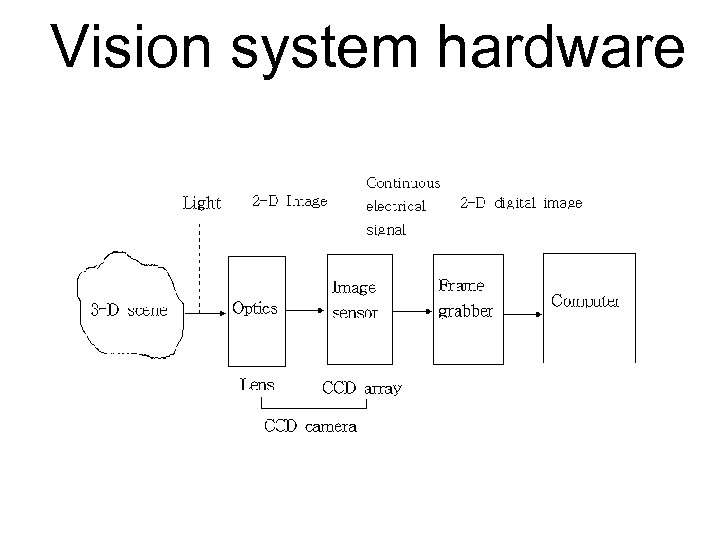

Vision system hardware

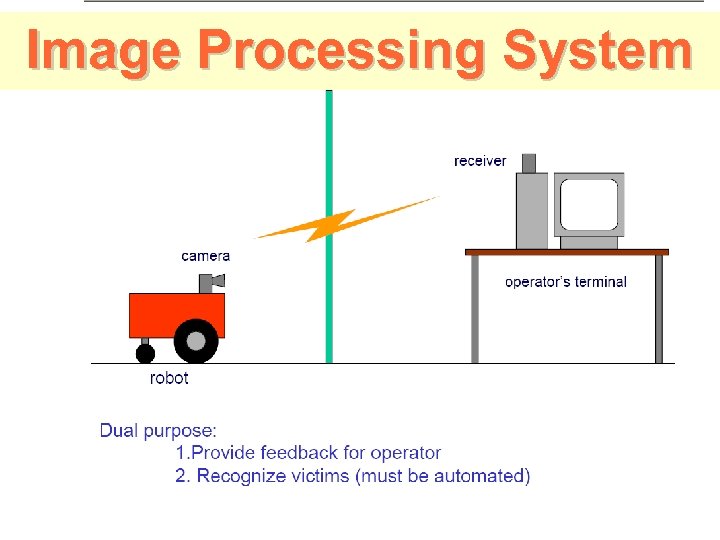

Image Processing System

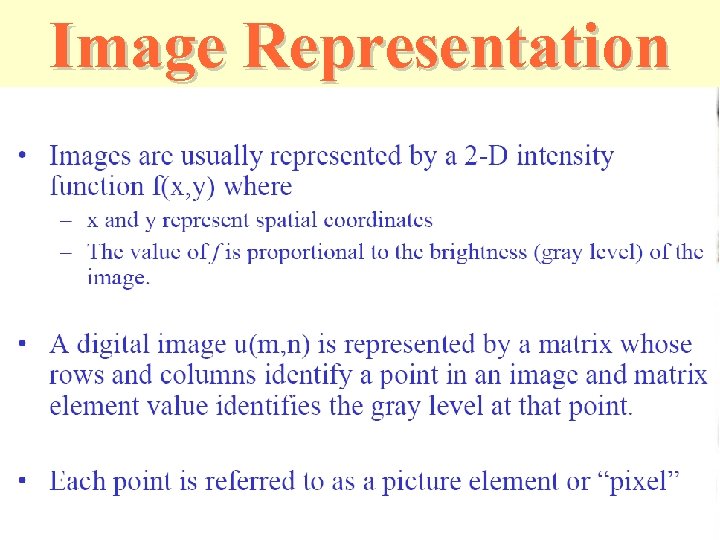

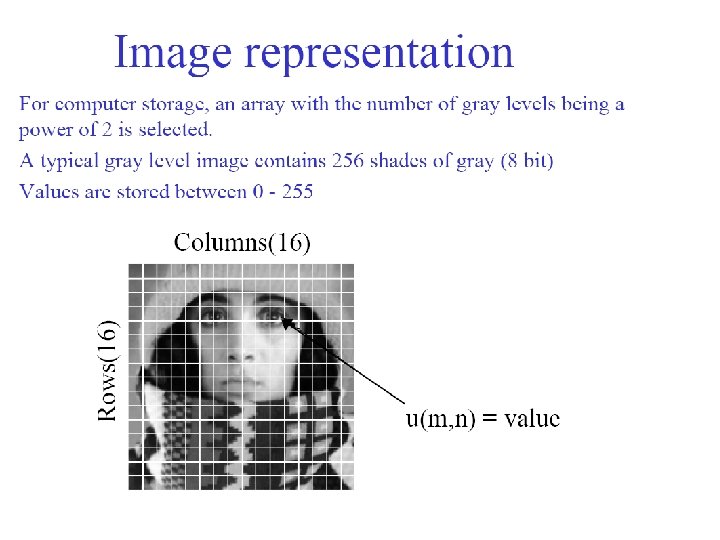

Image Representation

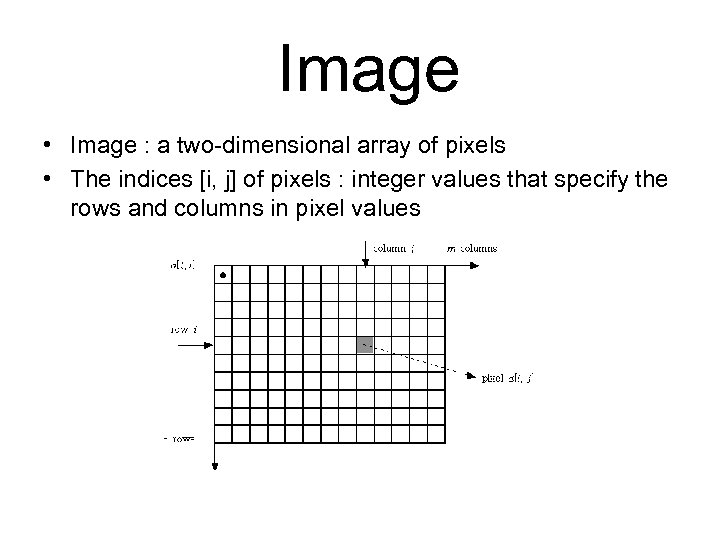

Image • Image: a two-dimensional array of pixels • The indices [i, j] of a pixel: integer values that specify its row and column in the array

Sampling, pixeling and quantization • Sampling – The real image is sampled at a finite number of points. – Sampling rate: image resolution, i.e. how many pixels the digital image will have (e.g. 640 x 480, 320 x 240, etc.) • Pixel – Each image sample – At the sample point, an integer value of the image intensity

• Quantization – Each sample is represented with the finite word size of the computer. – How many intensity levels can be used to represent the intensity value at each sample point – e.g. $2^8 = 256$, $2^5 = 32$, etc.
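Sampling and quantization can be tried out on a toy image. The following is a minimal sketch, not part of the original slides: the helper names `subsample` and `quantize` and the 4 x 4 test image are hypothetical, and 8-bit input intensities are assumed.

```python
def quantize(value, bits, max_value=255):
    """Map an intensity in 0..max_value onto 2**bits levels."""
    levels = 2 ** bits
    # Integer arithmetic keeps the result in 0 .. levels - 1.
    return value * levels // (max_value + 1)


def subsample(image, step):
    """Keep every `step`-th pixel in both directions (a lower sampling rate)."""
    return [row[::step] for row in image[::step]]


# A tiny 4 x 4 "image": image[i][j] is the intensity at row i, column j.
image = [
    [0, 64, 128, 255],
    [10, 70, 130, 250],
    [20, 80, 140, 240],
    [30, 90, 150, 230],
]

coarse = subsample(image, 2)  # 2 x 2 image
five_bit = [[quantize(p, 5) for p in row] for row in image]  # 32 levels
print(coarse)
print(five_bit[0])
```

Halving the sampling rate quarters the number of pixels, while dropping from 8 to 5 bits reduces the number of distinguishable intensities from 256 to 32 without changing the resolution.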

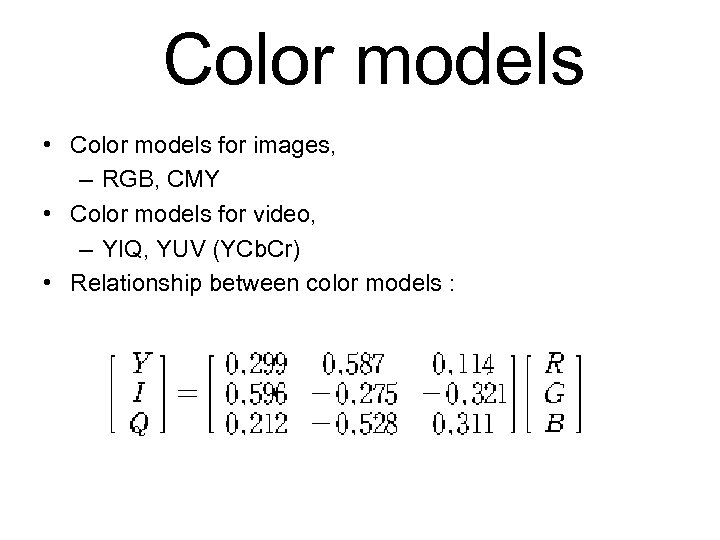

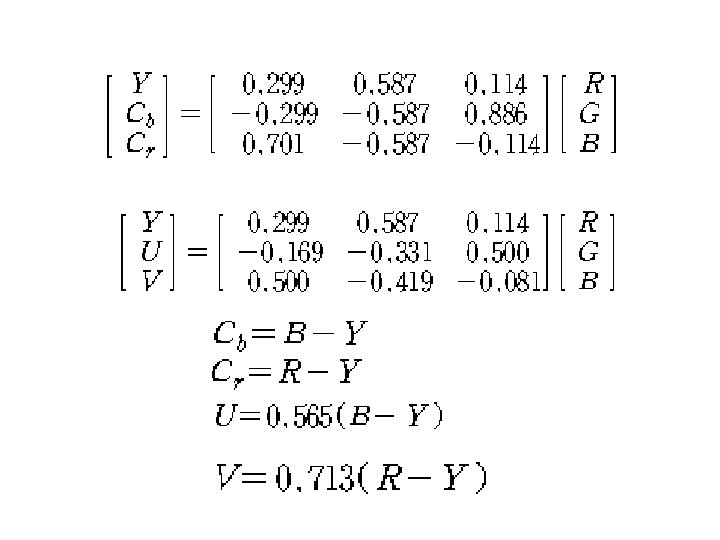

Color models • Color models for images: – RGB, CMY • Color models for video: – YIQ, YUV (YCbCr) • Relationship between color models:

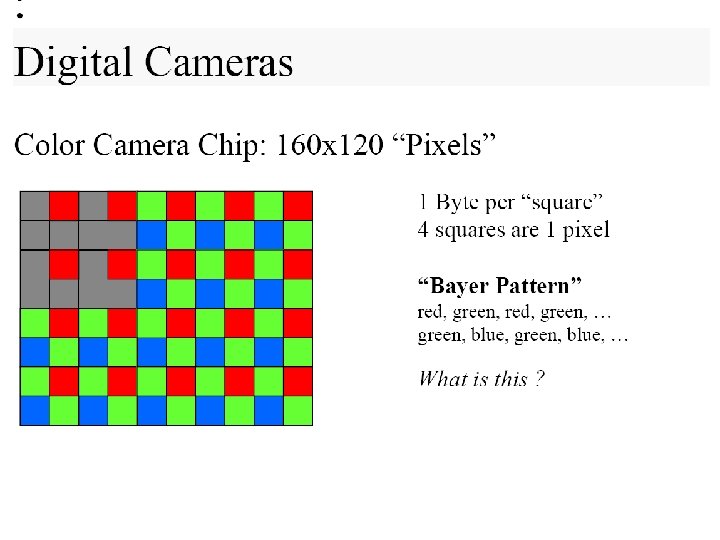
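The relationships between these color models are simple linear maps. The sketch below is an illustration under assumptions: it uses the commonly quoted complement rule for CMY and the BT.601 luma weights for YUV, which may differ from the exact coefficients in the figure; the function names are hypothetical and all components are taken to lie in 0.0 to 1.0.

```python
def rgb_to_cmy(r, g, b):
    """CMY is the complement of RGB (components in 0.0 .. 1.0)."""
    return 1.0 - r, 1.0 - g, 1.0 - b


def rgb_to_yuv(r, g, b):
    """Luma Y plus two colour-difference signals U and V (BT.601 weights)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v


print(rgb_to_cmy(1.0, 0.0, 0.0))  # pure red
print(rgb_to_yuv(1.0, 1.0, 1.0))  # white: full luma, (almost) no colour difference
```

The video models separate brightness from color, which is why a grey or white pixel ends up with U and V close to zero.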

6.7 Digital Cameras

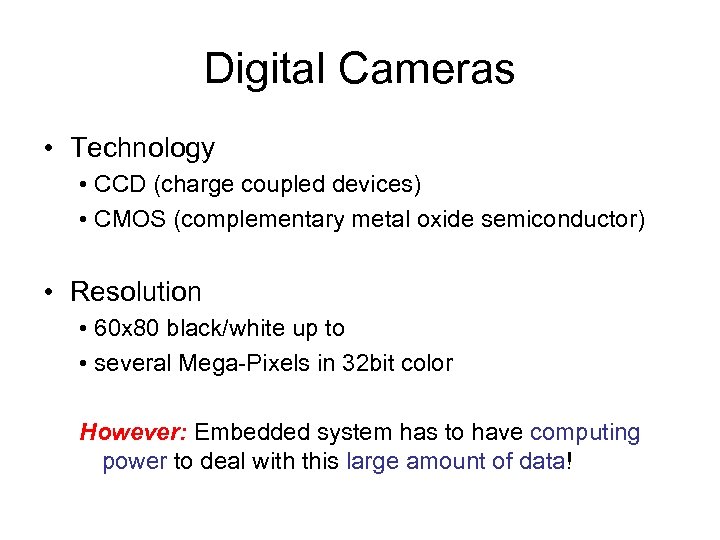

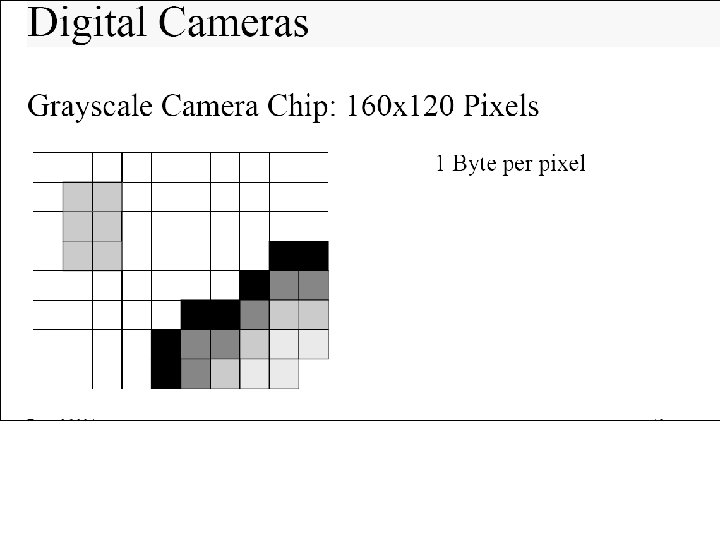

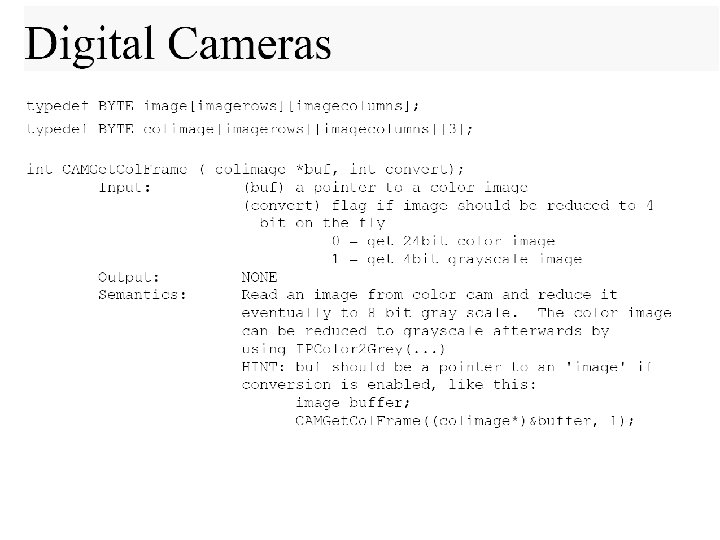

Digital Cameras • Technology – CCD (charge coupled devices) – CMOS (complementary metal oxide semiconductor) • Resolution – From 60 x 80 black/white up to several megapixels in 32-bit color • However: the embedded system has to have the computing power to deal with this large amount of data!
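To get a feeling for "this large amount of data", the raw data rate can be estimated with a few multiplications. This is a rough sketch with assumed figures: the slides give no frame rate or bit depth for the small sensor, so 30 frames per second, 8-bit grey values, and a 1280 x 1024 sensor are used purely for illustration.

```python
def bytes_per_second(width, height, bits_per_pixel, fps):
    """Raw (uncompressed) data rate of a camera stream in bytes per second."""
    return width * height * bits_per_pixel * fps // 8


# Assumed figures: 8-bit grey for the small sensor, 1280 x 1024 as a
# low-end megapixel sensor, and 30 frames per second for both.
small = bytes_per_second(80, 60, 8, 30)
large = bytes_per_second(1280, 1024, 32, 30)
print(small, large, large // small)
```

Under these assumptions the megapixel color stream carries roughly a thousand times more data per second than the small black/white one.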

Vision (camera + framegrabber)

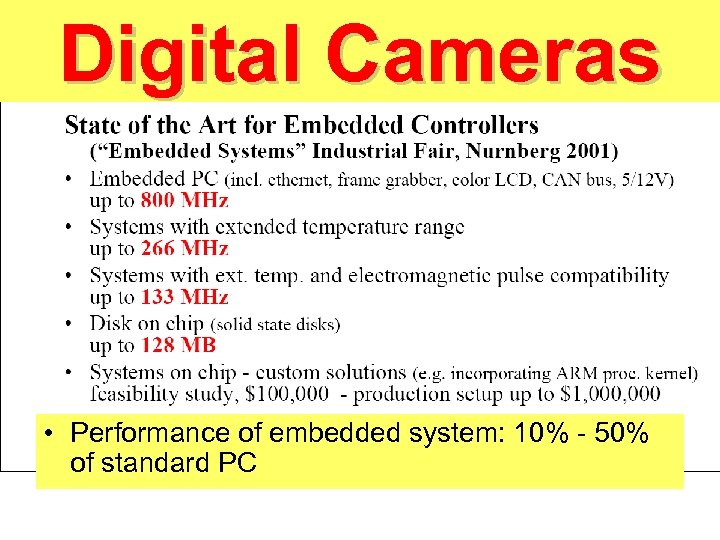

Digital Cameras • Performance of embedded system: 10% - 50% of standard PC

Interfacing Digital Cameras to CPU • Interfacing to CPU: • Completely depends on sensor chip specs • Many sensors provide several different interfacing protocols • versatile in hardware design • software gets very complicated • Typically: 8 bit parallel (or 4, 16, serial) • Numerous control signals required

Interfacing Digital Cameras to CPU • Digital camera sensors are very complex units. – In many respects they are themselves similar to an embedded controller chip. • Some sensors buffer camera data and allow slow reading via handshake (ideal for slow microprocessors) • Most sensors send the full image as a stream after a start signal – (CPU must be fast enough to read it, or use a hardware buffer or DMA) • We will not go into further details in this course. However, we do consider camera access routines.

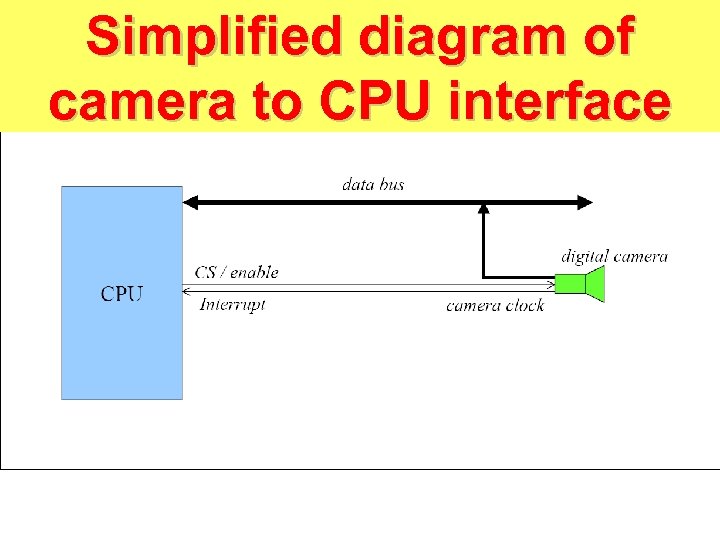

Simplified diagram of camera to CPU interface

Problem with Digital Cameras • Problem • Every pixel from the camera causes an interrupt • Interrupt service routines take long, since they need to store register contents on the stack • Everything is slowed down • Solution • Use RAM buffer for image and read full image with single interrupt

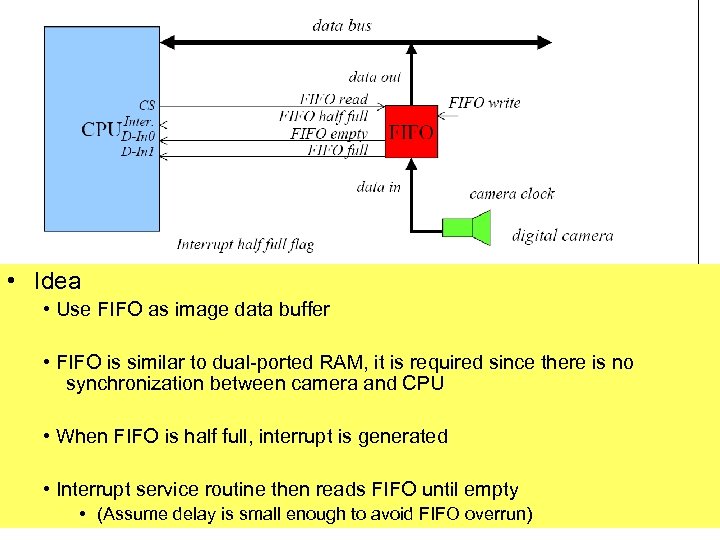

• Idea • Use FIFO as image data buffer • FIFO is similar to dual-ported RAM, it is required since there is no synchronization between camera and CPU • When FIFO is half full, interrupt is generated • Interrupt service routine then reads FIFO until empty • (Assume delay is small enough to avoid FIFO overrun)
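The half-full interrupt scheme can be modelled in software to see how many interrupts it saves. The following is a toy simulation, not a driver: `CameraFifo`, `capture`, and the capacity of 64 entries are hypothetical, and the "interrupt" is just a callback that drains the queue.

```python
from collections import deque


class CameraFifo:
    """Toy model of a hardware FIFO between camera and CPU."""

    def __init__(self, capacity, on_half_full):
        self.capacity = capacity
        self.queue = deque()
        self.on_half_full = on_half_full  # stands in for the interrupt line

    def push(self, byte):
        if len(self.queue) >= self.capacity:
            raise OverflowError("FIFO overrun: CPU did not drain in time")
        self.queue.append(byte)
        if len(self.queue) == self.capacity // 2:
            self.on_half_full(self)

    def pop(self):
        return self.queue.popleft()

    def empty(self):
        return not self.queue


def capture(pixels, fifo_capacity=64):
    """Feed pixels through the FIFO; return the image and the interrupt count."""
    image = []
    interrupts = 0

    def isr(fifo):
        # Interrupt service routine: read the FIFO until it is empty.
        nonlocal interrupts
        interrupts += 1
        while not fifo.empty():
            image.append(fifo.pop())

    fifo = CameraFifo(fifo_capacity, isr)
    for p in pixels:
        fifo.push(p)
    # Drain whatever is left after the last pixel of the frame.
    while not fifo.empty():
        image.append(fifo.pop())
    return image, interrupts


frame = list(range(160))
image, interrupts = capture(frame)
print(len(image), interrupts)
```

With one interrupt per pixel this frame would cost 160 interrupts; with a 64-entry FIFO and a half-full trigger the model needs one interrupt per 32 pixels.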

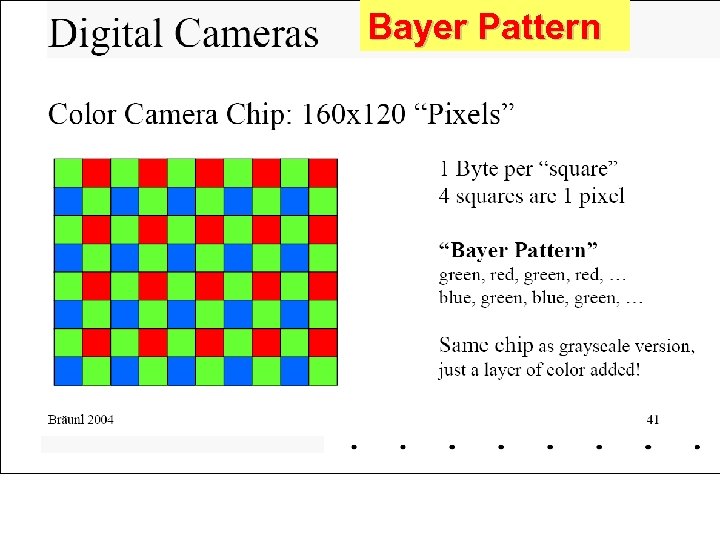

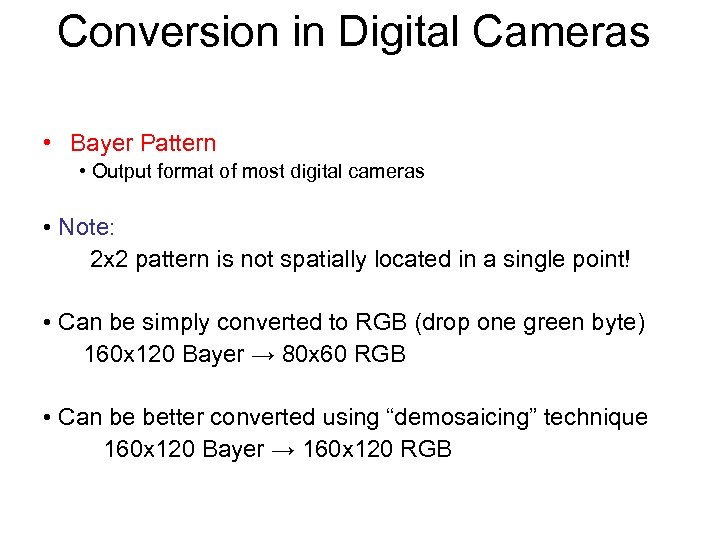

Bayer Pattern

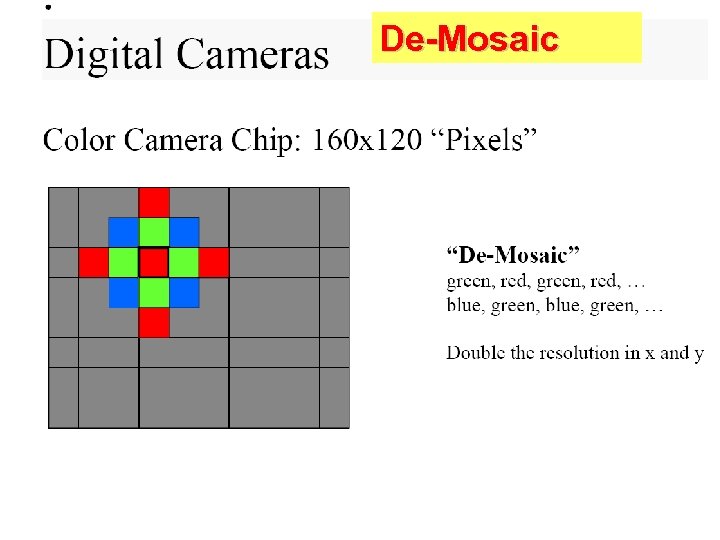

De-Mosaic

Conversion in Digital Cameras • Bayer Pattern – Output format of most digital cameras – Note: the 2 x 2 pattern is not spatially located in a single point! • Can be simply converted to RGB (drop one green byte): 160 x 120 Bayer → 80 x 60 RGB • Can be better converted using a “demosaicing” technique: 160 x 120 Bayer → 160 x 120 RGB
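The simple conversion (one RGB pixel per 2 x 2 Bayer cell, dropping one green byte) might look as follows. This is a sketch under an assumption: the cell layout is taken to be R G on the first row and G B on the second, which the slides do not specify; real sensors use several variants. The function name is hypothetical.

```python
def bayer_to_rgb_simple(bayer):
    """Collapse each 2 x 2 cell (assumed layout R G / G B) into one RGB pixel."""
    height, width = len(bayer), len(bayer[0])
    rgb = []
    for i in range(0, height - 1, 2):
        row = []
        for j in range(0, width - 1, 2):
            r = bayer[i][j]
            g = bayer[i][j + 1]  # the second green, bayer[i + 1][j], is dropped
            b = bayer[i + 1][j + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


# A 160 x 120 Bayer frame (120 rows of 160 bytes) becomes 80 x 60 RGB.
bayer = [[0] * 160 for _ in range(120)]
rgb = bayer_to_rgb_simple(bayer)
print(len(rgb[0]), len(rgb))
```

A demosaicing method would instead interpolate the two missing color components at every sensor position, which keeps the full 160 x 120 resolution at the cost of more computation.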

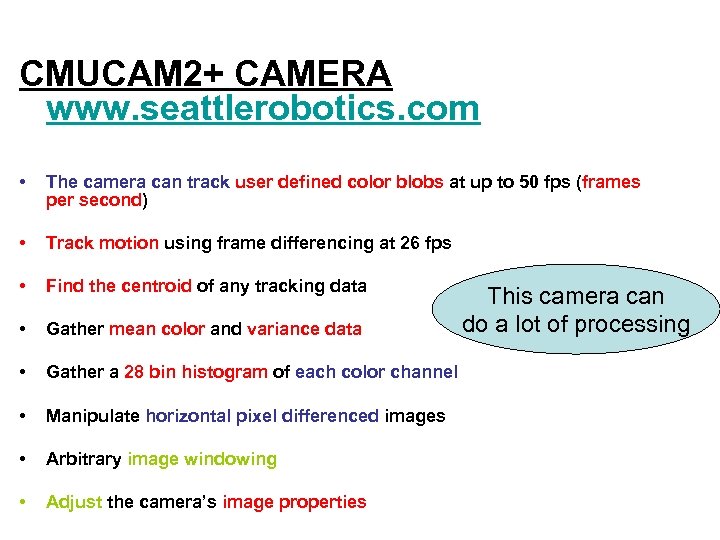

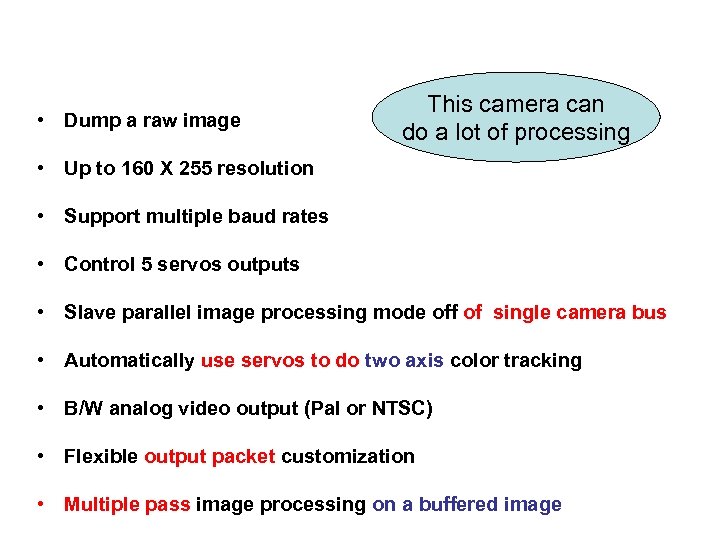

CMUCAM 2+ CAMERA (www.seattlerobotics.com) This camera can do a lot of processing: • Track user-defined color blobs at up to 50 fps (frames per second) • Track motion using frame differencing at 26 fps • Find the centroid of any tracking data • Gather mean color and variance data • Gather a 28 bin histogram of each color channel • Manipulate horizontal pixel differenced images • Arbitrary image windowing • Adjust the camera’s image properties

• Dump a raw image • Up to 160 X 255 resolution • Support multiple baud rates • Control 5 servo outputs • Slave parallel image processing mode off of a single camera bus • Automatically use servos to do two-axis color tracking • B/W analog video output (PAL or NTSC) • Flexible output packet customization • Multiple pass image processing on a buffered image
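The idea behind color blob tracking with a centroid can be shown in a few lines. The sketch below is a generic illustration, not the CMUcam firmware or its serial protocol: `track_color` and the threshold values are hypothetical, and the image is a plain list of rows of (R, G, B) tuples.

```python
def track_color(image, lo, hi):
    """Return (centroid_x, centroid_y, pixel_count) for pixels inside an RGB range."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            # A pixel belongs to the blob if every channel lies within its bounds.
            if all(l <= c <= h for c, l, h in zip(pixel, lo, hi)):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # nothing of that colour in view
    return sum_x / count, sum_y / count, count


# 4 x 3 black image with two reddish pixels in the middle row.
image = [[(0, 0, 0)] * 4 for _ in range(3)]
image[1][1] = (200, 10, 10)
image[1][2] = (220, 20, 5)
print(track_color(image, lo=(150, 0, 0), hi=(255, 50, 50)))
```

The centroid can be fed directly to a pan/tilt controller, which is the principle behind two-axis color tracking with servos.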

Vision Guided Robotics and Applications in Industry and Medicine

Contents • Robotics in General • Industrial Robotics • Medical Robotics • What can Computer Vision do for Robotics? • Vision Sensors • Issues / Problems • Visual Servoing • Application Examples • Summary

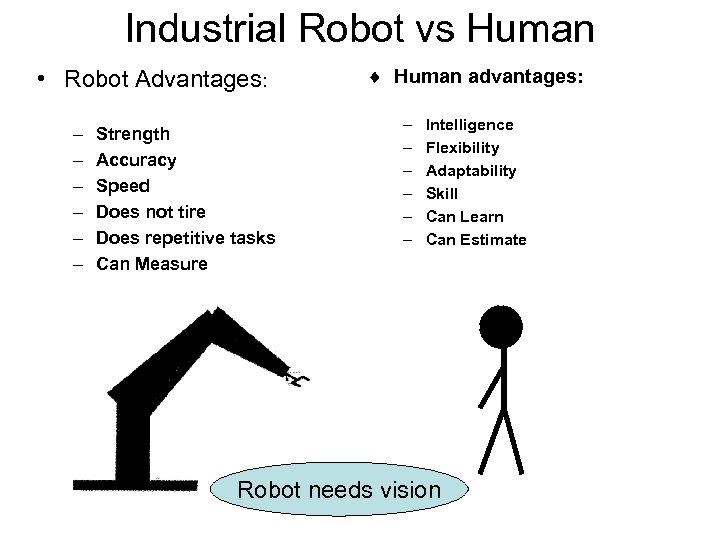

Industrial Robot vs Human • Robot advantages: – Strength – Accuracy – Speed – Does not tire – Does repetitive tasks – Can measure • Human advantages: – Intelligence – Flexibility – Adaptability – Skill – Can learn – Can estimate • Robot needs vision

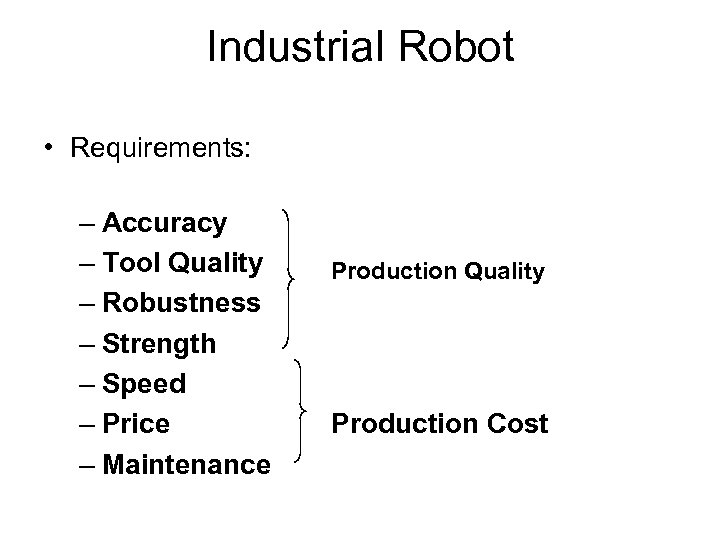

Industrial Robot • Requirements: – Accuracy – Tool Quality – Robustness – Strength – Speed – Price – Maintenance Production Quality Production Cost

Medical (Surgical) Robot • Requirements – Safety – Accuracy – Reliability – Tool Quality – Price – Maintenance – Man-Machine Interface

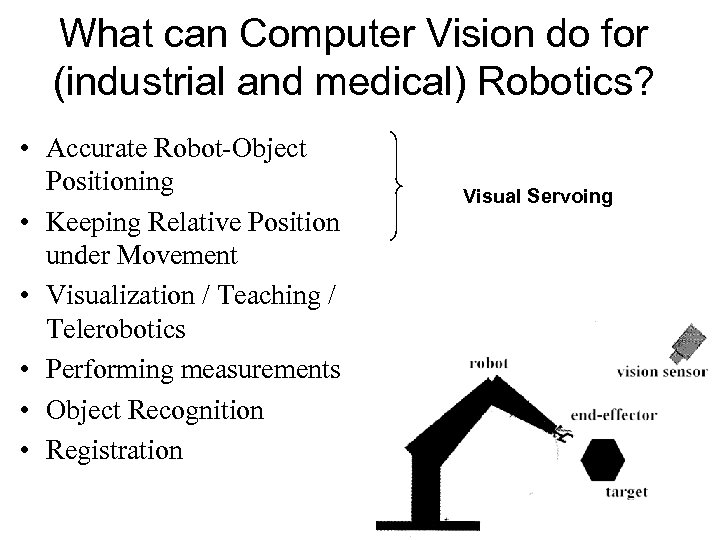

What can Computer Vision do for (industrial and medical) Robotics? • Accurate Robot-Object Positioning • Keeping Relative Position under Movement • Visualization / Teaching / Telerobotics • Performing measurements • Object Recognition • Registration Visual Servoing

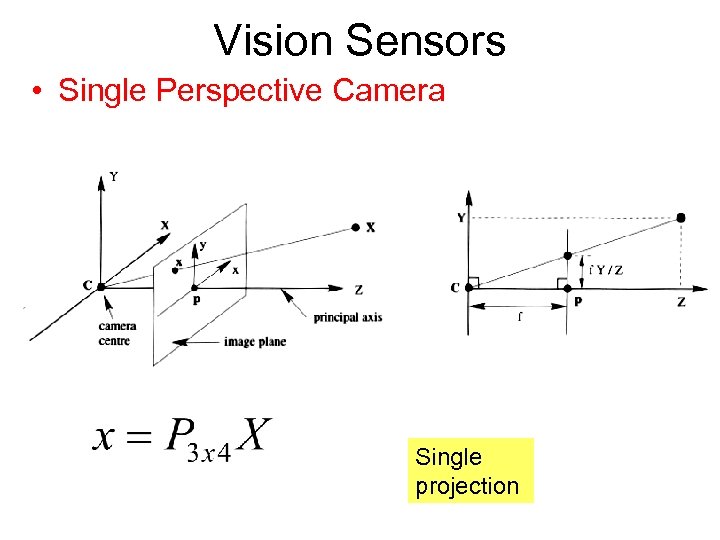

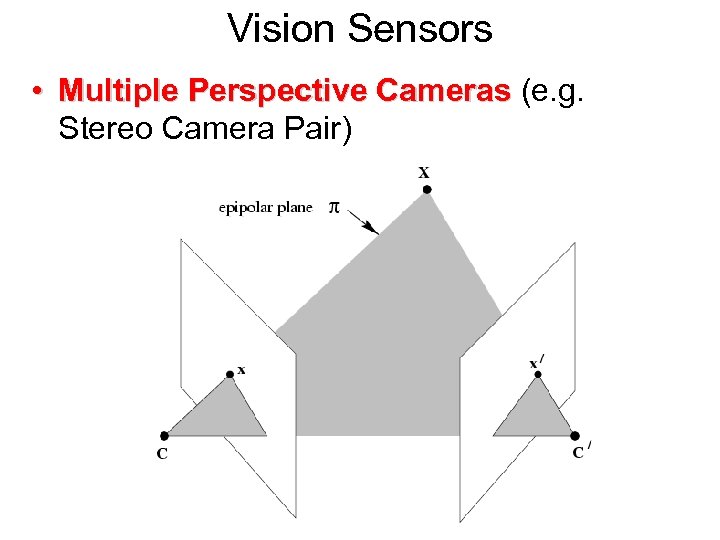

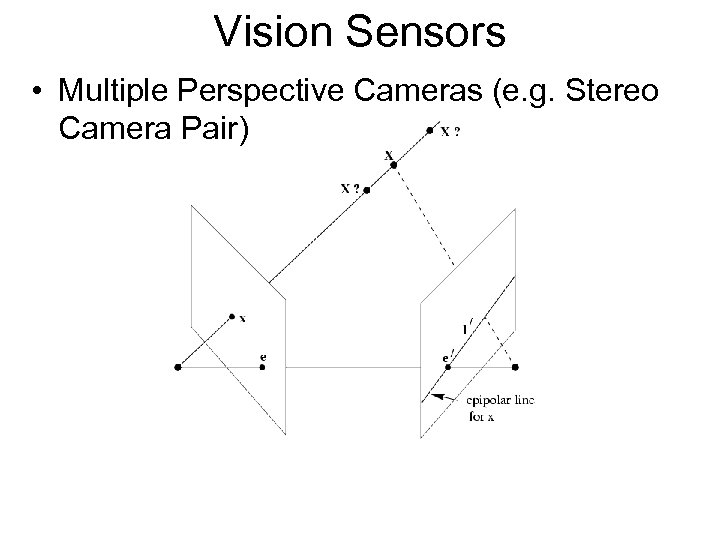

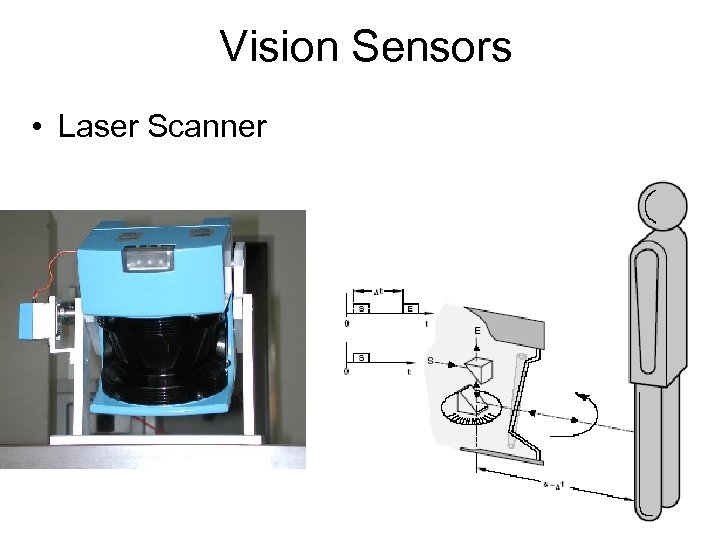

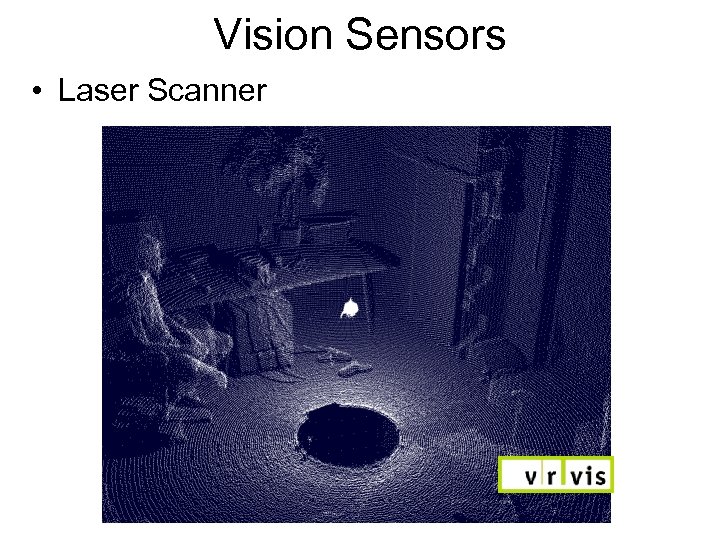

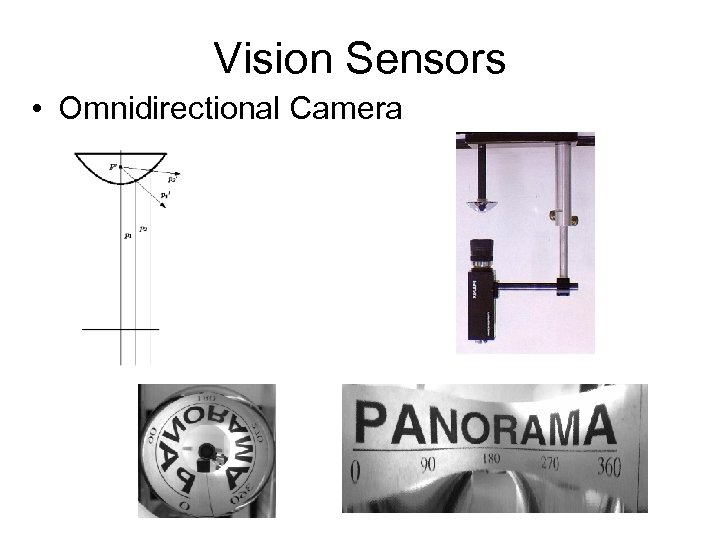

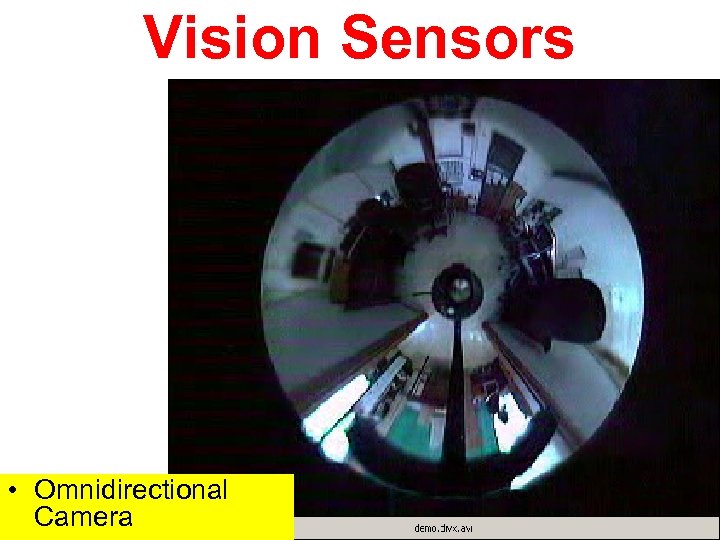

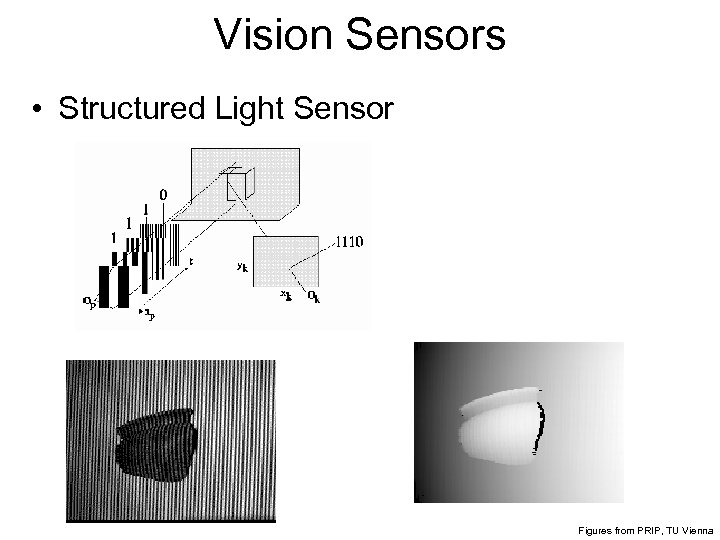

Vision Sensors • Single Perspective Camera • Multiple Perspective Cameras (e.g. Stereo Camera Pair) • Laser Scanner • Omnidirectional Camera • Structured Light Sensor

Vision Sensors • Single Perspective Camera: single projection

Vision Sensors • Multiple Perspective Cameras (e.g. Stereo Camera Pair)

Vision Sensors • Laser Scanner

Vision Sensors • Omnidirectional Camera

Vision Sensors • Structured Light Sensor (Figures from PRIP, TU Vienna)

Issues/Problems of Vision Guided Robotics • Measurement Frequency • Measurement Uncertainty • Occlusion, Camera Positioning • Sensor dimensions

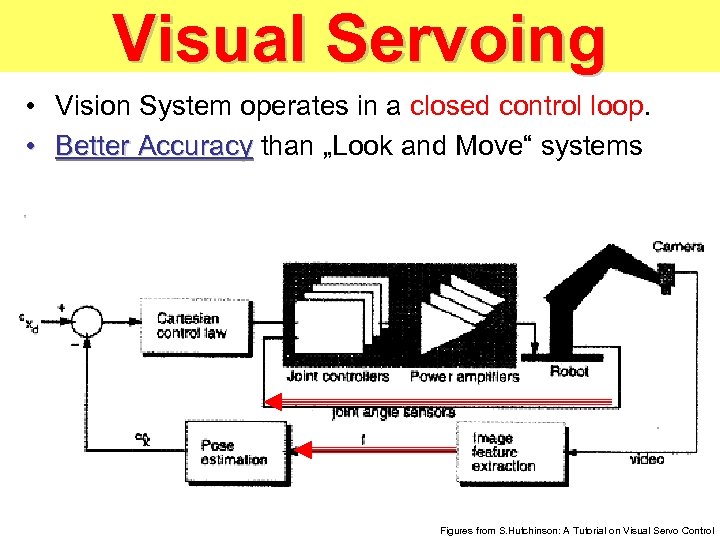

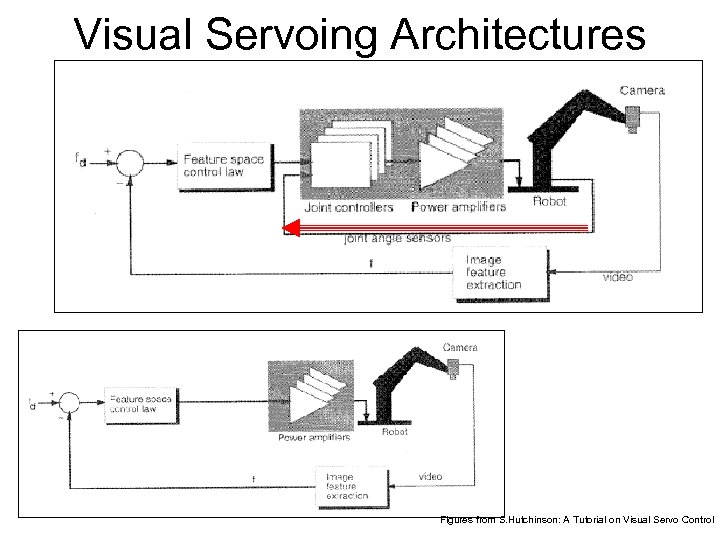

Visual Servoing • Vision system operates in a closed control loop. • Better accuracy than “Look and Move” systems (Figures from S. Hutchinson: A Tutorial on Visual Servo Control)

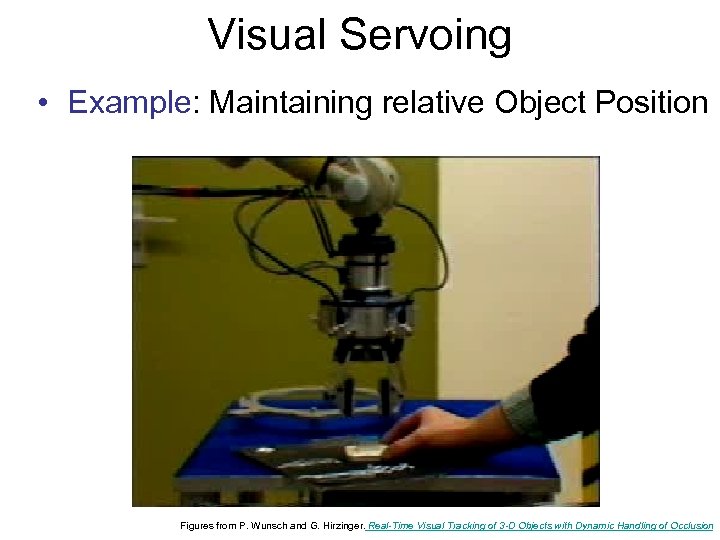

Visual Servoing • Example: Maintaining relative object position (Figures from P. Wunsch and G. Hirzinger: Real-Time Visual Tracking of 3-D Objects with Dynamic Handling of Occlusion)

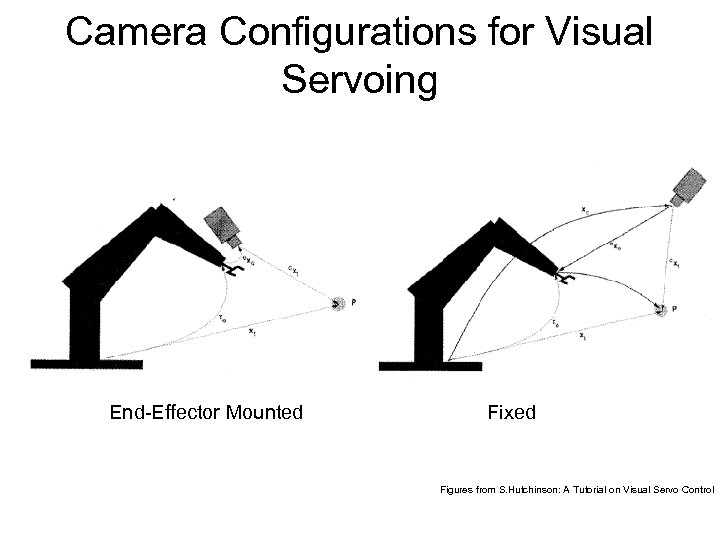

Camera Configurations for Visual Servoing • End-Effector Mounted • Fixed (Figures from S. Hutchinson: A Tutorial on Visual Servo Control)

Visual Servoing Architectures Figures from S. Hutchinson: A Tutorial on Visual Servo Control

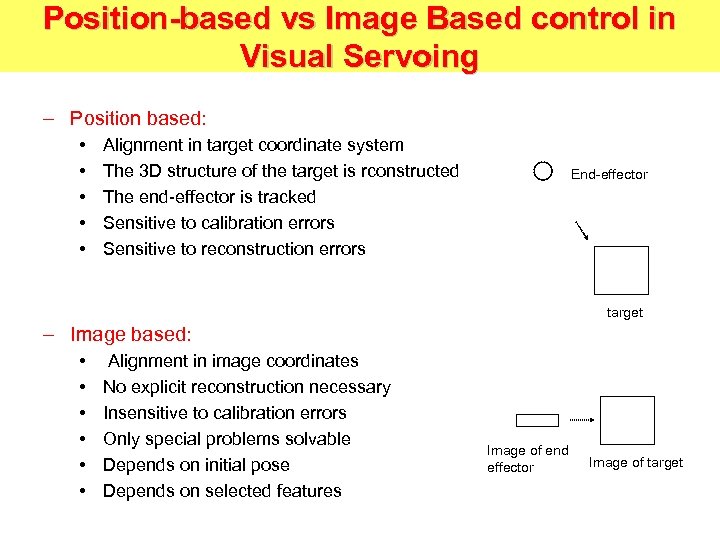

Position-based vs Image Based control in Visual Servoing – Position based: • Alignment in target coordinate system • The 3-D structure of the target is reconstructed • The end-effector is tracked • Sensitive to calibration errors • Sensitive to reconstruction errors (figure labels: end-effector, target) – Image based: • Alignment in image coordinates • No explicit reconstruction necessary • Insensitive to calibration errors • Only special problems solvable • Depends on initial pose • Depends on selected features (figure labels: image of end effector, image of target)

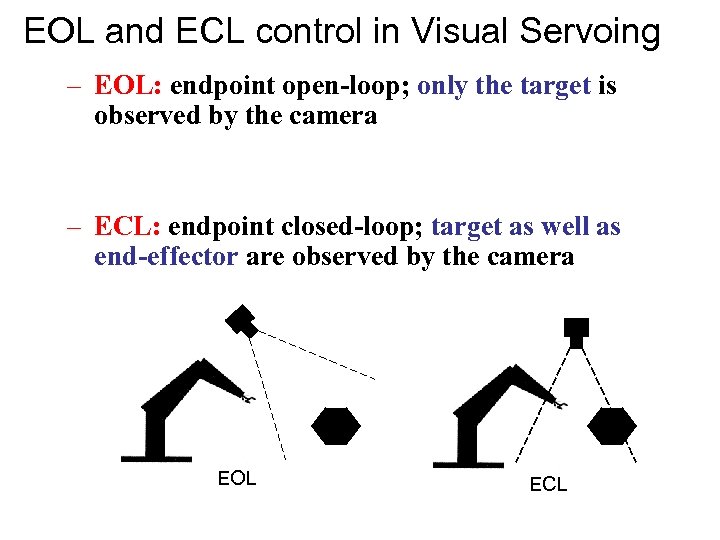

EOL and ECL control in Visual Servoing – EOL: endpoint open-loop; only the target is observed by the camera – ECL: endpoint closed-loop; target as well as end-effector are observed by the camera EOL ECL

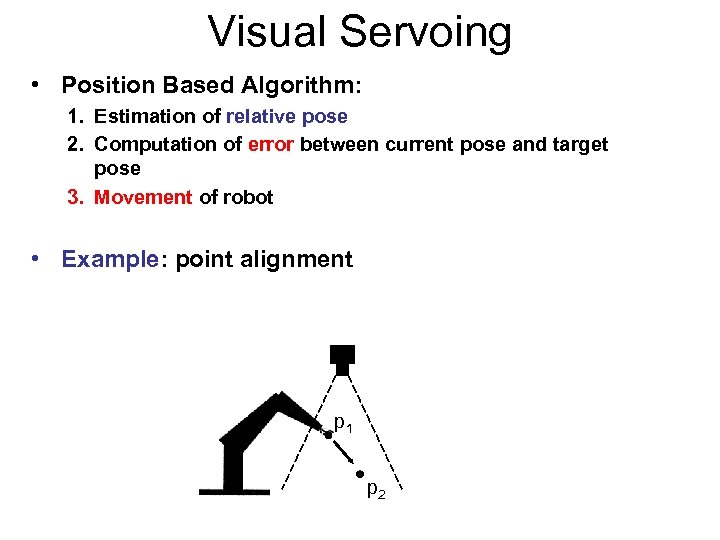

Visual Servoing • Position Based Algorithm: 1. Estimation of relative pose 2. Computation of error between current pose and target pose 3. Movement of robot • Example: point alignment (points p1, p2)

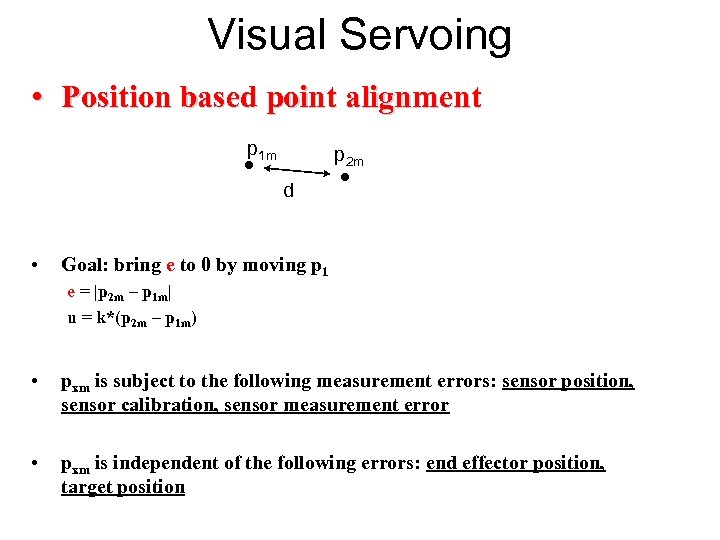

Visual Servoing • Position based point alignment (figure labels: $p_{1m}$, $p_{2m}$, $d$) • Goal: bring $e$ to 0 by moving $p_1$, with $e = |p_{2m} - p_{1m}|$ and $u = k\,(p_{2m} - p_{1m})$ • $p_{xm}$ is subject to the following measurement errors: sensor position, sensor calibration, sensor measurement error • $p_{xm}$ is independent of the following errors: end effector position, target position
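The position-based control law on this slide is a proportional controller, which can be simulated in a few lines. This is a minimal sketch under strong assumptions: the measured positions are treated as exact (no sensor or calibration error), the command u is applied directly as a displacement of p1, and the function names, the gain k = 0.5, and the tolerance are hypothetical.

```python
import math


def error(p1, p2):
    """e = |p2 - p1|, the Euclidean distance between the two points."""
    return math.dist(p1, p2)


def servo_step(p1, p2, k):
    """One control step: u = k * (p2 - p1), applied as a displacement of p1."""
    u = tuple(k * (b - a) for a, b in zip(p1, p2))
    return tuple(a + du for a, du in zip(p1, u))


def align(p1, p2, k=0.5, tol=1e-3, max_steps=100):
    """Repeat measure / compute error / move until e is below tol."""
    steps = 0
    while error(p1, p2) > tol and steps < max_steps:
        p1 = servo_step(p1, p2, k)
        steps += 1
    return p1, steps


final, steps = align((0.0, 0.0, 0.0), (1.0, 2.0, 2.0))
print(final, steps, error(final, (1.0, 2.0, 2.0)))
```

With 0 < k < 1 the error shrinks by a factor of (1 - k) per step in this idealized model; in a real system the measurement errors listed on the slide limit the accuracy that can be reached.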

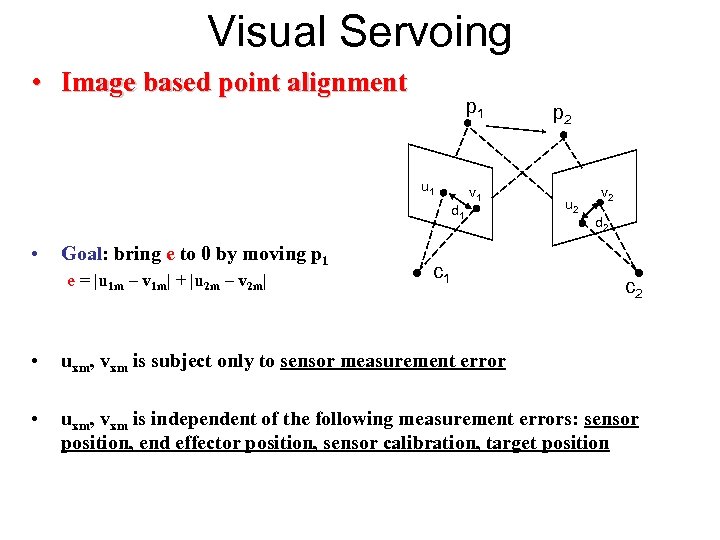

Visual Servoing • Image based point alignment (figure labels: $p_1$, $p_2$, $u_1$, $v_1$, $u_2$, $v_2$, $d_1$, $d_2$, $c_1$, $c_2$) • Goal: bring $e$ to 0 by moving $p_1$, with $e = |u_{1m} - v_{1m}| + |u_{2m} - v_{2m}|$ • $u_{xm}$, $v_{xm}$ are subject only to sensor measurement error • $u_{xm}$, $v_{xm}$ are independent of the following measurement errors: sensor position, end effector position, sensor calibration, target position
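The image-based error is computed purely from image coordinates in the two camera views. The sketch below illustrates this with an idealized pinhole model; the camera placement (two cameras on the x axis looking along +z), the focal length, and the function names are assumptions made for the example and are not taken from the slides.

```python
import math


def project(point, cam_x, f=1.0):
    """Pinhole projection for a camera at (cam_x, 0, 0) looking along +z."""
    x, y, z = point
    return (f * (x - cam_x) / z, f * y / z)


def image_error(p1, p2, cam_xs=(-0.1, 0.1)):
    """e = |u1 - v1| + |u2 - v2|: image distance of p1 and p2, summed over cameras."""
    return sum(math.dist(project(p1, c), project(p2, c)) for c in cam_xs)


print(image_error((0.0, 0.0, 1.0), (0.0, 0.0, 1.0)))  # points coincide
print(image_error((0.0, 0.0, 1.0), (0.2, 0.0, 1.0)))  # points 0.2 apart in x
```

The controller only needs the two image points to coincide in both views, so the 3-D positions are never reconstructed explicitly.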

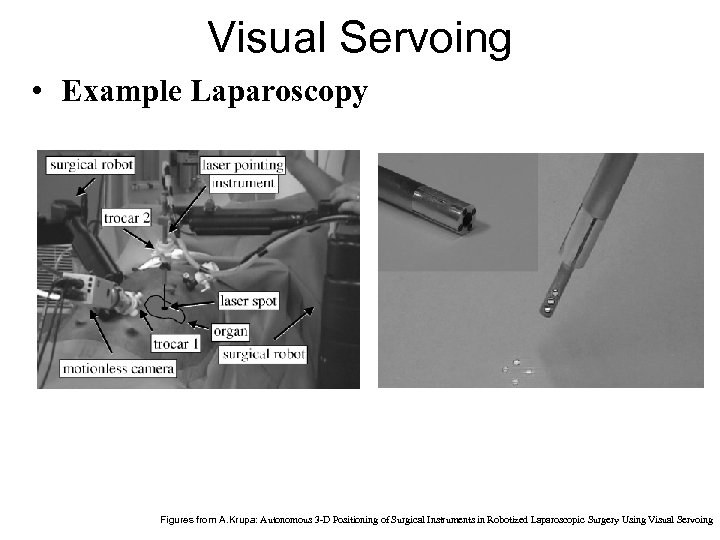

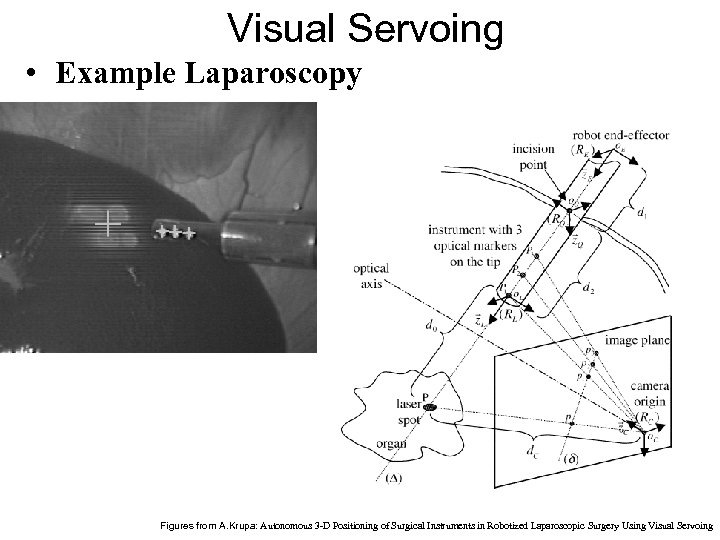

Visual Servoing • Example: Laparoscopy (Figures from A. Krupa: Autonomous 3-D Positioning of Surgical Instruments in Robotized Laparoscopic Surgery Using Visual Servoing)

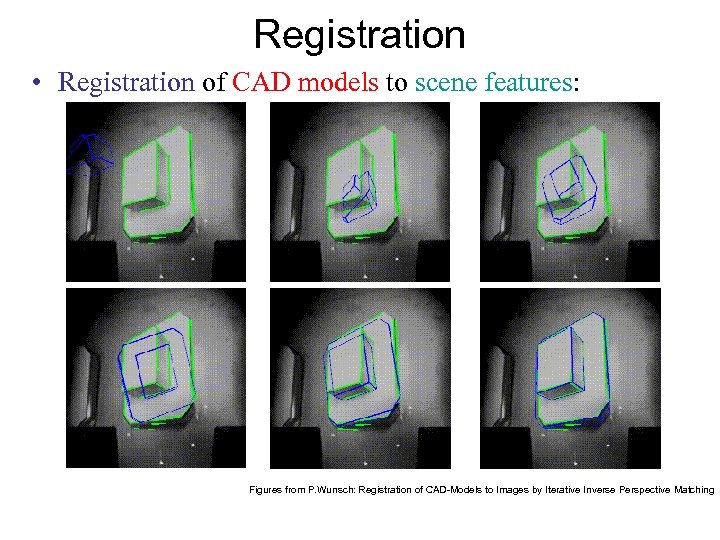

Registration • Registration of CAD models to scene features: Figures from P. Wunsch: Registration of CAD-Models to Images by Iterative Inverse Perspective Matching

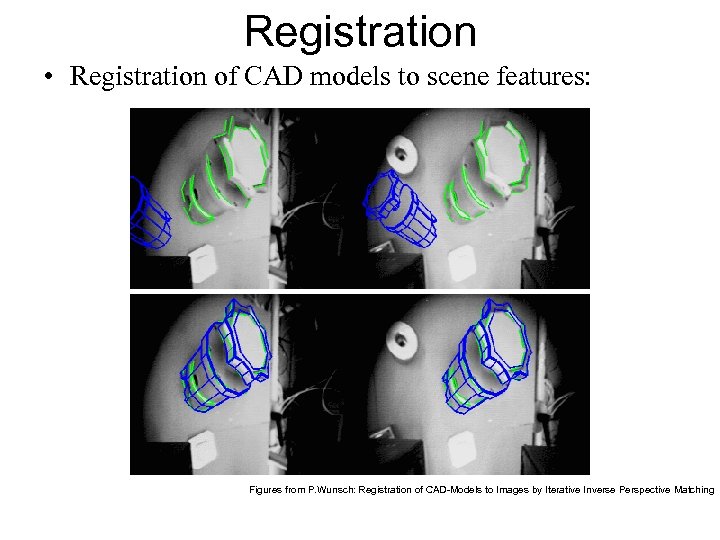

Summary on tracking and servoing • Computer vision provides accurate and versatile measurements for robotic manipulators. • With current general purpose hardware, depth and pose measurements can be performed in real time. • In industrial robotics, vision systems are deployed in a fully automated way. • In medicine, computer vision can make more intelligent “surgical assistants” possible.

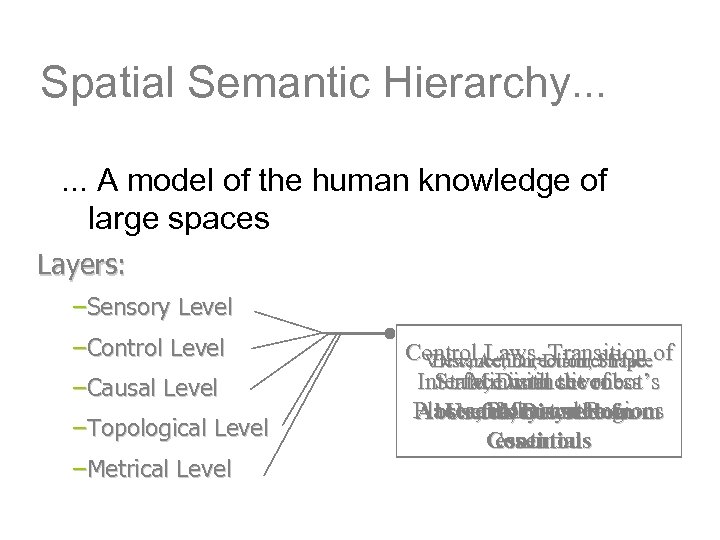

Omnidirectional Vision Systems: CABOTO • Robot’s task: building a topological map of an unknown environment • Sensor: omnidirectional vision system • Work’s aim: prove the effectiveness of omnidirectional sensors for the Spatial Semantic Hierarchy (SSH)

Spatial Semantic Hierarchy: a model of the human knowledge of large spaces • Layers: – Sensory Level: interface with the robot’s sensory system – Control Level: control laws, transition of state, distinctiveness measure – Causal Level: view, action, distinct place; abstracts discrete from continuous – Topological Level: minimal set of places, paths and regions – Metrical Level: distance, direction, shape; useful, but seldom essential

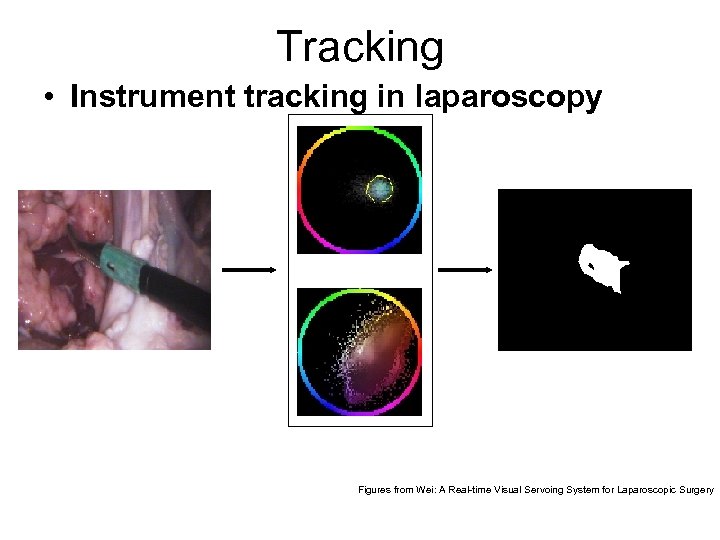

Tracking • Instrument tracking in laparoscopy Figures from Wei: A Real-time Visual Servoing System for Laparoscopic Surgery

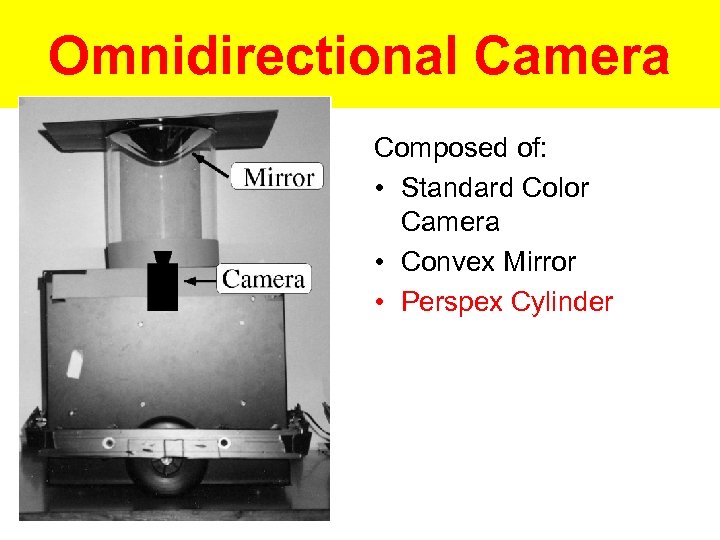

Omnidirectional Camera Composed of: • Standard Color Camera • Convex Mirror • Perspex Cylinder

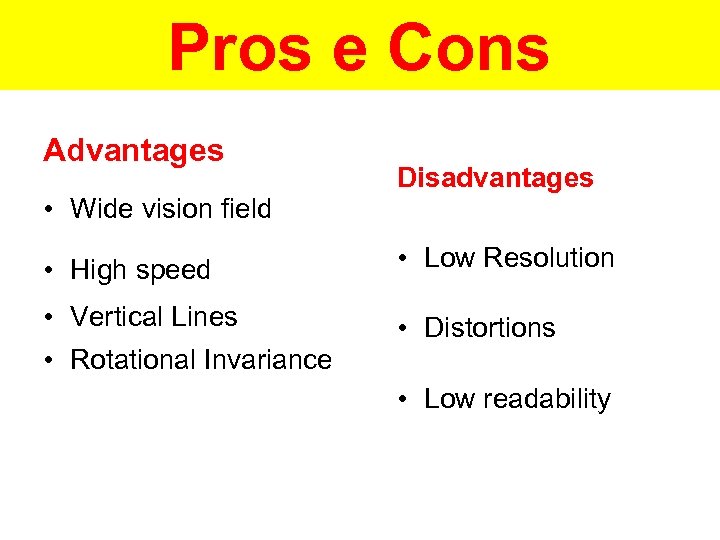

Pros and Cons • Advantages: – Wide vision field – High speed – Vertical lines – Rotational invariance • Disadvantages: – Low resolution – Distortions – Low readability

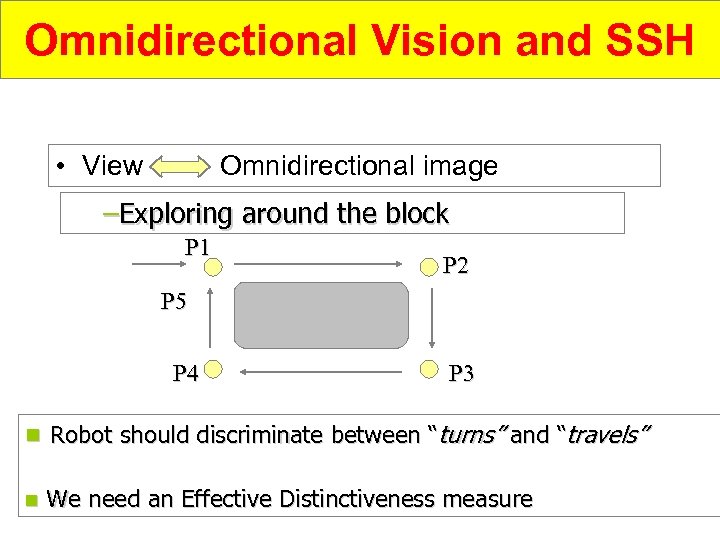

Omnidirectional Vision and SSH • View: omnidirectional image • Exploring around the block (places P1 to P5) • Robot should discriminate between “turns” and “travels” • We need an effective distinctiveness measure

Assumptions for vision system • Man-made environment • Floor flat and horizontal • Wall and object surfaces are vertical • Static objects • Constant lighting • Robot translates or rotates • No encoders

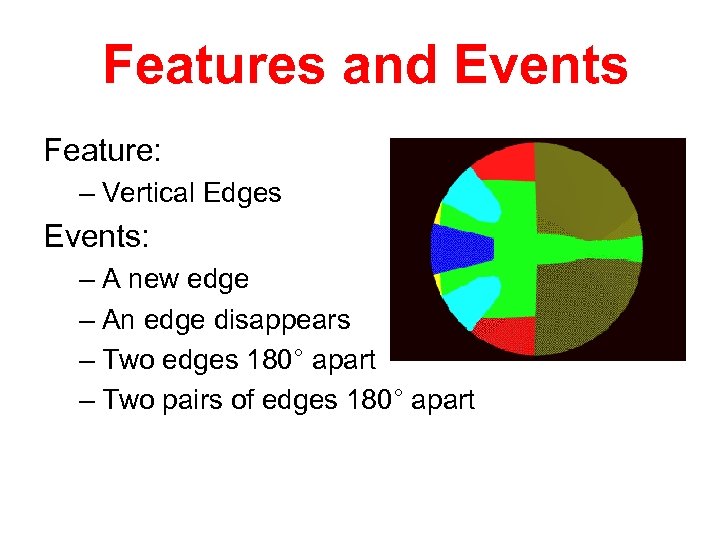

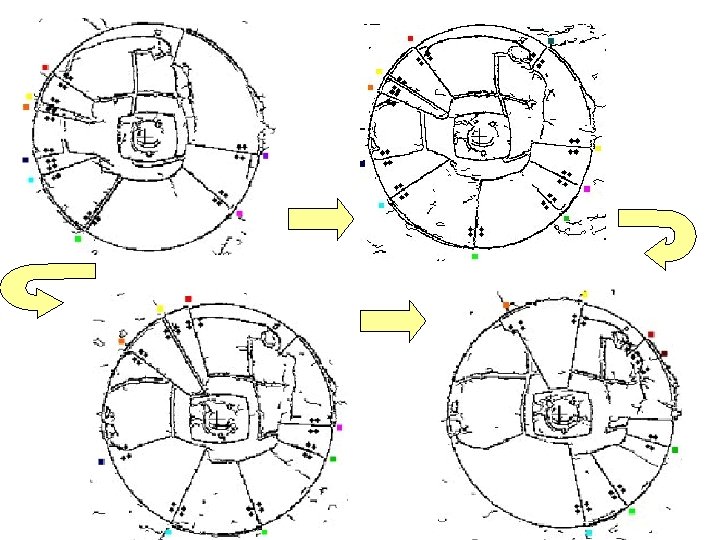

Features and Events Feature: – Vertical Edges Events: – A new edge – An edge disappears – Two edges 180° apart – Two pairs of edges 180° apart
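The "180° apart" events can be detected once each vertical edge is reduced to a bearing angle in the omnidirectional image. The following is a hypothetical sketch, not the Caboto implementation: it assumes edges are given as bearings in degrees, and the function name and the 2° tolerance are illustrative choices.

```python
def opposite_pairs(bearings, tol=2.0):
    """Return index pairs of edges whose bearings differ by 180 degrees (within tol)."""
    pairs = []
    for i in range(len(bearings)):
        for j in range(i + 1, len(bearings)):
            diff = abs(bearings[i] - bearings[j]) % 360.0
            diff = min(diff, 360.0 - diff)  # smallest angle between the two edges
            if abs(diff - 180.0) <= tol:
                pairs.append((i, j))
    return pairs


# Bearings (degrees) of four vertical edges seen in one omnidirectional image.
edges = [10.0, 95.0, 190.5, 275.0]
print(opposite_pairs(edges))
```

One pair in the result corresponds to the event "two edges 180° apart", two pairs to "two pairs of edges 180° apart"; detecting new and disappearing edges would additionally require tracking edges between frames.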

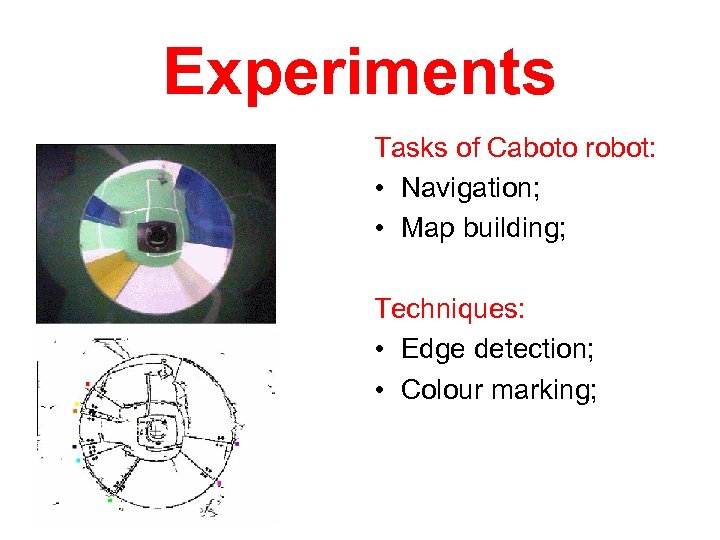

Experiments Tasks of Caboto robot: • Navigation; • Map building; Techniques: • Edge detection; • Colour marking;

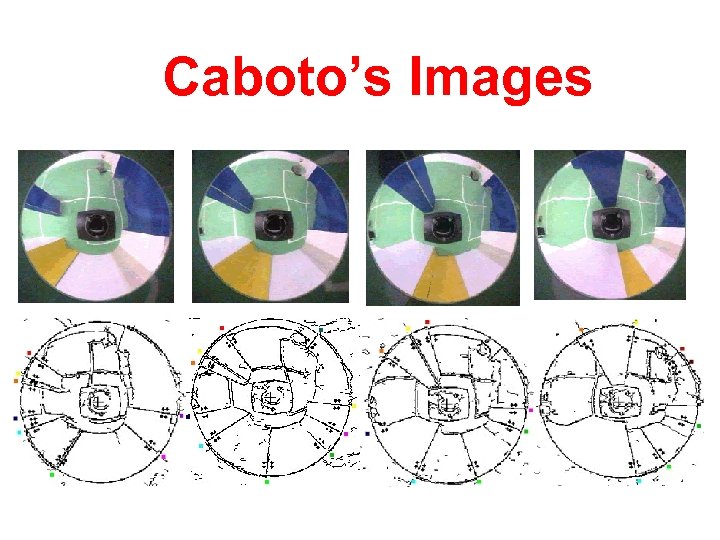

Caboto’s Images

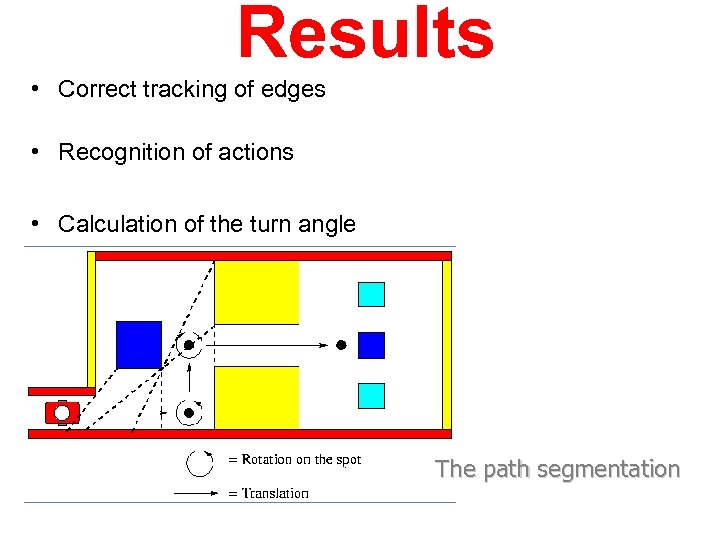

Results • Correct tracking of edges • Recognition of actions • Calculation of the turn angle • The path segmentation

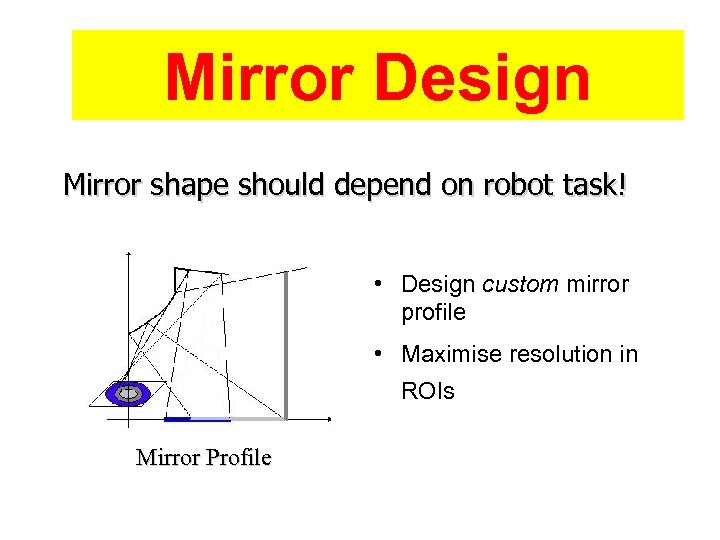

Mirror Design Mirror shape should depend on robot task! • Design custom mirror profile • Maximise resolution in ROIs Mirror Profile

The new mirror

Conclusion on Omnivision camera • Omnidirectional vision sensor is a good sensor for map building with SSH • Motion of the robot was estimated without active vision • The use of a mirror designed for this application will improve the system

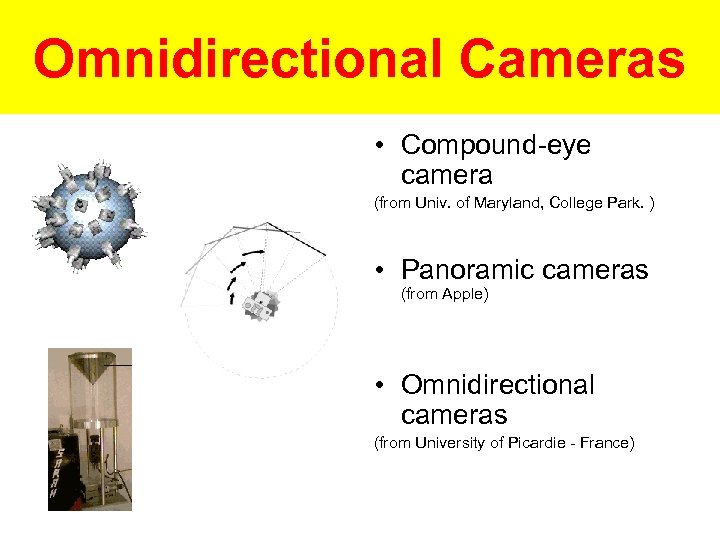

Omnidirectional Cameras • Compound-eye camera (from Univ. of Maryland, College Park) • Panoramic cameras (from Apple) • Omnidirectional cameras (from University of Picardie, France)

Student info. • A percentage of lab marks can be deducted if rules and regulations are not followed, e.g. by not cleaning up your bench or not sliding your chairs back underneath the bench top. • For more technical information on boards, devices and sensors, check out my web page at: www.site.uottawa.ca/~alan • Students are responsible for their own extra parts: if you want to add a sensor or device that the dept. doesn’t have, you are responsible for the purchase and delivery of that part; only on rare occasions has the school purchased such parts. • Keep backpacks off bench tops. • TAs will have student numbers based on station numbers. • An important step in the design of a new project is to do a current analysis before you start your design. • Set up a leader in your team so that you are better organized. • Do not wait: start your project now! • Prepare yourself before coming to the lab. • It doesn’t work? Ask yourself whether it is software or hardware, and use the scope to troubleshoot. • If fuses keep blowing, stop and investigate. • Do not cut any servo, battery or other device wire connectors. If you must, please come and see me. • No design may exceed 50 volts, e.g. do not work with 120 volts AC. • I can give you what I have in recycled metal, wood and plastic pieces, and make some cuts or holes for you with my band saw and drill press. • PLEASE DO NOT ask to borrow my tools. If you need to do a task with a special tool that I have, I will do it for you.

Problems for students 1. Hardware and software components of a vision system for a mobile robot. 2. Image representation for intelligent processing. 3. Sampling, pixeling and quantization. 4. Color models. 5. Types of digital cameras. 6. Interfacing digital cameras to CPU. 7. Problems with cameras. 8. Bayer patterns and conversion. 9. What is good about CMUCAM? 10. Use of vision in industrial robots. 11. Use of multiple-perspective cameras. 12. Use of omnivision cameras. 13. Types of visual servoing. 14. Applications of visual servoing. 15. Visual servoing in surgery. 16. Explain tracking applications of vision.

References • Photos, text and schematics information: • www.acroname.com • www.lynxmotion.com • www.drrobot.com • Alan Stewart • Dr. Gaurav Sukhatme • Thomas Braunl • Students 2002, class 479 • E. Menegatti, M. Wright, E. Pagello
