196c0b86b744f05b50df4c201144953e.ppt

- Number of slides: 24

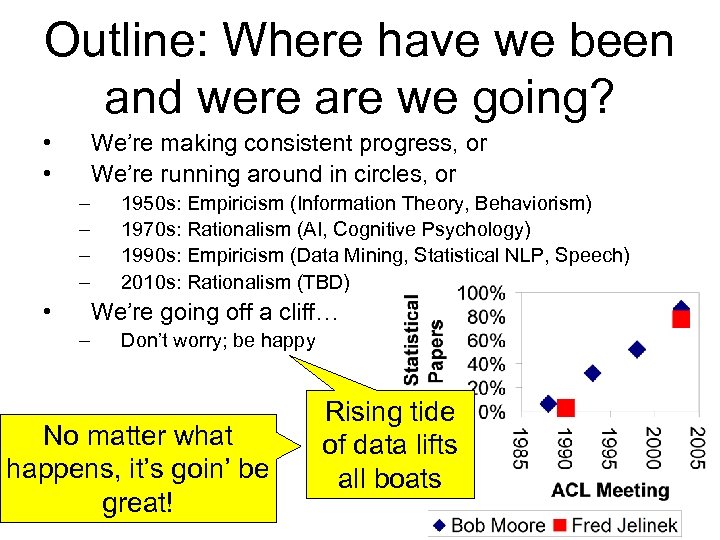

Outline: Where have we been and where are we going?

- We’re making consistent progress, or
- We’re running around in circles, or
  - 1950s: Empiricism (Information Theory, Behaviorism)
  - 1970s: Rationalism (AI, Cognitive Psychology)
  - 1990s: Empiricism (Data Mining, Statistical NLP, Speech)
  - 2010s: Rationalism (TBD)
- We’re going off a cliff…
  - Don’t worry; be happy
    - No matter what happens, it’s goin’ be great!
    - Rising tide of data lifts all boats

Rising Tide of Data Lifts All Boats

If you have a lot of data, then you don’t need a lot of methodology

- 1985: “There is no data like more data”
  - Fighting words uttered by radical fringe elements (Mercer at Arden House)
- 1995: The Web changes everything
- All you need is data (magic sauce)
  - No linguistics
  - No artificial intelligence (representation)
  - No machine learning
  - No statistics
  - No error analysis
  - No data mining
  - No text mining

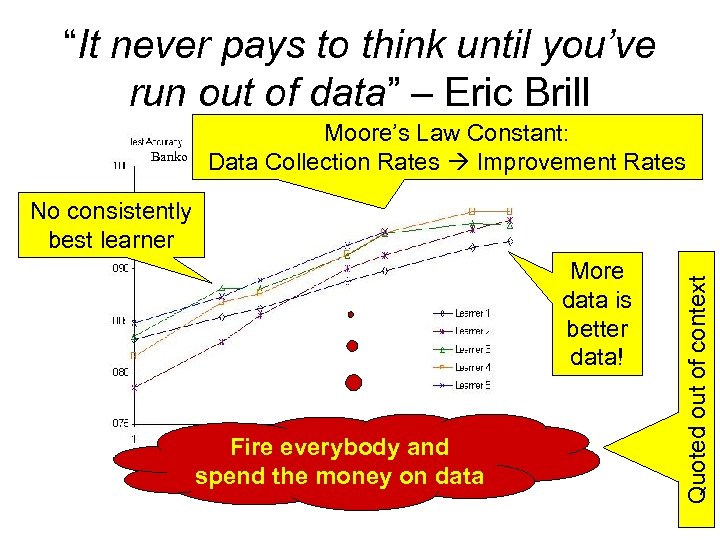

“It never pays to think until you’ve run out of data” – Eric Brill

Banko & Brill: Mitigating the Paucity-of-Data Problem (HLT 2001)

- More data is better data!
- No consistently best learner
- Fire everybody and spend the money on data (quoted out of context)

(Figure labels: Moore’s Law Constant; Data Collection Rates; Improvement Rates)

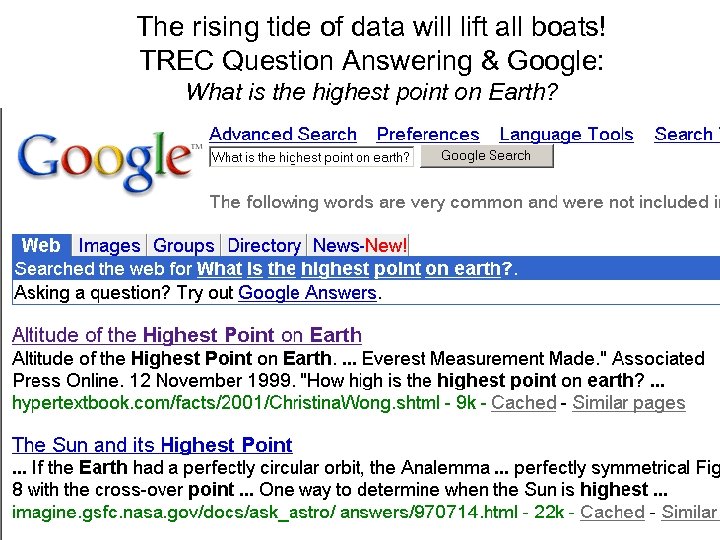

The rising tide of data will lift all boats!

TREC Question Answering & Google: What is the highest point on Earth?

The rising tide of data will lift all boats!

Acquiring Lexical Resources from Data: Dictionaries, Ontologies, WordNets, Language Models, etc.

http://labs1.google.com/sets

| Seed terms | Expanded set |
|---|---|
| England, France, Germany, Italy | Ireland, Spain, Scotland, Belgium, Canada, Austria, Australia |
| Japan, China, India, Indonesia | Malaysia, Korea, Taiwan, Thailand, Singapore, Australia, Bangladesh |
| Cat, Dog, Horse, Fish | Bird, Rabbit, Cattle, Rat, Livestock, Mouse, Human |
| cat, more, ls, rm | mv, cd, cp, mkdir, man, tail, pwd |
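The slide does not describe how Google Sets worked internally. As a hedged illustration of growing a lexical set from data, the sketch below ranks candidate terms by how many "lists" (for example, lists or tables found on web pages) they share with the seed terms. The `expand_set` helper, the scoring rule, and the toy `LISTS` corpus are all hypothetical; note how the ambiguous seed "cat" lands in animal or Unix-command company depending on the other seeds.

```python
from collections import Counter


def expand_set(seeds, lists, top_n=5):
    """Rank non-seed terms by co-occurrence with the seeds in the same list.

    A list only counts as evidence if it contains at least two seeds, and a
    candidate's score is the total seed overlap of the lists it appears in.
    """
    seeds = set(seeds)
    scores = Counter()
    for lst in lists:
        items = set(lst)
        overlap = len(items & seeds)
        if overlap >= 2:  # the list looks like it is "about" the seed set
            for term in items - seeds:
                scores[term] += overlap
    return [term for term, _ in scores.most_common(top_n)]


# Hypothetical toy "web lists"; "cat" appears in both an animal list and a Unix list.
LISTS = [
    ["cat", "dog", "horse", "fish", "bird"],
    ["cat", "dog", "rabbit", "bird"],
    ["dog", "horse", "cattle"],
    ["cat", "ls", "rm", "more", "mv", "cd"],
    ["ls", "rm", "cp", "mkdir"],
]

print(expand_set(["cat", "dog", "horse"], LISTS))  # animal terms only
print(expand_set(["cat", "ls", "rm"], LISTS))      # Unix commands only
```

The "at least two seeds" filter is the design choice doing the disambiguation here: a single shared seed such as "cat" is not enough to pull in a list.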

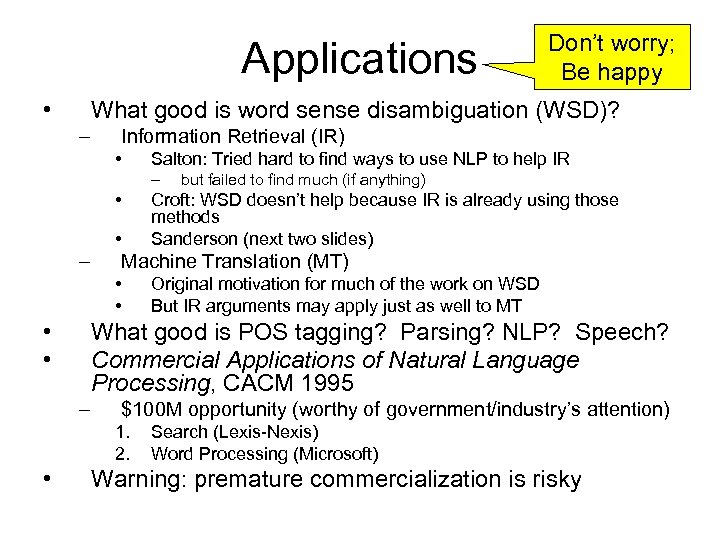

Applications

- What good is word sense disambiguation (WSD)?
  - Information Retrieval (IR)
    - Salton: Tried hard to find ways to use NLP to help IR, but failed to find much (if anything)
    - Croft: WSD doesn’t help because IR is already using those methods
    - Sanderson (next two slides)
  - Machine Translation (MT)
    - Original motivation for much of the work on WSD
    - But IR arguments may apply just as well to MT
- What good is POS tagging? Parsing? NLP? Speech?
- Commercial Applications of Natural Language Processing, CACM 1995
  - $100M opportunity (worthy of government/industry’s attention)
    1. Search (Lexis-Nexis)
    2. Word Processing (Microsoft)
  - Don’t worry; Be happy
  - Warning: premature commercialization is risky

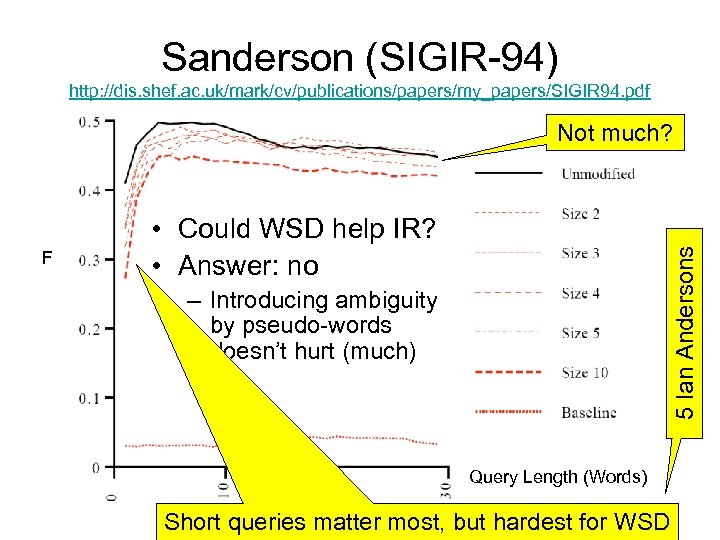

Sanderson (SIGIR-94): http://dis.shef.ac.uk/mark/cv/publications/papers/my_papers/SIGIR94.pdf

- Could WSD help IR?
- Answer: no
  - Introducing ambiguity by pseudo-words doesn’t hurt (much)
- Short queries matter most, but are hardest for WSD

(Figure: F vs. Query Length (Words); annotations: “Not much?”, “5 Ian Andersons”)
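Pseudo-words simulate ambiguity without hand-labeled senses: every occurrence of two or more unrelated words is replaced by one artificial token, so the hidden "sense" of each occurrence is simply the original word. A minimal sketch, assuming whitespace-tokenized text; `make_pseudoword_map` and `add_ambiguity` are hypothetical helper names, and "banana/door" is an illustrative pair, not one taken from Sanderson’s paper.

```python
def make_pseudoword_map(groups):
    """Map each member word to one shared pseudo-word token, e.g. 'banana/door'."""
    mapping = {}
    for group in groups:
        token = "/".join(group)
        for word in group:
            mapping[word] = token
    return mapping


def add_ambiguity(text, mapping):
    """Replace member words with their pseudo-word.

    Returns the new token list plus the hidden 'senses' (the original words),
    which can serve as gold labels when scoring a WSD system.
    """
    tokens, senses = [], []
    for word in text.split():
        if word in mapping:
            tokens.append(mapping[word])
            senses.append(word)
        else:
            tokens.append(word)
    return tokens, senses


mapping = make_pseudoword_map([("banana", "door")])
tokens, senses = add_ambiguity("open the door and eat the banana", mapping)
print(tokens)  # ['open', 'the', 'banana/door', 'and', 'eat', 'the', 'banana/door']
print(senses)  # ['door', 'banana']
```

In an IR experiment along these lines, the same mapping would be applied to both the documents and the queries, and retrieval effectiveness compared with and without the added ambiguity.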

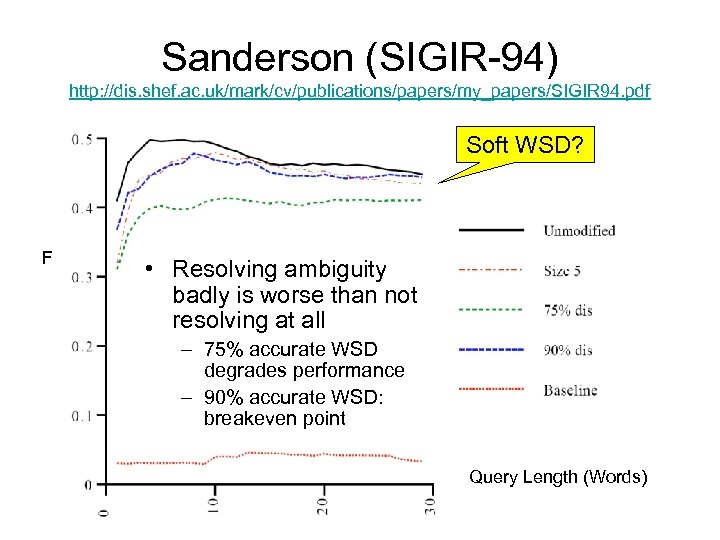

Sanderson (SIGIR-94): http://dis.shef.ac.uk/mark/cv/publications/papers/my_papers/SIGIR94.pdf

- Resolving ambiguity badly is worse than not resolving it at all
  - 75% accurate WSD degrades performance
  - 90% accurate WSD: breakeven point

(Figure: F vs. Query Length (Words); annotation: “Soft WSD?”)

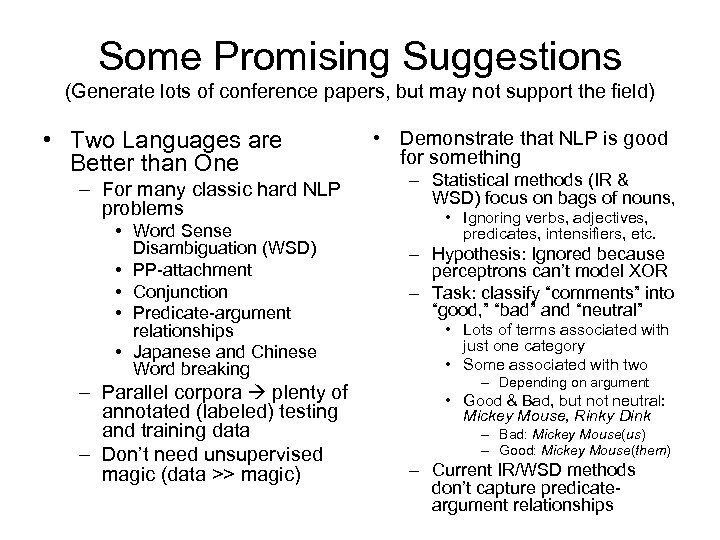

Some Promising Suggestions (Generate lots of conference papers, but may not support the field)

- Two Languages are Better than One
  - For many classic hard NLP problems
    - Word Sense Disambiguation (WSD)
    - PP-attachment
    - Conjunction
    - Predicate-argument relationships
    - Japanese and Chinese word breaking
  - Parallel corpora: plenty of annotated (labeled) testing and training data
  - Don’t need unsupervised magic (data >> magic)
- Demonstrate that NLP is good for something
  - Statistical methods (IR & WSD) focus on bags of nouns
    - Ignoring verbs, adjectives, predicates, intensifiers, etc.
  - Hypothesis: Ignored because perceptrons can’t model XOR
  - Task: classify “comments” into “good,” “bad” and “neutral”
    - Lots of terms associated with just one category
    - Some associated with two
      - Depending on argument
      - Good & Bad, but not neutral: Mickey Mouse, Rinky Dink
        - Bad: Mickey Mouse(us)
        - Good: Mickey Mouse(them)
  - Current IR/WSD methods don’t capture predicate-argument relationships
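The hypothesis rests on a classic result: a single-layer perceptron can only learn linearly separable labelings, and XOR is not one. The sketch below demonstrates that result on the four-row truth table and shows that adding an explicit conjunction feature (loosely analogous to pairing a term with its argument, as in Mickey Mouse(us) vs. Mickey Mouse(them)) makes the problem separable. The helper names and the conjunction trick are illustrative assumptions, not a method from the talk.

```python
def train_perceptron(data, epochs=100, lr=1.0):
    """Classic single-layer perceptron; labels are -1/+1. Returns (weights, bias)."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in data:
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if (1 if activation > 0 else -1) != y:
                # Mistake-driven update: move the boundary toward the correct label.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                errors += 1
        if errors == 0:  # every training example is classified correctly
            break
    return w, b


def accuracy(data, w, b):
    hits = sum((1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1) == y
               for x, y in data)
    return hits / len(data)


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = [(x, 1 if x[0] and x[1] else -1) for x in INPUTS]
XOR = [(x, 1 if x[0] != x[1] else -1) for x in INPUTS]
# Hypothetical fix: expose the interaction x1*x2 as an explicit third feature.
XOR_CONJ = [((x[0], x[1], x[0] * x[1]), y) for x, y in XOR]

for name, data in [("AND", AND), ("XOR", XOR), ("XOR + x1*x2", XOR_CONJ)]:
    w, b = train_perceptron(data)
    print(name, accuracy(data, w, b))  # AND and XOR + x1*x2 reach 1.0; XOR stays below 1.0
```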

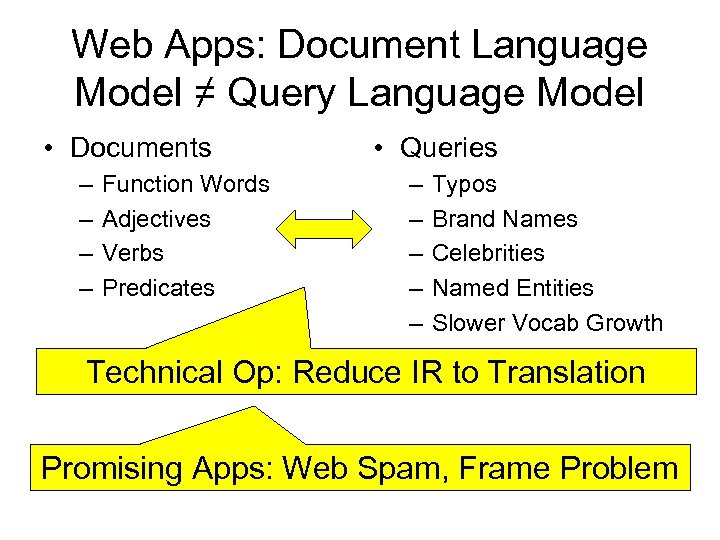

Web Apps: Document Language Model ≠ Query Language Model

- Documents
  - Function Words
  - Adjectives
  - Verbs
  - Predicates
- Queries
  - Typos
  - Brand Names
  - Celebrities
  - Named Entities
- Technical Op: Reduce IR to Translation
- Promising Apps: Web Spam, Frame Problem

(Figure annotation: Slower Vocab Growth)
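One simple way to compare the vocabulary growth of two collections (for example, documents vs. queries) is a type-token curve: count the distinct word types seen after every N tokens. A minimal sketch, assuming whitespace tokenization and case folding; `vocab_growth` is a hypothetical helper, and it makes no claim about which collection grows faster.

```python
def vocab_growth(tokens, step):
    """Return (tokens seen, distinct types seen) after every `step` tokens."""
    if step <= 0:
        raise ValueError("step must be positive")
    seen = set()
    curve = []
    for i, tok in enumerate(tokens, start=1):
        seen.add(tok.lower())  # case-fold so 'The' and 'the' count as one type
        if i % step == 0:
            curve.append((i, len(seen)))
    return curve


print(vocab_growth("a b a c b a d".split(), step=2))  # [(2, 2), (4, 3), (6, 3)]
```

Running the same function over a document sample and a query-log sample of equal size gives two curves that can be plotted against each other.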

Speech Data Mining & Call Centers: An Intelligence Bonanza

- Some companies are collecting information with technology designed to monitor incoming calls for service quality.
- Last summer, Continental Airlines Inc. installed software from Witness Systems Inc. to monitor the 5,200 agents in its four reservation centers.
- But the Houston airline quickly realized that the system, which records customer phone calls and information on the responding agent's computer screen, also was an intelligence bonanza, says André Harris, reservations training and quality-assurance director.

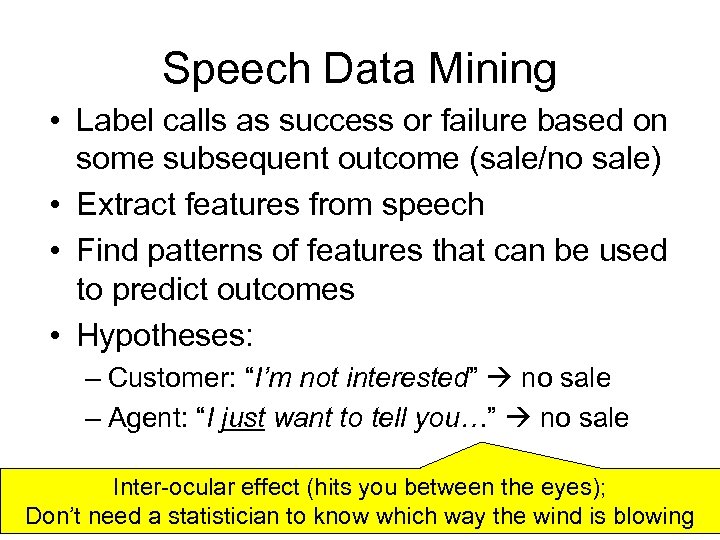

Speech Data Mining

- Label calls as success or failure based on some subsequent outcome (sale/no sale)
- Extract features from speech
- Find patterns of features that can be used to predict outcomes
- Hypotheses:
  - Customer: “I’m not interested” → no sale
  - Agent: “I just want to tell you…” → no sale

Inter-ocular effect (hits you between the eyes); you don’t need a statistician to know which way the wind is blowing.
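The slide’s pipeline (label calls by outcome, extract features, look for predictive patterns) can be sketched in a few lines. This is a minimal illustration, assuming calls have already been transcribed to text (e.g., by a speech recognizer) and using simple phrase matching as the only "feature"; `phrase_outcome_rates`, the phrase list, and the four toy calls are hypothetical and are not data from the talk.

```python
from collections import defaultdict


def phrase_outcome_rates(calls, phrases):
    """For each phrase, return (calls containing it, fraction of those that ended in 'no sale').

    calls: list of (transcript, outcome) pairs, outcome in {"sale", "no sale"}.
    Phrases that never occur are omitted from the result.
    """
    counts = defaultdict(lambda: [0, 0])  # phrase -> [calls containing it, no-sale calls]
    for transcript, outcome in calls:
        text = transcript.lower()
        for phrase in phrases:
            if phrase in text:
                counts[phrase][0] += 1
                counts[phrase][1] += int(outcome == "no sale")
    return {p: (n, bad / n) for p, (n, bad) in counts.items()}


# Hypothetical toy transcripts with outcome labels.
CALLS = [
    ("Customer: I'm not interested, thanks", "no sale"),
    ("Agent: I just want to tell you about our offer", "no sale"),
    ("Customer: Yes, please book it", "sale"),
    ("Agent: I just want to tell you about it. Customer: OK, sign me up", "sale"),
]
PHRASES = ["i'm not interested", "i just want to tell you"]

print(phrase_outcome_rates(CALLS, PHRASES))
```

Comparing each phrase’s rate against the overall no-sale rate is the "inter-ocular" check; with real data one would also want enough calls per phrase before trusting a rate.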

Outline

- We’re making consistent progress, or
- We’re running around in circles, or
  - Don’t worry; be happy
- We’re going off a cliff…

According to unnamed sources: Speech Winter, Language Winter, Dot Boom, Dot Bust

Sample of 20 Survey Questions (Strong Emphasis on Applications)

- When will
  - More than 50% of new PCs have dictation on them, either at purchase or shortly after.
  - Most telephone Interactive Voice Response (IVR) systems accept speech input.
  - Automatic airline reservation by voice over the telephone is the norm.
  - TV closed-captioning (subtitling) is automatic and pervasive.
  - Telephones are answered by an intelligent answering machine that converses with the calling party to determine the nature and priority of the call.
  - Public proceedings (e.g., courts, public inquiries, parliament, etc.) are transcribed automatically.
- Two surveys of ASRU attendees: 1997 & 2003

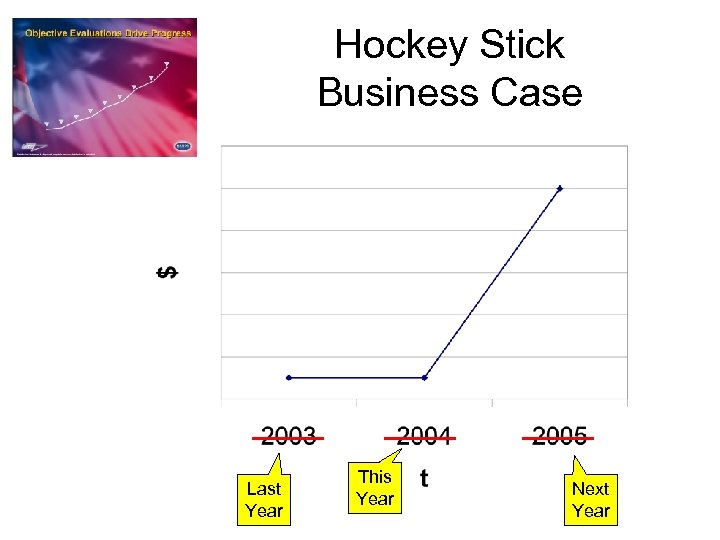

Hockey Stick Business Case

(Figure: x-axis labeled Last Year, This Year, Next Year)

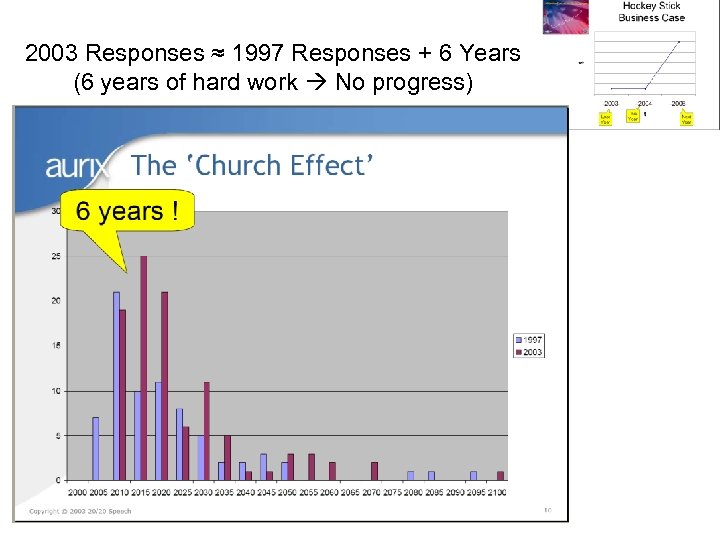

2003 Responses ≈ 1997 Responses + 6 Years: the predicted dates simply moved six years later (6 years of hard work → no progress)

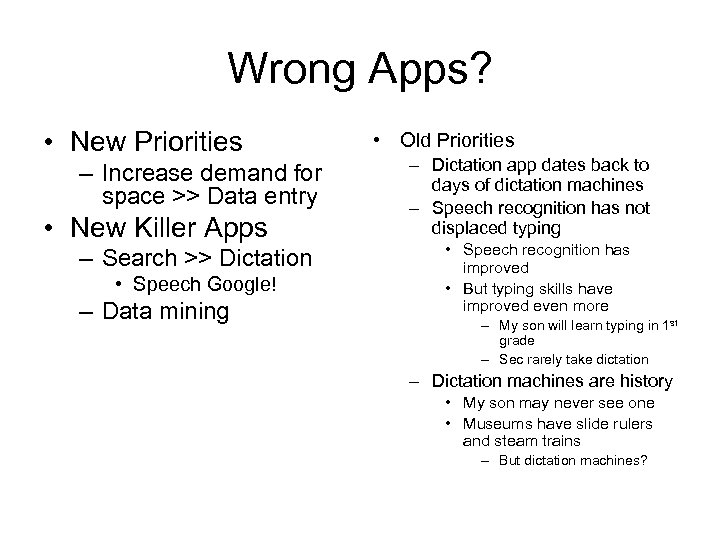

Wrong Apps?

- New Priorities
  - Increase demand for space >> Data entry
- New Killer Apps
  - Search >> Dictation
    - Speech Google!
  - Data mining
- Old Priorities
  - Dictation app dates back to days of dictation machines
  - Speech recognition has not displaced typing
    - Speech recognition has improved
    - But typing skills have improved even more
  - My son will learn typing in 1st grade
  - Secretaries rarely take dictation
  - Dictation machines are history
    - My son may never see one
    - Museums have slide rules and steam trains
      - But dictation machines?

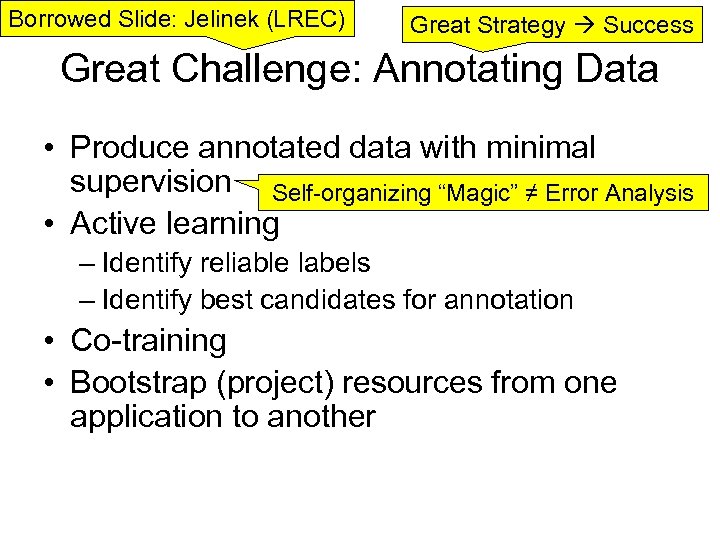

Borrowed Slide: Jelinek (LREC)

(Figure labels: Great Strategy; Success)

- Great Challenge: Annotating Data
  - Produce annotated data with minimal supervision
  - Active learning
    - Identify reliable labels
    - Identify best candidates for annotation
  - Co-training
  - Bootstrap (project) resources from one application to another

Self-organizing “Magic” ≠ Error Analysis
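The two active-learning bullets can be illustrated with uncertainty sampling, one common strategy (the slide does not say which strategy was meant): predictions the model is very confident about are kept as reliable labels, and the least confident items are sent to a human annotator. A minimal sketch; `triage_for_annotation`, the 0.95 threshold, and the example scores are hypothetical.

```python
def triage_for_annotation(predictions, k, reliable_threshold=0.95):
    """Split predictions into reliable auto-labels and the k best candidates for annotation.

    predictions: list of (item, p), where p is the model's probability of the positive label.
    Returns (reliable, to_annotate): reliable is a list of (item, predicted_label) pairs,
    to_annotate lists the k least confident items, least confident first.
    """
    reliable = []
    uncertain = []
    for item, p in predictions:
        confidence = max(p, 1 - p)
        if confidence >= reliable_threshold:
            reliable.append((item, p >= 0.5))  # accept the model's own label
        else:
            uncertain.append((confidence, item))
    uncertain.sort(key=lambda pair: pair[0])  # least confident first
    to_annotate = [item for _, item in uncertain[:k]]
    return reliable, to_annotate


preds = [("s1", 0.99), ("s2", 0.52), ("s3", 0.03), ("s4", 0.70), ("s5", 0.45)]
reliable, to_annotate = triage_for_annotation(preds, k=2)
print(reliable)     # [('s1', True), ('s3', False)]
print(to_annotate)  # ['s2', 's5']
```

In a full loop, the newly annotated items would be added to the training set, the model retrained, and the triage repeated.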

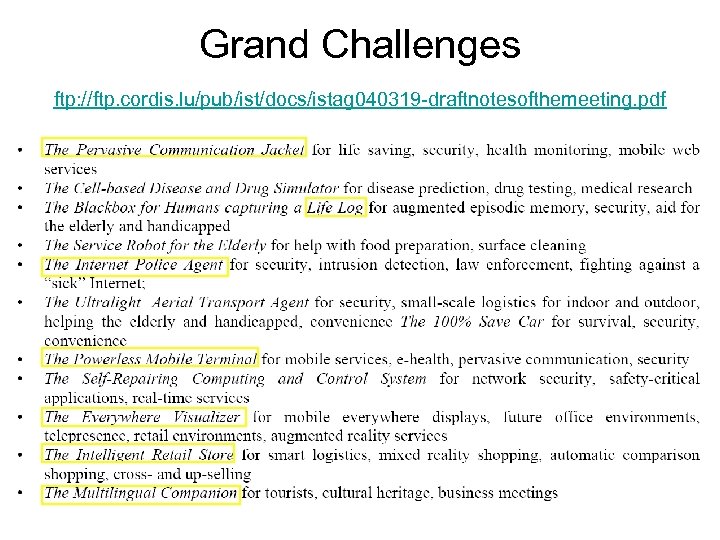

Grand Challenges: ftp://ftp.cordis.lu/pub/ist/docs/istag040319-draftnotesofthemeeting.pdf

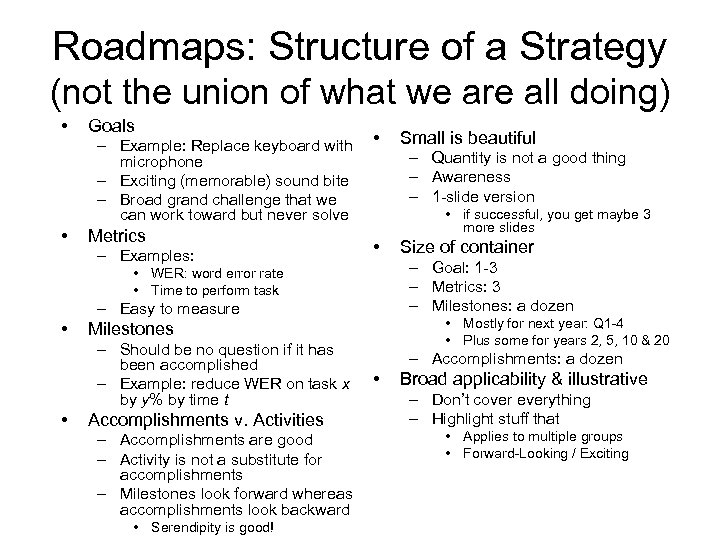

Roadmaps: Structure of a Strategy (not the union of what we are all doing)

- Goals
  - Example: Replace keyboard with microphone
  - Exciting (memorable) sound bite
  - Broad grand challenge that we can work toward but never solve
- Metrics
  - Examples:
    - WER: word error rate
    - Time to perform task
  - Easy to measure
- Milestones
  - Should be no question if it has been accomplished
  - Example: reduce WER on task x by y% by time t
  - Mostly for next year: Q1-4
  - Plus some for years 2, 5, 10 & 20
- Accomplishments v. Activities
  - Accomplishments are good
  - Activity is not a substitute for accomplishments
  - Milestones look forward whereas accomplishments look backward
  - Serendipity is good!
- Size of container
  - Quantity is not a good thing
  - Small is beautiful
  - Goal: 1-3
  - Metrics: 3
  - Milestones: a dozen
  - Accomplishments: a dozen
    - Broad applicability & illustrative
  - Don’t cover everything
  - Highlight stuff that
    - Applies to multiple groups
    - Forward-Looking / Exciting
  - Awareness
    - 1-slide version
      - If successful, you get maybe 3 more slides
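The example metric and milestone use word error rate (WER), conventionally computed as the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the recognizer output, divided by the number of reference words. A minimal sketch; the helper names are hypothetical, and reading "reduce WER by y%" as a relative reduction is an assumption (the slide does not say relative or absolute).

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # d[i][j] = minimum edits turning the first i reference words into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
    return d[len(ref)][len(hyp)] / len(ref)


def milestone_met(baseline_wer: float, new_wer: float, relative_reduction: float) -> bool:
    """Assumes 'reduce WER by y%' means a relative reduction, e.g. 0.25 for 25%."""
    return (baseline_wer - new_wer) / baseline_wer >= relative_reduction


print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))  # one deletion: 1/6
print(milestone_met(0.20, 0.10, 0.25))  # True: a 50% relative reduction
```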

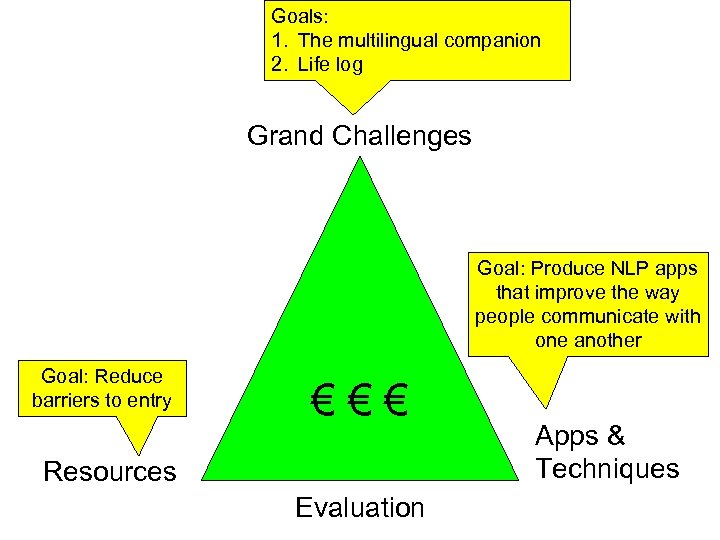

Grand Challenges

Goals:

1. The multilingual companion
2. Life log

- Goal: Produce NLP apps that improve the way people communicate with one another
- Goal: Reduce barriers to entry
  - €€€
  - Resources
  - Evaluation
  - Apps & Techniques

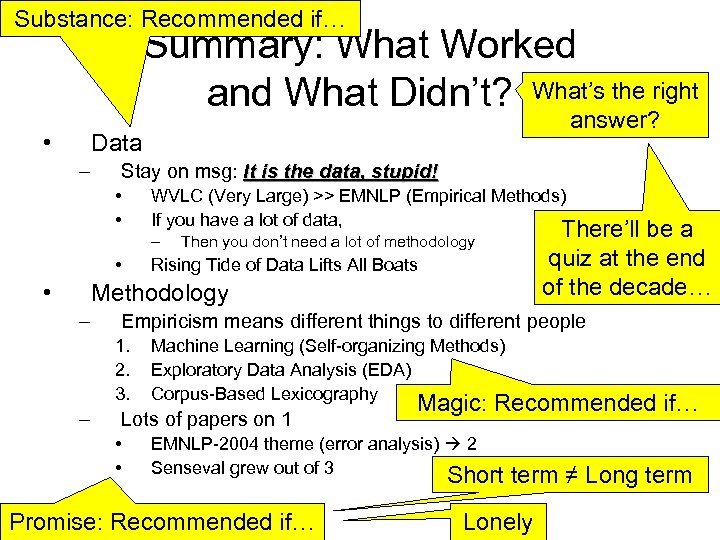

Summary: What Worked and What Didn’t? What’s the right answer?

- Data
  - Stay on msg: It is the data, stupid!
    - WVLC (Very Large) >> EMNLP (Empirical Methods)
  - If you have a lot of data,
    - then you don’t need a lot of methodology
  - Rising Tide of Data Lifts All Boats
- Methodology
  - Empiricism means different things to different people
    1. Machine Learning (Self-organizing Methods)
    2. Exploratory Data Analysis (EDA)
    3. Corpus-Based Lexicography
  - Lots of papers on 1
  - EMNLP-2004 theme (error analysis): 2
  - Senseval grew out of 3
  - There’ll be a quiz at the end of the decade…

(Side annotations: Substance: Recommended if…; Magic: Recommended if…; Promise: Recommended if…; Short term ≠ Long term; Lonely)
