- Number of slides: 42

Outline for today
- Administrative
  - Next week: Monday lecture, Friday discussion
- Objective
  - Google File System
  - Paper: Award paper at SOSP in 2003
  - Slides: with thanks, adapted from slides of Prof. Cox

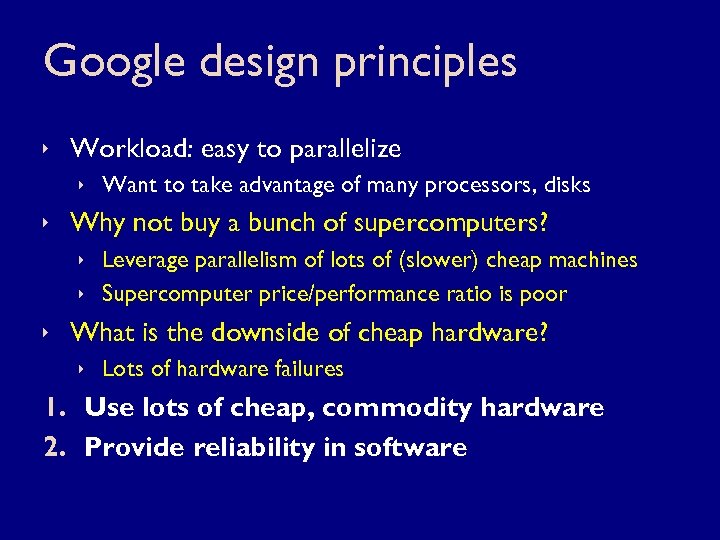

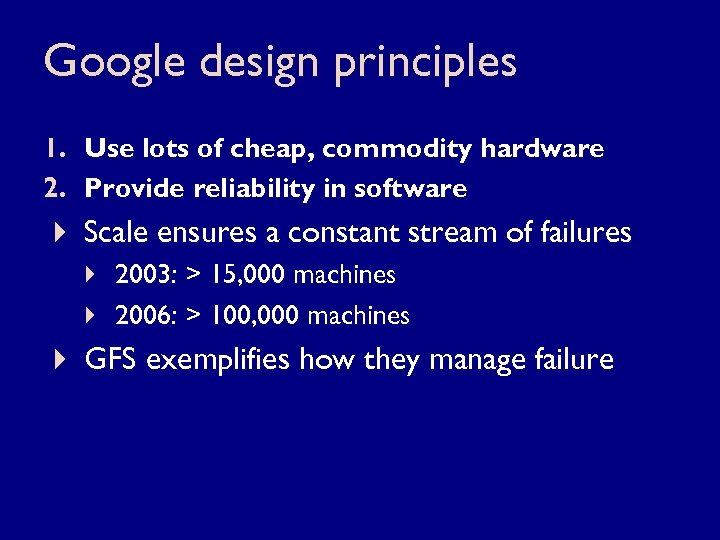

Google design principles
- Workload: easy to parallelize
  - Want to take advantage of many processors, disks
- Why not buy a bunch of supercomputers?
  - Leverage parallelism of lots of (slower) cheap machines
  - Supercomputer price/performance ratio is poor
- What is the downside of cheap hardware?
  - Lots of hardware failures

1. Use lots of cheap, commodity hardware
2. Provide reliability in software

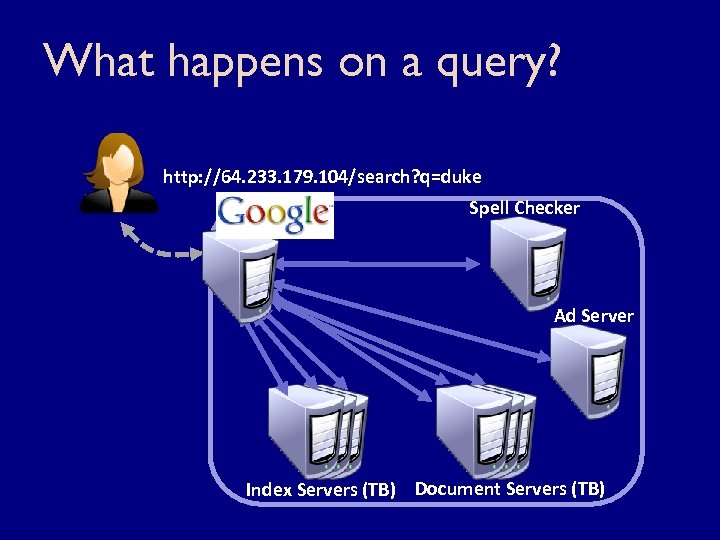

What happens on a query?
- Query: http://64.233.179.104/search?q=duke
- Components: Spell Checker, Ad Server, Index Servers (TB), Document Servers (TB)

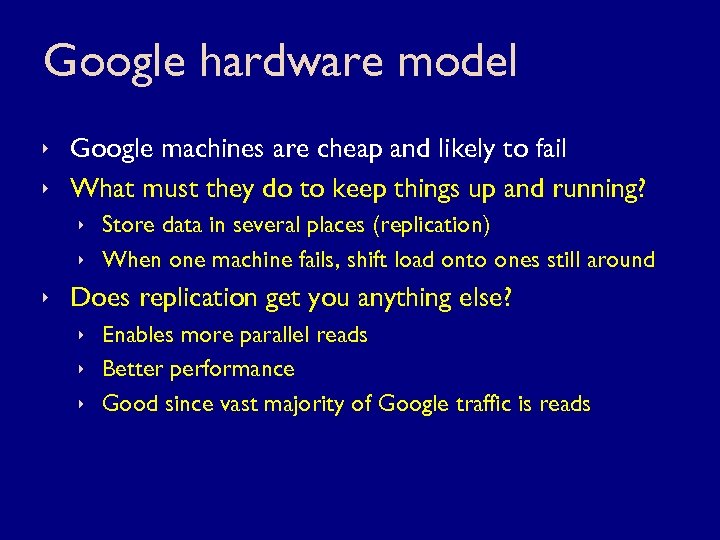

Google hardware model
- Google machines are cheap and likely to fail
- What must they do to keep things up and running?
  - Store data in several places (replication)
  - When one machine fails, shift load onto the ones still around
- Does replication get you anything else?
  - Enables more parallel reads
  - Better performance
  - Good, since the vast majority of Google traffic is reads
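
A minimal sketch of replication-based failover, assuming a hypothetical `ReplicaSet` class that knows the addresses of one piece of data's replicas (the names `cs-a`, `cs-b`, `cs-c` are made up) and which machines are currently down. Reads rotate across live replicas, which spreads load, and skip failed ones, which shifts load onto the machines still around.

```python
import itertools


class ReplicaSet:
    """Hypothetical holder of the replicas that store one piece of data."""

    def __init__(self, replicas):
        self.replicas = list(replicas)
        self.down = set()                          # machines known to have failed
        self._rr = itertools.cycle(self.replicas)  # round-robin over all replicas

    def mark_down(self, replica):
        self.down.add(replica)

    def pick_for_read(self):
        """Return the next live replica in round-robin order."""
        for _ in range(len(self.replicas)):
            candidate = next(self._rr)
            if candidate not in self.down:
                return candidate
        raise RuntimeError("all replicas are down")


rs = ReplicaSet(["cs-a", "cs-b", "cs-c"])
first_reads = [rs.pick_for_read() for _ in range(3)]    # spread over all three
rs.mark_down("cs-b")
after_failure = [rs.pick_for_read() for _ in range(4)]  # only cs-a and cs-c serve
```

Round-robin is just one simple way to spread reads; the point is that every extra replica is both a spare for failures and another machine that can serve reads in parallel.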

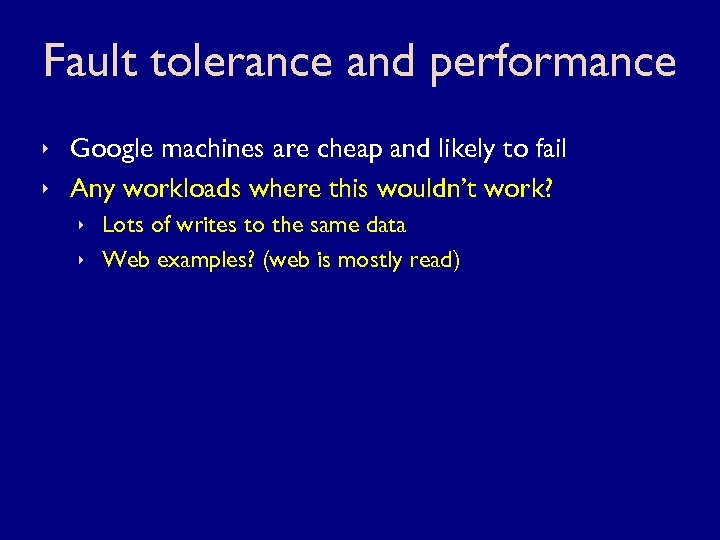

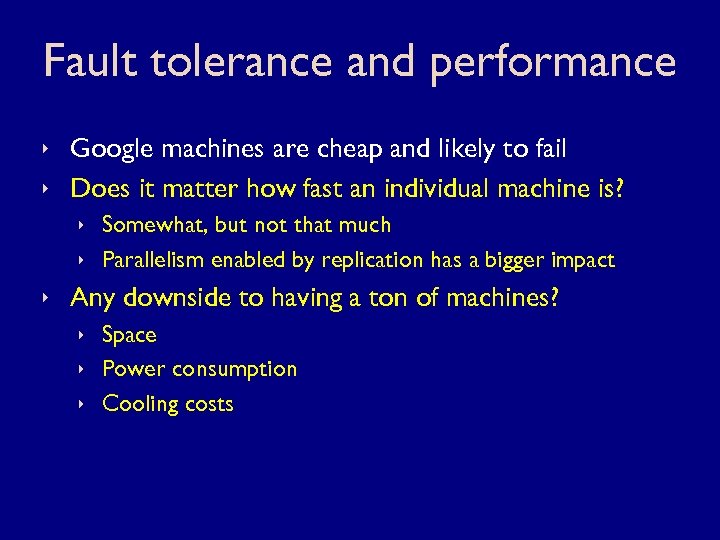

Fault tolerance and performance
- Google machines are cheap and likely to fail
- Any workloads where this wouldn’t work?
  - Lots of writes to the same data
  - Web examples? (web is mostly read)

Fault tolerance and performance
- Google machines are cheap and likely to fail
- Does it matter how fast an individual machine is?
  - Somewhat, but not that much
  - Parallelism enabled by replication has a bigger impact
- Any downside to having a ton of machines?
  - Space
  - Power consumption
  - Cooling costs

Google design principles
1. Use lots of cheap, commodity hardware
2. Provide reliability in software

- Scale ensures a constant stream of failures
  - 2003: > 15,000 machines
  - 2006: > 100,000 machines
- GFS exemplifies how they manage failure
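
Some back-of-the-envelope arithmetic shows why scale alone guarantees a constant stream of failures. The per-machine mean time between failures of three years is an illustrative assumption, not a figure from these slides, and failures are assumed independent.

```python
def expected_failures_per_day(machines, mtbf_days):
    """Expected machine failures per day, assuming independent failures
    and a per-machine mean time between failures of `mtbf_days`."""
    return machines / mtbf_days


MTBF_DAYS = 3 * 365  # assumed: each machine fails about once every 3 years

for year, machines in [(2003, 15_000), (2006, 100_000)]:
    rate = expected_failures_per_day(machines, MTBF_DAYS)
    print(f"{year}: ~{rate:.0f} machine failures per day")
```

Even with these lower-bound machine counts and fairly reliable machines, something breaks every couple of hours, so recovery has to be routine and automatic.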

Sources of failure
- Software
  - Application bugs, OS bugs
  - Human errors
- Hardware
  - Disks, memory
  - Connectors, networking
  - Power supplies

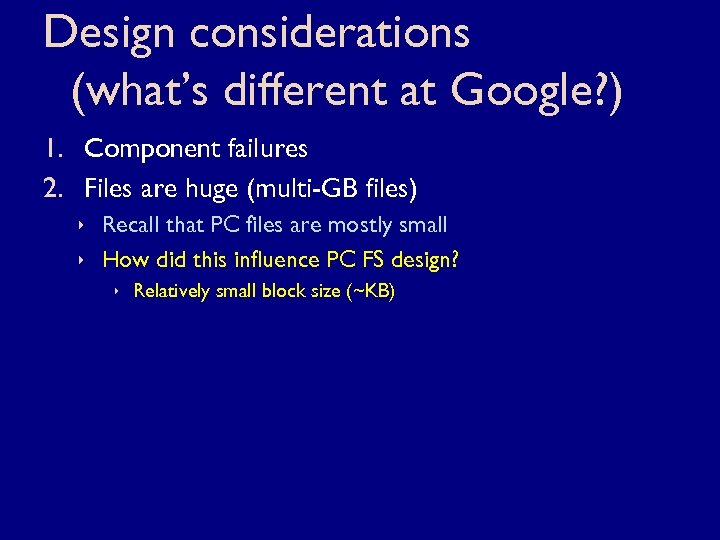

Design considerations (what’s different at Google?)
1. Component failures
2. Files are huge (multi-GB files)
   - Recall that PC files are mostly small
   - How did this influence PC FS design?
     - Relatively small block size (~KB)

Design considerations (what’s different at Google?)
1. Component failures
2. Files are huge (multi-GB files)
3. Most writes are large, sequential appends
   - Old data is rarely overwritten

Design considerations (what’s different at Google?)
1. Component failures
2. Files are huge (multi-GB files)
3. Most writes are large, sequential appends
4. Reads are large and streamed or small and random
   - Once written, files are only read, often sequentially
   - Is this like or unlike PC file systems?
     - PC reads are mostly sequential reads of small files
   - How do sequential reads of large files affect client caching?
     - Caching is pretty much useless
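
To see why caching is pretty much useless for streaming reads, here is a small simulation with an LRU block cache. LRU is an assumed, typical eviction policy; the file and cache sizes are arbitrary. When a file larger than the cache is read sequentially, every block is evicted before it is needed again, so even a second full pass gets no hits.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity block cache with least-recently-used eviction."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()
        self.hits = 0
        self.misses = 0

    def access(self, block_id):
        if block_id in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block_id)  # mark as most recently used
            return
        self.misses += 1
        self.blocks[block_id] = True
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)    # evict the least recently used


# A large file (1,000 blocks) streamed twice through a 100-block cache.
big = LRUCache(capacity=100)
for _ in range(2):
    for block in range(1_000):
        big.access(block)

# A small, PC-style file (50 blocks) read twice through the same size cache.
small = LRUCache(capacity=100)
for _ in range(2):
    for block in range(50):
        small.access(block)
```

The small file fits and every block of the second read hits; the large file never hits at all.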

Design considerations (what’s different at Google?)
1. Component failures
2. Files are huge (multi-GB files)
3. Most writes are large, sequential appends
4. Reads are large and streamed or small and random
5. Design file system for apps that use it
   - Files are often used as producer-consumer queues
     - 100s of producers trying to append concurrently
   - Want atomicity of append with minimal synchronization
     - Want support for atomic append

Design considerations (what’s different at Google?)
1. Component failures
2. Files are huge (multi-GB files)
3. Most writes are large, sequential appends
4. Reads are large and streamed or small and random
5. Design file system for apps that use it
6. High sustained bandwidth better than low latency
   - What is the difference between BW and latency?
     - Network as road (BW = # lanes, latency = speed limit)
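
A simple cost model makes the distinction concrete: transfer time is a fixed latency plus size divided by bandwidth. The 1 ms latency and 100 MB/s bandwidth below are assumed, illustrative numbers.

```python
def transfer_time_s(size_mb, latency_s, bandwidth_mb_per_s):
    """Time to move `size_mb` over a link: fixed latency plus size / bandwidth."""
    return latency_s + size_mb / bandwidth_mb_per_s


LATENCY_S = 0.001   # assumed 1 ms per request
BANDWIDTH = 100.0   # assumed 100 MB/s sustained

small = transfer_time_s(0.004, LATENCY_S, BANDWIDTH)  # a 4 KB read
large = transfer_time_s(64.0, LATENCY_S, BANDWIDTH)   # a 64 MB read

small_latency_share = LATENCY_S / small  # about 96% of the time is latency
large_latency_share = LATENCY_S / large  # well under 1% of the time is latency
```

For large streaming reads and appends, the latency term is noise and sustained bandwidth decides throughput, which is why GFS optimizes for it.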

Google File System (GFS)
- Similar API to POSIX
  - Create/delete, open/close, read/write
- GFS-specific calls
  - Snapshot (low-cost copy)
  - Record_append (allows concurrent appends, ensures atomicity of each append)
- What does this description of record_append mean?
  - Individual appends may be interleaved arbitrarily
  - Each append’s data will not be interleaved with another’s
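
A single-process sketch of the record_append guarantee, assuming a toy in-memory `AppendOnlyFile` class (hypothetical, as is the record format). The file, not the caller, picks the offset, and a lock stands in for the serialization GFS gets from a chunk's primary replica. Records from concurrent producers land in arbitrary order, but each one is contiguous.

```python
import threading


class AppendOnlyFile:
    """Toy file whose record_append is atomic: the file chooses the offset
    and each record is written as one contiguous run of bytes."""

    def __init__(self):
        self._data = bytearray()
        self._lock = threading.Lock()  # stand-in for the primary's serialization

    def record_append(self, record: bytes) -> int:
        with self._lock:
            offset = len(self._data)
            self._data += record
            return offset              # the caller learns where its record went

    def read(self, offset: int, length: int) -> bytes:
        with self._lock:
            return bytes(self._data[offset:offset + length])


f = AppendOnlyFile()
results = {}  # record -> offset chosen by the file


def producer(pid):
    for i in range(100):
        rec = f"<p{pid}:{i}>".encode()
        results[rec] = f.record_append(rec)


threads = [threading.Thread(target=producer, args=(p,)) for p in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Reading back each record at its returned offset yields exactly that record, even though the eight producers' appends are interleaved with one another.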

GFS architecture
- Cluster-based
  - Single logical master
  - Multiple chunkservers
- Clusters are accessed by multiple clients
- Clients are commodity Linux machines
- Machines can be both clients and servers

GFS architecture

File data storage
- Files are broken into fixed-size chunks
- Each chunk is named by an immutable, globally unique 64-bit ID
  - ID is chosen by the master
  - ID is called a chunk handle
- Servers store chunks as normal Linux files
- Servers accept reads/writes with handle + byte range
- Chunks are replicated at 3 servers (by default)
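
A toy chunkserver sketch showing chunks stored as ordinary local files and reads addressed by handle plus byte range. The `ChunkServer` class and the file-naming scheme (handle as 16 hex digits plus a `.chunk` suffix) are hypothetical.

```python
import os
import tempfile


class ChunkServer:
    """Toy chunkserver: each chunk is a plain local file named by its handle."""

    def __init__(self, root_dir):
        self.root_dir = root_dir

    def _path(self, handle: int) -> str:
        # Hypothetical naming: 64-bit handle rendered as 16 hex digits.
        return os.path.join(self.root_dir, f"{handle:016x}.chunk")

    def write_chunk(self, handle: int, data: bytes) -> None:
        with open(self._path(handle), "wb") as fh:
            fh.write(data)

    def read(self, handle: int, offset: int, length: int) -> bytes:
        """Serve a read identified by chunk handle + byte range."""
        with open(self._path(handle), "rb") as fh:
            fh.seek(offset)
            return fh.read(length)


with tempfile.TemporaryDirectory() as root:
    server = ChunkServer(root)
    server.write_chunk(0x2A, b"hello, chunk world")
    piece = server.read(0x2A, 7, 5)
```

Because chunks are plain files, the chunkserver gets the local file system's storage management (and the kernel's buffer cache) for free.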

File meta-data storage
- Master maintains all meta-data
- What do we mean by meta-data?
  - Namespace info
  - Access control info
  - Mapping from files to chunks
  - Current chunk locations
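
One way to picture the master's meta-data is as a few in-memory tables. The sketch below is an assumption about shape only (the class names, the single `owner` field standing in for access control, and the sample paths are all hypothetical), not GFS's actual data structures.

```python
from dataclasses import dataclass, field


@dataclass
class FileInfo:
    owner: str                                          # access control (simplified)
    chunk_handles: list = field(default_factory=list)   # file -> chunks, in order


@dataclass
class MasterMetadata:
    namespace: dict = field(default_factory=dict)        # full path -> FileInfo
    chunk_locations: dict = field(default_factory=dict)  # handle -> set of servers

    def locate(self, path: str, chunk_index: int):
        """Return (chunk handle, replica locations) for one chunk of a file."""
        handle = self.namespace[path].chunk_handles[chunk_index]
        return handle, sorted(self.chunk_locations.get(handle, set()))


meta = MasterMetadata()
meta.namespace["/logs/web-00"] = FileInfo(owner="crawler", chunk_handles=[101, 102])
meta.chunk_locations[102] = {"cs-c", "cs-a", "cs-b"}
print(meta.locate("/logs/web-00", 1))  # (102, ['cs-a', 'cs-b', 'cs-c'])
```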

Other master responsibilities
- Chunk lease management
- Garbage collection of orphaned chunks
  - How might a chunk become orphaned?
    - If a chunk is no longer in any file
- Chunk migration between servers
- HeartBeat messages to chunkservers
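
Orphan detection can be sketched as a set difference: compare the chunks a chunkserver reports holding (for example, in a HeartBeat exchange) against the chunks the master's file-to-chunk mapping still references. The function name and argument shapes are hypothetical.

```python
def find_orphans(file_to_chunks, reported_by_server):
    """Return chunk handles a chunkserver holds that no file references.

    file_to_chunks: dict mapping path -> list of chunk handles (master's view)
    reported_by_server: iterable of handles a chunkserver says it stores
    """
    live = {h for handles in file_to_chunks.values() for h in handles}
    return set(reported_by_server) - live


files = {"/a": [1, 2], "/b": [3]}
# Chunk 4 belonged to a file that has since been deleted.
orphans = find_orphans(files, reported_by_server=[1, 3, 4])
```

The chunkserver can then delete the orphans at its leisure, so reclaiming space needs no extra synchronous coordination.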

Client details
- Client code is just a library
- No in-memory data caching at the client or servers
  - Clients still cache meta-data
- Why don’t servers cache data (trick question)?
  - Chunks are stored as regular Linux files
  - Linux’s in-kernel buffer cache already caches chunk content

Master design issues
- Single (logical) master per cluster
  - Master’s state is actually replicated elsewhere
  - Logically single because clients speak to one name
- Pros
  - Simplifies design
  - Master endowed with global knowledge (makes good placement, replication decisions)

Master design issues
- Single (logical) master per cluster
  - Master’s state is actually replicated elsewhere
  - Logically single because clients speak to one name
- Cons?
  - Could become a bottleneck (recall how replication can improve performance)
- How to keep from becoming a bottleneck?
  - Minimize its involvement in reads/writes
  - Clients talk to master very briefly
  - Most communication is with servers

Example read
- Client uses the fixed chunk size to compute the chunk index within a file
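
Because every chunk has the same size, the client can turn a byte offset into a chunk index with integer division and never asks the master for that. A minimal sketch, using 64 MB (the chunk size given on the chunk-size slide) and a hypothetical helper name:

```python
CHUNK_SIZE = 64 * 2**20  # 64 MB chunks


def chunk_index_and_offset(file_offset: int):
    """Translate a byte offset within a file into (chunk index, offset in chunk)."""
    return divmod(file_offset, CHUNK_SIZE)


idx, off = chunk_index_and_offset(200 * 2**20)  # byte 200 MB into the file
```

Byte 200 MB lies in chunk 3 (chunks 0 to 2 cover the first 192 MB), 8 MB into that chunk.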

Example read
- Client asks master for the chunk handle at index i of the file

Example read
- Master replies with the chunk handle and list of replicas

Example read
- Client caches handle and replica list
  - (maps filename + chunk index → chunk handle + replica list)
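
The client-side cache can be sketched as a dictionary keyed by (filename, chunk index). `FakeMaster` and `find_chunk` are hypothetical stand-ins for the real master RPC; the lookup counter shows that repeated reads of the same chunk contact the master only once. This sketch omits entry expiry, which a real client would need so stale replica lists eventually get refreshed.

```python
class FakeMaster:
    """Stand-in master that answers chunk lookups and counts how often it is asked."""

    def __init__(self, table):
        self.table = table  # (filename, chunk index) -> (handle, replicas)
        self.lookups = 0

    def find_chunk(self, filename, index):
        self.lookups += 1
        return self.table[(filename, index)]


class ClientMetadataCache:
    """Client-side cache: (filename, chunk index) -> (chunk handle, replica list)."""

    def __init__(self, master):
        self.master = master
        self.cache = {}

    def lookup(self, filename, index):
        key = (filename, index)
        if key not in self.cache:  # only contact the master on a miss
            self.cache[key] = self.master.find_chunk(filename, index)
        return self.cache[key]


master = FakeMaster({("/logs/web-00", 0): (101, ["cs-a", "cs-b", "cs-c"])})
client = ClientMetadataCache(master)
for _ in range(5):
    client.lookup("/logs/web-00", 0)
```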

Example read
- Client sends a request to the closest chunkserver
- Server returns data to client
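
How "closest" is measured is an assumption here. The sketch takes a caller-supplied distance function, illustrated with a made-up hop count (0 = same machine, 1 = same rack, 2 = another rack).

```python
def closest_replica(replicas, distance):
    """Pick the replica with the smallest network distance from the client."""
    return min(replicas, key=distance)


# Hypothetical distances from this client to each replica.
hops = {"cs-a": 2, "cs-b": 1, "cs-c": 2}
target = closest_replica(["cs-a", "cs-b", "cs-c"], distance=hops.__getitem__)
```

Reading from a nearby replica keeps traffic off the cross-rack links, and the master is not involved in this step at all.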

Example read
- Any possible optimizations?
  - Client could ask for multiple chunk handles at once (batching)
  - Master could return handles for subsequent indices (prefetching)
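
Both optimizations fit in one lookup function: the request carries several chunk indices, and the reply also includes a few indices after each one the client did not ask for. The function name and the `prefetch` parameter are hypothetical.

```python
def lookup_chunks(file_chunks, requested, prefetch=2):
    """Answer one batched request for several chunk indices of a file.

    file_chunks: list of chunk handles for the file, in order
    requested:   chunk indices the client asked for
    prefetch:    how many following indices to include unasked
    Returns a dict mapping chunk index -> handle.
    """
    wanted = set()
    for i in requested:
        wanted.update(range(i, min(i + prefetch + 1, len(file_chunks))))
    return {i: file_chunks[i] for i in sorted(wanted)}


handles = [101, 102, 103, 104, 105, 106]
reply = lookup_chunks(handles, requested=[0, 4], prefetch=1)
```

One round trip now answers two requested indices plus the next one after each, so a sequential reader contacts the master less often.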

Chunk size
- Recall how we chose block/page sizes
  1. If too small, too much storage used for meta-data
  2. If too large, too much internal fragmentation
- (2) is only an issue if most files are small
  - In GFS, most files are really big
- What would be a reasonable chunk size?
  - They chose 64 MB
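
Both sides of the trade-off can be computed. The sketch assumes 64 bytes of master meta-data per chunk (the GFS paper reports less than 64 bytes per 64 MB chunk) and the same cost per chunk at any chunk size. It counts worst-case fragmentation, that is, a fully allocated last chunk.

```python
MB = 2**20
TB = 2**40
BYTES_PER_CHUNK_META = 64  # assumed per-chunk meta-data cost at the master


def master_metadata_bytes(total_data, chunk_size):
    """Meta-data needed to track `total_data` bytes split into `chunk_size` chunks."""
    chunks = -(-total_data // chunk_size)  # ceiling division
    return chunks * BYTES_PER_CHUNK_META


def wasted_in_last_chunk(file_size, chunk_size):
    """Internal fragmentation if the last chunk were fully allocated."""
    remainder = file_size % chunk_size
    return 0 if remainder == 0 else chunk_size - remainder


meta_64k = master_metadata_bytes(1 * TB, 64 * 1024)  # 1 TB in 64 KB chunks: 1 GB
meta_64m = master_metadata_bytes(1 * TB, 64 * MB)    # 1 TB in 64 MB chunks: 1 MB
tiny_waste = wasted_in_last_chunk(1024, 64 * MB)     # a 1 KB file in a 64 MB chunk
```

With big files, 64 MB chunks cut the master's meta-data by a factor of 1,024 compared with 64 KB chunks. The fragmentation cost only bites for small files, which are rare in this workload.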

Master’s meta-data
1. File and chunk namespaces
2. Mapping from files to chunks
3. Chunk replica locations

- All are kept in-memory
- (1) and (2) are also kept persistent
  - Use an operation log

Operation log
- Historical record of all meta-data updates
- Only persistent record of meta-data updates
- Replicated at multiple machines
- Appending to log is transactional
  - Log records are synchronously flushed at all replicas
- To recover, the master replays the operation log
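
A single-machine sketch of the operation log: each meta-data update is appended as one JSON line and forced to disk with `fsync` before returning, and recovery replays the log from the start. The record format and operation names are hypothetical, and replication of the log to remote machines is omitted.

```python
import json
import os
import tempfile


class OperationLog:
    """Append-only log of meta-data updates, flushed to disk before returning."""

    def __init__(self, path):
        self.path = path

    def append(self, op: dict) -> None:
        with open(self.path, "a", encoding="utf-8") as fh:
            fh.write(json.dumps(op) + "\n")
            fh.flush()
            os.fsync(fh.fileno())  # durable before the update is acknowledged

    def replay(self) -> dict:
        """Rebuild the namespace (path -> chunk handles) from the log."""
        namespace = {}
        if not os.path.exists(self.path):
            return namespace
        with open(self.path, encoding="utf-8") as fh:
            for line in fh:
                op = json.loads(line)
                if op["type"] == "create":
                    namespace[op["path"]] = []
                elif op["type"] == "add_chunk":
                    namespace[op["path"]].append(op["handle"])
                elif op["type"] == "delete":
                    namespace.pop(op["path"], None)
        return namespace


with tempfile.TemporaryDirectory() as d:
    log = OperationLog(os.path.join(d, "oplog"))
    log.append({"type": "create", "path": "/a"})
    log.append({"type": "add_chunk", "path": "/a", "handle": 101})
    log.append({"type": "create", "path": "/tmp"})
    log.append({"type": "delete", "path": "/tmp"})
    recovered = log.replay()  # what a restarted master would rebuild
```

Chunk replica locations are deliberately not in the log, matching the previous slide: only the namespace and the file-to-chunk mapping are persistent.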

Atomic record_append
- Traditional write
  - Concurrent writes to same file region are not serialized
  - Region can end up containing fragments from many clients
- Record_append
  - Client only specifies the data to append
  - GFS appends it to the file at least once atomically
  - GFS chooses the offset
- Why is this simpler than forcing clients to synchronize?
  - Clients would need a distributed locking scheme
  - What about clients failing while holding a lock?
  - Could use leases among clients, but what if machine is just slow?
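
"At least once" means a retried append can leave a duplicate record. One approach the GFS paper describes is for writers to tag each record with a unique ID so readers can skip repeats. The sketch simulates a lost acknowledgement with a list standing in for the file; the function names are hypothetical.

```python
import uuid


def append_with_retry(log, payload, fail_first_attempt=False):
    """Client-side retry loop for an at-least-once append.

    `fail_first_attempt` simulates an append that reached the file but whose
    acknowledgement was lost, so the client retries and a duplicate appears.
    """
    record = {"id": str(uuid.uuid4()), "payload": payload}  # unique id per record
    attempts = 0
    while True:
        attempts += 1
        log.append(record)              # the data lands in the file
        if fail_first_attempt and attempts == 1:
            continue                    # ack lost: retry the same record
        return attempts


def read_unique(log):
    """Reader-side de-duplication by record id."""
    seen, out = set(), []
    for rec in log:
        if rec["id"] not in seen:
            seen.add(rec["id"])
            out.append(rec["payload"])
    return out


log = []
append_with_retry(log, "event-1")
append_with_retry(log, "event-2", fail_first_attempt=True)
```

The file holds three records, but readers see each event once. No client ever holds a lock, so a slow or crashed client cannot block anyone else.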

Mutation order
- Mutations are performed at every replica of the chunk
- Master chooses a primary for each chunk
  - Others are called secondary replicas
- Primary chooses an order for all mutations
  - Called “serializing”
  - All replicas follow this “serial” order
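
A sketch of why a single serial order matters: the primary stamps each mutation with a consecutive serial number, and every replica applies mutations strictly in that order. Holding out-of-order arrivals until the gap is filled is an assumption of this sketch, and the class names are hypothetical.

```python
class Primary:
    """Assigns consecutive serial numbers to mutations for one chunk."""

    def __init__(self):
        self.next_serial = 0

    def serialize(self, mutation):
        serial = self.next_serial
        self.next_serial += 1
        return serial, mutation


class Replica:
    """Applies mutations strictly in serial-number order."""

    def __init__(self):
        self.state = []
        self.pending = {}
        self.expected = 0

    def receive(self, serial, mutation):
        self.pending[serial] = mutation
        while self.expected in self.pending:  # apply any now-contiguous run
            self.state.append(self.pending.pop(self.expected))
            self.expected += 1


primary = Primary()
ordered = [primary.serialize(m) for m in ["write A", "append B", "write C"]]

r1, r2 = Replica(), Replica()
for s, m in ordered:
    r1.receive(s, m)
for s, m in reversed(ordered):  # arrives in a different order
    r2.receive(s, m)
```

Both replicas end in the same state even though the mutations reached them in different orders.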

Example mutation
- Client asks master
  - Primary replica
  - Secondary replicas

Example mutation
- Master returns
  - Primary replica
  - Secondary replicas

Example mutation
- Client sends data
  - To all replicas
- Replicas
  - Only buffer data
  - Do not apply
  - Ack client
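
The data-push step can be sketched as replicas that park incoming bytes in a buffer under an identifier and acknowledge without touching the chunk. The `BufferingReplica` class and the `"d-17"` data ID are hypothetical. Applying happens only later, when a write request names the buffered data.

```python
class BufferingReplica:
    """Holds pushed data in a buffer, keyed by a data id, without applying it."""

    def __init__(self):
        self.buffer = {}          # data id -> bytes, not yet part of the chunk
        self.chunk = bytearray()

    def push_data(self, data_id, data):
        self.buffer[data_id] = data
        return "ack"              # acknowledge receipt only; chunk is unchanged

    def apply(self, data_id):
        """Called later, once a write request names this data."""
        self.chunk += self.buffer.pop(data_id)


replicas = [BufferingReplica() for _ in range(3)]
acks = [r.push_data("d-17", b"payload") for r in replicas]
unchanged = all(len(r.chunk) == 0 for r in replicas)  # nothing applied yet
```

Separating data flow from control flow lets the bulky data move whenever the network allows, while ordering is decided by the small write request sent to the primary.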

Example mutation
- Client tells primary
  - Write request
  - Identifies sent data
- Primary replica
  - Assigns serial #s
  - Writes data locally (in serial order)

Example mutation
- Primary replica
  - Forwards request to secondaries
- Secondary replicas
  - Write data locally (in serial order)

Example mutation
- Secondary replicas
  - Ack primary
  - Like “votes”

Example mutation
- Primary replica
  - Ack client
  - Like a commit

Example mutation
- Errors?
  - Require consensus: the write succeeds only if every replica acks
  - Otherwise, the client just retries
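
A sketch of the commit rule and the retry loop. The primary reports success only when every secondary has acknowledged (a unanimous "vote"). Otherwise the client simply retries the whole mutation, with no separate consensus protocol. The ack outcomes are simulated, and the function names and `max_retries` limit are hypothetical.

```python
def primary_write(secondaries_ok):
    """Primary's view of one mutation: success only if every secondary acks.

    secondaries_ok: list of booleans, one per secondary (True = applied, acked)
    """
    return all(secondaries_ok)


def client_write(attempt_results, max_retries=3):
    """Client retries the whole mutation until the primary reports success.

    attempt_results: per attempt, the secondaries' ack outcomes (simulated)
    Returns the number of attempts used, or None if all retries failed.
    """
    for attempt, outcomes in enumerate(attempt_results[:max_retries], start=1):
        if primary_write(outcomes):
            return attempt  # like a commit: all replicas applied it
    return None


# First attempt: one secondary fails; second attempt: all ack.
used = client_write([[True, False], [True, True]])
```

Until a retry succeeds, the failed attempt may leave the region different across replicas. GFS tolerates this rather than paying for a full agreement protocol on every write.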

Master namespace management
- Pathnames are used for naming, but there is no per-directory data structure (no directory files)
- No aliases (hard or symbolic links)
- One “flat” lookup table from full pathnames to meta-data, stored with prefix compression
- Locking
  - To create /home/user/alex/foo: r-lock /home, /home/user, /home/user/alex; w-lock /home/user/alex/foo
  - Can concurrently create /home/user/alex/bar since /home/user/alex is not w-locked itself
  - Deadlock prevented by careful ordering of lock acquisition (by level in the namespace tree, then lexicographically)
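
The locking rule can be sketched as a function that lists the locks an operation needs: read locks on every ancestor path and a read or write lock on the full path, sorted into one fixed global order so no two operations can deadlock. The function names are hypothetical, and real lock acquisition is left out. The example checks that creating `foo` and `bar` in the same directory do not conflict, while a write lock on the directory itself would.

```python
def lock_set(path, leaf_mode="w"):
    """Locks needed to operate on `path`: read locks on every ancestor
    directory, and `leaf_mode` ('r' or 'w') on the full path itself.
    Returned sorted by depth, then lexicographically, as a fixed global order."""
    parts = path.strip("/").split("/")
    prefixes = ["/" + "/".join(parts[:i]) for i in range(1, len(parts) + 1)]
    locks = [(p, "r") for p in prefixes[:-1]] + [(prefixes[-1], leaf_mode)]
    return sorted(locks, key=lambda lk: (lk[0].count("/"), lk[0]))


def conflicts(locks_a, locks_b):
    """Two operations conflict if they lock the same path and either lock is 'w'."""
    held = dict(locks_a)
    return any(p in held and "w" in (held[p], m) for p, m in locks_b)


foo = lock_set("/home/user/alex/foo")
bar = lock_set("/home/user/alex/bar")
dir_write = lock_set("/home/user/alex", leaf_mode="w")
```

The two creates share only read locks, so they can proceed in parallel. An operation holding a write lock on `/home/user/alex` would block both.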
