a1f7f0bf8f4490fb0dd64c524cdbba39.ppt

- Number of slides: 20

OSG AuthZ components
Dane Skow, Gabriele Carcassi

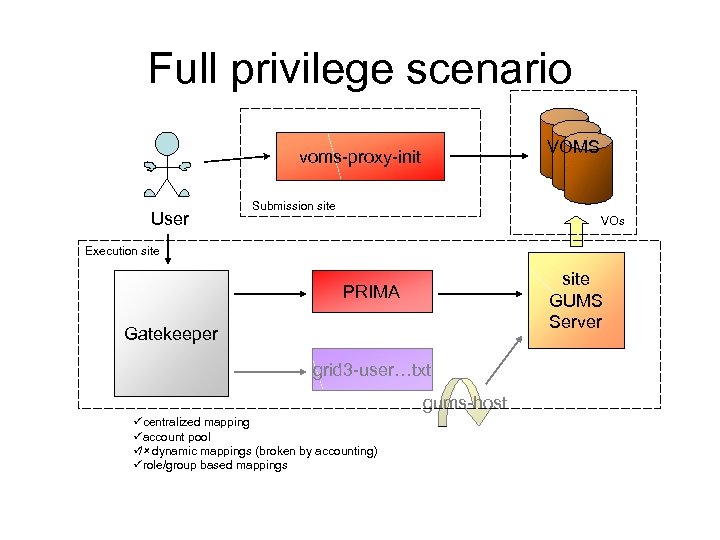

Full privilege scenario
[Diagram labels: VOMS, voms-proxy-init, User, Submission site, VOs, Execution site, GUMS Server, PRIMA, Gatekeeper, gums-host, grid3-user…txt]
✓ centralized mapping
✓ account pool
✓/✗ dynamic mappings (broken by accounting)
✓ role/group based mappings

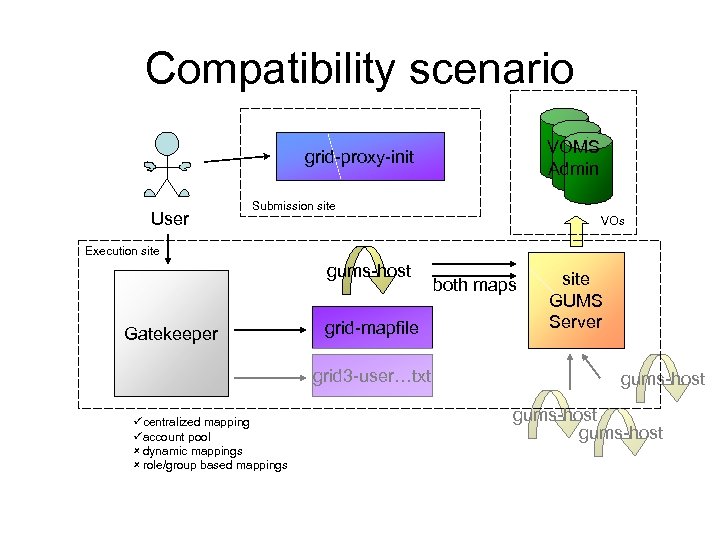

Compatibility scenario
[Diagram labels: VOMS Admin, grid-proxy-init, User, Submission site, VOs, Execution site, Gatekeeper, grid-mapfile, grid3-user…txt, site GUMS Server, gums-host, both maps]
✓ centralized mapping
✓ account pool
✗ dynamic mappings
✗ role/group based mappings

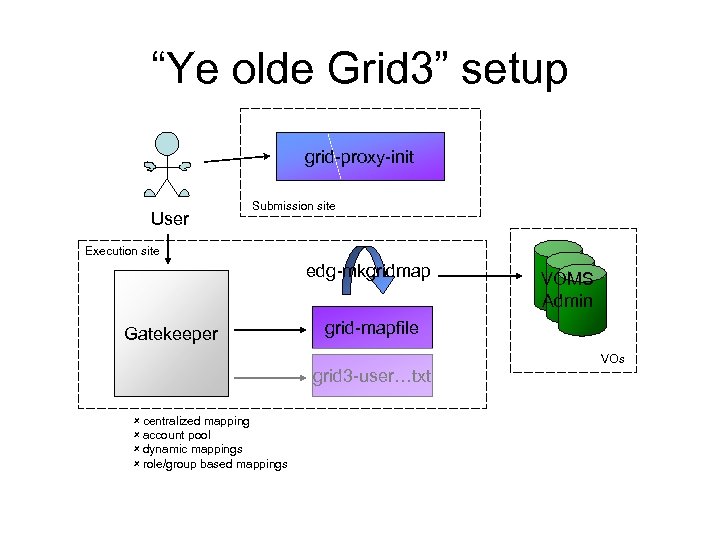

“Ye olde Grid3” setup
[Diagram labels: grid-proxy-init, User, Submission site, Execution site, edg-mkgridmap, Gatekeeper, grid-mapfile, grid3-user…txt, VOMS Admin, VOs]
✗ centralized mapping
✗ account pool
✗ dynamic mappings
✗ role/group based mappings

PRIMA module
• It’s a C library that implements the gatekeeper callout
  – Gets the credentials
  – Validates the certificate and attributes
  – Formats a SAML message and sends it to GUMS
  – Parses the response
  – Returns the uid to the gatekeeper
• Distributed as part of VDT

Details
• PRIMA sends only the first VOMS FQAN, not the whole list encoded in the certificate. GUMS makes decisions on only one FQAN.
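A minimal sketch of the "first FQAN only" behaviour, assuming the usual VOMS FQAN layout of a group path followed by optional `Role=` and `Capability=` components. The function names and the example FQAN values are hypothetical and are not taken from PRIMA's C code.

```python
from typing import NamedTuple, Optional, Sequence


class Fqan(NamedTuple):
    group: str            # group path, e.g. "/usatlas/production"
    role: Optional[str]   # None when the role is absent or "NULL"


def parse_fqan(fqan: str) -> Fqan:
    """Split a VOMS FQAN into its group path and optional role."""
    group_parts = []
    role = None
    for part in fqan.strip("/").split("/"):
        if part.startswith("Role="):
            value = part[len("Role="):]
            role = None if value == "NULL" else value
        elif part.startswith("Capability="):
            continue  # the capability is ignored in this sketch
        else:
            group_parts.append(part)
    return Fqan("/" + "/".join(group_parts), role)


def primary_fqan(fqans: Sequence[str]) -> Optional[Fqan]:
    """Return only the first FQAN; any later entries are ignored."""
    return parse_fqan(fqans[0]) if fqans else None
```

With this selection rule, a proxy carrying both `/usatlas/production/Role=admin` and `/usatlas` is mapped purely on the first entry, so the order in which the user requests attributes matters.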

Attribute verification
• PRIMA can verify the VOMS attributes, but typically we do not do that
  – In OSG we lack a mechanism to easily distribute the certificates of the VO servers
  – GUMS verifies the user's presence in the VO
    • It periodically downloads the full list of users from the VO server (it has to do that anyway for map generation)
    • This prevents forging a fake VO
    • We foresee disabling this check if attribute verification is done at the gatekeeper end and no maps are needed
  – Should attribute verification be delegated to the server?
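The membership check described above can be sketched as a periodically refreshed cache of the VO member list. This is an illustration only: `VoMembershipCache`, `fetch_members` and the refresh interval are hypothetical names, and the real GUMS code stores the list in its database rather than in memory.

```python
import time


class VoMembershipCache:
    """Caches the full member list of one VO and refreshes it periodically."""

    def __init__(self, fetch_members, max_age_s, clock=time.monotonic):
        self._fetch = fetch_members      # callable returning an iterable of DNs
        self._max_age_s = max_age_s      # refresh interval in seconds
        self._clock = clock              # injectable clock, useful for tests
        self._members = set()
        self._fetched_at = None

    def _refresh_if_stale(self):
        now = self._clock()
        if self._fetched_at is None or now - self._fetched_at >= self._max_age_s:
            self._members = set(self._fetch())
            self._fetched_at = now

    def is_member(self, dn):
        """True if the DN is in the most recently downloaded member list."""
        self._refresh_if_stale()
        return dn in self._members
```

Because the check is made against the list downloaded from the VO server, a proxy that merely claims membership in a VO is rejected unless the server itself lists that DN.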

PRIMA Complaints
• Mainly about the log
  – Error information is unclear (the actual GUMS errors are not passed through the protocol)
  – It lacks a one-line entry with all the information on success (there is one, but it lacks, for example, the FQAN)

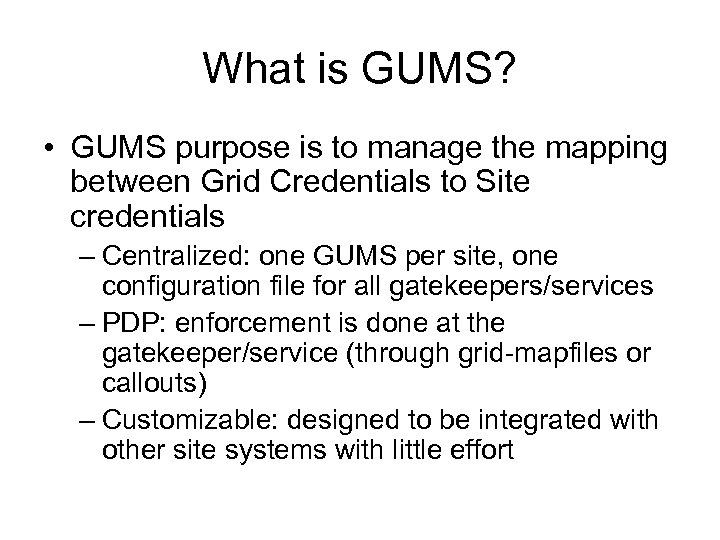

What is GUMS?
• GUMS's purpose is to manage the mapping from grid credentials to site credentials
  – Centralized: one GUMS per site, one configuration file for all gatekeepers/services
  – PDP: enforcement is done at the gatekeeper/service (through grid-mapfiles or callouts)
  – Customizable: designed to be integrated with other site systems with little effort

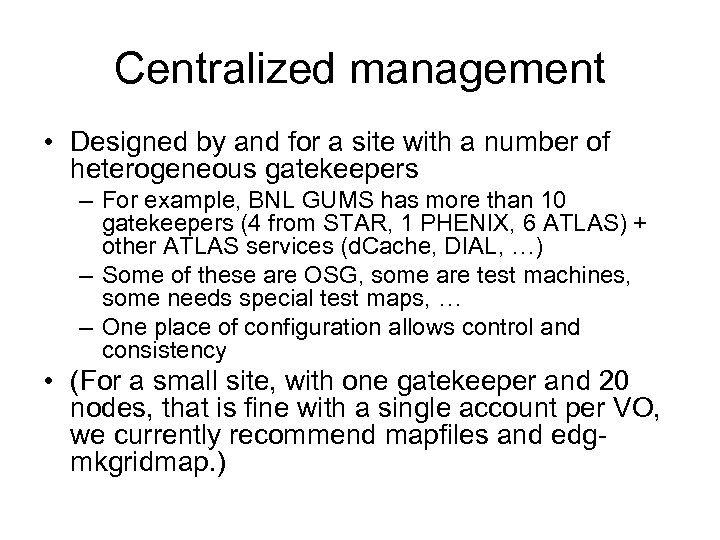

Centralized management
• Designed by and for a site with a number of heterogeneous gatekeepers
  – For example, BNL GUMS has more than 10 gatekeepers (4 from STAR, 1 PHENIX, 6 ATLAS) + other ATLAS services (dCache, DIAL, …)
  – Some of these are OSG, some are test machines, some need special test maps, …
  – One place of configuration allows control and consistency
• (For a small site with one gatekeeper and 20 nodes, for which a single account per VO is fine, we currently recommend mapfiles and edg-mkgridmap.)

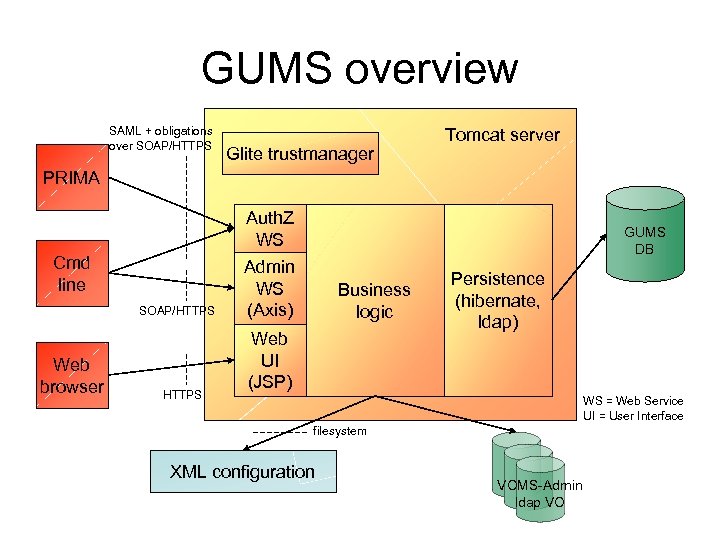

GUMS overview
[Architecture diagram. Clients: PRIMA (SAML + obligations over SOAP/HTTPS), command line (SOAP/HTTPS), web browser (HTTPS). Tomcat server with gLite trustmanager: AuthZ WS, Admin WS (Axis), Web UI (JSP), business logic, persistence (Hibernate, LDAP). Back ends: GUMS DB, XML configuration on the filesystem, VO servers (VOMS-Admin, LDAP, …). WS = Web Service, UI = User Interface.]

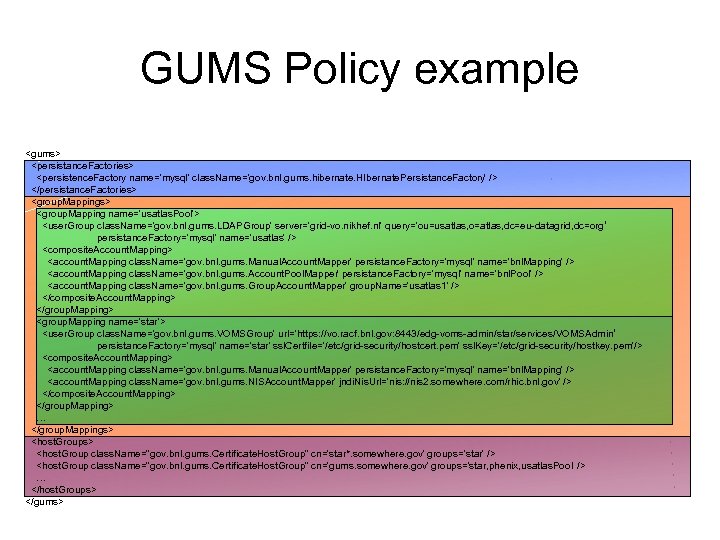

GUMS Policy example

    <gums>
      <persistenceFactories>
        <persistenceFactory name='mysql' className='gov.bnl.gums.hibernate.HibernatePersistenceFactory' />
      </persistenceFactories>
      <groupMappings>
        <groupMapping name='usatlasPool'>
          <userGroup className='gov.bnl.gums.LDAPGroup' server='grid-vo.nikhef.nl'
                     query='ou=usatlas,o=atlas,dc=eu-datagrid,dc=org'
                     persistenceFactory='mysql' name='usatlas' />
          <compositeAccountMapping>
            <accountMapping className='gov.bnl.gums.ManualAccountMapper' persistenceFactory='mysql' name='bnlMapping' />
            <accountMapping className='gov.bnl.gums.AccountPoolMapper' persistenceFactory='mysql' name='bnlPool' />
            <accountMapping className='gov.bnl.gums.GroupAccountMapper' groupName='usatlas1' />
          </compositeAccountMapping>
        </groupMapping>
        <groupMapping name='star'>
          <userGroup className='gov.bnl.gums.VOMSGroup'
                     url='https://vo.racf.bnl.gov:8443/edg-voms-admin/star/services/VOMSAdmin'
                     persistenceFactory='mysql' name='star'
                     sslCertfile='/etc/grid-security/hostcert.pem' sslKey='/etc/grid-security/hostkey.pem' />
          <compositeAccountMapping>
            <accountMapping className='gov.bnl.gums.ManualAccountMapper' persistenceFactory='mysql' name='bnlMapping' />
            <accountMapping className='gov.bnl.gums.NISAccountMapper' jndiNisUrl='nis://nis2.somewhere.com/rhic.bnl.gov' />
          </compositeAccountMapping>
        </groupMapping>
        …
      </groupMappings>
      <hostGroups>
        <hostGroup className="gov.bnl.gums.CertificateHostGroup" cn='star*.somewhere.gov' groups='star' />
        <hostGroup className="gov.bnl.gums.CertificateHostGroup" cn='gums.somewhere.gov' groups='star,phenix,usatlasPool' />
        …
      </hostGroups>
    </gums>
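Each group mapping in the policy lists several account mappers inside a composite mapping. The sketch below assumes that the composite tries its mappers in the listed order and returns the first account found; that ordering rule is an assumption of this example, and the Python functions only mimic the roles of the manual, pool and group mappers rather than reproducing the GUMS Java classes.

```python
from typing import Callable, Dict, List, Optional

# A mapper takes a certificate DN and returns a local account, or None.
AccountMapper = Callable[[str], Optional[str]]


def manual_mapper(table: Dict[str, str]) -> AccountMapper:
    """Fixed DN -> account table maintained by hand."""
    return lambda dn: table.get(dn)


def pool_mapper(free_accounts: List[str], assigned: Dict[str, str]) -> AccountMapper:
    """Hands out accounts from a pool and remembers each assignment."""
    def map_dn(dn: str) -> Optional[str]:
        if dn not in assigned and free_accounts:
            assigned[dn] = free_accounts.pop(0)
        return assigned.get(dn)
    return map_dn


def group_mapper(account: str) -> AccountMapper:
    """Maps every member of the group to one shared account."""
    return lambda dn: account


def composite_mapper(mappers: List[AccountMapper]) -> AccountMapper:
    """Tries each mapper in order; the first non-None answer wins."""
    def map_dn(dn: str) -> Optional[str]:
        for mapper in mappers:
            account = mapper(dn)
            if account is not None:
                return account
        return None
    return map_dn
```

Under this reading, the `usatlasPool` group would first honour manual entries, then hand out pool accounts, and fall back to the shared `usatlas1` account only when the pool is exhausted.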

GUMS Authorization
• A GUMS admin can perform any operation through either the web service or the web UI
• A host can only perform read operations (map generation and mapping requests) for itself
• The configuration can be changed through the filesystem only (it is automatically reloaded when changed)
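The first two rules can be written as a single check. This is a sketch under stated assumptions: the operation names, the `is_allowed` function and the way callers are identified are all hypothetical and do not mirror the GUMS implementation.

```python
# Hypothetical names for the two read operations named on the slide.
READ_OPERATIONS = frozenset({"generate_map", "map_request"})


def is_allowed(caller_dn, operation, target_host, admins, host_dns):
    """Decide whether a caller may run an operation concerning target_host.

    admins   -- set of DNs with administrator rights
    host_dns -- dict mapping a host certificate DN to its hostname
    """
    if caller_dn in admins:
        return True  # admins may perform any operation
    caller_host = host_dns.get(caller_dn)
    # A host may only read, and only information about itself.
    return (
        caller_host is not None
        and operation in READ_OPERATIONS
        and caller_host == target_host
    )
```

Configuration changes have no entry here on purpose: according to the slide they are possible through the filesystem only, so no remote caller can trigger them.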

GUMS performance
• The BNL production server gives ~30 req/sec…
  – Not that good
  – It is not the bottleneck right now, as the production gatekeeper can only give ~5 req/sec
• Performance tests show that
  – Overall delay (client-server-client) is ~220 ms
  – The GUMS logic is responsible for up to 20 ms
  – The rest is plain Axis SOAP + SSL
  – It’s not gLite trustmanager’s fault either…
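The figures above imply a simple latency budget, worked out here as a short calculation. The concurrency estimate uses Little's law and assumes that the ~220 ms round trip also holds at the ~30 req/sec load, which the slide does not state.

```python
overall_ms = 220.0          # client-server-client delay from the slide
gums_logic_ms = 20.0        # upper bound attributed to GUMS logic
throughput_rps = 30.0       # BNL production server throughput

# Time left for Axis SOAP + SSL once the GUMS logic is subtracted.
transport_ms = overall_ms - gums_logic_ms

# Fraction of the round trip spent outside the GUMS logic.
transport_share = transport_ms / overall_ms

# Little's law (L = lambda * W): mean number of requests in flight,
# assuming the 220 ms delay applies at 30 req/sec.
requests_in_flight = throughput_rps * overall_ms / 1000.0

if __name__ == "__main__":
    print(f"transport: {transport_ms:.0f} ms ({transport_share:.0%} of the round trip)")
    print(f"requests in flight: {requests_in_flight:.1f}")
```

At least 200 ms of each request, about 91% of the round trip, is therefore spent in the SOAP and SSL layers rather than in the mapping logic.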

GUMS performance
• The JClarens group confirmed this while comparing SOAP with XML-RPC
  – XML-RPC without SSL: 373 req/sec; with SSL: 274 req/sec
  – SOAP without SSL: 218 req/sec; with SSL: 23 req/sec
  – 10 times slower!
• Is it SOAP? Is it the Axis implementation?
• At least GUMS can run on a cluster
  – All state resides in the database, transactions are used, no session transfer is needed, no cluster cache is needed
  – Almost all… the configuration file is on the filesystem and needs to be updated on all machines (at the same time)
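The "10 times slower" remark can be checked directly from the four JClarens rates quoted above. The dictionary layout and the helper name are just conveniences of this example.

```python
# Requests per second as quoted on the slide.
RATES = {
    "XML-RPC": {"plain": 373, "ssl": 274},
    "SOAP": {"plain": 218, "ssl": 23},
}


def ssl_slowdown(protocol: str) -> float:
    """Factor by which enabling SSL reduces throughput for a protocol."""
    rates = RATES[protocol]
    return rates["plain"] / rates["ssl"]


if __name__ == "__main__":
    for name in RATES:
        print(f"{name}: {ssl_slowdown(name):.2f}x slower with SSL")
```

SSL costs XML-RPC a factor of about 1.4, but costs SOAP a factor of about 9.5, which is why the slide asks whether SOAP itself or the Axis implementation is responsible.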

GUMS Complaints
• The configuration file is difficult
  – It usually takes people a few tries
  – We should simplify it
  – We should probably have ways to “share” parts of it (contact a location to get standard OSG group definitions?)

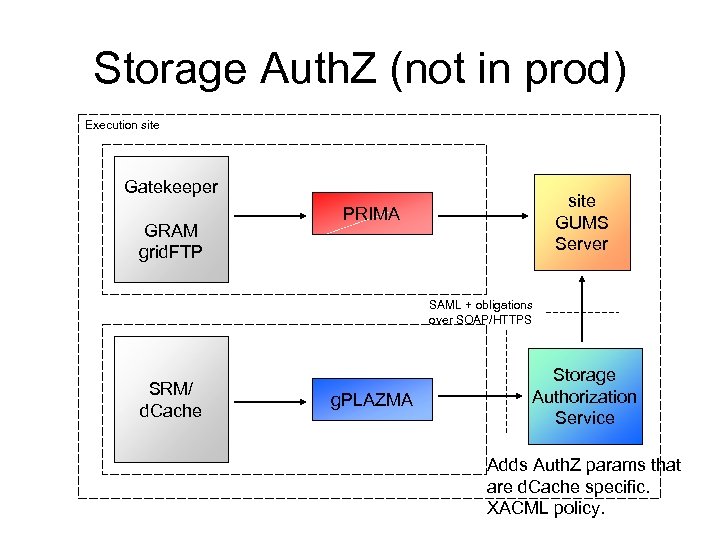

Storage AuthZ (not in prod)
[Diagram labels: Execution site, Gatekeeper, GRAM, gridFTP, site GUMS Server, PRIMA, SAML + obligations over SOAP/HTTPS, SRM/dCache, gPlazma, Storage Authorization Service (adds AuthZ params that are dCache specific), XACML policy]

Storage AuthZ
• gPlazma is dCache's authorization infrastructure, which can be set to contact the Storage Authorization Service
  – Distributed as part of dCache, beta quality
• The Storage AuthZ Service speaks the same SAML that GUMS does, and is configured with an XACML policy
  – Contacts GUMS to retrieve the mapping
  – Adds other AuthZ parameters (e.g. gid, user home path, …)
  – Prototype level
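The two-step behaviour of the Storage AuthZ Service (ask GUMS for the mapping, then add storage-specific parameters) can be sketched as follows. The function, the `gums_lookup` callable and the attribute table are hypothetical; in particular, a plain dictionary stands in for the XACML policy that the real service is configured with.

```python
def storage_authorize(dn, fqan, gums_lookup, account_attrs):
    """Return the storage authorization record for a user, or None.

    gums_lookup   -- callable (dn, fqan) -> local username or None,
                     standing in for the SAML request to GUMS
    account_attrs -- dict username -> extra parameters such as gid and
                     home path, standing in for the XACML policy
    """
    username = gums_lookup(dn, fqan)
    if username is None:
        return None  # GUMS has no mapping: deny
    attrs = account_attrs.get(username)
    if attrs is None:
        return None  # mapped, but no storage parameters defined: deny
    # Combine the GUMS mapping with the storage-specific parameters.
    return {"username": username, **attrs}
```

Keeping the mapping in GUMS and only the storage-specific parameters in the second service means both the gatekeeper and the storage element resolve a given user to the same account.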

Other issues: maps
• GUMS is able to generate grid-mapfiles and also an inverse accounting map used by OSG accounting
  – We want to move away from them: creating a map means exploring the whole policy, which breaks dynamic account mapping (i.e. for a pool, we have to assign accounts to everybody)
• Assumption: we believe that inverse maps (uid -> DN) are not needed
  – For example, in accounting what you really need is a history of what uid was assigned to what DN. That changes with time. It’s better handled by looking at log files.
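The point about accounting can be illustrated with a time-indexed history instead of a static inverse map. This is only a sketch: `AssignmentHistory`, its methods and the numeric timestamps are hypothetical, and in practice the records would be extracted from log files.

```python
import bisect
from collections import defaultdict


class AssignmentHistory:
    """Records which DN held each uid over time."""

    def __init__(self):
        # uid -> list of (timestamp, dn), kept sorted by timestamp
        self._by_uid = defaultdict(list)

    def record(self, timestamp, uid, dn):
        """Note that `uid` was assigned to `dn` from `timestamp` onward."""
        bisect.insort(self._by_uid[uid], (timestamp, dn))

    def dn_at(self, uid, timestamp):
        """Return the DN that held `uid` at `timestamp`, or None."""
        owner = None
        for assigned_at, dn in self._by_uid.get(uid, []):
            if assigned_at > timestamp:
                break
            owner = dn
        return owner
```

A static uid -> DN map can answer only "who holds this account now", whereas a job that ran last week has to be charged to whoever held the pool account at that time.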

Conclusions
• GUMS and PRIMA are deployed in production on a number of OSG sites
• The Privilege project depends on the following formats:
  – VOMS proxy format (PRIMA)
  – AuthZ request: SAML + obligations (everything)
