4a6aa192d02daba6b0d5ada8422ea408.ppt

- Number of slides: 16

Oracle for Physics: Services and Support Levels

physics-database.support@cern.ch

Maria Girone, IT-ADC, 6 April 2005

AAM, 6 April 2005: Physics Database Services and Support Levels

Outline

- Database Services for Physics at CERN
- Scalable service for the LHC
- Update on service in 2005
- Deployment of physics applications
- Conclusions

Database Services for Physics

- Mandate
  - Coordination of the deployment of physics database applications
  - Administration of the physics databases in co-operation with the experiments or grid deployment teams
  - Consultancy for application design, development and tuning
- Organized the Developers Database Workshop in January 2005
  - Involvement in the 3D project and LCG Service Challenges
- Provide database services for LHC and non-LHC experiments
  - Grid File Catalogs (RLS replacement expected in a few months)
  - ~50 dedicated Linux disk servers
  - Sun Cluster (PDB01)
    - Public 2-node cluster for applications related to bookkeeping, file transfer, physics production processing, on-line integration, detector construction and calibration

Challenges in 2005

- Service requirements from the experiments will increase in preparation for LHC start-up
  - Requirements are still uncertain in some areas
  - Need to plan for a scalable service
- The current infrastructure, based on a Sun cluster, is already insufficient to achieve the required performance and application isolation
  - A Linux-based solution with Oracle Real Application Clusters (RAC) looks promising
  - Need to provide guaranteed resources to key applications
    - Identify them together with the experiments
    - Deploy them only after a validation phase

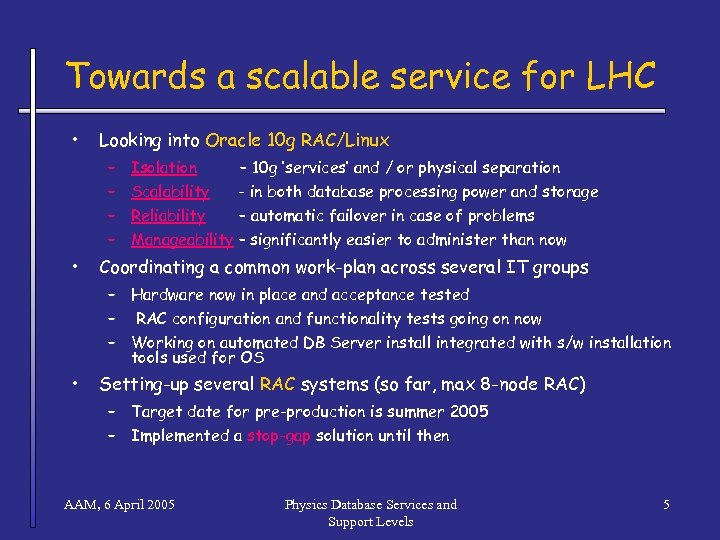

Towards a scalable service for LHC

- Looking into Oracle 10g RAC/Linux
  - Isolation: 10g 'services' and/or physical separation
  - Scalability: in both database processing power and storage
  - Reliability: automatic failover in case of problems
  - Manageability: significantly easier to administer than now
- Coordinating a common work plan across several IT groups
  - Hardware now in place and acceptance-tested
  - RAC configuration and functionality tests under way
  - Working on an automated DB server installation, integrated with the software installation tools used for the OS
- Setting up several RAC systems (so far, max 8-node RAC)
  - Target date for pre-production is summer 2005
  - Implemented a stop-gap solution until then

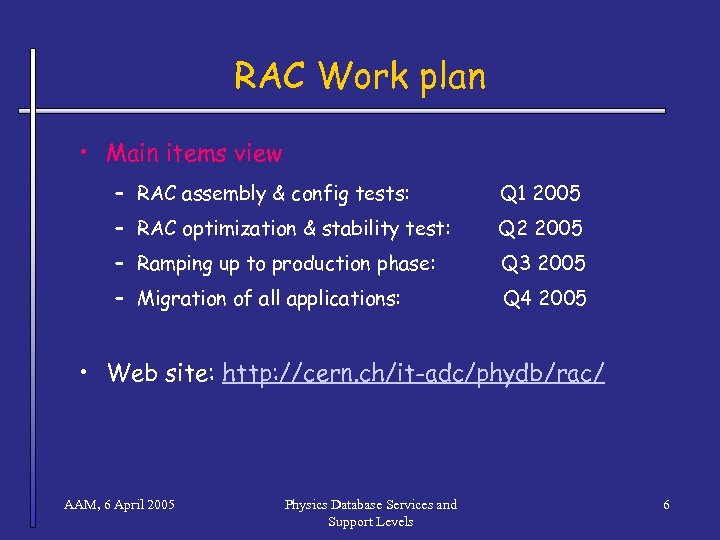

RAC Work plan

- Main items view
  - RAC assembly & config tests: Q1 2005
  - RAC optimization & stability test: Q2 2005
  - Ramping up to production phase: Q3 2005
  - Migration of all applications: Q4 2005
- Web site: http://cern.ch/it-adc/phydb/rac/

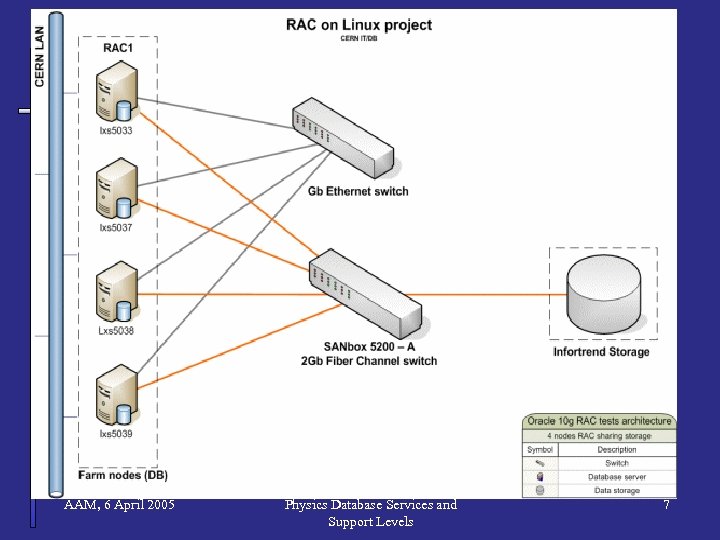


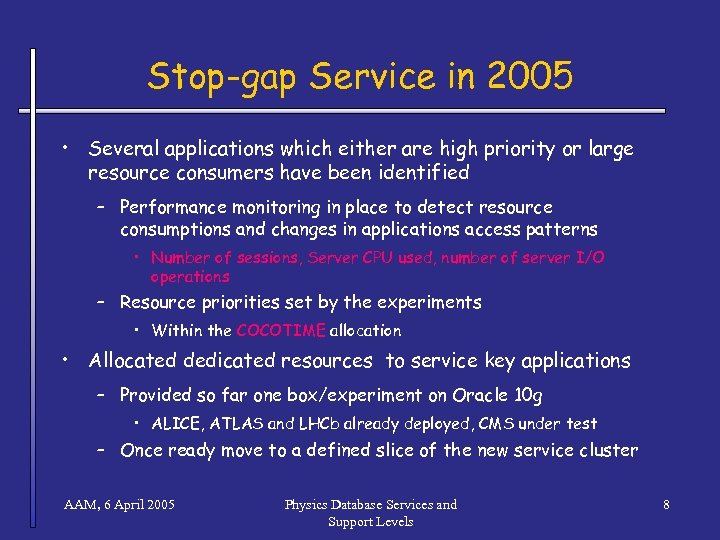

Stop-gap Service in 2005

- Several applications that are either high priority or large resource consumers have been identified
  - Performance monitoring is in place to detect resource consumption and changes in application access patterns
    - Number of sessions, server CPU used, number of server I/O operations
  - Resource priorities set by the experiments
    - Within the COCOTIME allocation
- Allocated dedicated resources to service key applications
  - So far, provided one box per experiment on Oracle 10g
    - ALICE, ATLAS and LHCb already deployed, CMS under test
  - Once ready, move to a defined slice of the new service cluster
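
The monitoring above compares per-application counters (number of sessions, server CPU used, server I/O operations) over time. A minimal Python sketch of flagging a change in access pattern, assuming hypothetical per-interval snapshots and an arbitrary factor-of-2 growth threshold; neither the data structure nor the threshold comes from the slides.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class UsageSnapshot:
    """Hypothetical per-application usage counters for one monitoring interval."""
    sessions: int
    cpu_seconds: float
    io_operations: int


def changed_metrics(baseline: UsageSnapshot, current: UsageSnapshot,
                    factor: float = 2.0) -> list[str]:
    """Return the names of metrics that grew by more than `factor` over the baseline.

    The default factor of 2 is an illustrative assumption, not a documented threshold.
    """
    flagged = []
    for name in ("sessions", "cpu_seconds", "io_operations"):
        before = getattr(baseline, name)
        after = getattr(current, name)
        # A metric that was zero and is now non-zero also counts as a change.
        if (before == 0 and after > 0) or (before > 0 and after > factor * before):
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    week1 = UsageSnapshot(sessions=1200, cpu_seconds=3600.0, io_operations=50_000)
    week2 = UsageSnapshot(sessions=1300, cpu_seconds=9000.0, io_operations=52_000)
    print(changed_metrics(week1, week2))  # ['cpu_seconds']
```

Using a relative factor, rather than absolute limits, lets the same check work for small and large applications alike.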

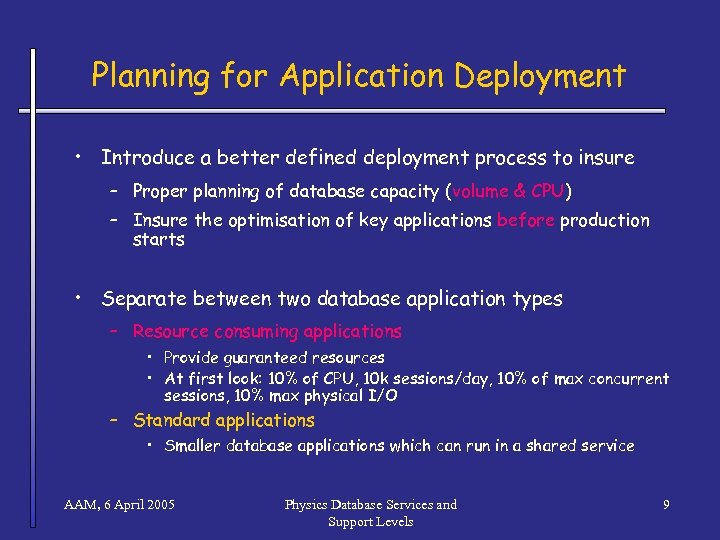

Planning for Application Deployment

- Introduce a better-defined deployment process to ensure
  - Proper planning of database capacity (volume & CPU)
  - Optimisation of key applications before production starts
- Distinguish between two types of database application
  - Resource-consuming applications
    - Provide guaranteed resources
    - First-look thresholds: 10% of CPU, 10k sessions/day, 10% of max concurrent sessions, 10% of max physical I/O
  - Standard applications
    - Smaller database applications that can run in a shared service
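
The two-way split can be expressed as a simple classification. The following is a minimal Python sketch that reads the first-look figures as "reaching any one of the four limits makes an application resource-consuming". That "any one, inclusive" reading and the field names are assumptions, because the slide only lists the figures.

```python
from dataclasses import dataclass


@dataclass
class AppUsage:
    """Measured or projected usage of one application (hypothetical field names)."""
    cpu_share: float          # fraction of server CPU, 0.0 to 1.0
    sessions_per_day: int
    concurrent_share: float   # fraction of maximum concurrent sessions
    physical_io_share: float  # fraction of maximum physical I/O


# First-look limits from the slide: 10% CPU, 10k sessions/day,
# 10% of max concurrent sessions, 10% of max physical I/O.
CPU_LIMIT = 0.10
SESSIONS_PER_DAY_LIMIT = 10_000
CONCURRENT_LIMIT = 0.10
PHYSICAL_IO_LIMIT = 0.10


def classify(app: AppUsage) -> str:
    """Return 'resource-consuming' if any limit is reached, else 'standard'.

    Treating a single reached limit as sufficient is an assumption.
    """
    exceeds = (
        app.cpu_share >= CPU_LIMIT
        or app.sessions_per_day >= SESSIONS_PER_DAY_LIMIT
        or app.concurrent_share >= CONCURRENT_LIMIT
        or app.physical_io_share >= PHYSICAL_IO_LIMIT
    )
    return "resource-consuming" if exceeds else "standard"


if __name__ == "__main__":
    print(classify(AppUsage(0.02, 15_000, 0.01, 0.03)))  # resource-consuming
    print(classify(AppUsage(0.02, 800, 0.01, 0.03)))     # standard
```

Under this reading, a light application that opens many short sessions still ends up in the guaranteed-resources class.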

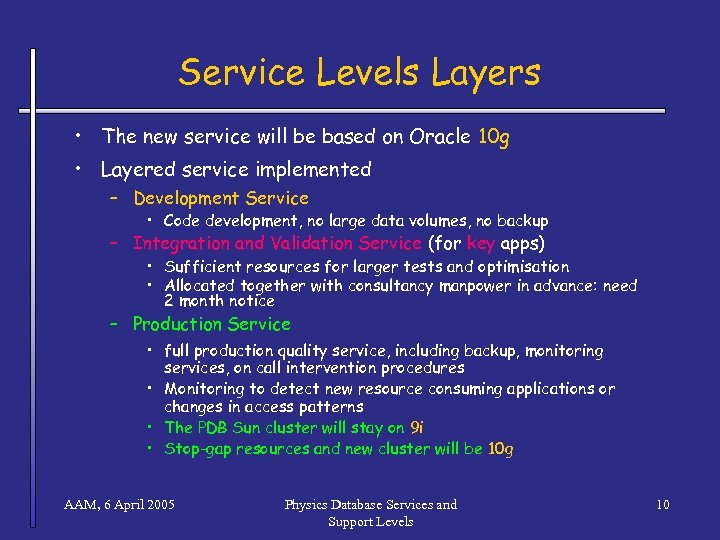

Service Level Layers

- The new service will be based on Oracle 10g
- Layered service implemented
  - Development Service
    - Code development, no large data volumes, no backup
  - Integration and Validation Service (for key apps)
    - Sufficient resources for larger tests and optimisation
    - Allocated in advance, together with consultancy manpower: requires 2 months' notice
  - Production Service
    - Full production-quality service, including backup, monitoring services and on-call intervention procedures
    - Monitoring to detect new resource-consuming applications or changes in access patterns
- The PDB Sun cluster will stay on 9i
- Stop-gap resources and the new cluster will be on 10g
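
The 2-month notice for the Integration and Validation Service can be checked mechanically. The following is a minimal Python sketch. It assumes the notice is counted in calendar months from the request date to the start of the validation slot, but the slide does not say how it is counted. The function names are hypothetical.

```python
from datetime import date

NOTICE_MONTHS = 2  # "need 2 month notice" for the validation service


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping the day to the month's end."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    # Days in the target month: first day of the following month minus first day.
    if month == 12:
        next_first = date(year + 1, 1, 1)
    else:
        next_first = date(year, month + 1, 1)
    last_day = (next_first - date(year, month, 1)).days
    return date(year, month, min(d.day, last_day))


def enough_notice(request: date, validation_start: date,
                  months: int = NOTICE_MONTHS) -> bool:
    """True if the validation slot starts at least `months` after the request."""
    return validation_start >= add_months(request, months)


if __name__ == "__main__":
    print(enough_notice(date(2005, 4, 6), date(2005, 6, 1)))  # False
    print(enough_notice(date(2005, 4, 6), date(2005, 6, 6)))  # True
```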

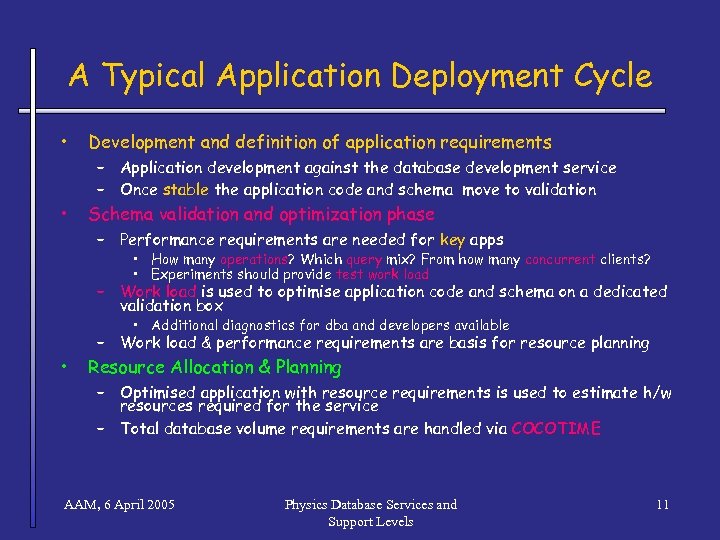

A Typical Application Deployment Cycle

- Development and definition of application requirements
  - Application development against the database development service
  - Once stable, the application code and schema move to validation
- Schema validation and optimization phase
  - Performance requirements are needed for key apps
    - How many operations? Which query mix? From how many concurrent clients?
  - Experiments should provide a test workload
    - The workload is used to optimise application code and schema on a dedicated validation box
    - Additional diagnostics available for DBAs and developers
  - Workload & performance requirements are the basis for resource planning
- Resource allocation & planning
  - The optimised application, with its resource requirements, is used to estimate the h/w resources required for the service
  - Total database volume requirements are handled via COCOTIME
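
The cycle can be modelled as a small state machine in which each phase has an exit condition. This is a minimal Python sketch. The condition flags are hypothetical paraphrases of the slide, and the final PRODUCTION state is an assumption based on the Production Service layer.

```python
from __future__ import annotations

from enum import Enum


class Phase(Enum):
    DEVELOPMENT = 1
    VALIDATION = 2
    RESOURCE_PLANNING = 3
    PRODUCTION = 4


# Condition that must hold before leaving each phase (hypothetical flag names,
# paraphrased from the deployment-cycle slide).
EXIT_CONDITION = {
    Phase.DEVELOPMENT: "code_and_schema_stable",
    Phase.VALIDATION: "workload_and_performance_requirements",
    Phase.RESOURCE_PLANNING: "hardware_estimate_done",
}


def advance(phase: Phase, satisfied: set[str]) -> Phase:
    """Move to the next phase if the current phase's exit condition is met."""
    if phase is Phase.PRODUCTION:
        return phase  # final phase; ongoing monitoring takes over
    required = EXIT_CONDITION[phase]
    if required not in satisfied:
        raise ValueError(f"cannot leave {phase.name}: missing {required}")
    return Phase(phase.value + 1)


if __name__ == "__main__":
    p = advance(Phase.DEVELOPMENT, {"code_and_schema_stable"})
    print(p)  # Phase.VALIDATION
```

Raising an error instead of silently staying in place makes a skipped validation step visible to whoever drives the process.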

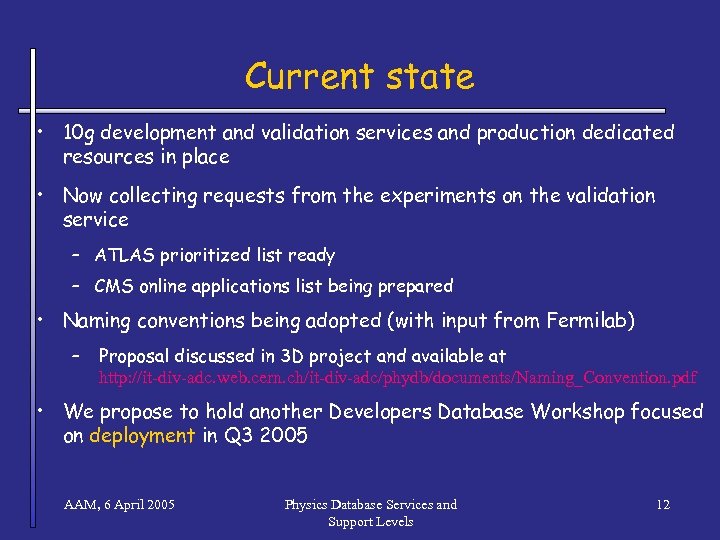

Current state

- 10g development and validation services and dedicated production resources in place
- Now collecting requests from the experiments for the validation service
  - ATLAS prioritized list ready
  - CMS online applications list being prepared
- Naming conventions being adopted (with input from Fermilab)
  - Proposal discussed in the 3D project and available at http://it-div-adc.web.cern.ch/it-div-adc/phydb/documents/Naming_Convention.pdf
- We propose to hold another Developers Database Workshop focused on deployment in Q3 2005

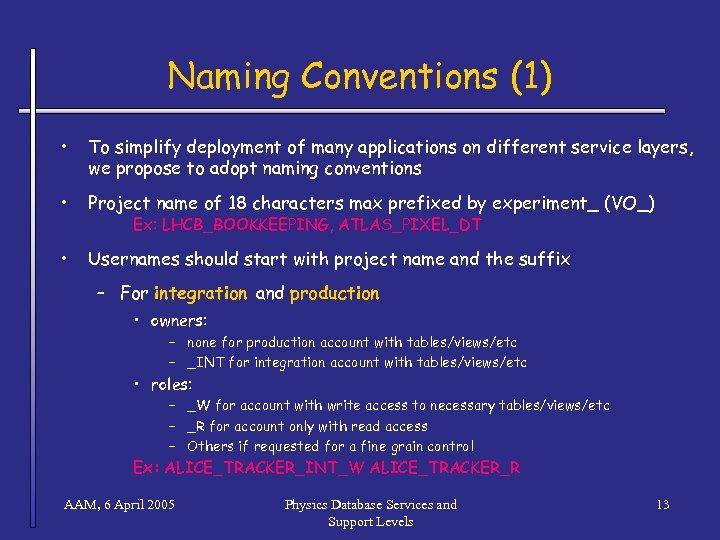

Naming Conventions (1)

- To simplify deployment of many applications on different service layers, we propose to adopt naming conventions
- Project name of 18 characters max, prefixed by experiment_ (VO_)
- Usernames start with the project name, followed by a suffix (Ex: LHCB_BOOKKEEPING, ATLAS_PIXEL_DT)
  - For integration and production:
    - Owners:
      - No suffix for the production account owning tables/views/etc.
      - _INT for the integration account owning tables/views/etc.
    - Roles:
      - _W for an account with write access to the necessary tables/views/etc.
      - _R for an account with read-only access
      - Others if requested, for fine-grained control
  - Ex: ALICE_TRACKER_INT_W, ALICE_TRACKER_R
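
The account-naming rules compose mechanically: project name, then an owner suffix for the service layer, then an optional role suffix. The following is a minimal Python sketch. The helper names are hypothetical, and there are three further assumptions. The 18-character limit is taken to include the experiment prefix. Names are upper-cased. Only A-Z, 0-9 and _ are accepted.

```python
import re

MAX_PROJECT_LEN = 18  # assumed to include the experiment prefix

# Owner-account suffix per service layer, and role-account suffixes.
OWNER_SUFFIX = {"production": "", "integration": "_INT"}
ROLE_SUFFIX = {"owner": "", "write": "_W", "read": "_R"}

# Assumed character set: upper-case letters, digits, underscore.
_VALID = re.compile(r"[A-Z][A-Z0-9_]*")


def project_name(experiment: str, project: str) -> str:
    """Return EXPERIMENT_PROJECT, upper-cased and checked against the length limit."""
    name = f"{experiment}_{project}".upper()
    if not _VALID.fullmatch(name):
        raise ValueError(f"invalid characters in {name!r}")
    if len(name) > MAX_PROJECT_LEN:
        raise ValueError(f"{name!r} is longer than {MAX_PROJECT_LEN} characters")
    return name


def account_name(project: str, layer: str, role: str = "owner") -> str:
    """Compose an account name: project + layer suffix + role suffix."""
    return project + OWNER_SUFFIX[layer] + ROLE_SUFFIX[role]


if __name__ == "__main__":
    proj = project_name("alice", "tracker")
    print(account_name(proj, "integration", "write"))  # ALICE_TRACKER_INT_W
    print(account_name(proj, "production", "read"))    # ALICE_TRACKER_R
```

Keeping the suffix tables as data means that the extra roles mentioned under "Others if requested" could be added without changing the functions.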

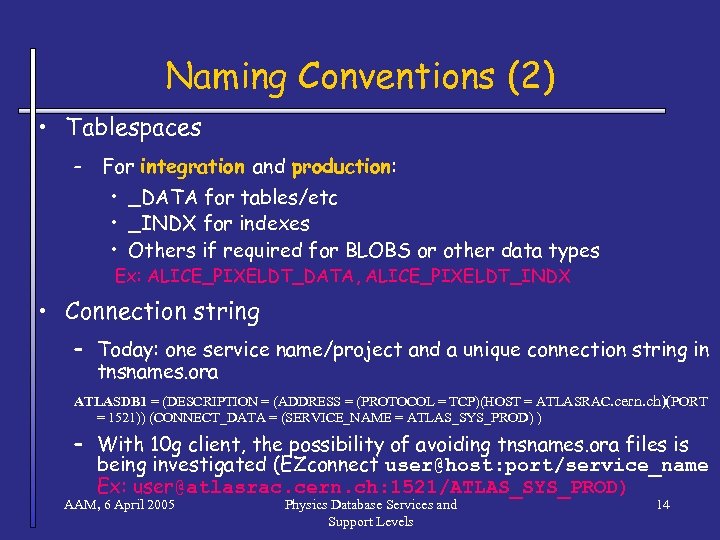

Naming Conventions (2)

- Tablespaces
  - For integration and production:
    - _DATA for tables/etc.
    - _INDX for indexes
    - Others if required for BLOBs or other data types
  - Ex: ALICE_PIXELDT_DATA, ALICE_PIXELDT_INDX
- Connection string
  - Today: one service name per project and a unique connection string in tnsnames.ora, e.g. `ATLASDB1 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = ATLASRAC.cern.ch)(PORT = 1521)) (CONNECT_DATA = (SERVICE_NAME = ATLAS_SYS_PROD)))`
  - With the 10g client, the possibility of avoiding tnsnames.ora files is being investigated (EZconnect: `user@host:port/service_name`, e.g. `user@atlasrac.cern.ch:1521/ATLAS_SYS_PROD`)
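
The tablespace and connection-string conventions can both be generated from the project and service names. The following is a minimal Python sketch. The helper names are hypothetical and `user` is a placeholder. The output formats mirror the slide's examples: the single-address tnsnames.ora entry and the Easy Connect form `user@host:port/service_name`.

```python
from __future__ import annotations

TABLESPACE_SUFFIXES = ("_DATA", "_INDX")  # tables, indexes


def tablespace_names(project: str) -> list[str]:
    """Default tablespaces for a project: one for tables, one for indexes."""
    return [project + suffix for suffix in TABLESPACE_SUFFIXES]


def tns_entry(alias: str, host: str, port: int, service: str) -> str:
    """Format a single-address tnsnames.ora entry with balanced parentheses."""
    return (
        f"{alias} = (DESCRIPTION = "
        f"(ADDRESS = (PROTOCOL = TCP)(HOST = {host})(PORT = {port})) "
        f"(CONNECT_DATA = (SERVICE_NAME = {service})))"
    )


def ezconnect(user: str, host: str, port: int, service: str) -> str:
    """Format an Easy Connect identifier of the form user@host:port/service_name."""
    return f"{user}@{host}:{port}/{service}"


if __name__ == "__main__":
    print(tablespace_names("ALICE_PIXELDT"))
    print(tns_entry("ATLASDB1", "ATLASRAC.cern.ch", 1521, "ATLAS_SYS_PROD"))
    print(ezconnect("user", "atlasrac.cern.ch", 1521, "ATLAS_SYS_PROD"))
```

Because both strings come from the same host, port and service values, a `tnsnames.ora` entry and its Easy Connect equivalent cannot drift apart.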

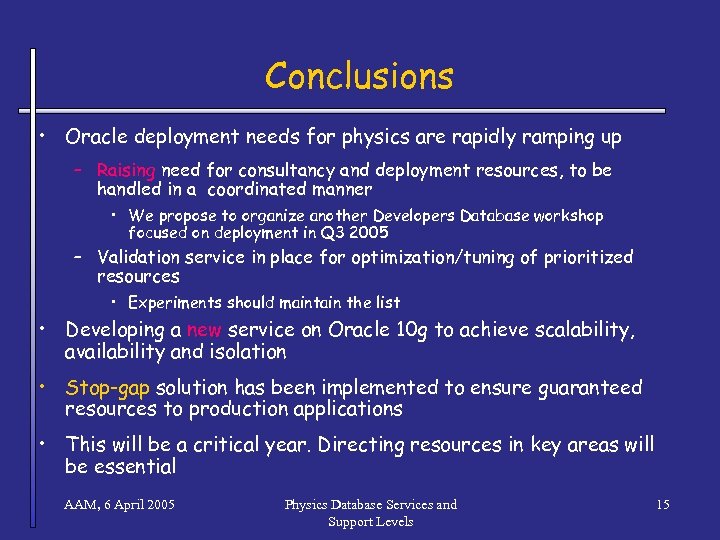

Conclusions

- Oracle deployment needs for physics are rapidly ramping up
  - Rising need for consultancy and deployment resources, to be handled in a coordinated manner
- We propose to organize another Developers Database Workshop focused on deployment in Q3 2005
  - Validation service in place for optimization/tuning of prioritized resources
    - Experiments should maintain the list
- Developing a new service on Oracle 10g to achieve scalability, availability and isolation
- A stop-gap solution has been implemented to ensure guaranteed resources for production applications
- This will be a critical year: directing resources to key areas will be essential

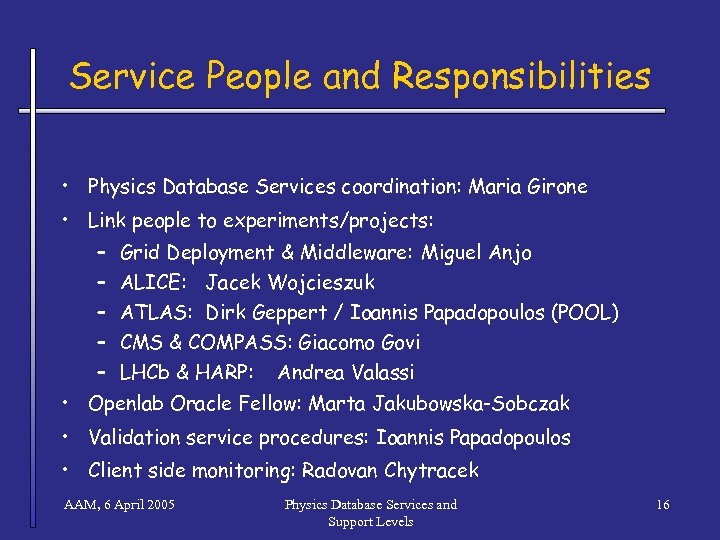

Service People and Responsibilities

- Physics Database Services coordination: Maria Girone
- Link people to experiments/projects:
  - Grid Deployment & Middleware: Miguel Anjo
  - ALICE: Jacek Wojcieszuk
  - ATLAS: Dirk Geppert / Ioannis Papadopoulos (POOL)
  - CMS & COMPASS: Giacomo Govi
  - LHCb & HARP: Andrea Valassi
- Openlab Oracle Fellow: Marta Jakubowska-Sobczak
- Validation service procedures: Ioannis Papadopoulos
- Client-side monitoring: Radovan Chytracek
