- Number of slides: 38

Optimizing the Performance of Sparse Matrix-Vector Multiplication
Eun-Jin Im, U. C. Berkeley, 6/13/00

Overview
- Motivation
- Optimization techniques
  - Register Blocking
  - Cache Blocking
  - Multiple Vectors
- Sparsity system
- Related Work
- Contribution
- Conclusion

Motivation: Usage
- Sparse matrix-vector multiplication
- Usage of this operation:
  - Iterative solvers
  - Explicit methods
  - Eigenvalue and singular value problems
- Applications in structural modeling, fluid dynamics, document retrieval (Latent Semantic Indexing), and many other simulation areas

Motivation: Performance (1)
- Matrix-vector multiplication (BLAS 2) is slower than matrix-matrix multiplication (BLAS 3).
- For example, on a 167 MHz UltraSPARC I:
  - Vendor-optimized matrix-vector multiplication: 57 Mflops
  - Vendor-optimized matrix-matrix multiplication: 185 Mflops
- The reason: matrix-vector multiplication has a lower ratio of floating-point operations to memory operations.
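A rough operation count makes this ratio concrete. Assuming an n x n dense matrix, and counting each matrix or vector word as moved between memory and the processor exactly once (an idealized lower bound on traffic, not a measurement from this study):

```latex
\begin{aligned}
\text{GEMV } (y \leftarrow y + Ax):&\quad \frac{2n^2 \text{ flops}}{n^2 + 3n \text{ words}} \approx 2 \\
\text{GEMM } (C \leftarrow C + AB):&\quad \frac{2n^3 \text{ flops}}{4n^2 \text{ words}} = \frac{n}{2}
\end{aligned}
```

Each matrix entry loaded by matrix-vector multiplication is used in only one multiply-add, while matrix-matrix multiplication can, in principle, reuse each loaded word O(n) times if it is blocked for the memory hierarchy.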

Motivation: Performance (2)
- Sparse matrix operations are slower than dense matrix operations.
- For example, on a 167 MHz UltraSPARC I:
  - Dense matrix-vector multiplication: naïve implementation 38 Mflops; vendor-optimized implementation 57 Mflops
  - Sparse matrix-vector multiplication (naïve implementation): 5.7 to 25 Mflops
- The reason: the indirect data structure leads to inefficient memory accesses.

Motivation: Optimized libraries
- Old approach: hand-optimized libraries
  - Vendor-supplied BLAS, LAPACK
- New approach: automatic generation of libraries
  - PHiPAC (dense linear algebra)
  - ATLAS (dense linear algebra)
  - FFTW (fast Fourier transform)
- Our approach: automatic generation of libraries for sparse matrices
  - Additional dimension: the nonzero structure of sparse matrices

Sparse Matrix Formats
- There is a large number of sparse matrix formats.
- Point-entry: Coordinate format (COO), Compressed Sparse Row (CSR), Compressed Sparse Column (CSC), Sparse Diagonal (DIA), …
- Block-entry: Block Coordinate (BCO), Block Sparse Row (BSR), Block Sparse Column (BSC), Block Diagonal (BDI), Variable Block Compressed Sparse Row (VBR), …
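To make the two most common point-entry formats concrete, here is a minimal C sketch that converts COO triplets into CSR with a counting sort on the row index. The struct `csr_t` and the functions `coo_to_csr` and `csr_free` are hypothetical names, not part of Sparsity or of any library listed above; the sketch assumes 0-based indices and neither sorts nor merges duplicate entries within a row.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical CSR container: the nonzeros of row i are val[row_ptr[i] .. row_ptr[i+1]-1],
   and col_idx holds their column indices at the same positions. */
typedef struct {
    int n_rows, n_cols, nnz;
    int *row_ptr;    /* n_rows + 1 entries */
    int *col_idx;    /* nnz entries */
    double *val;     /* nnz entries */
} csr_t;

void csr_free(csr_t *a)
{
    free(a->row_ptr);
    free(a->col_idx);
    free(a->val);
    a->row_ptr = NULL; a->col_idx = NULL; a->val = NULL;
}

/* Convert 0-based COO triplets (row[k], col[k], v[k]) to CSR.
   Entries keep their input order within a row; duplicates are not merged.
   Returns 0 on success, -1 on allocation failure. */
int coo_to_csr(int n_rows, int n_cols, int nnz, const int *row, const int *col,
               const double *v, csr_t *out)
{
    assert(n_rows > 0 && nnz >= 0);
    out->n_rows = n_rows; out->n_cols = n_cols; out->nnz = nnz;
    out->row_ptr = calloc((size_t)n_rows + 1, sizeof(int));
    out->col_idx = malloc(((size_t)nnz + 1) * sizeof(int));     /* +1 avoids malloc(0) */
    out->val = malloc(((size_t)nnz + 1) * sizeof(double));
    int *next = malloc((size_t)n_rows * sizeof(int));
    if (!out->row_ptr || !out->col_idx || !out->val || !next) {
        free(next);
        csr_free(out);
        return -1;
    }
    for (int k = 0; k < nnz; k++)            /* 1. count nonzeros in each row */
        out->row_ptr[row[k] + 1]++;
    for (int i = 0; i < n_rows; i++)         /* 2. prefix sum: counts become row offsets */
        out->row_ptr[i + 1] += out->row_ptr[i];
    for (int i = 0; i < n_rows; i++)
        next[i] = out->row_ptr[i];
    for (int k = 0; k < nnz; k++) {          /* 3. scatter each triplet into its row */
        int dst = next[row[k]]++;
        out->col_idx[dst] = col[k];
        out->val[dst] = v[k];
    }
    free(next);
    return 0;
}
```

COO stores one (row, column, value) triple per nonzero; CSR replaces the per-entry row index with one offset per row, which is why it needs less index storage and allows row-by-row traversal.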

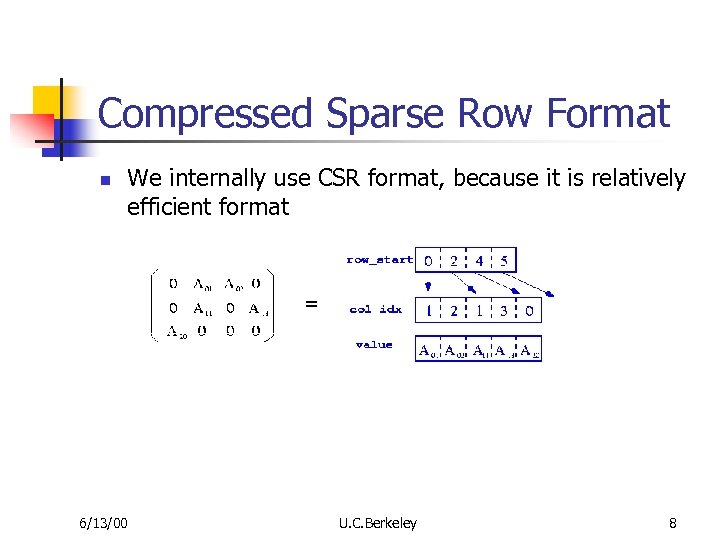

Compressed Sparse Row Format
- We use the CSR format internally because it is a relatively efficient format.
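The following minimal C sketch shows a naïve CSR multiply, y = y + Ax, roughly the kind of unoptimized baseline the motivation slides compare against. The function name `spmv_csr` is hypothetical; the line to notice is the indirect load `x[col_idx[k]]`, which is the "indirect data structure" cost mentioned in the motivation.

```c
#include <assert.h>

/* y = y + A*x for an n_rows-row matrix A stored in 0-based CSR. */
void spmv_csr(int n_rows, const int *row_ptr, const int *col_idx,
              const double *val, const double *x, double *y)
{
    assert(n_rows >= 0);
    for (int i = 0; i < n_rows; i++) {
        double sum = y[i];                      /* accumulate row i in a register */
        for (int k = row_ptr[i]; k < row_ptr[i + 1]; k++)
            sum += val[k] * x[col_idx[k]];      /* indirect access to x */
        y[i] = sum;
    }
}
```

Each multiply-add loads a value, a column index, and an unpredictable element of x, so the kernel is limited by memory traffic rather than by floating-point speed.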

Optimization Techniques
- Register Blocking
- Cache Blocking
- Multiple vectors

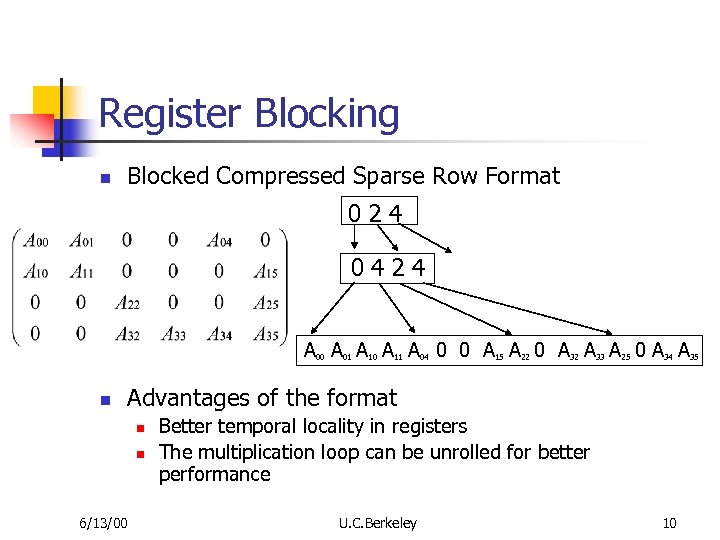

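Register Blocking
- Blocked Compressed Sparse Row format
  - Figure: an example matrix stored in 2 x 2 blocks, with block row pointers (0, 2, 4) and block column indices (0, 4, 2, 4); the nonzeros A00, A01, A10, A11, A04, A15, A22, A32, A33, A25, A34, A35 are stored together with explicit zeros that fill out each block.
- Advantages of the format:
  - Better temporal locality in registers
  - The multiplication loop can be unrolled for better performance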
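A minimal C sketch of a 2 x 2 register-blocked multiply. It assumes (hypothetically) that each block is stored row-major with explicit zeros, and that `b_col_idx` holds the first column of each block, as the block column indices 0, 4, 2, 4 in the slide's example suggest. Sparsity generates kernels like this for many block sizes; the hand-written `spmv_bcsr_2x2` is only an illustration of the idea, not Sparsity's generated code.

```c
#include <assert.h>

/* y += A*x for A in 2 x 2 Blocked CSR (assumed layout):
   - blocks b_row_ptr[I] .. b_row_ptr[I+1]-1 belong to block row I (rows 2I and 2I+1);
   - b_col_idx[b] is the first column of block b;
   - val[4*b .. 4*b+3] holds block b row-major, explicit zeros included. */
void spmv_bcsr_2x2(int n_block_rows, const int *b_row_ptr, const int *b_col_idx,
                   const double *val, const double *x, double *y)
{
    assert(n_block_rows >= 0);
    for (int I = 0; I < n_block_rows; I++) {
        double y0 = y[2 * I], y1 = y[2 * I + 1];    /* two partial sums kept in registers */
        for (int b = b_row_ptr[I]; b < b_row_ptr[I + 1]; b++) {
            const double *a = val + 4 * b;
            int j = b_col_idx[b];                   /* one index load per 4 stored values */
            double x0 = x[j], x1 = x[j + 1];        /* x0, x1 reused by both rows */
            y0 += a[0] * x0 + a[1] * x1;            /* fully unrolled 2 x 2 block */
            y1 += a[2] * x0 + a[3] * x1;
        }
        y[2 * I] = y0;
        y[2 * I + 1] = y1;
    }
}
```

For the example in the figure, the four blocks hold 16 values (the 12 nonzeros plus 4 explicit zeros) but need only 4 column indices instead of 12.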

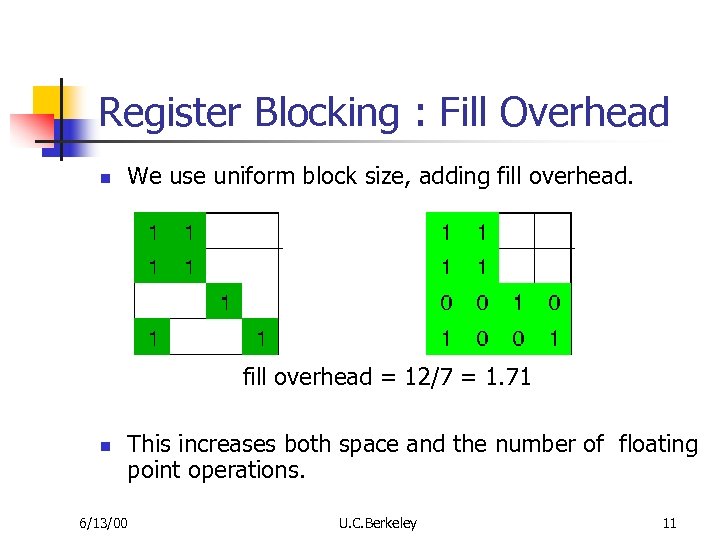

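Register Blocking: Fill Overhead
- We use a uniform block size, which adds fill (explicit zeros). Fill overhead is the number of stored entries divided by the number of true nonzeros; in this example, fill overhead = 12/7 = 1.71.
- Fill increases both the storage space and the number of floating-point operations.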
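Taking fill overhead as stored entries divided by true nonzeros, it can be computed exactly from a CSR matrix by counting the distinct r x c blocks (aligned to multiples of r and c) that contain at least one nonzero. The slides refer to an *estimated* fill overhead; this exact count, in a hypothetical function `fill_ratio`, only illustrates the quantity being estimated, not Sparsity's estimator.

```c
#include <assert.h>
#include <stdlib.h>

/* Exact fill overhead of r x c blocking for a 0-based CSR matrix:
   (blocks containing a nonzero) * r * c / nnz. Returns -1.0 on allocation failure. */
double fill_ratio(int n_rows, int n_cols, const int *row_ptr, const int *col_idx,
                  int r, int c)
{
    assert(r > 0 && c > 0);
    int n_bcols = (n_cols + c - 1) / c;
    int *last_seen = malloc(((size_t)n_bcols + 1) * sizeof(int));
    if (!last_seen) return -1.0;
    for (int J = 0; J < n_bcols; J++)
        last_seen[J] = -1;                  /* block row that last touched block column J */

    long n_blocks = 0;
    for (int I = 0; I * r < n_rows; I++) {  /* block row I covers rows I*r .. I*r+r-1 */
        int end = (I + 1) * r < n_rows ? (I + 1) * r : n_rows;
        for (int i = I * r; i < end; i++)
            for (int k = row_ptr[i]; k < row_ptr[i + 1]; k++) {
                int J = col_idx[k] / c;
                if (last_seen[J] != I) {    /* first nonzero seen in block (I, J) */
                    last_seen[J] = I;
                    n_blocks++;
                }
            }
    }
    free(last_seen);
    int nnz = row_ptr[n_rows];
    return nnz > 0 ? (double)(n_blocks * r * c) / nnz : 1.0;
}
```

Applied to the 12-nonzero matrix in the register blocking figure with r = c = 2, this counts 4 blocks and returns 16/12, about 1.33.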

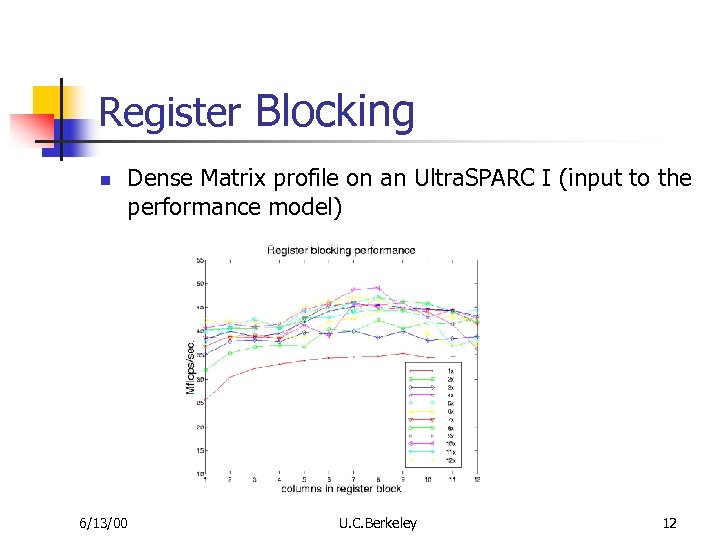

Register Blocking
- Dense matrix profile on an UltraSPARC I (input to the performance model)

Register Blocking: Selecting the block size
- The hard part of the problem is picking a block size that:
  - minimizes the fill overhead, and
  - maximizes the raw performance.
- Two approaches:
  - Exhaustive search
  - Using a model

Register Blocking: Performance model
- Two components of the performance model:
  - Multiplication performance of a dense matrix represented in sparse format
  - Estimated fill overhead
- Predicted performance for block size r x c = (dense r x c blocked performance) / (fill overhead)
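The model's selection step can be sketched in C as a search over candidate block sizes that maximizes predicted performance = dense r x c blocked performance / fill overhead. In this sketch the tables `dense_mflops` (from a machine profile) and `fill` (from a fill estimate) are assumed inputs, and `MAX_B` and `choose_block_size` are hypothetical names.

```c
#include <assert.h>

#define MAX_B 8  /* hypothetical upper bound on r and c */

/* Choose (r, c) maximizing dense_mflops[r][c] / fill[r][c].
   Index 0 is unused; valid block sizes are 1 .. max_b in each dimension. */
void choose_block_size(int max_b, double dense_mflops[][MAX_B + 1],
                       double fill[][MAX_B + 1], int *best_r, int *best_c)
{
    assert(max_b >= 1 && max_b <= MAX_B);
    double best = -1.0;
    *best_r = *best_c = 1;
    for (int r = 1; r <= max_b; r++)
        for (int c = 1; c <= max_b; c++) {
            double predicted = dense_mflops[r][c] / fill[r][c];
            if (predicted > best) {          /* keep the best predicted speed so far */
                best = predicted;
                *best_r = r;
                *best_c = c;
            }
        }
}
```

Because the dense profile is measured once per machine, only the fill estimate has to be computed for each new matrix, which is what makes the model much cheaper than an exhaustive search.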

Benchmark matrices
- Matrix 1: dense matrix (1000 x 1000)
- Matrices 2-17: Finite Element Method matrices
- Matrices 18-39: matrices from structural engineering and device simulation
- Matrices 40-44: Linear Programming matrices
- Matrix 45: document retrieval matrix used for Latent Semantic Indexing
- Matrix 46: random matrix (10000 x 10000, 0.15% density)

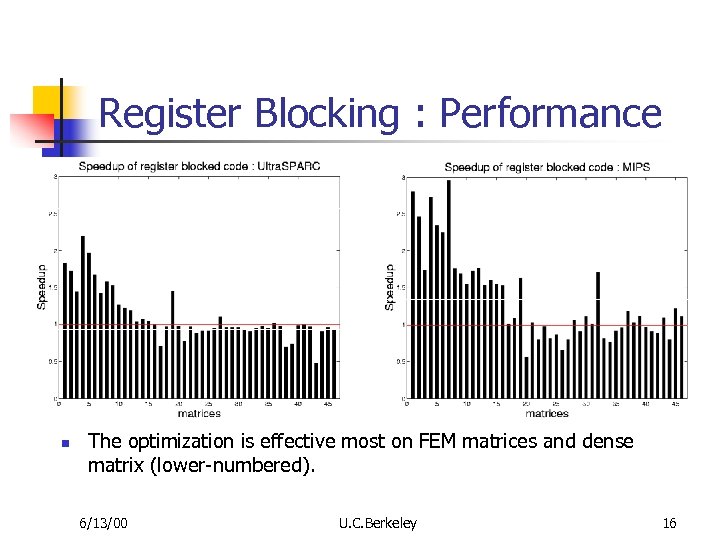

Register Blocking: Performance
- The optimization is most effective on the FEM matrices and the dense matrix (the lower-numbered matrices).

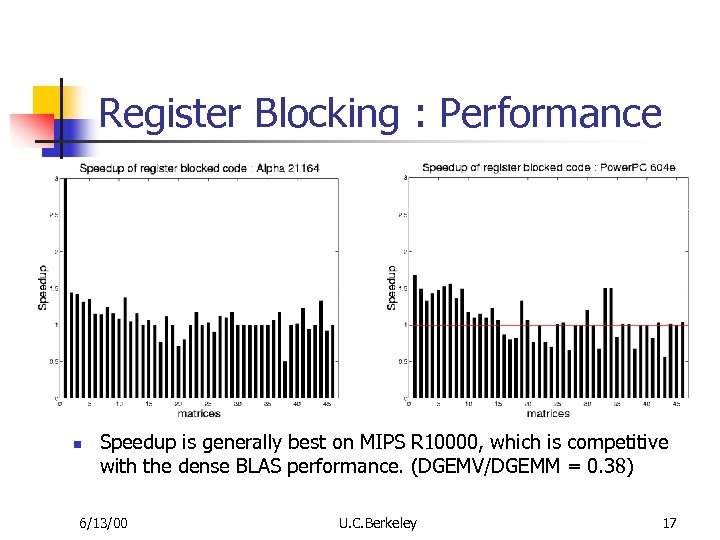

Register Blocking: Performance
- Speedup is generally best on the MIPS R10000, where the resulting performance is competitive with dense BLAS performance (DGEMV/DGEMM = 0.38).

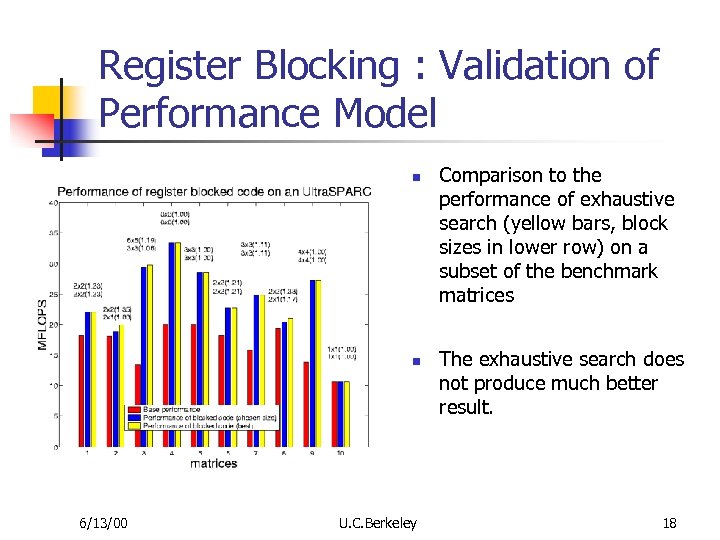

Register Blocking: Validation of Performance Model
- Comparison with the performance of exhaustive search (yellow bars; block sizes in the lower row) on a subset of the benchmark matrices
- Exhaustive search does not produce much better results.

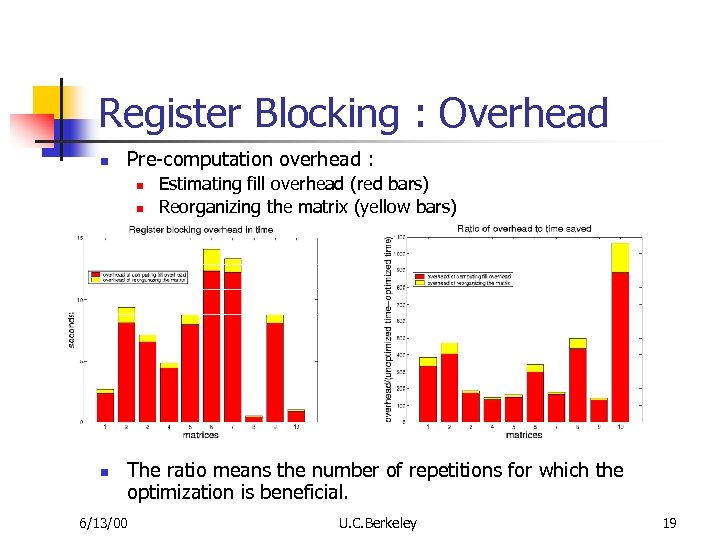

Register Blocking: Overhead
- Pre-computation overhead:
  - Estimating fill overhead (red bars)
  - Reorganizing the matrix (yellow bars)
- The ratio gives the number of repetitions needed before the optimization becomes beneficial.

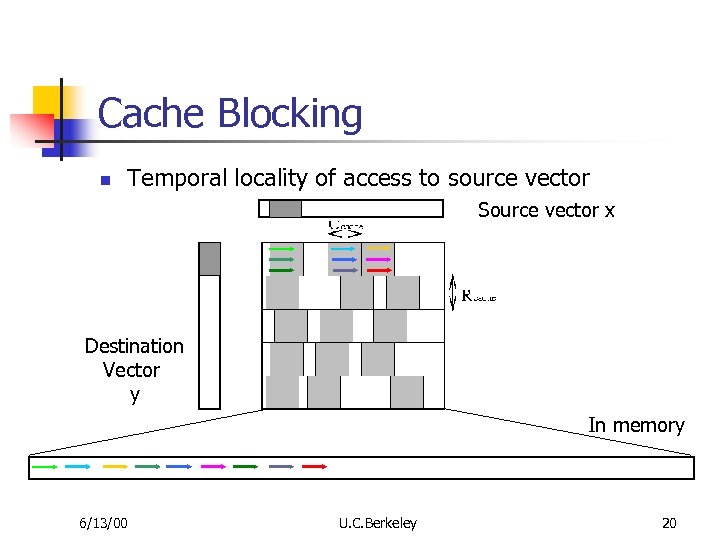

Cache Blocking
- Temporal locality of access to the source vector
- Figure labels: source vector x, destination vector y, in memory
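One simple way to obtain this temporal locality is to process the matrix in column panels, so that each pass over the rows touches only a window of x. The C sketch below assumes column indices are sorted within each row and keeps a per-row cursor; the panel width `bw` and the name `spmv_csr_colblocked` are hypothetical, and a tuned implementation might instead store each cache block as its own sparse submatrix to avoid the cursor bookkeeping.

```c
#include <assert.h>
#include <stdlib.h>

/* y += A*x with column cache blocking. Panel [c0, c0+bw) only reads x[c0 .. c0+bw-1],
   so with a suitable bw that window of x can stay in cache for the whole panel.
   Requires sorted column indices within each row. Returns -1 on allocation failure. */
int spmv_csr_colblocked(int n_rows, int n_cols, const int *row_ptr, const int *col_idx,
                        const double *val, const double *x, double *y, int bw)
{
    assert(bw > 0);
    int *cursor = malloc(((size_t)n_rows + 1) * sizeof(int));
    if (!cursor) return -1;
    for (int i = 0; i < n_rows; i++)
        cursor[i] = row_ptr[i];               /* where each row resumes in the next panel */
    for (int c0 = 0; c0 < n_cols; c0 += bw) {
        int c1 = c0 + bw;                     /* this panel covers columns [c0, c1) */
        for (int i = 0; i < n_rows; i++) {
            double sum = y[i];
            int k = cursor[i];
            for (; k < row_ptr[i + 1] && col_idx[k] < c1; k++)
                sum += val[k] * x[col_idx[k]];
            cursor[i] = k;
            y[i] = sum;
        }
    }
    free(cursor);
    return 0;
}
```

Each panel sweeps y once, so this trades extra passes over y for reuse of x; blocking the rows as well limits the part of y touched per block in the same way.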

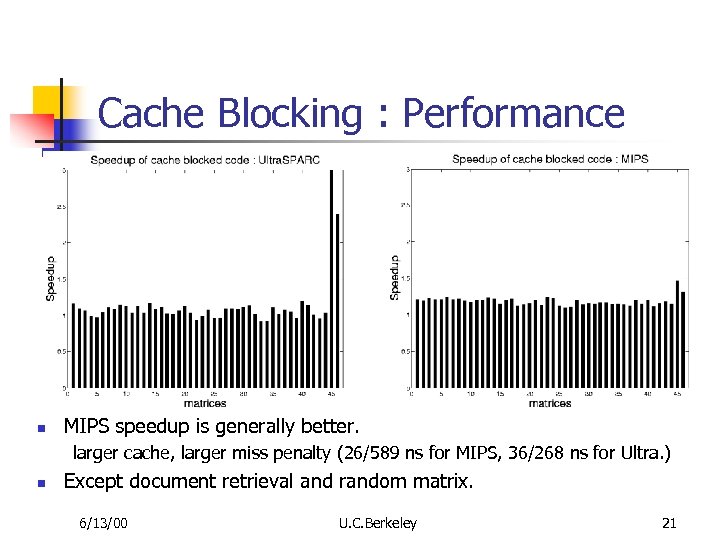

Cache Blocking: Performance
- Speedup on MIPS is generally better, since MIPS has a larger cache and a larger miss penalty (26/589 ns for MIPS, 36/268 ns for UltraSPARC).
- The exceptions are the document retrieval and random matrices.

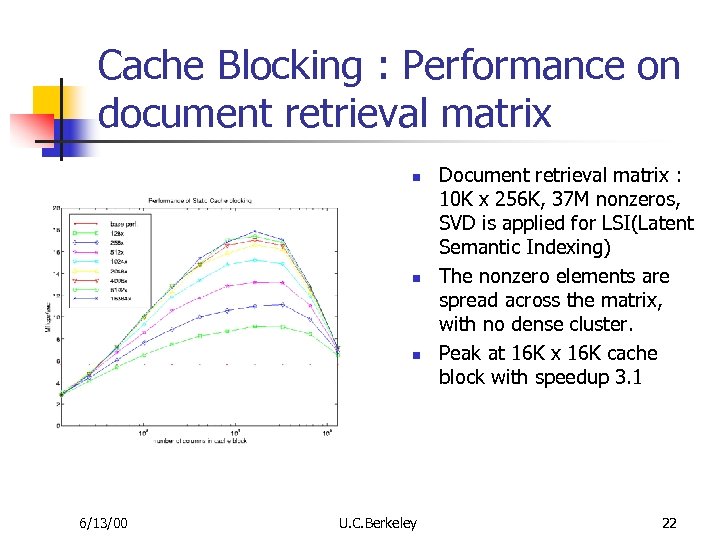

Cache Blocking: Performance on document retrieval matrix
- Document retrieval matrix: 10 K x 256 K, 37 M nonzeros; SVD is applied for LSI (Latent Semantic Indexing).
- The nonzero elements are spread across the matrix, with no dense clusters.
- Peak at a 16 K x 16 K cache block, with a speedup of 3.1

Cache Blocking: When and how to use cache blocking
- In our experiments, cache blocking is most effective for matrices that are large and “random”.
  - We developed a measure of the “randomness” of a matrix.
  - We perform a coarse-grained search to decide the cache block size.

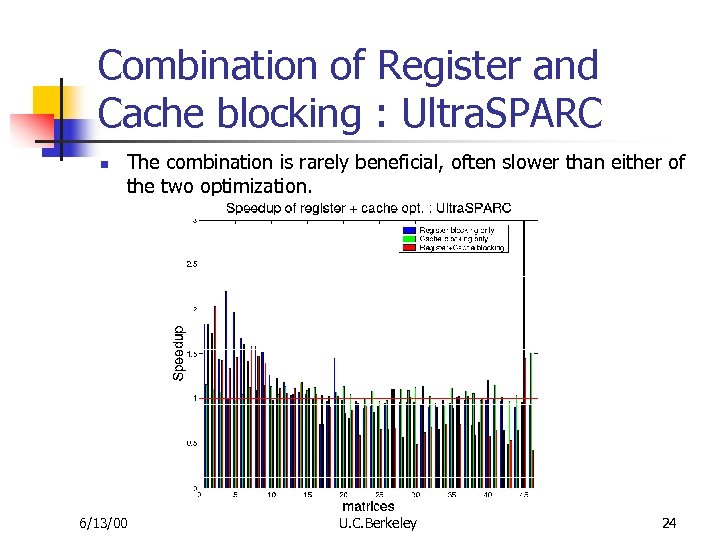

Combination of Register and Cache blocking: UltraSPARC
- The combination is rarely beneficial; it is often slower than either of the two optimizations alone.

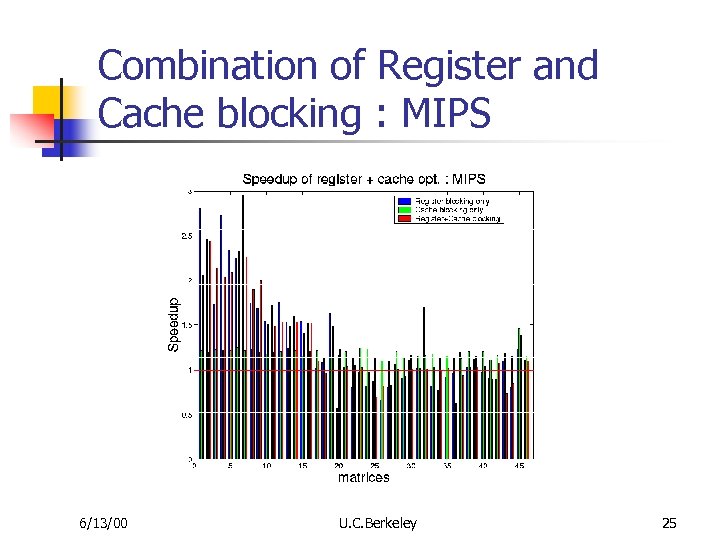

Combination of Register and Cache blocking: MIPS

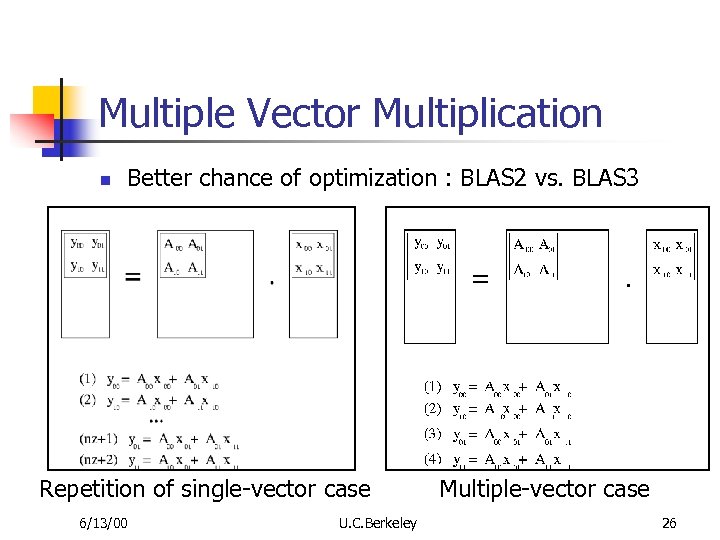

Multiple Vector Multiplication
- Better chance of optimization: BLAS 2 vs. BLAS 3
- Figure panels: repetition of the single-vector case; multiple-vector case
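The reuse argument can be seen in a minimal C sketch that multiplies a CSR matrix by k vectors at once. It assumes X and Y are stored row-major, so the k values belonging to one row are contiguous; `spmm_csr` and `MAX_VECS` are hypothetical names chosen for illustration.

```c
#include <assert.h>

#define MAX_VECS 16  /* hypothetical cap so the k partial sums fit in a local array */

/* Y += A * X for k vectors: X is n_cols x k and Y is n_rows x k, both row-major.
   Each matrix value and column index is loaded once and reused for all k vectors. */
void spmm_csr(int n_rows, const int *row_ptr, const int *col_idx, const double *val,
              int k, const double *X, double *Y)
{
    assert(k >= 1 && k <= MAX_VECS);
    for (int i = 0; i < n_rows; i++) {
        double acc[MAX_VECS];
        for (int v = 0; v < k; v++)
            acc[v] = Y[i * k + v];
        for (int p = row_ptr[i]; p < row_ptr[i + 1]; p++) {
            double a = val[p];                            /* loaded once ... */
            const double *xj = X + (long)col_idx[p] * k;  /* row col_idx[p] of X */
            for (int v = 0; v < k; v++)
                acc[v] += a * xj[v];                      /* ... used k times */
        }
        for (int v = 0; v < k; v++)
            Y[i * k + v] = acc[v];
    }
}
```

Repeating the single-vector kernel k times would stream the whole matrix k times; here the matrix is streamed once, which raises the ratio of floating-point operations to memory operations toward the BLAS 3 case.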

Multiple Vector Multiplication: Performance
- Register blocking performance
- Cache blocking performance

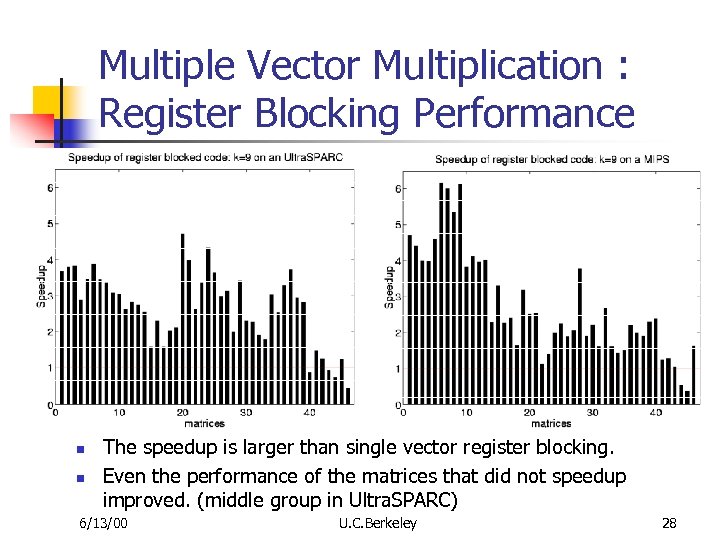

Multiple Vector Multiplication: Register Blocking Performance
- The speedup is larger than with single-vector register blocking.
- Even the matrices that did not speed up with single-vector register blocking improved (middle group on UltraSPARC).

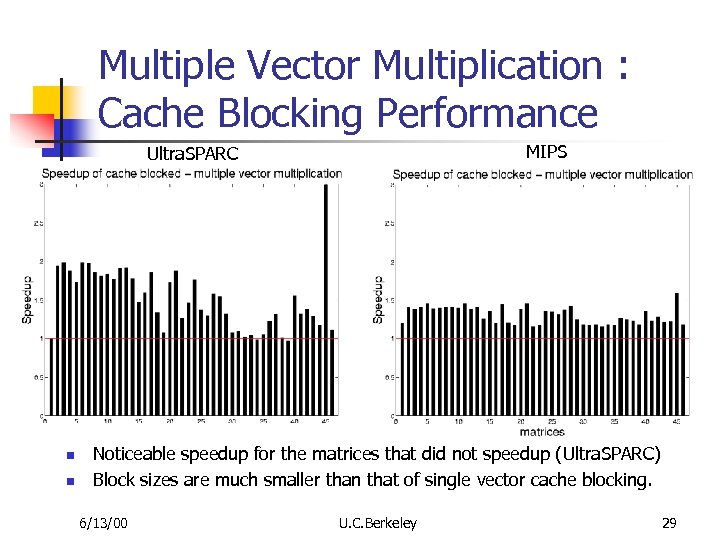

Multiple Vector Multiplication: Cache Blocking Performance (MIPS, UltraSPARC)
- Noticeable speedup for the matrices that did not speed up with single-vector cache blocking (UltraSPARC)
- Block sizes are much smaller than those for single-vector cache blocking.

Sparsity System: Purpose
- Guide the choice of optimization
- Automatic selection of optimization parameters such as block size and number of vectors
- http://comix.cs.berkeley.edu/~ejim/sparsity

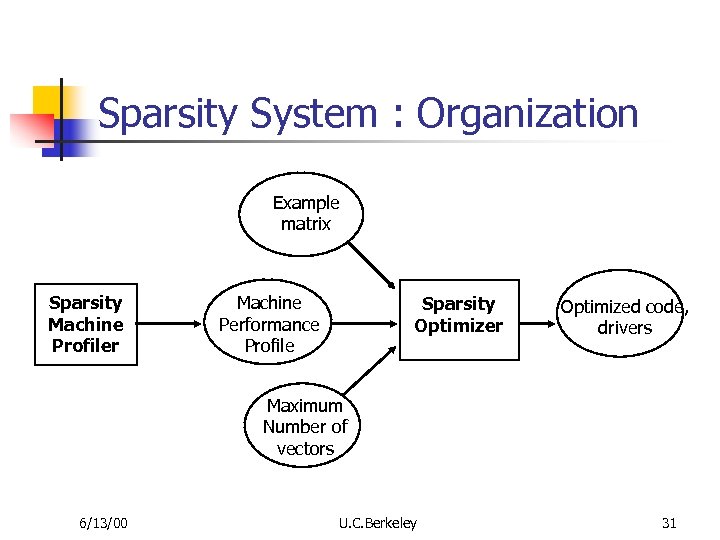

Sparsity System: Organization
- Figure components: the Sparsity Machine Profiler produces a Machine Performance Profile; the Sparsity Optimizer takes this profile, an example matrix, and the maximum number of vectors, and produces optimized code and drivers.

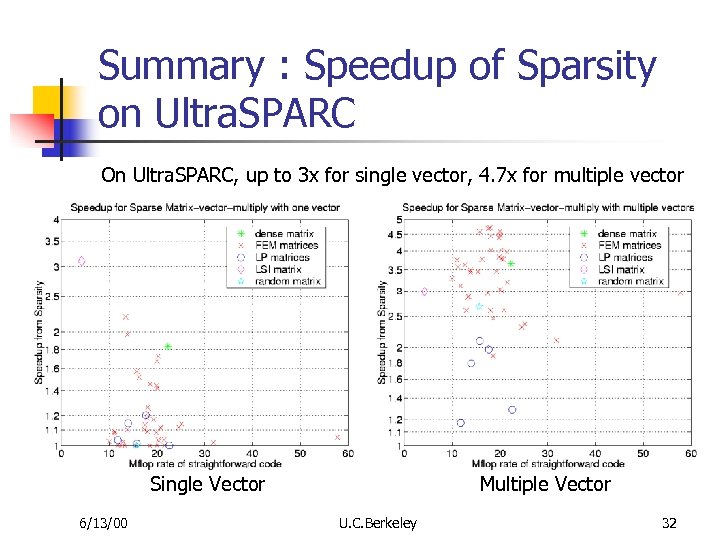

Summary: Speedup of Sparsity on UltraSPARC
- On UltraSPARC, up to 3x for a single vector and 4.7x for multiple vectors
- Figure panels: Single Vector; Multiple Vector

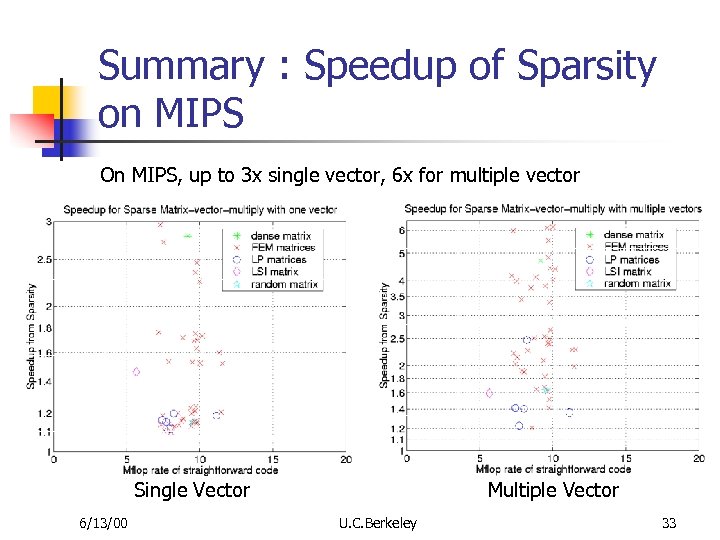

Summary: Speedup of Sparsity on MIPS
- On MIPS, up to 3x for a single vector and 6x for multiple vectors
- Figure panels: Single Vector; Multiple Vector

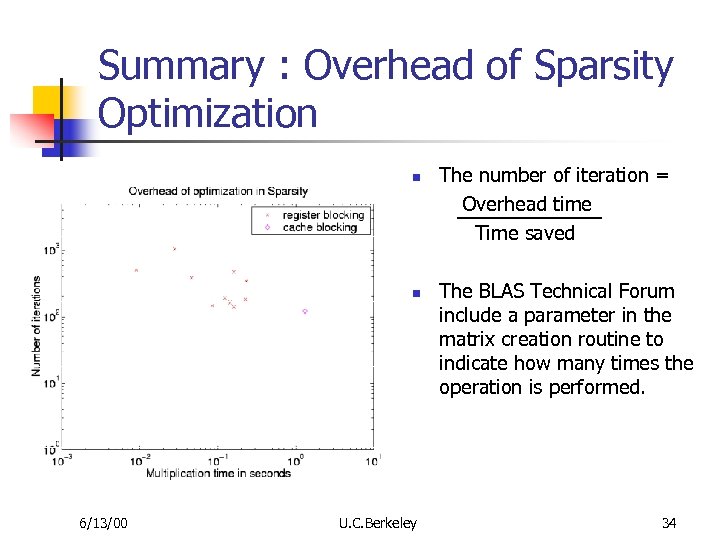

Summary: Overhead of Sparsity Optimization
- Number of iterations needed to amortize the optimization = (overhead time) / (time saved per iteration)
- The BLAS Technical Forum includes a parameter in the matrix creation routine to indicate how many times the operation will be performed.
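The break-even formula is simple arithmetic. This C helper, `break_even_iterations` (a hypothetical name, assuming all times are given in the same unit), returns how many multiplications are needed before a one-time tuning cost is recovered.

```c
#include <assert.h>

/* overhead_time / (t_untuned - t_tuned), where t_untuned and t_tuned are the times of
   one multiplication before and after optimization. Returns -1.0 if the optimized
   kernel is not faster, i.e. the overhead is never recovered. */
double break_even_iterations(double overhead_time, double t_untuned, double t_tuned)
{
    assert(overhead_time >= 0.0);
    double saved_per_call = t_untuned - t_tuned;
    return saved_per_call > 0.0 ? overhead_time / saved_per_call : -1.0;
}
```

For example, a 10 s optimization overhead with a saving of 0.5 s per multiplication breaks even after 20 multiplications; a repetition-count hint such as the one proposed by the BLAS Technical Forum lets a library decide whether that threshold will be reached.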

Related Work (1)
- Dense matrix optimization
  - Loop transformation by compilers: M. Wolf, etc.
  - Hand-optimized libraries: BLAS, LAPACK
- Automatic generation of libraries
  - PHiPAC, ATLAS and FFTW
- Sparse matrix standardization and libraries
  - BLAS Technical Forum
  - NIST Sparse BLAS, MV++, SparseLib++, TNT
- Hand optimization of sparse matrix-vector multiplication
  - S. Toledo, Oliker et al.

Related Work (2)
- Sparse matrix packages
  - SPARSKIT, PSPARSELIB, Aztec, BlockSolve95, Spark98
- Compiling sparse matrix code
  - Sparse compiler (Bik), Bernoulli compiler (Kotlyar)
- On-demand code generation
  - NIST SparseBLAS, Sparse compiler

Contribution
- Thorough investigation of memory hierarchy optimizations for sparse matrix-vector multiplication
- Performance study on benchmark matrices
- Development of a performance model to choose optimization parameters
- Sparsity system for automatic tuning and code generation of sparse matrix-vector multiplication

Conclusion
- Memory hierarchy optimization for sparse matrix-vector multiplication
  - Register blocking: matrices with dense local structure benefit.
  - Cache blocking: large matrices with random structure benefit.
  - Multiple vector multiplication improves performance further because matrix elements are reused.
- The choice of optimization depends on both matrix structure and machine architecture.
- The automated system helps with this complicated and time-consuming process.
