a6ee010d8d77ca02561831492b529404.ppt

- Number of slides: 29

Optimizing for Time and Space in Distributed Scientific Workflows

Ewa Deelman, University of Southern California Information Sciences Institute

Ewa Deelman, deelman@isi.edu, www.isi.edu/~deelman, pegasus.isi.edu

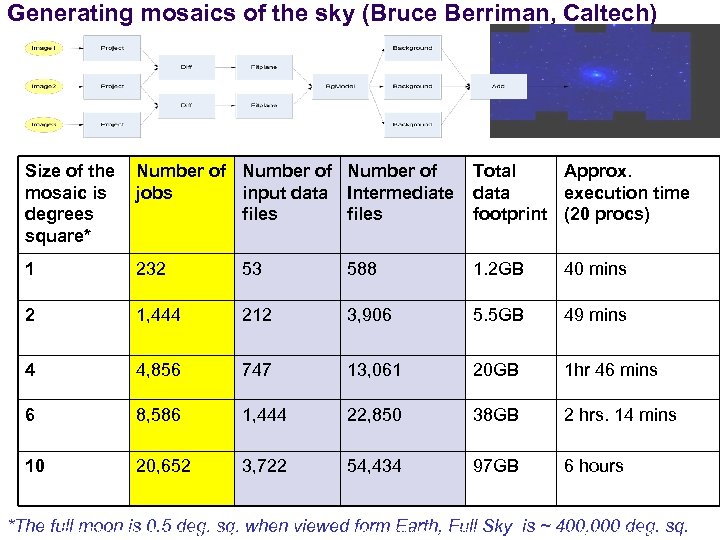

Generating mosaics of the sky (Bruce Berriman, Caltech)

| Size of the mosaic (degrees square*) | Number of jobs | Number of input data files | Number of intermediate files | Total data footprint | Approx. execution time (20 procs) |
|---|---|---|---|---|---|
| 1 | 232 | 53 | 588 | 1.2 GB | 40 mins |
| 2 | 1,444 | 212 | 3,906 | 5.5 GB | 49 mins |
| 4 | 4,856 | 747 | 13,061 | 20 GB | 1 hr 46 mins |
| 6 | 8,586 | 1,444 | 22,850 | 38 GB | 2 hrs 14 mins |
| 10 | 20,652 | 3,722 | 54,434 | 97 GB | 6 hours |

*The full moon is 0.5 deg. sq. when viewed from Earth; the full sky is ~400,000 deg. sq.

Issue
- How to manage such applications on a variety of distributed resources?

Approach
- Structure the application as a workflow
  - Allow the scientist to describe the application at a high level
- Tie the execution resources together
  - Using Condor and/or Globus
- Provide an automated mapping and execution tool to map the high-level description onto the available resources

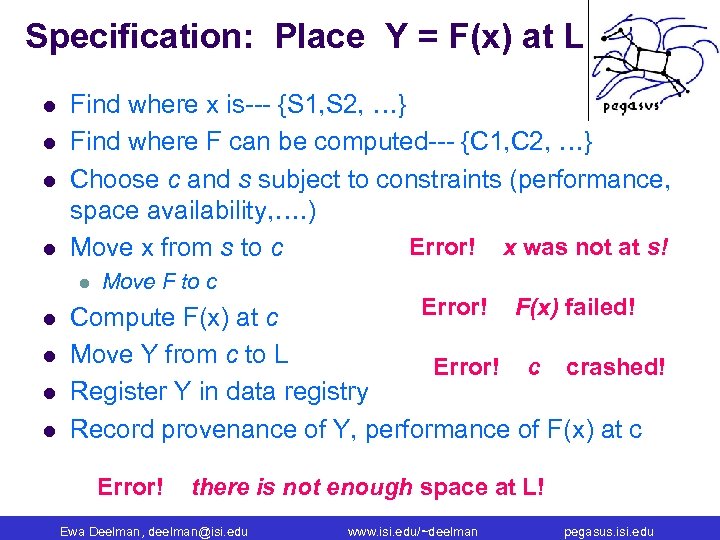

Specification: Place Y = F(x) at L
- Find where x is: {S1, S2, …}
- Find where F can be computed: {C1, C2, …}
- Choose c and s subject to constraints (performance, space availability, …)
- Move x from s to c
- Move F to c
- Compute F(x) at c
- Move Y from c to L
- Register Y in data registry
- Record provenance of Y, performance of F(x) at c

What can go wrong along the way: "Error! x was not at s!", "Error! F(x) failed!", "Error! c crashed!", "Error! there is not enough space at L!"
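The placement steps can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Pegasus code: the `Grid` class, its dictionaries standing in for the replica catalog, the executable catalog and per-site free space, and the `place` function are all assumptions made for the example. Each step either succeeds or raises, which is where the slide's "Error!" cases would surface.

```python
from dataclasses import dataclass, field


class PlacementError(Exception):
    """Raised when one step of 'place Y = F(x) at L' cannot be carried out."""


@dataclass
class Grid:
    # Hypothetical in-memory stand-ins for real catalogs, for illustration only.
    replicas: dict     # file name -> set of sites holding a copy
    executables: dict  # function name -> set of sites where it can run
    free_space: dict   # site -> free bytes
    registry: dict = field(default_factory=dict)    # file name -> registered location
    provenance: list = field(default_factory=list)  # (output, function, input, site)


def place(grid, f_name, f, x_name, x_value, y_name, y_size, target):
    sources = grid.replicas.get(x_name, set())           # {S1, S2, ...}
    compute_sites = grid.executables.get(f_name, set())  # {C1, C2, ...}
    if not sources:
        raise PlacementError(f"{x_name} is not at any known site")
    if not compute_sites:
        raise PlacementError(f"{f_name} cannot be computed anywhere")
    # Choose (c, s): prefer a compute site that already holds x (no transfer),
    # then break ties by name so the choice is deterministic.
    _, c, s = min((0 if src == site else 1, site, src)
                  for site in compute_sites for src in sources)
    # Check space at L up front so a full target fails before any work is done.
    if grid.free_space.get(target, 0) < y_size:
        raise PlacementError(f"not enough space at {target}")
    grid.replicas[x_name].add(c)       # move x from s to c (new replica at c)
    y = f(x_value)                     # compute F(x) at c; exceptions propagate
    grid.free_space[target] -= y_size  # move Y from c to L
    grid.registry[y_name] = target     # register Y in the data registry
    grid.provenance.append((y_name, f_name, x_name, c))  # record provenance
    return y, c, s
```

Checking space at L before computing is a design choice of this sketch: it turns a late "not enough space at L" failure into an early one, though, as later slides note, space seen at scheduling time may be gone by the time the data arrive.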

Pegasus Workflow Management System
- Leverages abstraction for workflow description to obtain ease of use, scalability, and portability
- Provides a compiler to map from high-level descriptions to executable workflows
  - Correct mapping
  - Performance-enhanced mapping
- Provides a runtime engine to carry out the instructions
  - In a scalable manner
  - In a reliable manner

In collaboration with Miron Livny, UW Madison; funded under NSF-OCI SDCI

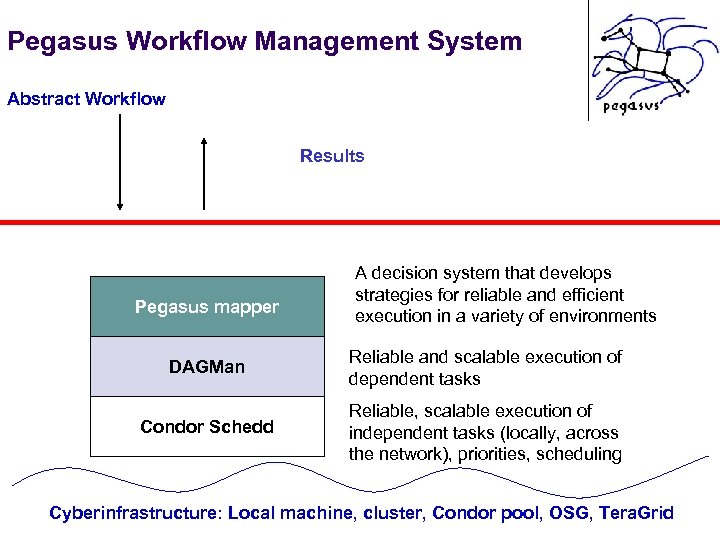

Pegasus Workflow Management System (Abstract Workflow in, Results out)
- Pegasus mapper: a decision system that develops strategies for reliable and efficient execution in a variety of environments
- DAGMan: reliable and scalable execution of dependent tasks
- Condor Schedd: reliable, scalable execution of independent tasks (locally, across the network), priorities, scheduling
- Cyberinfrastructure: local machine, cluster, Condor pool, OSG, TeraGrid

Basic Workflow Mapping
- Select where to run the computations
  - Apply a scheduling algorithm: HEFT, min-min, round-robin, random
  - The quality of the scheduling depends on the quality of information
- Transform task nodes into nodes with executable descriptions
  - Execution location
  - Environment variables initialized
  - Appropriate command-line parameters set
- Select which data to access
  - Add stage-in nodes to move data to computations
  - Add stage-out nodes to transfer data out of remote sites to storage
  - Add data transfer nodes between computation nodes that execute on different resources
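Of the scheduling algorithms listed, min-min is short enough to sketch. The version below is a generic textbook form applied to a set of independent (for example, currently ready) tasks; it is not taken from Pegasus, and the `runtime` table of per-site estimates is an assumed input. Its output is only as good as those estimates, which is the slide's point about information quality.

```python
def min_min(tasks, sites, runtime):
    """Map independent tasks to sites with the min-min heuristic.

    runtime[(task, site)] is an estimated execution time (an assumed input).
    Returns ({task: site}, estimated makespan).
    """
    ready = {s: 0.0 for s in sites}  # time at which each site becomes free
    unmapped = set(tasks)
    mapping = {}
    while unmapped:
        candidates = []
        for t in unmapped:
            # Earliest completion time of t over all sites, and where it is achieved.
            finish, site = min((ready[s] + runtime[t, s], s) for s in sites)
            candidates.append((finish, t, site))
        # Min-min: commit the task whose earliest completion time is smallest.
        finish, task, site = min(candidates)
        mapping[task] = site
        ready[site] = finish
        unmapped.remove(task)
    return mapping, max(ready.values())
```

For example, with runtimes a: (s1 = 2, s2 = 4), b: (s1 = 3, s2 = 1) and c: (s1 = 5, s2 = 5), the sketch places b on s2, then a on s1, then c on s2, for an estimated makespan of 6.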

Basic Workflow Mapping
- Add nodes that register the newly created data products
- Add data cleanup nodes to remove data from remote sites when no longer needed
  - Reduces the workflow data footprint
- Provide provenance capture steps
  - Information about the source of data, executables invoked, environment variables, parameters, machines used, performance
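The data-related nodes from these two mapping slides (stage-in, inter-site transfer, stage-out and registration) can be derived mechanically once every task has a site. The sketch below is a hypothetical simplification: it assumes all raw inputs live at a single site named `storage`, treats any file that no task consumes as a final product to stage out to `archive` and register there, and represents each added job as a tuple.

```python
def add_data_nodes(tasks, site_of, storage="storage", output_site="archive"):
    """tasks: {task: (inputs, outputs)}; site_of: {task: site}.

    Returns sorted (kind, file, from_site, to_site) tuples for the added jobs.
    'storage' and 'archive' are hypothetical site names for this sketch.
    """
    producer = {f: t for t, (_, outs) in tasks.items() for f in outs}
    consumed = {f for ins, _ in tasks.values() for f in ins}
    jobs = set()  # a set, so each (file, destination) transfer is added once
    for t, (ins, outs) in tasks.items():
        here = site_of[t]
        for f in ins:
            if f not in producer:
                # Raw workflow input: stage it in from storage.
                jobs.add(("stage_in", f, storage, here))
            elif site_of[producer[f]] != here:
                # Produced on another site: add an inter-site transfer.
                jobs.add(("inter_site", f, site_of[producer[f]], here))
        for f in outs:
            if f not in consumed:
                # Final product: stage it out, then register the archived copy.
                jobs.add(("stage_out", f, here, output_site))
                jobs.add(("register", f, output_site, output_site))
    return sorted(jobs)
```

Placing both tasks of a two-step chain on the same site removes the inter-site transfer, which is one reason the site-selection step and the data-placement step interact.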

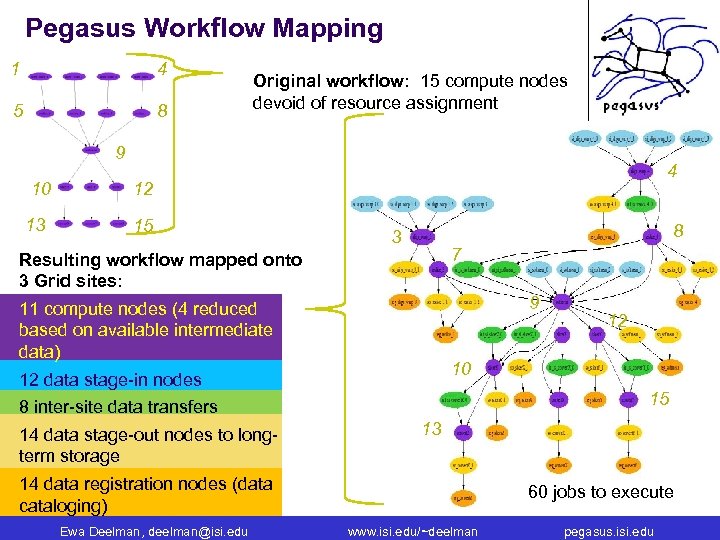

Pegasus Workflow Mapping

Original workflow: 15 compute nodes devoid of resource assignment

Resulting workflow mapped onto 3 Grid sites:
- 11 compute nodes (4 reduced based on available intermediate data)
- 12 data stage-in nodes
- 8 inter-site data transfers
- 14 data stage-out nodes to long-term storage
- 14 data registration nodes (data cataloging)
- 60 jobs to execute
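The slide reports that 4 compute nodes were removed because intermediate data were already available. One simple way to perform such a reduction, assumed here rather than taken from Pegasus, is to walk backward from the requested outputs: a task must run only if one of its outputs is needed and not yet available, and its missing inputs then become needed in turn. `available` stands for files found in some replica catalog.

```python
def reduce_workflow(tasks, available, requested):
    """tasks: {task: (inputs, outputs)}; available: files that already exist;
    requested: final outputs the user wants. Returns the tasks that must run."""
    producer = {f: t for t, (_, outs) in tasks.items() for f in outs}
    to_run = set()
    pending = [f for f in requested if f not in available]
    while pending:
        f = pending.pop()
        if f not in producer:
            raise ValueError(f"{f} is neither available nor produced by any task")
        t = producer[f]
        if t in to_run:
            continue  # already scheduled; its inputs were handled then
        to_run.add(t)
        inputs, _ = tasks[t]
        # Only inputs that do not already exist force their producers to run.
        pending.extend(i for i in inputs if i not in available)
    return to_run
```

In a chain A → B → C whose output feeds E (together with an independent D), finding B's output already available prunes both A and B. Any task whose work is skipped this way is also a natural restart point, which is the workflow-level checkpointing benefit mentioned on the next slide.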

Time Optimizations during Mapping
- Node clustering for fine-grained computations
  - Can obtain significant performance benefits for some applications (in Montage ~80%, SCEC ~50%)
- Data reuse in case intermediate data products are available
  - Performance and reliability advantages: workflow-level checkpointing
- Workflow partitioning to adapt to changes in the environment
  - Map and execute small portions of the workflow at a time
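Node clustering can be illustrated with a horizontal (level-based) variant: tasks at the same depth of the workflow do not depend on each other, so they can be grouped and submitted as one job, amortizing per-job scheduling overhead. The sketch below is an assumption-laden illustration; `cluster_size` is a hypothetical tuning parameter, and it does not reproduce the Montage or SCEC gains quoted above.

```python
from collections import defaultdict


def levels(parents):
    """parents: {task: set of parent tasks}. Level = longest path from a root."""
    memo = {}

    def level(t):
        if t not in memo:
            memo[t] = 0 if not parents[t] else 1 + max(level(p) for p in parents[t])
        return memo[t]

    return {t: level(t) for t in parents}


def cluster_by_level(parents, cluster_size):
    """Group same-level tasks into clusters of at most cluster_size tasks."""
    lv = levels(parents)
    by_level = defaultdict(list)
    for t in sorted(parents):  # sorted for a deterministic grouping
        by_level[lv[t]].append(t)
    clusters = []
    for depth in sorted(by_level):
        same_level = by_level[depth]
        for i in range(0, len(same_level), cluster_size):
            clusters.append(same_level[i:i + cluster_size])
    return clusters
```

Larger clusters mean fewer jobs but less parallelism and a larger unit of failure, so the cluster size trades scheduling overhead against concurrency.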

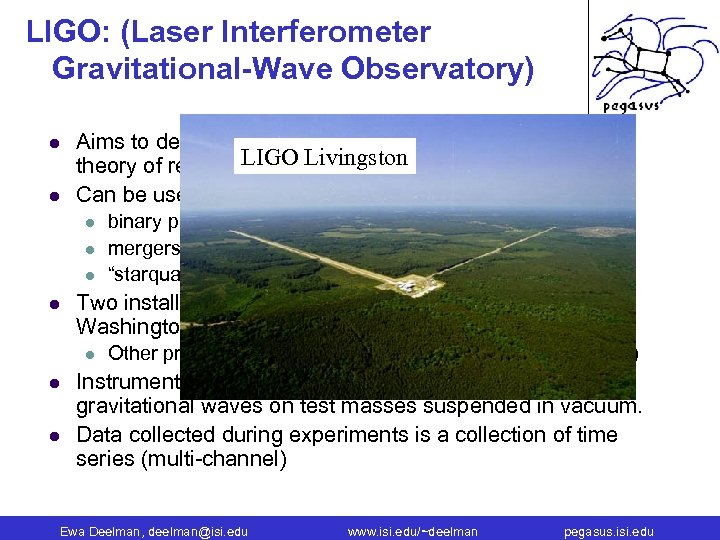

LIGO (Laser Interferometer Gravitational-Wave Observatory)
- Aims to detect gravitational waves predicted by Einstein's theory of relativity
- Can be used to detect
  - binary pulsars
  - mergers of black holes
  - "starquakes" in neutron stars
- Two installations: in Louisiana (Livingston) and Washington State
  - Other projects: Virgo (Italy), GEO (Germany), Tama (Japan)
- Instruments are designed to measure the effect of gravitational waves on test masses suspended in vacuum
- Data collected during experiments is a collection of time series (multi-channel)


Example of LIGO's computations
- Binary inspiral analysis
- Size of analysis for meaningful results
  - at least 221 GB of gravitational-wave data
  - approximately 70,000 computational tasks
- Desired analysis: data from November 2005 to November 2006
  - 10 TB of input data
  - approximately 185,000 computations
  - 1 TB of output data

LIGO's computational resources
- LIGO Data Grid
  - Condor clusters managed by the collaboration
  - ~6,000 CPUs
- Open Science Grid
  - A US cyberinfrastructure shared by many applications
  - ~20 Virtual Organizations
  - ~258 GB of shared scratch disk space on OSG sites

Problem
- How to "fit" the computations onto the OSG
  - Take into account intermediate data products
  - Minimize the data footprint of the workflow
  - Schedule the workflow tasks in a disk-space-aware fashion

Workflow Footprint
- To improve the workflow footprint, we need to determine when data are no longer needed:
  - because the data were consumed by the next component and no other component needs them
  - because the data were staged out to permanent storage
  - because the data are no longer needed on a resource and have been staged out to the resource that needs them
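The effect of deleting data once they are no longer needed can be quantified as the peak disk usage on one resource. The sketch below assumes tasks run one at a time in a given order, that a file occupies space from its first use until its last use, and that final outputs are staged out (and so can be removed) right after they are produced; `peak_footprint` and its arguments are hypothetical names for this illustration.

```python
def peak_footprint(order, inputs, outputs, size, cleanup=True):
    """Peak disk usage at one resource when tasks run sequentially in `order`.

    inputs/outputs: {task: list of files}; size: {file: bytes}.
    A file appears on disk at its first use. With cleanup=True it is deleted
    right after its last use; with cleanup=False it is never deleted.
    """
    last_use = {}
    for i, t in enumerate(order):
        for f in inputs[t] + outputs[t]:
            last_use[f] = i
    on_disk, current, peak = set(), 0, 0
    for i, t in enumerate(order):
        for f in inputs[t] + outputs[t]:
            if f not in on_disk:
                on_disk.add(f)
                current += size[f]
        peak = max(peak, current)
        if cleanup:
            for f in list(on_disk):
                if last_use[f] == i:  # no later task touches f
                    on_disk.remove(f)
                    current -= size[f]
    return peak
```

For a chain raw (10) → a (5) → b (5) → c (1), the footprint is 21 units without cleanup but only 15 with it, because raw and a are removed as soon as their last consumer finishes.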

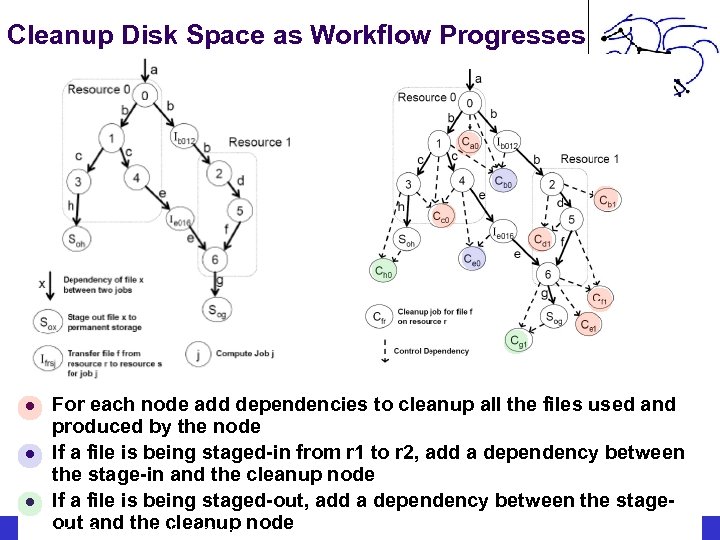

Cleanup Disk Space as Workflow Progresses
- For each node, add dependencies to clean up all the files used and produced by the node
- If a file is being staged in from r1 to r2, add a dependency between the stage-in node and the cleanup node
- If a file is being staged out, add a dependency between the stage-out node and the cleanup node
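The three rules above reduce to one: a cleanup job for a file at a site must wait for every job that reads or writes that file there, including stage-in and stage-out jobs. The sketch below is a naive version of that idea (one cleanup job per site and file, no attempt to minimize the number of added edges); the job representation, the `cleanup_<file>@<site>` naming, and the `permanent` set of never-cleaned sites are assumptions for the example.

```python
from collections import defaultdict


def add_cleanup_jobs(jobs, permanent=frozenset()):
    """jobs: {job: set of (site, file) pairs the job reads or writes}.

    A stage-in of f from r1 to r2 touches both (r1, f) and (r2, f); a stage-out
    touches the remote copy and the copy in permanent storage. Returns
    {cleanup_job: set of parent jobs}, one cleanup job per (site, file),
    skipping sites listed in `permanent`.
    """
    users = defaultdict(set)
    for job, touched in jobs.items():
        for site, f in touched:
            if site not in permanent:
                users[site, f].add(job)
    return {f"cleanup_{f}@{site}": parents for (site, f), parents in users.items()}
```

Because every job touching the file becomes a parent, the cleanup cannot run before the last consumer or the stage-out has finished, which is what makes deleting data mid-workflow safe.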

Experiments on the Grid with LIGO and Montage

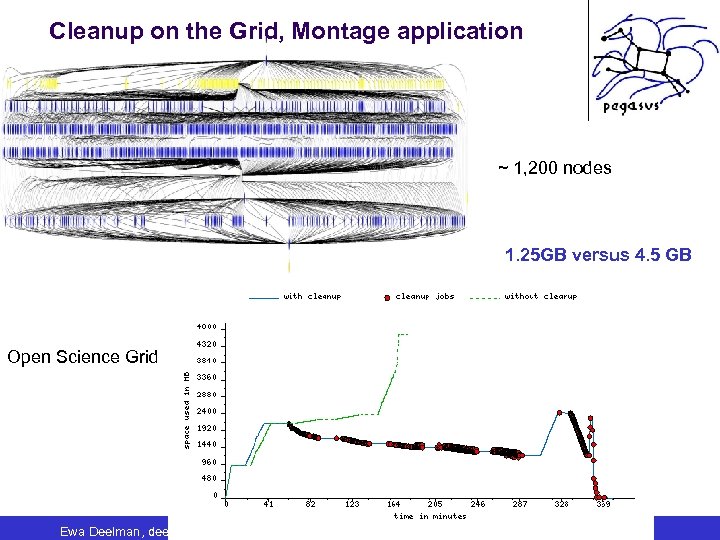

Cleanup on the Grid: Montage application, ~1,200 nodes, on the Open Science Grid

1.25 GB versus 4.5 GB

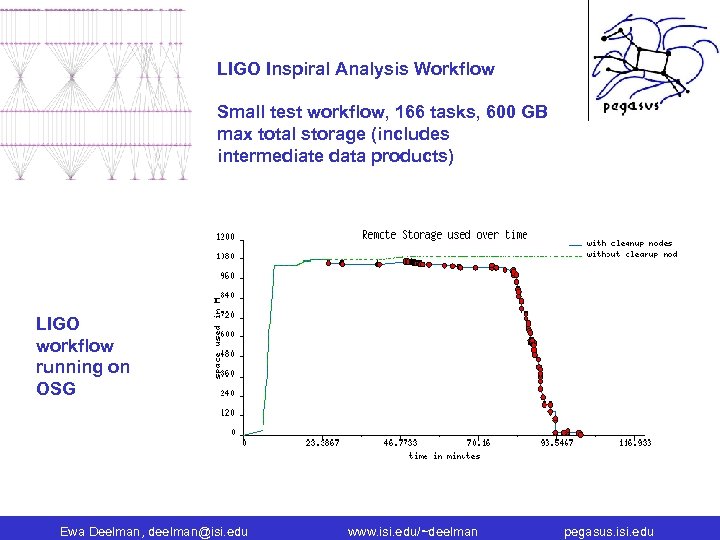

LIGO Inspiral Analysis Workflow: small test workflow, 166 tasks, 600 GB max total storage (includes intermediate data products)

LIGO workflow running on OSG

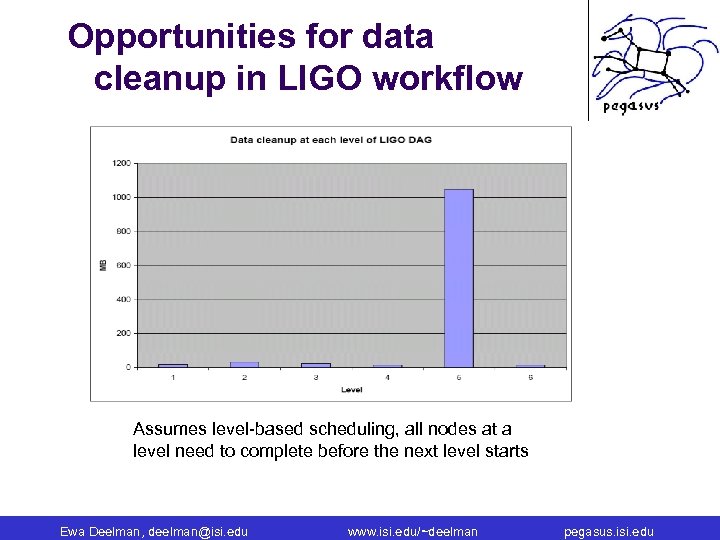

Opportunities for data cleanup in the LIGO workflow. Assumes level-based scheduling: all nodes at a level need to complete before the next level starts.
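Under the level-based assumption stated above, the space needed while each level runs can be estimated directly: a file occupies space from the level where it is first used until the level containing its last use completes. The following is a hypothetical sketch of that estimate, not the analysis behind the figure; `footprint_by_level` and its input layout are assumptions.

```python
def footprint_by_level(levels, size):
    """levels: list of levels, each a list of (inputs, outputs) pairs, one per task.
    size: {file: bytes}. Returns the disk usage while each level runs, assuming a
    file appears at its first use and is cleaned up once the level containing
    its last use has completed."""
    first, last = {}, {}
    for i, level in enumerate(levels):
        for ins, outs in level:
            for f in ins + outs:
                first.setdefault(f, i)
                last[f] = i
    return [sum(size[f] for f in first if first[f] <= i <= last[f])
            for i in range(len(levels))]
```

The maximum of the returned list is the space the workflow needs under this schedule; levels where many files are alive at once are where cleanup, or restructuring the workflow, pays off most.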

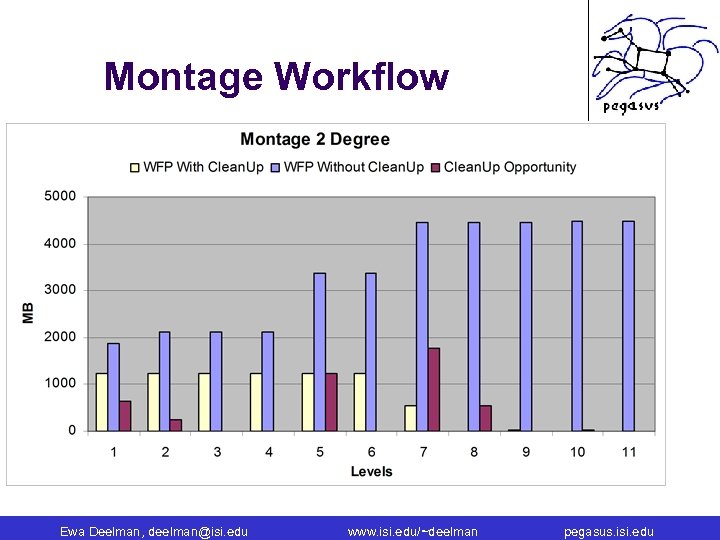

Montage Workflow

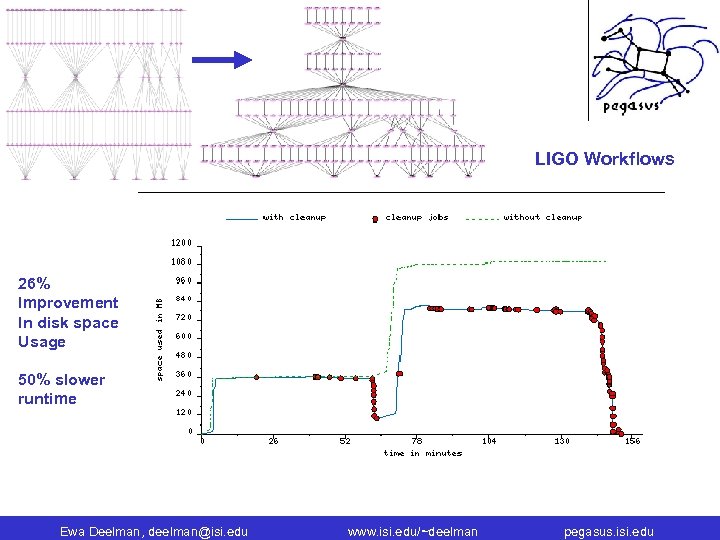

LIGO Workflows: 26% improvement in disk space usage, 50% slower runtime

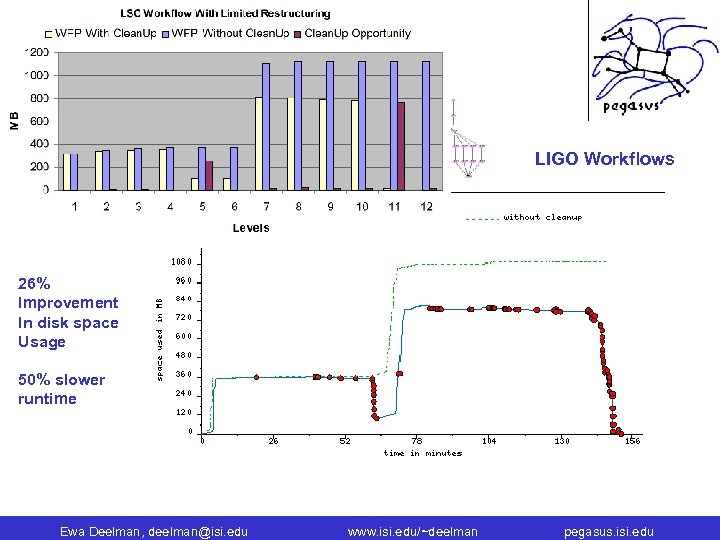

LIGO Workflows: 26% improvement in disk space usage, 50% slower runtime

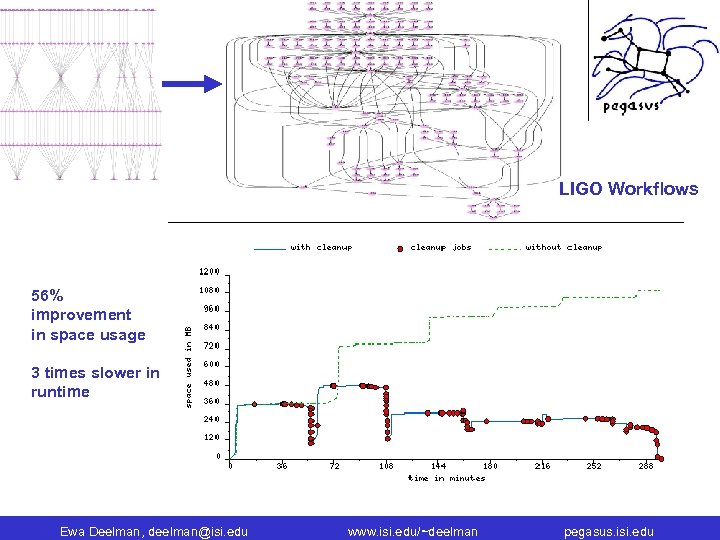

LIGO Workflows: 56% improvement in space usage, 3 times slower in runtime

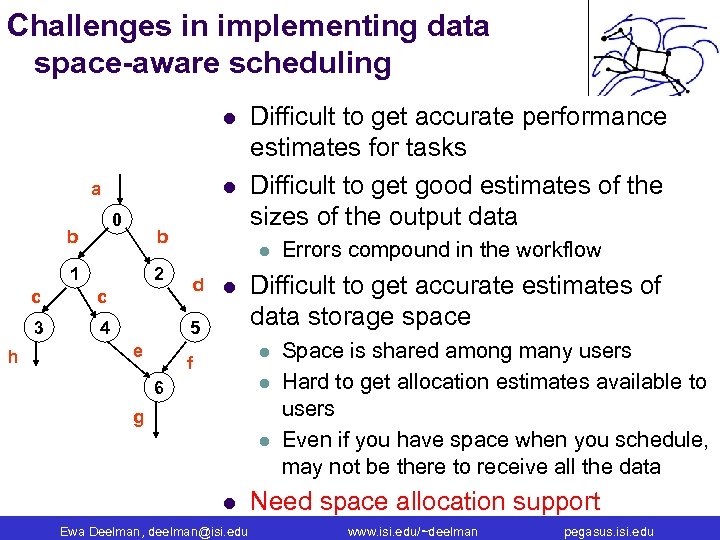

Challenges in implementing data space-aware scheduling

(Figure: example workflow with tasks 0 to 6 and data files a to h.)

- Difficult to get accurate performance estimates for tasks
- Difficult to get good estimates of the sizes of the output data
- Difficult to get accurate estimates of available data storage space
- Errors compound in the workflow
- Space is shared among many users
- Hard to get estimates of the space allocation available to a user
- Even if you have space when you schedule, it may not be there to receive all the data
- Need space allocation support
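A common way to hedge against the estimation errors listed above is to require headroom when picking a site. The sketch below chooses the fastest site whose reported free space covers the estimated output size inflated by a safety margin. It is an illustrative assumption, not the scheduler from this work: `choose_site`, the site tuples, and the default `margin` of 25% are hypothetical. It also cannot fix the last problem on the list, since space seen at scheduling time may be consumed by other users before the data arrive; that requires allocation support.

```python
def choose_site(sites, est_output_bytes, margin=0.25):
    """sites: {name: (free_bytes, est_runtime)}.

    Return the fastest site whose free space covers the estimated output
    inflated by `margin` (a hypothetical safety factor), or None if none fits.
    """
    needed = est_output_bytes * (1 + margin)
    fits = [(runtime, name) for name, (free, runtime) in sites.items() if free >= needed]
    return min(fits)[1] if fits else None
```

With a fast site reporting 100 units free and a slow one reporting 1,000, an estimated 90-unit output goes to the slow site under a 25% margin but to the fast site with no margin, which shows how the choice of margin trades runtime against the risk of running out of space.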

Conclusions
- Data are an important part of today's applications and need to be managed
- Optimizing workflow disk space usage
  - Data workflow footprint concept applicable within one resource
  - Data-aware scheduling across resources
- Designed an algorithm that can clean up the data as a workflow progresses
  - The effectiveness of the algorithm depends on the structure of the workflow and its data characteristics
  - Workflow restructuring may be needed to decrease the footprint

Acknowledgments
- Henan Zhao, Rizos Sakellariou: University of Manchester, UK
- Kent Blackburn, Duncan Brown, Stephen Fairhurst, David Meyers: LIGO, Caltech, USA
- G. Bruce Berriman, John Good, Daniel S. Katz: Montage, Caltech and LSU, USA
- Miron Livny and Kent Wenger: DAGMan, UW Madison
- Gurmeet Singh, Karan Vahi, Arun Ramakrishnan, Gaurang Mehta: USC Information Sciences Institute, Pegasus

Relevant Links
- Condor: www.cs.wisc.edu/condor
- Pegasus: pegasus.isi.edu
- LIGO: www.ligo.caltech.edu
- Montage: montage.ipac.caltech.edu/
- Open Science Grid: www.opensciencegrid.org
- Workflows for e-Science, I. J. Taylor, E. Deelman, D. B. Gannon, M. Shields (Eds.), Springer, Dec. 2006
- NSF Workshop on Challenges of Scientific Workflows: www.isi.edu/nsf-workflows06, E. Deelman and Y. Gil (chairs)
- OGF Workflow research group: www.isi.edu/~deelman/wfm-rg
