
- Number of slides: 25

Optimism in the Face of Uncertainty: A Unifying Approach

István Szita & András Lőrincz, Eötvös Loránd University, Hungary


Outline
- topic: efficient exploration
- background
- quick overview of exploration methods
- construction of the new algorithm
- analysis & experimental results
- outlook


Background
- Markov decision processes
  - finite, discounted
  - (…but wait until the end of the talk)
- value function-based methods
  - Q(x, a) values
- the efficient exploration problem


Basic exploration: ε-greedy
- extremely simple
- sufficient for convergence in the limit
  - for many classical methods like Q-learning, Dyna, Sarsa
  - …under suitable conditions
- extremely inefficient

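The optimistic methods below can be compared against ε-greedy action selection, which fits in a few lines. The following is a minimal Python sketch. The function name `epsilon_greedy` and its arguments are illustrative and do not come from the talk.

```python
import random
from typing import Sequence


def epsilon_greedy(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Return a random action with probability epsilon, otherwise a greedy one."""
    if rng.random() < epsilon:
        # Explore: every action is equally likely, regardless of its value.
        return rng.randrange(len(q_values))
    # Exploit: index of the largest Q(x, a); max() keeps the first of tied actions.
    return max(range(len(q_values)), key=lambda a: q_values[a])


rng = random.Random(0)
q = [0.1, 0.5, 0.2]
picks = [epsilon_greedy(q, 0.1, rng) for _ in range(1000)]
print("fraction of picks of action 1:", picks.count(1) / len(picks))
```

The exploration here is undirected. A random action is just as likely to revisit a well-known pair as to try an untried one, which is one way to see why ε-greedy is so inefficient.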

Advanced exploration
- in case of uncertainty, be optimistic!
  - …details vary
- we will use concepts from
  - R-max
  - optimistic initial values
  - exploration bonus methods
  - model-based interval estimation
- there are many others
  - Bayesian methods
  - UCT
  - delayed Q-learning
  - …


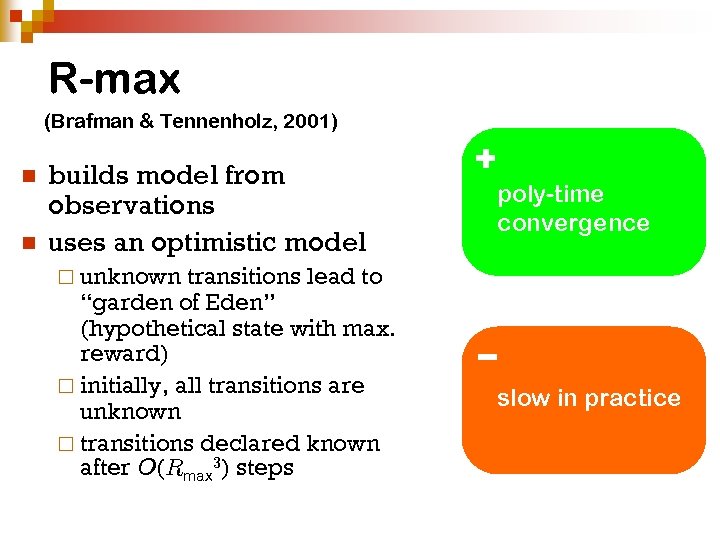

R-max (Brafman & Tennenholtz, 2001)
- builds a model from observations
- uses an optimistic model
  - unknown transitions lead to the “Garden of Eden” (a hypothetical state with maximal reward)
  - initially, all transitions are unknown
  - transitions are declared known after O(Rmax³) steps
- pro: poly-time convergence
- con: slow in practice

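The R-max optimistic model can be sketched as value iteration on the estimated model, where every pair visited fewer than `m` times is treated as jumping to the absorbing Eden state. This is a minimal Python sketch and not Brafman & Tennenholtz's pseudocode. Several choices are assumptions made for illustration:
- the threshold `m`;
- the dictionary layout of `trans` and `rew_sum`;
- Eden paying `r_max` at every step.

```python
from typing import Dict, List, Tuple

Pair = Tuple[int, int]


def rmax_q_values(n_states: int, n_actions: int,
                  trans: Dict[Pair, Dict[int, int]], rew_sum: Dict[Pair, float],
                  m: int, r_max: float, gamma: float, sweeps: int = 500) -> List[List[float]]:
    """Value iteration on an R-max style optimistic model.

    trans[(x, a)] maps next states y to observed counts; rew_sum[(x, a)] is the
    total reward observed on (x, a). Pairs with fewer than m visits are unknown.
    """
    v_eden = r_max / (1.0 - gamma)   # assumption: Eden pays r_max at every step, forever
    v = [0.0] * n_states
    q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(sweeps):
        for x in range(n_states):
            for a in range(n_actions):
                nxt = trans.get((x, a), {})
                n = sum(nxt.values())
                if n < m:
                    # Unknown pair: optimistically worth as much as Eden itself.
                    q[x][a] = v_eden
                else:
                    # Known pair: empirical mean reward plus discounted expected next value.
                    expected_next = sum(c * v[y] for y, c in nxt.items()) / n
                    q[x][a] = rew_sum.get((x, a), 0.0) / n + gamma * expected_next
        v = [max(row) for row in q]
    return q


# Two states, two actions: only (0, 0) is known (it leads to state 1 with reward 0).
q = rmax_q_values(2, 2, trans={(0, 0): {1: 5}}, rew_sum={(0, 0): 0.0},
                  m=5, r_max=1.0, gamma=0.9)
print(q[0])  # the unknown action 1 looks better than the known action 0
```

A greedy agent on these values therefore picks the unknown action, which is how R-max turns optimism into exploration.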

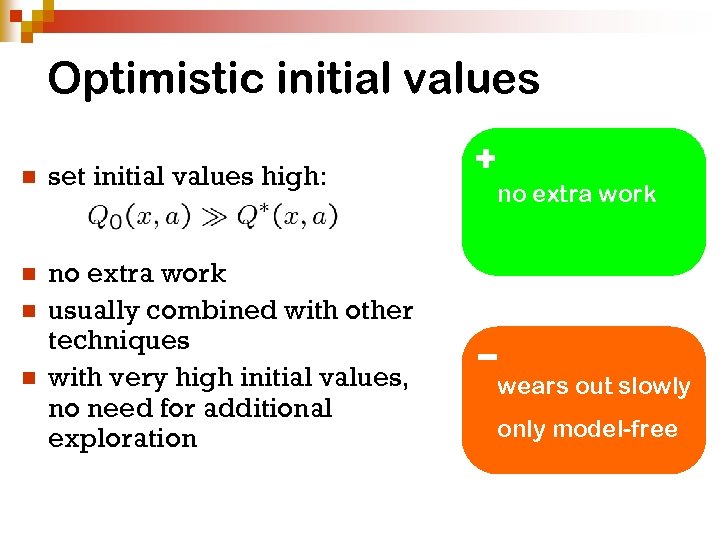

Optimistic initial values
- set initial values high
- usually combined with other techniques
- with very high initial values, no need for additional exploration
- pro: no extra work
- con: wears out slowly
- con: only model-free

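A minimal sketch of the idea is tabular Q-learning with purely greedy action selection, where the table starts at the largest achievable discounted return. That return is assumed here to be `r_max / (1 - gamma)`. The function name, the `step` callback, and the learning rate `alpha` are illustrative assumptions.

```python
from typing import Callable, List, Tuple


def optimistic_q_learning(step: Callable[[int, int], Tuple[int, float]],
                          n_states: int, n_actions: int, x0: int,
                          r_max: float, gamma: float, alpha: float,
                          n_steps: int) -> Tuple[List[List[float]], List[int]]:
    """Greedy Q-learning whose table starts at an upper bound of all returns."""
    q0 = r_max / (1.0 - gamma)      # no discounted return can exceed this
    q = [[q0] * n_actions for _ in range(n_states)]
    x, taken = x0, []
    for _ in range(n_steps):
        # Purely greedy: untried actions still carry their optimistic value.
        a = max(range(n_actions), key=lambda b: q[x][b])
        y, r = step(x, a)
        q[x][a] += alpha * (r + gamma * max(q[y]) - q[x][a])
        taken.append(a)
        x = y
    return q, taken


# A one-state problem with three actions and fixed rewards 0.2, 0.5, 0.1.
rewards = [0.2, 0.5, 0.1]
q, taken = optimistic_q_learning(lambda x, a: (0, rewards[a]), 1, 3, 0,
                                 r_max=1.0, gamma=0.9, alpha=0.5, n_steps=30)
print(taken[:3])  # [0, 1, 2]: each action is tried once before any is repeated
```

The optimism is spent only through the updates themselves. With a small `alpha` or a large `r_max`, the values therefore wear out slowly.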

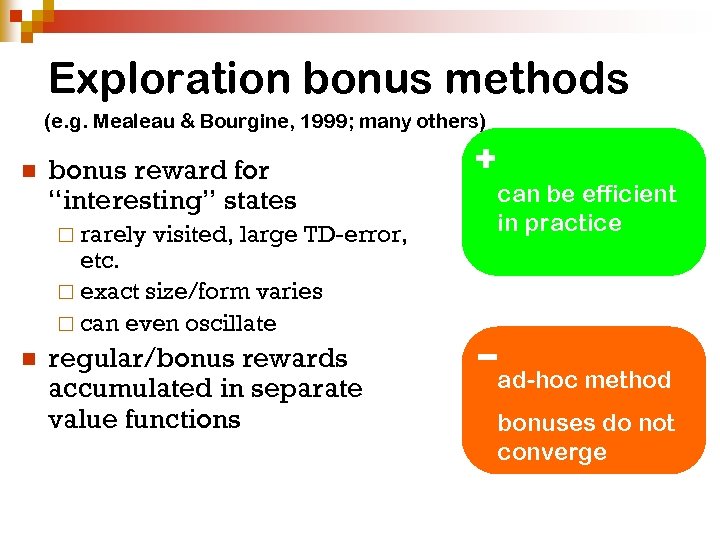

Exploration bonus methods (e.g. Meuleau & Bourgine, 1999; many others)
- bonus reward for “interesting” states
  - rarely visited, large TD-error, etc.
  - exact size/form varies
  - can even oscillate
- regular and bonus rewards accumulated in separate value functions
- pro: can be efficient in practice
- con: ad-hoc method
- con: bonuses do not converge

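The following sketch shows one possible instance, chosen only for illustration. Rarely visited pairs receive a count-based bonus `beta / sqrt(n)`. Regular and bonus rewards are learned in two separate Q-tables, and actions are chosen greedily on their sum. The bonus form, the names, and the choice to let each table bootstrap on its own maximum are all assumptions of this sketch. Published methods differ in exactly these details.

```python
import math
from typing import List

Table = List[List[float]]


def bonus_q_update(q_real: Table, q_bonus: Table, counts: List[List[int]],
                   x: int, a: int, r: float, y: int,
                   alpha: float, gamma: float, beta: float) -> float:
    """One Q-learning step that keeps regular and bonus rewards in separate tables."""
    counts[x][a] += 1
    bonus = beta / math.sqrt(counts[x][a])   # hypothetical bonus: larger for rarely visited pairs
    # Each table bootstraps on its own maximum, a simplification of this sketch.
    q_real[x][a] += alpha * (r + gamma * max(q_real[y]) - q_real[x][a])
    q_bonus[x][a] += alpha * (bonus + gamma * max(q_bonus[y]) - q_bonus[x][a])
    return bonus


def greedy_on_sum(q_real: Table, q_bonus: Table, x: int) -> int:
    """Act greedily with respect to regular value plus bonus value."""
    return max(range(len(q_real[x])), key=lambda a: q_real[x][a] + q_bonus[x][a])


q_real, q_bonus, counts = [[0.0, 0.0]], [[0.0, 0.0]], [[0, 0]]
bonuses = [bonus_q_update(q_real, q_bonus, counts, 0, 0, 0.0, 0, 0.5, 0.9, 1.0)
           for _ in range(4)]
print(bonuses)  # shrinking bonuses; q_real is untouched because r = 0
```

Because the bonus lives in its own table, the regular estimates are never contaminated by it and can be read off directly for exploitation.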

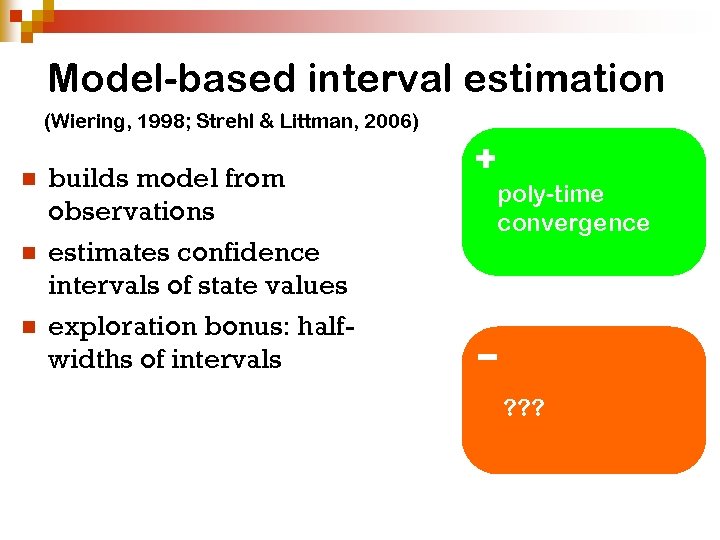

Model-based interval estimation (Wiering, 1998; Strehl & Littman, 2006)
- builds a model from observations
- estimates confidence intervals of state values
- exploration bonus: half-widths of the intervals
- pro: poly-time convergence
- con: ???

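As a hedged illustration of using interval half-widths as a bonus, here is a Hoeffding-style confidence interval for a mean reward. It assumes rewards lie in `[0, r_max]`. MBIE also builds confidence sets for the transition probabilities and propagates them through the value function. This sketch covers only the reward interval, and the function names are illustrative.

```python
import math


def hoeffding_halfwidth(n: int, delta: float, r_max: float) -> float:
    """Half-width of a (1 - delta) Hoeffding interval for a mean of n samples in [0, r_max]."""
    if n == 0:
        return math.inf
    return r_max * math.sqrt(math.log(2.0 / delta) / (2.0 * n))


def optimistic_reward(reward_sum: float, n: int, delta: float, r_max: float) -> float:
    """Upper end of the interval, clipped to r_max; the part above the mean acts as the bonus."""
    if n == 0:
        return r_max
    return min(r_max, reward_sum / n + hoeffding_halfwidth(n, delta, r_max))


for n in (1, 4, 16, 64):
    print(n, round(hoeffding_halfwidth(n, 0.05, 1.0), 3))
```

The half-width shrinks like 1/sqrt(n). A pair's bonus therefore fades only as fast as the square root of its visit count grows.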

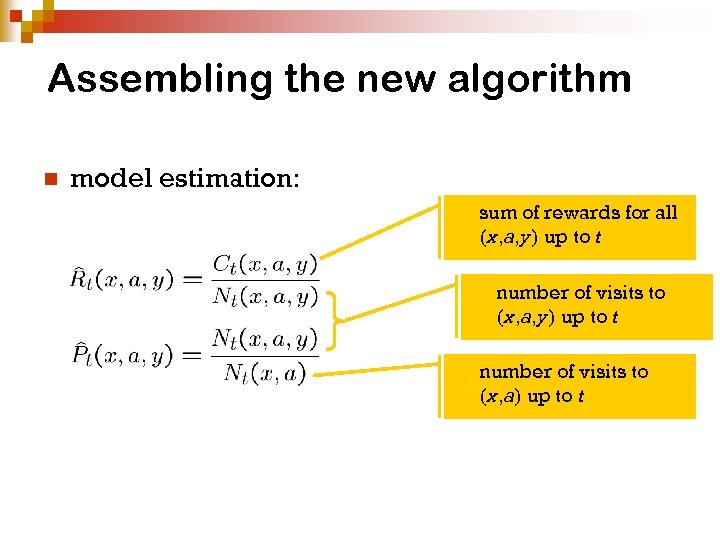

Assembling the new algorithm
- model estimation:
  - sum of rewards for all (x, a, y) up to t
  - number of visits to (x, a) up to t

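Counters like these support the usual maximum-likelihood model. The transition probability is the fraction of visits to (x, a) that ended in y. The reward estimate is the average reward observed on (x, a, y). The sketch below assumes these standard estimates and uses hypothetical names. It also keeps per-transition counts, which the reward average needs.

```python
from collections import defaultdict
from typing import DefaultDict, Tuple


class ModelEstimate:
    """Maximum-likelihood model built from visit counters and reward sums."""

    def __init__(self) -> None:
        self.n_xay: DefaultDict[Tuple, int] = defaultdict(int)      # visits of (x, a, y)
        self.n_xa: DefaultDict[Tuple, int] = defaultdict(int)       # visits of (x, a)
        self.r_sum: DefaultDict[Tuple, float] = defaultdict(float)  # reward sum on (x, a, y)

    def update(self, x, a, r: float, y) -> None:
        """Record one observed transition x --a--> y with reward r."""
        self.n_xay[(x, a, y)] += 1
        self.n_xa[(x, a)] += 1
        self.r_sum[(x, a, y)] += r

    def p_hat(self, x, a, y) -> float:
        """Estimated P(y | x, a); requires at least one visit to (x, a)."""
        return self.n_xay[(x, a, y)] / self.n_xa[(x, a)]

    def r_hat(self, x, a, y) -> float:
        """Average reward observed on (x, a, y); requires at least one such transition."""
        return self.r_sum[(x, a, y)] / self.n_xay[(x, a, y)]


model = ModelEstimate()
for r, y in [(1.0, 1), (0.0, 2), (3.0, 1)]:
    model.update(0, 0, r, y)
print(model.p_hat(0, 0, 1), model.r_hat(0, 0, 1))
```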

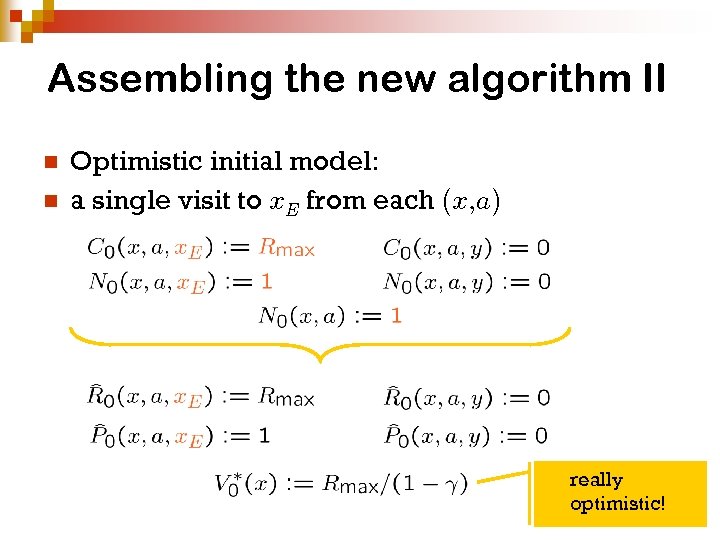

Assembling the new algorithm II
- optimistic initial model: a single visit to x_E from each (x, a)
- really optimistic!


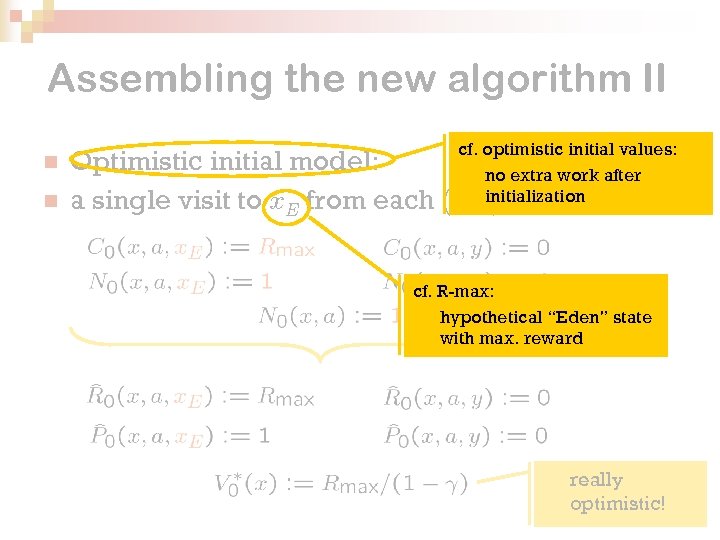

Assembling the new algorithm II
- optimistic initial model: a single visit to x_E from each (x, a)
  - cf. optimistic initial values: no extra work after initialization
  - cf. R-max: hypothetical “Eden” state with max. reward
- really optimistic!

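Assuming maximum-likelihood estimates, the optimistic initial model amounts to seeding the counters before any real experience. Every (x, a) gets one virtual transition to x_E that carries the maximal reward. This is a minimal sketch. It assumes x_E is an extra state index `n_states` and that real visits are simply added on top of the seed, and the names are hypothetical. The sketch also shows that the estimated probability of reaching x_E from (x, a) is 1/(n + 1) after n real visits.

```python
from typing import List, Tuple

Counts = List[List[List[int]]]
Sums = List[List[List[float]]]


def optimistic_initial_counts(n_states: int, n_actions: int, r_max: float) -> Tuple[Counts, Sums]:
    """Counters after initialization: one virtual visit from each (x, a) to Eden."""
    eden = n_states                              # index of the hypothetical state x_E
    n_xay = [[[0] * (n_states + 1) for _ in range(n_actions)] for _ in range(n_states)]
    r_sum = [[[0.0] * (n_states + 1) for _ in range(n_actions)] for _ in range(n_states)]
    for x in range(n_states):
        for a in range(n_actions):
            n_xay[x][a][eden] = 1               # the single virtual visit ...
            r_sum[x][a][eden] = r_max           # ... with the maximal reward
    return n_xay, r_sum


def p_eden(n_xay: Counts, x: int, a: int) -> float:
    """Estimated probability of jumping to Eden from (x, a)."""
    return n_xay[x][a][-1] / sum(n_xay[x][a])


n_xay, r_sum = optimistic_initial_counts(n_states=3, n_actions=2, r_max=1.0)
print(p_eden(n_xay, 0, 0))   # 1.0 before any real experience
n_xay[0][0][1] += 3          # three real visits (0, 0) -> 1
print(p_eden(n_xay, 0, 0))   # 0.25, i.e. 1 / (3 + 1)
```

No other bookkeeping is needed afterwards. The optimism fades automatically as real counts dilute the virtual visit.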

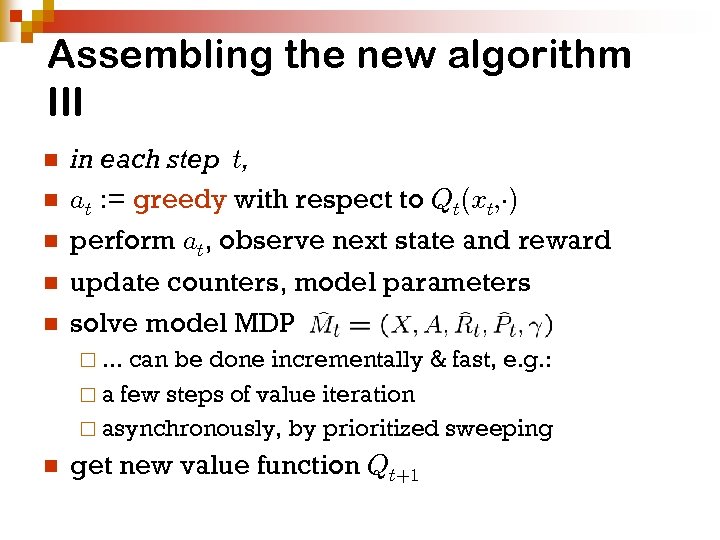

Assembling the new algorithm III
- in each step t:
  - a_t := greedy with respect to Q_t(x_t, ·)
  - perform a_t, observe next state and reward
  - update counters and model parameters
  - solve the model MDP
    - …can be done incrementally & fast, e.g.:
    - a few steps of value iteration
    - asynchronously, by prioritized sweeping
  - get new value function Q_{t+1}

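The steps above can be put together in a compact, unoptimized Python sketch. Each iteration takes a greedy action, updates the counters, and then runs a few synchronous sweeps of value iteration on the estimated model. It uses value iteration rather than prioritized sweeping.

The sketch makes three assumptions:
- maximum-likelihood model estimates;
- one virtual visit to x_E per pair;
- a value of `r_max / (1 - gamma)` for x_E.

`oim_sketch`, `step`, `sweeps_per_step`, and the test problem are hypothetical, and this is not the authors' Matlab code.

```python
from typing import Callable, List, Tuple


def oim_sketch(step: Callable[[int, int], Tuple[int, float]],
               n_states: int, n_actions: int, x0: int, r_max: float,
               gamma: float, n_steps: int,
               sweeps_per_step: int = 10) -> Tuple[List[List[float]], float]:
    """Greedy agent on an optimistically initialized, incrementally re-solved model."""
    eden, size = n_states, n_states + 1          # x_E gets the extra index
    v_eden = r_max / (1.0 - gamma)               # assumed value of x_E
    # Counters with one virtual visit (x, a) -> x_E paying r_max.
    n_xay = [[[0] * size for _ in range(n_actions)] for _ in range(n_states)]
    r_sum = [[[0.0] * size for _ in range(n_actions)] for _ in range(n_states)]
    for x in range(n_states):
        for a in range(n_actions):
            n_xay[x][a][eden], r_sum[x][a][eden] = 1, r_max
    q = [[v_eden] * n_actions for _ in range(n_states)]
    v = [v_eden] * size
    x, total = x0, 0.0
    for _ in range(n_steps):
        a = max(range(n_actions), key=lambda b: q[x][b])   # always greedy
        y, r = step(x, a)                                  # perform a_t
        total += r
        n_xay[x][a][y] += 1                                # update counters
        r_sum[x][a][y] += r
        for _ in range(sweeps_per_step):                   # partially solve the model MDP
            for s in range(n_states):
                for b in range(n_actions):
                    counts, rewards = n_xay[s][b], r_sum[s][b]
                    # sum_z P(z|s,b) * (R(s,b,z) + gamma * V(z)), written with raw counters
                    q[s][b] = sum(rewards[z] + gamma * counts[z] * v[z]
                                  for z in range(size)) / sum(counts)
            v = [max(row) for row in q] + [v_eden]
        x = y
    return q, total


def two_state_step(x: int, a: int) -> Tuple[int, float]:
    """State 0: action 0 stays for reward 0.1, action 1 moves to state 1 for 0.
    State 1 is absorbing and pays 1 per step."""
    if x == 1:
        return 1, 1.0
    return (0, 0.1) if a == 0 else (1, 0.0)


q, total = oim_sketch(two_state_step, n_states=2, n_actions=2, x0=0,
                      r_max=1.0, gamma=0.9, n_steps=50)
print(q[0], total)
```

In this toy run the agent leaves the 0.1-reward loop after a single try, because the untried action still carries the full Eden value. The fixed number of sweeps stands in for the incremental solvers mentioned above.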

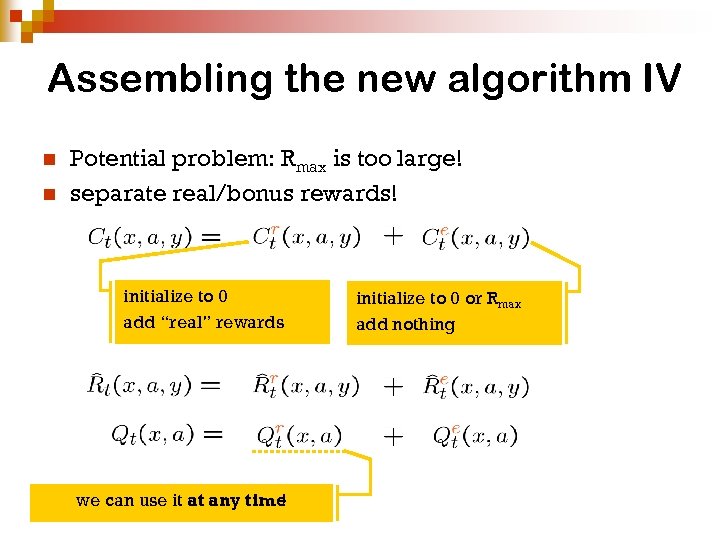

Assembling the new algorithm IV
- potential problem: Rmax is too large!
- separate real/bonus rewards!
  - real rewards: initialize to 0, add “real” rewards; we can use it at any time!
  - bonus rewards: initialize to 0 or Rmax, add nothing


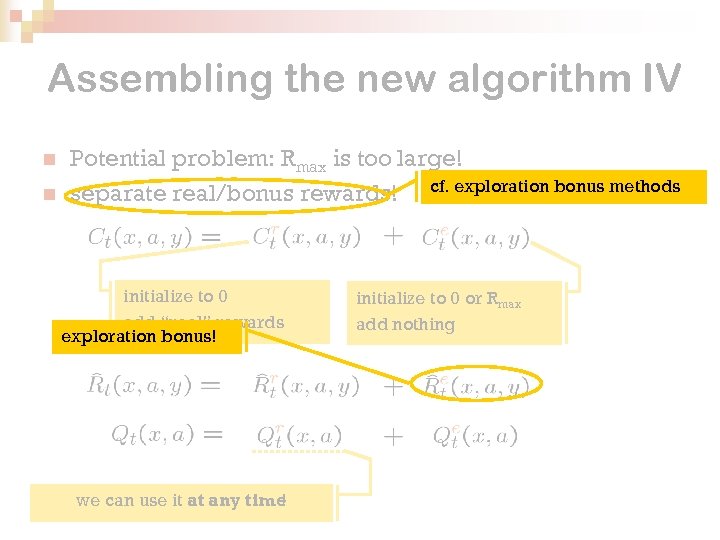

Assembling the new algorithm IV
- potential problem: Rmax is too large!
- separate real/bonus rewards! (cf. exploration bonus methods)
  - real rewards: initialize to 0, add “real” rewards; we can use it at any time!
  - bonus rewards: initialize to 0 or Rmax, add nothing → exploration bonus!

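The split can be sketched minimally as follows. It assumes maximum-likelihood estimates and one virtual transition to x_E per pair. Both parts share the visit counters:
- the real part starts at 0 and accumulates the observed rewards;
- the bonus part holds Rmax on the virtual Eden transition (0 elsewhere) and is never updated.

The class, its methods, and the `"x_E"` label are hypothetical.

```python
from collections import defaultdict
from typing import Hashable, Iterable, Tuple


class SplitRewardModel:
    """Real and bonus reward sums kept apart, sharing one set of visit counters."""

    EDEN = "x_E"   # label of the hypothetical Eden state

    def __init__(self, pairs: Iterable[Tuple[Hashable, Hashable]], r_max: float) -> None:
        self.n = defaultdict(int)          # N(x, a, y), shared by both parts
        self.c_real = defaultdict(float)   # real part: starts at 0, adds observed rewards
        self.c_bonus = defaultdict(float)  # bonus part: r_max on Eden, 0 elsewhere, never updated
        for x, a in pairs:
            self.n[(x, a, self.EDEN)] = 1
            self.c_bonus[(x, a, self.EDEN)] = r_max

    def observe(self, x, a, r: float, y) -> None:
        self.n[(x, a, y)] += 1
        self.c_real[(x, a, y)] += r        # only the real part changes

    def expected_rewards(self, x, a) -> Tuple[float, float]:
        """Expected one-step (real, bonus) reward of (x, a) under the shared counts."""
        keys = [k for k in self.n if k[:2] == (x, a)]
        total = sum(self.n[k] for k in keys)
        real = sum(self.c_real[k] for k in keys) / total
        bonus = sum(self.c_bonus[k] for k in keys) / total
        return real, bonus


model = SplitRewardModel([(0, 0)], r_max=1.0)
print(model.expected_rewards(0, 0))   # (0.0, 1.0) before any real experience
for _ in range(3):
    model.observe(0, 0, 0.5, 1)
print(model.expected_rewards(0, 0))   # (0.375, 0.25)
```

The real part can be used for exploitation at any time. It is pessimistically biased for positive rewards, because the virtual visit stays in the denominator. Summing the two parts recovers the fully optimistic model.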

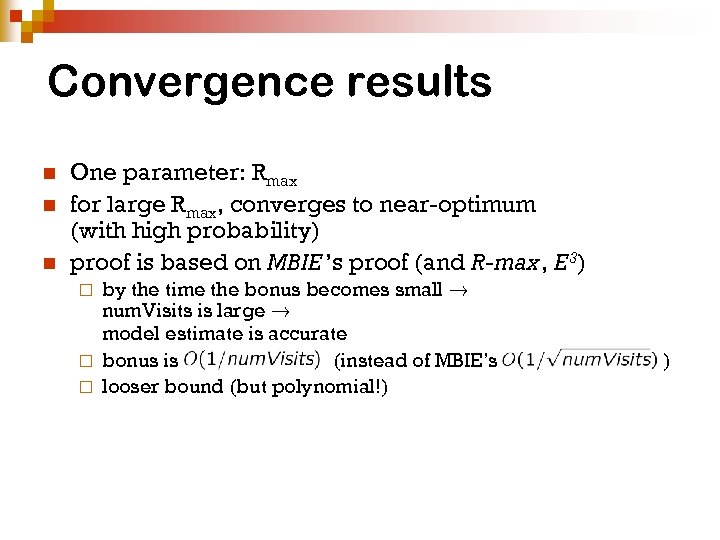

Convergence results
- one parameter: Rmax
- for large Rmax, converges to near-optimum (with high probability)
- proof is based on MBIE’s proof (and R-max, E³)
  - by the time the bonus becomes small → number of visits is large → model estimate is accurate
  - bonus is … (instead of MBIE’s …)
  - looser bound (but polynomial!)


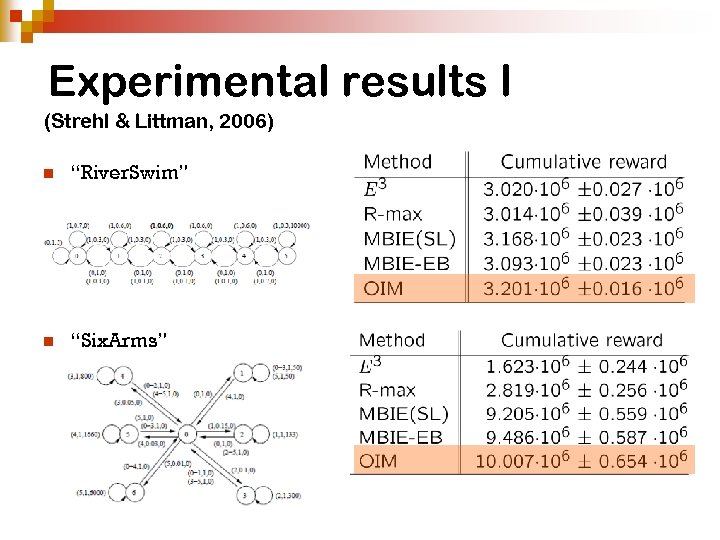

Experimental results I (Strehl & Littman, 2006)
- “RiverSwim”
- “SixArms”


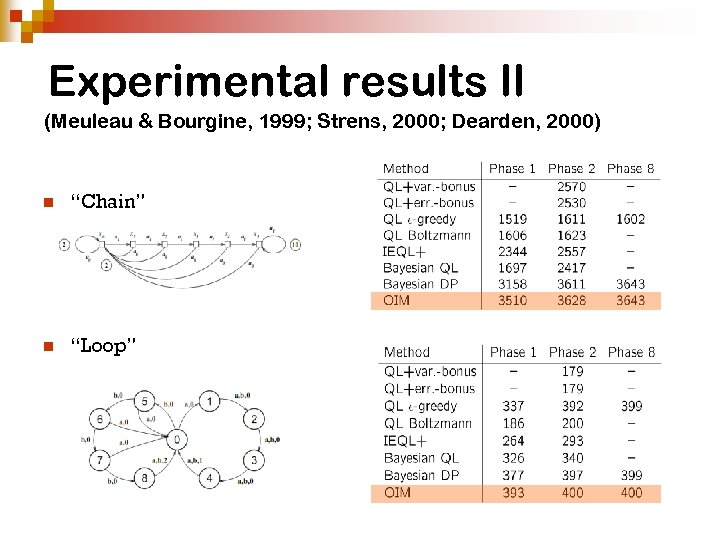

Experimental results II (Meuleau & Bourgine, 1999; Strens, 2000; Dearden, 2000)
- “Chain”
- “Loop”


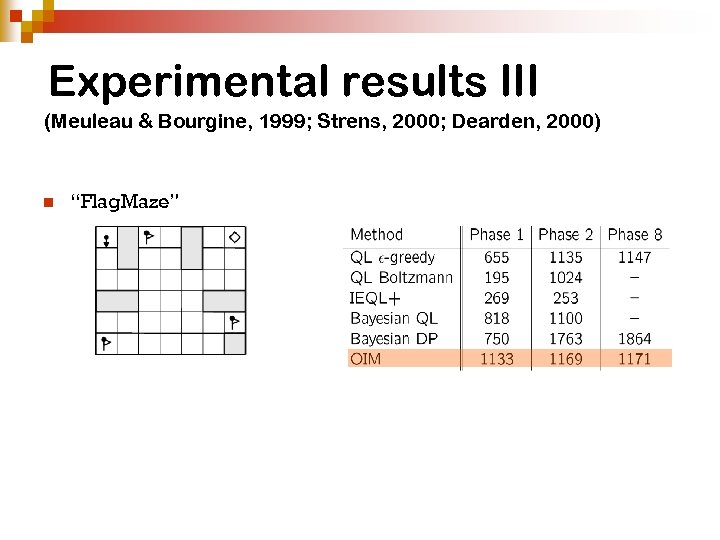

Experimental results III (Meuleau & Bourgine, 1999; Strens, 2000; Dearden, 2000)
- “FlagMaze”


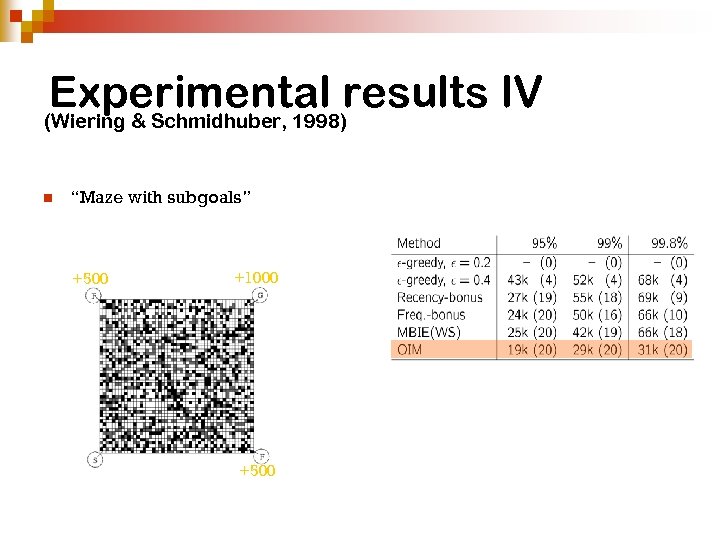

Experimental results IV (Wiering & Schmidhuber, 1998)
- “Maze with subgoals” (rewards marked in the figure: +500, +1000, +500)


Advantages of OIM
- polynomial-time convergence (to near-optimum, with high probability)
- convincing performance in practice
- extremely simple to implement
  - all work done at initialization
  - decision making is always greedy
- Matlab source code to be released soon


Outlook
- MDPs are nice, but we need more!
- extension to factored MDPs: almost ready
  - (we need benchmarks)
- extension to general function approximation: in progress


Thank you for your attention! Check our web pages at http://szityu.web.eotvos.elte.hu and http://people.inf.elte.hu/lorincz/, or my reinforcement learning blog “Gimme Reward” at http://gimmereward.wordpress.com


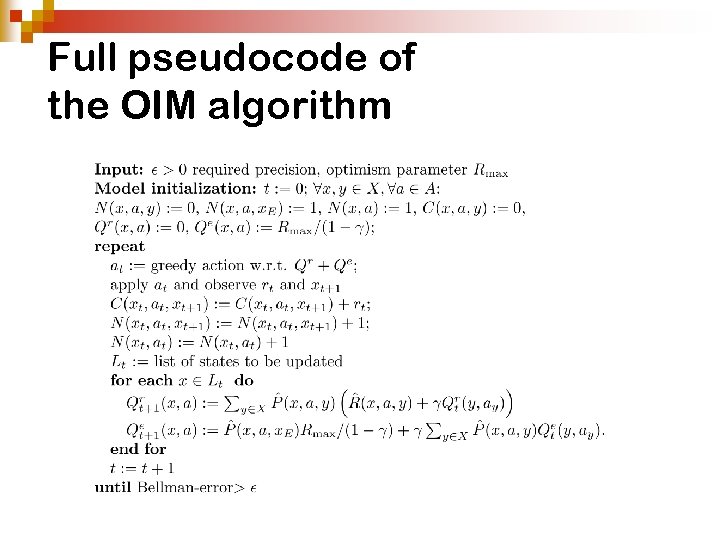

Full pseudocode of the OIM algorithm


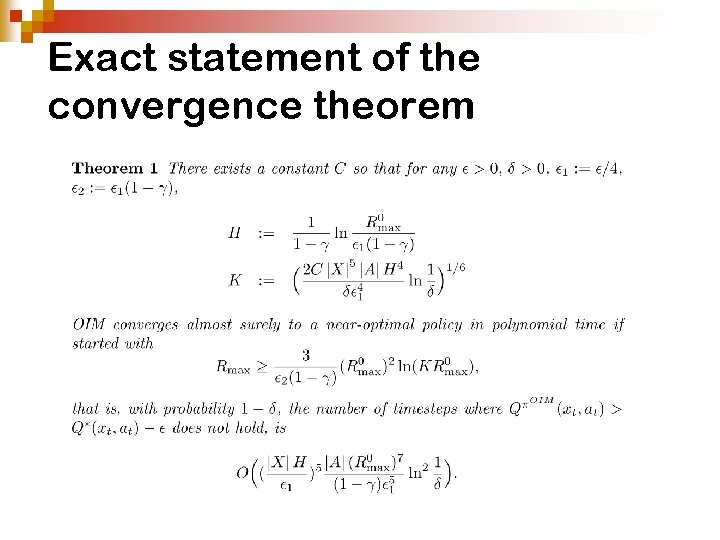

Exact statement of the convergence theorem
