c0d5709c79de436fb8086175991e61c9.ppt

- Number of slides: 8

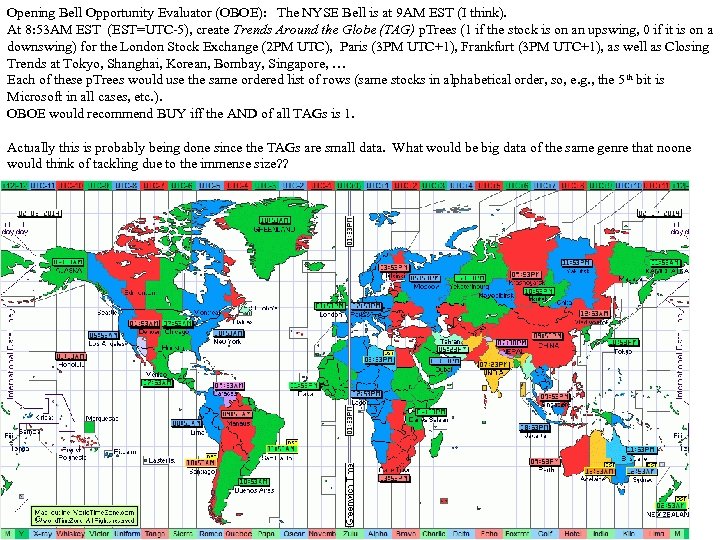

Opening Bell Opportunity Evaluator (OBOE): The NYSE opening bell is at 9:30 AM EST. At 8:53 AM EST (EST = UTC-5), create Trends Around the Globe (TAG) pTrees (1 if the stock is on an upswing, 0 if it is on a downswing) for the London Stock Exchange (2 PM UTC), Paris (3 PM UTC+1), Frankfurt (3 PM UTC+1), as well as closing trends at Tokyo, Shanghai, Korea, Bombay, Singapore, … Each of these pTrees would use the same ordered list of rows (the same stocks in alphabetical order, so, e.g., the 5th bit is Microsoft in all cases). OBOE would recommend BUY iff the AND of all TAGs is 1. This is probably already being done, since the TAGs are small data. What would be big data of the same genre that no one would think of tackling due to its immense size?
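A minimal sketch of the OBOE rule, assuming each TAG pTree is packed into a Python int whose bit i is the trend bit of the i-th stock in the shared alphabetical order. The function names, tickers, and trend bits below are hypothetical; the point is that the recommendation is one horizontal AND across all TAG pTrees.

```python
from functools import reduce


def tag_ptree(trend_bits):
    """Pack 0/1 trend bits (row i = i-th stock alphabetically) into an int bit vector."""
    mask = 0
    for i, bit in enumerate(trend_bits):
        if bit:
            mask |= 1 << i
    return mask


def oboe_buy_list(tickers, tag_bit_lists):
    """Return the stocks whose bit is 1 in the AND of every TAG pTree."""
    n = len(tickers)
    if any(len(bits) != n for bits in tag_bit_lists):
        raise ValueError("every TAG pTree must use the same ordered list of stocks")
    all_ones = (1 << n) - 1
    combined = reduce(lambda a, b: a & b, (tag_ptree(b) for b in tag_bit_lists), all_ones)
    return [t for i, t in enumerate(tickers) if (combined >> i) & 1]


# Hypothetical example: three stocks, three exchanges (London, Paris, Tokyo).
tickers = ["AAPL", "IBM", "MSFT"]
tags = [[1, 0, 1], [1, 1, 1], [0, 1, 1]]
buy = oboe_buy_list(tickers, tags)
```

Here only the third stock is on an upswing at every exchange, so it is the only BUY.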

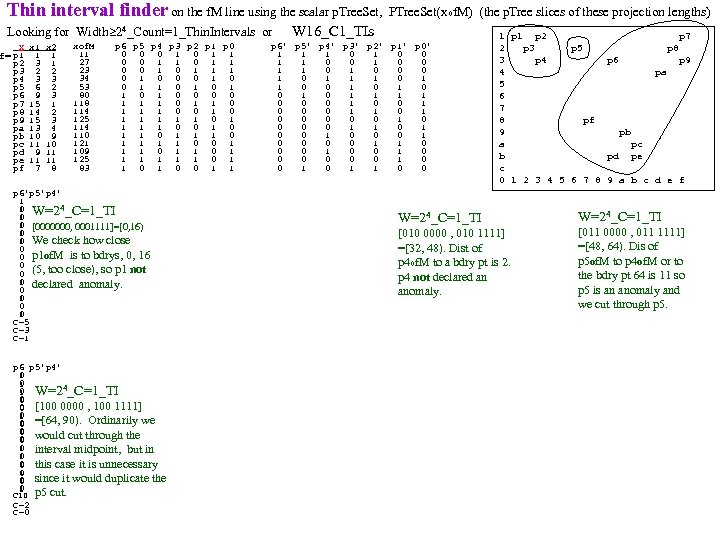

Thin-interval finder on the fM line, using the scalar pTreeSet PTreeSet(xofM) (the pTree slices p6…p0 of these projection lengths). We look for Width=2^4, Count=1 Thin Intervals (W16_C1_TIs).

| X | p1 | p2 | p3 | p4 | p5 | p6 | p7 | p8 | p9 | pa | pb | pc | pd | pe | pf |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| x1 | 1 | 3 | 2 | 3 | 6 | 9 | 15 | 14 | 15 | 13 | 10 | 11 | 9 | 11 | 7 |
| x2 | 1 | 1 | 2 | 3 | 2 | 3 | 1 | 2 | 3 | 4 | 9 | 10 | 11 | 11 | 8 |
| xofM | 11 | 27 | 23 | 34 | 53 | 80 | 118 | 114 | 125 | 114 | 110 | 121 | 109 | 125 | 83 |
| xofM (p6…p0) | 0001011 | 0011011 | 0010111 | 0100010 | 0110101 | 1010000 | 1110110 | 1110010 | 1111101 | 1110010 | 1101110 | 1111001 | 1101101 | 1111101 | 1010011 |

Counts (C) of the pTree ANDs: p6' has C=5 and p6 has C=10; p6'p5' C=3, p6'p5 C=2, p6p5' C=2, p6p5 C=8. At width 2^4:

| Prefix (p6 p5 p4) | 000 | 001 | 010 | 011 | 100 | 101 | 110 | 111 |
|---|---|---|---|---|---|---|---|---|
| Interval | [0, 16) | [16, 32) | [32, 48) | [48, 64) | [64, 80) | [80, 96) | [96, 112) | [112, 128) |
| C | 1 | 2 | 1 | 1 | 0 | 2 | 2 | 6 |

- W=2^4_C=1_TI [000 0000, 000 1111] = [0, 16): We check how close p1ofM (11) is to the boundaries 0 and 16. It is 5 from 16, too close, so p1 is not declared an anomaly.
- W=2^4_C=1_TI [010 0000, 010 1111] = [32, 48): The distance of p4ofM (34) to a boundary point (32) is 2, so p4 is not declared an anomaly.
- W=2^4_C=1_TI [011 0000, 011 1111] = [48, 64): p5ofM (53) is 19 from p4ofM and 11 from the boundary point 64, so p5 is an anomaly and we cut through p5.
- [100 0000, 100 1111] = [64, 80) is empty (C=0). Ordinarily we would cut through the interval midpoint, but in this case it is unnecessary since it would duplicate the p5 cut.

[Figure: p1–pf plotted on a 16×16 grid, axes 0–f.]
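The interval search above can be sketched as follows, assuming plain Python ints stand in for pTrees (bit i = row i). The sketch descends from the high-order slice p6, ANDing either p_j or its complement p_j' and pruning count-0 branches, which mirrors the C=… counts on the slide. Function names are hypothetical, and the anomaly decision is left to the caller because the slide also weighs distances to neighboring points (p5 versus p4).

```python
def bit_slices(values, nbits):
    """Vertical bit slices: slices[j] has bit i set iff bit j of values[i] is 1."""
    slices = [0] * nbits
    for i, v in enumerate(values):
        for j in range(nbits):
            if (v >> j) & 1:
                slices[j] |= 1 << i
    return slices


def thin_intervals(values, nbits, k, target=1):
    """Width-2**k intervals holding exactly `target` values, found by ANDing the
    high-order slices (or their complements) and pruning empty branches."""
    n = len(values)
    full = (1 << n) - 1
    slices = bit_slices(values, nbits)
    found = []

    def descend(j, mask, prefix):
        if mask == 0:
            return  # count 0: nothing below this prefix
        if j < k:
            if bin(mask).count("1") == target:
                lo = prefix << k
                rows = [i for i in range(n) if (mask >> i) & 1]
                found.append((lo, lo + (1 << k), rows))
            return
        descend(j - 1, mask & ~slices[j] & full, prefix << 1)  # bit j = 0 (p_j')
        descend(j - 1, mask & slices[j], (prefix << 1) | 1)    # bit j = 1 (p_j)

    descend(nbits - 1, full, 0)
    return found


def boundary_distances(values, intervals):
    """For count-1 intervals [lo, hi): (row, distance to lo, distance to hi)."""
    return [(rows[0], values[rows[0]] - lo, hi - values[rows[0]])
            for lo, hi, rows in intervals]


# xofM projection lengths of p1..pf, copied from the slide.
xofm = [11, 27, 23, 34, 53, 80, 118, 114, 125, 114, 110, 121, 109, 125, 83]
tis = thin_intervals(xofm, nbits=7, k=4)
dists = boundary_distances(xofm, tis)
```

On these values the sketch finds exactly the p1, p4 and p5 intervals ([0, 16), [32, 48), [48, 64)), and the boundary distances reproduce the 5, 2 and 11 used in the slide's reasoning.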

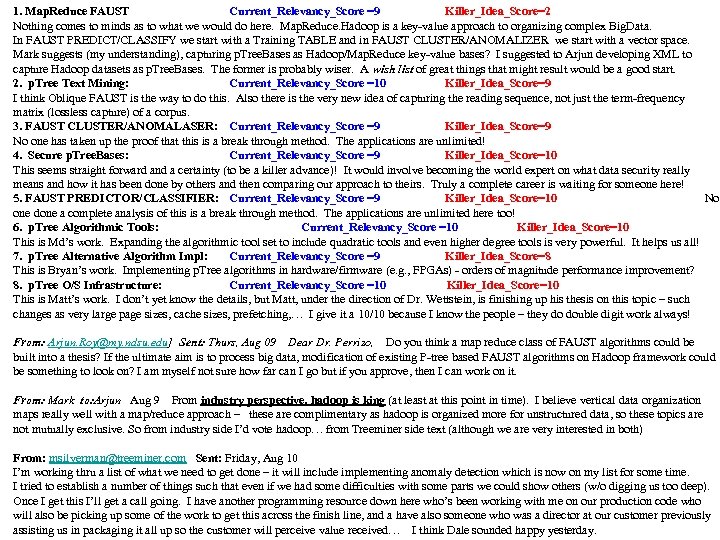

1. MapReduce FAUST: Current_Relevancy_Score=9, Killer_Idea_Score=2. Nothing comes to mind as to what we would do here. MapReduce/Hadoop is a key-value approach to organizing complex big data. In FAUST PREDICT/CLASSIFY we start with a training TABLE, and in FAUST CLUSTER/ANOMALIZER we start with a vector space. Mark suggests (as I understand it) capturing pTreeBases as Hadoop/MapReduce key-value bases. I suggested to Arjun developing XML to capture Hadoop datasets as pTreeBases. The former is probably wiser. A wish list of great things that might result would be a good start.
2. pTree Text Mining: Current_Relevancy_Score=10, Killer_Idea_Score=9. I think Oblique FAUST is the way to do this. There is also the very new idea of capturing the reading sequence of a corpus (lossless capture), not just its term-frequency matrix.
3. FAUST CLUSTER/ANOMALIZER: Current_Relevancy_Score=9, Killer_Idea_Score=9. No one has taken up the proof that this is a breakthrough method. The applications are unlimited!
4. Secure pTreeBases: Current_Relevancy_Score=9, Killer_Idea_Score=10. This seems straightforward and a certainty (to be a killer advance)! It would involve becoming the world expert on what data security really means and how others have done it, and then comparing our approach to theirs. Truly, a complete career is waiting for someone here!
5. FAUST PREDICTOR/CLASSIFIER: Current_Relevancy_Score=9, Killer_Idea_Score=10. No one has done a complete analysis showing that this is a breakthrough method. The applications are unlimited here too!
6. pTree Algorithmic Tools: Current_Relevancy_Score=10, Killer_Idea_Score=10. This is Md's work. Expanding the algorithmic tool set to include quadratic and even higher-degree tools is very powerful. It helps us all!
7. pTree Alternative Algorithm Impl: Current_Relevancy_Score=9, Killer_Idea_Score=8. This is Bryan's work: implementing pTree algorithms in hardware/firmware (e.g., FPGAs) for orders-of-magnitude performance improvement?
8. pTree O/S Infrastructure: Current_Relevancy_Score=10, Killer_Idea_Score=10. This is Matt's work. I don't yet know the details, but Matt, under the direction of Dr. Wettstein, is finishing his thesis on this topic: changes such as very large page sizes, cache sizes, prefetching, … I give it a 10/10 because I know the people; they always do double-digit work!

From: Arjun.Roy@my.ndsu.edu, Sent: Thurs, Aug 09

Dear Dr. Perrizo, do you think a MapReduce class of FAUST algorithms could be built into a thesis? If the ultimate aim is to process big data, modifying the existing pTree-based FAUST algorithms for the Hadoop framework could be something to look into. I am not sure myself how far I can go, but if you approve, I can work on it.

From: Mark, To: Arjun, Aug 9

From an industry perspective, Hadoop is king (at least at this point in time). I believe vertical data organization maps really well onto a map/reduce approach. The two are complementary, as Hadoop is organized more for unstructured data, so these topics are not mutually exclusive. So from the industry side I'd vote Hadoop… from the Treeminer side, text (although we are very interested in both).

From: msilverman@treeminer.com, Sent: Friday, Aug 10

I'm working through a list of what we need to get done; it will include implementing anomaly detection, which has been on my list for some time. I tried to establish a number of things such that even if we had difficulties with some parts, we could show others (without digging ourselves in too deep). Once I get this, I'll get a call going. I have another programming resource down here who has been working with me on our production code and who will also pick up some of the work to get this across the finish line, and I also have someone who was previously a director at our customer assisting us in packaging it all up so the customer will perceive value received… I think Dale sounded happy yesterday.

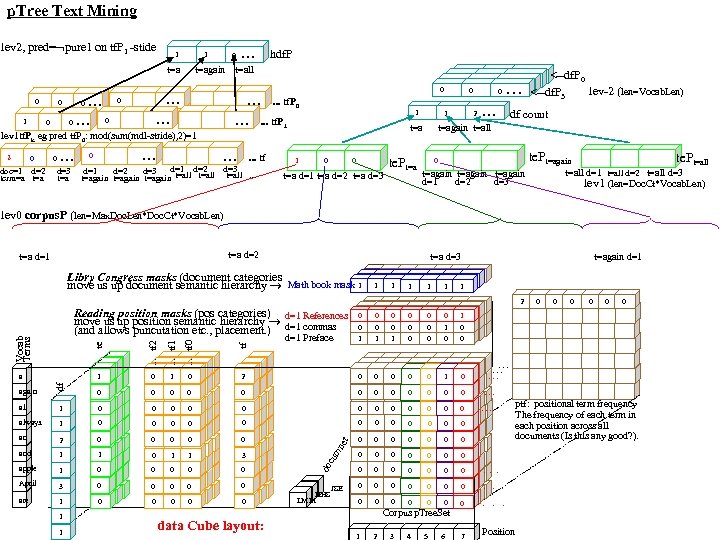

pTree Text Mining: the corpus is a data cube (term × document × reading position) stored as a three-level pTree hierarchy.

- Level 0, corpusP (len = MaxDocLen × DocCt × VocabLen): for each term t and document d, a stride of reading-position bits, 1 where t occurs at that position of d.
- Level 1 (len = DocCt × VocabLen): the term frequency tf(t, d), stored as bit-slice pTrees tfP0, tfP1, …, tfPk, plus the term-existence pTree teP. Each Level-1 bit is a predicate on an mdl-stride of Level 0 (mdl = MaxDocLen); e.g., the predicate for tfP0 is mod(sum(mdl-stride), 2) = 1.
- Level 2 (len = VocabLen): the document frequency df(t), stored as bit slices dfP0, …, dfP3, plus hdfP, whose predicate is pure1 on the tfP1 stride.
- Library of Congress masks (document categories, e.g., a Math-book mask) move us up the document semantic hierarchy.
- Reading-position masks (position categories such as Preface, References, or commas) move us up the position semantic hierarchy and allow punctuation placement, etc.
- Example corpus pTreeSet: documents JSE, HHS, LMM; positions 1–7; vocabulary terms a, again, all, always, an, and, apple, April, are, ….
- ptf (positional term frequency): the frequency of each term in each position across all documents. (Is this any good?)
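The level structure above can be sketched with plain Python lists standing in for pTrees. This is an illustration under stated assumptions: the three-document corpus is hypothetical, Level 0 is laid out term-major with position varying fastest, and hdfP is read as "pure1 on each term's tfP1 stride". Each level up is just a predicate applied to consecutive strides of the level below.

```python
def level0_corpus_ptree(docs, vocab, mdl):
    """Level-0 corpusP: one bit per (term, doc, position); position varies fastest."""
    bits = []
    for term in vocab:
        for doc in docs:
            for pos in range(mdl):
                bits.append(1 if pos < len(doc) and doc[pos] == term else 0)
    return bits


def roll_up(bits, stride, pred):
    """Move up one level: apply pred to each consecutive stride of the lower level."""
    return [pred(bits[s:s + stride]) for s in range(0, len(bits), stride)]


# Hypothetical three-document corpus (not from the slides).
docs = [["a", "dog", "and", "a", "cat"], ["all", "a", "dog"], ["again", "and", "again"]]
vocab = sorted({w for doc in docs for w in doc})
mdl = max(len(doc) for doc in docs)  # max document length

corpus_p = level0_corpus_ptree(docs, vocab, mdl)      # len = mdl * DocCt * VocabLen
tf = roll_up(corpus_p, mdl, sum)                       # Level 1: tf(term, doc)
tf_p0 = roll_up(corpus_p, mdl, lambda s: sum(s) % 2)   # tfP0: mod(sum(mdl-stride), 2) = 1
tf_p1 = [(v >> 1) & 1 for v in tf]                     # next bit slice of tf
te_p = roll_up(corpus_p, mdl, lambda s: int(any(s)))   # term existence
df = roll_up(te_p, len(docs), sum)                     # Level 2: df(term), len = VocabLen
hdf_p = roll_up(tf_p1, len(docs), lambda s: int(all(s)))  # pure1 on each tfP1 stride
```

Note that tfP0 computed by the parity predicate coincides with the low-order bit slice of tf, so the Level-1 slices never need the integer tf values to be materialized.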

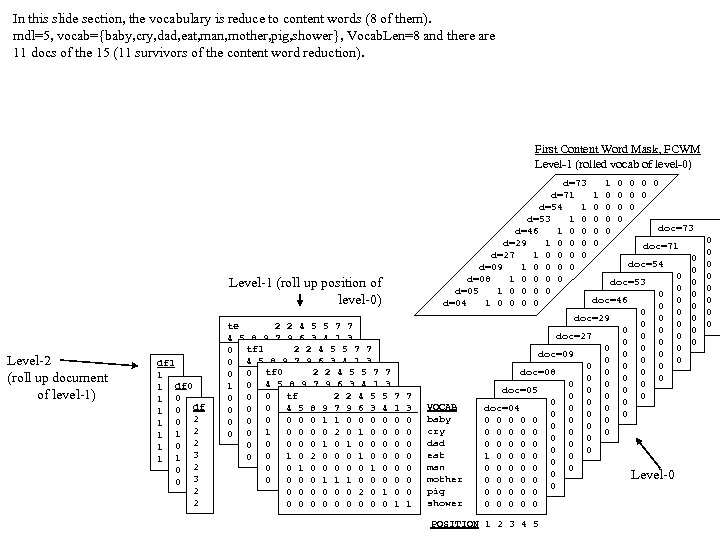

In this slide section, the vocabulary is reduced to content words (8 of them): mdl = 5, vocab = {baby, cry, dad, eat, man, mother, pig, shower}, VocabLen = 8, and 11 of the 15 documents survive the content-word reduction.

- First Content Word Mask (FCWM): Level-1 (rolled-up vocabulary of Level-0).
- Level-0: one bit per VOCAB term × document × POSITION (1–5), for docs 04, 05, 08, 09, 27, 29, 46, 53, 54, 71, 73.
- Level-1 (roll up position of Level-0): tf and te per (term, document).
- Level-2 (roll up document of Level-1): df per term.

[Figure: the Level-0 bit cube for these 11 documents, with its Level-1 (tf, te) and Level-2 (df) roll-ups.]

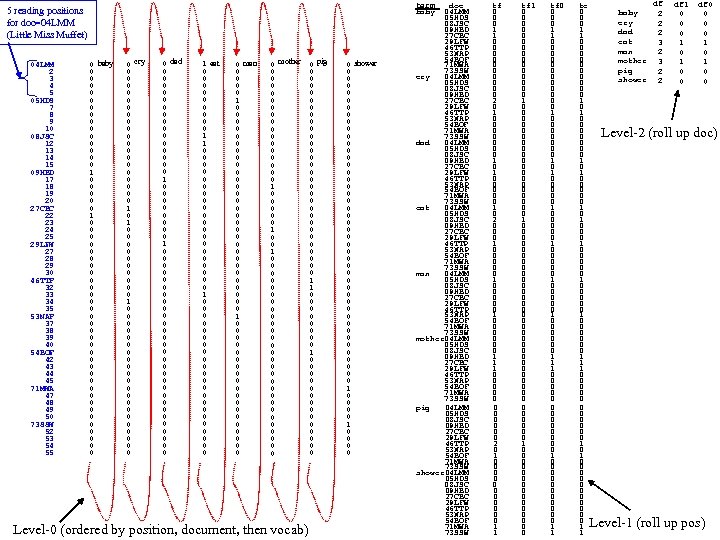

Level-0 is ordered by position, then document, then vocabulary (position varies fastest). Each term's segment therefore holds the 5 reading positions of each of the 11 documents; e.g., for term baby, bits 1–5 are the 5 reading positions of doc 04 LMM (Little Miss Muffet):

| Doc | 04 LMM | 05 HDS | 08 JSC | 09 HBD | 27 CBC | 29 LFW | 46 TTP | 53 NAP | 54 BOF | 71 MWA | 73 SSW |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Bits | 1–5 | 6–10 | 11–15 | 16–20 | 21–25 | 26–30 | 31–35 | 36–40 | 41–45 | 46–50 | 51–55 |

The same document sequence repeats for each term: baby, cry, dad, eat, man, mother, pig, shower.

- Level-1 (roll up pos): tf and te per (term, document).
- Level-2 (roll up doc): df per term.

[Figure: Level-0 bits for each term and document, with the Level-1 tf/te and Level-2 df values.]
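The layout above implies a simple offset formula, sketched here under the assumption that bits are 0-based in memory while the slide numbers positions from 1. Because every (term, doc, position) bit is kept, a document's content words can be read back in order, which is the lossless "reading sequence" idea. The helper names and the two words placed in doc 05 HDS are hypothetical, not taken from the slide's data.

```python
VOCAB = ["baby", "cry", "dad", "eat", "man", "mother", "pig", "shower"]
DOCS = ["04 LMM", "05 HDS", "08 JSC", "09 HBD", "27 CBC", "29 LFW",
        "46 TTP", "53 NAP", "54 BOF", "71 MWA", "73 SSW"]
MDL = 5  # max document length after the content-word reduction


def level0_index(t, d, p):
    """0-based offset of the bit for term t, document d (0-based), position p (1-based)."""
    return (t * len(DOCS) + d) * MDL + (p - 1)


def doc_positions(d):
    """1-based bit numbers of document d inside every term's segment."""
    start = d * MDL + 1
    return list(range(start, start + MDL))


def reading_sequence(bits, d):
    """Recover document d's content words in reading order from Level-0 bits."""
    seq = []
    for p in range(1, MDL + 1):
        for t, term in enumerate(VOCAB):
            if bits[level0_index(t, d, p)]:
                seq.append(term)
    return seq


# Hypothetical content: "man" at position 2 and "pig" at position 4 of doc 05 HDS.
bits = [0] * (len(VOCAB) * len(DOCS) * MDL)
bits[level0_index(VOCAB.index("man"), DOCS.index("05 HDS"), 2)] = 1
bits[level0_index(VOCAB.index("pig"), DOCS.index("05 HDS"), 4)] = 1
```

The total length, 8 × 11 × 5 = 440 bits, matches the MaxDocLen × DocCt × VocabLen formula for Level 0.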

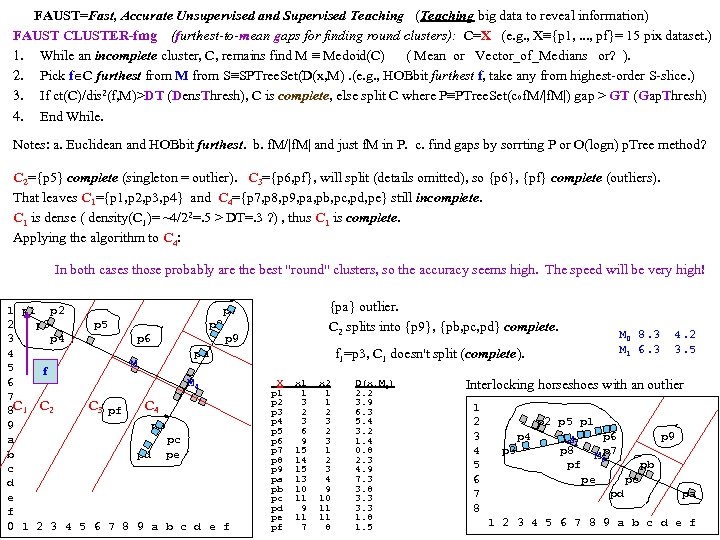

FAUST = Fast, Accurate Unsupervised and Supervised Teaching (teaching big data to reveal information).

FAUST CLUSTER-fmg (furthest-to-mean gaps, for finding round clusters): start with C = X (e.g., X ≡ {p1, …, pf}, the 15-pixel dataset).

1. While an incomplete cluster C remains, find M ≡ Medoid(C) (the mean, the vector of medians, or?).
2. Pick f ∈ C furthest from M, using S ≡ SPTreeSet(D(x, M)) (e.g., for the HOBbit-furthest f, take any point from the highest-order S-slice).
3. If $\mathrm{ct}(C)/\mathrm{dis}^2(f, M) > DT$ (density threshold), C is complete; otherwise split C wherever P ≡ PTreeSet(c∘fM/|fM|) has a gap > GT (gap threshold).
4. End While.

Notes: a. Euclidean and HOBbit furthest. b. fM/|fM| and just fM in P. c. Find gaps by sorting P, or by an O(log n) pTree method?

Results on X: C2 = {p5} is complete (a singleton, hence an outlier). C3 = {p6, pf} will split (details omitted), so {p6} and {pf} are complete (outliers). That leaves C1 = {p1, p2, p3, p4} and C4 = {p7, p8, p9, pa, pb, pc, pd, pe} still incomplete. C1 is dense (density(C1) ≈ .5 > DT = .3?); with f1 = p3, C1 does not split, so C1 is complete. Applying the algorithm to C4: {pa} is an outlier, and C2 splits into {p9} and {pb, pc, pd}, which are complete. In both cases these are probably the best "round" clusters, so the accuracy seems high. The speed will be very high!

| X | p1 | p2 | p3 | p4 | p5 | p6 | p7 | p8 | p9 | pa | pb | pc | pd | pe | pf |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| x1 | 1 | 3 | 2 | 3 | 6 | 9 | 15 | 14 | 15 | 13 | 10 | 11 | 9 | 11 | 7 |
| x2 | 1 | 1 | 2 | 3 | 2 | 3 | 1 | 2 | 3 | 4 | 9 | 10 | 11 | 11 | 8 |

[Figures: X on a 16×16 grid (axes 0–f) showing M, f, M4 and clusters C1–C4; and "Interlocking horseshoes with an outlier", showing points p1–pf with M and M1.]
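A minimal sketch of the CLUSTER-fmg loop above in plain Python rather than pTree operations. Assumptions: the mean is used as Medoid(C), "furthest" is Euclidean, gaps are found by sorting the projections (the first option in note c), and a cluster with no gap larger than GT is declared complete, which is our own choice to guarantee termination. The thresholds are parameters, so the resulting clusters depend on them.

```python
import math


def mean(points):
    """Mean vector, used here as the Medoid(C) choice."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def dist2(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def split_on_gaps(points, m, f, gap_thresh):
    """Project points onto fM/|fM|, sort, and cut wherever a gap exceeds gap_thresh."""
    d = [fi - mi for fi, mi in zip(f, m)]
    norm = math.sqrt(sum(x * x for x in d))
    proj = sorted((sum(pi * di for pi, di in zip(p, d)) / norm, p) for p in points)
    groups = [[proj[0][1]]]
    for (prev, _), (cur, p) in zip(proj, proj[1:]):
        if cur - prev > gap_thresh:
            groups.append([])
        groups[-1].append(p)
    return groups


def faust_cluster_fmg(points, density_thresh, gap_thresh):
    incomplete, complete = [list(points)], []
    while incomplete:                                  # 1. an incomplete cluster remains
        c = incomplete.pop()
        m = mean(c)
        f = max(c, key=lambda p: dist2(p, m))          # 2. furthest point from M
        r2 = dist2(f, m)
        if r2 == 0 or len(c) / r2 > density_thresh:    # 3. dense or singleton: complete
            complete.append(c)
            continue
        parts = split_on_gaps(c, m, f, gap_thresh)     # 3. else split at gaps > GT
        if len(parts) == 1:                            # no usable gap: stop here
            complete.append(c)
        else:
            incomplete.extend(parts)
    return complete


# The 15-point dataset X from the slide.
X = [(1, 1), (3, 1), (2, 2), (3, 3), (6, 2), (9, 3), (15, 1), (14, 2), (15, 3),
     (13, 4), (10, 9), (11, 10), (9, 11), (11, 11), (7, 8)]
clusters = faust_cluster_fmg(X, density_thresh=0.3, gap_thresh=2.0)
```

Every split produces strictly smaller clusters, so the loop always ends, and the output is always a partition of the input points.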

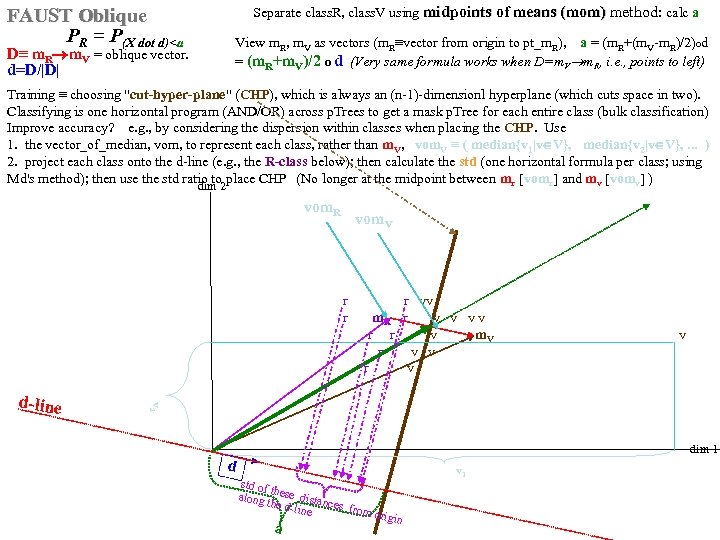

FAUST Oblique: separate classR and classV using the midpoint of means (mom) method, i.e., calculate a cut value a.

- $D \equiv m_V - m_R$ (the vector from $m_R$ to $m_V$) is the oblique vector, and $d = D/|D|$.
- View $m_R$, $m_V$ as vectors ($m_R$ ≡ the vector from the origin to point $m_R$). Then $a = \left(m_R + \frac{m_V - m_R}{2}\right)\circ d = \frac{m_R + m_V}{2}\circ d$. (The very same formula works when $D = m_R - m_V$, i.e., when D points to the left.)
- $P_R = P(X \circ d < a)$ is the mask pTree of the points classified as R.

Training ≡ choosing the "cut-hyperplane" (CHP), which is always an (n-1)-dimensional hyperplane (it cuts the space in two). Classifying is one horizontal program (AND/OR) across pTrees that yields a mask pTree for each entire class (bulk classification).

Improve accuracy? E.g., by considering the dispersion within classes when placing the CHP:

1. Use the vector of medians, vom, to represent each class rather than $m_V$: $\mathrm{vom}_V \equiv (\mathrm{median}\{v_1 \mid v \in V\}, \mathrm{median}\{v_2 \mid v \in V\}, \ldots)$.
2. Project each class onto the d-line (e.g., the R class below); then calculate the std (one horizontal formula per class, using Md's method); then use the std ratio to place the CHP (no longer at the midpoint between $m_R$ [$\mathrm{vom}_R$] and $m_V$ [$\mathrm{vom}_V$]).

[Figure: classes R (r) and V (v) in dim 1 × dim 2, showing mR, mV, vomR, vomV, the oblique vector d, the d-line, and the std of the R points' distances from the origin along the d-line.]
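A minimal sketch of FAUST Oblique training and bulk classification on plain Python lists instead of pTrees. The midpoint cut follows the formula above. For the std-ratio option the slide does not give an exact rule, so the sketch assumes one plausible choice, a = pR + (pV - pR)·sR/(sR + sV), which moves the cut away from the more dispersed class; treat that line as an assumption. Function names and the sample points are hypothetical.

```python
import math
import statistics


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def mean_vec(points):
    return [statistics.fmean(col) for col in zip(*points)]


def median_vec(points):
    """vom: the vector of per-dimension medians."""
    return [statistics.median(col) for col in zip(*points)]


def oblique_cut(R, V, rep=mean_vec, use_std_ratio=False):
    """Return (d, a): unit oblique vector d from rep(R) to rep(V) and cut value a."""
    mR, mV = rep(R), rep(V)
    D = [v - r for r, v in zip(mR, mV)]
    norm = math.sqrt(dot(D, D))
    d = [x / norm for x in D]
    pR, pV = dot(mR, d), dot(mV, d)
    if use_std_ratio:
        sR = statistics.pstdev([dot(x, d) for x in R])
        sV = statistics.pstdev([dot(x, d) for x in V])
        if sR + sV > 0:
            return d, pR + (pV - pR) * sR / (sR + sV)  # assumed std-ratio placement
    return d, (pR + pV) / 2  # midpoint of means: ((mR + mV)/2) o d


def classify_R(X, d, a):
    """Bulk classification: mask of points with X o d < a (class R)."""
    return [dot(x, d) < a for x in X]


R = [(1, 1), (2, 1), (1, 2)]
V = [(6, 6), (7, 6), (6, 7)]
d, a = oblique_cut(R, V)
mask = classify_R([(0, 0), (8, 8)], d, a)
```

Passing `rep=median_vec` swaps the class means for vectors of medians, as in improvement 1.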
