15bd90ec5f89eb89cab9afdeef0f0d0e.ppt

- Number of slides: 28

Open Science Grid Middleware at the WLCG LHCC review

Ruth Pordes, Fermilab

OSG Commitments to the WLCG

- OSG is relied on by the US LHC as their Distributed Facility in the US.
- Resources accessible through the OSG infrastructure deliver accountable cycles for the US LHC experiments.
- OSG interoperates with many other infrastructures in managerial, operational and technical activities.
- OSG cooperates specifically with the EGEE to ensure an effective and transparent distributed system for the experiments.

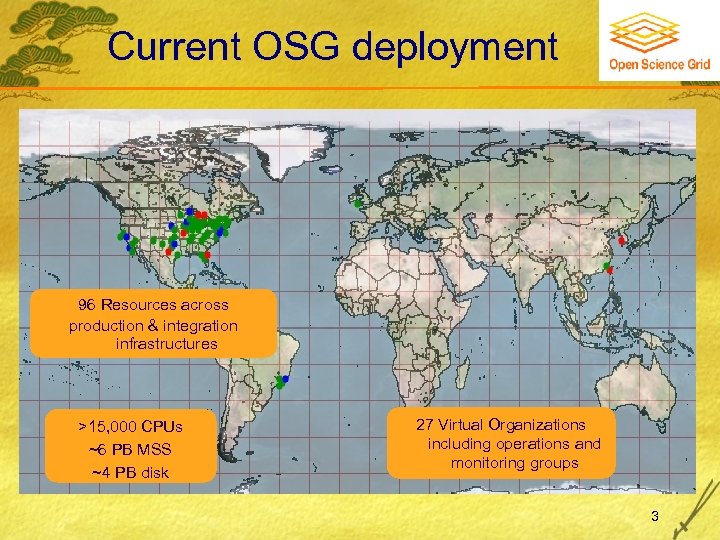

Current OSG deployment

- 96 resources across production & integration infrastructures
- >15,000 CPUs
- ~6 PB mass storage (MSS)
- ~4 PB disk
- 27 Virtual Organizations, including operations and monitoring groups

OSG Consortium & Project

- Consortium policies are to be open to participation by all researchers.
- Project responsibilities are to operate, protect, extend and support the Distributed Facility for the Consortium.
- OSG is co-funded by DOE and NSF for 5 years at $6M/year, starting in Sept '06. Its current major deliverables are for US LHC, LIGO and STAR; it is well used by CDF and D0; and it may take on major deliverables for other experiments in the future.

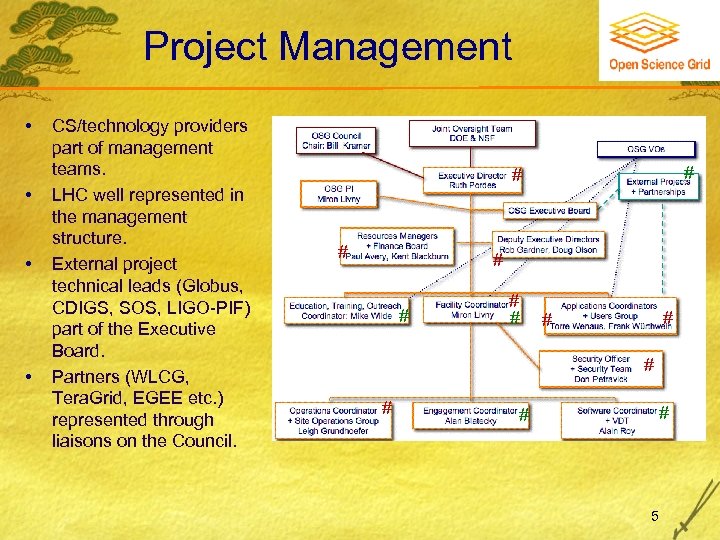

Project Management

- CS/technology providers are part of the management teams.
- LHC is well represented in the management structure.
- External project technical leads (Globus, CDIGS, SOS, LIGO-PIF) are part of the Executive Board.
- Partners (WLCG, TeraGrid, EGEE etc.) are represented through liaisons on the Council.

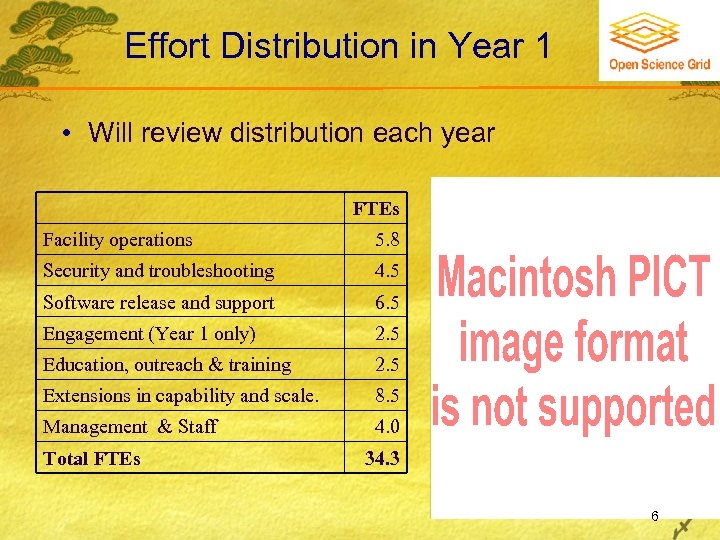

Effort Distribution in Year 1

The distribution will be reviewed each year.

| Area | FTEs |
|---|---|
| Facility operations | 5.8 |
| Security and troubleshooting | 4.5 |
| Software release and support | 6.5 |
| Engagement (Year 1 only) | 2.5 |
| Education, outreach & training | 2.5 |
| Extensions in capability and scale | 8.5 |
| Management & Staff | 4.0 |
| Total FTEs | 34.3 |
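
As a quick arithmetic check, the per-area allocations sum to the stated total of 34.3 FTEs. The sketch below restates the Year 1 figures as a Python dictionary. The percentage breakdown it prints is an illustration added here and is not a figure from the slides.

```python
# Year-1 FTE allocation, restated from the effort-distribution table.
effort_ftes = {
    "Facility operations": 5.8,
    "Security and troubleshooting": 4.5,
    "Software release and support": 6.5,
    "Engagement (Year 1 only)": 2.5,
    "Education, outreach & training": 2.5,
    "Extensions in capability and scale": 8.5,
    "Management & Staff": 4.0,
}

# Round to one decimal place to remove floating-point noise from the sum.
total = round(sum(effort_ftes.values()), 1)
print(f"Total FTEs: {total}")  # Total FTEs: 34.3

# Share of the total per area, largest first (illustrative, not from the slides).
for area, ftes in sorted(effort_ftes.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{area:<36} {ftes:4.1f} FTE  {100 * ftes / total:5.1f}%")
```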

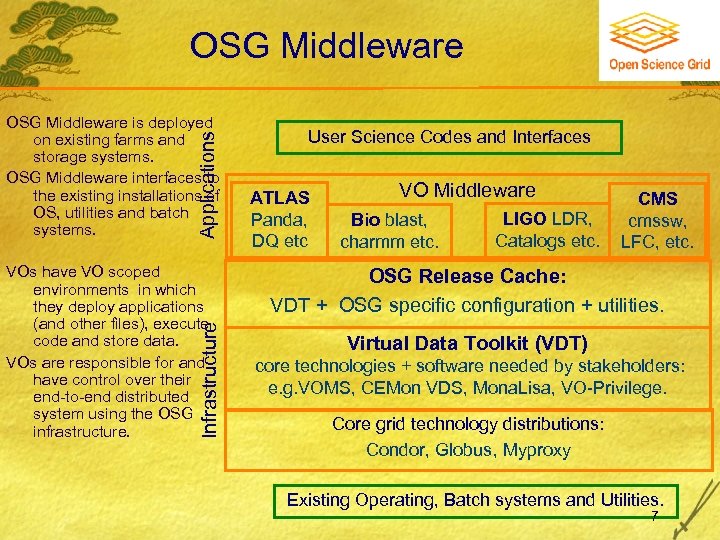

OSG Middleware

- OSG Middleware is deployed on existing farms and storage systems.
- OSG Middleware interfaces to the existing installations of OS, utilities and batch systems.
- VOs have VO-scoped environments in which they deploy applications (and other files), execute code and store data.
- VOs are responsible for, and have control over, their end-to-end distributed system using the OSG infrastructure.

The software stack, from top to bottom:

- Applications
  - User science codes and interfaces
  - VO middleware: ATLAS (Panda, DQ etc.), CMS (cmssw, LFC etc.), LIGO (LDR, catalogs etc.), Bio (blast, charmm etc.)
- Infrastructure
  - OSG Release Cache: VDT + OSG-specific configuration + utilities
  - Virtual Data Toolkit (VDT): core technologies + software needed by stakeholders, e.g. VOMS, CEMon, VDS, MonALISA, VO-Privilege
  - Core grid technology distributions: Condor, Globus, MyProxy
  - Existing operating systems, batch systems and utilities
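
The middleware layering can be read as an ordered list in which each layer builds on the ones below it. The following is a minimal sketch of that reading. Only the grouping of components comes from the slide. The `STACK` structure and the `layer_of` and `builds_on` helpers are hypothetical names introduced for illustration.

```python
# Layers of the OSG software stack, bottom to top; grouping taken from the slide.
# STACK, layer_of and builds_on are hypothetical, for illustration only.
STACK = [
    ("Existing operating, batch systems and utilities", []),
    ("Core grid technology distributions", ["Condor", "Globus", "MyProxy"]),
    ("Virtual Data Toolkit (VDT)", ["VOMS", "CEMon", "VDS", "MonALISA", "VO-Privilege"]),
    ("OSG Release Cache", ["VDT", "OSG-specific configuration", "utilities"]),
    ("VO middleware", ["Panda", "DQ", "cmssw", "LFC", "LDR", "blast", "charmm"]),
    ("User science codes and interfaces", []),
]


def layer_of(component: str) -> int:
    """Return the 0-based layer index of a component; raise KeyError if unknown."""
    for index, (_, components) in enumerate(STACK):
        if component in components:
            return index
    raise KeyError(component)


def builds_on(upper: str, lower: str) -> bool:
    """True if `upper` sits in a higher layer than `lower`."""
    return layer_of(upper) > layer_of(lower)


print(STACK[layer_of("VOMS")][0])  # Virtual Data Toolkit (VDT)
```

In this model, VO tools such as Panda sit above common middleware such as VOMS, which matches the point that VOs build on top of the OSG-provided middleware.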

Applications & Middleware

- OSG does not provide application-level tools.
  - OSG provides middleware.
  - VOs build on top of the middleware.
- OSG packages, tests and distributes common middleware needed by multiple VOs.

VO Responsibilities

- VOs make their priorities clear for the common middleware.
- VOs contribute to the testing of new OSG releases.
- VOs consider whether middleware they develop or adopt is of common use, and are prepared to contribute it.
- VOs often use (and therefore harden) new components in the VO environment before they become part of the common middleware.

The VDT

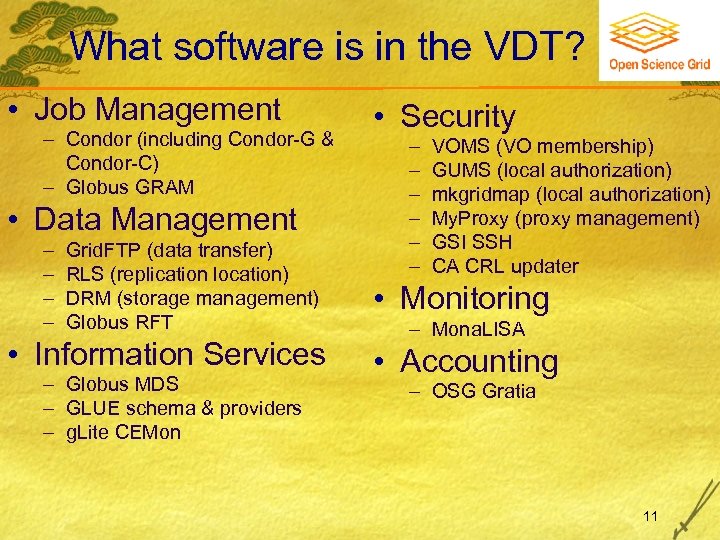

What software is in the VDT?

- Job Management
  - Condor (including Condor-G & Condor-C)
  - Globus GRAM
- Data Management
  - GridFTP (data transfer)
  - RLS (replica location)
  - DRM (storage management)
  - Globus RFT
- Information Services
  - Globus MDS
  - GLUE schema & providers
  - gLite CEMon
- Security
  - VOMS (VO membership)
  - GUMS (local authorization)
  - mkgridmap (local authorization)
  - MyProxy (proxy management)
  - GSI SSH
  - CA CRL updater
- Monitoring
  - MonALISA
- Accounting
  - OSG Gratia

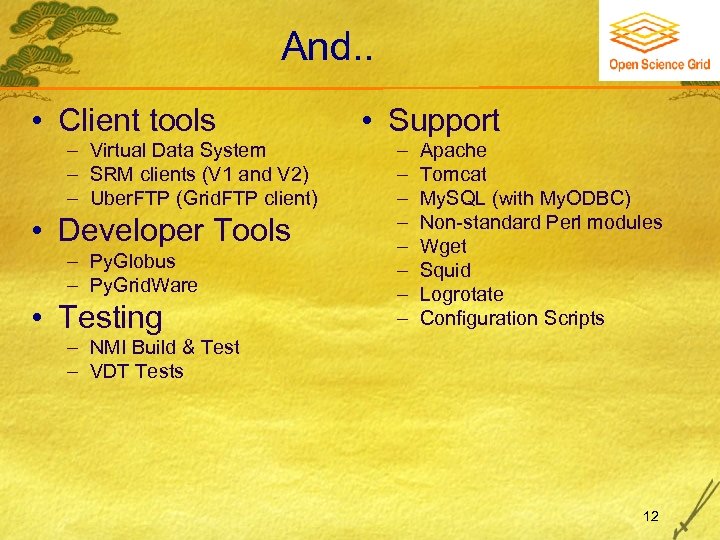

And...

- Client tools
  - Virtual Data System
  - SRM clients (V1 and V2)
  - UberFTP (GridFTP client)
- Developer Tools
  - PyGlobus
  - PyGridWare
- Testing
  - NMI Build & Test
  - VDT Tests
- Support
  - Apache
  - Tomcat
  - MySQL (with MyODBC)
  - Non-standard Perl modules
  - Wget
  - Squid
  - Logrotate
  - Configuration Scripts

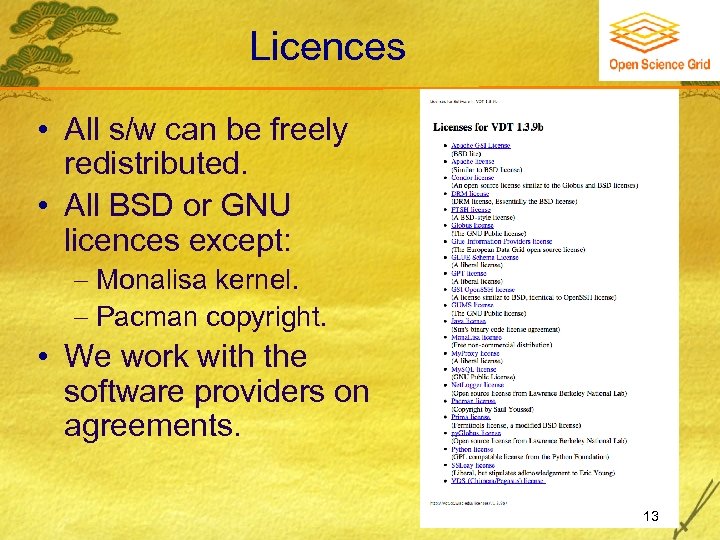

Licences

- All software can be freely redistributed.
- All components are under BSD or GNU licences, except:
  - the MonALISA kernel;
  - Pacman (copyright).
- We work with the software providers on agreements.

Agreements for Software in the VDT

- When software is added, a questionnaire is filled out.
- Addition of the software is agreed to by the Executive Director.
- In future, a security audit will be included.

Integrated Security

- Risk assessment of middleware is included in security management, operations, and technical controls:
  - auditing of software;
  - checking of the software repositories;
  - etc.
- Each site has security contacts for communication and incident response.
- The OSG Security Officer decides, with consultation, the priority and mechanisms for addressing a vulnerability.
- Security patches are treated with high priority for testing and deployment.

Software Support

- The VDT/OSG team provides front-line support, then works with the software providers to solve problems reported by administrators and users.
- Issues:
  - Some of the software is supported on a best-effort basis.
  - Some of the software has support funding for the short term only.
- Current plans:
  - "Open Source"-like support is OK for some components.
  - OSG Consortium members may step up to provide support for others.
  - OSG will have to consider providing support within the project.

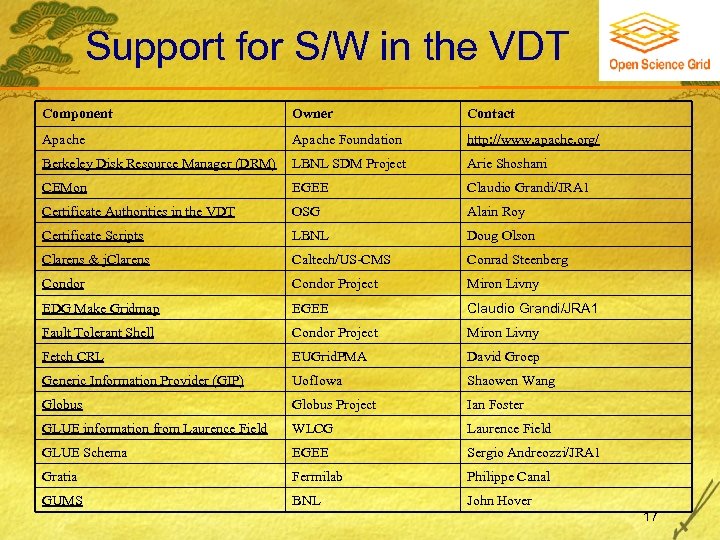

Support for Software in the VDT

| Component | Owner | Contact |
|---|---|---|
| Apache | Apache Foundation | http://www.apache.org/ |
| Berkeley Disk Resource Manager (DRM) | LBNL SDM Project | Arie Shoshani |
| CEMon | EGEE | Claudio Grandi/JRA1 |
| Certificate Authorities in the VDT | OSG | Alain Roy |
| Certificate Scripts | LBNL | Doug Olson |
| Clarens & jClarens | Caltech/US-CMS | Conrad Steenberg |
| Condor | Condor Project | Miron Livny |
| EDG Make Gridmap | EGEE | Claudio Grandi/JRA1 |
| Fault Tolerant Shell | Condor Project | Miron Livny |
| Fetch CRL | EUGridPMA | David Groep |
| Generic Information Provider (GIP) | U of Iowa | Shaowen Wang |
| Globus | Globus Project | Ian Foster |
| GLUE information from Laurence Field | WLCG | Laurence Field |
| GLUE Schema | EGEE | Sergio Andreozzi/JRA1 |
| Gratia | Fermilab | Philippe Canal |
| GUMS | BNL | John Hover |

And...

| Component | Owner | Contact |
|---|---|---|
| KX509 | NMI | University of Michigan |
| MonALISA | Caltech/US-CMS | Iosif Legrand |
| MyProxy | NCSA | myproxy-users@ncsa.uiuc.edu list |
| Netlogger | LBNL | Brian Tierney |
| Pacman | BU | Saul Youssef |
| PRIMA | Fermilab | Gabriele Garzoglio |
| PyGlobus | LBNL | Keith Jackson |
| RLS | Globus | Carl Kesselman |
| Squid | http://www.squid-cache.org | squid-bugs@squid-cache.org |
| SRMCP | Fermilab | Timur Perelmutov |
| Tcl | SourceForge | http://tcl.sourceforge.net |
| Tomcat | Apache Foundation | http://tomcat.apache.org |
| UberFTP | NCSA | gridftp@ncsa.uiuc.edu |
| VDT (scripts, utilities) | OSG | Alain Roy |
| Virtual Data System (VDS) | U of C, ISI | Ian Foster, Carl Kesselman |
| VOMS and VOMS Admin | EGEE | Vincenzo Ciaschini/JRA1 |

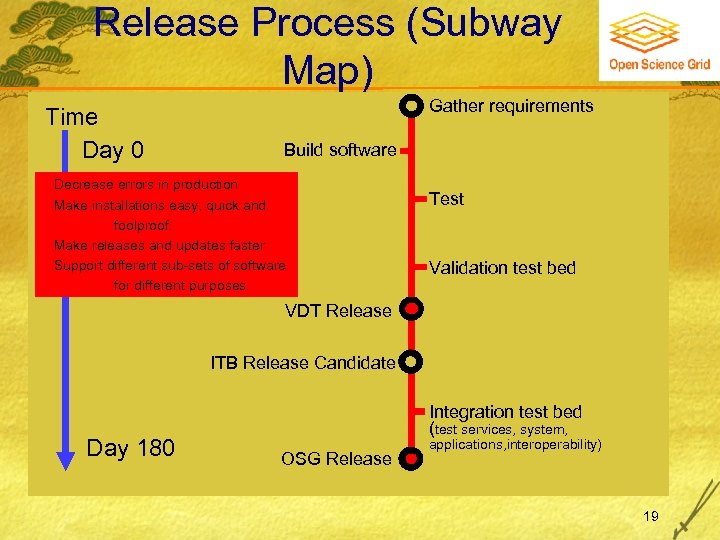

Release Process (Subway Map)

Timeline from Day 0 to Day 180:

1. Gather requirements
2. Build software
3. Test on the validation test bed
4. VDT Release
5. ITB Release Candidate
6. Test on the Integration test bed (services, system, applications, interoperability)
7. OSG Release

Goals of the process:

- Decrease errors in production.
- Make installations easy, quick and foolproof.
- Make releases and updates faster.
- Support different sub-sets of software for different purposes.
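
One way to read the subway map is as a linear sequence of stages that a release moves through, from gathering requirements to the OSG release. The sketch below encodes that reading. The stage names come from the slide. The rule that a failed test stage sends the release back to the build step is an assumption added for illustration and is not stated on the slide.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Release stages, in order, from Day 0 to roughly Day 180."""
    GATHER_REQUIREMENTS = 0
    BUILD_SOFTWARE = 1
    VALIDATION_TESTBED = 2     # test on the validation test bed
    VDT_RELEASE = 3
    ITB_RELEASE_CANDIDATE = 4
    INTEGRATION_TESTBED = 5    # services, system, applications, interoperability
    OSG_RELEASE = 6


TEST_STAGES = {Stage.VALIDATION_TESTBED, Stage.INTEGRATION_TESTBED}


def advance(current: Stage, tests_passed: bool = True) -> Stage:
    """Return the next stage. Assumption: a failed test stage goes back to the build."""
    if current is Stage.OSG_RELEASE:
        return current  # terminal stage: nothing further to do
    if current in TEST_STAGES and not tests_passed:
        return Stage.BUILD_SOFTWARE
    return Stage(current + 1)


# Walk a release that passes every test from Day 0 to the OSG release.
stage = Stage.GATHER_REQUIREMENTS
path = [stage]
while stage is not Stage.OSG_RELEASE:
    stage = advance(stage)
    path.append(stage)
print(" -> ".join(s.name for s in path))
```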

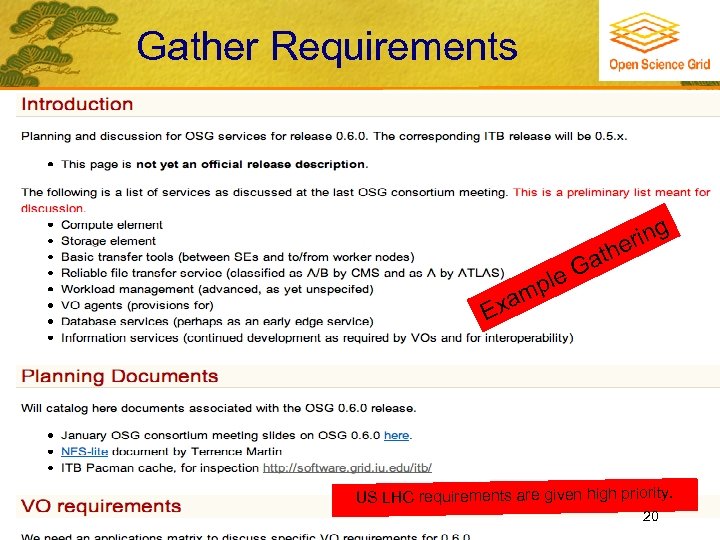

Gather Requirements

(Figure: requirements-gathering example)

- US LHC requirements are given high priority.

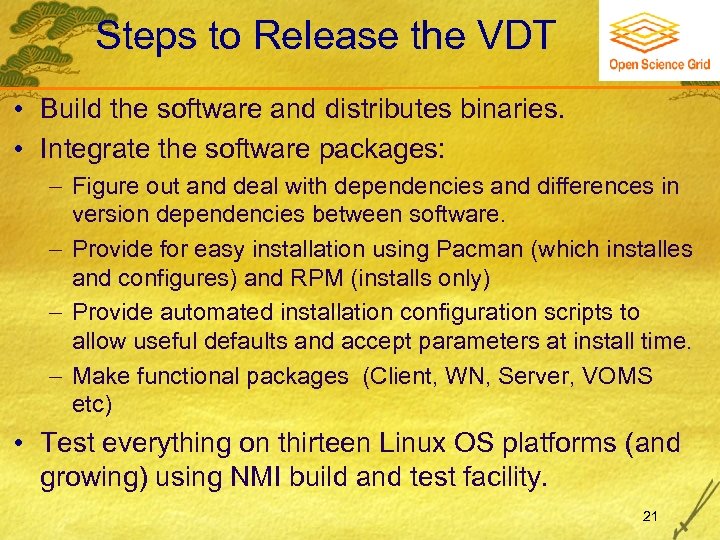

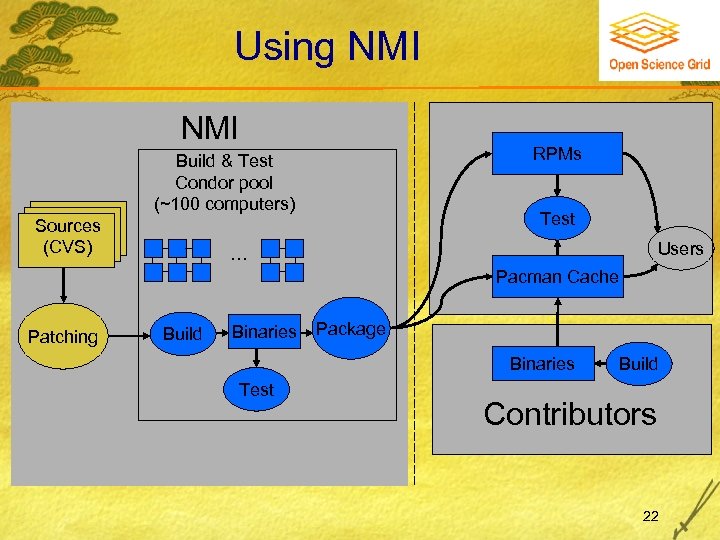

Steps to Release the VDT

- Build the software and distribute binaries.
- Integrate the software packages:
  - Figure out and deal with dependencies between software, including differences in the versions each package depends on.
  - Provide for easy installation using Pacman (which installs and configures) and RPM (installs only).
  - Provide automated installation configuration scripts that supply useful defaults and accept parameters at install time.
  - Make functional packages (Client, WN, Server, VOMS etc.).
- Test everything on thirteen Linux OS platforms (and growing) using the NMI Build & Test facility.
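
The dependency-handling step amounts to two tasks. The first is computing an install order in which every package comes after the packages it needs. The second is spotting cases where two packages require different versions of the same dependency. Below is a minimal sketch using Python's standard `graphlib` module (Python 3.9+). The dependency edges and version pins are hypothetical examples, not the real VDT metadata, and `install_order` and `version_conflicts` are names introduced here.

```python
from collections import defaultdict
from graphlib import CycleError, TopologicalSorter

# Hypothetical dependency graph: package -> packages that must be installed first.
# Illustrative only; these are not the actual VDT package dependencies.
DEPENDENCIES = {
    "Globus": set(),
    "Condor": set(),
    "MySQL": set(),
    "Tomcat": set(),
    "Condor-G": {"Condor", "Globus"},
    "GUMS": {"Tomcat", "MySQL"},
    "VOMS Admin": {"Tomcat", "MySQL"},
    "Client": {"Condor-G", "Globus"},
}

# Hypothetical version pins: dependent package -> {dependency: required version}.
VERSION_PINS = {
    "GUMS": {"Tomcat": "2.0"},
    "VOMS Admin": {"Tomcat": "1.0"},
}


def install_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every package follows its dependencies."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as err:
        # The second element of err.args is the list of nodes forming the cycle.
        raise ValueError(f"circular dependency: {err.args[1]}") from err


def version_conflicts(pins: dict[str, dict[str, str]]) -> dict[str, set[str]]:
    """Return dependencies that different packages pin to different versions."""
    required = defaultdict(set)
    for dependency_versions in pins.values():
        for dependency, version in dependency_versions.items():
            required[dependency].add(version)
    return {dep: versions for dep, versions in required.items() if len(versions) > 1}


print(install_order(DEPENDENCIES))
print(version_conflicts(VERSION_PINS))
```

A topological sort is used because it directly answers "what must be installed before what". A conflict report like the second function's is where the integration work of reconciling versions would start.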

Using NMI

(Diagram: Contributors → Sources (CVS) → Patching → Build → Binaries → Test → Package → Pacman Cache and RPMs → Test → Users …; builds and tests run on the NMI Build & Test Condor pool (~100 computers).)
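
Testing every functional package on every supported platform is a cross-product of test runs. The sketch below shows that matrix in miniature. The platform names and the `run_test` callback are hypothetical placeholders. The sketch also runs the checks sequentially, whereas the NMI facility dispatches such jobs to its Condor pool.

```python
from itertools import product
from typing import Callable

# Functional packages named on the "Steps to Release the VDT" slide.
PACKAGES = ["Client", "WN", "Server", "VOMS"]
# Hypothetical placeholders; the real matrix covered thirteen Linux platforms.
PLATFORMS = ["linux-a", "linux-b", "linux-c"]


def run_matrix(run_test: Callable[[str, str], bool]) -> list[tuple[str, str]]:
    """Run run_test(package, platform) on every pair and return the failing pairs."""
    return [
        (package, platform)
        for package, platform in product(PACKAGES, PLATFORMS)
        if not run_test(package, platform)
    ]


# Example: a stand-in test function that fails for one combination only.
def fake_test(package: str, platform: str) -> bool:
    return not (package == "WN" and platform == "linux-b")


failures = run_matrix(fake_test)
print(f"{len(PACKAGES) * len(PLATFORMS)} runs, failures: {failures}")
```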

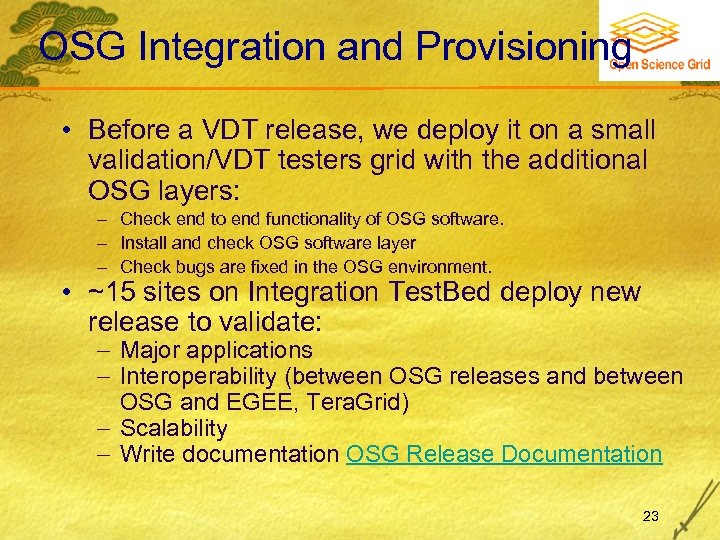

OSG Integration and Provisioning

- Before a VDT release, we deploy it on a small validation/VDT testers grid with the additional OSG layers to:
  - check end-to-end functionality of the OSG software;
  - install and check the OSG software layer;
  - check that bugs are fixed in the OSG environment.
- ~15 sites on the Integration TestBed deploy the new release to validate:
  - major applications;
  - interoperability (between OSG releases, and between OSG and EGEE and TeraGrid);
  - scalability.
- Write the OSG Release Documentation.

OSG Releases

- After testing and validation, a production release is made.
  - Sites are free to upgrade "when ready": not in lock-step.
  - OSG supports the two most recent major releases.
- There is no guarantee of backwards compatibility in all services, and new services bring new capabilities.
- Knowing the specific environment an application finds at each site is becoming more important!
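
The policy of supporting the two most recent major releases can be expressed as a simple check of a site's installed release against the release history. This is a minimal sketch. It assumes that the first two fields of a version string (e.g. `0.4` in `0.4.1`) identify a major release. That convention, the helper names and the example release list are all assumptions for illustration.

```python
def major_of(version: str) -> tuple[int, int]:
    """Assumed convention: the first two fields identify the major release."""
    fields = [int(part) for part in version.split(".")]
    return fields[0], fields[1]


def is_supported(site_version: str, released: list[str], keep: int = 2) -> bool:
    """True if the site's major release is one of the `keep` most recent ones."""
    recent = sorted({major_of(v) for v in released}, reverse=True)[:keep]
    return major_of(site_version) in recent


# Hypothetical release history, oldest first.
RELEASED = ["0.2.0", "0.2.1", "0.4.0", "0.4.1", "0.6.0"]

for site in ["0.2.1", "0.4.0", "0.6.0"]:
    print(site, "supported" if is_supported(site, RELEASED) else "unsupported")
```

Because sites upgrade "when ready", a check like this would be evaluated per site rather than assuming the whole grid runs one version.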

Next Steps

- Schedule and function priorities are driven by the needs of US LHC.
- These are balanced by the need to prepare for medium- and longer-term sustainability and evolution.
- Goal of 2 major releases a year, with easier minor updates in between.

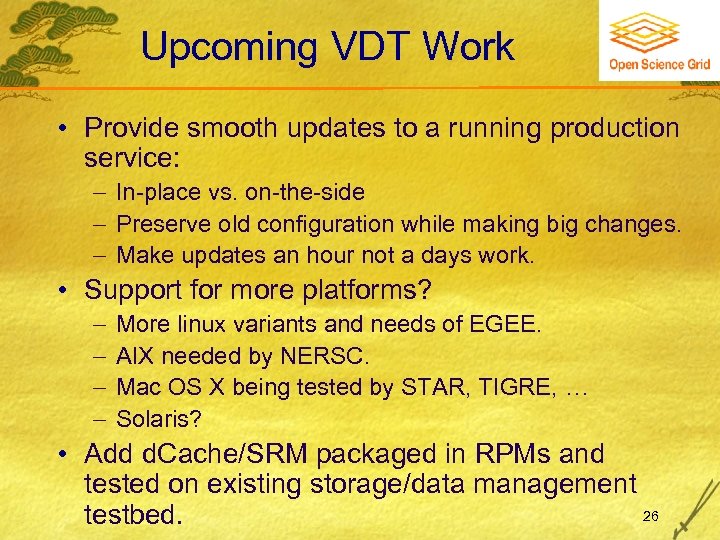

Upcoming VDT Work

- Provide smooth updates to a running production service:
  - in-place vs. on-the-side;
  - preserve old configuration while making big changes;
  - make updates an hour's work, not a day's.
- Support for more platforms?
  - More Linux variants and needs of EGEE.
  - AIX needed by NERSC.
  - Mac OS X being tested by STAR, TIGRE, …
  - Solaris?
- Add dCache/SRM packaged in RPMs and tested on the existing storage/data management testbed.
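
Preserving the old configuration across a large update can be approached as a merge. Start from the new release's defaults, carry over the values the site already has, and report settings the new release no longer recognises. The sketch below is one hypothetical way to do that, and the function name and configuration keys are illustrative. It treats every key in the old configuration as site-chosen, which a real tool would need to refine.

```python
def upgrade_config(
    old: dict[str, str], new_defaults: dict[str, str]
) -> tuple[dict[str, str], list[str]]:
    """Merge an existing site configuration into a new release's defaults.

    Returns the merged configuration and a sorted list of old keys that the
    new release no longer defines (reported rather than silently kept).
    """
    merged = dict(new_defaults)  # new keys start from the new defaults
    dropped = []
    for key, value in old.items():
        if key in new_defaults:
            merged[key] = value  # keep the site's existing value
        else:
            dropped.append(key)
    return merged, sorted(dropped)


# Hypothetical keys, for illustration only.
old_config = {"batch_system": "pbs", "legacy_option": "on"}
new_defaults = {"batch_system": "condor", "accounting": "gratia"}

merged, dropped = upgrade_config(old_config, new_defaults)
print(merged)   # {'batch_system': 'pbs', 'accounting': 'gratia'}
print(dropped)  # ['legacy_option']
```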

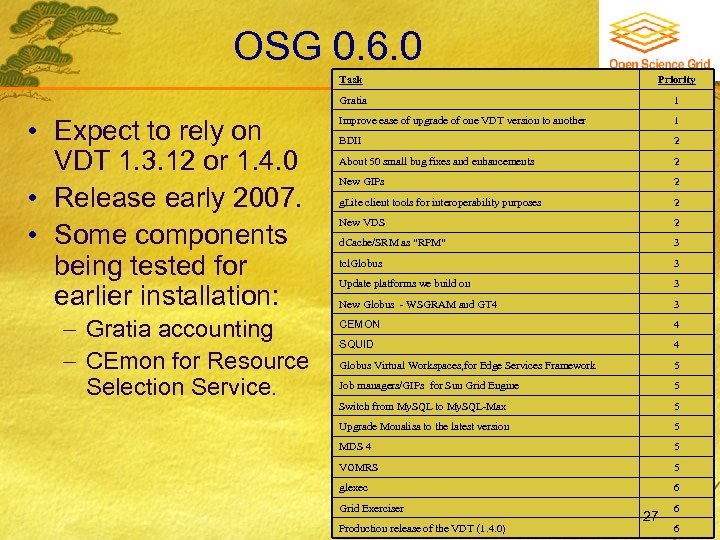

OSG 0.6.0

- Expect to rely on VDT 1.3.12 or 1.4.0.
- Release early 2007.
- Some components are being tested for earlier installation:
  - Gratia accounting;
  - CEMon for the Resource Selection Service.

| Task | Priority |
|---|---|
| Gratia | 1 |
| Improve ease of upgrade of one VDT version to another | 1 |
| BDII | 2 |
| About 50 small bug fixes and enhancements | 2 |
| New GIPs | 2 |
| gLite client tools for interoperability purposes | 2 |
| New VDS | 2 |
| dCache/SRM as "RPM" | 3 |
| tclGlobus | 3 |
| Update platforms we build on | 3 |
| New Globus: WSGRAM and GT4 | 3 |
| CEMon | 4 |
| Squid | 4 |
| Globus Virtual Workspaces, for Edge Services Framework | 5 |
| Job managers/GIPs for Sun Grid Engine | 5 |
| Switch from MySQL to MySQL-Max | 5 |
| Upgrade MonALISA to the latest version | 5 |
| MDS4 | 5 |
| VOMRS | 5 |
| glexec | 6 |
| Grid Exerciser | 6 |
| Production release of the VDT (1.4.0) | 6 |

Summary

- The Open Science Grid infrastructure is a part of the WLCG Collaboration.
- OSG supports and evolves the VDT core middleware in support of the EGEE and the WLCG as well as other users.
- The OSG software stack is deployed to interoperate with the EGEE and provide effective systems for the US LHC experiments, among others.
