0564770df294b28a7b2f1f3d62fbc4c4.ppt

- Number of slides: 47

Open Science Grid: Linking Universities and Laboratories in National CyberInfrastructure

Paul Avery, University of Florida (avery@phys.ufl.edu)
SURA Infrastructure Workshop, Austin, TX, December 7, 2005

Bottom-up Collaboration: “Trillium”
- Trillium = PPDG + GriPhyN + iVDGL
  - PPDG: $12M (DOE) (1999-2006)
  - GriPhyN: $12M (NSF) (2000-2005)
  - iVDGL: $14M (NSF) (2001-2006)
- ~150 people with large overlaps between projects
  - Universities, labs, foreign partners
- Strong driver for funding agency collaborations
  - Inter-agency: NSF-DOE
  - Intra-agency: Directorate-Directorate, Division-Division
- Coordinated internally to meet broad goals
  - CS research, developing/supporting Virtual Data Toolkit (VDT)
  - Grid deployment, using VDT-based middleware
  - Unified entity when collaborating internationally

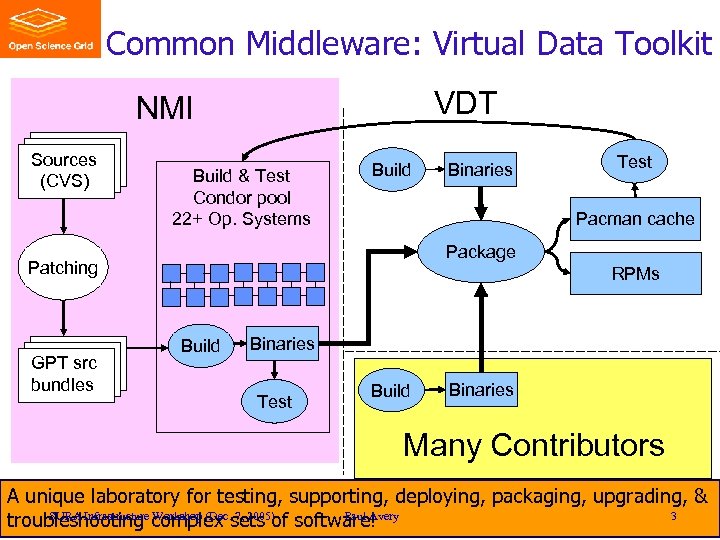

Common Middleware: Virtual Data Toolkit
(Diagram: sources (CVS) from NMI and many contributors → Build & Test on a Condor pool covering 22+ operating systems → Patching → Package as GPT source bundles, binaries, RPMs, and a Pacman cache.)
A unique laboratory for testing, supporting, deploying, packaging, upgrading, & troubleshooting complex sets of software!
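The build-and-test flow in the diagram amounts to a matrix: every component is built and tested on every supported platform, and only artifacts that pass move on to packaging, while failures go back for patching. A minimal Python sketch of that loop follows; the component and platform names and the `build`/`run_tests` stand-ins are hypothetical and do not reflect the actual NMI or VDT tooling.

```python
from itertools import product

# Hypothetical names for illustration; the real VDT had dozens of components
# built on 22+ operating systems.
COMPONENTS = ["globus", "condor", "myproxy"]
PLATFORMS = ["linux-x86", "linux-ia64", "solaris-sparc"]


def build(component: str, platform: str) -> str:
    """Stand-in for compiling one component on one platform; returns an artifact name."""
    return f"{component}-{platform}"


def run_tests(artifact: str, known_failures: frozenset) -> bool:
    """Stand-in for a test suite: passes unless the artifact is listed as failing."""
    return artifact not in known_failures


def build_and_test_matrix(components, platforms, known_failures=frozenset()):
    """Build and test every (component, platform) pair.

    Returns (artifacts ready to package, artifacts that need a patch).
    """
    ready, needs_patch = [], []
    for component, platform in product(components, platforms):
        artifact = build(component, platform)
        if run_tests(artifact, known_failures):
            ready.append(artifact)
        else:
            needs_patch.append(artifact)
    return ready, needs_patch


ready, needs_patch = build_and_test_matrix(
    COMPONENTS, PLATFORMS, known_failures=frozenset({"condor-solaris-sparc"})
)
print(f"{len(ready)} ready to package; needs patch: {needs_patch}")
```

Keeping the failure list separate from the ready list mirrors the diagram's split between the packaging path and the patching loop.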

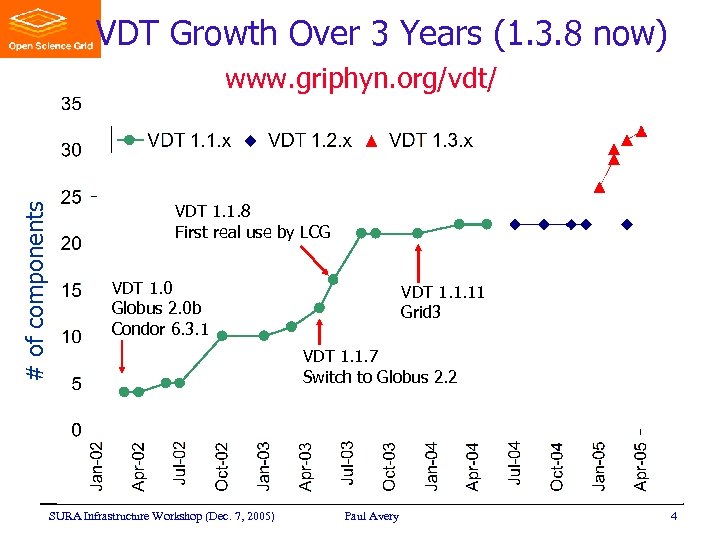

VDT Growth Over 3 Years (VDT 1.3.8 now)
(Chart: number of components per VDT release; www.griphyn.org/vdt/)
- VDT 1.0: Globus 2.0b, Condor 6.3.1
- VDT 1.1.7: switch to Globus 2.2
- VDT 1.1.8: first real use by LCG
- VDT 1.1.11: Grid3

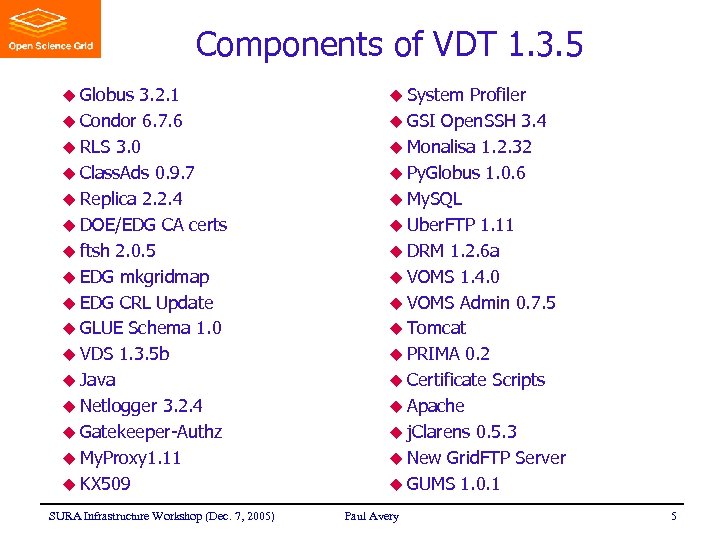

Components of VDT 1.3.5: Globus 3.2.1, Condor 6.7.6, RLS 3.0, ClassAds 0.9.7, Replica 2.2.4, DOE/EDG CA certs, ftsh 2.0.5, EDG mkgridmap, EDG CRL Update, GLUE Schema 1.0, VDS 1.3.5b, Java, Netlogger 3.2.4, Gatekeeper-Authz, MyProxy 1.11, KX509, System Profiler, GSI OpenSSH 3.4, Monalisa 1.2.32, PyGlobus 1.0.6, MySQL, UberFTP 1.11, DRM 1.2.6a, VOMS 1.4.0, VOMS Admin 0.7.5, Tomcat, PRIMA 0.2, Certificate Scripts, Apache, jClarens 0.5.3, New GridFTP Server, GUMS 1.0.1

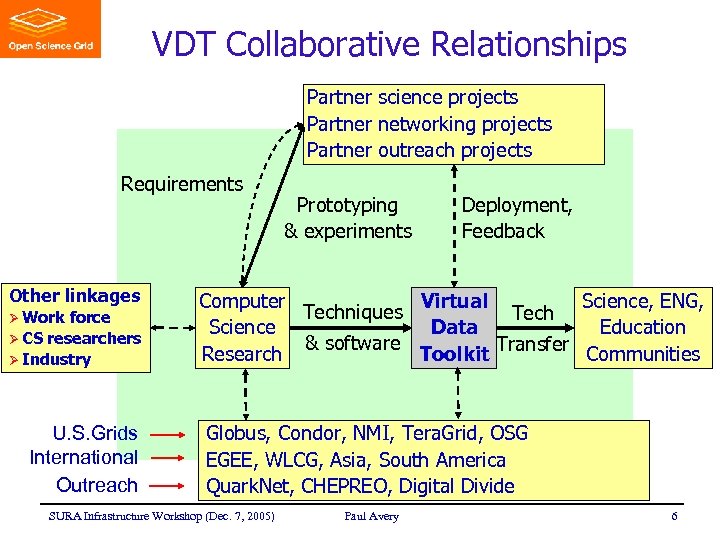

VDT Collaborative Relationships
(Diagram: Computer Science Research contributes techniques & software to the Virtual Data Toolkit, which provides tech transfer to Science, Engineering, and Education communities; partner science, networking, and outreach projects supply requirements, prototyping & experiments, and deployment feedback.)
- U.S. Grids: Globus, Condor, NMI, TeraGrid, OSG
- International: EGEE, WLCG, Asia, South America
- Outreach: QuarkNet, CHEPREO, Digital Divide
- Other linkages: work force, CS researchers, industry

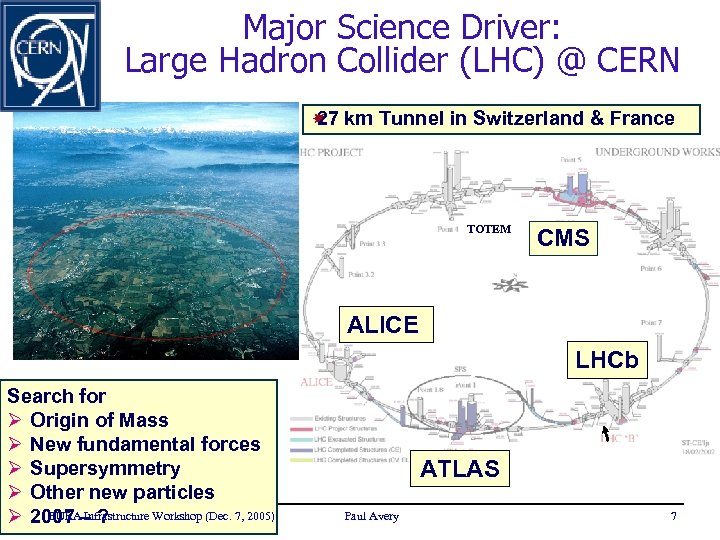

Major Science Driver: Large Hadron Collider (LHC) @ CERN
- 27 km tunnel in Switzerland & France
- Experiments: ATLAS, CMS, ALICE, LHCb, TOTEM
- Search for
  - Origin of mass
  - New fundamental forces
  - Supersymmetry
  - Other new particles
- Timeline: 2007 - ?

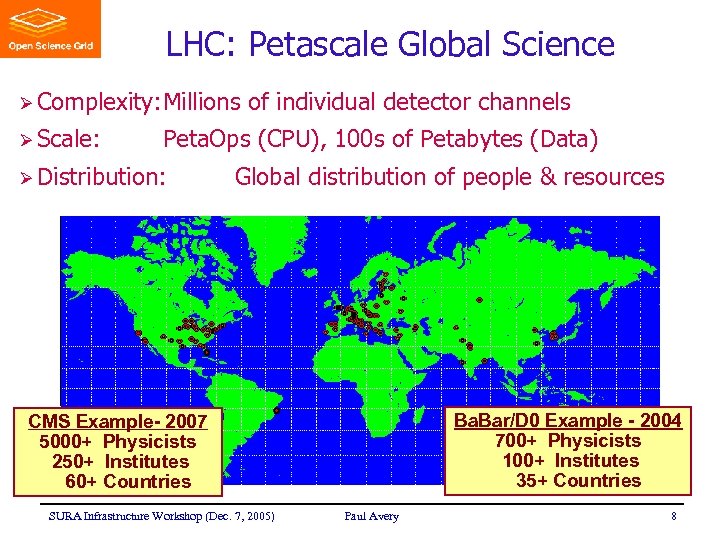

LHC: Petascale Global Science
- Complexity: millions of individual detector channels
- Scale: PetaOps (CPU), 100s of petabytes (data)
- Distribution: global distribution of people & resources
  - BaBar/D0 example (2004): 700+ physicists, 100+ institutes, 35+ countries
  - CMS example (2007): 5000+ physicists, 250+ institutes, 60+ countries

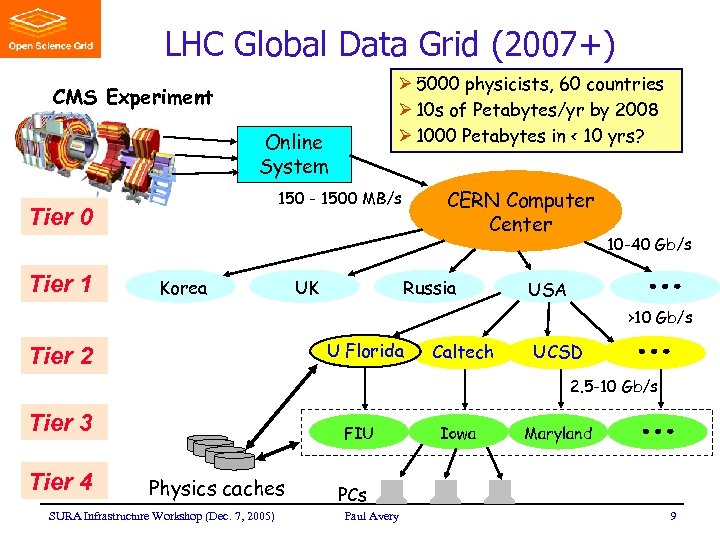

LHC Global Data Grid (2007+)
- 5000 physicists, 60 countries
- 10s of petabytes/yr by 2008
- 1000 petabytes in < 10 yrs?
(Diagram, CMS experiment: Online System → Tier 0, CERN Computer Center (150-1500 MB/s) → Tier 1 centers in Korea, Russia, UK, USA (10-40 Gb/s) → Tier 2 centers such as U Florida, Caltech, UCSD (>10 Gb/s) → Tier 3 sites such as FIU, Iowa, Maryland (2.5-10 Gb/s) → Tier 4: physics caches, PCs)
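To make the tier bandwidths concrete, here is a minimal Python sketch (an illustration, not part of any OSG or CMS software) that models the links in the diagram using the lower end of each quoted range and estimates how long it takes to move a dataset across each hop. It assumes 1 TB = 10^12 bytes, a fully utilized link, and no protocol overhead, so real transfers would be slower.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierLink:
    upstream: str
    downstream: str
    min_gbps: float  # lower end of the bandwidth range quoted on the slide


# Lower bounds read off the CMS tier diagram; 150 MB/s is converted to Gb/s (x 8 / 1000).
CMS_LINKS = [
    TierLink("Online System", "Tier 0", 150 * 8 / 1000),
    TierLink("Tier 0", "Tier 1", 10.0),
    TierLink("Tier 1", "Tier 2", 10.0),  # slide says ">10 Gb/s"
    TierLink("Tier 2", "Tier 3", 2.5),
]


def transfer_hours(dataset_tb: float, gbps: float) -> float:
    """Idealized hours to move dataset_tb terabytes at gbps (no overhead, full link)."""
    return dataset_tb * 1e12 * 8 / (gbps * 1e9) / 3600


def worst_case_hours(dataset_tb: float, links=CMS_LINKS) -> dict:
    """Per-hop transfer time using the slowest bandwidth quoted for each link."""
    return {
        f"{link.upstream} -> {link.downstream}": transfer_hours(dataset_tb, link.min_gbps)
        for link in links
    }


for hop, hours in worst_case_hours(1.0).items():
    print(f"{hop}: {hours:.2f} h")
```

Using the lower bound of each range gives a pessimistic estimate; swapping in the upper bounds would show the best case the diagram allows.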

Grid3 and Open Science Grid

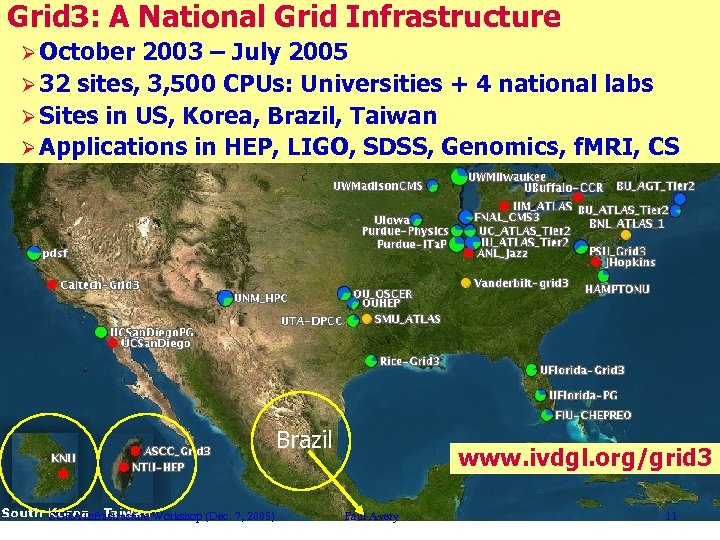

Grid3: A National Grid Infrastructure
- October 2003 - July 2005
- 32 sites, 3,500 CPUs: universities + 4 national labs
- Sites in US, Korea, Brazil, Taiwan
- Applications in HEP, LIGO, SDSS, genomics, fMRI, CS
www.ivdgl.org/grid3

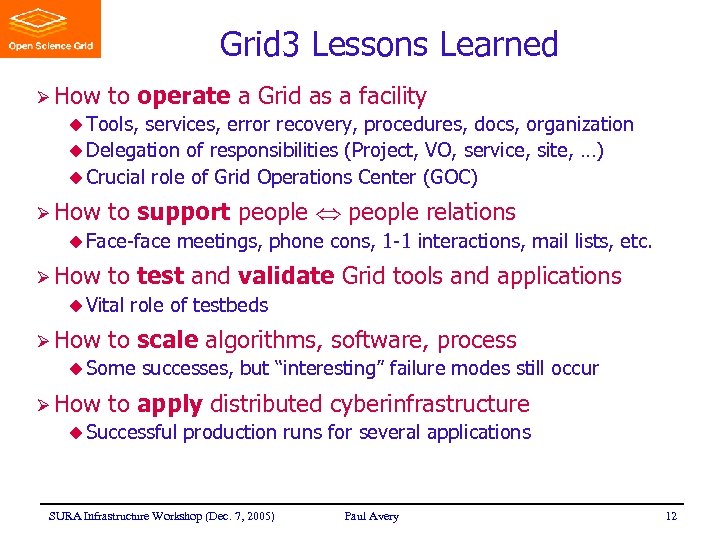

Grid3 Lessons Learned
- How to operate a Grid as a facility
  - Tools, services, error recovery, procedures, docs, organization
  - Delegation of responsibilities (Project, VO, service, site, …)
  - Crucial role of Grid Operations Center (GOC)
- How to support people relations
  - Face-face meetings, phone cons, 1-1 interactions, mail lists, etc.
- How to test and validate Grid tools and applications
  - Vital role of testbeds
- How to scale algorithms, software, process
  - Some successes, but “interesting” failure modes still occur
- How to apply distributed cyberinfrastructure
  - Successful production runs for several applications

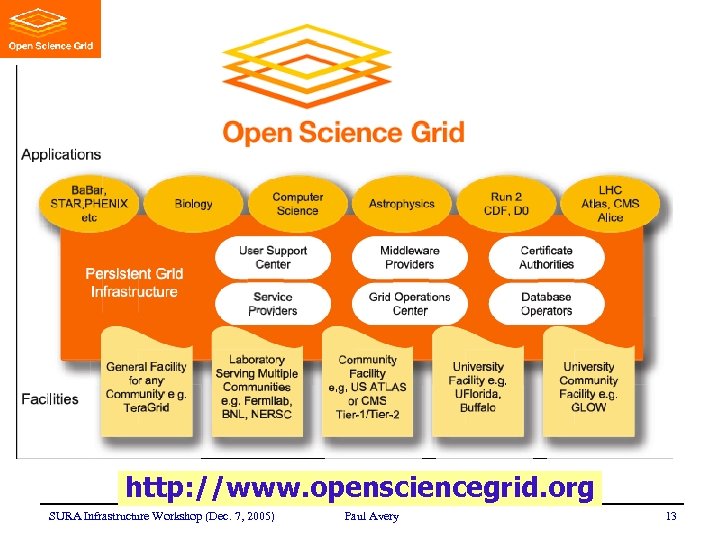

http://www.opensciencegrid.org

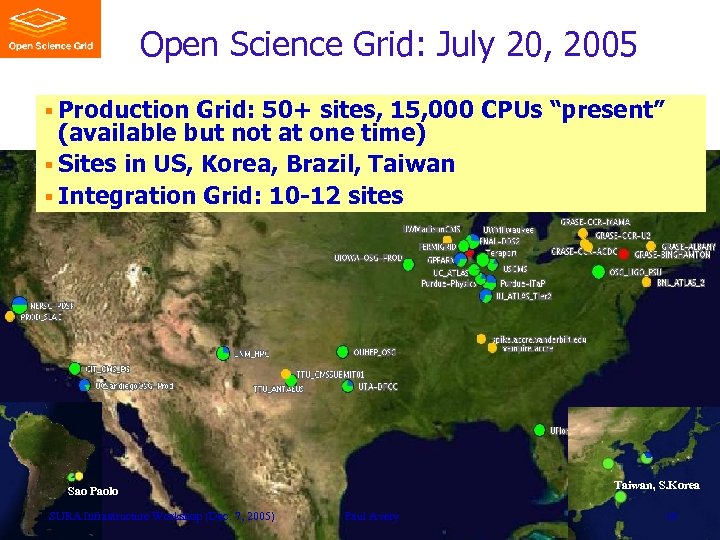

Open Science Grid: July 20, 2005
- Production Grid: 50+ sites, 15,000 CPUs “present” (available, but not all at one time)
- Sites in US, Korea, Brazil, Taiwan
- Integration Grid: 10-12 sites
(Map: U.S. sites plus Taiwan, S. Korea, São Paulo)

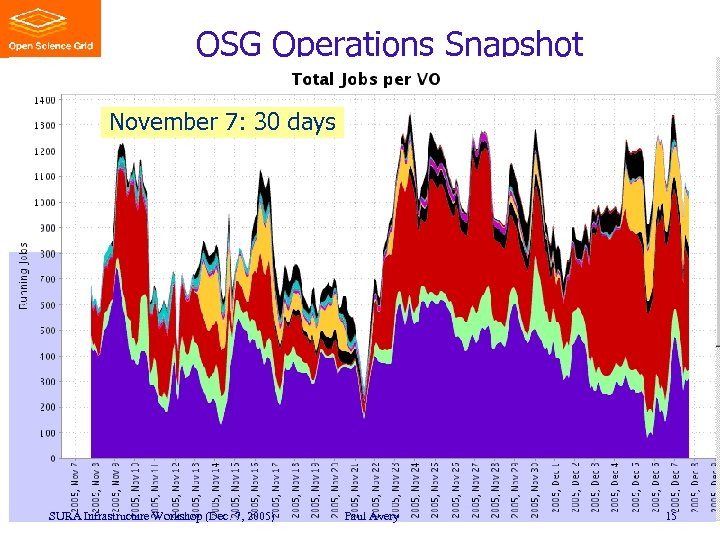

OSG Operations Snapshot (November 7: 30 days)

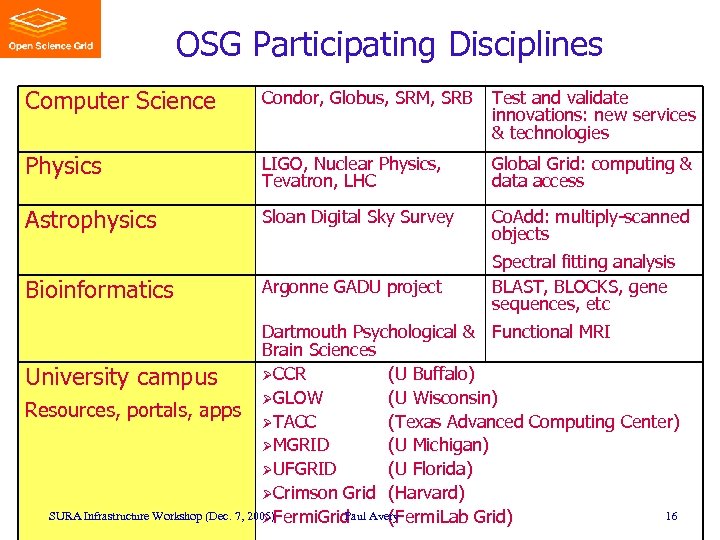

OSG Participating Disciplines
- Computer Science (Condor, Globus, SRM, SRB): test and validate innovations, new services & technologies
- Physics (LIGO, Nuclear Physics, Tevatron, LHC): Global Grid, computing & data access
- Astrophysics (Sloan Digital Sky Survey): CoAdd of multiply-scanned objects; spectral fitting analysis
- Bioinformatics (Argonne GADU project): BLAST, BLOCKS, gene sequences, etc.
- Brain Sciences (Dartmouth Psychological & Functional MRI)
- University campus resources, portals, apps
  - CCR (U Buffalo)
  - GLOW (U Wisconsin)
  - TACC (Texas Advanced Computing Center)
  - MGRID (U Michigan)
  - UFGRID (U Florida)
  - Crimson Grid (Harvard)
  - FermiGrid (FermiLab Grid)

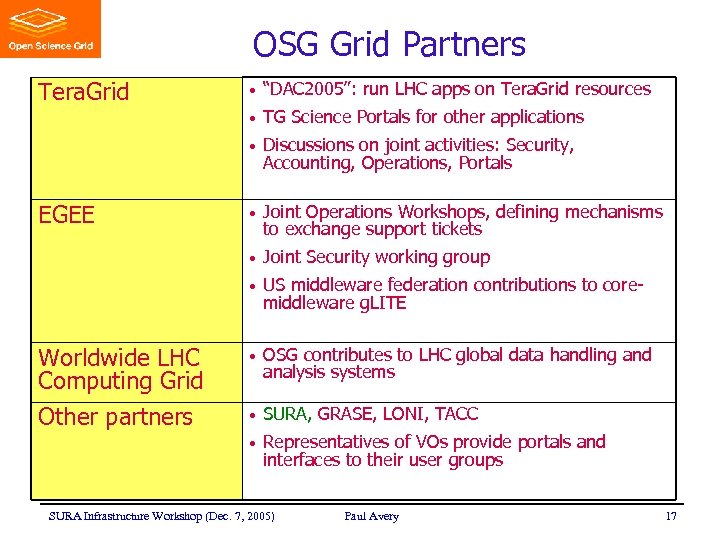

OSG Grid Partners
- TeraGrid
  - “DAC 2005”: run LHC apps on TeraGrid resources
  - TG Science Portals for other applications
  - Discussions on joint activities: Security, Accounting, Operations, Portals
- EGEE
  - Joint Operations Workshops, defining mechanisms to exchange support tickets
  - Joint Security working group
  - US middleware federation contributions to core middleware gLite
- Worldwide LHC Computing Grid
  - OSG contributes to LHC global data handling and analysis systems
- Other partners
  - SURA, GRASE, LONI, TACC
  - Representatives of VOs provide portals and interfaces to their user groups

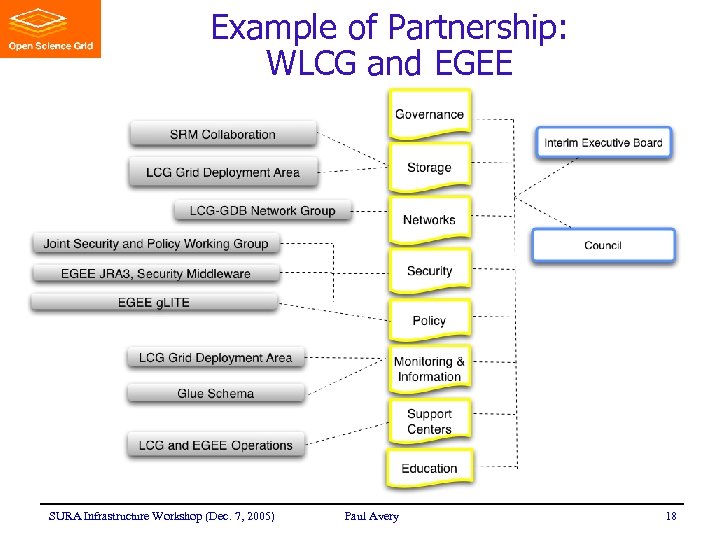

Example of Partnership: WLCG and EGEE

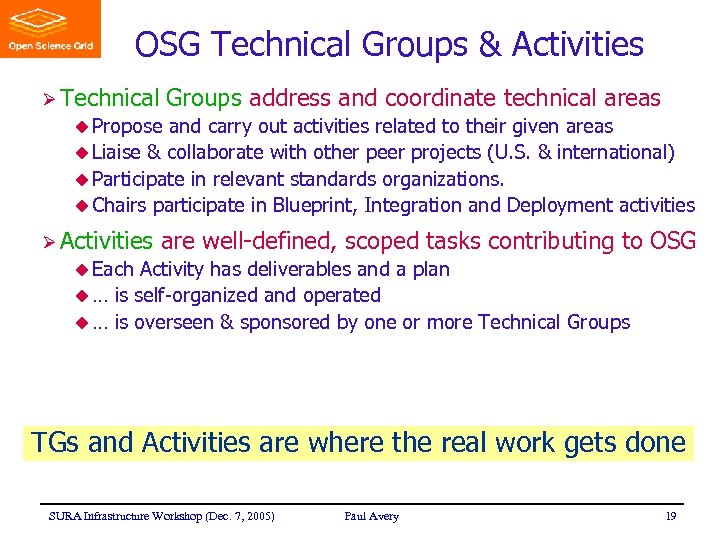

OSG Technical Groups & Activities
- Technical Groups address and coordinate technical areas
  - Propose and carry out activities related to their given areas
  - Liaise & collaborate with other peer projects (U.S. & international)
  - Participate in relevant standards organizations
  - Chairs participate in Blueprint, Integration and Deployment activities
- Activities are well-defined, scoped tasks contributing to OSG
  - Each Activity has deliverables and a plan
  - … is self-organized and operated
  - … is overseen & sponsored by one or more Technical Groups
TGs and Activities are where the real work gets done

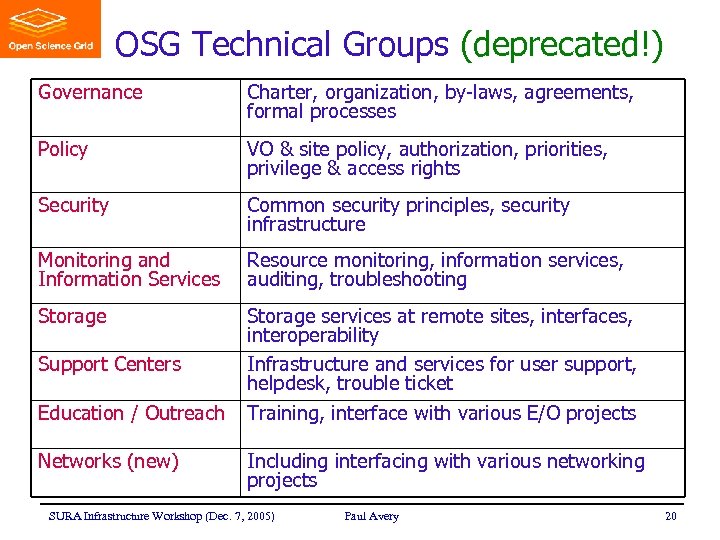

OSG Technical Groups (deprecated!)
- Governance: charter, organization, by-laws, agreements, formal processes
- Policy: VO & site policy, authorization, priorities, privilege & access rights
- Security: common security principles, security infrastructure
- Monitoring and Information Services: resource monitoring, information services, auditing, troubleshooting
- Storage: storage services at remote sites, interfaces, interoperability
- Support Centers: infrastructure and services for user support, helpdesk, trouble ticket
- Education / Outreach: training, interface with various E/O projects
- Networks (new): including interfacing with various networking projects

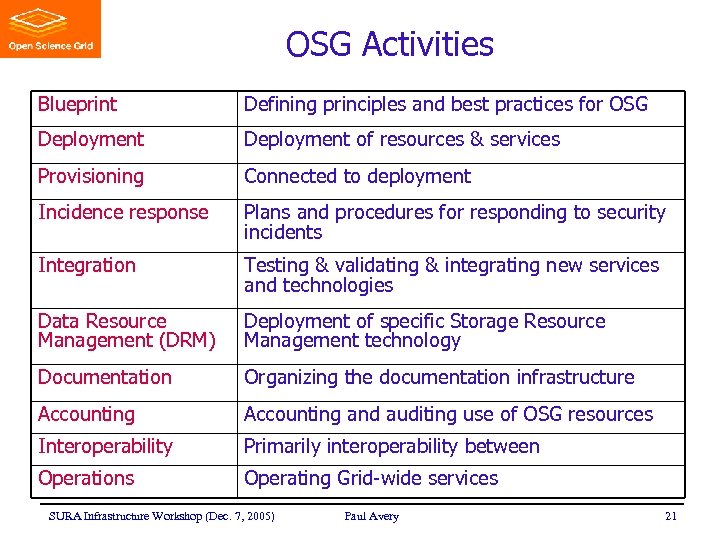

OSG Activities
- Blueprint: defining principles and best practices for OSG
- Deployment: deployment of resources & services
- Provisioning: connected to deployment
- Incident response: plans and procedures for responding to security incidents
- Integration: testing, validating & integrating new services and technologies
- Data Resource Management (DRM): deployment of specific Storage Resource Management technology
- Documentation: organizing the documentation infrastructure
- Accounting: accounting and auditing use of OSG resources
- Interoperability: primarily interoperability between …
- Operations: operating Grid-wide services

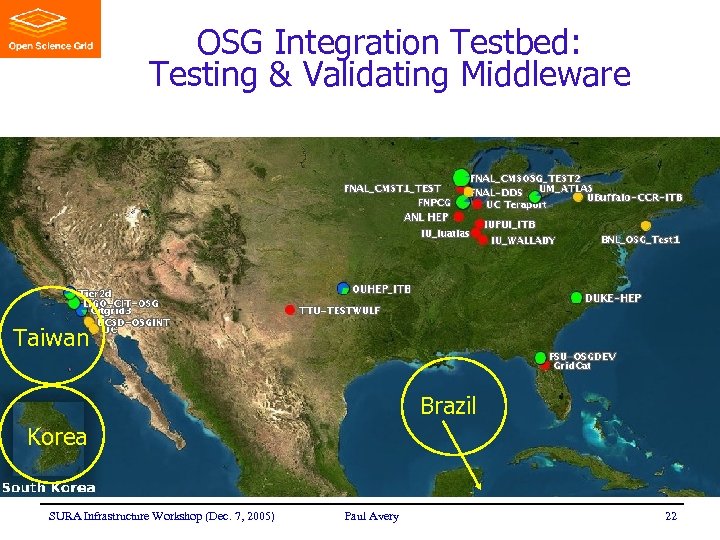

OSG Integration Testbed: Testing & Validating Middleware
(Map includes sites in Taiwan, Brazil, Korea)

Networks

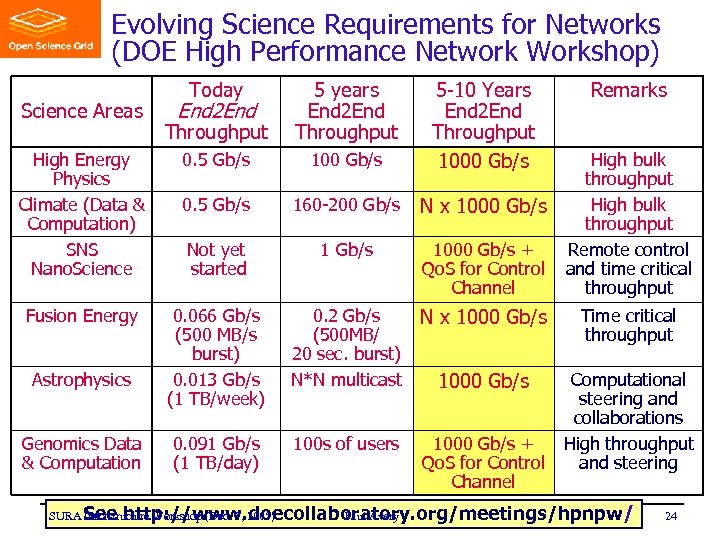

Evolving Science Requirements for Networks (DOE High Performance Network Workshop)

| Science Areas | Today End2End Throughput | 5 Years End2End Throughput | 5-10 Years End2End Throughput | Remarks |
|---|---|---|---|---|
| High Energy Physics | 0.5 Gb/s | 100 Gb/s | 1000 Gb/s | High bulk throughput |
| Climate (Data & Computation) | 0.5 Gb/s | 160-200 Gb/s | N x 1000 Gb/s | High bulk throughput |
| SNS NanoScience | Not yet started | 1 Gb/s | 1000 Gb/s + QoS for Control Channel | Remote control and time critical throughput |
| Fusion Energy | 0.066 Gb/s (500 MB/s burst) | 0.2 Gb/s (500 MB/20 sec. burst) | N x 1000 Gb/s | Time critical throughput |
| Astrophysics | 0.013 Gb/s (1 TB/week) | N*N multicast | 1000 Gb/s | Computational steering and collaborations |
| Genomics Data & Computation | 0.091 Gb/s (1 TB/day) | 100s of users | 1000 Gb/s + QoS for Control Channel | High throughput and steering |

See http://www.doecollaboratory.org/meetings/hpnpw/
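The parenthetical data volumes in the table can be checked with simple arithmetic. A minimal Python sketch, assuming decimal units (1 MB = 10^6 bytes, 1 TB = 10^6 MB, 1 Gb = 10^9 bits) and a transfer spread evenly over the stated period:

```python
SECONDS_PER_DAY = 86_400
SECONDS_PER_WEEK = 7 * SECONDS_PER_DAY
ONE_TB_IN_MB = 1e6  # decimal units assumed


def average_gbps(megabytes: float, seconds: float) -> float:
    """Average rate in Gb/s needed to move `megabytes` (decimal MB) in `seconds`."""
    bits = megabytes * 1e6 * 8
    return bits / seconds / 1e9


if __name__ == "__main__":
    print(f"1 TB/week  : {average_gbps(ONE_TB_IN_MB, SECONDS_PER_WEEK):.3f} Gb/s")  # 0.013
    print(f"1 TB/day   : {average_gbps(ONE_TB_IN_MB, SECONDS_PER_DAY):.3f} Gb/s")   # 0.093
    print(f"500 MB/20 s: {average_gbps(500, 20):.3f} Gb/s")                         # 0.200
```

Under these assumptions, 1 TB/week and 500 MB per 20 s reproduce the table's 0.013 and 0.2 Gb/s; 1 TB/day comes out near 0.093 Gb/s, slightly above the 0.091 Gb/s listed, so the workshop's unit conventions may differ a little from the ones assumed here.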

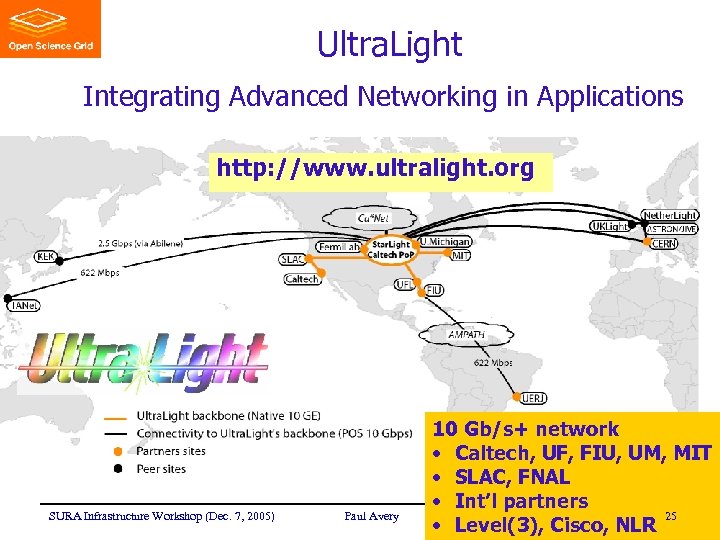

UltraLight: Integrating Advanced Networking in Applications
http://www.ultralight.org
- 10 Gb/s+ network
- Caltech, UF, FIU, UM, MIT
- SLAC, FNAL
- Int’l partners
- Level(3), Cisco, NLR

Education, Training, Communications

iVDGL, GriPhyN Education/Outreach
Basics:
- $200K/yr
- Led by UT Brownsville
- Workshops, portals, tutorials
- Partnerships with QuarkNet, CHEPREO, LIGO E/O, …

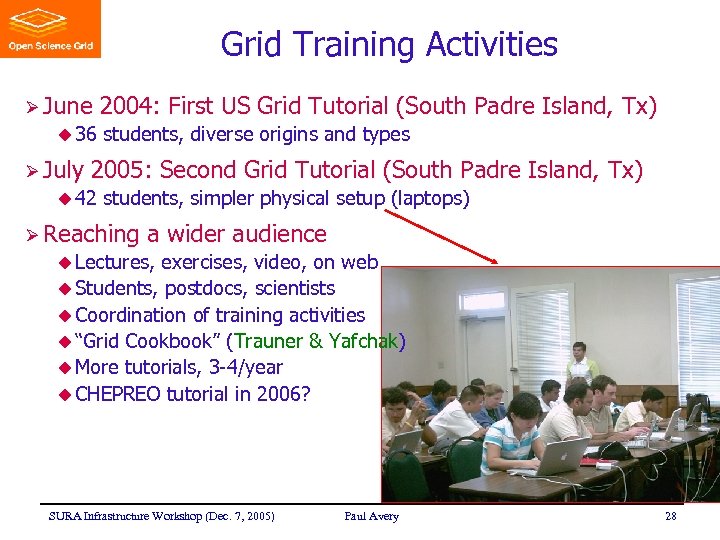

Grid Training Activities
- June 2004: First US Grid Tutorial (South Padre Island, TX)
  - 36 students, diverse origins and types
- July 2005: Second Grid Tutorial (South Padre Island, TX)
  - 42 students, simpler physical setup (laptops)
- Reaching a wider audience
  - Lectures, exercises, video, on web
  - Students, postdocs, scientists
  - Coordination of training activities
  - “Grid Cookbook” (Trauner & Yafchak)
  - More tutorials, 3-4/year
  - CHEPREO tutorial in 2006?

QuarkNet/GriPhyN e-Lab Project
http://quarknet.uchicago.edu/elab/cosmic/home.jsp

CHEPREO: Center for High Energy Physics Research and Educational Outreach
Florida International University
- Physics Learning Center
- CMS Research
- iVDGL Grid Activities
- AMPATH network (S. America)
Funding:
- Funded September 2003
- $4M initially (3 years)
- MPS, CISE, EHR, INT

Grids and the Digital Divide
Background
- World Summit on Information Society
- HEP Standing Committee on Interregional Connectivity (SCIC)
Themes
- Global collaborations, Grids and addressing the Digital Divide
- Focus on poorly connected regions
- Brazil (2004), Korea (2005)

Science Grid Communications
Broad set of activities (Katie Yurkewicz)
- News releases, PR, etc.
- Science Grid This Week
- OSG Newsletter
- Not restricted to OSG
www.interactions.org/sgtw

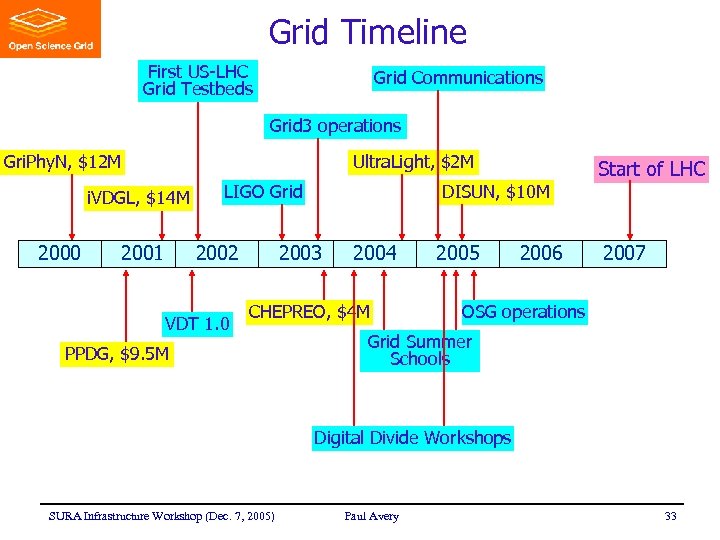

Grid Timeline
(Chart, 2000-2007. Milestones shown: GriPhyN ($12M), iVDGL ($14M), PPDG ($9.5M), UltraLight ($2M), CHEPREO ($4M), DISUN ($10M); first US-LHC Grid testbeds, LIGO Grid, VDT 1.0, Grid3 operations, OSG operations; Grid communications, Grid summer schools, Digital Divide workshops; start of LHC.)

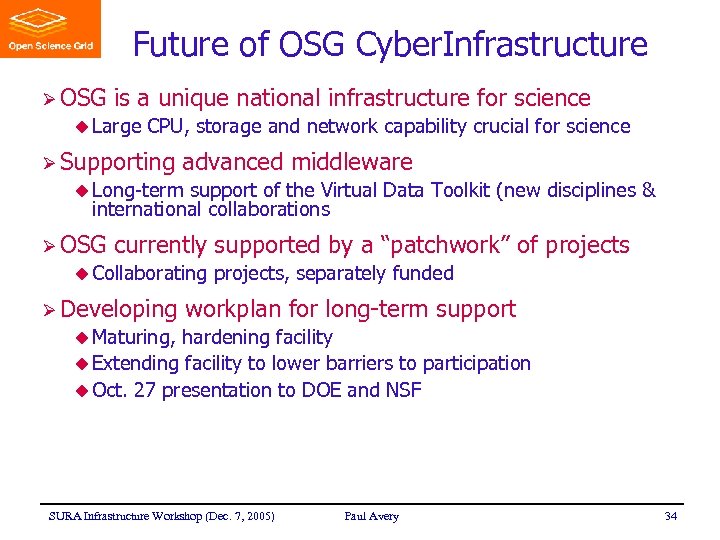

Future of OSG CyberInfrastructure
- OSG is a unique national infrastructure for science
  - Large CPU, storage and network capability crucial for science
- Supporting advanced middleware
  - Long-term support of the Virtual Data Toolkit (new disciplines & international collaborations)
- OSG currently supported by a “patchwork” of projects
  - Collaborating projects, separately funded
- Developing workplan for long-term support
  - Maturing, hardening facility
  - Extending facility to lower barriers to participation
  - Oct. 27 presentation to DOE and NSF

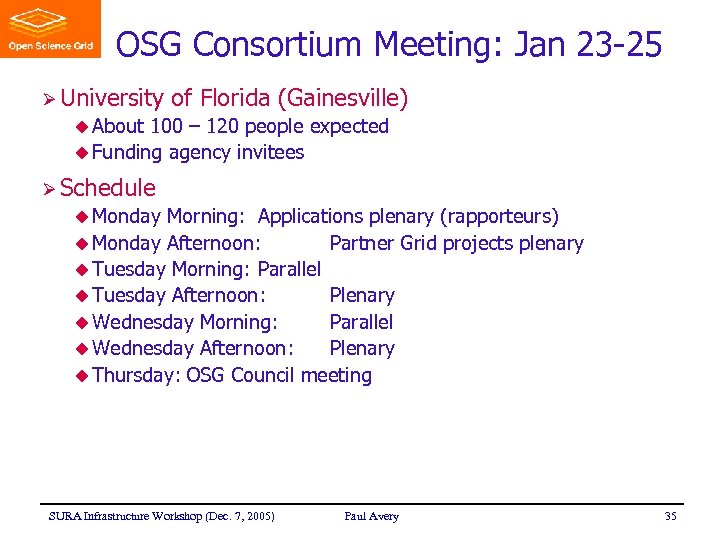

OSG Consortium Meeting: Jan 23-25
- University of Florida (Gainesville)
  - About 100-120 people expected
  - Funding agency invitees
- Schedule
  - Monday morning: Applications plenary (rapporteurs)
  - Monday afternoon: Partner Grid projects plenary
  - Tuesday morning: Parallel
  - Tuesday afternoon: Plenary
  - Wednesday morning: Parallel
  - Wednesday afternoon: Plenary
  - Thursday: OSG Council meeting

Disaster Planning / Emergency Response

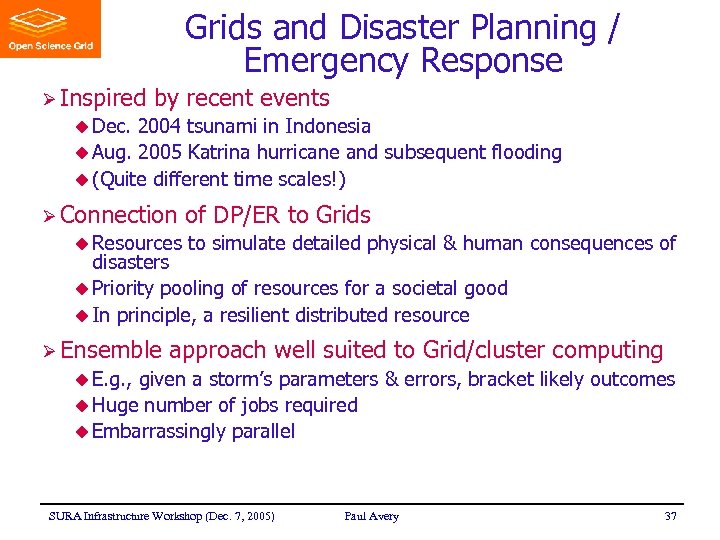

Grids and Disaster Planning / Emergency Response
- Inspired by recent events
  - Dec. 2004 tsunami in Indonesia
  - Aug. 2005 Hurricane Katrina and subsequent flooding
  - (Quite different time scales!)
- Connection of DP/ER to Grids
  - Resources to simulate detailed physical & human consequences of disasters
  - Priority pooling of resources for a societal good
  - In principle, a resilient distributed resource
- Ensemble approach well suited to Grid/cluster computing
  - E.g., given a storm’s parameters & errors, bracket likely outcomes
  - Huge number of jobs required
  - Embarrassingly parallel
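The ensemble approach can be sketched in a few lines of Python. The storm model below is a made-up placeholder (not a real surge code), and the Gaussian parameter errors are assumptions; what the sketch shows is the structure: each member draws its own perturbed parameters, runs with no shared state, and the spread of results brackets the likely outcome. A thread pool stands in for Grid job submission; on a real Grid each member would be a separate job.

```python
import random
import statistics
from concurrent.futures import ThreadPoolExecutor


def toy_surge_model(wind_speed_ms: float, pressure_drop_hpa: float) -> float:
    """Hypothetical stand-in for a physical storm-surge code (NOT a real model).
    Returns a surge height in metres from two storm parameters."""
    return 0.002 * wind_speed_ms ** 2 + 0.01 * pressure_drop_hpa


def run_member(seed: int, wind=(50.0, 5.0), pressure=(60.0, 8.0)) -> float:
    """One ensemble member: perturb parameters by their (assumed Gaussian) errors."""
    rng = random.Random(seed)  # independent, reproducible stream per member
    return toy_surge_model(rng.gauss(*wind), rng.gauss(*pressure))


def bracket_outcomes(n_members: int = 1000):
    """Run all members independently; return (10th percentile, median, 90th percentile)."""
    # Members share no state, so they can be farmed out as independent jobs.
    with ThreadPoolExecutor() as pool:
        surges = list(pool.map(run_member, range(n_members)))
    deciles = statistics.quantiles(surges, n=10)
    return deciles[0], statistics.median(surges), deciles[-1]


low, median, high = bracket_outcomes(1000)
print(f"surge: {low:.2f} m (10%), {median:.2f} m (median), {high:.2f} m (90%)")
```

Because every member is seeded by its index and `map` preserves order, the bracket is reproducible no matter how the jobs are scheduled.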

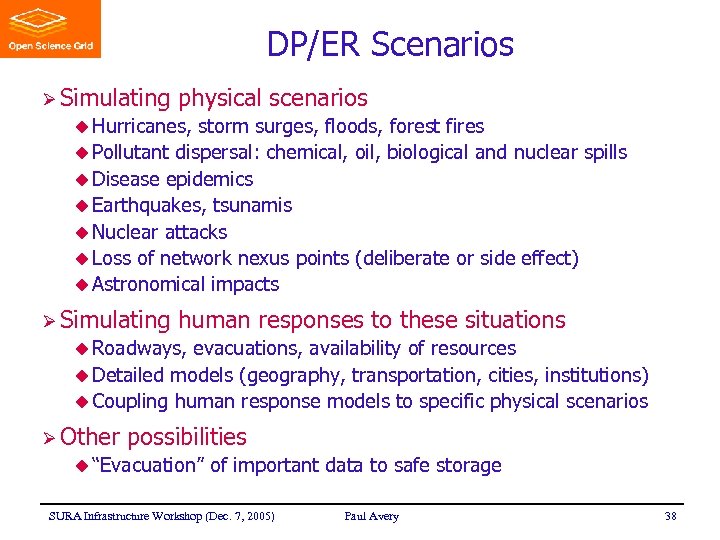

DP/ER Scenarios
- Simulating physical scenarios
  - Hurricanes, storm surges, floods, forest fires
  - Pollutant dispersal: chemical, oil, biological and nuclear spills
  - Disease epidemics
  - Earthquakes, tsunamis
  - Nuclear attacks
  - Loss of network nexus points (deliberate or side effect)
  - Astronomical impacts
- Simulating human responses to these situations
  - Roadways, evacuations, availability of resources
  - Detailed models (geography, transportation, cities, institutions)
  - Coupling human response models to specific physical scenarios
- Other possibilities
  - “Evacuation” of important data to safe storage

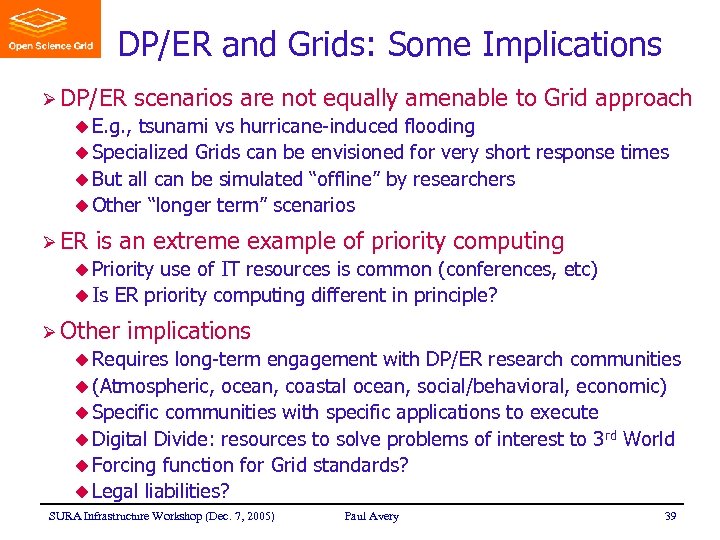

DP/ER and Grids: Some Implications
- DP/ER scenarios are not equally amenable to Grid approach
  - E.g., tsunami vs hurricane-induced flooding
  - Specialized Grids can be envisioned for very short response times
  - But all can be simulated “offline” by researchers
  - Other “longer term” scenarios
- ER is an extreme example of priority computing
  - Priority use of IT resources is common (conferences, etc.)
  - Is ER priority computing different in principle?
- Other implications
  - Requires long-term engagement with DP/ER research communities
  - (Atmospheric, ocean, coastal ocean, social/behavioral, economic)
  - Specific communities with specific applications to execute
  - Digital Divide: resources to solve problems of interest to 3rd World
  - Forcing function for Grid standards?
  - Legal liabilities?
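In its simplest form, priority computing means emergency work jumps the queue while routine jobs keep their relative order. A minimal Python sketch using a binary heap; the priority classes and job names are hypothetical, and real batch schedulers apply far richer policies than this non-preemptive toy.

```python
import heapq
import itertools

# Hypothetical priority classes: lower number = dispatched sooner.
EMERGENCY, CONFERENCE_DEADLINE, ROUTINE = 0, 1, 2


class PriorityJobQueue:
    """Jobs leave in priority order, first-in first-out among equal priorities."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker preserves submission order

    def submit(self, job_name: str, priority: int = ROUTINE) -> None:
        heapq.heappush(self._heap, (priority, next(self._counter), job_name))

    def next_job(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


q = PriorityJobQueue()
q.submit("cms-reco-001")
q.submit("sdss-coadd-17")
q.submit("surge-member-0", EMERGENCY)
q.submit("paper-plots", CONFERENCE_DEADLINE)
print([q.next_job() for _ in range(len(q))])
```

The emergency job is dispatched first even though it was submitted third; the two routine jobs still run in the order they arrived.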

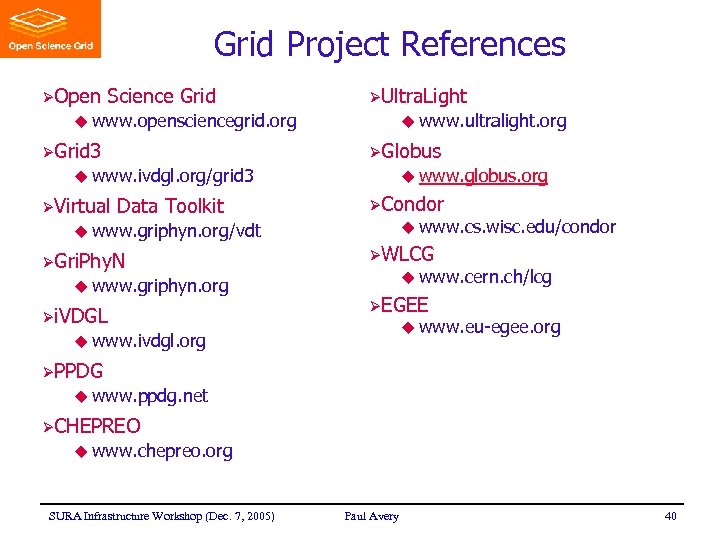

Grid Project References
- Open Science Grid: www.opensciencegrid.org
- Grid3: www.ivdgl.org/grid3
- Virtual Data Toolkit: www.griphyn.org/vdt
- GriPhyN: www.griphyn.org
- iVDGL: www.ivdgl.org
- PPDG: www.ppdg.net
- CHEPREO: www.chepreo.org
- UltraLight: www.ultralight.org
- Globus: www.globus.org
- Condor: www.cs.wisc.edu/condor
- WLCG: www.cern.ch/lcg
- EGEE: www.eu-egee.org

Extra Slides

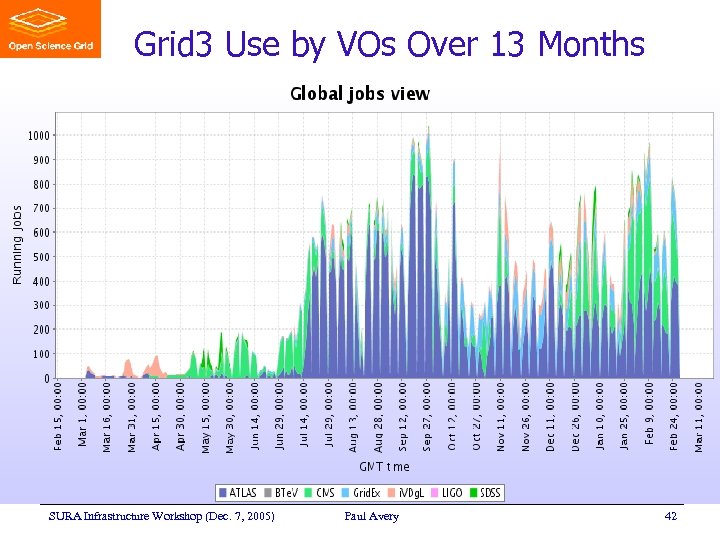

Grid3 Use by VOs Over 13 Months

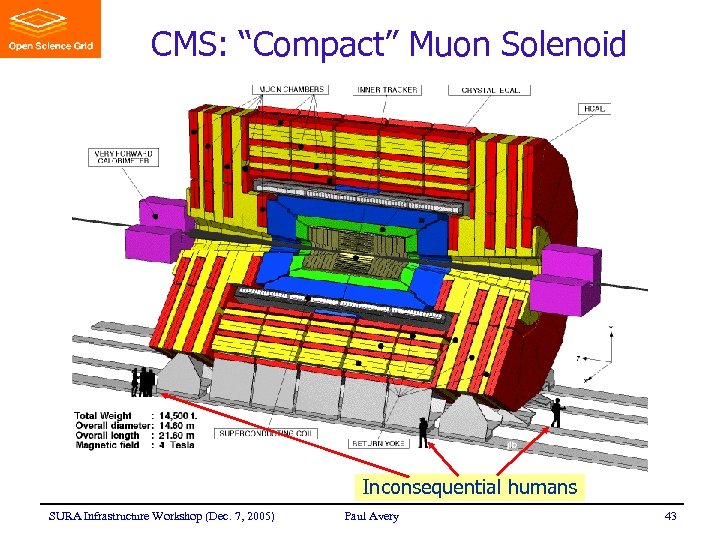

CMS: “Compact” Muon Solenoid
(Photo annotation: “Inconsequential humans”)

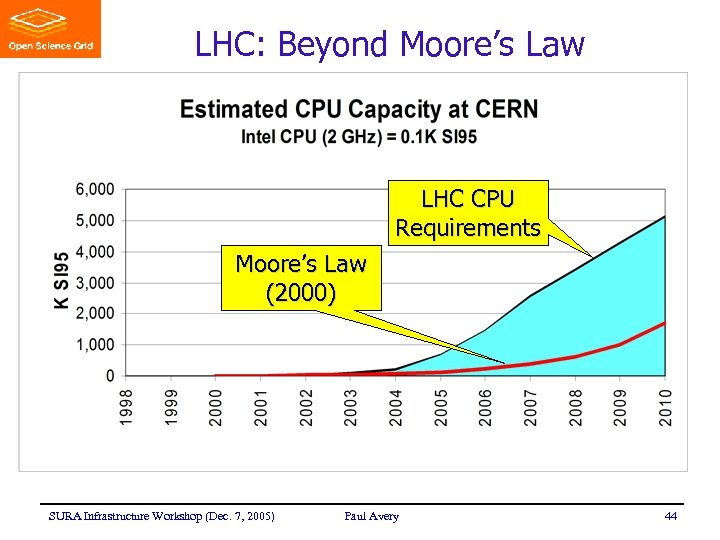

LHC: Beyond Moore’s Law
(Chart: LHC CPU requirements vs. Moore’s Law (2000))

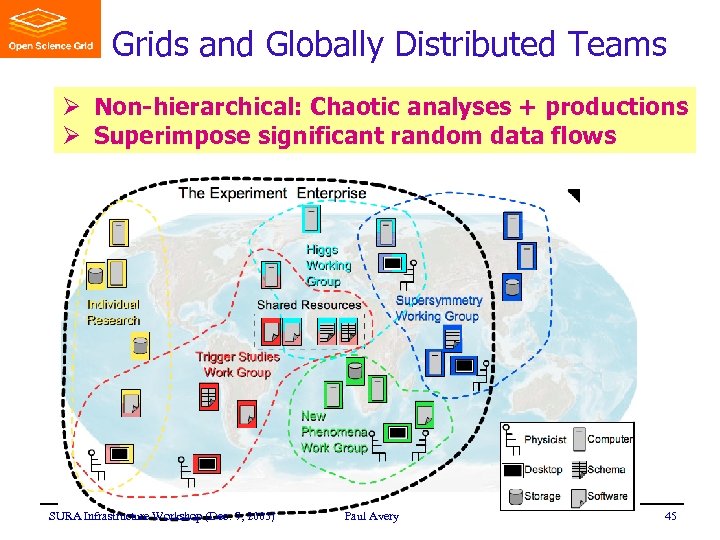

Grids and Globally Distributed Teams
- Non-hierarchical: chaotic analyses + productions
- Superimpose significant random data flows

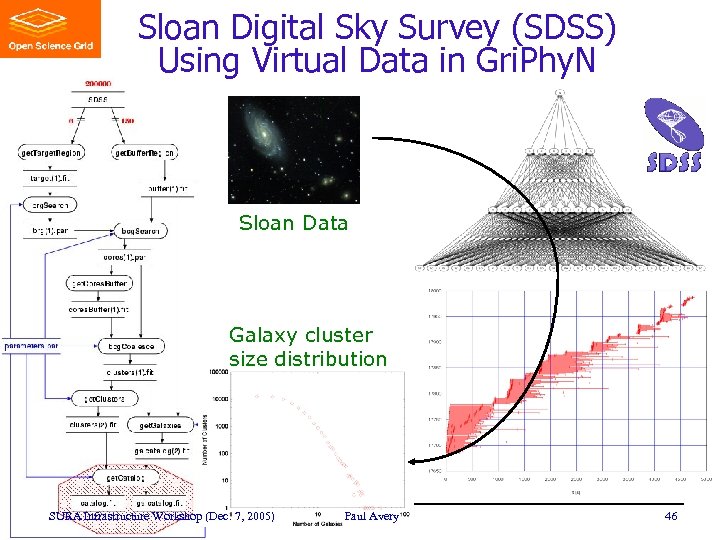

Sloan Digital Sky Survey (SDSS): Using Virtual Data in GriPhyN
(Chart: galaxy cluster size distribution from Sloan data)

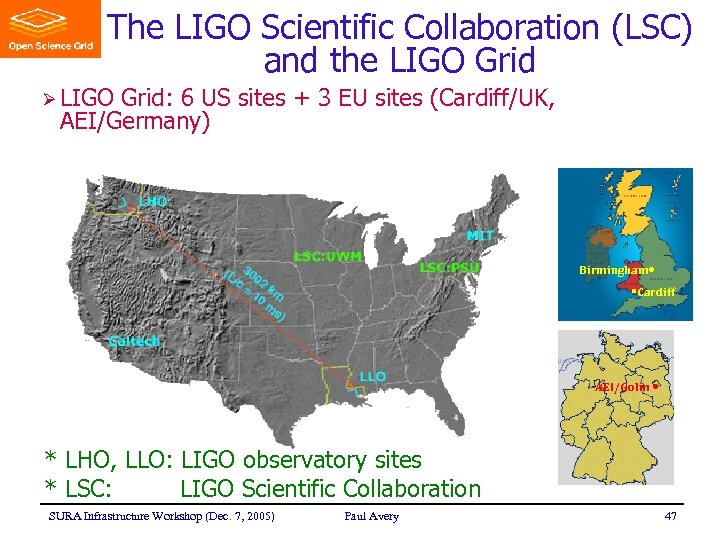

The LIGO Scientific Collaboration (LSC) and the LIGO Grid
- LIGO Grid: 6 US sites + 3 EU sites (Cardiff/UK, AEI/Germany)
(Map labels: Birmingham, Cardiff, AEI/Golm)
LHO, LLO: LIGO observatory sites; LSC: LIGO Scientific Collaboration
