- Number of slides: 77

OpenFlow in Service Provider Networks

AT&T Tech Talks, October 2010

Rob Sherwood, Saurav Das, Yiannis Yiakoumis

Talk Overview

- Motivation
- What is OpenFlow
- Deployments
- OpenFlow in the WAN
  - Combined Circuit/Packet Switching
  - Demo
- Future Directions

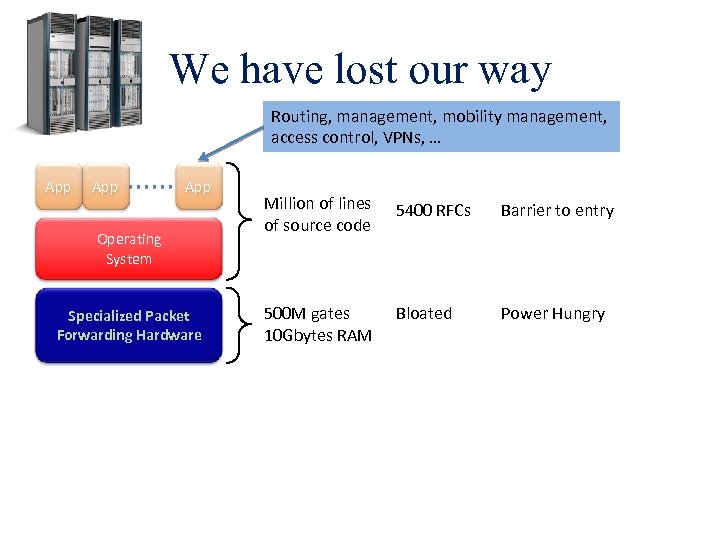

We have lost our way

- Apps: routing, management, mobility management, access control, VPNs, …
- Operating system: millions of lines of source code, 5400 RFCs (barrier to entry)
- Specialized packet forwarding hardware: 500M gates, 10 GB RAM (bloated, power hungry)

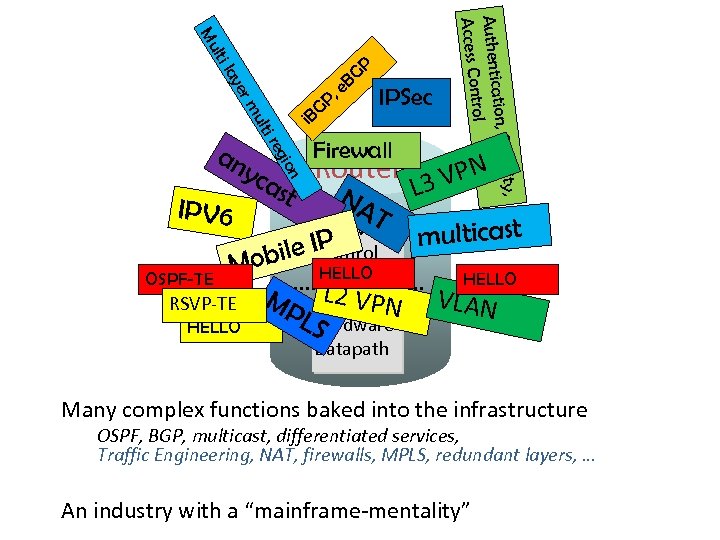

[Diagram: a router split into Software Control and Hardware Datapath, covered with feature labels such as Firewall, NAT, OSPF-TE, RSVP-TE, HELLO, MPLS, L2 VPN, L3 VPN, IPv6, IPSec, multicast, VLAN, authentication, security, access control]

Many complex functions baked into the infrastructure: OSPF, BGP, multicast, differentiated services, Traffic Engineering, NAT, firewalls, MPLS, redundant layers, …

An industry with a “mainframe-mentality”

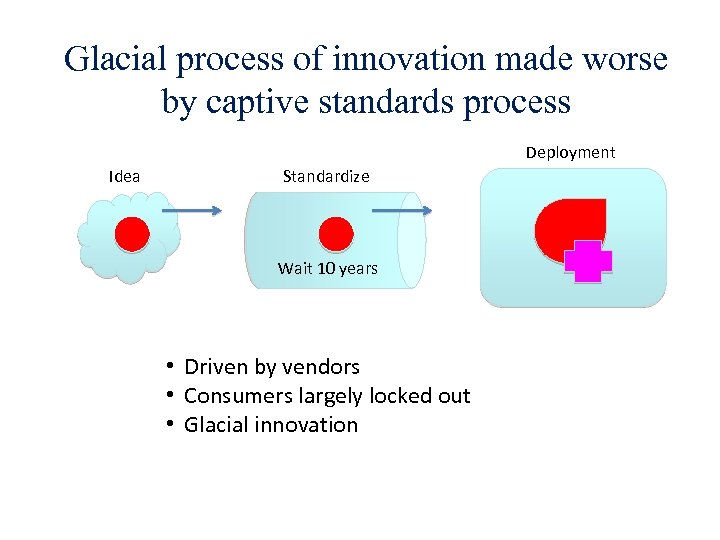

Glacial process of innovation made worse by captive standards process

Idea → Standardize → Wait 10 years → Deployment

- Driven by vendors
- Consumers largely locked out
- Glacial innovation

New Generation Providers Already Buy into It

In a nutshell:

- Driven by cost and control
- Started in data centers…

What new generation providers have been doing within the datacenters:

- Buy bare metal switches/routers
- Write their own control/management applications on a common platform

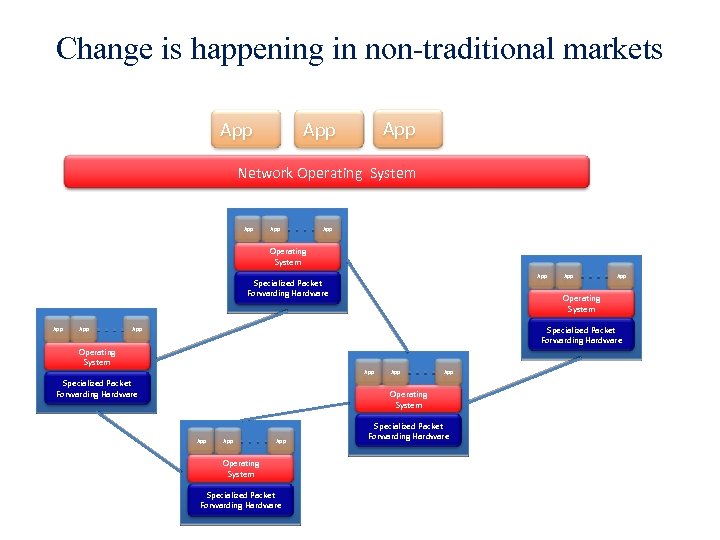

Change is happening in non-traditional markets

[Diagram: apps running on a Network Operating System that sits above several boxes, each with its own apps, operating system, and specialized packet forwarding hardware]

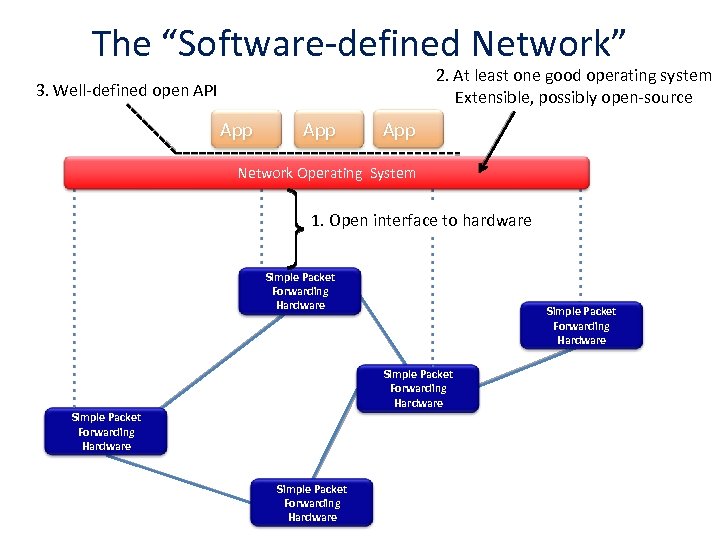

The “Software-defined Network”

1. Open interface to hardware
2. At least one good operating system (extensible, possibly open-source)
3. Well-defined open API

[Diagram: apps on top of a Network Operating System, which controls Simple Packet Forwarding Hardware]

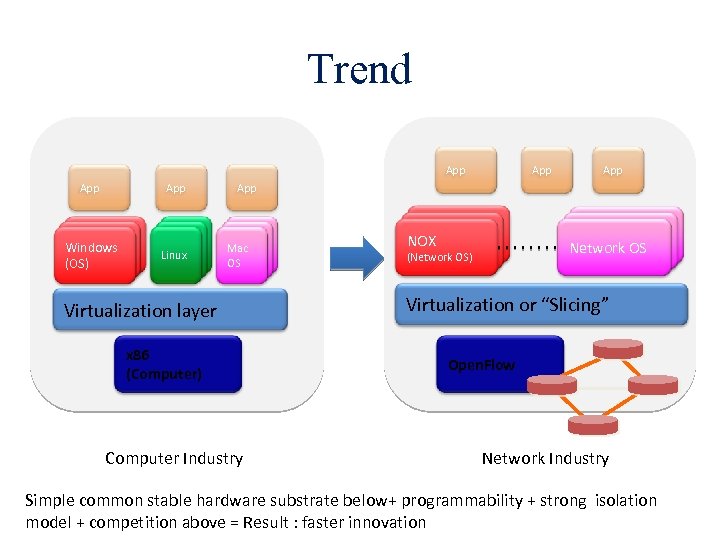

Trend

- Computer industry: apps on Windows, Linux, or Mac OS; a virtualization layer; x86 (computer)
- Network industry: apps on controllers (network OS, e.g. NOX); virtualization or “slicing”; OpenFlow

Simple, common, stable hardware substrate below + programmability + strong isolation model + competition above = faster innovation

What is OpenFlow?

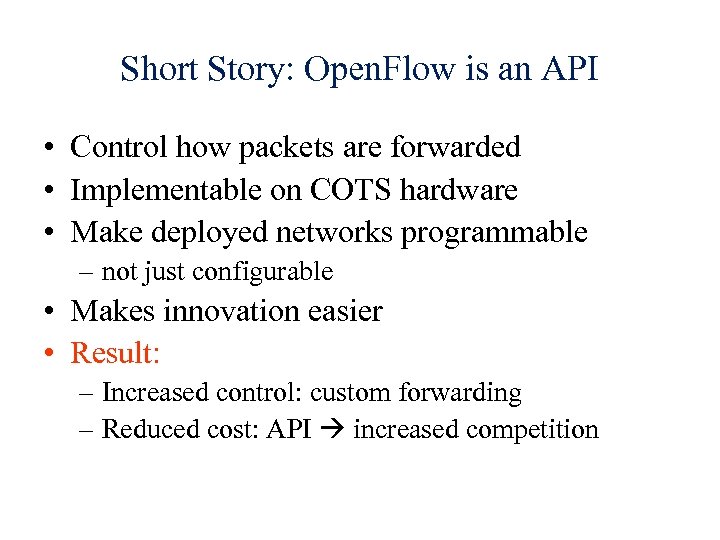

Short Story: OpenFlow is an API

- Control how packets are forwarded
- Implementable on COTS hardware
- Make deployed networks programmable, not just configurable
- Makes innovation easier
- Result:
  - Increased control: custom forwarding
  - Reduced cost: an open API increases competition

Ethernet Switch/Router

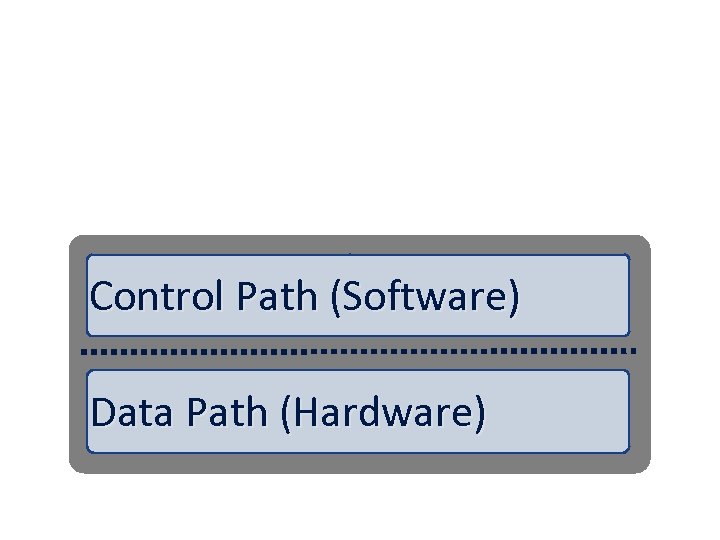

Control Path (Software) Data Path (Hardware)

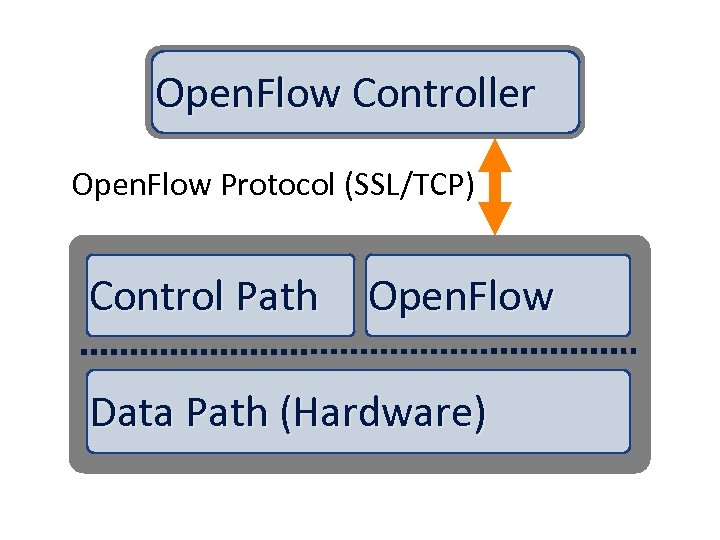

- OpenFlow Controller
- OpenFlow Protocol (SSL/TCP)
- Control Path: OpenFlow
- Data Path (Hardware)

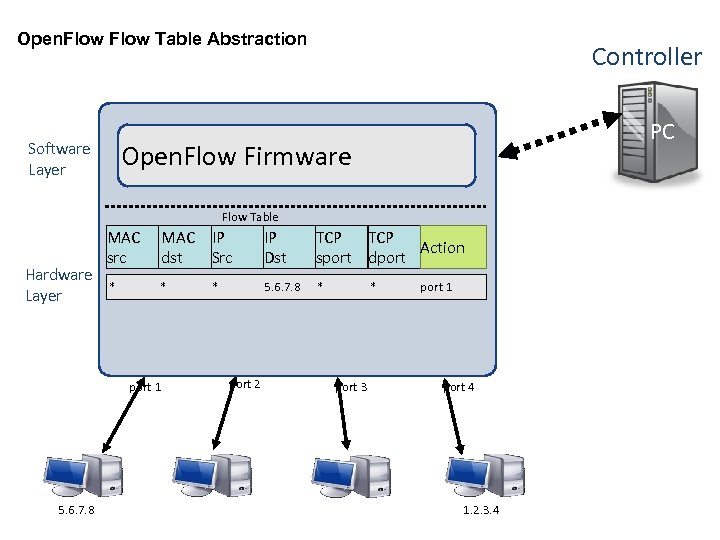

OpenFlow Table Abstraction

- Software layer: OpenFlow firmware on the switch, driven by a controller PC
- Hardware layer: flow table and ports 1 to 4, with hosts 5.6.7.8 and 1.2.3.4 attached

| MAC src | MAC dst | IP Src | IP Dst | TCP sport | TCP dport | Action |
|---|---|---|---|---|---|---|
| * | * | * | 5.6.7.8 | * | * | port 1 |

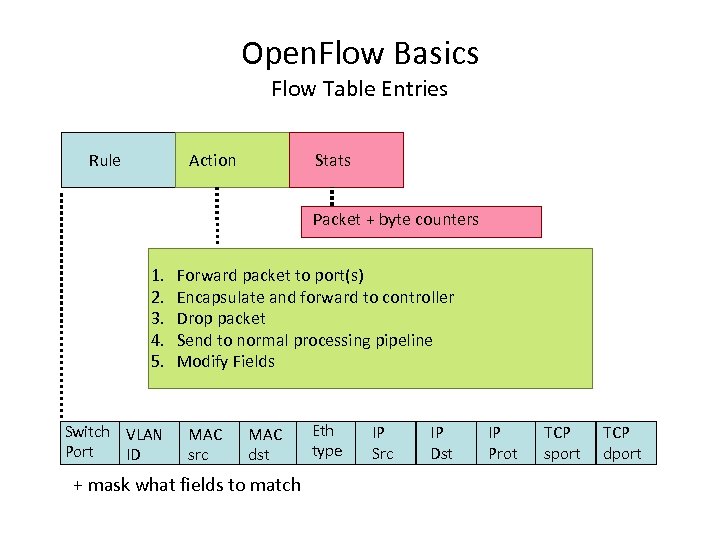

OpenFlow Basics: Flow Table Entries

Each entry consists of a Rule, an Action, and Stats.

- Rule: Switch Port, VLAN ID, MAC src, MAC dst, Eth type, IP Src, IP Dst, IP Prot, TCP sport, TCP dport, plus a mask of which fields to match
- Action:
  1. Forward packet to port(s)
  2. Encapsulate and forward to controller
  3. Drop packet
  4. Send to normal processing pipeline
  5. Modify fields
- Stats: packet + byte counters
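The Rule / Action / Stats structure can be sketched in a few lines of Python. This is an illustration only, not the OpenFlow wire format: the field names, the action strings, and the table-miss behaviour (send to the controller) are assumptions made for the sketch.

```python
from dataclasses import dataclass

# The ten match fields from the slide, spelled the way this sketch chooses.
MATCH_FIELDS = {"in_port", "vlan_id", "mac_src", "mac_dst", "eth_type",
                "ip_src", "ip_dst", "ip_proto", "tcp_sport", "tcp_dport"}


@dataclass
class FlowEntry:
    rule: dict             # field -> required value; missing fields are wildcards
    action: str            # e.g. "forward:6", "controller", "drop", "normal"
    packet_count: int = 0  # Stats: packets matched so far
    byte_count: int = 0    # Stats: bytes matched so far

    def __post_init__(self):
        unknown = set(self.rule) - MATCH_FIELDS
        if unknown:
            raise ValueError(f"unknown match fields: {sorted(unknown)}")

    def matches(self, pkt: dict) -> bool:
        return all(pkt.get(name) == value for name, value in self.rule.items())


def process(table, pkt, size):
    """Return the action of the first matching entry and update its counters."""
    for entry in table:
        if entry.matches(pkt):
            entry.packet_count += 1
            entry.byte_count += size
            return entry.action
    return "controller"    # assumed table-miss behaviour
```

For example, with `table = [FlowEntry({"tcp_dport": 22}, "drop"), FlowEntry({"ip_dst": "5.6.7.8"}, "forward:6")]`, a port-80 packet to 5.6.7.8 is forwarded and the second entry's counters are incremented.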

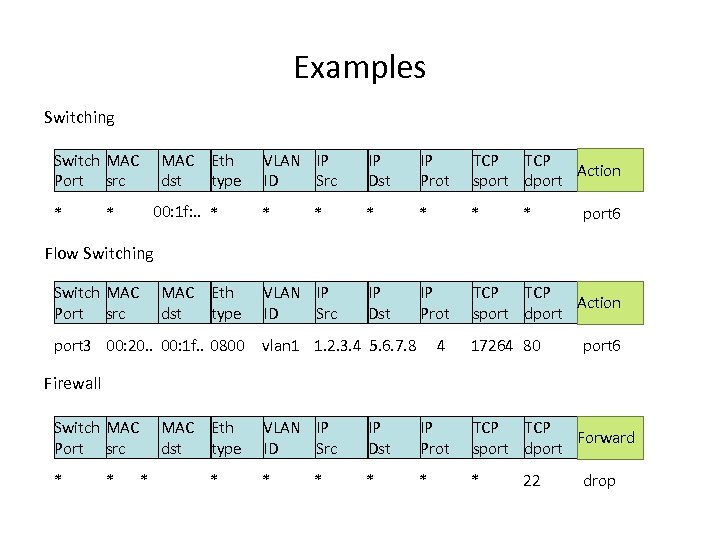

Examples

| Example | Switch Port | MAC src | MAC dst | Eth type | VLAN ID | IP Src | IP Dst | IP Prot | TCP sport | TCP dport | Action |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Switching | * | * | 00:1f:.. | * | * | * | * | * | * | * | port 6 |
| Flow Switching | port 3 | 00:20.. | 00:1f.. | 0800 | vlan 1 | 1.2.3.4 | 5.6.7.8 | 4 | 17264 | 80 | port 6 |
| Firewall | * | * | * | * | * | * | * | * | * | 22 | drop |

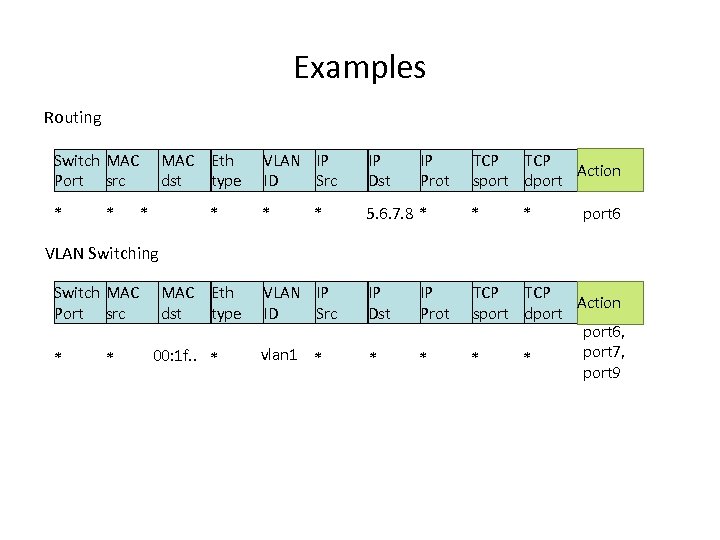

Examples

| Example | Switch Port | MAC src | MAC dst | Eth type | VLAN ID | IP Src | IP Dst | IP Prot | TCP sport | TCP dport | Action |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Routing | * | * | * | * | * | * | 5.6.7.8 | * | * | * | port 6 |
| VLAN Switching | * | * | 00:1f.. | * | vlan 1 | * | * | * | * | * | port 6, port 7, port 9 |
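As a rough illustration, some of the example rules can be written down as data and checked against packets. The truncated MAC address `00:1f..` is kept exactly as the slides show it and treated as an opaque label; the field names and the `rule` / `lookup` helpers are hypothetical.

```python
WILDCARD = "*"
FIELDS = ["in_port", "mac_src", "mac_dst", "eth_type", "vlan_id",
          "ip_src", "ip_dst", "ip_proto", "tcp_sport", "tcp_dport"]


def rule(actions, **match):
    """Build a full-width rule: every field not named is a wildcard."""
    row = {name: WILDCARD for name in FIELDS}
    row.update(match)
    return row, actions


EXAMPLES = {
    "switching": rule(["port6"], mac_dst="00:1f.."),
    "firewall": rule(["drop"], tcp_dport=22),
    "routing": rule(["port6"], ip_dst="5.6.7.8"),
    "vlan_switching": rule(["port6", "port7", "port9"],
                           mac_dst="00:1f..", vlan_id="vlan1"),
}


def lookup(name, pkt):
    """Return the rule's actions if the packet matches it, else None."""
    row, actions = EXAMPLES[name]
    hit = all(v == WILDCARD or pkt.get(f) == v for f, v in row.items())
    return actions if hit else None
```

A packet with `tcp_dport=22` hits the firewall rule and is dropped, while a packet to any destination other than 5.6.7.8 misses the routing rule.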

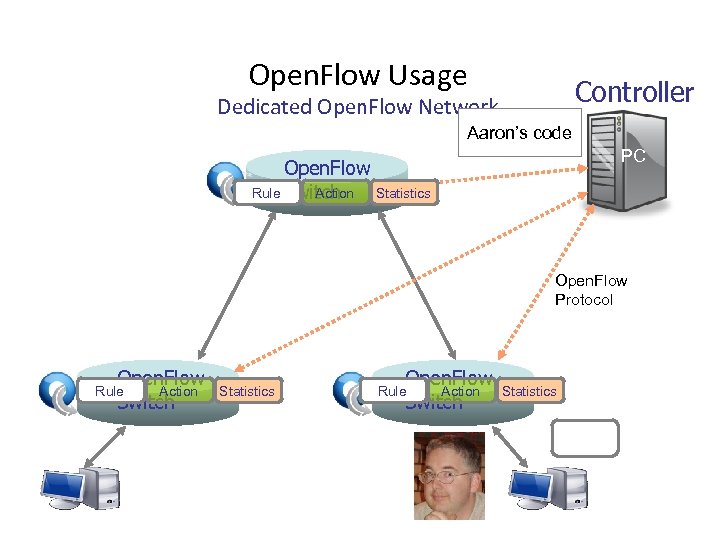

OpenFlow Usage: Dedicated OpenFlow Network

[Diagram: a controller PC running Aaron’s code speaks the OpenFlow Protocol to several OpenFlow switches, each holding Rule / Action / Statistics entries]

OpenFlowSwitch.org

Network Design Decisions

- Forwarding logic (of course)
- Centralized vs. distributed control
- Fine vs. coarse grained rules
- Reactive vs. proactive rule creation
- Likely more: open research area

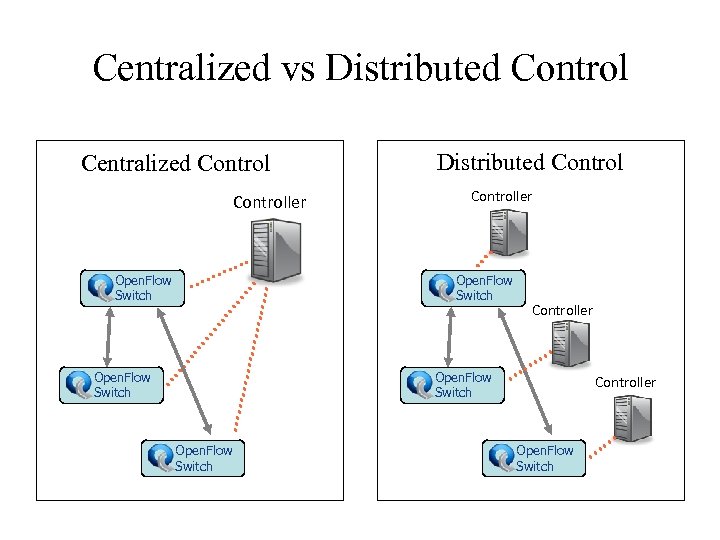

Centralized vs Distributed Control

[Diagram: centralized control, with one controller for the OpenFlow switches, next to distributed control, with several controllers each managing OpenFlow switches]

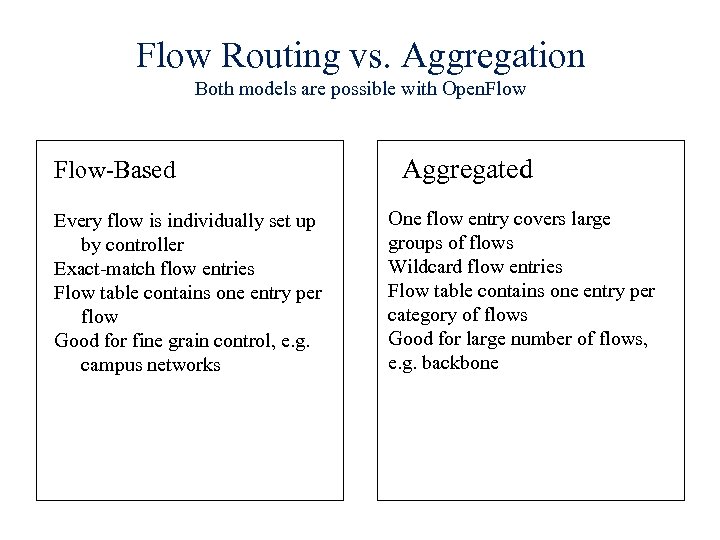

Flow Routing vs. Aggregation

Both models are possible with OpenFlow.

Flow-Based:

- Every flow is individually set up by controller
- Exact-match flow entries
- Flow table contains one entry per flow
- Good for fine grain control, e.g. campus networks

Aggregated:

- One flow entry covers large groups of flows
- Wildcard flow entries
- Flow table contains one entry per category of flows
- Good for large number of flows, e.g. backbone
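The table-size difference between the two models can be made concrete with a small sketch. The traffic mix, the `/24` aggregation granularity, and the output port name are all invented for illustration.

```python
import ipaddress


def flow_based_entries(flows):
    """Exact match: one entry per (src, dst, sport, dport) flow."""
    return {flow: "port6" for flow in flows}


def aggregated_entries(flows, prefix_len=24):
    """Wildcard match: one entry per destination prefix, other fields ignored."""
    table = {}
    for _src, dst, _sport, _dport in flows:
        net = ipaddress.ip_network(f"{dst}/{prefix_len}", strict=False)
        table[net] = "port6"
    return table


# 100 hypothetical flows spread over two destination /24 networks.
flows = [("10.0.0.1", f"192.168.{i % 2}.{i}", 40000 + i, 80) for i in range(1, 101)]
print(len(flow_based_entries(flows)), len(aggregated_entries(flows)))  # 100 2
```

The exact-match table grows with the number of flows, while the wildcard table grows only with the number of traffic categories, which is why the slide recommends aggregation for backbones.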

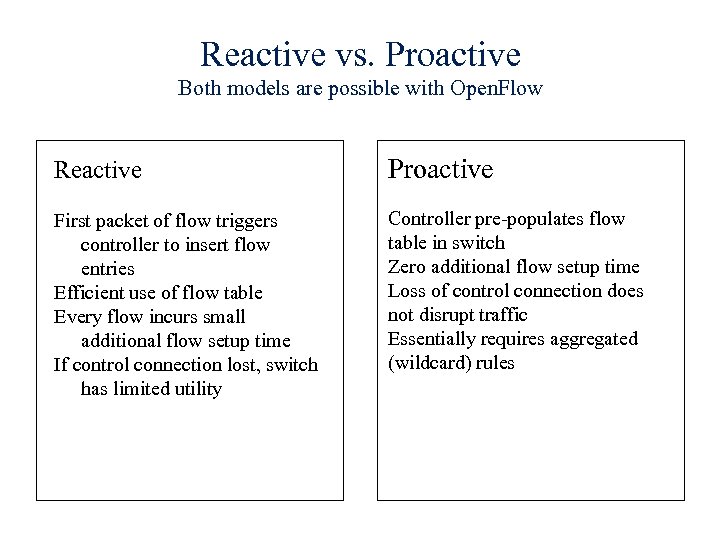

Reactive vs. Proactive

Both models are possible with OpenFlow.

Reactive:

- First packet of flow triggers controller to insert flow entries
- Efficient use of flow table
- Every flow incurs small additional flow setup time
- If control connection lost, switch has limited utility

Proactive:

- Controller pre-populates flow table in switch
- Zero additional flow setup time
- Loss of control connection does not disrupt traffic
- Essentially requires aggregated (wildcard) rules
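A toy simulation shows the behavioural difference. The `Switch` class and the `controller` policy below are hypothetical stand-ins; a real switch and controller exchange OpenFlow messages rather than Python calls.

```python
class Switch:
    def __init__(self, controller=None):
        self.table = {}              # destination -> output port
        self.controller = controller
        self.packet_ins = 0          # packets that had to be sent to the controller

    def forward(self, dst):
        if dst in self.table:        # a rule is already installed: fast path
            return self.table[dst]
        if self.controller is None:  # no rule and no control connection
            return None
        self.packet_ins += 1         # reactive path: first packet goes to controller
        port = self.controller(dst)
        self.table[dst] = port       # controller inserts a flow entry
        return port


def controller(dst):
    # Hypothetical policy: choose one of four ports from the last address octet.
    return int(dst.rsplit(".", 1)[1]) % 4 + 1


reactive = Switch(controller)
reactive.forward("10.0.0.7")         # triggers one packet-in, then installs a rule
reactive.forward("10.0.0.7")         # served from the flow table

proactive = Switch()                 # no controller connection needed at runtime
proactive.table = {"10.0.0.7": 4, "10.0.0.8": 1}   # pre-populated rules
```

In the reactive switch only the first packet of each flow pays the setup cost, but new flows stall once the controller is unreachable. The proactive switch keeps forwarding without a controller, at the price of holding every rule up front.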

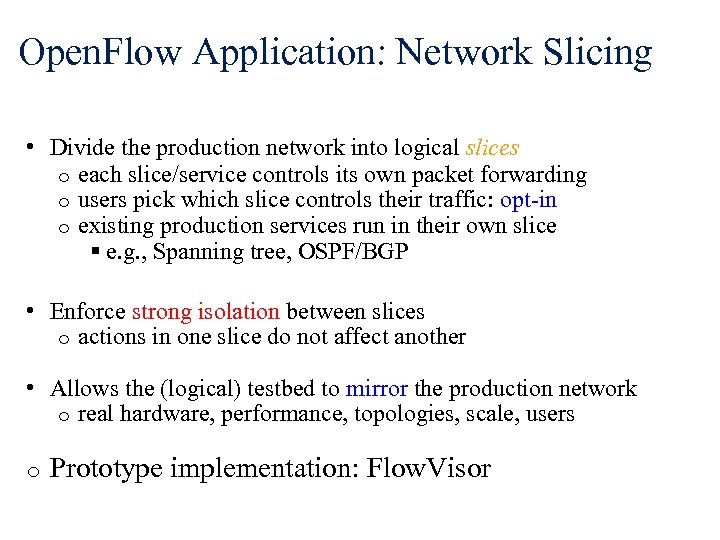

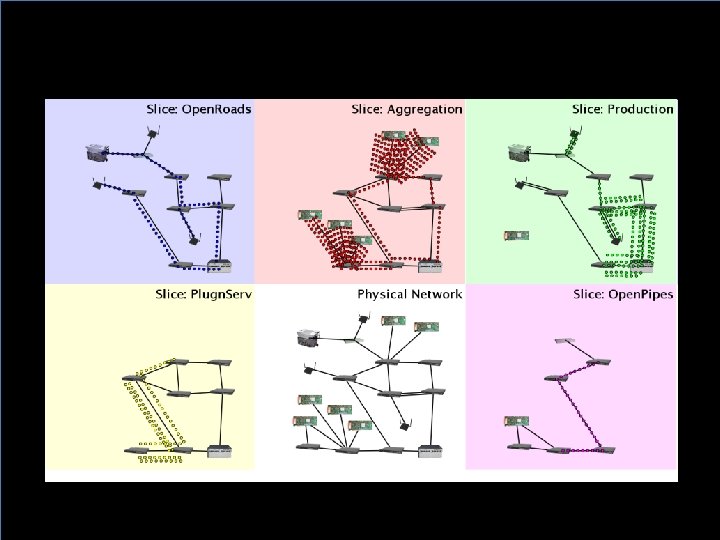

OpenFlow Application: Network Slicing

- Divide the production network into logical slices
  - each slice/service controls its own packet forwarding
  - users pick which slice controls their traffic: opt-in
  - existing production services run in their own slice, e.g., Spanning tree, OSPF/BGP
- Enforce strong isolation between slices
  - actions in one slice do not affect another
- Allows the (logical) testbed to mirror the production network
  - real hardware, performance, topologies, scale, users
  - Prototype implementation: FlowVisor

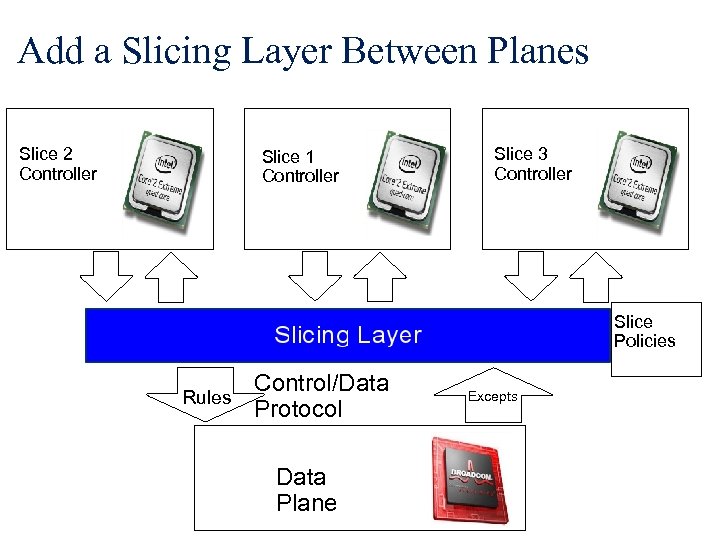

Add a Slicing Layer Between Planes

[Diagram: Slice 1, Slice 2, and Slice 3 controllers sit above a slicing layer that applies the slice policies; rules flow down and exceptions flow up over the control/data protocol to the data plane]

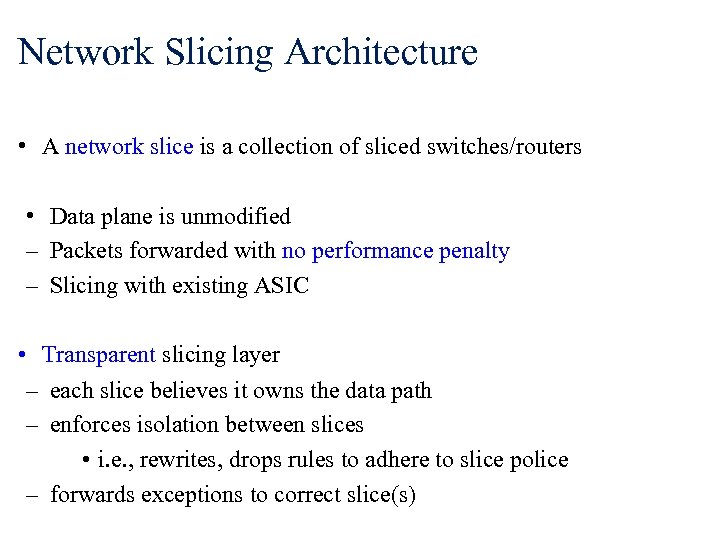

Network Slicing Architecture

- A network slice is a collection of sliced switches/routers
- Data plane is unmodified
  - Packets forwarded with no performance penalty
  - Slicing with existing ASIC
- Transparent slicing layer
  - each slice believes it owns the data path
  - enforces isolation between slices, i.e., rewrites or drops rules to adhere to slice policy
  - forwards exceptions to correct slice(s)

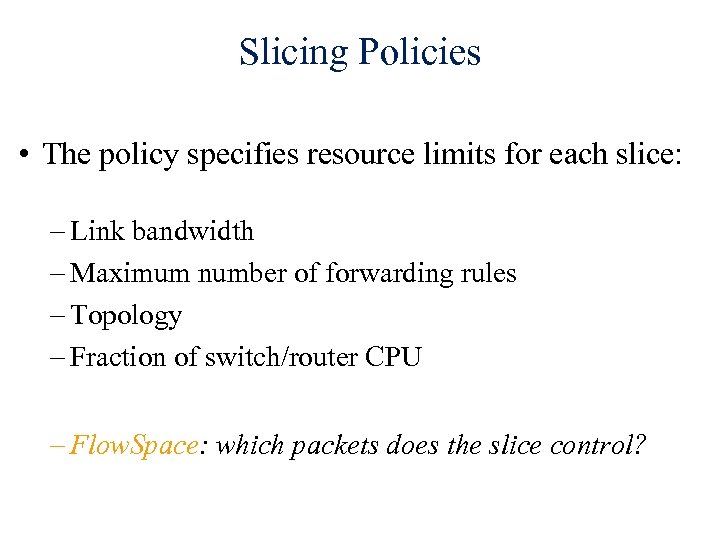

Slicing Policies

The policy specifies resource limits for each slice:

- Link bandwidth
- Maximum number of forwarding rules
- Topology
- Fraction of switch/router CPU
- FlowSpace: which packets does the slice control?

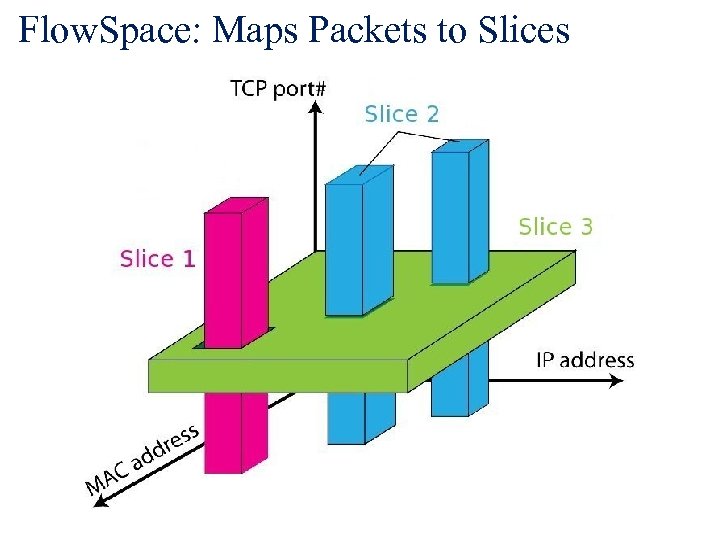

FlowSpace: Maps Packets to Slices

Real User Traffic: Opt-In

- Allow users to opt in to services in real time
  - Users can delegate control of individual flows to slices
  - Add new FlowSpace to each slice's policy
- Example:
  - "Slice 1 will handle my HTTP traffic"
  - "Slice 2 will handle my VoIP traffic"
  - "Slice 3 will handle everything else"
- Creates incentives for building high-quality services
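The opt-in example can be sketched as an ordered FlowSpace policy where the first matching predicate decides the slice. The field names are invented, and "VoIP" is assumed here to mean SIP over UDP port 5060; the slides do not say how VoIP traffic is identified.

```python
# Ordered FlowSpace policy: the first matching predicate wins.
FLOWSPACE = [
    ({"ip_proto": 6, "tp_dst": 80}, "slice1"),     # HTTP: TCP port 80
    ({"ip_proto": 17, "tp_dst": 5060}, "slice2"),  # VoIP: assumed SIP on UDP/5060
    ({}, "slice3"),                                # everything else
]


def slice_for(pkt, flowspace=FLOWSPACE):
    """Return the slice that controls this packet, or None if nobody does."""
    for match, slice_name in flowspace:
        if all(pkt.get(k) == v for k, v in match.items()):
            return slice_name
    return None


def opt_in(flowspace, user_ip, match, slice_name):
    """Return a new policy in which this user's matching traffic goes to slice_name."""
    return [({**match, "ip_src": user_ip}, slice_name)] + flowspace
```

Calling `opt_in(FLOWSPACE, "10.0.0.5", {"ip_proto": 6, "tp_dst": 80}, "slice2")` moves only that user's HTTP traffic to slice 2 and leaves every other user's mapping untouched.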

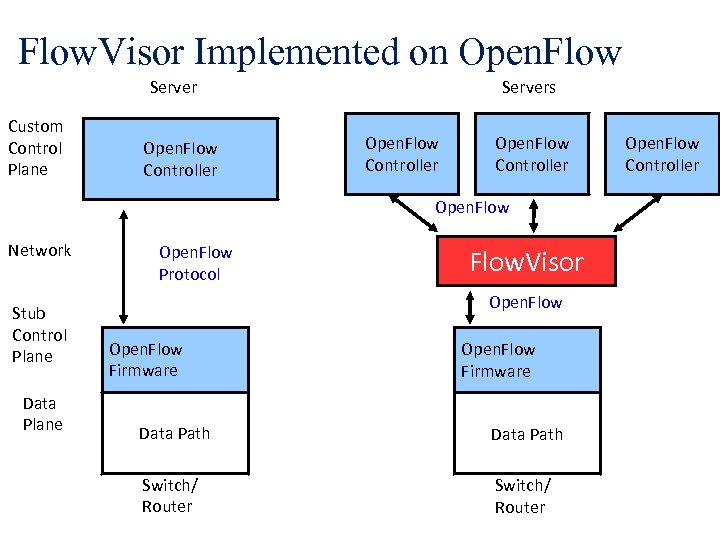

FlowVisor Implemented on OpenFlow

[Diagram: servers running custom control planes (OpenFlow controllers) speak the OpenFlow Protocol to FlowVisor, which in turn speaks OpenFlow to the stub control plane (OpenFlow firmware) above the data path of each switch/router in the OpenFlow network]

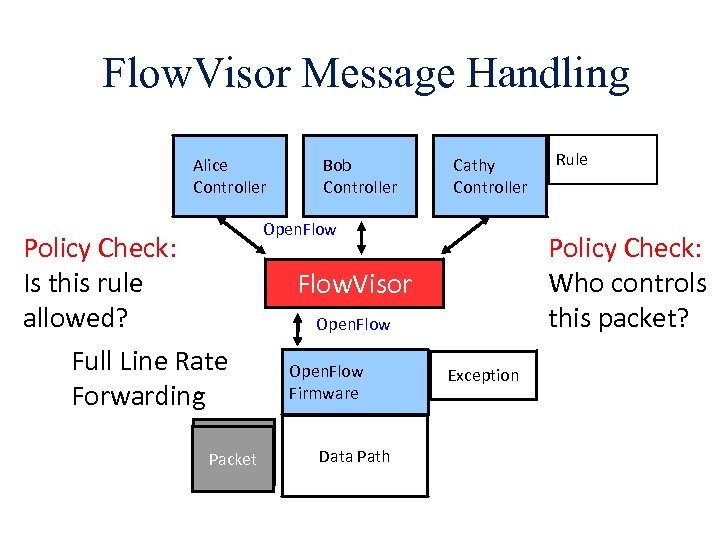

FlowVisor Message Handling

- Alice, Bob, and Cathy controllers each speak OpenFlow to FlowVisor
- Rule from a controller: FlowVisor runs a policy check, "Is this rule allowed?", before passing it to the OpenFlow firmware
- Exception from the data path: FlowVisor runs a policy check, "Who controls this packet?", before passing it up
- Packets that match installed rules are forwarded by the data path at full line rate
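The two policy checks might look roughly like this. This is not FlowVisor's actual code or API: the `SLICES` table, the field names, and the single-match-per-slice model are simplifications assumed for the sketch.

```python
# Hypothetical FlowSpace: one match per slice.
SLICES = {
    "alice": {"tp_dst": 80},
    "bob": {"tp_dst": 5060},
}


def intersect(rule_match, slice_match):
    """Narrow a controller's rule to the slice's FlowSpace; None if they conflict."""
    merged = dict(slice_match)
    for name, value in rule_match.items():
        if name in merged and merged[name] != value:
            return None              # rule targets traffic outside the slice: drop it
        merged[name] = value
    return merged


def check_rule(slice_name, rule_match):
    """Policy check 1: is this rule allowed? Returns the rewritten match or None."""
    return intersect(rule_match, SLICES[slice_name])


def owners(pkt):
    """Policy check 2: who controls this packet? Exceptions go to these slices."""
    return [name for name, match in SLICES.items()
            if all(pkt.get(k) == v for k, v in match.items())]
```

A rule from Alice that matches only on `ip_dst` is rewritten to also require `tp_dst == 80`, so it cannot capture Bob's traffic; a rule from Alice for port 22 is rejected outright.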

OpenFlow Deployments

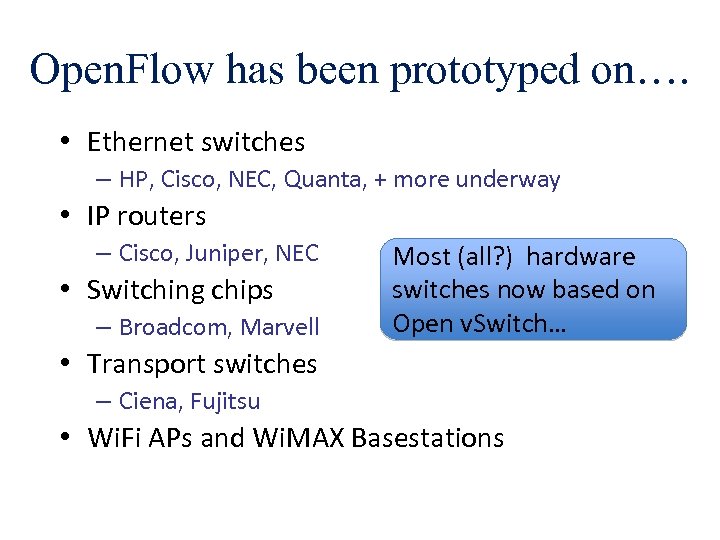

OpenFlow has been prototyped on…

- Ethernet switches: HP, Cisco, NEC, Quanta, + more underway
- IP routers: Cisco, Juniper, NEC
- Switching chips: Broadcom, Marvell
- Transport switches: Ciena, Fujitsu
- WiFi APs and WiMAX basestations

Most (all?) hardware switches now based on Open vSwitch…

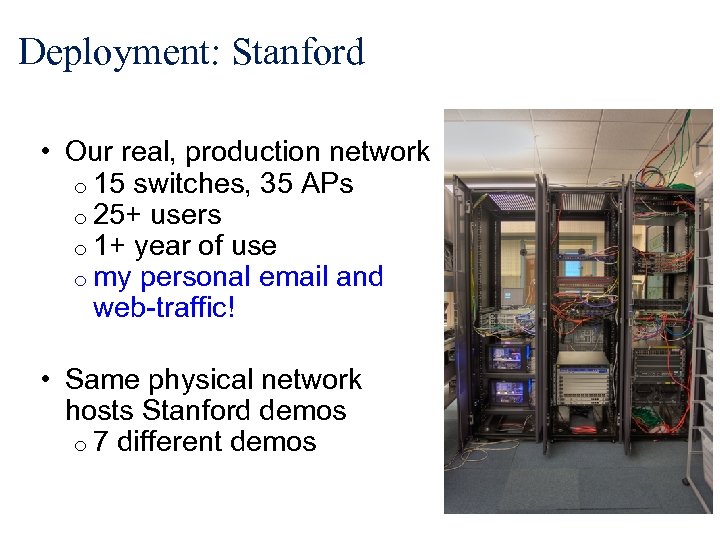

Deployment: Stanford

- Our real, production network
  - 15 switches, 35 APs
  - 25+ users
  - 1+ year of use
  - my personal email and web traffic!
- Same physical network hosts Stanford demos
  - 7 different demos

Demo Infrastructure with Slicing

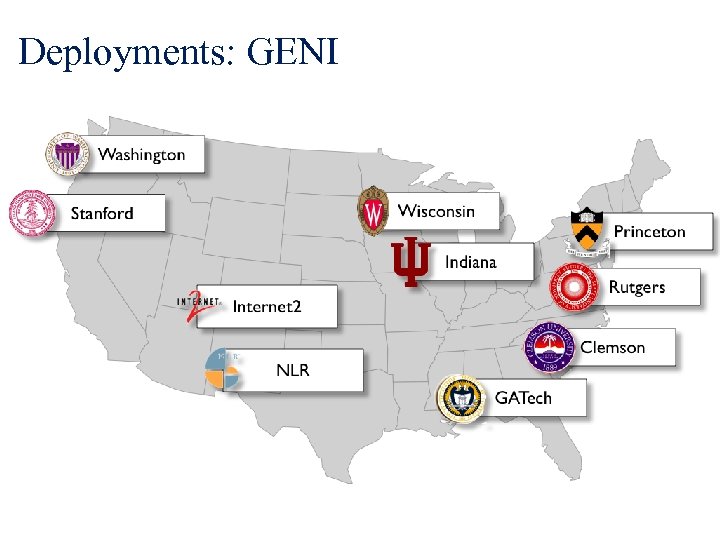

Deployments: GENI

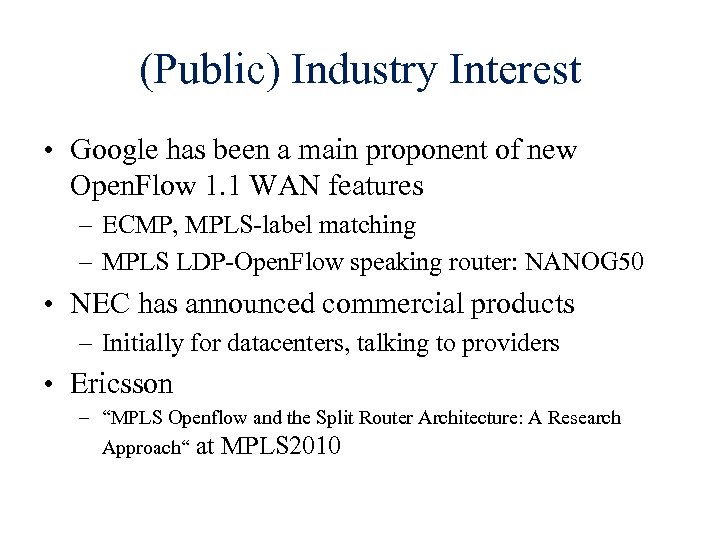

(Public) Industry Interest

- Google has been a main proponent of new OpenFlow 1.1 WAN features
  - ECMP, MPLS-label matching
  - MPLS LDP-OpenFlow speaking router: NANOG 50
- NEC has announced commercial products
  - Initially for datacenters, talking to providers
- Ericsson
  - “MPLS Openflow and the Split Router Architecture: A Research Approach” at MPLS 2010

OpenFlow in the WAN

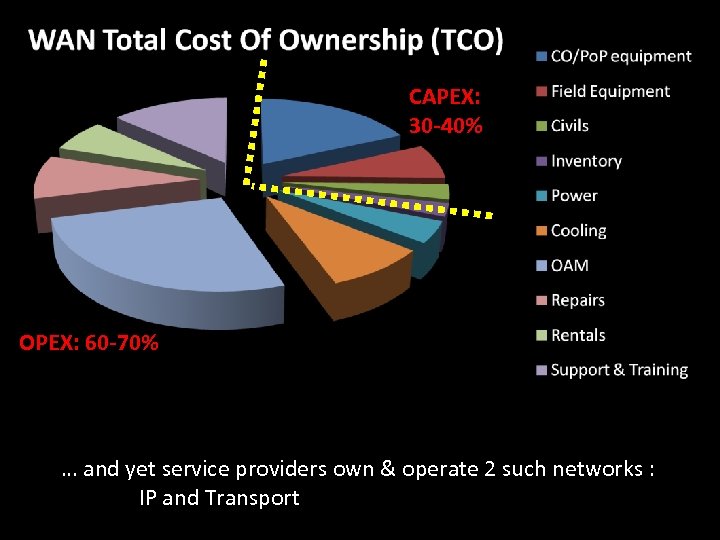

CAPEX: 30-40%, OPEX: 60-70%

… and yet service providers own and operate two such networks: IP and Transport

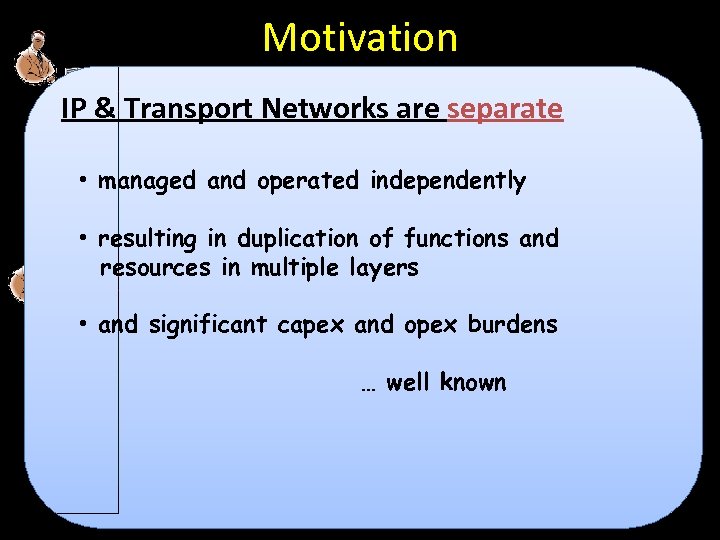

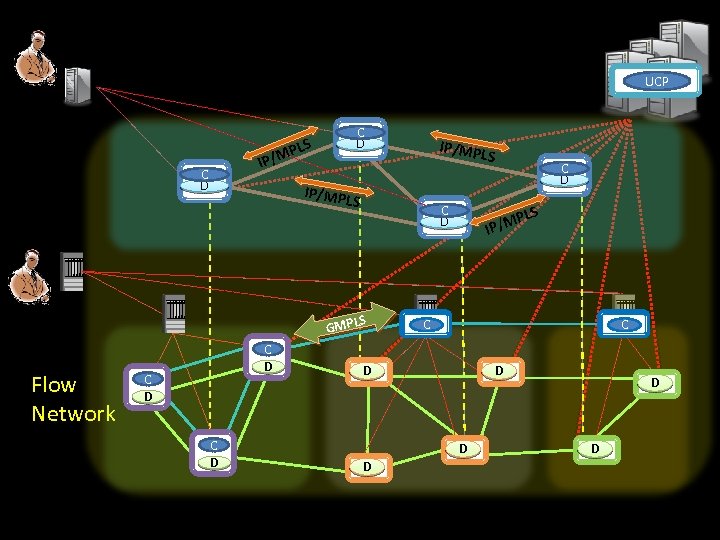

Motivation

IP & Transport Networks are separate

- managed and operated independently
- resulting in duplication of functions and resources in multiple layers
- and significant capex and opex burdens

… well known

[Diagram: IP/MPLS routers and GMPLS transport switches, each drawn with its own control (C) and data (D) plane]

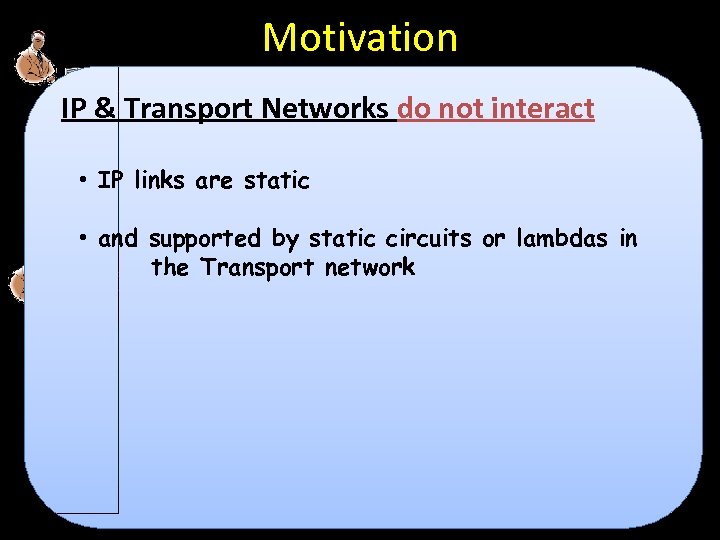

Motivation

IP & Transport Networks do not interact

- IP links are static
- and supported by static circuits or lambdas in the Transport network

[Diagram: IP/MPLS routers and GMPLS transport switches, each drawn with its own control (C) and data (D) plane]

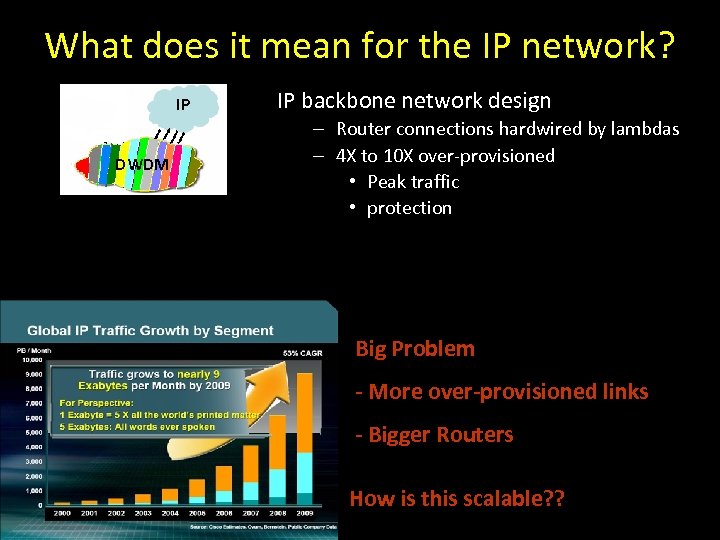

What does it mean for the IP network?

IP backbone network design (IP over DWDM):

- Router connections hardwired by lambdas
- 4X to 10X over-provisioned
  - Peak traffic
  - Protection

Big problem:

- More over-provisioned links
- Bigger routers

How is this scalable??

*April, 02

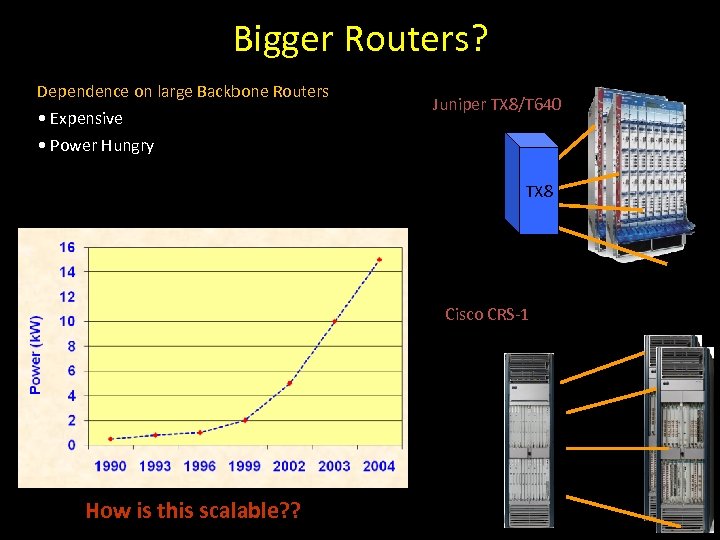

Bigger Routers?

Dependence on large backbone routers

- Expensive
- Power hungry

Pictured: Juniper TX8/T640, Cisco CRS-1

How is this scalable??

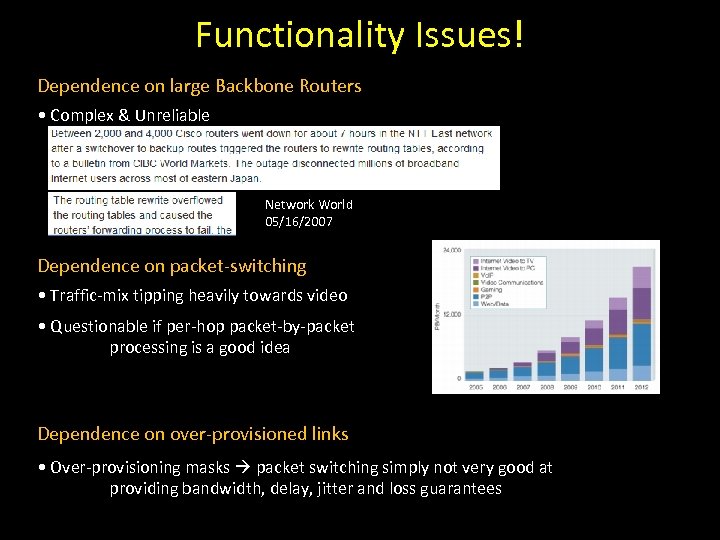

Functionality Issues!

- Dependence on large backbone routers
  - Complex & unreliable (Network World, 05/16/2007)
- Dependence on packet-switching
  - Traffic mix tipping heavily towards video
  - Questionable if per-hop packet-by-packet processing is a good idea
- Dependence on over-provisioned links
  - Over-provisioning masks that packet switching is simply not very good at providing bandwidth, delay, jitter and loss guarantees

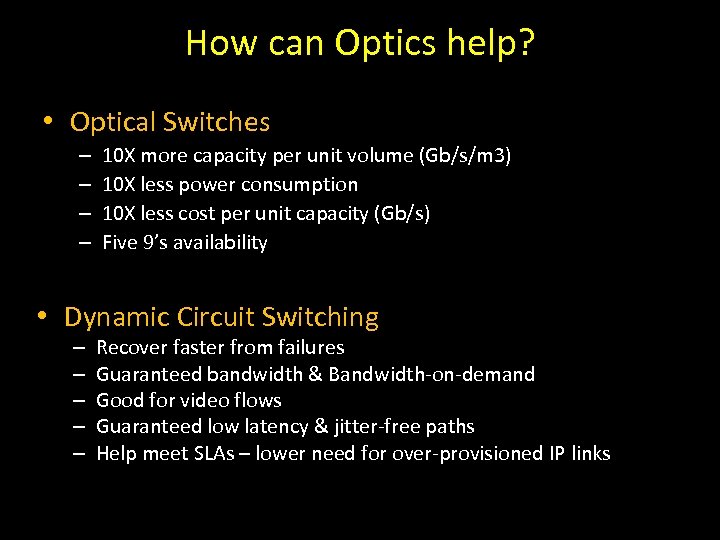

How can Optics help?

- Optical switches
  - 10X more capacity per unit volume (Gb/s/m³)
  - 10X less power consumption
  - 10X less cost per unit capacity (Gb/s)
  - Five 9’s availability
- Dynamic circuit switching
  - Recover faster from failures
  - Guaranteed bandwidth & bandwidth-on-demand
  - Good for video flows
  - Guaranteed low latency & jitter-free paths
  - Help meet SLAs, lower need for over-provisioned IP links

Motivation

IP & Transport Networks do not interact

- IP links are static
- and supported by static circuits or lambdas in the Transport network

[Diagram: IP/MPLS routers and GMPLS transport switches, each drawn with its own control (C) and data (D) plane]

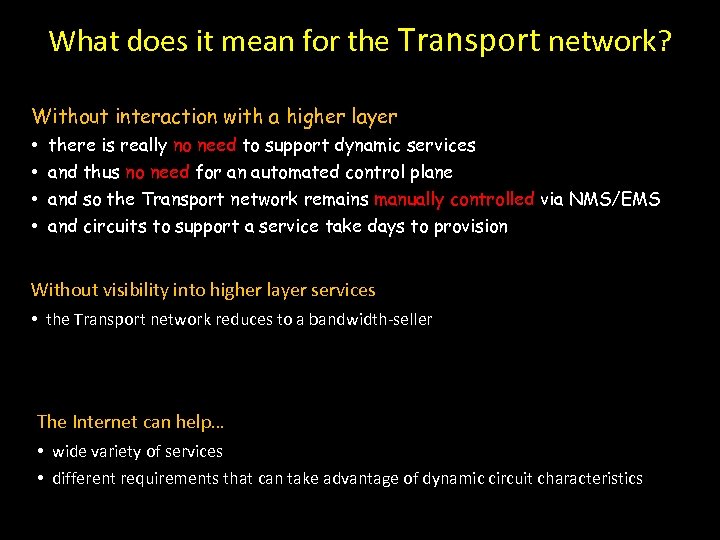

What does it mean for the Transport network?

Without interaction with a higher layer (IP over DWDM)

- there is really no need to support dynamic services
- and thus no need for an automated control plane
- and so the Transport network remains manually controlled via NMS/EMS
- and circuits to support a service take days to provision

Without visibility into higher layer services

- the Transport network reduces to a bandwidth-seller

The Internet can help…

- wide variety of services
- different requirements that can take advantage of dynamic circuit characteristics

*April, 02

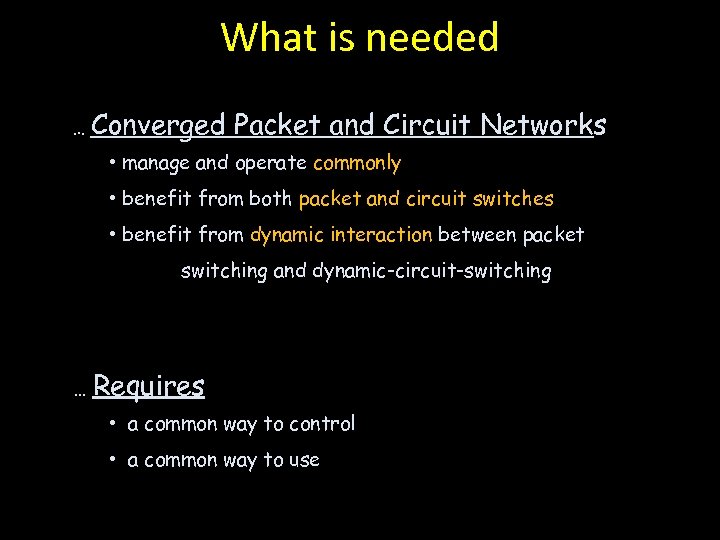

What is needed

… Converged Packet and Circuit Networks

- manage and operate commonly
- benefit from both packet and circuit switches
- benefit from dynamic interaction between packet switching and dynamic-circuit-switching

… Requires

- a common way to control
- a common way to use

But … Convergence is hard

- … mainly because the two networks have very different architecture, which makes integrated operation hard
- … and previous attempts at convergence have assumed that the networks remain the same
- … making what goes across them bloated and complicated and ultimately unusable

We believe true convergence will come about from architectural change!

[Diagram: a unified control plane (UCP) over a Flow Network made up of the IP/MPLS and GMPLS nodes, each drawn with control (C) and data (D) planes]

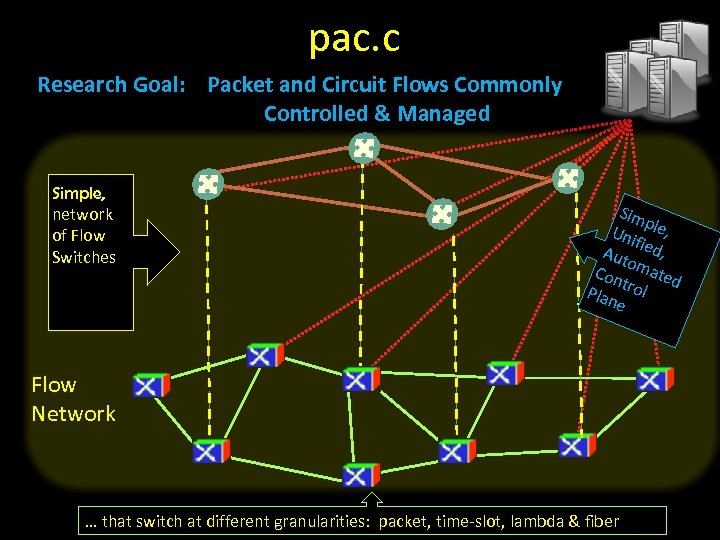

pac.c Research Goal: Packet and Circuit Flows Commonly Controlled & Managed

- Simple, Unified, Automated Control Plane
- Simple network of Flow Switches … that switch at different granularities: packet, time-slot, lambda & fiber

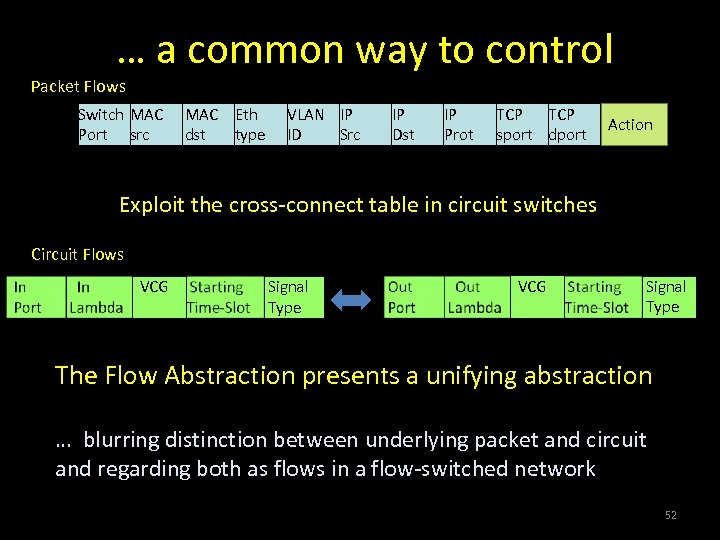

… a common way to control

- Packet flows: Switch Port, MAC src, MAC dst, Eth type, VLAN ID, IP Src, IP Dst, IP Prot, TCP sport, TCP dport, Action
- Circuit flows: exploit the cross-connect table in circuit switches (fields shown include VCG and Signal Type)

The Flow Abstraction presents a unifying abstraction … blurring the distinction between underlying packet and circuit, and regarding both as flows in a flow-switched network
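One way to picture the unifying abstraction is a single flow table whose entries carry either a packet match or a circuit match. The classes below are purely illustrative: `in_lambda`, `out_lambda`, and the reduced set of packet fields are assumptions, not the circuit fields from the slide, which did not survive extraction in full.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class PacketMatch:
    ip_dst: Optional[str] = None      # None means wildcard
    tcp_dport: Optional[int] = None


@dataclass(frozen=True)
class CircuitMatch:
    in_port: int
    in_lambda: str                    # hypothetical wavelength label


@dataclass(frozen=True)
class Flow:
    match: Union[PacketMatch, CircuitMatch]
    out_port: int
    out_lambda: Optional[str] = None  # only meaningful for circuit outputs


def granularity(flow: Flow) -> str:
    return "circuit" if isinstance(flow.match, CircuitMatch) else "packet"


# One table, one controller-facing shape, two switching granularities.
flow_table = [
    Flow(PacketMatch(ip_dst="5.6.7.8"), out_port=6),
    Flow(CircuitMatch(in_port=1, in_lambda="lambda1"), out_port=2, out_lambda="lambda2"),
]
print([granularity(f) for f in flow_table])  # ['packet', 'circuit']
```

The design point is that the controller handles both kinds of entry through the same interface, so an application can reason about packet and circuit flows together.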

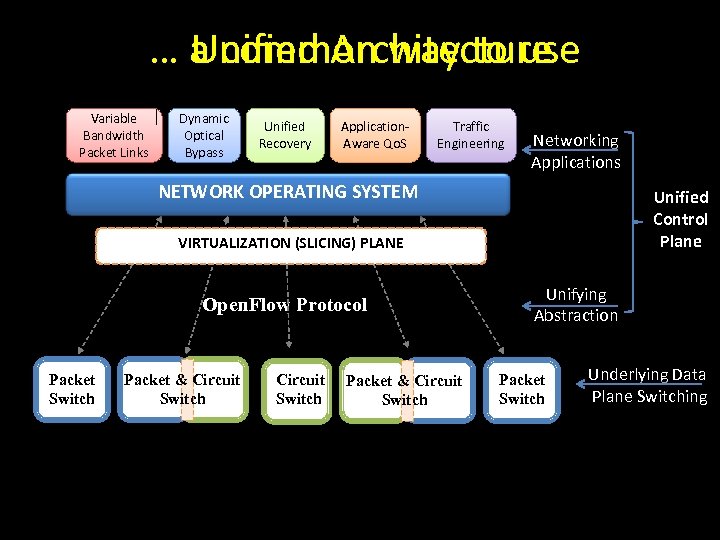

… a common way to use

Unified Architecture, top to bottom:

- Networking applications: Variable Bandwidth Packet Links, Dynamic Optical Bypass, Unified Recovery, Application-Aware QoS, Traffic Engineering
- Network Operating System (unified control plane)
- Virtualization (slicing) plane
- OpenFlow Protocol (unifying abstraction)
- Packet switches and packet & circuit switches (underlying data plane switching)

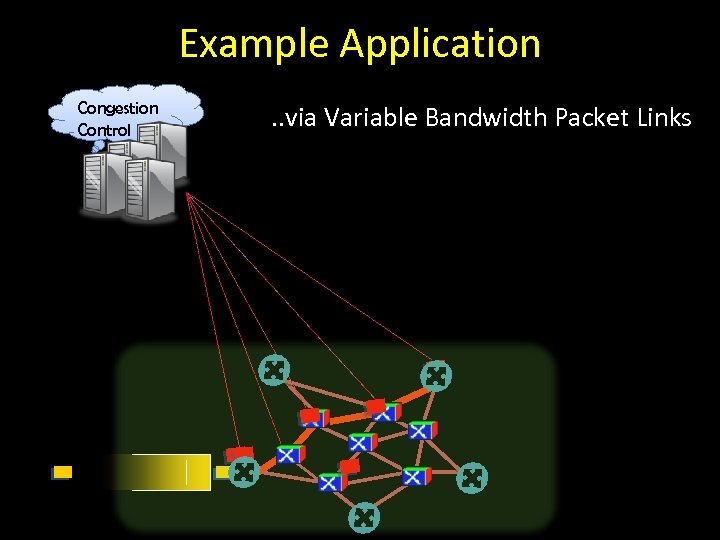

Example Application: Congestion Control … via Variable Bandwidth Packet Links

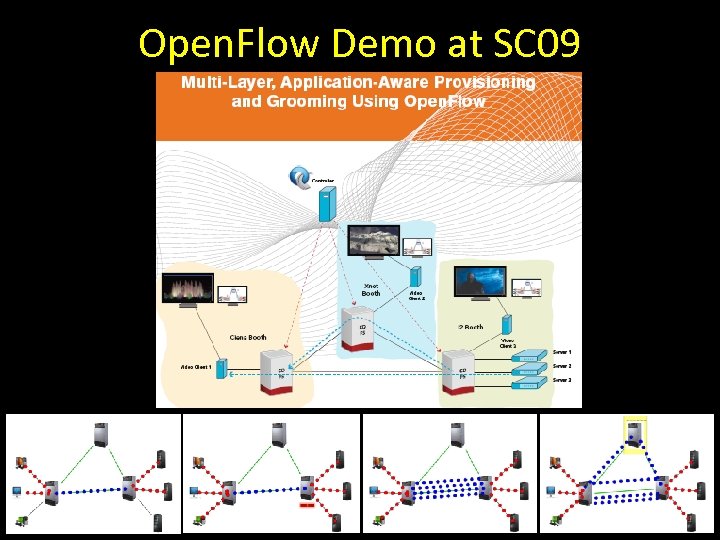

OpenFlow Demo at SC09

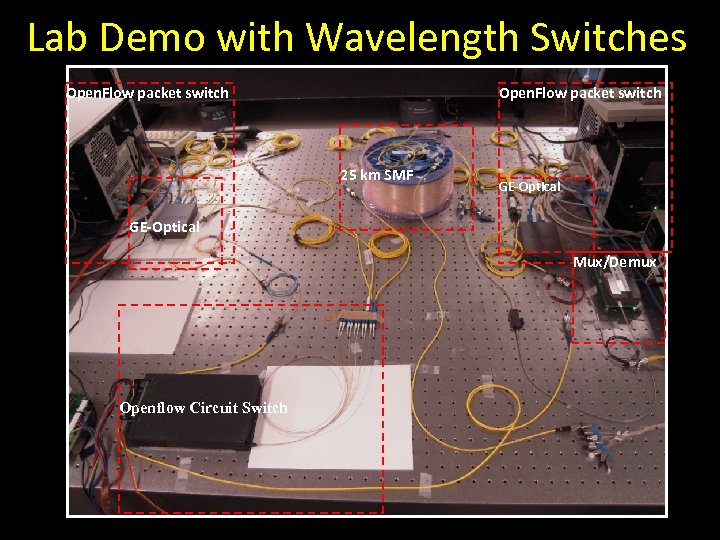

Lab Demo with Wavelength Switches

- OpenFlow Controller, speaking the OpenFlow Protocol
- NetFPGA-based OpenFlow packet switch (NF1, NF2) with GE links and E-O / O-E conversion
- WSS-based OpenFlow circuit switch: 1×9 Wavelength Selective Switch (WSS), AWG, 25 km SMF, taps to OSA
- Wavelengths: λ1 1553.3 nm, λ2 1554.1 nm; GE to DWDM SFP convertor
- Video server: 192.168.3.10; video clients: 192.168.3.12, 192.168.3.15

Lab Demo with Wavelength Switches

[Photo labels: OpenFlow packet switch, 25 km SMF, GE-Optical Mux/Demux, OpenFlow circuit switch]

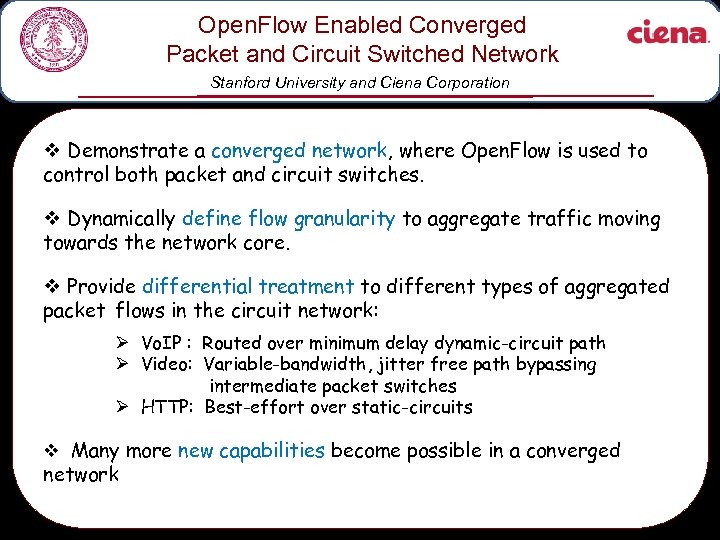

OpenFlow Enabled Converged Packet and Circuit Switched Network

Stanford University and Ciena Corporation

- Demonstrate a converged network, where OpenFlow is used to control both packet and circuit switches.
- Dynamically define flow granularity to aggregate traffic moving towards the network core.
- Provide differential treatment to different types of aggregated packet flows in the circuit network:
  - VoIP: routed over minimum-delay dynamic-circuit path
  - Video: variable-bandwidth, jitter-free path bypassing intermediate packet switches
  - HTTP: best-effort over static circuits
- Many more new capabilities become possible in a converged network

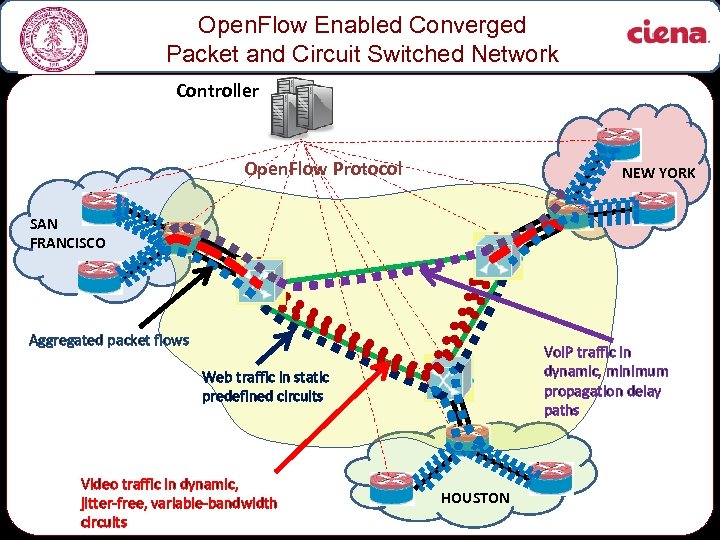

OpenFlow Enabled Converged Packet and Circuit Switched Network

[Diagram: a controller speaks the OpenFlow Protocol to switches in New York, San Francisco, and Houston, carrying aggregated packet flows]

- VoIP traffic in dynamic, minimum propagation delay paths
- Web traffic in static predefined circuits
- Video traffic in dynamic, jitter-free, variable-bandwidth circuits
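The per-class treatment can be illustrated with a small sketch that picks a minimum-delay path for VoIP using Dijkstra's algorithm. The link delays below are invented numbers, not measurements from the demo, and the demo's controller may well have chosen paths differently.

```python
import heapq

# Hypothetical one-way link delays (ms) between the three demo sites.
DELAY_MS = {
    ("SAN FRANCISCO", "NEW YORK"): 50,
    ("SAN FRANCISCO", "HOUSTON"): 25,
    ("HOUSTON", "NEW YORK"): 22,
}

# Treatment per traffic class, as listed on the slides.
TREATMENT = {
    "voip": "dynamic circuit, minimum propagation delay",
    "video": "dynamic circuit, jitter-free, variable bandwidth",
    "http": "static predefined circuit, best effort",
}


def neighbours(node):
    """Yield (neighbour, delay) pairs; links are treated as bidirectional."""
    for (a, b), delay in DELAY_MS.items():
        if a == node:
            yield b, delay
        elif b == node:
            yield a, delay


def min_delay_path(src, dst):
    """Dijkstra over link delay; returns (total_delay, path) or None."""
    queue = [(0, src, [src])]
    seen = set()
    while queue:
        delay, node, path = heapq.heappop(queue)
        if node == dst:
            return delay, path
        if node in seen:
            continue
        seen.add(node)
        for nxt, d in neighbours(node):
            if nxt not in seen:
                heapq.heappush(queue, (delay + d, nxt, path + [nxt]))
    return None


print(TREATMENT["voip"], min_delay_path("SAN FRANCISCO", "NEW YORK"))
```

With these made-up delays the VoIP circuit is routed via Houston (47 ms) rather than over the direct 50 ms link.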

Demo Video

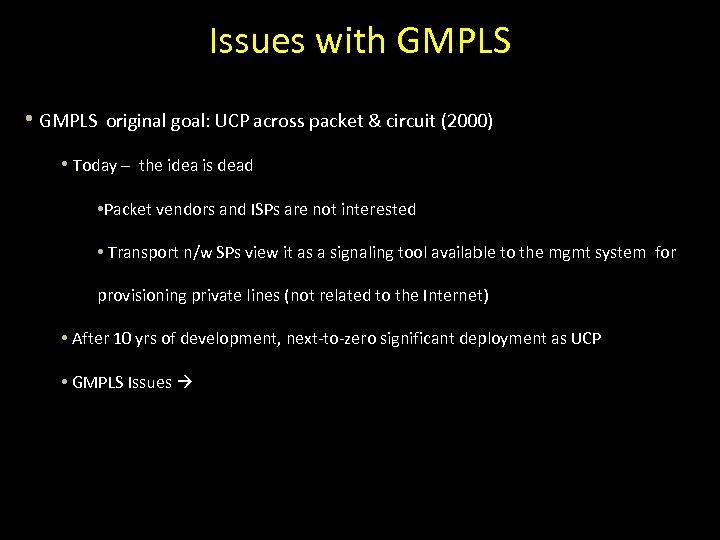

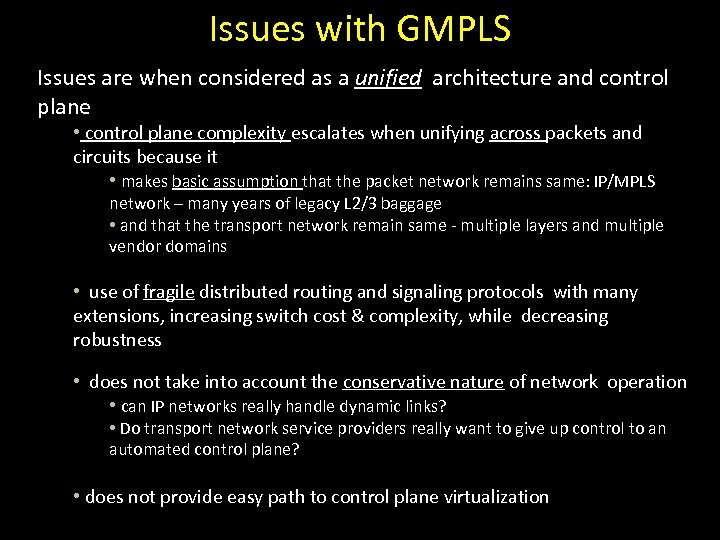

Issues with GMPLS

- GMPLS original goal: UCP across packet & circuit (2000)
- Today, the idea is dead
  - Packet vendors and ISPs are not interested
  - Transport network SPs view it as a signaling tool available to the management system for provisioning private lines (not related to the Internet)
  - After 10 years of development, next-to-zero significant deployment as UCP
- GMPLS issues

Issues with GMPLS Issues are when considered as a unified architecture and control plane • control plane complexity escalates when unifying across packets and circuits because it • makes basic assumption that the packet network remains same: IP/MPLS network – many years of legacy L 2/3 baggage • and that the transport network remain same - multiple layers and multiple vendor domains • use of fragile distributed routing and signaling protocols with many extensions, increasing switch cost & complexity, while decreasing robustness • does not take into account the conservative nature of network operation • can IP networks really handle dynamic links? • Do transport network service providers really want to give up control to an automated control plane? • does not provide easy path to control plane virtualization

Conclusions

- Current networks are complicated
- OpenFlow is an API
  - Interesting apps include network slicing
- Nation-wide academic trials are underway
- OpenFlow has potential for service providers
  - Custom control for traffic engineering
  - Combined packet/circuit switched networks
- Thank you!

Backup

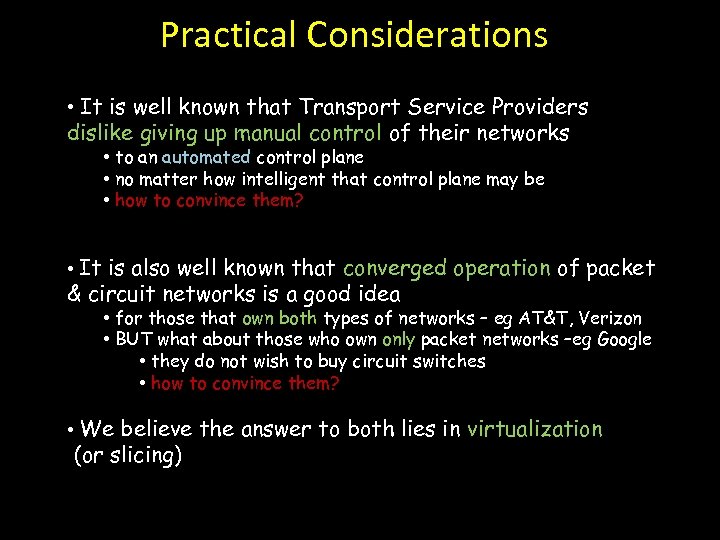

Practical Considerations

- It is well known that transport service providers dislike giving up manual control of their networks
  - to an automated control plane
  - no matter how intelligent that control plane may be
  - How to convince them?
- It is also well known that converged operation of packet and circuit networks is a good idea
  - for those that own both types of networks, e.g. AT&T, Verizon
  - BUT what about those who own only packet networks, e.g. Google?
  - They do not wish to buy circuit switches
  - How to convince them?
- We believe the answer to both lies in virtualization (or slicing)

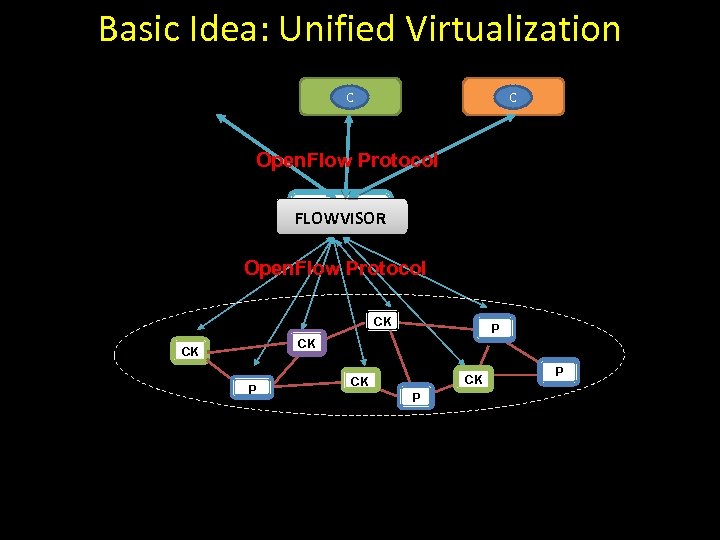

Basic Idea: Unified Virtualization

[Figure: three controllers (C) speak the OpenFlow protocol to a FlowVisor, which speaks the OpenFlow protocol to the circuit (CK) and packet (P) switches below it.]

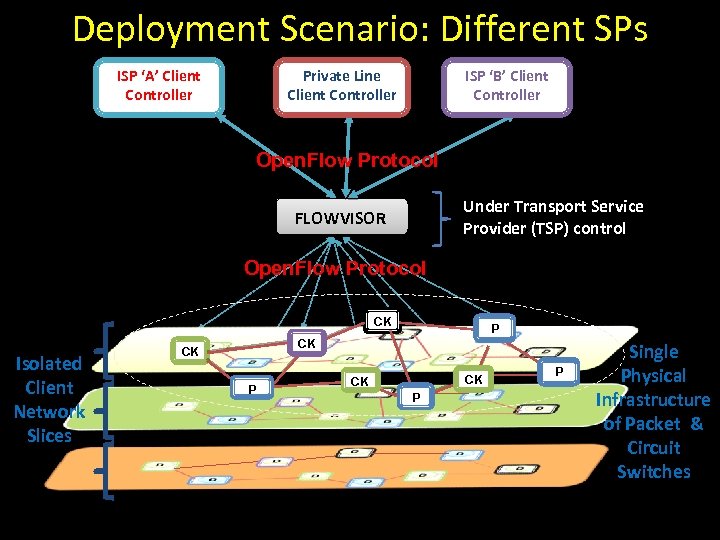

Deployment Scenario: Different SPs

[Figure: three client controllers (C), for ISP ‘A’, a private line client, and ISP ‘B’, speak the OpenFlow protocol to a FlowVisor under Transport Service Provider (TSP) control. The FlowVisor speaks the OpenFlow protocol to a single physical infrastructure of packet (P) and circuit (CK) switches, divided into isolated client network slices.]

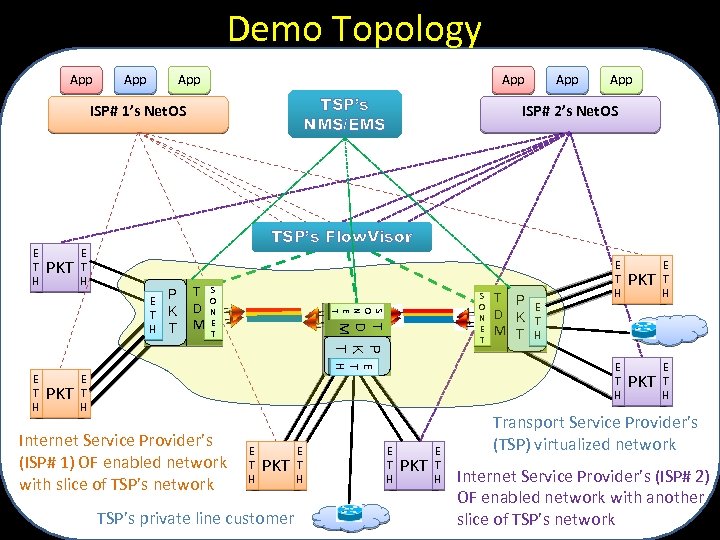

Demo Topology

[Figure: switches and ports are labeled ETH, PKT, TDM, and SONET. Labeled regions:]

- ISP#1’s NetOS with apps: Internet Service Provider’s (ISP#1) OF-enabled network with a slice of the TSP’s network
- ISP#2’s NetOS with apps: Internet Service Provider’s (ISP#2) OF-enabled network with another slice of the TSP’s network
- TSP’s NMS/EMS and TSP’s FlowVisor: Transport Service Provider’s (TSP) virtualized network
- TSP’s private line customer

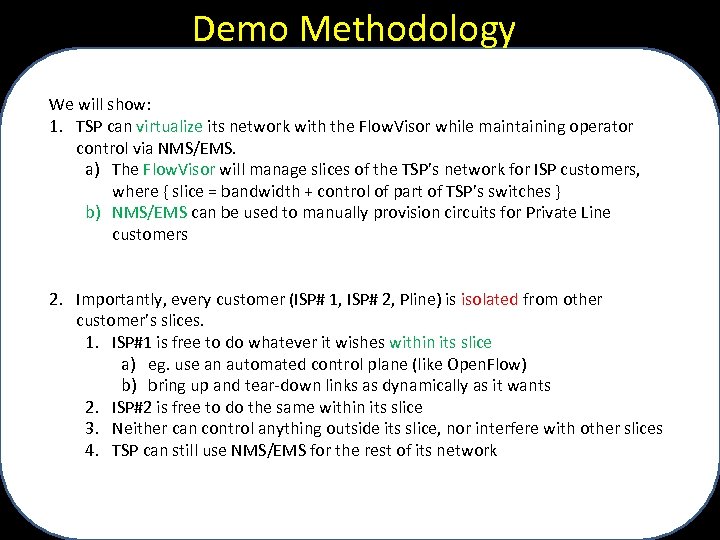

Demo Methodology

We will show:

1. The TSP can virtualize its network with the FlowVisor while maintaining operator control via NMS/EMS.
   a) The FlowVisor will manage slices of the TSP’s network for ISP customers, where { slice = bandwidth + control of part of the TSP’s switches }
   b) NMS/EMS can be used to manually provision circuits for private line customers
2. Importantly, every customer (ISP#1, ISP#2, private line) is isolated from other customers’ slices.
   1. ISP#1 is free to do whatever it wishes within its slice
      a) e.g. use an automated control plane (like OpenFlow)
      b) bring up and tear down links as dynamically as it wants
   2. ISP#2 is free to do the same within its slice
   3. Neither can control anything outside its slice, nor interfere with other slices
   4. The TSP can still use NMS/EMS for the rest of its network
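
The isolation property above (a slice is bandwidth plus control of part of the TSP’s switches, and no customer can touch anything outside its slice) can be sketched as a simple ownership check. This is an illustrative model only, not FlowVisor’s actual API or data model: `Slice`, `SliceTable`, and the `(switch_id, port)` representation are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Slice:
    """Hypothetical slice record: bandwidth plus a set of controllable ports."""
    owner: str
    bandwidth_mbps: int
    ports: set = field(default_factory=set)  # set of (switch_id, port) tuples


class SliceTable:
    """Tracks slices and rejects any overlap between them (assumed policy)."""

    def __init__(self):
        self._slices = {}

    def add(self, new_slice: Slice) -> None:
        # Isolation at admission time: no port may belong to two slices.
        for other in self._slices.values():
            overlap = new_slice.ports & other.ports
            if overlap:
                raise ValueError(
                    f"{new_slice.owner} overlaps {other.owner} on {sorted(overlap)}"
                )
        self._slices[new_slice.owner] = new_slice

    def allows(self, owner: str, switch_id: str, port: int) -> bool:
        # Isolation at run time: a controller may only act inside its own slice.
        s = self._slices.get(owner)
        return s is not None and (switch_id, port) in s.ports
```

A virtualization layer built this way would call `allows(...)` on every control message from a client controller and drop or reject anything that falls outside the sender’s slice; resources not assigned to any slice stay under the operator’s NMS/EMS.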

ISP#1’s Business Model

ISP#1 pays for a slice = { bandwidth + TSP switching resources }

1. Part of the bandwidth is for static links between its edge packet switches (as ISPs do today),
2. and some of it is for redirecting bandwidth between the edge switches (unlike current practice).
3. The sum of the static and redirected bandwidth is paid for up-front.
4. The TSP switching resources in the slice are needed by the ISP to enable the redirect capability.

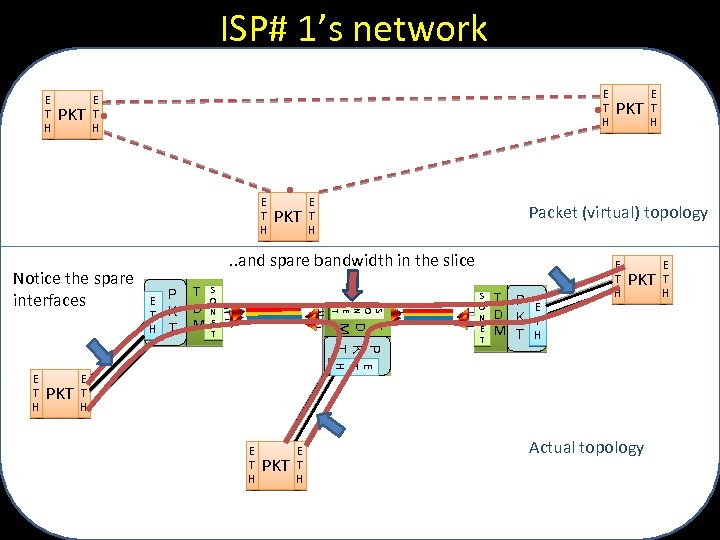

ISP#1’s network

[Figure: the packet (virtual) topology and the actual topology, with switches and ports labeled ETH, PKT, TDM, and SONET. Callouts: "Notice the spare interfaces" and ".. and spare bandwidth in the slice".]

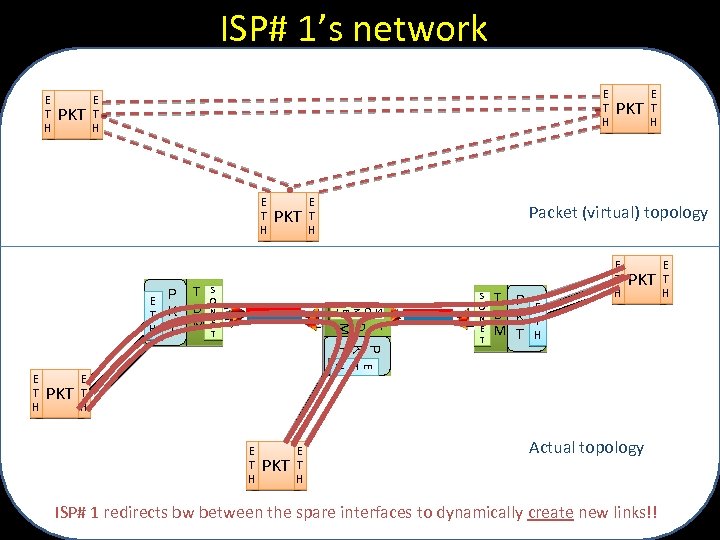

ISP#1’s network

[Figure: the packet (virtual) topology and the actual topology, with switches and ports labeled ETH, PKT, TDM, and SONET. Callout: "ISP#1 redirects bandwidth between the spare interfaces to dynamically create new links!"]

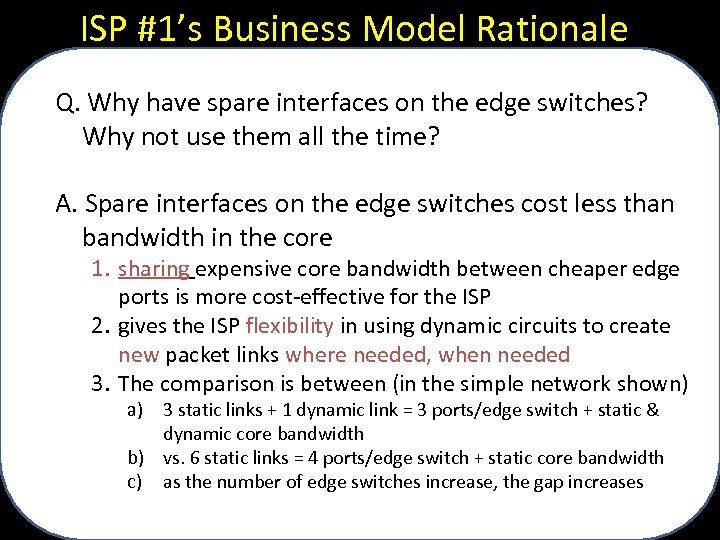

ISP#1’s Business Model Rationale

Q. Why have spare interfaces on the edge switches? Why not use them all the time?

A. Spare interfaces on the edge switches cost less than bandwidth in the core.

1. Sharing expensive core bandwidth between cheaper edge ports is more cost-effective for the ISP.
2. It gives the ISP flexibility in using dynamic circuits to create new packet links where needed, when needed.
3. The comparison (in the simple network shown) is between
   a) 3 static links + 1 dynamic link = 3 ports/edge switch + static and dynamic core bandwidth
   b) vs. 6 static links = 4 ports/edge switch + static core bandwidth
   c) As the number of edge switches increases, the gap increases.
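
The claim that the gap grows with the number of edge switches can be illustrated by counting core links. This sketch rests on two assumptions that are not stated on the slide: that the 6-static-link case is a full mesh over 4 edge switches, and that the 3-static-link case is a minimally connected (tree) topology with n - 1 links. The function names are hypothetical, and per-switch port counts are deliberately left out.

```python
def full_mesh_links(n_edge: int) -> int:
    """Static links needed to connect every pair of edge switches."""
    return n_edge * (n_edge - 1) // 2


def tree_plus_dynamic_links(n_edge: int, n_dynamic: int = 1) -> int:
    """Static links of a minimally connected topology plus shared dynamic links."""
    return (n_edge - 1) + n_dynamic


if __name__ == "__main__":
    for n in (4, 8, 16):
        mesh = full_mesh_links(n)
        shared = tree_plus_dynamic_links(n)
        print(f"{n} edge switches: {mesh} static links vs {shared} static+dynamic")
```

Under these assumptions the all-static design grows quadratically in core links while the static-plus-dynamic design grows linearly, which is one way to read item 3c.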

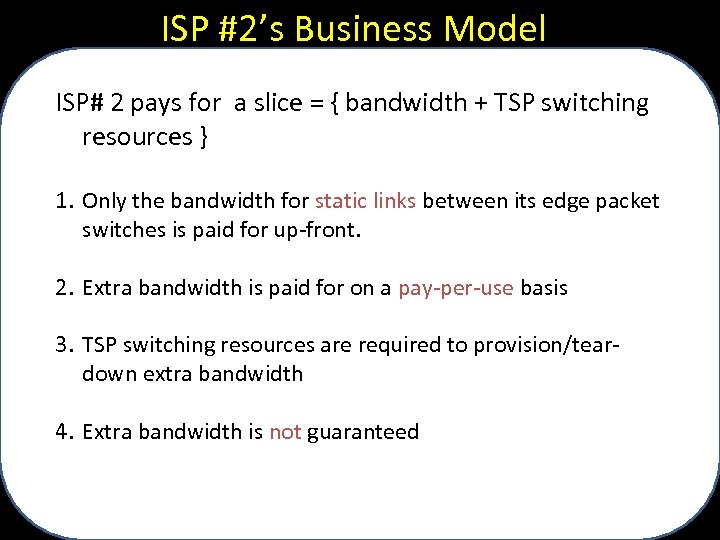

ISP#2’s Business Model

ISP#2 pays for a slice = { bandwidth + TSP switching resources }

1. Only the bandwidth for static links between its edge packet switches is paid for up-front.
2. Extra bandwidth is paid for on a pay-per-use basis.
3. TSP switching resources are required to provision and tear down extra bandwidth.
4. Extra bandwidth is not guaranteed.

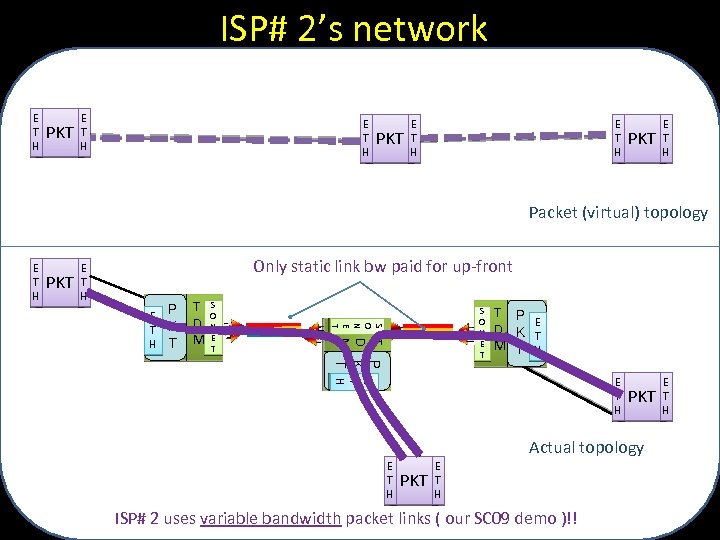

ISP#2’s network

[Figure: the packet (virtual) topology and the actual topology, with switches and ports labeled ETH, PKT, TDM, and SONET. Callouts: "Only static link bandwidth paid for up-front" and "ISP#2 uses variable-bandwidth packet links (our SC09 demo)!"]

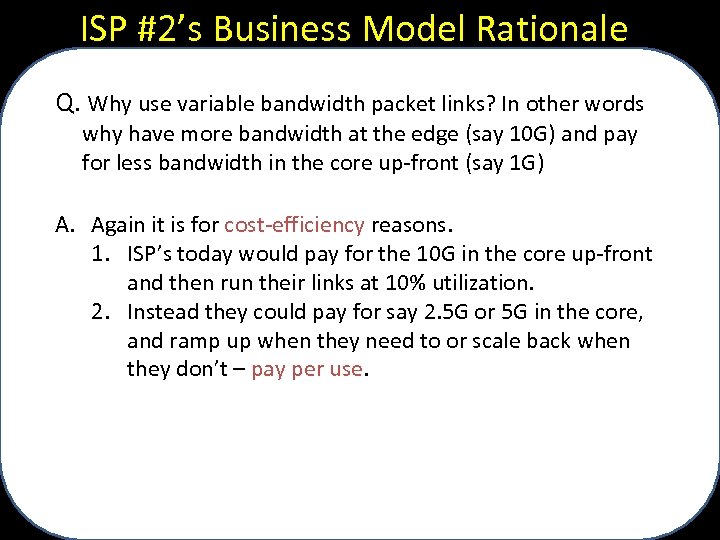

ISP#2’s Business Model Rationale

Q. Why use variable-bandwidth packet links? In other words, why have more bandwidth at the edge (say 10 G) and pay for less bandwidth in the core up-front (say 1 G)?

A. Again, it is for cost-efficiency reasons.

1. ISPs today would pay for the 10 G in the core up-front and then run their links at 10% utilization.
2. Instead they could pay for, say, 2.5 G or 5 G in the core, and ramp up when they need to or scale back when they don’t: pay per use.
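
The cost argument can be made concrete with a small worked comparison. Everything numeric here apart from the 10 G and 2.5 G figures is made up for illustration: the hourly demand profile, the unit prices, and the premium on burst bandwidth are assumptions, and the function names are hypothetical.

```python
def upfront_cost(peak_gbps: float, hours: int, price_per_gbps_hour: float) -> float:
    """Cost of reserving peak bandwidth in the core for the whole period."""
    return peak_gbps * hours * price_per_gbps_hour


def pay_per_use_cost(base_gbps: float, demand_gbps: list,
                     base_price: float, burst_price: float) -> float:
    """Cost of a smaller up-front base plus per-hour charges above the base."""
    cost = base_gbps * len(demand_gbps) * base_price
    for demand in demand_gbps:
        cost += max(0.0, demand - base_gbps) * burst_price
    return cost


if __name__ == "__main__":
    # Assumed day: 22 quiet hours at 1 G (10% of 10 G) and 2 busy hours at 10 G.
    demand = [1.0] * 22 + [10.0] * 2
    print(upfront_cost(10.0, len(demand), price_per_gbps_hour=1.0))
    print(pay_per_use_cost(2.5, demand, base_price=1.0, burst_price=2.0))
```

With this assumed profile the pay-per-use plan is cheaper even though burst bandwidth is priced at twice the base rate. The result depends entirely on how often demand exceeds the base, and the sketch ignores the slide’s caveat that extra bandwidth is not guaranteed.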
