a3043ac19dd1e91f1af9443f9abc6948.ppt

- Number of slides: 20

OPC Koustenis, Breiter

General Comments
• Surrogate for Control Group
• Benchmark for Minimally Acceptable Values
• Not a Control Group
• Driven by Historical Data
• Requires Pooling of Different Investigations

(continued)
• Periodic re-evaluation and updating of the OPCs
• Policy not yet formalized
  – Specific guidance on the methodology to derive an OPC is urgently needed

Bayesian Issues in Developing OPC
• Objective means?
  – Derived from (conditionally?) exchangeable studies
  – Non-informative hyper-prior
• For new Bayesian trials, should the OPC be expressed as a (presumably tight) posterior distribution rather than a fixed number?
  – e.g. logit(opc) ~ normal(?, ?), etc.

Does OPC Preempt an Informative Prior?
• An “objective” informative prior would be derived from some of the same trials used to set the OPC.
• This could be dealt with by computing the joint posterior distribution of opc and pnew. But this would be extremely burdensome to implement for anything but an “in-house” OPC (Breiter).
• A non-informative prior might be “least burdensome.”

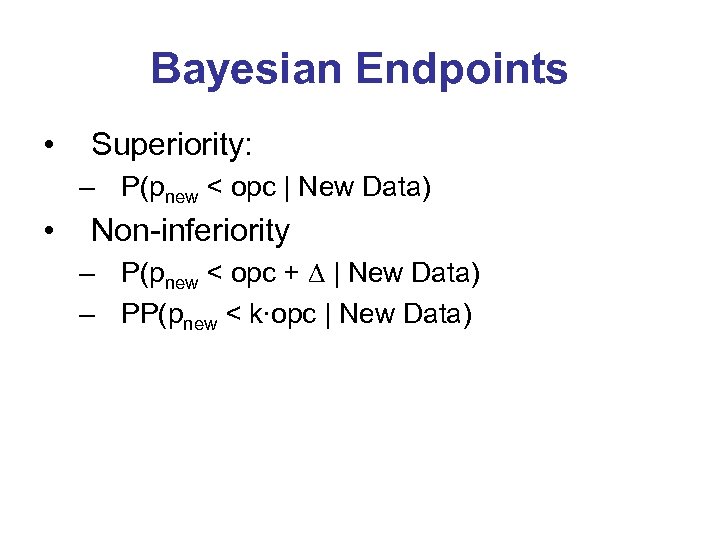

Bayesian Endpoints
• Superiority:
  – P(pnew < opc | New Data)
• Non-inferiority:
  – P(pnew < opc + Δ | New Data)
  – P(pnew < k∙opc | New Data)
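The three endpoint probabilities above can be approximated by simulation once a posterior for pnew is available. The following is a minimal sketch, assuming a binomial likelihood with a flat Beta(1, 1) prior, a fixed OPC, and lower rates being better (as with a complication rate). The function name and all numeric values (`opc`, `margin`, `k`, `events`, `n`) are hypothetical and chosen only for illustration.

```python
import random

def posterior_prob_below(events, n, threshold, draws=20000, seed=0, a=1.0, b=1.0):
    """Monte Carlo estimate of P(p_new < threshold | data).

    Assumes events ~ Binomial(n, p_new) with a Beta(a, b) prior, so the
    posterior is Beta(a + events, b + n - events).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(draws):
        p = rng.betavariate(a + events, b + n - events)
        if p < threshold:
            hits += 1
    return hits / draws

# Hypothetical inputs, not taken from the slides.
opc = 0.10           # fixed OPC for a complication rate
margin = 0.03        # additive non-inferiority margin
k = 1.5              # multiplicative non-inferiority factor
events, n = 12, 200  # complications observed in the new trial

superiority = posterior_prob_below(events, n, opc)
noninferiority_additive = posterior_prob_below(events, n, opc + margin)
noninferiority_multiplicative = posterior_prob_below(events, n, k * opc)

print(superiority, noninferiority_additive, noninferiority_multiplicative)
```

Because the same seed is reused, the three estimates are computed on identical posterior draws, so a looser threshold can never give a smaller probability.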

OPC as an Agreed-upon Standard
• Historical data + ???
• Are evaluated to produce an agreed-upon OPC as a fixed number with no uncertainty.
• Can I use some of these same data to develop an informative prior?
• Probably yes, but it needs work. The issue is what claim will be made for a successful device trial.

The Prior Depends on the Claim
• Claim: “The complication rate (say) of the new device is not larger than (say) the median of comparable devices + Δ.”
  – If the new device is exchangeable with a subset of comparable devices, then the “correct” prior for the new device is the joint distribution of (pnew, opc) prior to the new data.
  – If the new device is not exchangeable with any comparable devices, then a non-informative prior should be used.

(continued)
• Claim: “The complication rate of the new device is not greater than a given number (opc + Δ).”
  – The prior can be based on device trials that are considered exchangeable with the planned trial (e.g. “in-house”).

Logic Chopping?
• Not necessarily. Consider:
  – “The average male U of IA professor is taller than the average male professor.” vs.
  – “The average male U of IA professor is taller than 5’11”.”
• How you or I arrived at the 5’11” is not relevant to the posterior probability.

But Perhaps That’s a Bit Disingenuous
• The regulatory goal is clearly to set an OPC that will not permit the reduction of “average” safety or efficacy of a class of devices.
• Of necessity, it has to be related to an estimate of some sort of “average”.
• So a claim of superiority or non-inferiority to an opc is clearly made, at least indirectly, with reference to a “control”.

Would It Make Sense to Express the OPC as a PD (Predictive Distribution)?
• If the OPC is derived from a hierarchical analysis of exchangeable device trials, it would be possible to compute the predictive distribution of xnew.
• Could inferiority (superiority) be defined as the observed xnew being below the 5th (above the 95th) percentile of the predictive distribution?
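The percentile rule proposed above can be sketched by simulating the predictive distribution of xnew. This is only an illustration under strong assumptions: a Beta(alpha, beta) distribution stands in for the hierarchical posterior of the new device's rate, xnew is a binomial count out of `n_new` subjects, and the values of `alpha`, `beta`, and `n_new` are hypothetical. A real analysis would take the draws of the rate from the fitted hierarchical model.

```python
import math
import random

def predictive_draws(n_new, alpha, beta, draws=5000, seed=1):
    """Simulate x_new: draw a rate from Beta(alpha, beta), then a binomial count."""
    rng = random.Random(seed)
    out = []
    for _ in range(draws):
        p = rng.betavariate(alpha, beta)
        x = sum(1 for _ in range(n_new) if rng.random() < p)
        out.append(x)
    return sorted(out)

def percentile(sorted_vals, q):
    """Smallest value with at least a fraction q of the draws at or below it."""
    idx = max(0, min(len(sorted_vals) - 1, math.ceil(q * len(sorted_vals)) - 1))
    return sorted_vals[idx]

def classify(x_obs, lo, hi):
    """Place an observed count relative to the 5th and 95th predictive percentiles."""
    if x_obs < lo:
        return "below 5th percentile"
    if x_obs > hi:
        return "above 95th percentile"
    return "within the central 90% band"

# Hypothetical stand-in for the hierarchical posterior and the new trial size.
xs = predictive_draws(n_new=150, alpha=20.0, beta=180.0)
lo, hi = percentile(xs, 0.05), percentile(xs, 0.95)
print(lo, hi, classify(15, lo, hi))
```

Which tail counts as inferiority depends on whether xnew counts successes or complications, so the function only reports the position of the observation.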

Poolability Roseann White

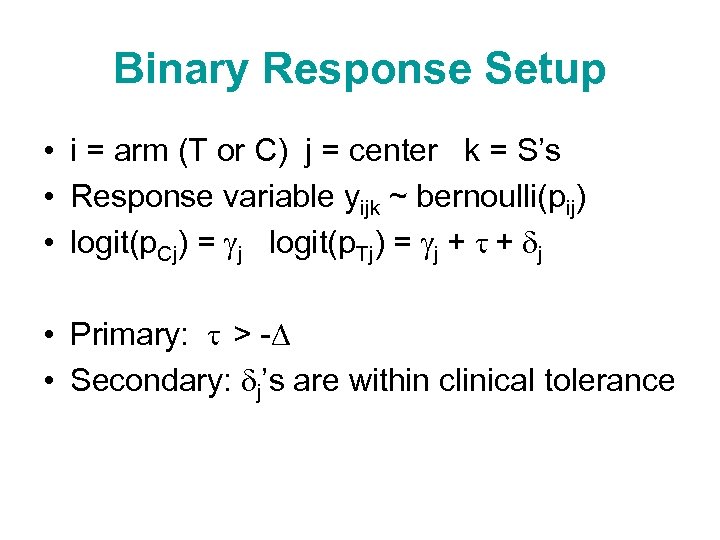

Binary Response Setup
• i = arm (T or C), j = center, k = subject
• Response variable y_ijk ~ Bernoulli(p_ij)
• logit(p_Cj) = γ_j, logit(p_Tj) = γ_j + τ + δ_j
• Primary: τ > −Δ
• Secondary: the δ_j’s are within clinical tolerance
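To make the setup concrete, the sketch below simulates one multi-center trial from this model: a center effect on the logit scale for the control arm, plus an overall treatment effect and a center-by-treatment deviation for the treatment arm. The function names and every parameter value are hypothetical.

```python
import math
import random

def inv_logit(x):
    """Inverse of the logit link."""
    return 1.0 / (1.0 + math.exp(-x))

def simulate_trial(center_effects, treatment_effect, deviations, n_per_arm, seed=2):
    """Return one (arm, center, successes, n) row per arm and center.

    Control arm:   logit(p) = center effect
    Treatment arm: logit(p) = center effect + treatment effect + deviation
    """
    rng = random.Random(seed)
    rows = []
    for j, (g, d) in enumerate(zip(center_effects, deviations)):
        p_control = inv_logit(g)
        p_treatment = inv_logit(g + treatment_effect + d)
        for arm, p in (("C", p_control), ("T", p_treatment)):
            successes = sum(1 for _ in range(n_per_arm) if rng.random() < p)
            rows.append((arm, j, successes, n_per_arm))
    return rows

# Hypothetical values: three centers, a small positive treatment effect.
rows = simulate_trial(
    center_effects=[0.8, 1.0, 0.6],
    treatment_effect=0.1,
    deviations=[-0.2, 0.0, 0.2],
    n_per_arm=50,
)
for row in rows:
    print(row)
```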

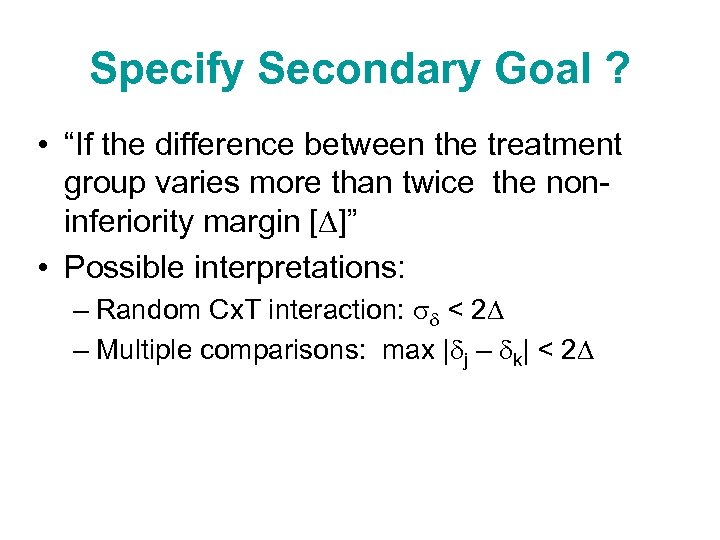

Specify Secondary Goal?
• “If the difference between the treatment group varies more than twice the noninferiority margin [Δ]”
• Possible interpretations:
  – Random C×T (center-by-treatment) interaction: σ_δ < 2Δ
  – Multiple comparisons: max |δ_j − δ_k| < 2Δ
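The two interpretations are not equivalent, which a small numerical check makes visible. The sketch below applies both rules to a set of center-by-treatment deviations. It is a plug-in illustration only: it uses the sample standard deviation of given point values rather than a model-based estimate of the random-effect standard deviation, and the deviations and margin are hypothetical.

```python
import statistics

def sd_criterion(deviations, margin):
    """Interpretation 1: spread of the center-by-treatment deviations below twice the margin."""
    return statistics.stdev(deviations) < 2 * margin

def pairwise_criterion(deviations, margin):
    """Interpretation 2: largest pairwise difference (max minus min) below twice the margin."""
    return max(deviations) - min(deviations) < 2 * margin

# Hypothetical deviations on the logit scale and a hypothetical margin.
deviations = [-0.5, 0.0, 0.1, 0.6]
margin = 0.3

print(sd_criterion(deviations, margin))        # sd is about 0.45, below 0.6
print(pairwise_criterion(deviations, margin))  # range is 1.1, not below 0.6
```

Here the standard-deviation rule passes while the pairwise rule fails, so the choice of interpretation can change the conclusion.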

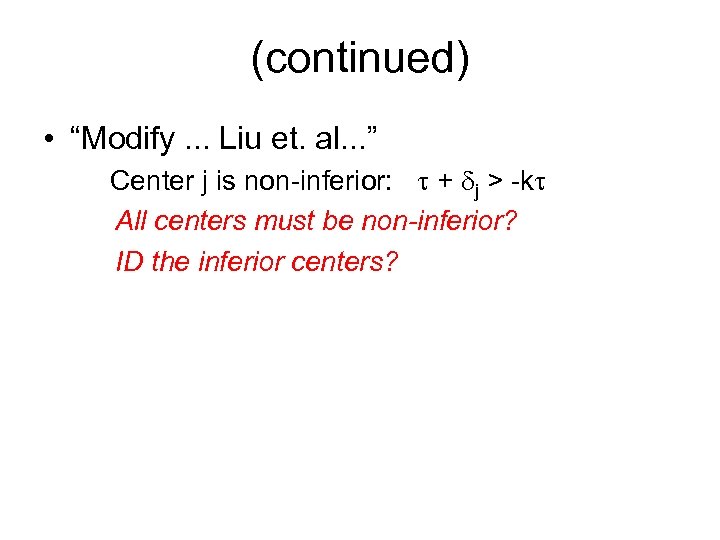

(continued)
• “Modify ... Liu et al. ...”
• Center j is non-inferior: τ + δ_j > −kτ
• All centers must be non-inferior?
• Identify the inferior centers?

Why Bootstrap Resample?
• To increase the n of subjects in clusters? Probably invalid.
• To generate a better approximation of the null sampling distribution? OK, but what are the details? Do you combine the two arms and resample?
• Why not use random-effects GLIMMIX if you want to stick to frequentist methods?

Bayesian Analysis
• Ad-hoc pooling is not necessary.
• Can produce the posterior distribution of any function of the parameters.
• Can use non-informative hyper-priors, so is “objective” = data driven.
• Will have the best frequentist operating characteristics (which could be calculated by simulation).

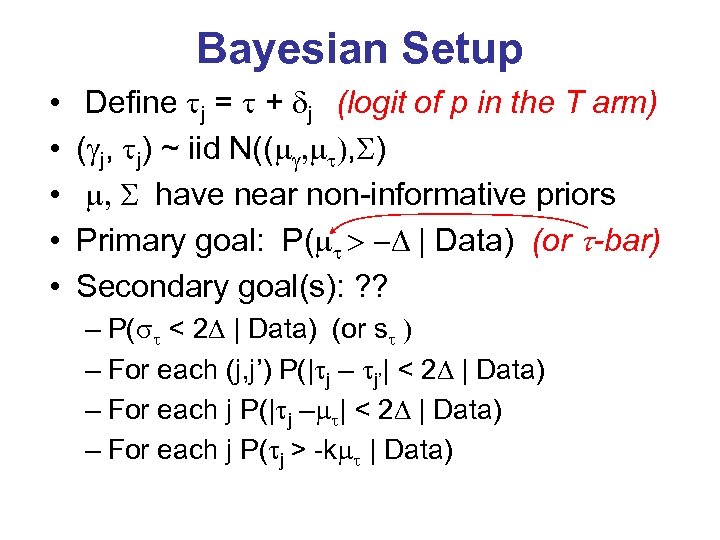

Bayesian Setup
• Define τ_j = τ + δ_j (treatment effect in center j on the logit scale, so logit(p_Tj) = γ_j + τ_j)
• (γ_j, τ_j) ~ iid N((μ_γ, μ_τ), Σ)
• μ, Σ have near non-informative priors
• Primary goal: P(μ_τ > −Δ | Data) (or τ-bar)
• Secondary goal(s): ??
  – P(σ_τ < 2Δ | Data) (or s_τ)
  – For each (j, j’): P(|τ_j − τ_j’| < 2Δ | Data)
  – For each j: P(|τ_j − μ_τ| < 2Δ | Data)
  – For each j: P(τ_j > −kμ_τ | Data)
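Each goal listed above is the posterior probability of an event, so once posterior draws exist it is just the fraction of draws in which the event holds. The sketch below shows that bookkeeping only. It does not fit the hierarchical model: the "posterior draws" are random numbers from a hypothetical generator standing in for MCMC output, and the margin is also hypothetical.

```python
import random

def stand_in_posterior_draws(n_draws=4000, n_centers=5, seed=3):
    """Placeholder for MCMC output (NOT a fitted model).

    Each draw is (mean treatment effect, sd of center effects, per-center effects),
    all on the logit scale.
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        mean_effect = rng.gauss(0.1, 0.15)
        sd_effect = abs(rng.gauss(0.2, 0.05))
        center_effects = [rng.gauss(mean_effect, sd_effect) for _ in range(n_centers)]
        draws.append((mean_effect, sd_effect, center_effects))
    return draws

def prob(draws, event):
    """Posterior probability of an event = share of draws where it is true."""
    return sum(1 for d in draws if event(d)) / len(draws)

margin = 0.3  # hypothetical non-inferiority margin on the logit scale
n_centers = 5
draws = stand_in_posterior_draws(n_centers=n_centers)

# Primary goal: mean treatment effect above minus the margin.
primary = prob(draws, lambda d: d[0] > -margin)
# Secondary goal, first form: between-center sd below twice the margin.
sd_goal = prob(draws, lambda d: d[1] < 2 * margin)
# Secondary goal, per center: center effect within twice the margin of the mean.
per_center = [
    prob(draws, lambda d, j=j: abs(d[2][j] - d[0]) < 2 * margin)
    for j in range(n_centers)
]

print(primary, sd_goal, per_center)
```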

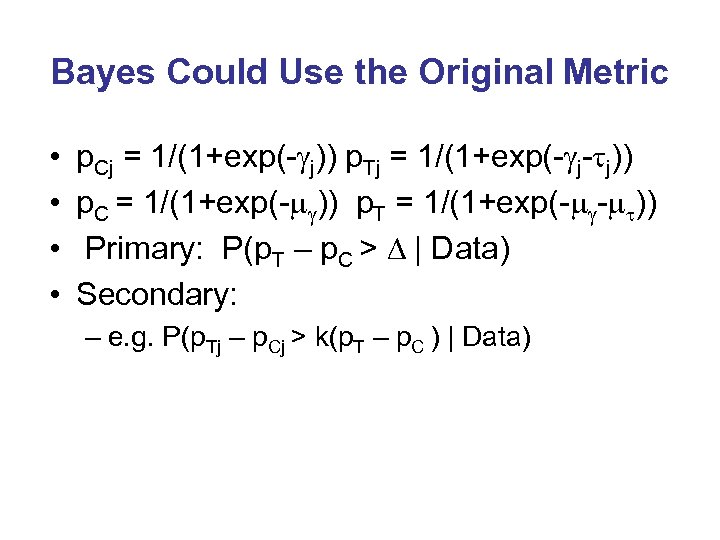

Bayes Could Use the Original Metric
• p_Cj = 1/(1+exp(−γ_j)), p_Tj = 1/(1+exp(−γ_j−τ_j))
• p_C = 1/(1+exp(−μ_γ)), p_T = 1/(1+exp(−μ_γ−μ_τ))
• Primary: P(p_T − p_C > Δ | Data)
• Secondary:
  – e.g. P(p_Tj − p_Cj > k(p_T − p_C) | Data)
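Moving to the original (probability) metric only requires pushing each posterior draw through the inverse logit before evaluating the event. A minimal sketch follows, again with hypothetical random numbers standing in for real posterior draws of the two logit-scale means, and with a hypothetical `threshold` on the probability scale whose value and sign would be set by the agreed claim.

```python
import math
import random

def inv_logit(x):
    """Map a logit-scale value back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))

# Placeholder posterior draws (NOT a fitted model):
# (control-arm mean, mean treatment effect), both on the logit scale.
rng = random.Random(4)
draws = [(rng.gauss(0.8, 0.1), rng.gauss(0.1, 0.15)) for _ in range(4000)]

# Transform each draw to the probability scale, then take the arm difference.
diffs = [inv_logit(mg + mt) - inv_logit(mg) for mg, mt in draws]

threshold = -0.05  # hypothetical threshold on the probability scale
primary = sum(1 for d in diffs if d > threshold) / len(diffs)

print(primary)
```

The transformation is applied draw by draw, which is what makes the posterior of any function of the parameters available without extra approximation.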
