- Number of slides: 37

On-Demand Dynamic Software Analysis

Joseph L. Greathouse, Ph.D. Candidate
Advanced Computer Architecture Laboratory, University of Michigan
December 12, 2011

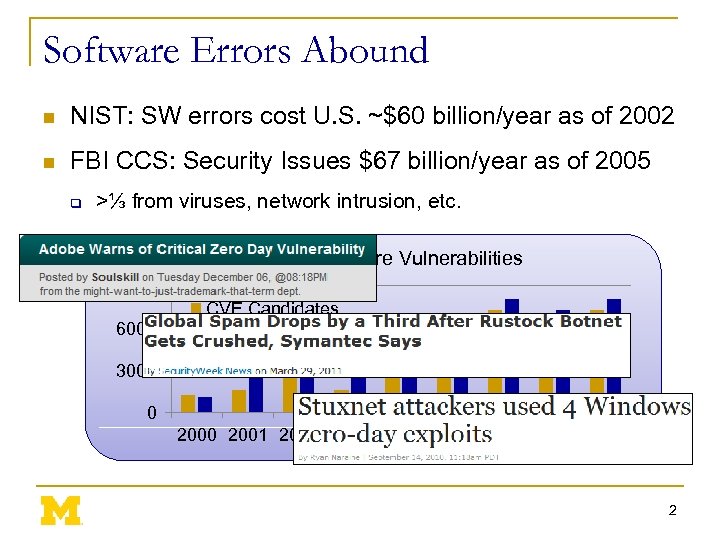

Software Errors Abound

- NIST: software errors cost the U.S. ~$60 billion/year as of 2002
- FBI CCS: security issues cost $67 billion/year as of 2005
  - More than ⅓ from viruses, network intrusion, etc.
- [Chart: Cataloged Software Vulnerabilities, 2001 to 2008, y-axis 0 to 9000; series: CVE Candidates, CERT Vulnerabilities]

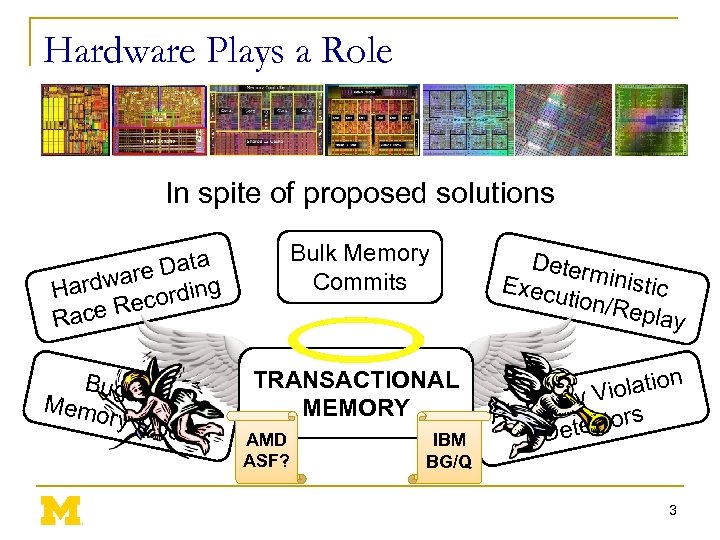

Hardware Plays a Role

In spite of proposed solutions:

- Bulk Memory Commits
- Hardware Data Race Recording
- Bug-Free Memory Models
- Transactional Memory (AMD ASF? IBM BG/Q)
- Deterministic Execution/Replay
- Atomicity Violation Detectors

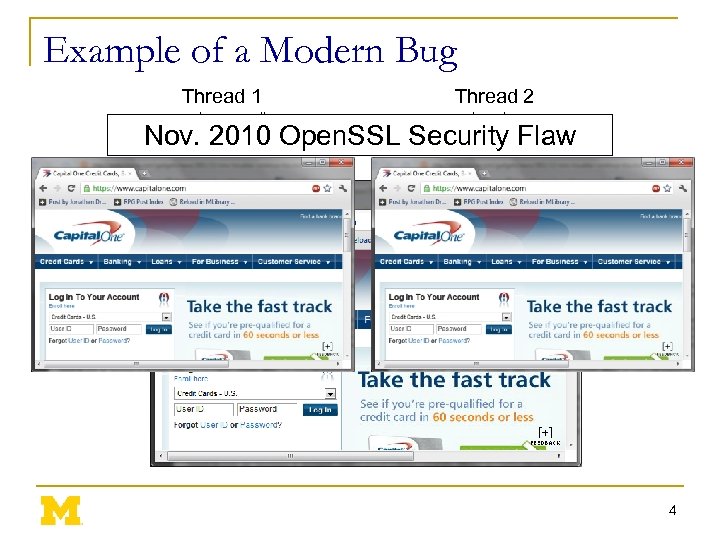

Example of a Modern Bug

Nov. 2010 OpenSSL security flaw. Thread 1 has mylen=small, Thread 2 has mylen=large, and ptr starts as NULL (∅). Both threads run:

    if (ptr == NULL) {
        len = thread_local->mylen;
        ptr = malloc(len);
        memcpy(ptr, data, len);
    }

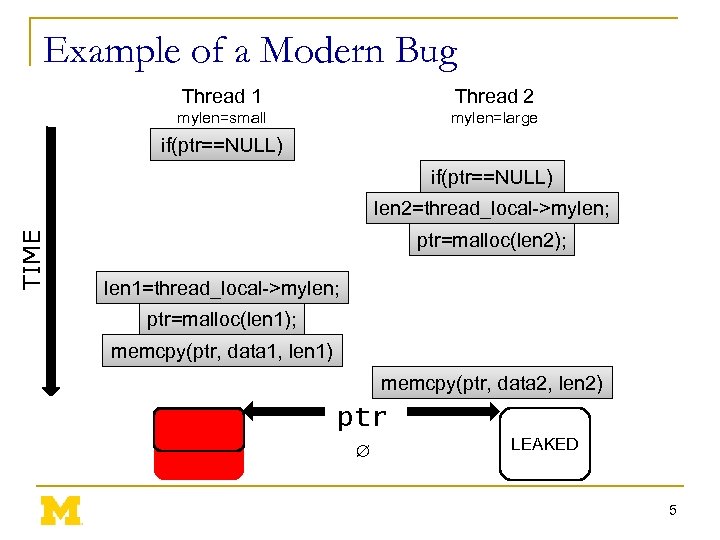

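Example of a Modern Bug

Thread 1 has mylen=small, Thread 2 has mylen=large, and ptr starts as NULL (∅). The failing interleaving, in time order:

| Step | Thread 1 (mylen=small) | Thread 2 (mylen=large) |
|---|---|---|
| 1 | if(ptr==NULL) | if(ptr==NULL) |
| 2 | | len2=thread_local->mylen; |
| 3 | | ptr=malloc(len2); |
| 4 | len1=thread_local->mylen; | |
| 5 | ptr=malloc(len1); | |
| 6 | memcpy(ptr, data1, len1) | |
| 7 | | memcpy(ptr, data2, len2) |

Result: the buffer allocated in step 3 is LEAKED once step 5 overwrites ptr.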
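
The interleaving on this slide can be replayed deterministically. The following is a minimal sketch, not the OpenSSL code: the `Heap` class, the `run_interleaving` function, and the byte sizes 16 and 64 are hypothetical names and values chosen for illustration. It shows that when both threads pass the NULL check before either stores to `ptr`, the first allocation is leaked and the second `memcpy` uses the large length on the small buffer.

```python
class Heap:
    """Toy heap that records the size of every allocation (illustrative only)."""

    def __init__(self):
        self.blocks = {}       # handle -> size in bytes
        self.next_handle = 1

    def malloc(self, size):
        handle = self.next_handle
        self.next_handle += 1
        self.blocks[handle] = size
        return handle


def run_interleaving(small=16, large=64):
    """Replay the slide's schedule; return (leaked, overflow_bytes)."""
    heap = Heap()
    shared = {"ptr": None}

    # Both threads evaluate the NULL check before either one stores to ptr.
    t1_sees_null = shared["ptr"] is None
    t2_sees_null = shared["ptr"] is None

    # Thread 2 allocates first, using its own (large) length.
    len2 = large
    if t2_sees_null:
        shared["ptr"] = heap.malloc(len2)
    first_block = shared["ptr"]

    # Thread 1 then overwrites ptr with a smaller buffer.
    len1 = small
    if t1_sees_null:
        shared["ptr"] = heap.malloc(len1)

    # The first block is no longer reachable through ptr.
    leaked = first_block != shared["ptr"]

    # Thread 2's memcpy still copies len2 bytes, but ptr now names
    # the len1-byte buffer, so the copy runs past its end.
    overflow_bytes = max(0, len2 - heap.blocks[shared["ptr"]])
    return leaked, overflow_bytes
```

Calling `run_interleaving(16, 64)` reports a leak and a 48-byte overrun under these assumed sizes.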

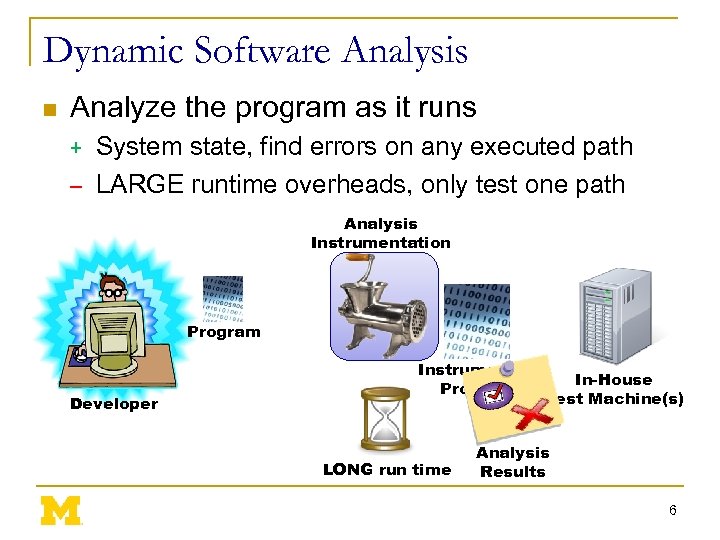

Dynamic Software Analysis

- Analyze the program as it runs
  - Pro: sees system state, finds errors on any executed path
  - Con: LARGE runtime overheads, only tests one path
- [Diagram: Developer combines Program + Analysis Instrumentation into an Instrumented Program, which runs on In-House Test Machine(s) with a LONG run time and produces Analysis Results]

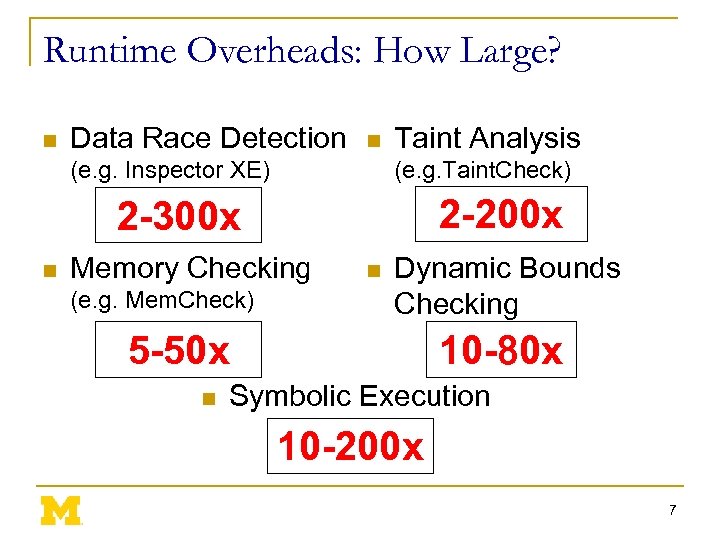

Runtime Overheads: How Large?

| Analysis | Example tool | Slowdown |
|---|---|---|
| Data Race Detection | Inspector XE | 2-300x |
| Taint Analysis | TaintCheck | 2-200x |
| Memory Checking | MemCheck | 5-50x |
| Dynamic Bounds Checking | | 10-80x |
| Symbolic Execution | | 10-200x |

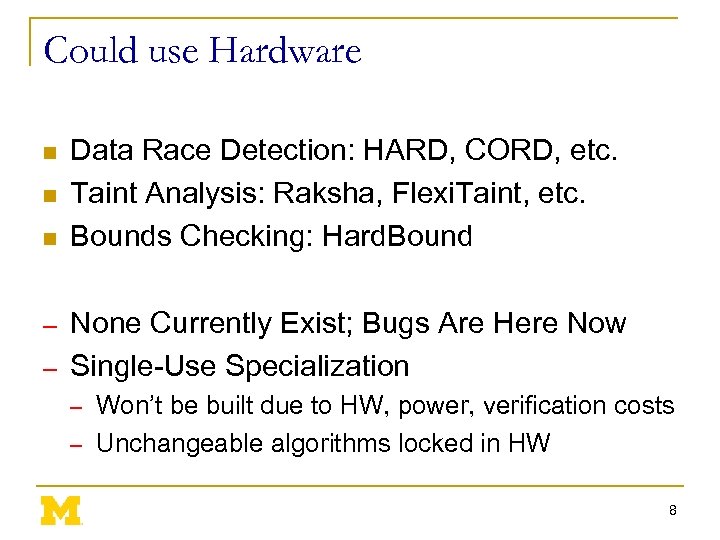

Could use Hardware

- Data Race Detection: HARD, CORD, etc.
- Taint Analysis: Raksha, FlexiTaint, etc.
- Bounds Checking: HardBound
- Drawbacks:
  - None currently exist; bugs are here now
  - Single-use specialization
  - Won't be built due to HW, power, verification costs
  - Unchangeable algorithms locked in HW

Goals of this Talk

- Accelerate SW Analyses Using Existing HW
- Run Tests On Demand: Only When Needed
- Explore Future Generic HW Additions

Outline

- Problem Statement
- Background Information
  - Demand-Driven Dynamic Dataflow Analysis
- Proposed Solutions
  - Demand-Driven Data Race Detection
  - Unlimited Hardware Watchpoints

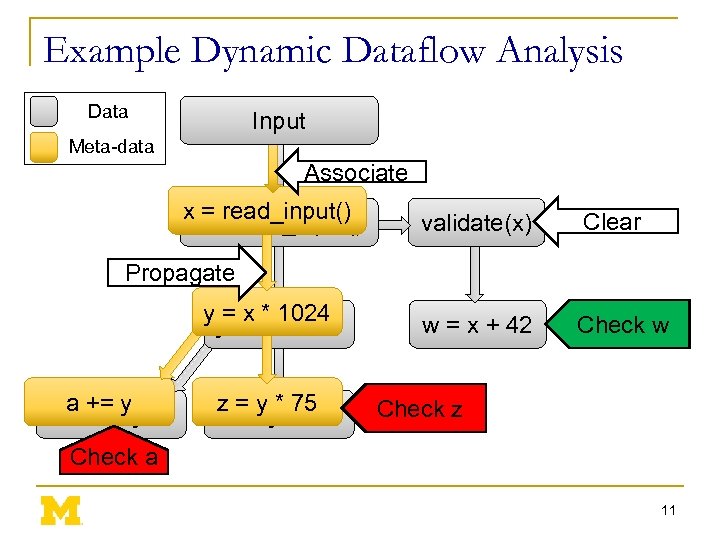

Example Dynamic Dataflow Analysis

| Data | Meta-data |
|---|---|
| x = read_input() | Associate |
| validate(x) | Clear |
| w = x + 42 | Check w |
| y = x * 1024 | Propagate |
| a += y | Check a |
| z = y * 75 | Check z |
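
The four meta-data operations on this slide (associate, clear, propagate, check) can be sketched as a small taint tracker. This is an illustrative sketch under stated assumptions: `TaintTracker` and `run_example` are hypothetical names, meta-data is a single taint bit per variable, and whether `validate(x)` clears the taint is modeled as a flag because the slide does not say when validation succeeds.

```python
class TaintTracker:
    """One taint bit per variable name (a simplification for illustration)."""

    def __init__(self):
        self.tainted = set()

    def associate(self, var):
        """Attach meta-data to a value that came from an untrusted input."""
        self.tainted.add(var)

    def clear(self, var):
        """Remove meta-data, for example after the value was validated."""
        self.tainted.discard(var)

    def propagate(self, dest, *sources):
        """dest becomes tainted if and only if any source is tainted."""
        if any(src in self.tainted for src in sources):
            self.tainted.add(dest)
        else:
            self.tainted.discard(dest)

    def check(self, var):
        """Return True when var still carries meta-data."""
        return var in self.tainted


def run_example(validation_succeeds):
    """Follow the slide's statements in order and report the three checks."""
    t = TaintTracker()
    t.associate("x")                 # x = read_input()
    if validation_succeeds:
        t.clear("x")                 # validate(x)
    t.propagate("w", "x")            # w = x + 42
    t.propagate("y", "x")            # y = x * 1024
    t.propagate("a", "a", "y")       # a += y
    t.propagate("z", "y")            # z = y * 75
    return {var: t.check(var) for var in ("w", "z", "a")}
```

With `validation_succeeds=False`, all three checked values are derived from unvalidated input and are reported as tainted; with `True`, none are.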

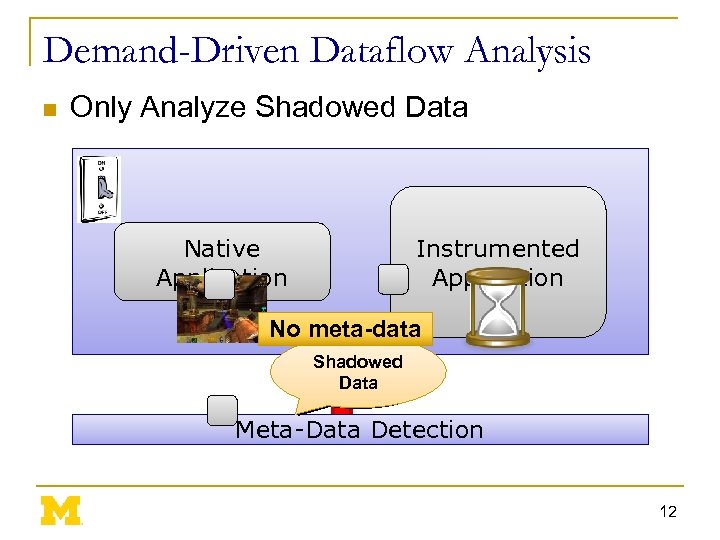

Demand-Driven Dataflow Analysis

- Only Analyze Shadowed Data
- [Diagram: the Native Application handles non-shadowed data (no meta-data); Meta-Data Detection hands shadowed data to the Instrumented Application]

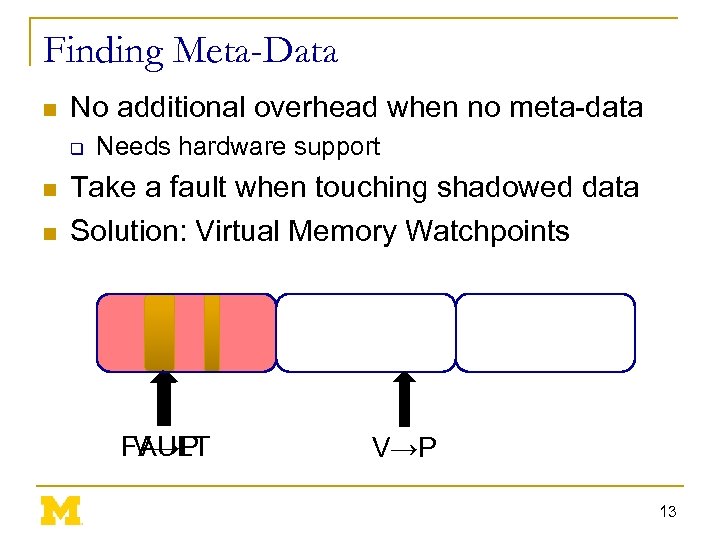

Finding Meta-Data

- No additional overhead when no meta-data
  - Needs hardware support
- Take a fault when touching shadowed data
- Solution: Virtual Memory Watchpoints
- [Diagram: virtual-to-physical (V→P) translation; an access to a watched page raises a FAULT]
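
A rough model of virtual memory watchpoints is sketched below. It is a simulation, not an OS interface: `DemandDrivenMemory`, its method names, and the 4096-byte page size are assumptions made for illustration. Marking one shadowed byte makes its whole page fault, so accesses to other pages run natively while accesses to the watched page fault even when they touch unshadowed bytes.

```python
PAGE_SIZE = 4096  # assumed page size for this sketch


class DemandDrivenMemory:
    """Page-granularity watchpoints over byte-granularity shadow data."""

    def __init__(self):
        self.shadowed_bytes = set()   # addresses that carry meta-data
        self.watched_pages = set()    # pages whose accesses fault
        self.native_accesses = 0
        self.faults = 0

    def shadow(self, addr):
        """Attach meta-data to one byte and watch the page that holds it."""
        self.shadowed_bytes.add(addr)
        self.watched_pages.add(addr // PAGE_SIZE)

    def access(self, addr):
        """Classify one memory access."""
        if addr // PAGE_SIZE not in self.watched_pages:
            self.native_accesses += 1
            return "native"           # no fault, no analysis overhead
        self.faults += 1
        if addr in self.shadowed_bytes:
            return "analyze"          # shadowed data: run the analysis
        return "false_fault"          # same page, but unshadowed byte
```

The `false_fault` case is the cost of using pages as the watch granularity: the fault is taken even though no meta-data is involved.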

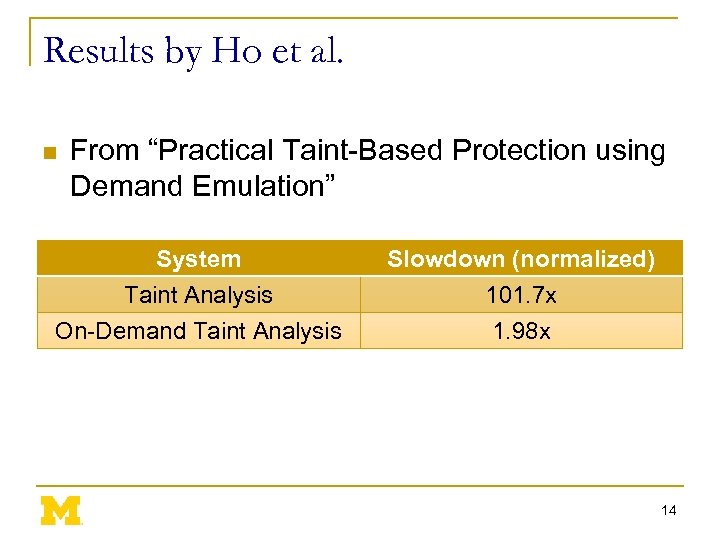

Results by Ho et al.

From "Practical Taint-Based Protection using Demand Emulation":

| System | Slowdown (normalized) |
|---|---|
| Taint Analysis | 101.7x |
| On-Demand Taint Analysis | 1.98x |

Outline

- Problem Statement
- Background Information
  - Demand-Driven Dynamic Dataflow Analysis
- Proposed Solutions
  - Demand-Driven Data Race Detection
  - Unlimited Hardware Watchpoints

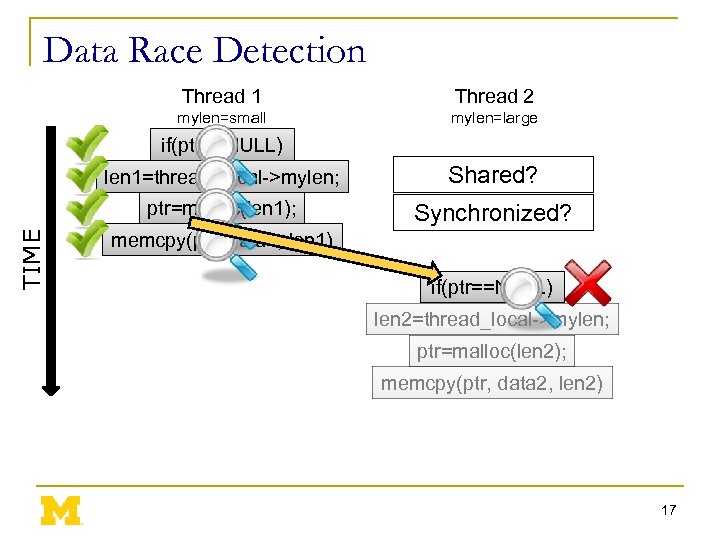

Software Data Race Detection

- Add checks around every memory access
- Find inter-thread sharing events
- Synchronization between write-shared accesses?
  - No? Data race.
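
One common way to implement these checks is a happens-before detector built on vector clocks. The sketch below is an illustration of that general technique, not the specific detector used in the talk; `RaceDetector` and its methods are hypothetical names, and only lock acquire/release are modeled as synchronization.

```python
class RaceDetector:
    """Happens-before race detection with per-thread vector clocks."""

    def __init__(self, num_threads):
        # clock[t][u]: latest time of thread u that thread t has synchronized with
        self.clock = [[0] * num_threads for _ in range(num_threads)]
        for t in range(num_threads):
            self.clock[t][t] = 1
        self.lock_clock = {}   # lock -> vector clock saved at the last release
        self.last_write = {}   # addr -> (tid, time) of the most recent write
        self.last_reads = {}   # addr -> {tid: time} of reads since that write
        self.races = []        # (addr, earlier_tid, later_tid)

    def acquire(self, tid, lock):
        released = self.lock_clock.get(lock)
        if released is not None:
            self.clock[tid] = [max(a, b) for a, b in zip(self.clock[tid], released)]

    def release(self, tid, lock):
        self.lock_clock[lock] = list(self.clock[tid])
        self.clock[tid][tid] += 1

    def _ordered(self, prev, tid):
        """True if the earlier access happens before the current thread's access."""
        prev_tid, prev_time = prev
        return prev_tid == tid or prev_time <= self.clock[tid][prev_tid]

    def read(self, tid, addr):
        prev = self.last_write.get(addr)
        if prev is not None and not self._ordered(prev, tid):
            self.races.append((addr, prev[0], tid))
        self.last_reads.setdefault(addr, {})[tid] = self.clock[tid][tid]

    def write(self, tid, addr):
        earlier = list(self.last_reads.get(addr, {}).items())
        if addr in self.last_write:
            earlier.append(self.last_write[addr])
        for prev in earlier:
            if not self._ordered(prev, tid):
                self.races.append((addr, prev[0], tid))
        self.last_write[addr] = (tid, self.clock[tid][tid])
        self.last_reads[addr] = {}
```

Every `read` and `write` call corresponds to a check added around a memory access, which is where the slowdown of continuous analysis comes from.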

Data Race Detection

Thread 1 has mylen=small, Thread 2 has mylen=large. In time order:

Thread 1:

    if(ptr==NULL)
    len1=thread_local->mylen;
    ptr=malloc(len1);
    memcpy(ptr, data1, len1)

Thread 2:

    if(ptr==NULL)
    len2=thread_local->mylen;
    ptr=malloc(len2);
    memcpy(ptr, data2, len2)

For each access the detector asks: Shared? Synchronized?

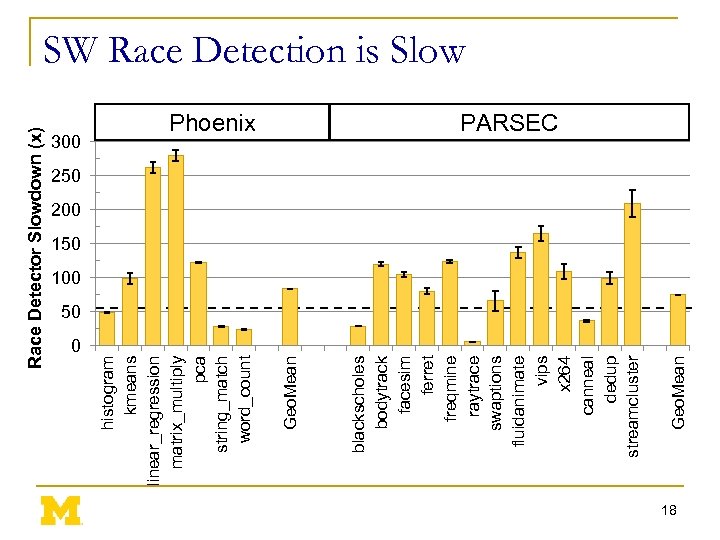

SW Race Detection is Slow

[Chart: Race Detector Slowdown (x), y-axis 0 to 300, per benchmark with a geometric mean for each suite. Phoenix: histogram, kmeans, linear_regression, matrix_multiply, pca, string_match, word_count. PARSEC: blackscholes, bodytrack, facesim, ferret, freqmine, raytrace, swaptions, fluidanimate, vips, x264, canneal, dedup, streamcluster.]

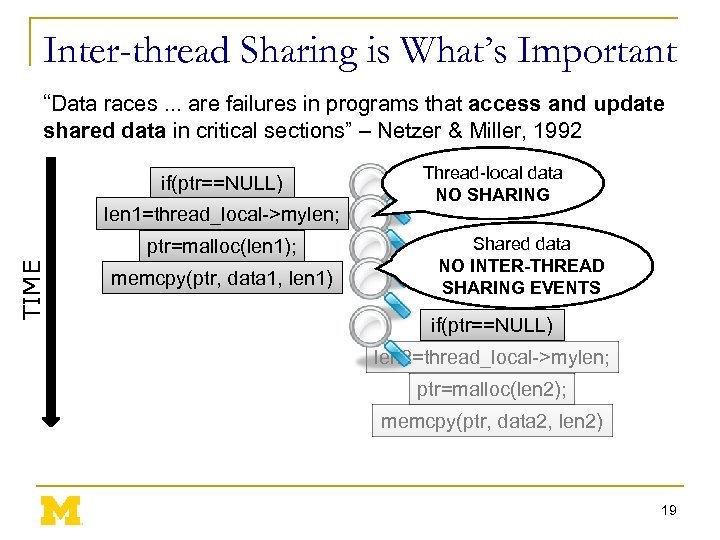

Inter-thread Sharing is What's Important

"Data races ... are failures in programs that access and update shared data in critical sections" (Netzer & Miller, 1992)

- Thread-local data: NO SHARING
- Shared data: NO INTER-THREAD SHARING EVENTS
- [Diagram: the if(ptr==NULL) / malloc / memcpy sequences of Thread 1 and Thread 2, in time order]

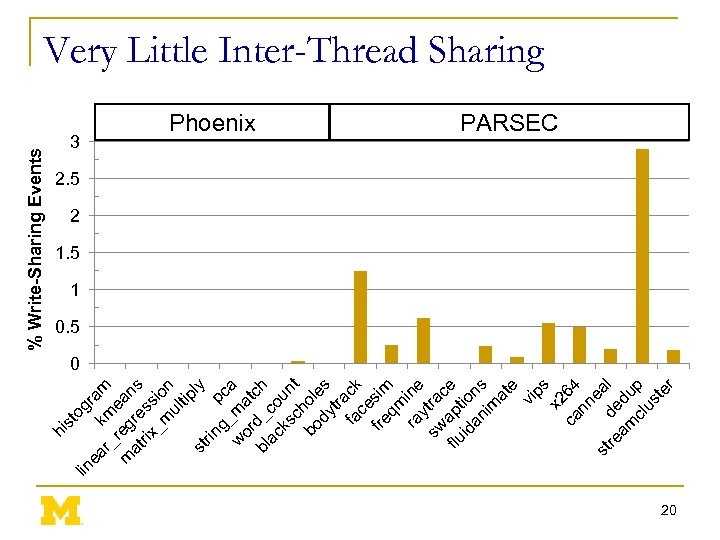

Very Little Inter-Thread Sharing

[Chart: % Write-Sharing Events, y-axis 0 to 3, per benchmark. Phoenix: histogram, kmeans, linear_regression, matrix_multiply, pca, string_match, word_count. PARSEC: blackscholes, bodytrack, facesim, freqmine, raytrace, swaptions, fluidanimate, vips, x264, canneal, dedup, streamcluster.]

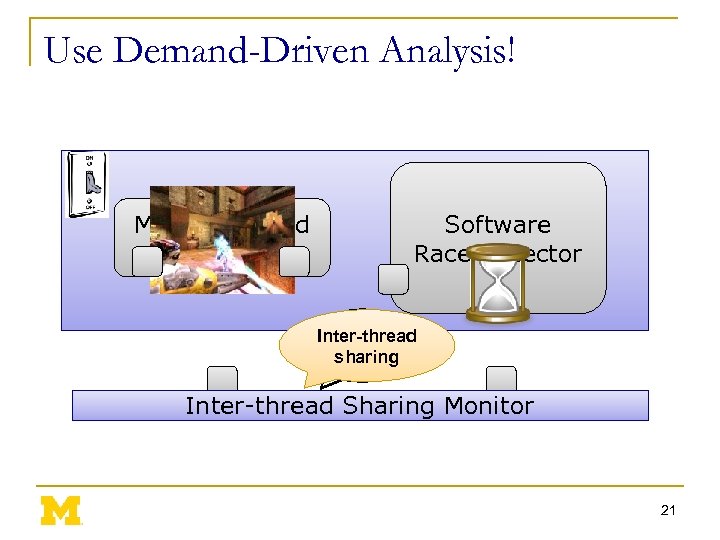

Use Demand-Driven Analysis!

[Diagram: the Multi-threaded Application runs under an Inter-thread Sharing Monitor; local accesses proceed without analysis, while inter-thread sharing is sent to the Software Race Detector]

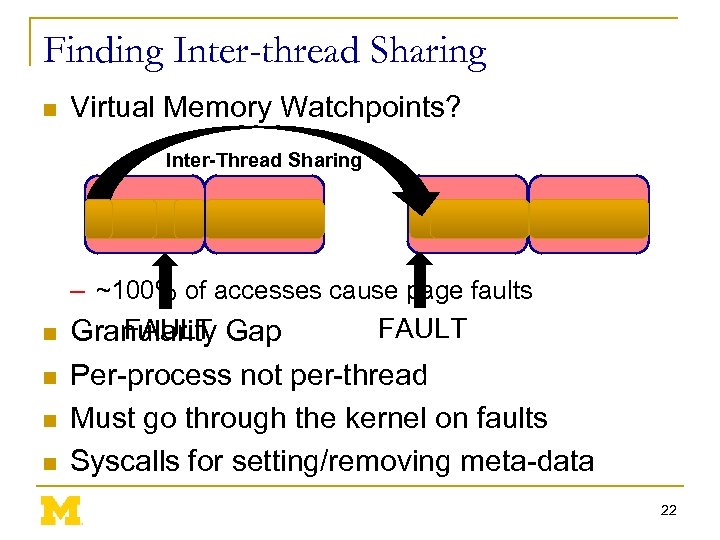

Finding Inter-thread Sharing

- Virtual Memory Watchpoints?
  - ~100% of accesses cause page faults
  - Granularity gap
  - Per-process, not per-thread
  - Must go through the kernel on faults
  - Syscalls for setting/removing meta-data

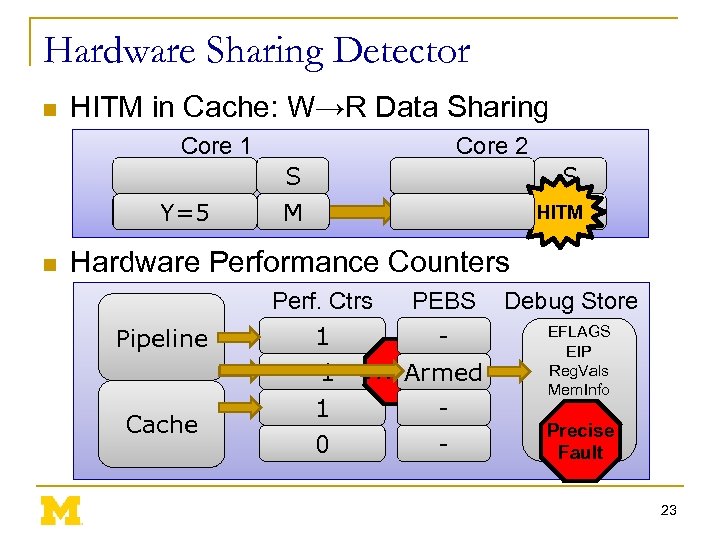

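Hardware Sharing Detector

- HITM in Cache: W→R Data Sharing
  - [Diagram: Core 1 writes Y=5 and holds the line in Modified state; Core 2 then reads Y and hits the modified line (HITM)]
- Hardware Performance Counters
  - [Diagram: pipeline and cache events increment the performance counters; when a counter overflows, PEBS is armed and writes EFLAGS, EIP, register values, and memory info to the Debug Store, followed by a precise fault]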
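
The W→R sharing event on this slide can be illustrated with a toy coherence model. This is a simplified sketch, not a description of any real processor: `CoherentCaches` is a hypothetical name, caches are unbounded, and a HITM event is counted only when a read misses locally and finds the line in Modified state in another core's cache.

```python
class CoherentCaches:
    """Per-core MESI line states; a line absent from a dict is Invalid."""

    def __init__(self, num_cores):
        self.state = [dict() for _ in range(num_cores)]  # line -> "M", "E" or "S"
        self.hitm_count = 0

    def write(self, core, line):
        """Invalidate all other copies and take the line in Modified state."""
        for other, cache in enumerate(self.state):
            if other != core:
                cache.pop(line, None)
        self.state[core][line] = "M"

    def read(self, core, line):
        """Return True when this read causes a HITM event."""
        if line in self.state[core]:
            return False                      # local hit, no coherence traffic
        hitm = False
        shared_elsewhere = False
        for other, cache in enumerate(self.state):
            if other == core:
                continue
            current = cache.get(line)
            if current == "M":
                hitm = True                   # hit a modified line in another cache
                self.hitm_count += 1
            if current is not None:
                cache[line] = "S"             # remote copy is downgraded to Shared
                shared_elsewhere = True
        self.state[core][line] = "S" if shared_elsewhere else "E"
        return hitm
```

In this model the counter plays the role of a hardware performance counter that a monitor could sample to learn that a write in one thread was followed by a read in another.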

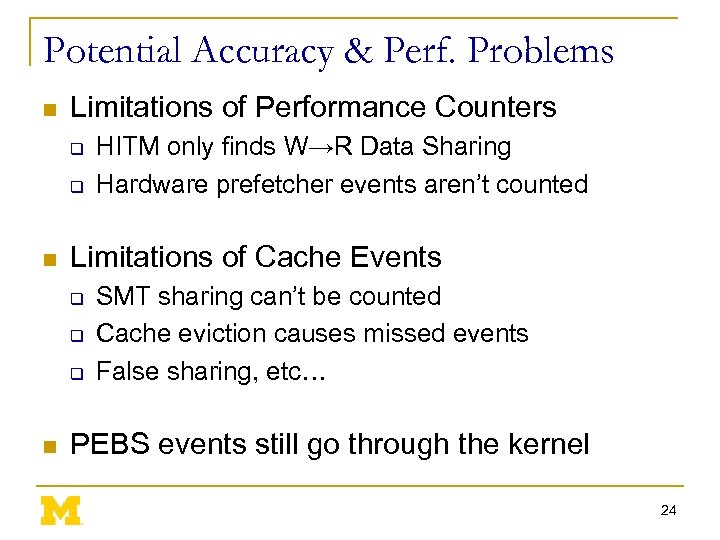

Potential Accuracy & Perf. Problems

- Limitations of Performance Counters
  - HITM only finds W→R data sharing
  - Hardware prefetcher events aren't counted
- Limitations of Cache Events
  - SMT sharing can't be counted
  - Cache eviction causes missed events
  - False sharing, etc.
- PEBS events still go through the kernel

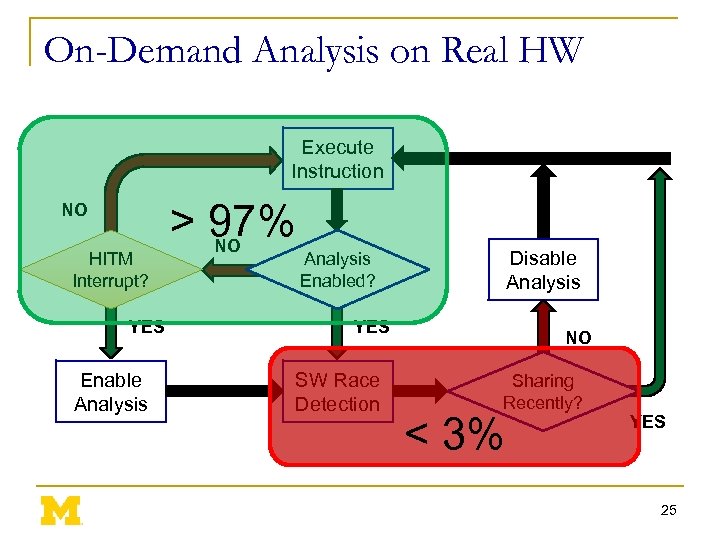

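On-Demand Analysis on Real HW

For each executed instruction:

- Analysis enabled? NO:
  - HITM interrupt? NO: keep executing without analysis (> 97%)
  - HITM interrupt? YES: enable analysis
- Analysis enabled? YES: run SW race detection (< 3%)
  - Sharing recently? YES: keep analysis enabled
  - Sharing recently? NO: disable analysis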
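
The enable/disable loop in this flowchart can be written as a small state machine. The sketch below is an assumption-laden illustration: `OnDemandController` is a hypothetical name, and "sharing recently" is modeled as a count of consecutive analyzed accesses without sharing (`quiet_window`), which the slide does not specify.

```python
class OnDemandController:
    """Turns the software race detector on and off around sharing events."""

    def __init__(self, quiet_window=3):
        self.quiet_window = quiet_window  # assumed definition of "recently"
        self.enabled = False
        self.since_sharing = 0
        self.native = 0
        self.analyzed = 0

    def step(self, sharing):
        """Process one access.

        sharing: a HITM interrupt fired (analysis off) or the detector
        observed inter-thread sharing (analysis on).
        """
        if not self.enabled:
            if not sharing:
                self.native += 1
                return "native"
            self.enabled = True           # HITM interrupt: enable analysis
            self.since_sharing = 0

        self.analyzed += 1                # run SW race detection on this access
        if sharing:
            self.since_sharing = 0
        else:
            self.since_sharing += 1
            if self.since_sharing >= self.quiet_window:
                self.enabled = False      # no sharing recently: disable analysis
        return "analyzed"
```

A larger `quiet_window` keeps the detector on longer after each sharing event, trading speed for a better chance of catching the second access of a race.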

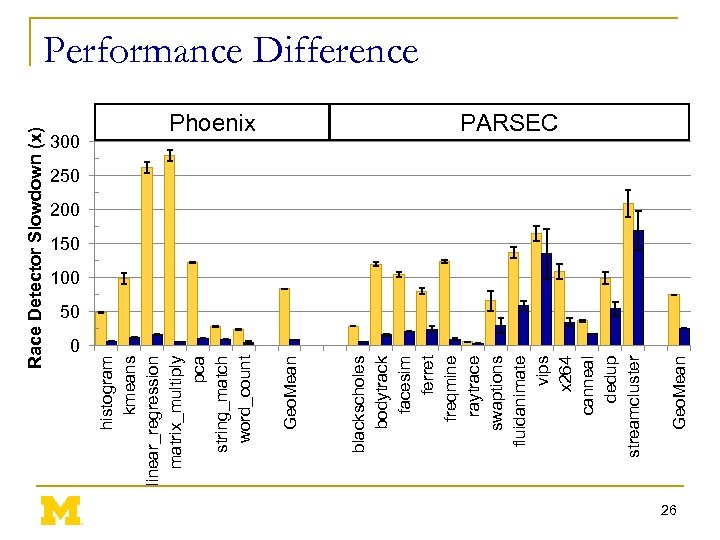

Performance Difference

[Chart: Race Detector Slowdown (x), y-axis 0 to 300, per benchmark with a geometric mean for each suite. Phoenix: histogram, kmeans, linear_regression, matrix_multiply, pca, string_match, word_count. PARSEC: blackscholes, bodytrack, facesim, ferret, freqmine, raytrace, swaptions, fluidanimate, vips, x264, canneal, dedup, streamcluster.]

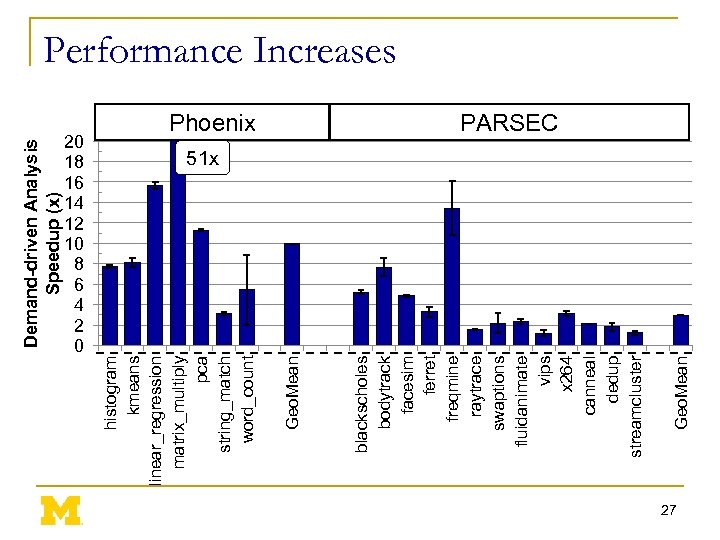

Performance Increases

[Chart: Demand-driven Analysis Speedup (x), y-axis 0 to 20, per benchmark with a geometric mean for each suite; one off-scale value is labeled 51x. Phoenix: histogram, kmeans, linear_regression, matrix_multiply, pca, string_match, word_count. PARSEC: blackscholes, bodytrack, facesim, ferret, freqmine, raytrace, swaptions, fluidanimate, vips, x264, canneal, dedup, streamcluster.]

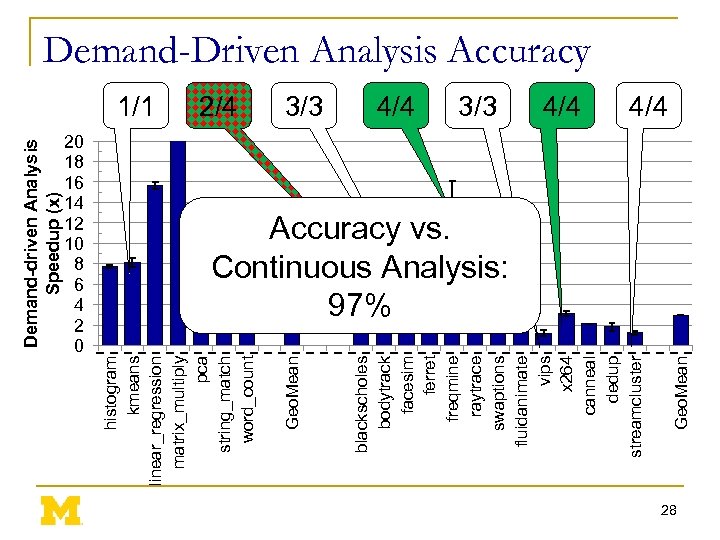

Demand-Driven Analysis Accuracy

- Accuracy vs. Continuous Analysis: 97%
- [Chart: Demand-driven Analysis Speedup (x), y-axis 0 to 20, over the Phoenix and PARSEC benchmarks, with bars annotated 3/3, 4/4, 2/4, 1/1, 4/4]

Outline

- Problem Statement
- Background Information
  - Demand-Driven Dynamic Dataflow Analysis
- Proposed Solutions
  - Demand-Driven Data Race Detection
  - Unlimited Hardware Watchpoints

Watchpoints Globally Useful

- Byte/Word Accurate and Per-Thread

Watchpoint-Based Software Analyses

- Taint Analysis
- Data Race Detection
- Deterministic Execution
- Canary-Based Bounds Checking
- Speculative Program Optimization
- Hybrid Transactional Memory

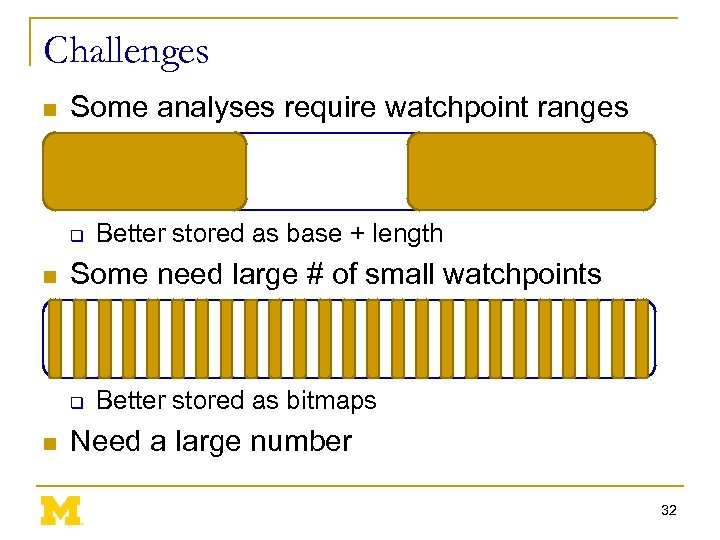

Challenges

- Some analyses require watchpoint ranges
  - Better stored as base + length
- Some need large # of small watchpoints
  - Better stored as bitmaps
- Need a large number
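
The two storage formats named on this slide can be compared side by side. The sketch below is illustrative only: `RangeWatchpoints` and `BitmapWatchpoints` are hypothetical names, addresses are plain integers, and the bitmap uses one bit per byte of a small fixed address space.

```python
class RangeWatchpoints:
    """Watchpoints stored as (base, length) pairs: compact for large regions."""

    def __init__(self):
        self.ranges = []

    def add(self, base, length):
        self.ranges.append((base, length))

    def is_watched(self, addr):
        return any(base <= addr < base + length for base, length in self.ranges)

    def entries(self):
        return len(self.ranges)


class BitmapWatchpoints:
    """One bit per byte: constant-time lookup for many small watchpoints."""

    def __init__(self, address_space_size):
        self.bits = bytearray((address_space_size + 7) // 8)

    def add(self, base, length=1):
        for addr in range(base, base + length):
            self.bits[addr >> 3] |= 1 << (addr & 7)

    def is_watched(self, addr):
        return bool(self.bits[addr >> 3] & (1 << (addr & 7)))
```

A single range entry covers a whole 4096-byte region, while the bitmap answers scattered single-byte queries without scanning a list; neither format alone is a good fit for both workloads.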

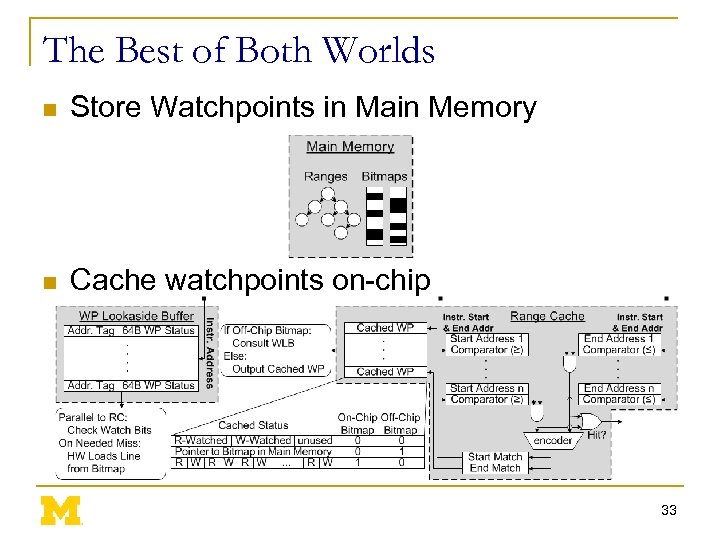

The Best of Both Worlds

- Store Watchpoints in Main Memory
- Cache watchpoints on-chip
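
The idea of keeping the full watchpoint set in main memory and caching recent lookups on-chip can be sketched as follows. This is a software analogy under stated assumptions, not the proposed hardware: `WatchpointCache` is a hypothetical name, the backing store is a plain dictionary, and least-recently-used replacement is an assumed policy that the slide does not specify.

```python
from collections import OrderedDict


class WatchpointCache:
    """Small cache of watchpoint lookups in front of a large backing store."""

    def __init__(self, backing, capacity=2):
        self.backing = backing        # addr -> watched flag, stands in for main memory
        self.capacity = capacity      # number of entries the "on-chip" cache holds
        self.cache = OrderedDict()    # addr -> watched flag, oldest entry first
        self.hits = 0
        self.misses = 0

    def is_watched(self, addr):
        if addr in self.cache:
            self.hits += 1
            self.cache.move_to_end(addr)       # mark as most recently used
            return self.cache[addr]
        self.misses += 1
        watched = self.backing.get(addr, False)
        self.cache[addr] = watched
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)     # evict the least recently used entry
        return watched
```

The number of watchpoints is bounded only by the backing store, while repeated checks of the same addresses are served from the small cache.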

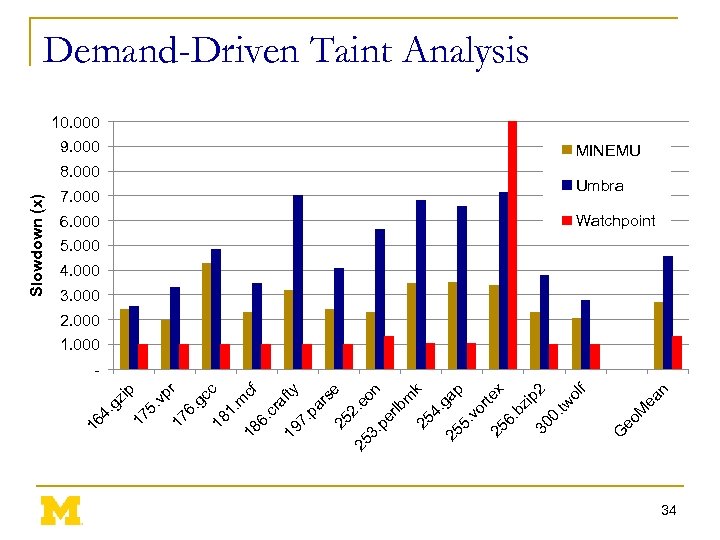

Demand-Driven Taint Analysis

[Chart: Slowdown (x), y-axis up to 10, per benchmark with a geometric mean; series: MINEMU, Umbra, Watchpoint. Benchmarks: 164.gzip, 175.vpr, 176.gcc, 181.mcf, 186.crafty, 197.parser, 252.eon, 253.perlbmk, 254.gap, 255.vortex, 256.bzip2, 300.twolf.]

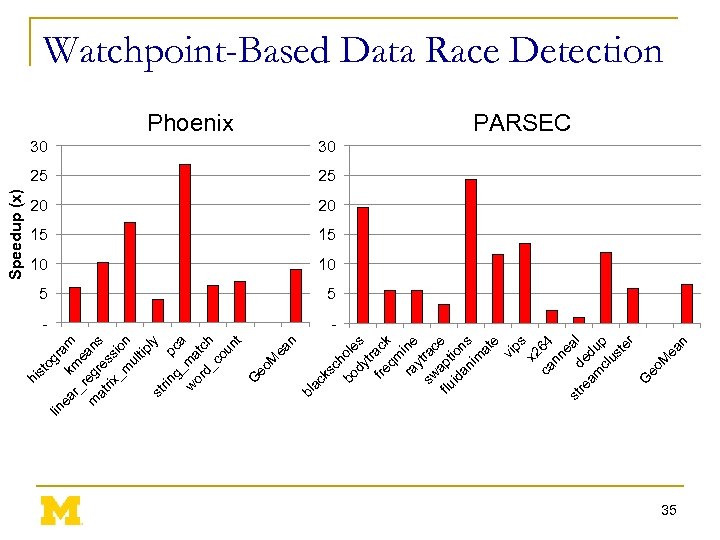

Watchpoint-Based Data Race Detection

[Chart: Speedup (x), y-axis 0 to 30, per benchmark with a geometric mean for each suite. Phoenix: histogram, kmeans, linear_regression, matrix_multiply, pca, string_match, word_count. PARSEC: blackscholes, bodytrack, freqmine, raytrace, swaptions, fluidanimate, vips, x264, canneal, dedup, streamcluster.]

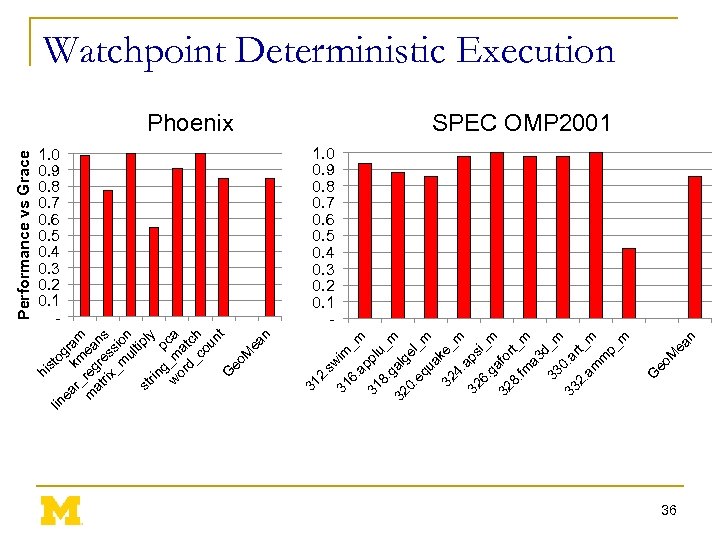

Watchpoint Deterministic Execution

[Chart: Performance vs Grace, y-axis 0 to 1.0, per benchmark with a geometric mean for each suite. Phoenix: histogram, kmeans, linear_regression, matrix_multiply, pca, string_match, word_count. SPEC OMP 2001: 312.swim_m, 316.applu_m, 318.galgel_m, 320.equake_m, 324.apsi_m, 326.gafort_m, 328.fma3d_m, 330.art_m, 332.ammp_m.]

BACKUP SLIDES
