55fb409fed3389c6c4c190d5c3424bca.ppt

- Number of slides: 69

NSF Middleware Initiative: Focus on Collaboration • Kevin Thompson, NSF • Ken Klingenstein, Internet2 • John McGee, GRIDS Center • Mary Fran Yafchak, SURA • Ann West, EDUCAUSE/Internet2

Copyright Kevin Thompson, Ken Klingenstein, John McGee, Mary Fran Yafchak, and Ann West 2003. This work is the intellectual property of the authors. Permission is granted for this material to be shared for non-commercial, educational purposes, provided that this copyright statement appears on the reproduced materials and notice is given that the copying is by permission of the authors. To disseminate otherwise or to republish requires written permission from the authors.

Topics • NMI Overview and New Awards • NMI-EDIT • GRIDS Center • NMI Integration Testbed • NMI and NMI-EDIT Outreach

NSF Middleware Initiative • Purpose – To design, develop, deploy and support a reusable, expandable set of middleware functions and services that benefit applications in a networked environment

A Vision for Middleware • To allow scientists and engineers to transparently use and share distributed resources, such as computers, data, and instruments • To develop effective collaboration and communications tools, such as Grid technologies, desktop video, and other advanced services, to expedite research and education • To develop a working architecture and approach that can be extended to Internet users around the world • Middleware is the stuff that makes “transparently use” happen, providing persistency, consistency, security, privacy and capability

NMI Status • 21 Active Awards (Prior to new awards) – 3 Cooperative Agreements – 18 individual research awards • Focus on integration and deployment of grid and middleware for S&E – Near-term deliverables (working code) – coordination and persuasion rather than standards – Significant effort on interoperability, testing, inclusion • NMI Software Releases, best practices, white papers – NMI Release 1 – May, 2002 – NMI Release 2 – Oct, 2002 – NMI Release 3 – Apr, 2003 – NMI Release 4 - est. Dec, 2003

NMI Organization • Core NMI Team – GRIDS Center: ISI, NCSA, U Chicago, UCSD & U Wisconsin – EDIT Team (Enterprise and Desktop Integration Technologies): EDUCAUSE, Internet2 & SURA – Several additions in 2003 • Grants for R&D – Year 1: 9 grants – Year 2: 9 grants – Year 3 (New): 10 grants

2003 NSF Middleware Initiative Program Awards • 20 awards totaling $9M – 10 “System Integrator” awards • Focus: to further develop the integration and support infrastructure of middleware for the longer term • Themes: extending and deepening current activities, and expanding into new areas – 10 smaller awards focused on near-term capabilities and tool development • Focus: to encourage the development of additional new middleware components and capabilities for the NMI program

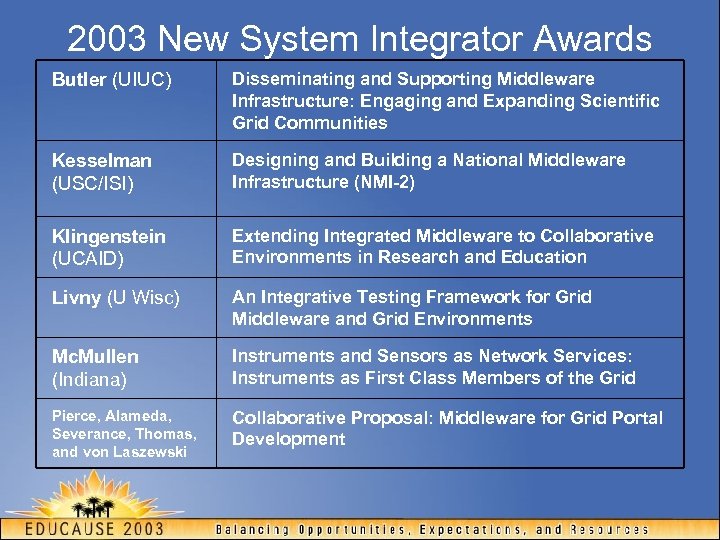

2003 New System Integrator Awards • Butler (UIUC): Disseminating and Supporting Middleware Infrastructure: Engaging and Expanding Scientific Grid Communities • Kesselman (USC/ISI): Designing and Building a National Middleware Infrastructure (NMI-2) • Klingenstein (UCAID): Extending Integrated Middleware to Collaborative Environments in Research and Education • Livny (U Wisc): An Integrative Testing Framework for Grid Middleware and Grid Environments • McMullen (Indiana): Instruments and Sensors as Network Services: Instruments as First Class Members of the Grid • Pierce, Alameda, Severance, Thomas, and von Laszewski: Collaborative Proposal: Middleware for Grid Portal Development

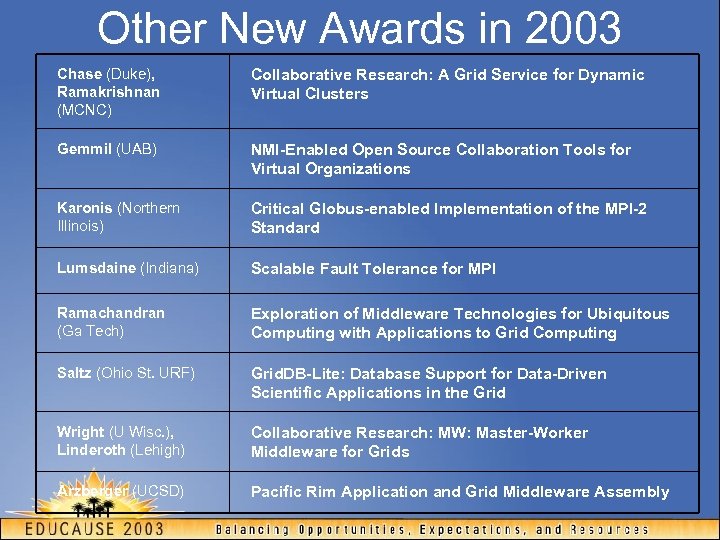

Other New Awards in 2003 • Chase (Duke), Ramakrishnan (MCNC): Collaborative Research: A Grid Service for Dynamic Virtual Clusters • Gemmill (UAB): NMI-Enabled Open Source Collaboration Tools for Virtual Organizations • Karonis (Northern Illinois): Critical Globus-enabled Implementation of the MPI-2 Standard • Lumsdaine (Indiana): Scalable Fault Tolerance for MPI • Ramachandran (Ga Tech): Exploration of Middleware Technologies for Ubiquitous Computing with Applications to Grid Computing • Saltz (Ohio St. URF): GridDB-Lite: Database Support for Data-Driven Scientific Applications in the Grid • Wright (U Wisc.), Linderoth (Lehigh): Collaborative Research: MW: Master-Worker Middleware for Grids • Arzberger (UCSD): Pacific Rim Application and Grid Middleware Assembly

Looking Ahead • There will be an NMI solicitation in 2004 • Exact funding level not set • NMI program is expected to be a primary focus area under CISE’s new division, Shared CyberInfrastructure • October 23 Review at NSF for existing activities among the GRIDS Center and EDIT teams

Enterprise and Desktop Integration Technologies (EDIT) Consortium • Ken Klingenstein, Director, Internet2 Middleware Initiative • kjk@internet2.edu

Overview • NMI-EDIT Overview • Research Findings • NMI Release Components • Building on the Future

NMI-EDIT Consortium • Enterprise and Desktop Integration Technologies Consortium –Internet2, EDUCAUSE, SURA –Almost all funding passed through to campuses for work • Project Goals –Create a common, persistent and robust core middleware infrastructure for the R&E community –Provide tools and services in support of inter-institutional and inter-realm collaborations

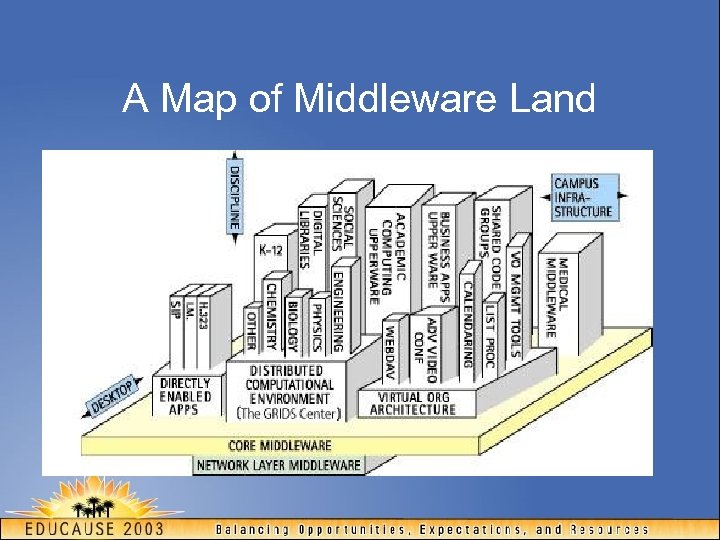

A Map of Middleware Land

NMI-EDIT Findings • Consensus on inter-institutional middleware standards and maturing architecture to support collaborative applications • Widespread interest in Shibboleth within R&E communities • Credential mapping from core enterprise to Grid service • Grid adoption of SAML in Open Grid Services Architecture (OGSA)

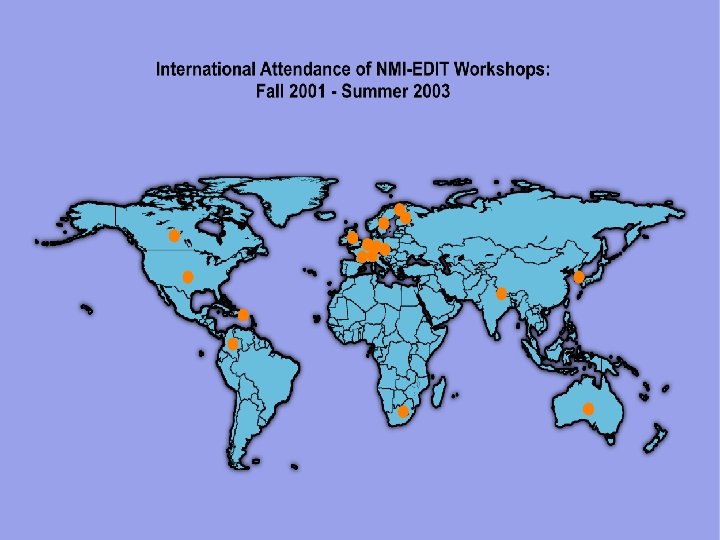

NMI-EDIT Findings (cont.) • Creation and maintenance of a heavily referenced set of design and best practices documents • Effective linkages with international research communities • Discovery and development of campus IT staff, leading to influence on both federal and commercial standards • Direct outreach to over 320 institutions

NMI-EDIT Components from Three NMI Releases • Authentication: –WebISO solution, credential mapping from Kerberos to PKI, policy documents, registry service • Enterprise Directories: –Schemas; operational monitoring and schema analysis tools; practices in design, groups, and metadirectories • Authorization: –Architecture and related software and libraries for multi-institution collaboration

NMI-EDIT Components from Three NMI Releases (cont.) • Integration Activities: –Credential mapping from campus to Grid environment, GLUE schema analysis tool • Education: –Venues for learning about enterprise middleware, including CAMPs and on-line deployment materials for directories

The Upcoming Work • Generalizing the Stanford authority system • Middleware diagnostics • Virtual organizations

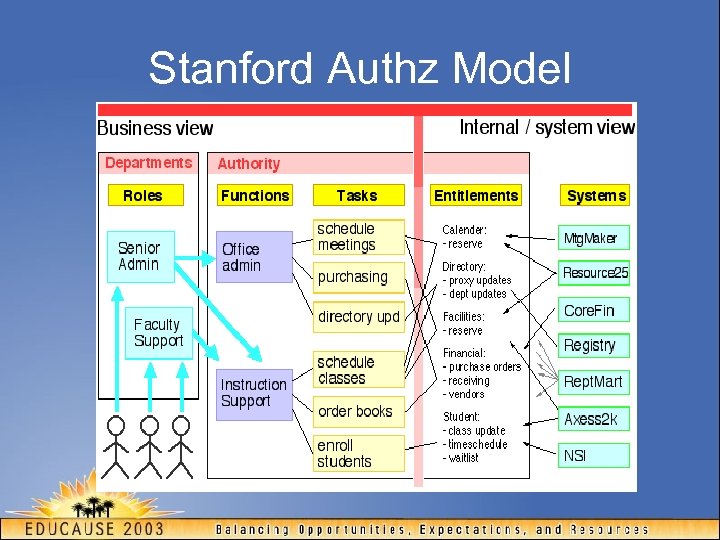

Stanford Authz Model

Stanford Authz Goals • Simplification of authority policy, management and interpretation. We should be able to summarize the full rights and privileges of an individual "at a glance" or let departmental administrators view and manage together all authority in their department or division. • Consistent application of authority rules via infrastructure services and synchronization of administrative authority data across systems.

Stanford Authz Goals (cont.) • Integration of authority data with enterprise reference data to provide extended services such as delegation and automatic revocation of authority based on status and affiliation changes. • Role-based authority, that is, management of privileges based on job function and assignments rather than attached to individuals.
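The role-based and automatic-revocation goals can be illustrated with a minimal sketch. It is not the Stanford system: the role names, privilege names, the `Person` type, and the rule that an affiliation of `"former"` clears all roles are hypothetical choices made only for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-privilege table; the names are illustrative only.
ROLE_PRIVILEGES = {
    "dept_admin": {"approve_purchase", "view_budget"},
    "instructor": {"submit_grades"},
}


@dataclass
class Person:
    name: str
    affiliation: str                 # assumed values: "staff", "faculty", "former"
    roles: set = field(default_factory=set)


def privileges(person):
    """Summarize a person's full rights 'at a glance' from their roles."""
    result = set()
    for role in person.roles:
        result |= ROLE_PRIVILEGES.get(role, set())
    return result


def update_affiliation(person, new_affiliation):
    """Record an affiliation change; revoke every role if the person has left."""
    person.affiliation = new_affiliation
    if new_affiliation == "former":  # assumed revocation rule
        person.roles.clear()
```

Because privileges hang off roles rather than individuals, one affiliation change is enough to revoke everything the person held, with no per-system cleanup in this toy model.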

Deliverables The deliverables consist of: • A recipe, with accompanying case studies, of how to take a role-based organization and develop appropriate groups, policies, attributes, etc., to operate an authority service • Templates and tools for registries and group management • Web interface and program APIs to provide distributed management (to the departments, to external programs) of access rights and privileges • Delivery of authority information through the infrastructure as directory data and authority events.

Middleware Diagnostics Problem Statement • The number and complexity of distributed application initiatives and products have exploded within the last 5 years • Each must create its own framework for providing diagnostic tools and performance metrics • Distributed applications have become increasingly dependent not only on the system and network infrastructure that they are built upon, but also on each other

Goals • Create an event collection and dissemination infrastructure that uses existing system, network and application data (Unix/WIN logs, SNMP, NetFlow, etc.) • Establish a standardized event record that normalizes all system, network and application events into a common data format • Build a rich tool platform to collect, distribute, access, filter, aggregate, tag, trace, probe, anonymize, query, archive, report, notify, and perform forensic and performance analysis

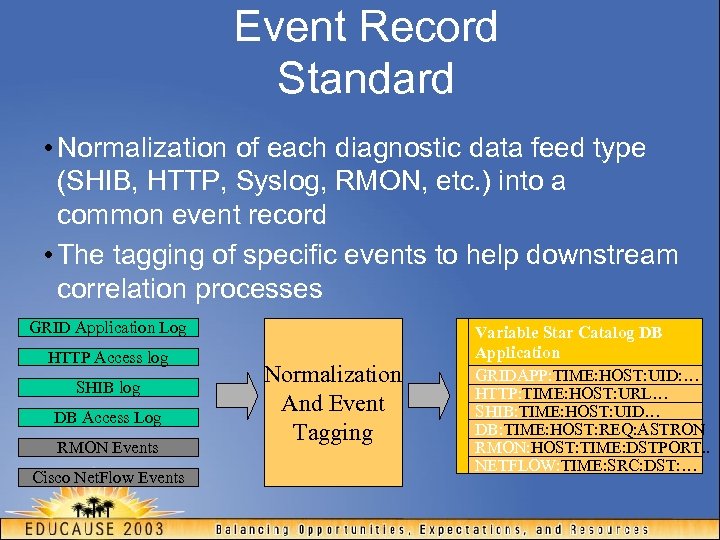

Event Record Standard • Normalization of each diagnostic data feed type (SHIB, HTTP, Syslog, RMON, etc.) into a common event record • The tagging of specific events to help downstream correlation processes • Diagram, input feeds: GRID Application Log, HTTP Access Log, SHIB Log, DB Access Log, RMON Events, Cisco NetFlow Events • Diagram, processing stage: Normalization and Event Tagging • Diagram, example application: Variable Star Catalog DB Application • Diagram, normalized records: GRIDAPP: TIME: HOST: UID: … | HTTP: TIME: HOST: URL… | SHIB: TIME: HOST: UID… | DB: TIME: HOST: REQ: ASTRON | RMON: HOST: TIME: DSTPORT… | NETFLOW: TIME: SRC: DST: …
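A minimal sketch of normalization and tagging follows. The slide does not define the record layout or the raw log formats, so the `EventRecord` fields, the two regular expressions, and the sample lines are all assumptions chosen for illustration.

```python
import re
from dataclasses import dataclass, field


@dataclass
class EventRecord:
    """Assumed common record: feed name, time, host, plus feed-specific attributes."""
    feed: str
    time: str
    host: str
    attrs: dict = field(default_factory=dict)
    tags: set = field(default_factory=set)


# Hypothetical raw line formats, one pattern per feed type.
PATTERNS = {
    "HTTP": re.compile(r"^(?P<time>\S+) (?P<host>\S+) GET (?P<url>\S+)$"),
    "SHIB": re.compile(r"^(?P<time>\S+) (?P<host>\S+) uid=(?P<uid>\S+)$"),
}


def normalize(feed, line):
    """Turn one raw line from a known feed into an EventRecord, or None if unparseable."""
    match = PATTERNS[feed].match(line)
    if match is None:
        return None
    fields = match.groupdict()
    return EventRecord(feed, fields.pop("time"), fields.pop("host"), fields)


def tag(record, label):
    """Attach a label so downstream correlation can group related events."""
    record.tags.add(label)
    return record
```

For example, `tag(normalize("SHIB", "2003-11-05T10:00:01 idp1 uid=alice"), "ASTRON")` yields a record that can be correlated with HTTP and DB events carrying the same tag.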

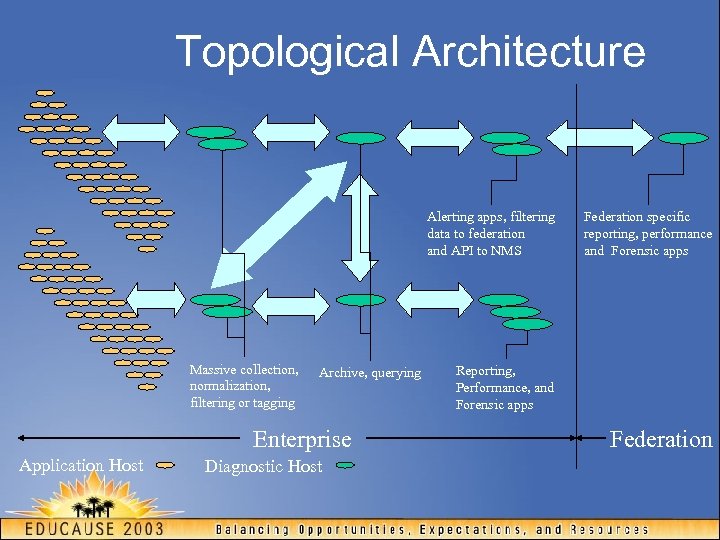

Topological Architecture (diagram labels) • Enterprise • Application Host • Diagnostic Host • Federation • Massive collection, normalization, filtering or tagging • Archive, querying • Alerting apps, filtering data to federation and API to NMS • Reporting, Performance, and Forensic apps • Federation specific reporting, performance and Forensic apps

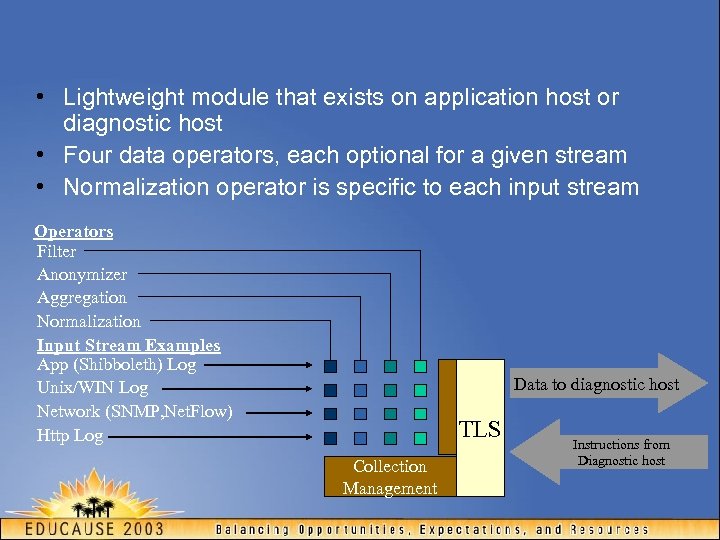

• Lightweight module that exists on application host or diagnostic host • Four data operators, each optional for a given stream • Normalization operator is specific to each input stream • Operators: Filter, Anonymizer, Aggregation, Normalization • Input Stream Examples: App (Shibboleth) Log, Unix/WIN Log, Network (SNMP, NetFlow), HTTP Log • Data to diagnostic host (TLS) • Collection Management Instructions from Diagnostic host
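The operator chain can be sketched as a small pipeline. This is an illustration under assumptions, not the project's module: the "time host url" line format, the dictionary event shape, and every function name are hypothetical, and for simplicity the stream-specific normalizer always runs first while the other operators are optional.

```python
import hashlib
from collections import Counter


def normalize_http(line):
    """Normalization operator for an assumed 'time host url' line format."""
    time, host, url = line.split()
    return {"feed": "HTTP", "time": time, "host": host, "url": url}


def make_filter(predicate):
    """Filter operator: keep only the events for which predicate(event) is true."""
    return lambda events: (e for e in events if predicate(e))


def anonymize_host(events):
    """Anonymizer operator: replace each host name with a short SHA-256 digest."""
    for e in events:
        digest = hashlib.sha256(e["host"].encode()).hexdigest()[:8]
        yield {**e, "host": digest}


def aggregate_by_url(events):
    """Aggregation operator: collapse events into one count per URL."""
    counts = Counter(e["url"] for e in events)
    return [{"feed": "HTTP", "url": u, "count": n} for u, n in sorted(counts.items())]


def run_pipeline(lines, normalizer, operators=()):
    """Normalize each raw line, then apply the configured operators in order."""
    events = (normalizer(line) for line in lines)
    for op in operators:
        events = op(events)
    return list(events)
```

Passing an empty operator list forwards normalized events unchanged, which mirrors the idea that each operator is optional for a given stream.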

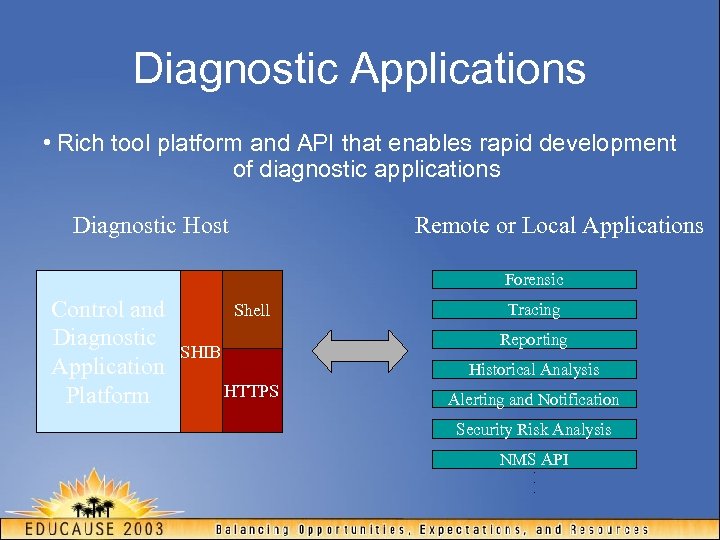

Diagnostic Applications • Rich tool platform and API that enables rapid development of diagnostic applications • Diagram labels: Diagnostic Host; Remote or Local Applications; Control and Diagnostic Application Platform; Shell; Forensic; Tracing; Reporting; Historical Analysis; Alerting and Notification; Security Risk Analysis; SHIB; HTTPS; NMS; API…

Involved Groups and Organizations • Internet2 – End-to-End Performance Initiative – Specific Middleware and GRIDS working groups (SHIB, P2P, I2IM, etc.) • IETF working groups – SYSLOG – RMONMIB • Efforts in academic and research

Virtual Organizations • Geographically distributed, enterprise distributed community that shares real resources as an organization. • Examples include team science (NEESgrid, HEP, BIRN, NEON), digital content managers (library cataloguers, curators, etc.), life-long learning consortia, etc. • On a continuum from inter-realm groups (no real resource management, few defined roles) to real organizations (primary identity/authentication providers) • A required cross-stitch on the enterprise trust fabric

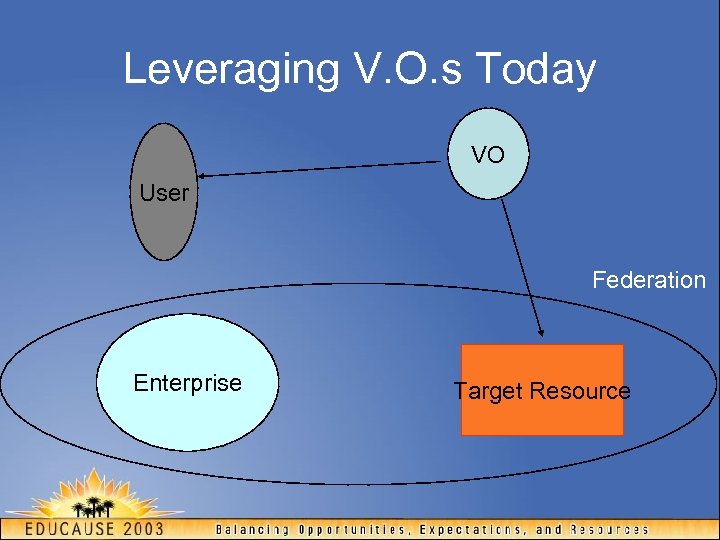

Leveraging VOs Today • Diagram labels: VO, User, Federation, Enterprise, Target Resource

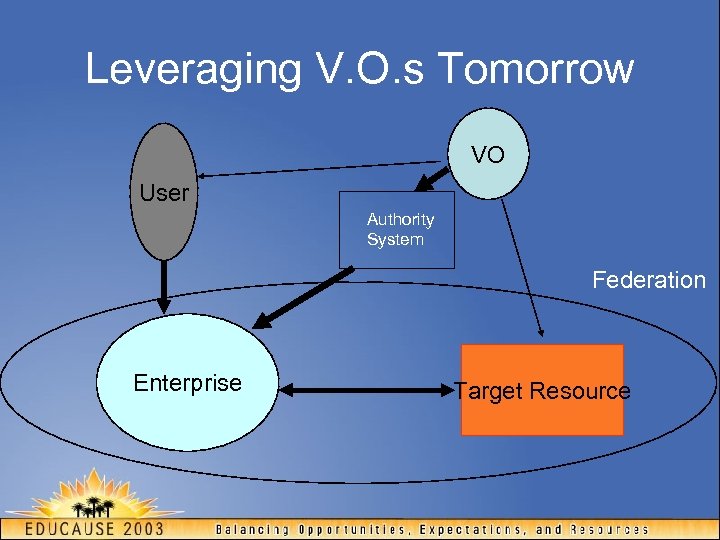

Leveraging VOs Tomorrow • Diagram labels: VO, User, Authority System, Federation, Enterprise, Target Resource

New Collaborations • Work with JISC on Virtual Organizations –A key cross-stitch among enterprises for inter-institutional collaborations –VOs range from Grids to digital libraries, from earthquake engineering to collaborative curation, from managing observatories to managing rights • Interworkings with Australian, Swiss, French universities • Corporate interactions with MS, Sun, Liberty Alliance, etc.

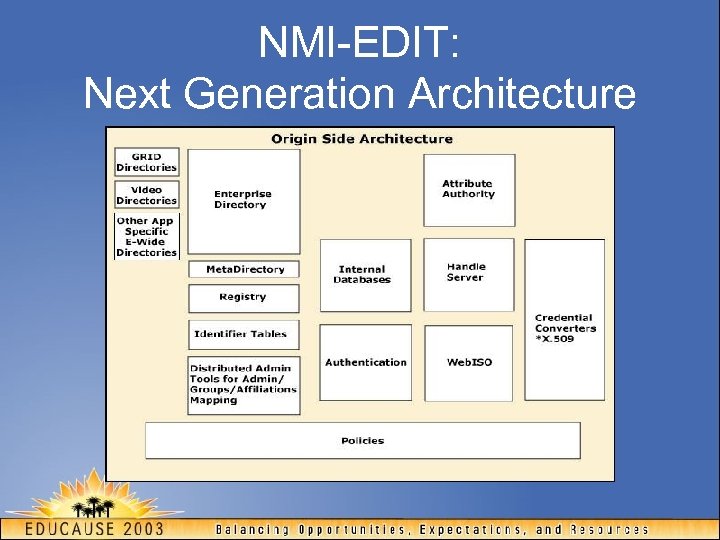

NMI-EDIT: Next Generation Architecture

The pieces fit together… • Campus infrastructure –Developing and encouraging the deployment of identity management components, tools, and support services • Inter-realm infrastructure –Leveraging the local organizational infrastructure to enable access to the broader community through • Building on campus identity management infrastructures • Extending them to contain standard schemas and data definitions • Enabling the exchange of access information in a private and secure way • Developing diagnostic tools to make complex middleware interactions easier to understand

The GRIDS Center: Defining and Deploying Grid Middleware • John McGee, University of Southern California Information Sciences Institute

NSF Middleware Initiative (NMI) • GRIDS is one of two original teams, the other being EDIT • New NMI teams just announced (Grid portals and instrumentation) • GRIDS releases well-tested, deployed and supported middleware based on common architectures that can be extended to Internet users around the world • NSF support of GRIDS leverages investment by DOE, NASA, DARPA, UK e-Science Program, and private industry

GRIDS Center • GRIDS = Grid Research Integration Development & Support • Partnership of leading teams in Grid computing – University of Chicago and Argonne National Lab – Information Sciences Institute at USC – NCSA at the University of Illinois at Urbana-Champaign – SDSC at the University of California at San Diego – University of Wisconsin at Madison – Plus other software contributors (to date: UC Santa Barbara, U. of Michigan) • GRIDS develops, tests, deploys and supports standard tools for: – Authentication, authorization, policy – Resource discovery and directory services – Remote access to computers, data, instruments

The Grid: What is it? • “Resource-sharing technology with software and services that let people access computing power, databases, and other tools securely online across corporate, institutional, and geographic boundaries without sacrificing local autonomy.” • Three key Grid criteria: – coordinates distributed resources – using standard, open, general-purpose protocols and interfaces – to deliver qualities of service not possible with pre-Grid technologies

GRIDS Center Software Suite • Globus Toolkit®. The de facto standard for Grid computing, an open-source "bag of technologies" to simplify collaboration across organizations. Includes tools for authentication, scheduling, file transfer and resource description. • Condor-G. Enhanced version of the core Condor software optimized to work with GT for managing Grid jobs. • Network Weather Service (NWS). Periodically monitors and dynamically forecasts performance of network and computational resources. • Grid Packaging Tools (GPT). XML-based packaging data format defines complex dependencies between components.

GRIDS Center Software Suite (cont.) • GSI-OpenSSH. Modified version adds support for Grid Security Infrastructure (GSI) authentication and single sign-on capability. • MyProxy. Repository lets users retrieve a proxy credential on demand, without managing private key and certificate files across sites and applications. • MPICH-G2. Grid-enabled implementation of the Message Passing Interface (MPI) standard, based on the popular MPICH library. • GridConfig. Manages the configuration of GRIDS components, letting users regenerate configuration files in native formats and ensure consistency. • KX.509 and KCA. A tool from EDIT that bridges Kerberos and PKI infrastructure.

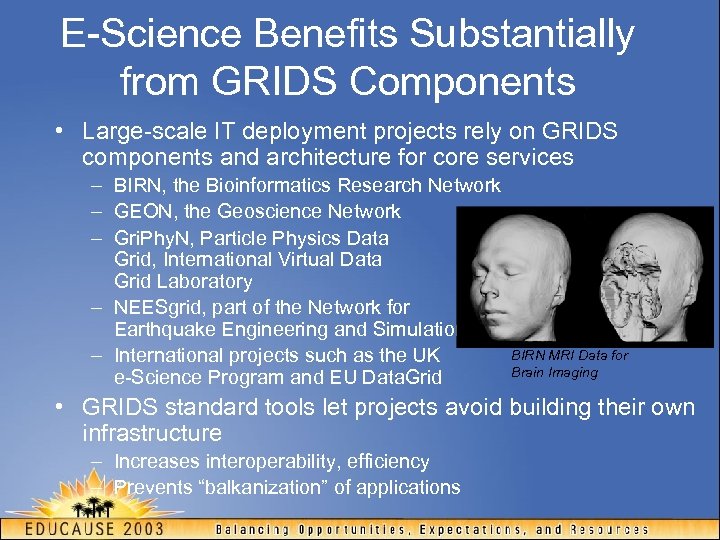

E-Science Benefits Substantially from GRIDS Components • Large-scale IT deployment projects rely on GRIDS components and architecture for core services – BIRN, the Biomedical Informatics Research Network – GEON, the Geoscience Network – GriPhyN, Particle Physics Data Grid, International Virtual Data Grid Laboratory – NEESgrid, part of the Network for Earthquake Engineering and Simulation – International projects such as the UK e-Science Program and EU DataGrid • GRIDS standard tools let projects avoid building their own infrastructure – Increases interoperability, efficiency – Prevents “balkanization” of applications • Figure caption: BIRN MRI Data for Brain Imaging

Industrial and International Leaders Move to Grid Services • GRIDS leaders engage a worldwide community in defining specifications for Grid services – Very active working through Global Grid Forum – Over a dozen leading companies (IBM, HP, Platform) have committed to Globus-based Grid services for their products • NMI-R4 in December will include Globus Toolkit 3.0 – GT3 is the first full-scale deployment of new Open Grid Services Infrastructure (OGSI) spec – Significant contributions from new international partners (University of Edinburgh and Swedish Royal Institute of Technology) for database access and security – UK Council for the Central Laboratory of the Research Councils (CCLRC) users rank deployment of GT3 as their #1 priority

Acclaim for GRIDS Components • On July 15, the New York Times noted the “far-sighted simplicity” of the Grid services architecture • The Globus Toolkit has earned: – R&D 100 Award – Federal Laboratory Consortium Award for Excellence in Technology Transfer • MIT Technology Review named Grid one of “Ten Technologies That Will Change the World” • InfoWorld list of 2003’s top 10 innovators includes two GRIDS PIs • GRIDS co-PI Ian Foster named “Innovator of the Year” for 2003 by R&D Magazine

Future GRIDS Plans • GRIDS is completing its second year in October – Original three-year award, through Fall 2004 – Very successful in establishing processes, meeting twice/year release schedule, defining broadly accepted Grid middleware standards, and increasing public awareness of Grid computing • GRIDS Center 2 plans – Further develop and refine core NMI releases and processes – Deploy tools based on Open Grid Services Architecture – Expand testing capability – Create a federated bug-tracking facility – Public databases: Grid Projects and Deployments System and Grid Technology Repository – Increase outreach to communities at all levels: • Existing major Grid projects (e.g., TeraGrid, NEESgrid) • Major projects that should use Grid more (e.g., SEEK, NEON) • New communities not yet using Grid (e.g., Computer-Aided Diagnosis)

Upcoming Tutorials • GRIDS is extremely well-represented at SC03, the supercomputing conference – Tutorials, technical papers, BoFs, demonstrations – Phoenix, AZ, November 15-21 – http://www.sc-conference.org/sc2003 • GlobusWORLD 2004 conference – Co-sponsored by GRIDS – San Francisco, CA, January 20-23 – Academia and industry both well-represented – http://www.globusworld.org

For More Information • The GRIDS Center: http://www.grids-center.org/ • NSF Middleware Initiative: http://www.nsf-middleware.org/ • The Globus Alliance: http://www.globus.org/

NMI Integration Testbed Mary Fran Yafchak maryfran@sura. org NMI Integration Testbed Manager SURA IT Program Coordinator Southeastern Universities Research Association

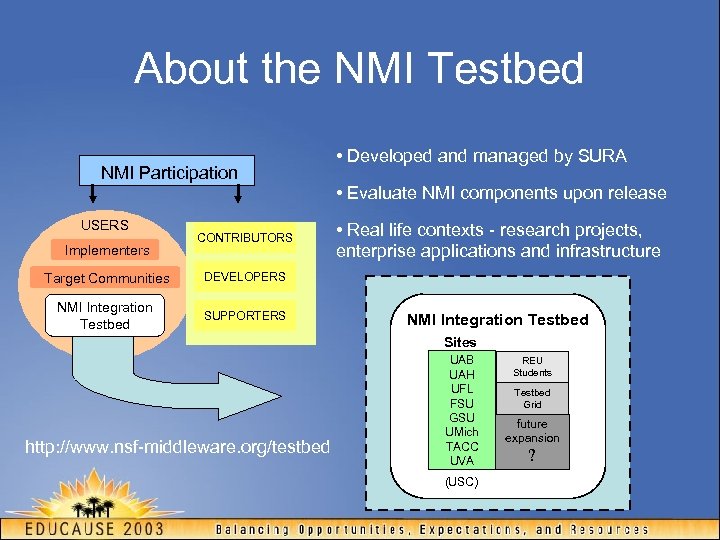

About the NMI Testbed • NMI Integration Testbed – Developed and managed by SURA – Evaluate NMI components upon release – Real life contexts: research projects, enterprise applications and infrastructure • NMI Integration Testbed Sites: UAB, UAH, UFL, FSU, GSU, UMich, TACC, UVA, (USC) • http://www.nsf-middleware.org/testbed • Diagram labels: NMI Participation; USERS; Implementers; Target Communities; CONTRIBUTORS; DEVELOPERS; SUPPORTERS; REU Students; Testbed Grid; future expansion?

Activities to date • Evaluation of NMI Releases 1, 2 & 3 • Project & enterprise integration • Addition of REU student positions • Workshops & Presentations • Firing up of intra-Testbed grid

Evaluation of NMI R2 & R3 • Once upon a time… – NMI R1, completed September 2002. 18 components, 61 reports, focused “across the board” • This past year… – NMI R2, completed February 2003. 25 components, 59 reports, focused “across the board” – NMI R3, completed August 2003. 30 components, 57 reports, heavy on grids and newer authn/authz components • Trend towards more “practical” evaluation

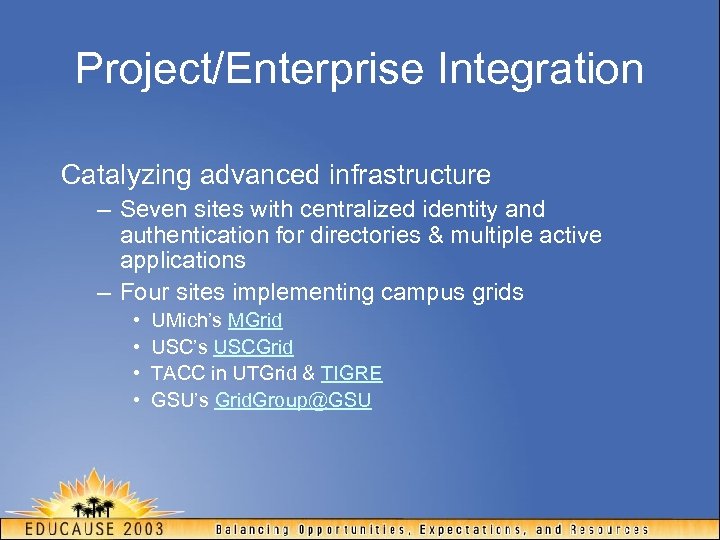

Project/Enterprise Integration • Catalyzing advanced infrastructure – Seven sites with centralized identity and authentication for directories & multiple active applications – Four sites implementing campus grids • UMich’s MGrid • USC’s USCGrid • TACC in UTGrid & TIGRE • GSU’s GridGroup@GSU

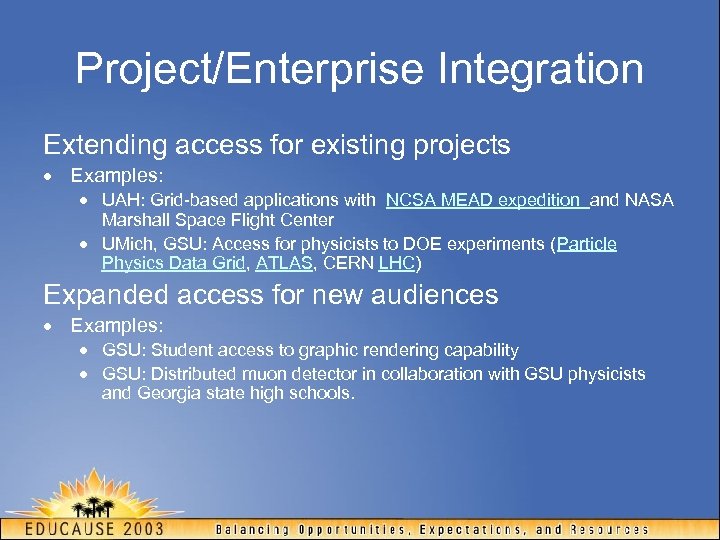

Project/Enterprise Integration Extending access for existing projects · Examples: · UAH: Grid-based applications with NCSA MEAD expedition and NASA Marshall Space Flight Center · UMich, GSU: Access for physicists to DOE experiments (Particle Physics Data Grid, ATLAS, CERN LHC) Expanded access for new audiences · Examples: · GSU: Student access to graphic rendering capability · GSU: Distributed muon detector in collaboration with GSU physicists and Georgia state high schools.

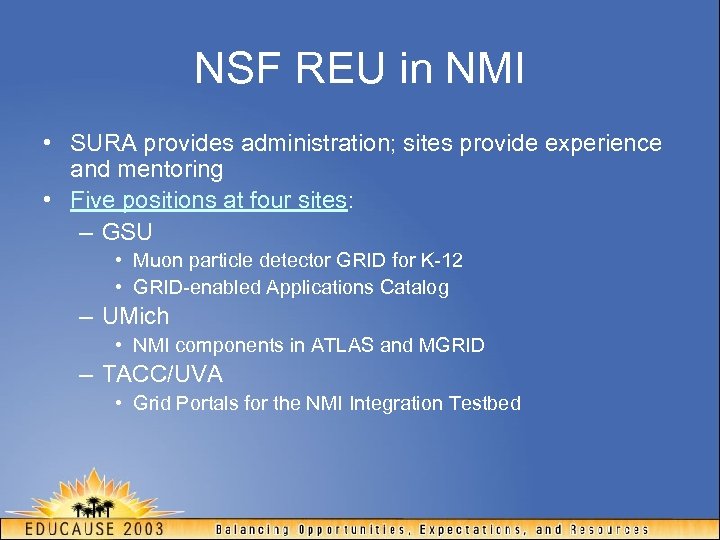

NSF REU in NMI • SURA provides administration; sites provide experience and mentoring • Five positions at four sites: – GSU • Muon particle detector GRID for K-12 • GRID-enabled Applications Catalog – UMich • NMI components in ATLAS and MGRID – TACC/UVA • Grid Portals for the NMI Integration Testbed

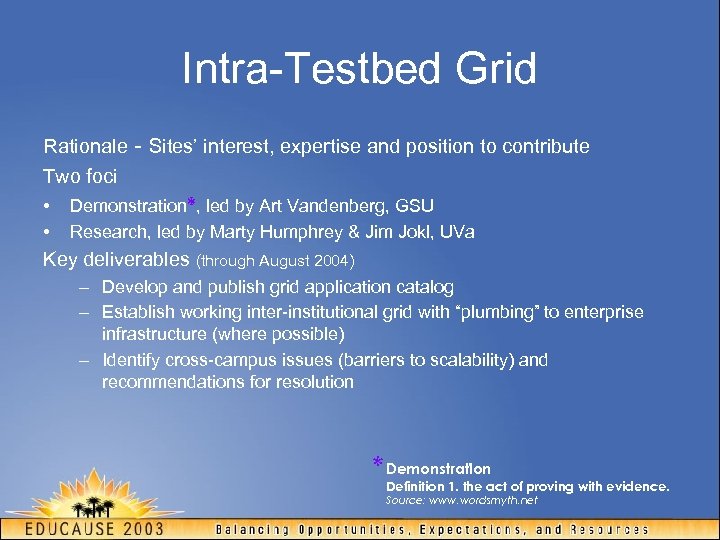

Intra-Testbed Grid • Rationale: sites’ interest, expertise and position to contribute • Two foci – Demonstration*, led by Art Vandenberg, GSU – Research, led by Marty Humphrey & Jim Jokl, UVA • Key deliverables (through August 2004) – Develop and publish grid application catalog – Establish working inter-institutional grid with “plumbing” to enterprise infrastructure (where possible) – Identify cross-campus issues (barriers to scalability) and recommendations for resolution * Demonstration: 1. the act of proving with evidence. Source: www.wordsmyth.net

NMI Testbed Workshops • 1st Testbed Results workshop – April 2003 at Internet2 Spring Meeting • 2nd Testbed Results workshop – This past Monday, preceding EDUCAUSE 2003 • SURA NMI PACS workshops – Small group training in enterprise directories and related applications (Aug & Sept. 2003)

NMI Testbed Presentations • I2 Members’ meetings (Spring 2003, Fall 2003) • EDUCAUSE 2003 (tomorrow!) – “Experiences in Middleware Deployment: Teach a Man to Fish…,” Thursday, 11/6, 3:55-4:45 p.m., 303D • GlobusWorld 2004 – “Taking Grids out of the Lab and onto the Campus,” Thursday, January 22, 10:30 a.m.-12 p.m.

NMI and NMI-EDIT Outreach • Ann West, EDUCAUSE/Internet2

Topics • NMI participation model • NMI-EDIT goals, products, and results • Education opportunities

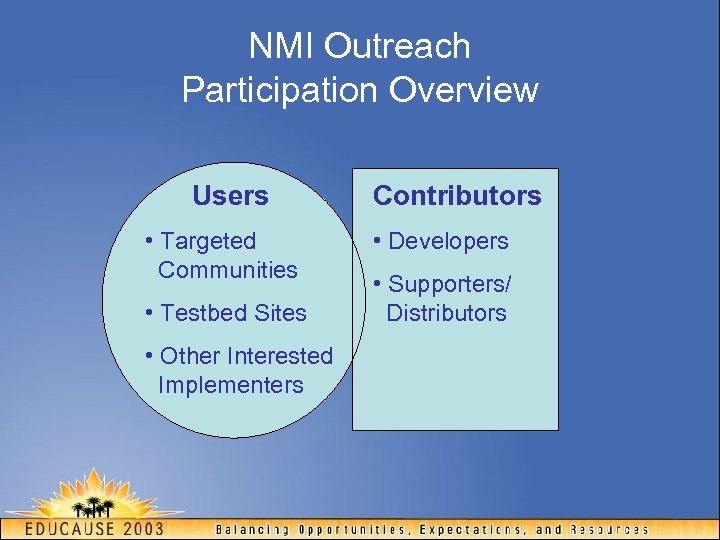

NMI Outreach Participation Overview Users Contributors • Targeted Communities • Developers • Testbed Sites • Other Interested Implementers • Supporters/Distributors

NMI-EDIT Outreach Goals • Creating awareness • Encouraging deployment • Creating middleware communities • Serving the middleware R&D community • Establishing information and support persistence

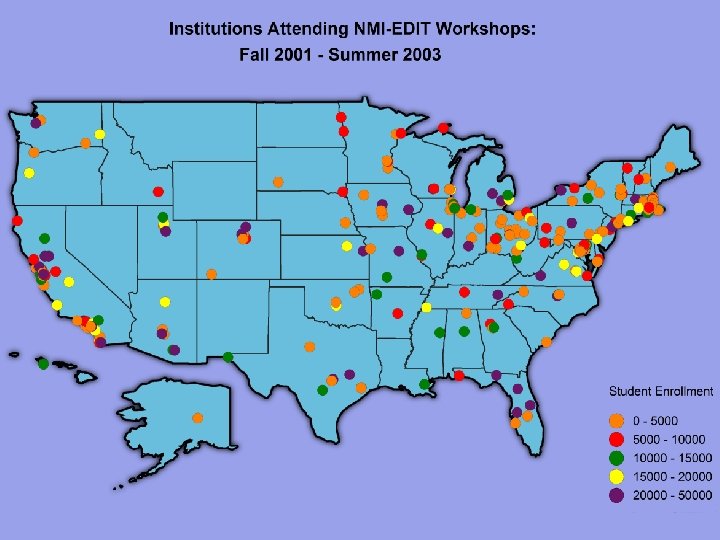

NMI-EDIT Outreach Products • Awareness presentations – 22 presentations last year • Workshops and tutorials – 1060 total attendance. – 718 distinct participants – 323 distinct organizations

NMI-EDIT Outreach Products • Content development – Articles – Directory roadmap • Community work – Minority-serving institutions and small colleges – Registrars (AACRAO) and CFOs (NACUBO) – Higher-education systems

Education Opportunities • Directory CAMP –Feb 3-6, 2004 in Tempe, AZ • EDUCAUSE regional meetings –Look for NMI-EDIT branded session • Getting started? –www.nmi-edit.org –Check out Getting Started section –Enterprise Directory Implementation Roadmap

More Information… • NMI –nsf-middleware.org • NMI-EDIT –www.nmi-edit.org –middleware.internet2.edu –www.educause.edu/eduperson/ • GRIDS Center –www.grids-center.org
