3ada240d90df098f82f92afa70436b83.ppt

- Number of slides: 24

No singing in this presentation
Data Management on the Fusion Computational Pipeline: “End-to-end solutions”
Presentation to SciDAC 2005 meeting, 6/29/05
Scott A. Klasky (PPPL)
M. Beck (UTK), V. Bhat (PPPL), E. Feibush (PPPL), B. Ludäscher (UCD), M. Parashar (Rutgers), A. Shoshani (LBL), D. Silver (Rutgers), M. Vouk (NCSU)
GPS SciDAC, CEMM SciDAC, Batchelor

Outline of Talk
• The Fusion Simulation Project (FSP).
• Computer Science enabling technologies.
• The Scientific Investigation Process.
• Technologies necessary for leadership class computing, such as the FSP.
  – Adaptive Workflow Technology
  – Data streaming
  – Collaborative code monitoring
  – Integrated Data Analysis and Visualization Environment.
  – Ubiquitous and Transparent Data Sharing.

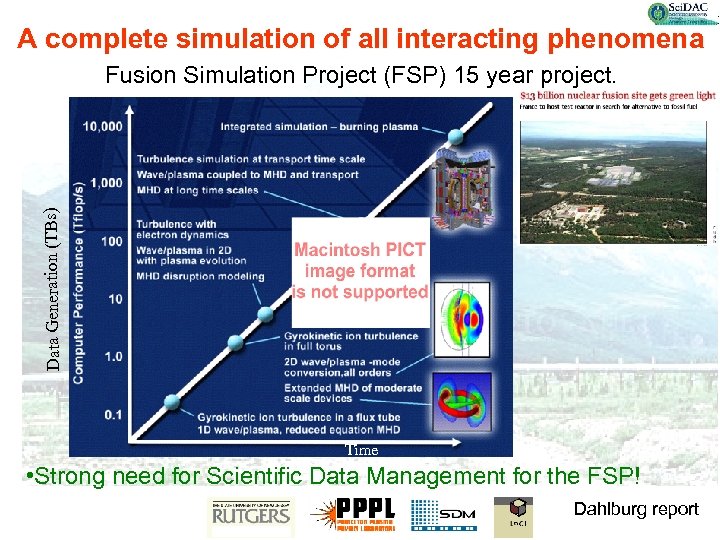

Fusion Simulation Project (FSP)
• A complete simulation of all interacting phenomena.
• 15 year project.
• Strong need for Scientific Data Management for the FSP!
[Figure: data generation (TBs) versus time. Source: Dahlburg report]

It’s about the enabling technologies (Keyes)
• Applications drive.
• Enabling technologies respond.
[Diagram labels: Applications, Math, CS]

FSP has computer science/DM requirements
• Coupling multiple codes/data
  – In-core and network-based
• Analysis and visualization
  – Feature extraction, data juxtaposition for V&V
• Dynamic monitoring and control
  – Parameter modification, snapshot generation, …
• Data sharing among collaborators
  – Transparent and efficient data access
• These requirements are shared with many other simulations across the DOE community.

Six data technologies fundamental to supporting the data management requirements for scientific applications
• From the report of the DOE Office of Science Data-Management Workshops (March – May 2004) (R. Mount)
  – http://www-user.slac.stanford.edu/rmount/dm-workshop-04/Final-report.pdf
• The six technologies:
  – Workflow, data transformation
  – Metadata, data description, logical organization
  – Efficient access and queries, data integration
  – Distributed data management, data movement, networks
  – Storage and caching
  – Data analysis, visualization, and integrated environments
• A path-finding FSP should develop and demonstrate these components.

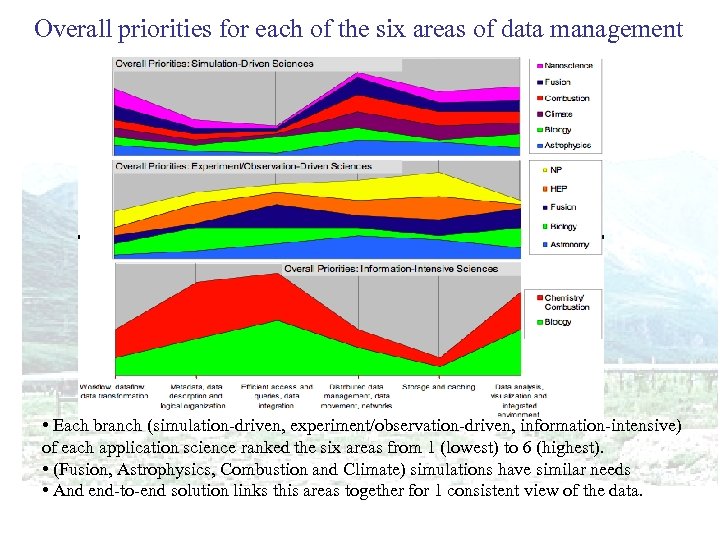

Overall priorities for each of the six areas of data management
• Each branch (simulation-driven, experiment/observation-driven, information-intensive) of each application science ranked the six areas from 1 (lowest) to 6 (highest).
• Fusion, astrophysics, combustion and climate simulations have similar needs.
• An end-to-end solution links these areas together for one consistent view of the data.

OFES has a clear need for advanced SDM
• Current OFES data management technologies work well for current experiments, but do not scale well to large data.
• The time is ripe for OFES to join in collaborative efforts with other DOE data management researchers and design a system that will scale to an FSP and ultimately to the needs of ITER.

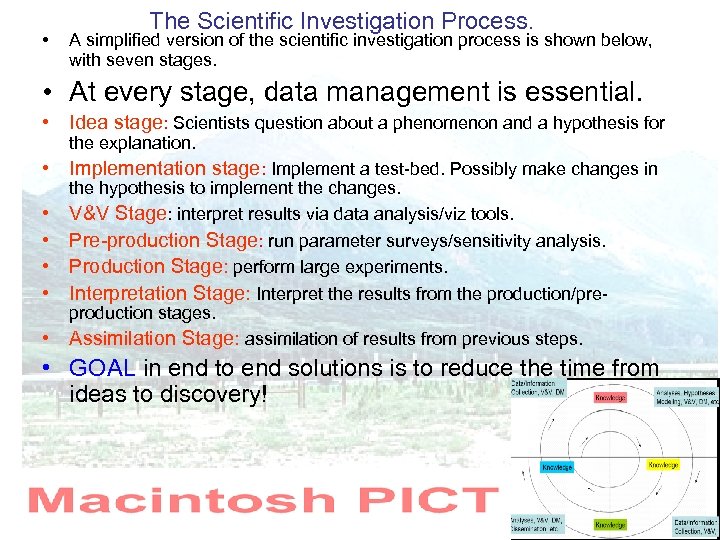

The Scientific Investigation Process
• A simplified version of the scientific investigation process is shown below, with seven stages.
• At every stage, data management is essential.
  – Idea stage: scientists pose a question about a phenomenon and form a hypothesis to explain it.
  – Implementation stage: implement a test-bed, possibly revising the hypothesis along the way.
  – V&V stage: interpret results via data analysis/viz tools.
  – Pre-production stage: run parameter surveys/sensitivity analysis.
  – Production stage: perform large experiments.
  – Interpretation stage: interpret the results from the production/pre-production stages.
  – Assimilation stage: assimilate the results from the previous steps.
• GOAL in end-to-end solutions is to reduce the time from ideas to discovery!

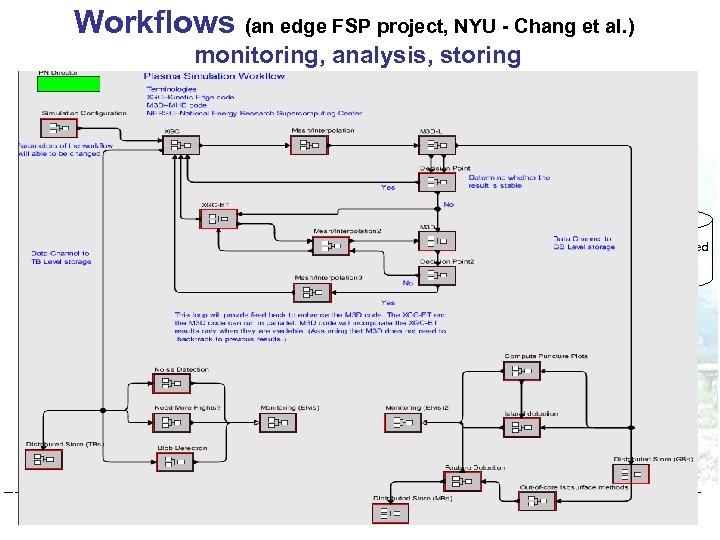

Workflows (an edge FSP project, NYU - Chang et al.): monitoring, analysis, storing
[Workflow diagram. Recoverable labels: Start (L-H); XGC-ET; Mesh/Interpolation; M3D-L (linear stability); Stable? (Yes/No); M3D; t; Distributed Store (TBs, GBs, MBs); Compute Puncture Plots; Noise Detection; Island detection; Blob Detection; Feature Detection; Out-of-core Isosurface methods; Portal (Elvis); IDAVE; Need More Flights?; B healed?]

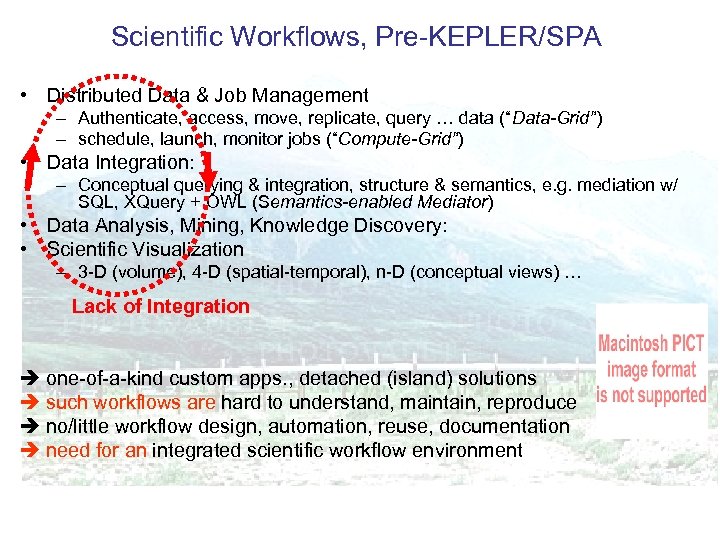

Scientific Workflows, Pre-KEPLER/SPA
• Distributed Data & Job Management
  – Authenticate, access, move, replicate, query … data (“Data-Grid”)
  – Schedule, launch, monitor jobs (“Compute-Grid”)
• Data Integration:
  – Conceptual querying & integration, structure & semantics, e.g. mediation w/ SQL, XQuery + OWL (Semantics-enabled Mediator)
• Data Analysis, Mining, Knowledge Discovery
• Scientific Visualization
  – 3-D (volume), 4-D (spatial-temporal), n-D (conceptual views) …
Lack of Integration
→ one-of-a-kind custom apps, detached (island) solutions
→ such workflows are hard to understand, maintain, reproduce
→ no/little workflow design, automation, reuse, documentation
→ need for an integrated scientific workflow environment

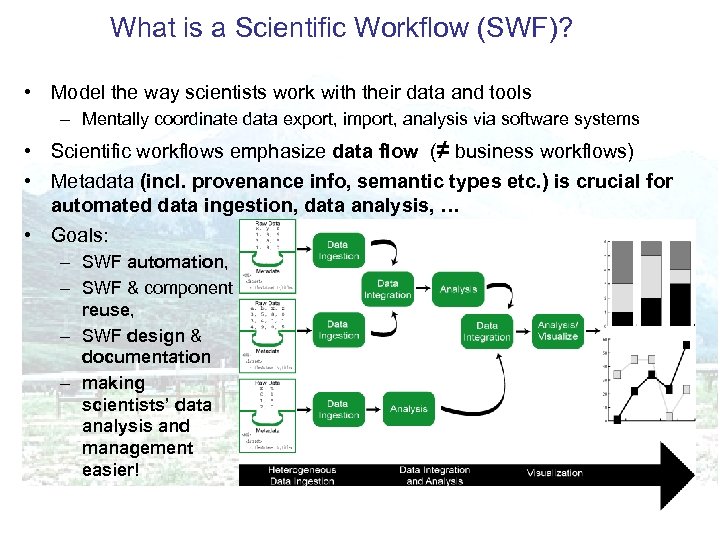

What is a Scientific Workflow (SWF)?
• Model the way scientists work with their data and tools
  – Mentally coordinate data export, import, analysis via software systems
• Scientific workflows emphasize data flow (≠ business workflows)
• Metadata (incl. provenance info, semantic types, etc.) is crucial for automated data ingestion, data analysis, …
• Goals:
  – SWF automation,
  – SWF & component reuse,
  – SWF design & documentation
  – making scientists’ data analysis and management easier!

Interactive and Autonomic Control of Workflows/Simulations
• The scale, complexity and dynamism of the FSP require simulations to be accessed, monitored and controlled during execution.
• Development and deployment of applications that can be externally monitored and interactively or autonomically controlled.
  – Enable interactive and autonomic (policy-driven) control of simulation elements, interactions and workflows.
  – A control network to enable elements to be accessed and managed externally.
• Support runtime monitoring, dynamic data injection and simulation workflow control.
• Support efficient and scalable implementations of monitoring, interactive and autonomic control, and rule execution.
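As a rough illustration of what policy-driven control with rule execution could look like, the sketch below evaluates a list of rules against monitored simulation state and applies the actions of those that match. Everything in it (the `Rule` type, the state keys `step`, `dt`, `error`, and the two example policies) is hypothetical and is not taken from the FSP control network.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

State = Dict[str, float]  # monitored quantities exposed by the simulation (assumed)


@dataclass
class Rule:
    name: str
    condition: Callable[[State], bool]  # when the policy applies
    action: Callable[[State], None]     # how the simulation is steered


def apply_rules(state: State, rules: List[Rule]) -> List[str]:
    """Run every rule whose condition holds; return the names that fired."""
    fired = []
    for rule in rules:
        if rule.condition(state):
            rule.action(state)
            fired.append(rule.name)
    return fired


def halve_timestep(state: State) -> None:
    state["dt"] /= 2  # parameter modification


def request_snapshot(state: State) -> None:
    state["snapshot_requested"] = 1.0  # snapshot generation flag


rules = [
    Rule("reduce_dt_on_large_error", lambda s: s["error"] > 0.1, halve_timestep),
    Rule("snapshot_every_100_steps", lambda s: s["step"] % 100 == 0, request_snapshot),
]

state = {"step": 200.0, "dt": 0.01, "error": 0.25}
fired = apply_rules(state, rules)
print(fired, state)
```

The same loop serves interactive control if a user supplies or edits the rule list at run time.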

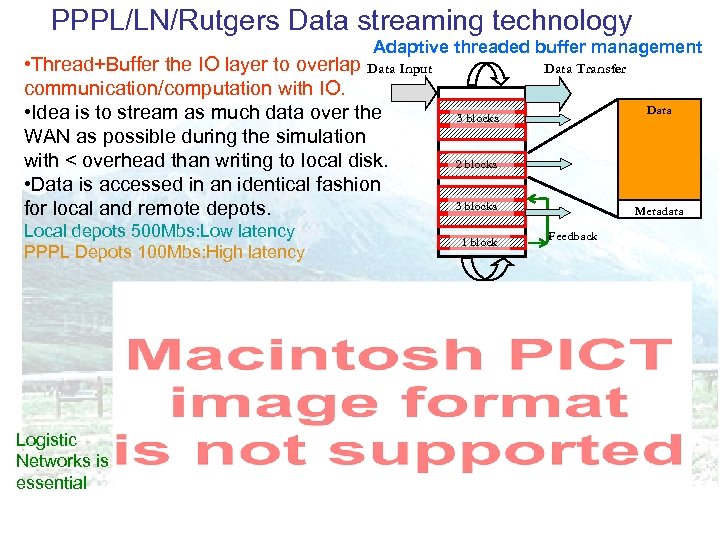

PPPL/LN/Rutgers Data streaming technology: adaptive threaded buffer management
• Thread and buffer the IO layer to overlap communication/computation with IO.
• The idea is to stream as much data over the WAN as possible during the simulation, with less overhead than writing to local disk.
• Data is accessed in an identical fashion for local and remote depots.
• Logistical Networking is essential.
[Diagram labels: Data Input; Data Transfer; Local depots, 500 Mbs, low latency; PPPL depots, 100 Mbs, high latency; Data (3 blocks, 2 blocks, 3 blocks, 1 block); Metadata; Feedback]
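The thread-plus-buffer idea can be sketched with a bounded queue and a background writer thread, so that the compute loop only stalls when the buffer is full. This is a minimal sketch using the Python standard library, not the PPPL/LN/Rutgers implementation; `run_simulation`, `write_block`, the block size and the buffer depth are all assumptions made for illustration.

```python
import queue
import threading


def run_simulation(n_steps, write_block, buffer_blocks=4):
    """Produce one data block per step while a background thread drains them."""
    buf = queue.Queue(maxsize=buffer_blocks)  # bounded buffer between compute and IO

    def drain():
        while True:
            block = buf.get()
            if block is None:      # sentinel: the simulation has finished
                break
            write_block(block)     # e.g. send to a remote depot over the WAN

    writer = threading.Thread(target=drain)
    writer.start()
    for step in range(n_steps):
        block = bytes([step % 256]) * 1024  # stand-in for one block of output
        buf.put(block)                      # waits only if the buffer is full
    buf.put(None)
    writer.join()


received = []
run_simulation(8, received.append)
print(len(received), "blocks transferred")
```

An adaptive variant would change `buffer_blocks`, or the number of blocks sent per transfer, in response to measured network latency and throughput.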

Network Adaptability
• Latency aware
• Network aware
• Self-adjusting buffer

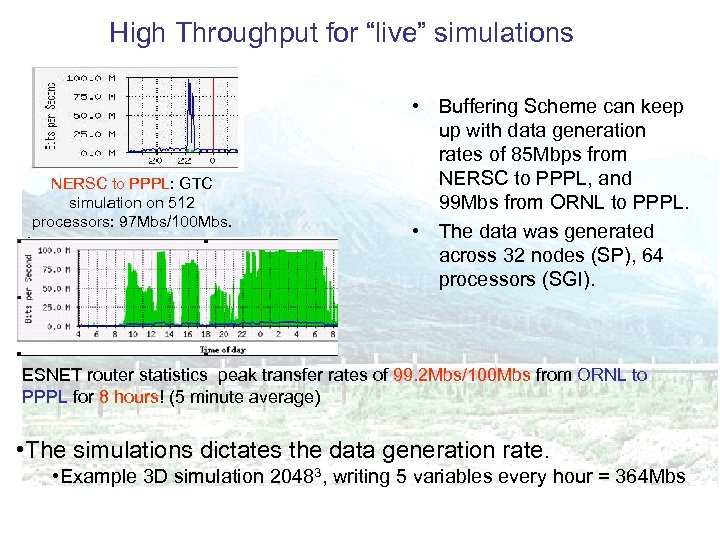

High Throughput for “live” simulations
• NERSC to PPPL: GTC simulation on 512 processors: 97 Mbs/100 Mbs.
• Buffering scheme can keep up with data generation rates of 85 Mbps from NERSC to PPPL, and 99 Mbs from ORNL to PPPL.
• The data was generated across 32 nodes (SP), 64 processors (SGI).
• ESnet router statistics show peak transfer rates of 99.2 Mbs/100 Mbs from ORNL to PPPL for 8 hours! (5 minute average)
• The simulation dictates the data generation rate.
• Example: a 3D simulation on a 2048^3 grid, writing 5 variables every hour = 364 Mbs
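The 364 Mbs figure in the example can be reproduced by reading the grid as 2048 points per side (2048 cubed) and by making two assumptions the slide does not state: each value is stored in 4 bytes (single precision), and one Mb is 2^20 bits. The function name and parameters below are illustrative.

```python
def generation_rate_mbs(points_per_side, n_variables, bytes_per_value, interval_s):
    """Average output rate in Mbs, taking 1 Mb = 2**20 bits (an assumption)."""
    bits = points_per_side ** 3 * n_variables * bytes_per_value * 8
    return bits / interval_s / 2 ** 20


# 2048^3 grid, 5 variables, 4-byte values (assumed), one dump per hour
rate = generation_rate_mbs(2048, 5, 4, 3600)
print(f"{rate:.0f} Mbs")  # prints "364 Mbs"
```

With 8-byte values the rate doubles, so the storage precision matters as much as the grid size when sizing the network path.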

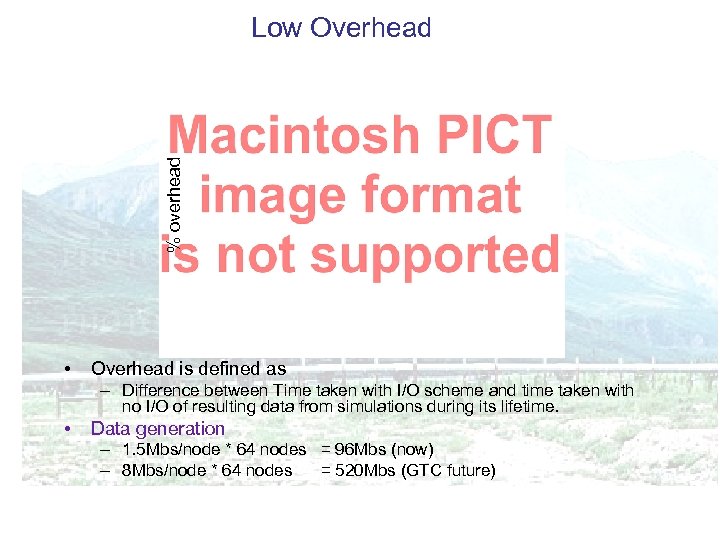

Low Overhead
• Overhead is defined as the difference between the time taken with the I/O scheme and the time taken with no I/O of the resulting data from the simulation during its lifetime.
• Data generation
  – 1.5 Mbs/node * 64 nodes = 96 Mbs (now)
  – 8 Mbs/node * 64 nodes = 512 Mbs (GTC future)
[Plot axis: % overhead]
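The overhead definition and the per-node arithmetic can be written out as below. Expressing the difference as a percentage of the no-I/O run time is an assumption suggested by the "% overhead" plot axis, and the two timings are invented purely to exercise the function.

```python
def percent_overhead(time_with_io_s, time_without_io_s):
    """Extra run time caused by I/O, as a percentage of the no-I/O run time."""
    return 100.0 * (time_with_io_s - time_without_io_s) / time_without_io_s


def aggregate_rate_mbs(per_node_mbs, n_nodes):
    """Total data generation rate when every node produces per_node_mbs."""
    return per_node_mbs * n_nodes


# Hypothetical timings: 1000 s without I/O, 1030 s with the I/O scheme enabled
overhead = percent_overhead(1030.0, 1000.0)
now_rate = aggregate_rate_mbs(1.5, 64)
future_rate = aggregate_rate_mbs(8, 64)
print(overhead, now_rate, future_rate)  # prints "3.0 96.0 512"
```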

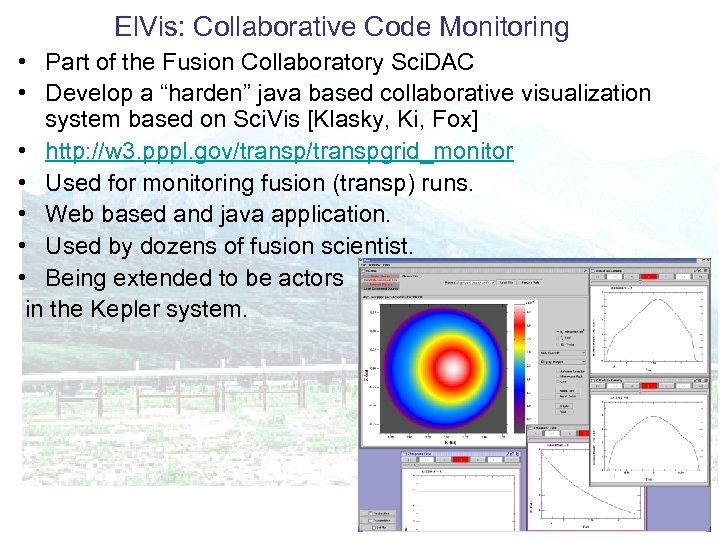

ElVis: Collaborative Code Monitoring
• Part of the Fusion Collaboratory SciDAC
• Develop a “hardened” Java-based collaborative visualization system based on SciVis [Klasky, Ki, Fox]
• http://w3.pppl.gov/transpgrid_monitor
• Used for monitoring fusion (TRANSP) runs.
• Web-based and Java application.
• Used by dozens of fusion scientists.
• Being extended to serve as actors in the Kepler system.

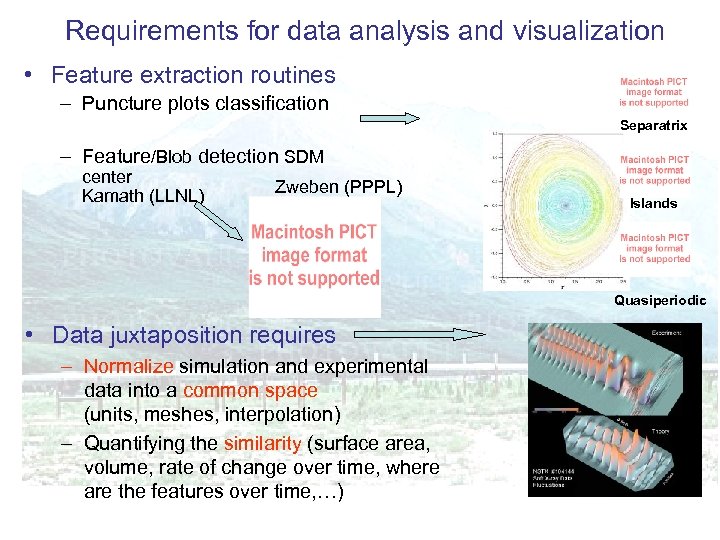

Requirements for data analysis and visualization
• Feature extraction routines
  – Puncture plot classification (figure labels: Separatrix, Islands, Quasiperiodic)
  – Feature/blob detection (SDM center; Kamath (LLNL), Zweben (PPPL))
• Data juxtaposition requires
  – Normalizing simulation and experimental data into a common space (units, meshes, interpolation)
  – Quantifying the similarity (surface area, volume, rate of change over time, where the features are over time, …)
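A one-dimensional sketch of data juxtaposition, under stated assumptions: both datasets are converted to the same units, interpolated onto a shared mesh, and compared with a single similarity number. The RMS difference used here is only one possible metric and is not among those listed on the slide; the function names, the keV/eV example and the sample values are hypothetical.

```python
import bisect
import math


def interpolate(xs, ys, x):
    """Piecewise-linear interpolation of (xs, ys) at x; xs must be increasing."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect.bisect_right(xs, x)
    weight = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
    return ys[i - 1] + weight * (ys[i] - ys[i - 1])


def rms_difference(sim_x, sim_y, exp_x, exp_y, unit_scale, n_points=11):
    """RMS difference on a common mesh; unit_scale converts experiment units."""
    lo = max(sim_x[0], exp_x[0])
    hi = min(sim_x[-1], exp_x[-1])
    mesh = [lo + (hi - lo) * k / (n_points - 1) for k in range(n_points)]
    diffs = [
        interpolate(sim_x, sim_y, x) - unit_scale * interpolate(exp_x, exp_y, x)
        for x in mesh
    ]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Simulation profile in keV on a coarse mesh; "experiment" in eV on a finer mesh
sim_x, sim_y = [0.0, 1.0, 2.0], [0.0, 2.0, 4.0]
exp_x, exp_y = [0.0, 0.5, 1.0, 1.5, 2.0], [0.0, 1000.0, 2000.0, 3000.0, 4000.0]
mismatch = rms_difference(sim_x, sim_y, exp_x, exp_y, unit_scale=1e-3)
print(mismatch)
```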

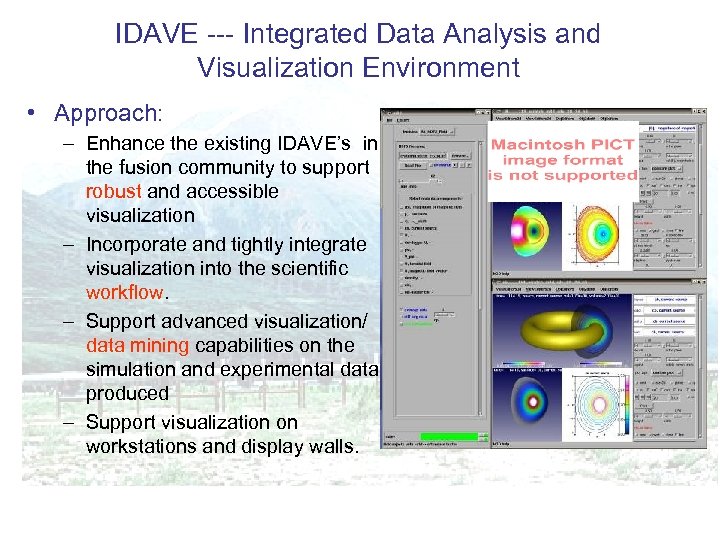

IDAVE --- Integrated Data Analysis and Visualization Environment
• Approach:
  – Enhance the existing IDAVEs in the fusion community to support robust and accessible visualization.
  – Incorporate and tightly integrate visualization into the scientific workflow.
  – Support advanced visualization/data mining capabilities on the simulation and experimental data produced.
  – Support visualization on workstations and display walls.

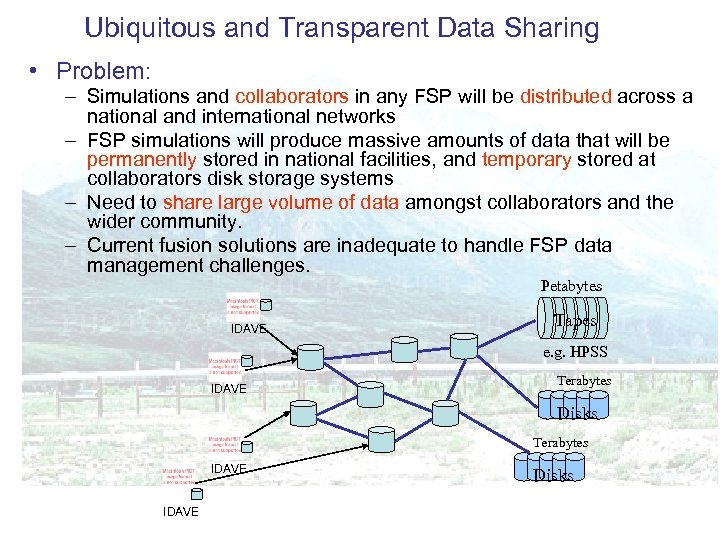

Ubiquitous and Transparent Data Sharing
• Problem:
  – Simulations and collaborators in any FSP will be distributed across national and international networks.
  – FSP simulations will produce massive amounts of data that will be permanently stored in national facilities and temporarily stored on collaborators’ disk storage systems.
  – Need to share large volumes of data amongst collaborators and the wider community.
  – Current fusion solutions are inadequate to handle FSP data management challenges.
[Diagram labels: Petabytes, tapes (e.g. HPSS); Terabytes, disks; IDAVE]

Ubiquitous and Transparent Data Sharing
• What technology is required
  – Metadata system
    • To map user concepts to datasets and files
    • e.g. find {ITER, shot_1174, Var=P(2D), Time=0-10}
    • e.g. Yields: /iter/shot1174/mhd
  – Logical to physical data (files) mapping
    • e.g. lors://www.pppl.gov/fsp/shot1174.xml
    • Support for multiple replicas based on access patterns
  – Technology to manage temporary space
    • Lifetime, garbage collection
  – Technology for fast access
    • Parallel streams, large transfer windows, data streaming
  – Robustness
    • If mass store unavailable, replicas can be used
    • Technology to recover from transient failure
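The two mappings above (user concepts to a logical dataset, then logical dataset to physical replicas with fallback) can be sketched with plain dictionaries. The catalog layout, the function names and the `hpss://mass-store.example.org/...` location are hypothetical; only the query fields, the logical path and the `lors://` URL follow the slide.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Metadata catalog: (experiment, shot, variable) -> (logical name, time coverage)
CATALOG: Dict[Tuple[str, str, str], Tuple[str, Tuple[float, float]]] = {
    ("ITER", "shot_1174", "P(2D)"): ("/iter/shot1174/mhd", (0.0, 10.0)),
}

# Logical name -> physical replicas, in preferred order (first entry is assumed)
REPLICAS: Dict[str, List[str]] = {
    "/iter/shot1174/mhd": [
        "hpss://mass-store.example.org/iter/shot1174/mhd",
        "lors://www.pppl.gov/fsp/shot1174.xml",
    ],
}


def find(experiment: str, shot: str, variable: str,
         t_start: float, t_end: float) -> Optional[str]:
    """Map a user-level query to a logical dataset name, if the catalog covers it."""
    entry = CATALOG.get((experiment, shot, variable))
    if entry is None:
        return None
    logical, (lo, hi) = entry
    return logical if lo <= t_start and t_end <= hi else None


def first_available(logical: str, is_up: Callable[[str], bool]) -> Optional[str]:
    """Return the first replica whose storage system is reachable."""
    for location in REPLICAS.get(logical, []):
        if is_up(location):
            return location
    return None


logical = find("ITER", "shot_1174", "P(2D)", 0, 10)
# Pretend the mass store is down, so the lookup falls back to the next replica
chosen = first_available(logical, lambda loc: not loc.startswith("hpss://"))
print(logical, "->", chosen)
```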

Ubiquitous and Transparent Data Sharing
• Approach
  – Need logistical versions of standard libraries and tools (NetCDF, HDF5) for moving and accessing data across the network
  – Speed of transfer and control of placement are vital to performance and fault tolerance
  – Data staging, scheduling and tracking based on common SDM tools and policies
  – Global namespace and placement policies to enable community collaboration around distributed postprocessing and visualization tasks
• Use:
  – Logistical Networking: distributed depot system, maps logical to physical, parallel access, file staging
  – Storage Resource Management (SRM): disk & tape management systems, manage space, lifetime, garbage collection
  – No dependence on a single system: SRM is a middleware standard for multiple storage systems
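To make "manage space, lifetime, garbage collection" concrete, here is a toy model of a temporary disk area in which each file is reserved for a fixed lifetime and expired files are reclaimed before new space is granted. It is a sketch only and does not represent the SRM interface; the class, its methods and the numbers are invented for illustration.

```python
class TemporarySpace:
    """Toy disk cache: files hold space until their lifetime expires."""

    def __init__(self, capacity_bytes):
        self.capacity = capacity_bytes
        self.files = {}  # name -> (size_bytes, expires_at)

    def used(self):
        return sum(size for size, _ in self.files.values())

    def collect_garbage(self, now):
        """Drop every file whose lifetime has ended; return the removed names."""
        expired = [name for name, (_, end) in self.files.items() if end <= now]
        for name in expired:
            del self.files[name]
        return expired

    def reserve(self, name, size_bytes, lifetime_s, now):
        """Reclaim expired space first, then grant the request if it fits."""
        self.collect_garbage(now)
        if self.used() + size_bytes > self.capacity:
            return False
        self.files[name] = (size_bytes, now + lifetime_s)
        return True


space = TemporarySpace(capacity_bytes=100)
first = space.reserve("a.h5", 60, lifetime_s=10, now=0)    # fits
second = space.reserve("b.h5", 60, lifetime_s=10, now=5)   # refused: a.h5 still live
third = space.reserve("b.h5", 60, lifetime_s=10, now=10)   # a.h5 expired, so it fits
print(first, second, third)  # prints "True False True"
```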

Summary
• The scientific investigation process in the FSP will be limited without a strong data management and visualization approach, as highlighted in the 2004 DOE Data Management report.
• Many DOE projects would benefit from End-to-End solutions.
• Need to couple DOE/NSF computer science research with hardened solutions for applications.
• 2004 Data Management Workshop: need $32M/year of new funding!
