6622f2286f3478e09417541ef0afe022.ppt

- Number of slides: 52

Neural Networks: An Overview
Brad Morantz, Ph.D.
Viral Immunology Center, Georgia State University
bradscientist@ieee.org
www.geocities.com/bradscientist
Copyright Brad Morantz 2003

Neural Networks
I think, therefore I am

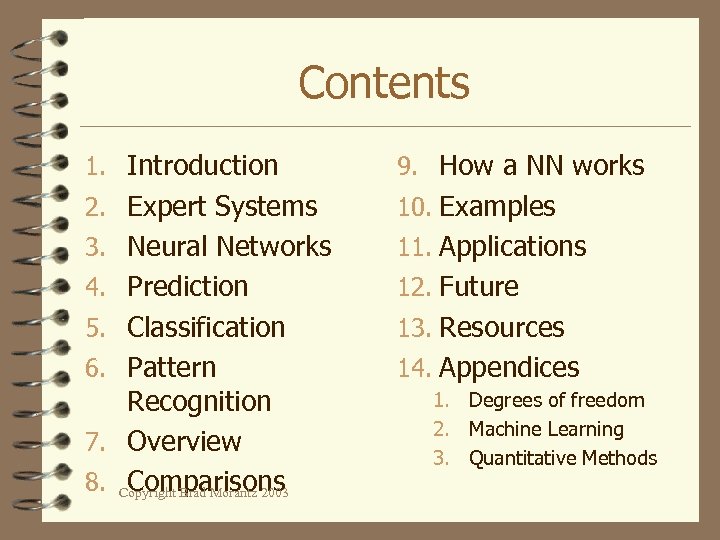

Contents
1. Introduction
2. Expert Systems
3. Neural Networks
4. Prediction
5. Classification
6. Pattern Recognition
7. Overview
8. Comparisons
9. How a NN works
10. Examples
11. Applications
12. Future
13. Resources
14. Appendices
    1. Degrees of freedom
    2. Machine Learning
    3. Quantitative Methods

"The flight to Dallas is cancelled."
"That’s wonderful!"

Why is it wonderful?
- Either there is something wrong with:
  - The airplane
  - The pilot
  - Dallas
- If there is something wrong with any of these, I don’t want to be on the airplane

This example is courtesy of Ziglar.

Has anyone been turned down on a credit card transaction?
- For reasons other than late payments?

I was ID’d buying a VCR
- This is what I normally do:
  - I normally buy gas once a week
  - I usually spend about $10
  - I buy a book once a month
- This is MY pattern

Scene at the Store
- "I need to see your ID"
- "That’s wonderful!"

Why did the salesperson ask for ID?
- The credit card machine told her to
- Why did the machine ask for it?
  - They have a pattern of my normal activity
  - Buying a VCR was not part of that pattern
  - Expensive purchases were not normal for me
  - It wanted to be sure that it was not a stolen card
  - Criminals with stolen cards often buy expensive electronic items like VCRs
  - It is a pattern for a stolen credit card

How did the credit card machine do this?
- It used Artificial Intelligence
- Computer program
  - Expert System
  - Neural Network
  - Hybrid

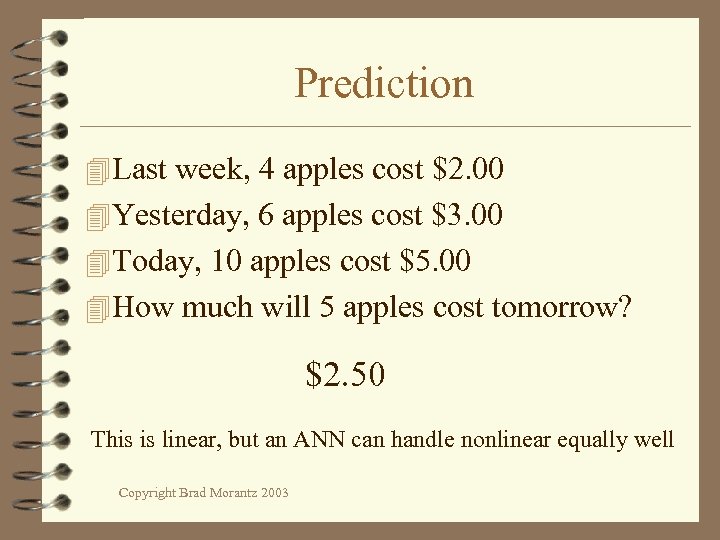

Prediction
- Last week, 4 apples cost $2.00
- Yesterday, 6 apples cost $3.00
- Today, 10 apples cost $5.00
- How much will 5 apples cost tomorrow? $2.50

This is linear, but an ANN can handle nonlinear relationships equally well.
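
The apple example can be checked with an ordinary least-squares line, sketched below. This is not a neural network. It only shows the linear relationship hidden in the three observations. The function name `fit_line` is a hypothetical helper, not something from the slides.

```python
# Observations from the slide: (number of apples, total price in dollars).
observations = [(4, 2.00), (6, 3.00), (10, 5.00)]


def fit_line(points):
    """Least-squares fit of price = slope * count + intercept (hypothetical helper)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(observations)
predicted_price = slope * 5 + intercept
print(f"Predicted price of 5 apples: ${predicted_price:.2f}")
```

The fitted slope is the price per apple, so the prediction for 5 apples matches the $2.50 given on the slide.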

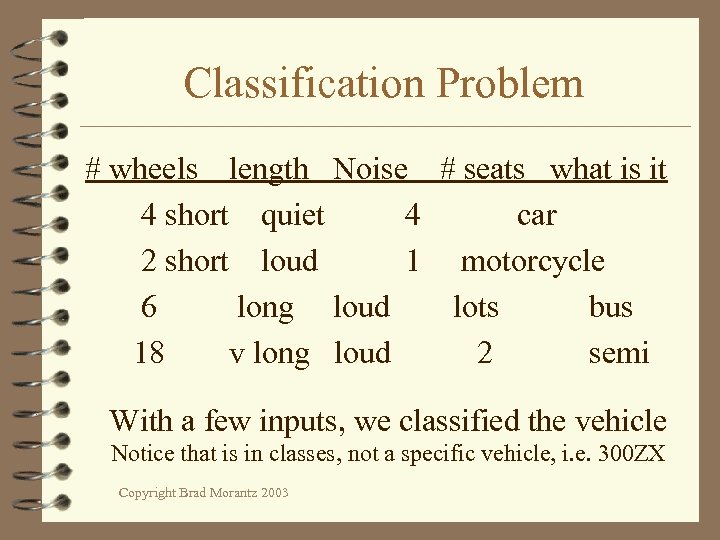

Classification Problem

| # wheels | Length | Noise | # seats | What is it |
|---|---|---|---|---|
| 4 | short | quiet | 4 | car |
| 2 | short | loud | 1 | motorcycle |
| 6 | long | loud | lots | bus |
| 18 | very long | loud | 2 | semi |

With a few inputs, we classified the vehicle. Notice that the answer is a class, not a specific vehicle such as a 300ZX.

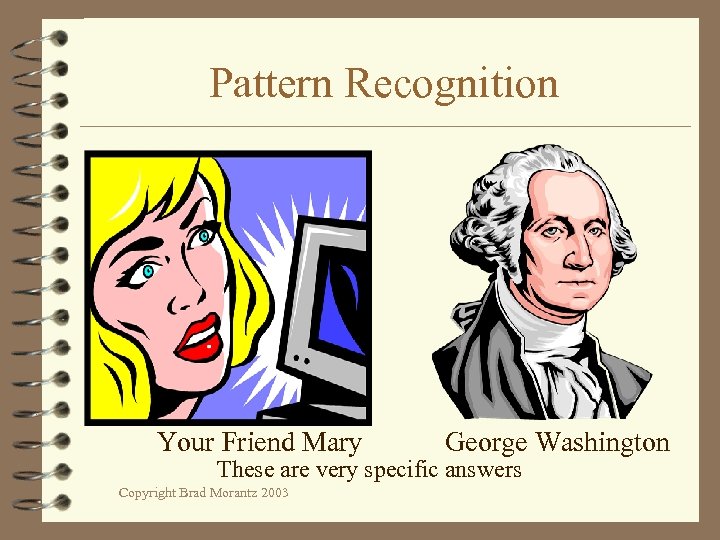

Pattern Recognition
- Your friend Mary
- George Washington

These are very specific answers.

What do these examples have in common?
- Learned
- From experience
- From historical data
- By example
- By organization

What is an Expert System?
- Knowledge-based system
- Like having an expert that you can ask questions
- Computer program
  - Has a set of rules or heuristics
  - From a human expert
  - Behaves like a human expert

Quick overview of expert systems
- Requires an expert
- Requires a knowledge engineer
- Stores knowledge in a set of rules
- Must have a rule for all possibilities
- Contains the bias, opinions, and emotions of the expert
- May not be current
- Can be asked the reason for its answer
- Examples: MYCIN, TEIRESIAS, etc. (Gordon & Shortliffe)

What is a Neural Network?
- A human brain
- A porpoise brain
- The brain in a living creature
- A computer program
  - Emulates a biological brain
  - Limited connections
- Specialized computer chip

Three Types of Functions
- Prediction and time series forecasting
  - Like regression, but not constrained to linear
- Classification
  - Sort into a class, like cluster analysis
- Pattern recognition
  - Fine-tuned classification
- Not constrained to linear relationships or a Gaussian (normal) distribution

Advantages of Neural Networks
- No expert needed
- No knowledge engineer needed
- Does not have the bias of an expert
- Can interpolate for all cases
- Learns from facts
- Can resolve conflicts
- Variables can be correlated (multicollinearity)

More Advantages
- Learns relationships
- Can make a good model with noisy or incomplete data
- Can handle nonlinear or discontinuous data
- Can handle data of unknown or undefined distribution
- Data driven

Disadvantages of Neural Nets
- Black box
  - Don’t know why or how
  - Not sure of what it is looking at
- Operator dependent
- Don’t have knowledge in hand

\* Many of these disadvantages are being overcome.

Black Box
input → [black box] → output
- What happens inside the box is unknown
- We can’t see into the box
- We don’t know what it knows

Key to Neural Networks
- Learns the input-to-output relationship

Biological Neural Network
- The human brain has $4 \times 10^{10}$ to $10^{11}$ neurons
- Each can have 10,000 connections\*
- A human baby makes 1 million connections per second until age 2
- Speed of a synapse is 1 kHz, much slower than a computer (3.0 GHz)
- Massively parallel structure

\* Some estimates are much greater.

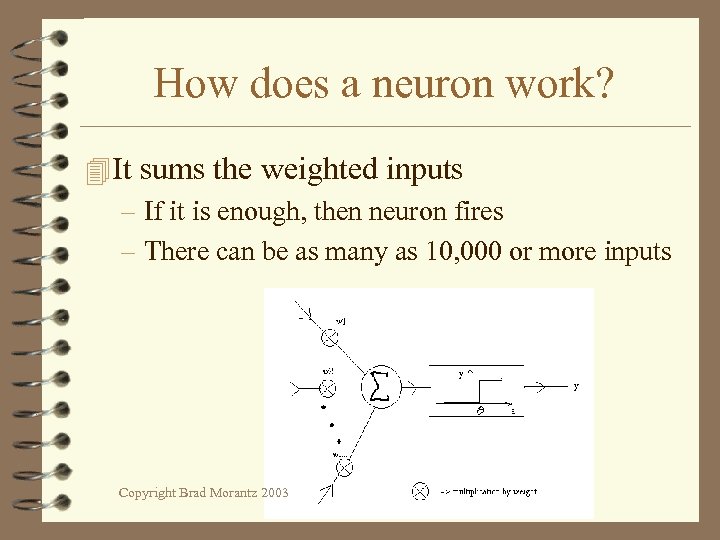

How does a neuron work?
- It sums the weighted inputs
  - If the sum is large enough, the neuron fires
  - There can be as many as 10,000 or more inputs

Computer Neural Network
- Von Neumann architecture
- Serial machine running an inherently parallel process
- Series of mathematical equations
- Simulates a relatively small brain
- Limited connectivity
- Closely approximates complex nonlinear functions

Computer Emulation of NN
- Called an Artificial Neural Network (ANN)
- Two uses
  - Applications as shown
    - Forecasting/prediction
    - Classification
    - Pattern recognition
  - Use the model to help understand biological neural networks

Neuron Activation
- Weights can be positive or negative
- A negative weight inhibits neuron firing
- $\text{Sum} = W_1 N_1 + W_2 N_2 + \dots + W_n N_n$
- If the sum is negative, the neuron does not fire
- If the sum is positive, the neuron fires
- Fire means an output from the neuron
- Non-linear function
- Some models include a threshold
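
The firing rule on this slide can be sketched as a small function. The name `neuron_fires` and the example weights are made up for illustration. The `threshold` parameter stands in for the optional threshold the slide mentions and defaults to zero, which gives the "positive sum fires" rule.

```python
def neuron_fires(inputs, weights, threshold=0.0):
    """Return (fired, weighted_sum) for one neuron.

    weighted_sum = W1*N1 + W2*N2 + ... + Wn*Nn, as on the slide.
    The neuron fires when the sum exceeds the threshold (0 by default).
    """
    weighted_sum = sum(w * n for w, n in zip(weights, inputs))
    return weighted_sum > threshold, weighted_sum


# Hypothetical inputs: the second weight is negative, so it inhibits firing.
print(neuron_fires([1.0, 1.0], [0.5, -0.2]))  # excitation outweighs inhibition
print(neuron_fires([1.0, 1.0], [0.2, -0.5]))  # inhibition outweighs excitation
```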

Neuron Activation
- Linear
- Sigmoidal
  - $1.0 / (1.0 + e^{-s})$, where $s$ is the sum of the inputs
  - Result lies between 0 and +1
- Hyperbolic tangent
  - $(e^{s} - e^{-s}) / (e^{s} + e^{-s})$, where $s$ is the sum of the inputs
  - Result lies between -1 and +1
- Also called a squashing or clamping function
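
A minimal sketch of the three activation functions named on this slide, written directly from the formulas. The function names are chosen here for illustration. The loop prints values at a few sample sums so the squashing behaviour is visible.

```python
import math


def linear(s):
    """Linear activation: the sum passes through unchanged."""
    return s


def sigmoid(s):
    """Sigmoidal activation: 1 / (1 + e^-s), squashed into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-s))


def hyperbolic_tangent(s):
    """Hyperbolic tangent: (e^s - e^-s) / (e^s + e^-s), squashed into (-1, 1)."""
    return (math.exp(s) - math.exp(-s)) / (math.exp(s) + math.exp(-s))


for s in (-5.0, 0.0, 5.0):
    print(s, linear(s), round(sigmoid(s), 4), round(hyperbolic_tangent(s), 4))
```

Large negative sums push the sigmoid toward 0 and the hyperbolic tangent toward -1. Large positive sums push both toward +1.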

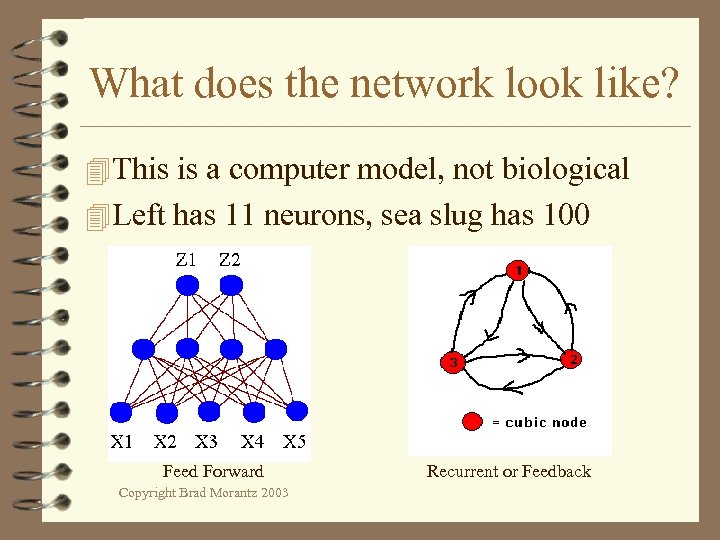

What does the network look like?
- This is a computer model, not biological
- The network on the left has 11 neurons; a sea slug has 100

Figure labels: Feed Forward; Recurrent or Feedback

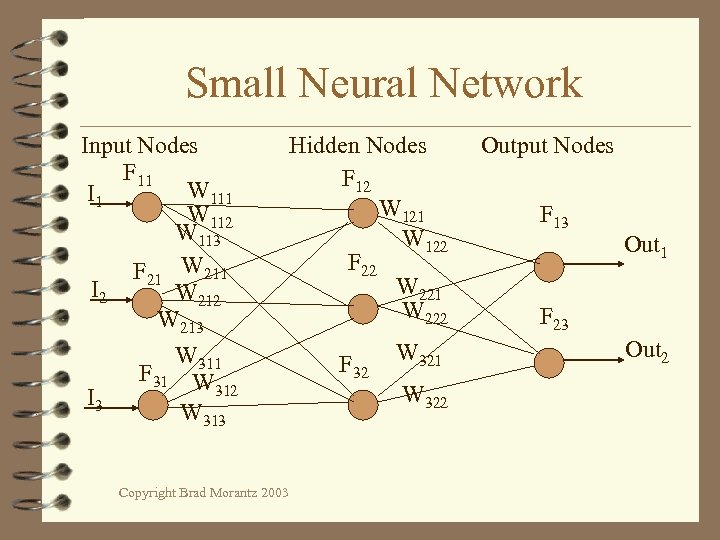

Small Neural Network
[Diagram: three input nodes (inputs I1, I2, I3 with functions F11, F21, F31), three hidden nodes (F12, F22, F32), and two output nodes (F13 → Out1, F23 → Out2). Input-to-hidden weights are labeled W111 through W313; hidden-to-output weights are labeled W121 through W322.]

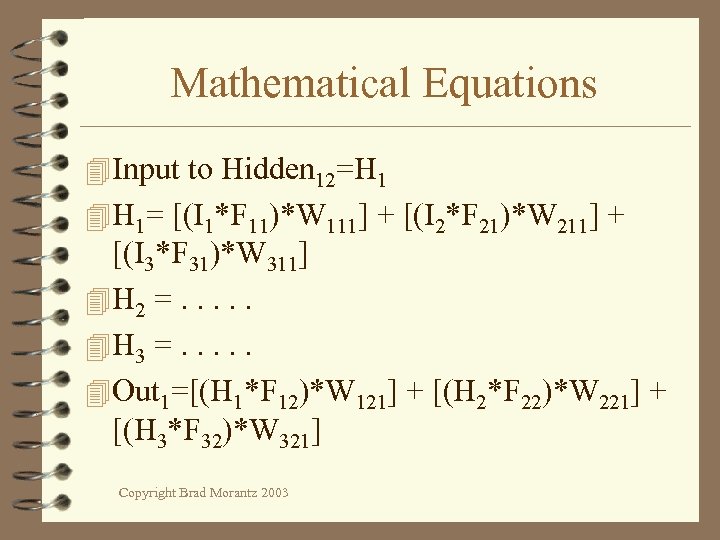

Mathematical Equations
- Input to hidden node 12 = $H_1$
- $H_1 = [(I_1 \cdot F_{11}) \cdot W_{111}] + [(I_2 \cdot F_{21}) \cdot W_{211}] + [(I_3 \cdot F_{31}) \cdot W_{311}]$
- $H_2 = \dots$
- $H_3 = \dots$
- $\text{Out}_1 = [(H_1 \cdot F_{12}) \cdot W_{121}] + [(H_2 \cdot F_{22}) \cdot W_{221}] + [(H_3 \cdot F_{32}) \cdot W_{321}]$
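
The equations above can be sketched as a forward pass through the small 3-3-2 network. Several things here are assumptions, not taken from the slides. The product of a value and its node function (for example I1 with F11) is read as the node function applied to the value. The input functions are taken as identity and the hidden functions as hyperbolic tangent. The weight values are arbitrary placeholders.

```python
import math


def identity(x):
    return x


def forward(inputs, w_in_hidden, w_hidden_out, f_input=identity, f_hidden=math.tanh):
    """Forward pass following the slide's equations.

    w_in_hidden[i][j]  : weight from input node i to hidden node j
    w_hidden_out[j][k] : weight from hidden node j to output node k
    H_j   = sum_i f_input(I_i) * w_in_hidden[i][j]
    Out_k = sum_j f_hidden(H_j) * w_hidden_out[j][k]
    """
    n_hidden = len(w_in_hidden[0])
    n_out = len(w_hidden_out[0])
    hidden_in = [
        sum(f_input(x) * w_in_hidden[i][j] for i, x in enumerate(inputs))
        for j in range(n_hidden)
    ]
    outputs = [
        sum(f_hidden(h) * w_hidden_out[j][k] for j, h in enumerate(hidden_in))
        for k in range(n_out)
    ]
    return hidden_in, outputs


# Placeholder weights (assumed values, for illustration only).
w_in_hidden = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]]
w_hidden_out = [[0.5, -0.5], [0.25, 0.75], [-1.0, 1.0]]

hidden_in, outputs = forward([1.0, 0.0, 0.0], w_in_hidden, w_hidden_out)
n_weights = sum(len(row) for row in w_in_hidden) + sum(len(row) for row in w_hidden_out)
print(hidden_in, outputs, n_weights)
```

Counting the entries in the two weight tables gives 9 + 6 = 15 weights for this network.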

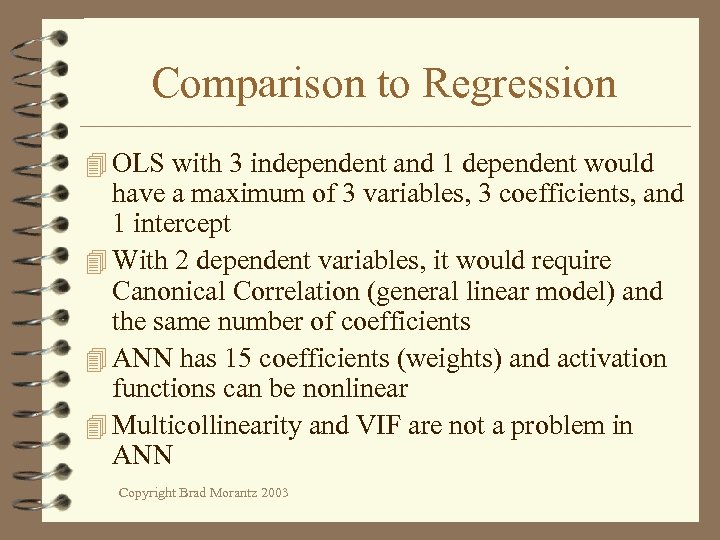

Comparison to Regression
- OLS with 3 independent variables and 1 dependent variable would have a maximum of 3 variables, 3 coefficients, and 1 intercept
- With 2 dependent variables, it would require canonical correlation (general linear model) and the same number of coefficients
- The ANN has 15 coefficients (weights), and its activation functions can be nonlinear
- Multicollinearity and VIF are not a problem in an ANN

Macro View of Training
- Setting all of the weights
- To create optimal performance
- Optimal adherence to training data
- Really an optimization problem
  - The best method depends on many variables
  - See optimization lecture
- Need an objective function
- Beware of local minima!

Optimization Methods
- Back propagation (most popular)
- Gradient descent
- Generalized reduced gradient (GRG)
- Simulated annealing
- Genetic algorithm
- Two or more output nodes
  - Multi-objective optimization
- Many more methods
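
To make "training is an optimization problem" concrete, here is a minimal gradient descent sketch for a single weight. It is not back propagation through a full network. The data are the apple prices from the prediction example (4, 6, and 10 apples costing $2.00, $3.00, and $5.00). The objective function is mean squared error. The learning rate and step count are assumed values chosen so this small example converges.

```python
# (number of apples, total price) pairs from the prediction example.
observations = [(4, 2.00), (6, 3.00), (10, 5.00)]


def mean_squared_error(w, points):
    """Objective function: average squared gap between w * x and the true price."""
    return sum((w * x - y) ** 2 for x, y in points) / len(points)


def gradient(w, points):
    """Derivative of the mean squared error with respect to the weight w."""
    return sum(2 * (w * x - y) * x for x, y in points) / len(points)


w = 0.0                # starting weight
learning_rate = 0.005  # assumed value, small enough for stable steps here
for step in range(200):
    w -= learning_rate * gradient(w, observations)

final_error = mean_squared_error(w, observations)
print(f"learned weight: {w:.4f}, error: {final_error:.2e}")
```

With one weight and a quadratic objective there is only one minimum. The slide's warning about local minima applies to larger networks, where the error surface is not this simple.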

Training
- Supervised
  - Data given, along with the answer
  - Compare to the prediction and try again
  - Historical data and/or rules
- Unsupervised
  - No teacher or feedback
  - Self-organizing

Training Data Set
- Need more observations than weights
  - Positive number of degrees of freedom
- More observations are usually better
  - Lower variance
  - More knowledge
- Watch the aging of data

Data Window
- Rolling window
  - Keep all data
  - Add new data as available
- Moving window
  - Train with a fixed amount of data
  - As a new observation is added, the oldest is deleted
  - Necessary when underlying factors change
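
The two window types can be sketched as list slices, using the slide's definitions: a rolling window keeps everything seen so far, and a moving window keeps only the most recent fixed number of observations. The function names and the sample series are hypothetical.

```python
def rolling_window(observations, t):
    """Rolling window as defined on the slide: all data available up to time t."""
    return observations[:t]


def moving_window(observations, t, size):
    """Moving window: only the `size` most recent observations up to time t."""
    return observations[max(0, t - size):t]


history = [10, 11, 13, 12, 15, 18, 21]  # made-up series for illustration
for t in range(3, len(history) + 1):
    print(t, rolling_window(history, t), moving_window(history, t, size=3))
```

The rolling window grows at every step. The moving window stays at three observations and drops the oldest one each time a new one arrives.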

Data Window Continued
- Weighted window
  - Morantz, Whalen, & Zhang
  - Superset of rolling and moving windows
  - Oldest data is reduced in importance
  - Has reduced the residual by as much as 50%
  - Multi-factor ANOVA shows results significant in the majority of applications with real-world data
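
One way to picture a weighted window is a weight per observation that stays at 1 for recent data and falls off for older data. The linear ramp below is an assumption made for illustration. The slides do not give the weighting scheme used by Morantz, Whalen, and Zhang, and the function and parameter names are hypothetical.

```python
def window_weights(n_obs, full_weight_count, ramp_length):
    """Return one weight per observation, oldest first.

    The newest `full_weight_count` observations get weight 1.0.
    The next `ramp_length` older observations decline linearly toward 0.
    Anything older gets weight 0.0. (Illustrative scheme only.)
    """
    weights = []
    for age in range(n_obs - 1, -1, -1):  # age 0 is the newest observation
        if age < full_weight_count:
            weights.append(1.0)
        elif age < full_weight_count + ramp_length:
            steps_into_ramp = age - full_weight_count + 1
            weights.append(1.0 - steps_into_ramp / (ramp_length + 1))
        else:
            weights.append(0.0)
    return weights


print(window_weights(6, full_weight_count=2, ramp_length=3))  # weighted window
print(window_weights(6, full_weight_count=6, ramp_length=0))  # all data kept
print(window_weights(6, full_weight_count=3, ramp_length=0))  # hard cutoff
```

Under this sketch, keeping every weight at 1 reproduces the rolling window and a hard cutoff reproduces the moving window, which is one reading of the "superset" claim.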

Dynamic Learning
- Continuous learning
  - From mistakes and successes
  - From new information
- Shooting baskets example
  - Too low. Learned: throw harder
  - Too high. Learned: throw softer, but not as soft as before
  - Basket! Learned: correct amount of “push”
- Loaning $10 example

Inputs
- One per input node
- Ratio
- Logical
- Dummy
- Categorical
- Ordinal

Outputs
- One for a single dependent variable
- Multiple
  - Prediction
  - Classification
  - Pattern recognition

Hidden Layer(s)
- Increase complexity
- Can increase accuracy
- Can reduce degrees of freedom
  - Need a larger data set
- At present, the architecture is up to the programmer
- A source of error
- In the future it will be more automatic

Methodology
- Split the training data
  - 80/20 is most common
  - Other methods depending on the number of observations
- Train on the 80%
- Validate on the 20%
- Never test on what you used for training
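
The 80/20 split can be sketched with the standard library alone. The function name `split_train_validate` and the fixed seed are choices made here for illustration. Shuffling before the split is also an assumption: it suits independent observations, but time-ordered data would normally be split by date instead.

```python
import random


def split_train_validate(observations, train_fraction=0.8, seed=0):
    """Shuffle a copy of the data, then cut it into training and validation parts."""
    shuffled = list(observations)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]


data = list(range(100))  # stand-in for 100 observations
train, validate = split_train_validate(data)
print(len(train), len(validate))
```

The two parts share no observations, which is what "never test on what you used for training" requires.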

Hybrids
- Use the advantages of both expert systems and ANNs
- Use more knowledge than just one type
- Can seed the system with expert knowledge and then update it with data
- Sometimes hard to get all parts to work together
- Harder to validate the model

Example
- You go someplace that you have never been before, and get “bad vibes”
  - Atmosphere, temperature, lighting, smell, coloring, numerous things
- For some reason, the brain associates these together, possibly from some past experience
- Gives you a “bad feeling”

Applications
- Credit card fraud
- Military: submarine, tank, or sniper detection, or target recognition
- Security
- Classifying stars and planets
- Data mining
- Natural language recognition

Future
- Rule extraction
- Hybrids
- Dynamic learning
- Parallel processing
- Dedicated chips
- Bigger and more automatic
- Machine learning

Contacts
- IEEE Transactions on Neural Networks
- IEEE Intelligent Systems Journal
- IEEE Neural Network Society
- AAAI, American Association for Artificial Intelligence
- www.ieee.org
- www.zsolutions.com
- Internet
- www.geocities.com/bradscientist

Appendix - Degrees of Freedom
- Number of values free to vary
  - Complex math definition
- Must be watched: it should not fall below zero
- Example: $3X + 4Y = Z$
  - 3 unknowns; if Z is known, only one other value can vary
  - With 3 equations, the values cannot vary
- A large ANN needs a big data sample
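
A rough check of this rule for a network can be sketched as observations minus weights, following the earlier advice to have more observations than weights. The helper names are hypothetical, and the count assumes fully connected layers with no bias terms, which matches the 15-weight network in the earlier example.

```python
def count_weights(layer_sizes):
    """Weights in a fully connected feed-forward network without bias terms."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


def degrees_of_freedom(n_observations, layer_sizes):
    """Observations minus weights; this should stay above zero."""
    return n_observations - count_weights(layer_sizes)


small_net = [3, 3, 2]  # 3 inputs, 3 hidden nodes, 2 outputs
print(count_weights(small_net))           # weights to be set by training
print(degrees_of_freedom(100, small_net)) # positive: enough data
print(degrees_of_freedom(10, small_net))  # negative: too few observations
```

Adding hidden nodes raises the weight count quickly, which is why a large ANN needs a large sample.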

Appendix - Machine Learning
- The machine learns from experience
- From experience and data it improves its knowledge (rule base or weights)
- Shooting baskets example

Appendix - Quantitative Methods
- OLS regression
- Canonical correlation (general linear model)
- Classification
- Cluster analysis
